New Endorsements for “If Anyone Builds It, Everyone Dies”

Nate and Eliezer’s forthcoming book has been getting a remarkably strong reception.

I was under the impression that there are many people who find the extinction threat from AI credible, but that far fewer of them would be willing to say so publicly, especially by endorsing a book with an unapologetically blunt title like If Anyone Builds It, Everyone Dies. That’s certainly true, but I think it might be much less true than I had originally thought.

Here are some endorsements the book has received from scientists and academics over the past few weeks:

> This book offers brilliant insights into the greatest and fastest standoff between technological utopia and dystopia and how we can and should prevent superhuman AI from killing us all. Memorable storytelling about past disaster precedents (e.g. the inventor of two environmental nightmares: tetra-ethyl-lead gasoline and Freon) highlights why top thinkers so often don’t see the catastrophes they create.
>
> —George Church, Founding Core Faculty, Synthetic Biology, Wyss Institute, Harvard University

> A sober but highly readable book on the very real risks of AI. Both skeptics and believers need to understand the authors’ arguments, and work to ensure that our AI future is more beneficial than harmful.
>
> —Bruce Schneier, Lecturer, Harvard Kennedy School

> A clearly written and compelling account of the existential risks that highly advanced AI could pose to humanity. Recommended.
>
> —Ben Bernanke, Nobel-winning economist; former Chairman of the U.S. Federal Reserve

George Church is one of the world’s top genetics researchers. He developed the first direct genomic sequencing method in 1984, sequenced the first genome (E. coli), helped start the Human Genome Project, and played a central role in making CRISPR useful for biotech applications.

Bruce Schneier is the author of Schneier on Security and a leading computer security expert. He wrote what was, as far as I can tell, the first ever applied cryptography textbook, w

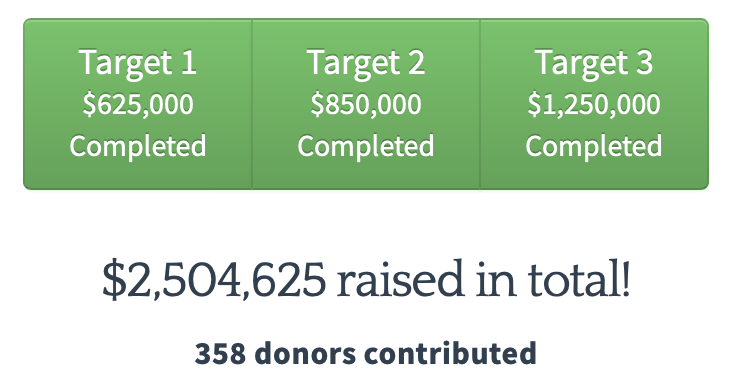

LFG!

#7 Combined Print & E-Book Nonfiction

#8 Hardcover Nonfiction