Can you get the Pope to read the book? He seems to have opinions on AI, and getting his endorsement would be very useful from a book promotion perspective.

I've suggested a pathway or two for this; if you have independent pathways, please try them / coordinate with Rob Bensinger about trying them.

Like if you want the "remarkably strong reception" to have strong [retracting: any] evidential value it needs to be sent to skeptics.

Otherwise you're just like, "Yeah, we sent it to a bunch of famous people using our connections and managed to get endorsements from some." It's basically just filtered evidence and attempting to persuade someone with it is attempting to persuade them with means disconnected from the truth.

We have lots of things in life that provide evidence while still being filtered. It's trivially false that in order for it to have "any evidential value" it needs to be sent to skeptics.

I agree it would be good to do an unbiased survey, but there are lots and lots of institutions in society that rely on filtered evidence and still manage to provide a lot of signal. Calling references from someone's job application actually helps, even if they of course chose the references to be ones that would give a positive testimonial.

> Calling references from someone's job application actually helps, even if they of course chose the references to be ones that would give a positive testimonial.

That's quite different though!

References are generally people from work, i.e., people who can be verified to have had a relationship with the person being evaluated. We think these people, by reason of having worked with that person, are in a position to be knowledgeable about them, because it's hard to fake qualities over a long period of time. Furthermore, there's an extremely finite number of such people; someone giving a list of references will probably pick people they think would give a favorable review, but they have to select from a short list of possible references. That short list is why it's evidential. So it's filtered, but it's filtered from a small list of people well-positioned to evaluate your character.

Book reviews are different! You can hire a PR firm or contact friends to send the book to as many people as you possibly can in search of favorable blurbs. Rather than having a short list of potential references, you have a very long one. And of course, these people are in many cases not positioned to know whether the book is correct; they're certainly not as well-positioned as people you've worked with are to know about your character. So you're filtering from a large list -- potentially a very large list -- of people who are not well-positioned to evaluate the work.

You're right that it provides some evidence. I was wrong to claim it provides none; if you could find no one to review a book positively, surely that's some evidence about it.

But for the reasons above it's very weak evidence, probably to the degree where it matters whether you mean the careful sense of "evidence" (anything that might help you distinguish between the worlds you could be in, regardless of strength -- 0.001 bits of evidence is still evidence) or the more colloquial sense ("evidence" is what helps you distinguish between those worlds reasonably strongly).

And like, this is why it's normal epistemics to ignore the blurbs on the backs of books when evaluating their quality, no matter how prestigious the list of blurbers! Like that's what I've always done, that's what I imagine you've always done, and that's what we'd of course be doing if this wasn't a MIRI-published book.

I think you are underestimating the difficulty of getting endorsements like this. Like, I have seen many people in the AI Safety space try to get endorsements like this over the years, for many of their projects, and fail.

Now, how much is that evidence about the correctness of the book? Extremely little! But I also think that's not what Malo is excited about here. He is excited about the shift in the Overton window it might reflect, and I think that's pretty real, given the historical failure of people to get endorsements like this for many other projects.

Like, IDK, I am into a more prominent "filtered evidence disclaimer" somewhere in this post, just so that people don't make wrong updates, but even with the filtered evidence, I think for many people these endorsements are substantial updates.

> Now, how much is that evidence about the correctness of the book? Extremely little!

It might not be much evidence for LWers, who are already steeped in arguments and evidence about AI risk. It should be a lot of evidence for people newer to this topic who start with a skeptical prior. Most books making extreme-sounding (conditional) claims about the future don't have endorsements from Nobel-winning economists, former White House officials, retired generals, computer security experts, etc. on the back cover.

> And like, this is why it's normal epistemics to ignore the blurbs on the backs of books when evaluating their quality, no matter how prestigious the list of blurbers! Like that's what I've always done, that's what I imagine you've always done, and that's what we'd of course be doing if this wasn't a MIRI-published book.

If I see a book and I can't figure out how seriously I should take it, I will look at the blurbs.

Good blurbs from serious, discerning, recognizable people are not on every book, even books from big publishers with strong sales. I realize this is N=2, so update (or not) accordingly, but the first book I could think of that I knew had good sales but isn't actually good was The Population Bomb. I didn't find blurbs for that (I didn't look all that hard, though, and the book is pretty old, so it may not be a good check on today's publishing ecosystem anyway). The second book that came to mind was The Body Keeps the Score. The blurbs for that seem to be from a couple of respectable-looking psychiatrists I've never heard of.

Yeah, I think people usually ignore blurbs, but sometimes blurbs are helpful. I think strong blurbs are unusually likely to be helpful when your book has a title like If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All.

I second this. "If Anyone Builds It, Everyone Dies" triggers the "find out if this is insane crank horseshit" subroutine. And one of the quickest/strongest ways to negatively resolve that question is credible endorsements from well-known non-cranks.

Yep. And equally, the blurbs would be a lot less effective if the title were more timid and less stark.

Hearing that a wide range of respected figures endorse a book called If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All is a potential "holy shit" moment. If the same figures were endorsing a book with a vaguely inoffensive title like Smarter Than Us or The AI Crucible, it would spark a lot less interest (and concern).

I'd agree that this is to some extent playing the respectability game, but personally I'd be very happy for Eliezer and people to risk doing this too much rather than too little for once.

Schneier is also quite skeptical of the risk of extinction from AI. Here's a table o3 generated just now when I asked it for some examples.

| Date | Where he said it | What he said | Take-away |
|---|---|---|---|
| 1 June 2023 | Blog post “On the Catastrophic Risk of AI” (written two days after he signed the CAIS one-sentence “extinction risk” statement) | “I actually don’t think that AI poses a risk to human extinction. I think it poses a similar risk to pandemics and nuclear war — a risk worth taking seriously, but not something to panic over.” (schneier.com) | Explicitly rejects the “extinction” scenario, placing AI in the same (still-serious) bucket as pandemics or nukes. |
| 1 June 2023 | Same post, quoting his 2018 book Click Here to Kill Everybody | “I am less worried about AI; I regard fear of AI more as a mirror of our own society than as a harbinger of the future.” (schneier.com) | Long-standing view: most dangers come from how humans use technology we already have. |
| 9 Oct 2023 | Essay “AI Risks” (New York Times, reposted on his blog) | Warns against “doomsayers” who promote “Hollywood nightmare scenarios” and urges that we “not let apocalyptic prognostications overwhelm us.” (schneier.com) | Skeptical of the extinction narrative; argues policy attention should stay on present-day harms and power imbalances. |

Agreed. As a long-time reader of Schneier's blog, I was quite surprised by Schneier's endorsement, and I would have cited exactly those two essays. He's written a bunch of times about bad things that humans might intentionally use AI to do, talking about things like AI propaganda, AI-powered legal hacks, and AI spam clogging requests for public comments, but I would have described him as scornful of concerns about x-risk or alignment.

Aside from the usual suspects (people like Tegmark), we mostly sent the book to people following the heuristic "would an endorsement from this person be helpful?", much more so than "do we know that this person would like the book?". If you'd asked me individually about Church, Schneier, Bernanke, Shanahan, or Spaulding in advance, I'd have put most of my probability on "this person won't be persuaded by the book (if they read it at all) and will come away strongly disagreeing and not wanting to endorse". They seemed worth sharing the book with anyway, and then they ended up liking it (at least enough to blurb it) and some very excited MIRI slack messages ensued.

(I'd have expected Eddy to agree with the book, though I wouldn't have expected him to give a blurb; and I didn't know Wolfsthal well enough to have an opinion.)

Nate has a blog post coming out in the next few days that will say a bit more about "How filtered is this evidence?" (along with other topics), but my short answer is that we haven't sent the book to that many people, we've mostly sent it to people whose AI opinions we didn't know much about (and who we'd guess on priors would be skeptical to some degree), and we haven't gotten many negative reactions at all. (Though we've gotten people who just didn't answer our inquiries, and some of those might have read the book and disliked it enough to not reply.)

As far as I understand MIRI strategy, the point of the book is communication. It's written from the assumption that many people would be really worried if they knew its content, and that the main reason they are not worried now is that, before the book, this content existed mostly in Very Nerdy corners of the internet and was written in a very idiosyncratic style. The purpose I have in mind for a Pope endorsement is not to signal that the book is true; it's to move the topic of AI x-risk, for 2 billion Catholics, from "something weird discussed in Very Nerdy corners of the internet" to "the literal head of my church is interested in this stuff, maybe I should become interested too".

I'd be pretty surprised if this book was persuasive to almost any skeptic who had expressed opinions on the topic before; I think you should just take that as a given. Eliezer and Nate are just not very good at (and seemingly uninterested in) writing in ways that are persuasive to thoughtful skeptics. (Additionally, many AI x-risk skeptics are pretty dishonest/unreasonable IMO and thus hard to persuade.)

I think I somewhat disagree with this. My view is more like:

- The recent writings of Eliezer (and probably Nate?) are not very good at persuading thoughtful skeptics, seemingly in part due to not really trying to do this / being uninterested (see e.g. Eliezer's writing on X/twitter).

- Eliezer and Nate tried much harder to make this book persuasive (or at least not actively off-putting) to moderately thoughtful people who are more skeptical by default, including via mechanisms like having a bunch of test readers. I bet they didn't try hard to engage with the arguments of people who were skeptical and already had detailed views on the topic. So I expect "skeptics who had somewhat detailed views" will feel like the book doesn't even respond to their disagreements, let alone persuade them.

- In practice, I also expect minimal movement from "thoughtful skeptics", though maybe a bit more than Buck seems to suggest.

- However, this isn't really the point of the book I'd guess, the point (I think) is to be reasonably persuasive (and make initial arguments) to people who haven't really thought about the topic (including people who would be skeptical by default).

- I expect the book is much less off-putting to the target audience than stuff like Eliezer's writing on X/twitter, which will make it much more effective at its aims.

- I don't expect that this book will (e.g.) engage with my disagreements with Eliezer or why I'm much more optimistic.

[your comment leads me to believe you may not see why MIRI/LC-clustered folks disagreed with your comment but thumbs-upped Ryan, so it might be worthwhile for me to point out why I think that is]

The delta I see between the comments is:

Buck: almost any skeptic who had expressed opinions on the topic before

vs

Ryan: skeptics who had somewhat detailed views

'Almost any skeptic who has expressed opinions on the topic before' includes people like Francis Fukuyama, who is just a random public intellectual with no AI understanding and got kinda pressed to express a view on x-risk in an interview, so came out against it as a serious concern. Then he thought harder, and voila [more gradual disempowerment flavored, but still!]. I think the vast majority of people, both in gen pop and in powerful positions, are more like Francis than they are like Alex Turner.

So four hypotheses:

- You just agree with Ryan's narrower frame uncomplicatedly and your initial comment was a little strong.

- You think the rat/EA in-fights are the important thing to address, and you're annoyed the book won't do this.

- You think the arguments of the Very Optimistic are the important thing to address.

- William is just confused.

Not necessarily responding to the rest of your comment, but on the "four hypotheses" part:

> - You think the rat/EA in-fights are the important thing to address, and you're annoyed the book won't do this.
>
> - You think the arguments of the Very Optimistic are the important thing to address.

I'm not sure I buy that "rat/EA in-fights" and "Very Optimistic" are the relevant categories. I think there is a broad group of people who already have some views on AI risk, who are at-least-plausibly at-least-somewhat open-minded, and who could be useful allies if they either changed their minds or took their stated views more seriously.

Let me list some people / groups of people: Noam Brown, Matt Clifford, Boaz Barak, Jared Kaplan, Dario Amodei, Noam Shazeer, [a large group of AI/ML academics], Sayash Kapoor, Nat Friedman, Jake Sullivan, Dean Ball, [various people associated with progress studies], Bill Gates.

I'm not claiming people who didn't have any considered view on AI don't matter. But I think that in practice when trying to change the minds and actions of key people (in a way that will actually lead to productive actions etc), it's often important to either convince some more skeptical people who already have views and objections or to at least have solid arguments against their best objections. At a more basic level, to actually achieve frontier intellectual progress (e.g., settle action relevant disagreements about the level and nature of the risk between people who are already doing things), this is required. Maybe this book isn't the place to do this, but that isn't a crux for the point being made in this thread.

Yup, this is a good clarification and I see now that omitting this was an error. Thank you!

I think Jack Shanahan is a member of the reference class you're pointing at here, and I can say that there were others in this reference class who found the book compelling but not wholly convincing, who did not want to say so publicly (hopefully they start feeling comfortable talking more openly about the topic — that's the goal!).

There are also other resources we're currently developing, to release in tandem with the book, that should help with this leg of the conversation.

We are at least attempting to be attentive to this population as part of the overall book campaign, even if it seemed like too much to take on / not exactly the right target for the contents of the book itself.

IMO the real story here for 'how' is "the book is persuasive to a general audience." (People have made claims about the Overton window shifting--and I don't think there's 0 of that--but my guess is the book would have gotten roughly the same blurbs in 2021, and maybe even 2016.)

But the social story for how is that I grew up in the DC area, one of my childhood friends is the son of an economist who is not a household name but is prominent enough that all of the household name economists know him. (This is an interesting position to be in--I feel like I'm sort of in this boat with the rationality community.) We play board games online every week and so when the blurb hunt started I got him an advance copy, and he was hooked enough to recommend it to Ben (and others, I think).

(I share this story in part b/c I think it's cool, but also because I think lots of people are ~2 hops away from some cool people and could discover this with a bit of thought and effort.)

This might be an ignorant question, but, since you bring up how many people are within 2 hops of other people, can you ask your friend to ask Bernanke to ask Obama to read the book?

Consider making public a progress bar with the (approximate) number of preorders, with the 20,000 goal as the end point. Having explicit goals that everyone can optimize for can help people get a sense of whether it's worth investing marginal effort, and can motivate people to spread the word.

I suggest restoring "This is the current forefront of both philosophy and political theory. I don't say this lightly" to Grimes's blurb.

But anyhow: congratulations. Exciting. Bullish for the human race. Bullish for lightcone value.

I don't have any connections I can offer to any of the following, but they would be more worth a shot than average: Marjorie Taylor Greene, John Fetterman, Joe Rogan, Rishi Sunak, Leo XIV, or Ecumenical Patriarch Bartholomew.

I think what is going on is something like what Scott describes in "Can Things Be Both Popular And Silenced?":

> 6. Celebrity helps launder taboo ideology. If you believe Muslim immigration is threatening, you might not be willing to say that aloud – especially if you’re an ordinary person who often trips on their tongue, and the precise words you use are the difference between “mainstream conservative belief” and “evil bigot who must be fired immediately”. Saying “I am really into Sam Harris” both leaves a lot of ambiguity, and lets you outsource the not-saying-the-wrong-word-and-getting-fired work to a professional who’s good at it. In contrast, if your belief is orthodox and you expect it to win you social approval, you want to be as direct as possible.

I don't think that admitting to believe in AI X-risk would get you labelled as evil, but it could possibly get you labelled as crazy (which is maybe worse than evil for intellectuals?). To be able to publicly admit to that view, you either have to be able to argue for it really well, or be able to outsource that arguing to someone else.

I don't know why this is happening with this book in particular, though, since there are currently lots of books, blog posts, YouTube videos, etc. that explain AI X-risk. Maybe none of the previous work was good enough? Or respectable enough? Or advertised enough?

Are any of these people willing to speak about it on a podcast or on TV?

Geoffrey Hinton popularised AI risk a lot because he was willing to speak on TV.

Do we need to do anything special to get invited to preorderer-only events? I preordered the hardcover on May 14th and was not aware of the Q&A (although perhaps I needed to preorder sooner :) or just do a better job of paying attention to my email inbox :) ).

We're still working out some details on the preorder events; we'll have an announcement with more info on LessWrong, the MIRI Newsletter, and our Twitter in the next few weeks.

You don't have to do anything special to get invited to preorder-only events. :) In the case of Nate's LessOnline Q&A, it was a relatively small in-person event for LessOnline attendees who had preordered the book; the main events we have planned for the future will be larger and online, so more people can participate without needing to be in the Bay Area.

(Though we're considering hosting one or more in-person events at some point in the future; if so, those would be advertised more widely as well.)

FYI the link for preordering currently contains two concatenated instances of the URL (which fails relatively gracefully, though requires an extra click since it takes you to the top of the site for the book -- which thankfully then conveniently also contains a link to the preorder section -- rather than directly to the preorder section itself).

Slightly derailing the conversation from the OP: I came across this variant on German Amazon: https://www.amazon.de/Anyone-Builds-Everyone-Dies-Superintelligent/dp/1847928935/

It notably has a different number of pages (32 more) and a different publisher. Is this just a different (earlier?) version of the book, or is this a scam?

Not a scam. Bodley Head is handling the UK publishing, which (I guess?) also includes the English edition on German Amazon.

The general pipeline for the UK version (and squaring of all the storefronts) is lagging behind the US equivalents.

Also oddly, the US version is on many of Amazon's international stores including the German store ¯\_(ツ)_/¯

Eliezer's look has been impressively improved recently

I think updating his Amazon picture to one of the more recent pictures would be quite quick and would increase the chances of people buying the book.

Seconded. The new hat and the pointier, greyer beard have taken him from "internet atheist" to "world-weary Jewish intellectual." We need to be sagemaxxing. (Similarly, Nate-as-seen-on-Fox-News is way better than Nate-as-seen-at-Google-in-2017.)

Unrelated, but where on earth is the scene pictured at the bottom of https://ifanyonebuildsit.com/praise ? It doesn't really look like any recognizable night image, so I suspect it's AI-generated, but maybe I'm wrong.

I understand that book publishing is necessarily slow (even for ebooks), but I feel like I'm ordering a copy of "Before Your Preorder Even Arrives, Everyone Dies"

Edit: I commented before reading the post :/. I'm glad that lots of important people have seen it before the release. Now that I think about it, it actually makes sense to keep the book contents private for some time before the release, because the VIPs given an early copy will feel like they're getting something available to no one except them. They'll be more curious what's inside and consider it more special than a random book from the bookstore.

Hmm. I see. It's a weird sentence, to call a person a "disaster precedent". It would work better with a simple rearrangement:

"Memorable storytelling about past disasters highlights why top thinkers (e.g. the inventor of two environmental nightmares: tetra-ethyl-lead gasoline and Freon) so often don’t see the catastrophes they create."

But I suppose one does not simply edit a quote.

The whole AI safety concern implies that the intersection of "thinkers" and "disasters" is non-null, yes?

Nate and Eliezer’s forthcoming book has been getting a remarkably strong reception.

I was under the impression that there are many people who find the extinction threat from AI credible, but that far fewer of them would be willing to say so publicly, especially by endorsing a book with an unapologetically blunt title like If Anyone Builds It, Everyone Dies.

That’s certainly true, but I think it might be much less true than I had originally thought.

Here are some endorsements the book has received from scientists and academics over the past few weeks:

—George Church, Founding Core Faculty, Synthetic Biology, Wyss Institute, Harvard University

—Bruce Schneier, Lecturer, Harvard Kennedy School

—Ben Bernanke, Nobel-winning economist; former Chairman of the U.S. Federal Reserve

George Church is one of the world’s top genetics researchers; he developed the first direct genomic sequencing method in 1984, sequenced the first genome (E. coli), helped start the Human Genome Project, and played a central role in making CRISPR useful for biotech applications.

Bruce Schneier is the author of Schneier on Security and a leading computer security expert. He wrote what was, as far as I can tell, the first ever applied cryptography textbook, which had a massive influence on the field.

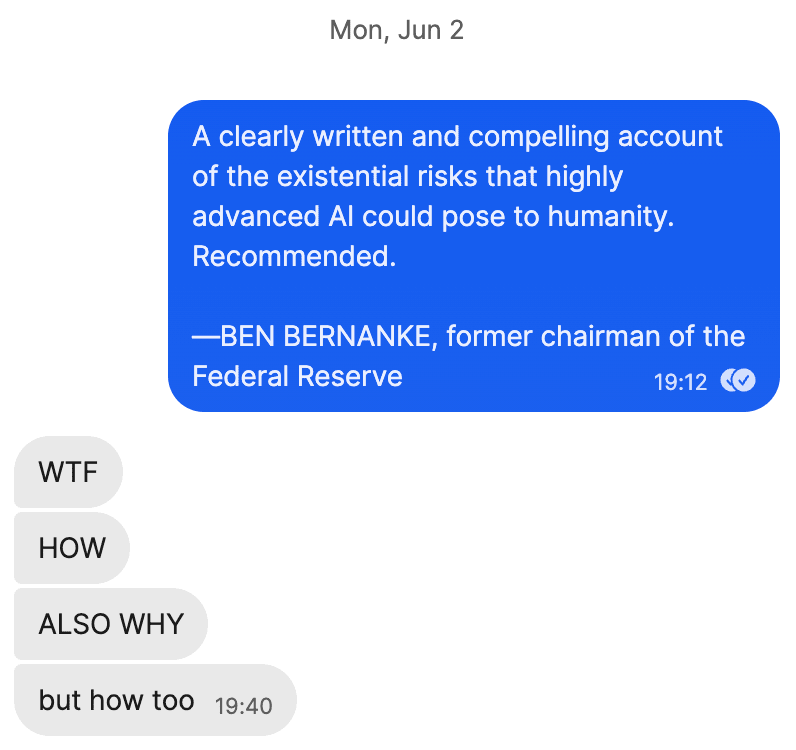

We have some tangential connections to Church and Schneier. But Ben Bernanke? The Princeton macroeconomist who was the chair of the Federal Reserve under Bush and Obama? He definitely wasn’t on my bingo card.

I was even more surprised by the book’s reception among national security professionals:

—Jon Wolfsthal, former Special Assistant to the President for National Security Affairs; former Senior Director for Arms Control and Nonproliferation, White House, National Security Council

—Lieutenant General John (Jack) N.T. Shanahan (USAF, Ret.), Inaugural Director, Department of Defense Joint AI Center

—R.P. Eddy, former Director, White House, National Security Council

—Suzanne Spaulding, former Under Secretary, Department of Homeland Security

Jack Shanahan is a retired three-star general who helped build and run the Pentagon’s Joint AI Center—the coordinating hub for bringing AI to every branch of the U.S. military. He’s a highly respected voice in the national security community, and various folks I know in D.C. expressed surprise that he provided us with a blurb at all.

Suzanne Spaulding is the former head of what’s now the Cybersecurity and Infrastructure Security Agency (CISA), the U.S. government’s main agency for cybersecurity and for critical infrastructure security and resilience. Per her bio, she has “worked in the executive branch in Republican and Democratic administrations and on both sides of the aisle in Congress” and was also “executive director of two congressionally created commissions, on weapons of mass destruction and on terrorism.”

R.P. Eddy served as Director of the White House National Security Council during Bill Clinton’s administration, and has a background in counterterrorism.

Jon Wolfsthal was the Obama administration’s senior nuclear security advisor, and now serves as the Director of Global Risk at the Federation of American Scientists, a highly respected think tank. He also enthusiastically shared our book announcement on LinkedIn.

The book also got some strong blurbs from prominent people who were already on the record as seriously worried about smarter-than-human AI. Max Tegmark tweeted that he thought this was the “most important book of the decade.” Bart Selman, a Cornell Professor of Computer Science and principal investigator at the Center for Human-Compatible AI, wrote that he felt this was “a groundbreaking text” and “essential reading for policymakers, journalists, researchers, and the general public.” Other positive blurbs came from people like Huw Price, Tim Urban, Scott Aaronson, Daniel Kokotajlo, and Scott Alexander. These folks have been fighting the good fight for years if not decades. The book team has assembled a collection of these endorsements and others[1] at ifanyonebuildsit.com/praise.

I personally passed advance copies of the manuscript to many people (including many of those above) over the past couple of months, and it wasn’t all enthusiasm. For example, among members of the U.S. legislative and executive branches (and their staff) that I’ve spoken to in recent weeks, many have declined to comment publicly, often despite giving private praise. We still have a long way to go before a critical mass of policymakers and public servants feel ready to discuss this issue openly.[2] But it seems meaningfully more possible to me that we can get there.

When I read a late draft of the book, I remember thinking that it turned out pretty good. But only in the last couple of months, as reactions have started to come in from early readers, has it started to sink in for me that this book might be a game-changer. Not just because the book is a timely resource about an urgent issue, but because it’s actually landing.

If you’re excited by this and want to support the book, then preordering—and encouraging others to preorder—continues to be exceptionally helpful.[3] It’s still far from certain that the book is going to make bestseller lists, but it’s off to a very promising start. Some of the publisher’s folks expressed surprise at the strong early preorders, even though they already expected the book to be a big deal. It remains to be seen how much we can keep up the momentum, vs. how much of that is due to an initial and unreplicable boost. We have already done one event exclusive to people who have preordered the book (a Q&A that Nate gave at the LessOnline conference) and are planning to do more such events online.

Aside from that, we’re now starting to line up media engagements (podcasts, YouTube videos, interviews, etc.) for release when (and shortly after) the book comes out in mid-September. If you run a major podcast (or some other form of media with a large audience) or you can put us in touch with someone who does, please reach out to us at media@intelligence.org.

The team at MIRI is humbled and grateful that so many serious voices in science, academia, policy, and other areas have come forward to help signal-boost this book.

It seems like we have a real chance. LFG.

The book has also received endorsements from entertainers like Stephen Fry, Mark Ruffalo, Patton Oswalt, and Grimes; from Yishan Wong, former CEO of Reddit; from Emmett Shear, who was briefly the interim CEO of OpenAI; and from additional early reviewers.

We’re quite limited in how many advance copies we can circulate, but consider DMing us if you can put us in touch with especially important public figures, policy analysts, and policymakers who should probably see the book as soon as possible.

If you want to buy an extra copy (or, e.g., 5 or 10 copies) for friends and family who would be interested in reading the book, that’s potentially a big help too.

Nate’s previous post warned that “bulk purchases” don’t help, in a way that worried some people unduly. To clarify what that means, based on our current understanding: orders in the range of 5–10 are generally fine. We think it’s a bad idea to buy copies purely to inflate the sales numbers. (Though if you have a legitimate reason for wanting to preorder a large number of copies, e.g., for an event or something, reach out to us at contact@intelligence.org and we can direct you to the proper channels to make such preorders.) But we encourage you to buy copies for friends and family if you want to. Ultimately, you should buy as many books as you want to actually buy and use, and not buy books if they’re just going to end up in dumpsters or as monitor stands.