Introspection via localization

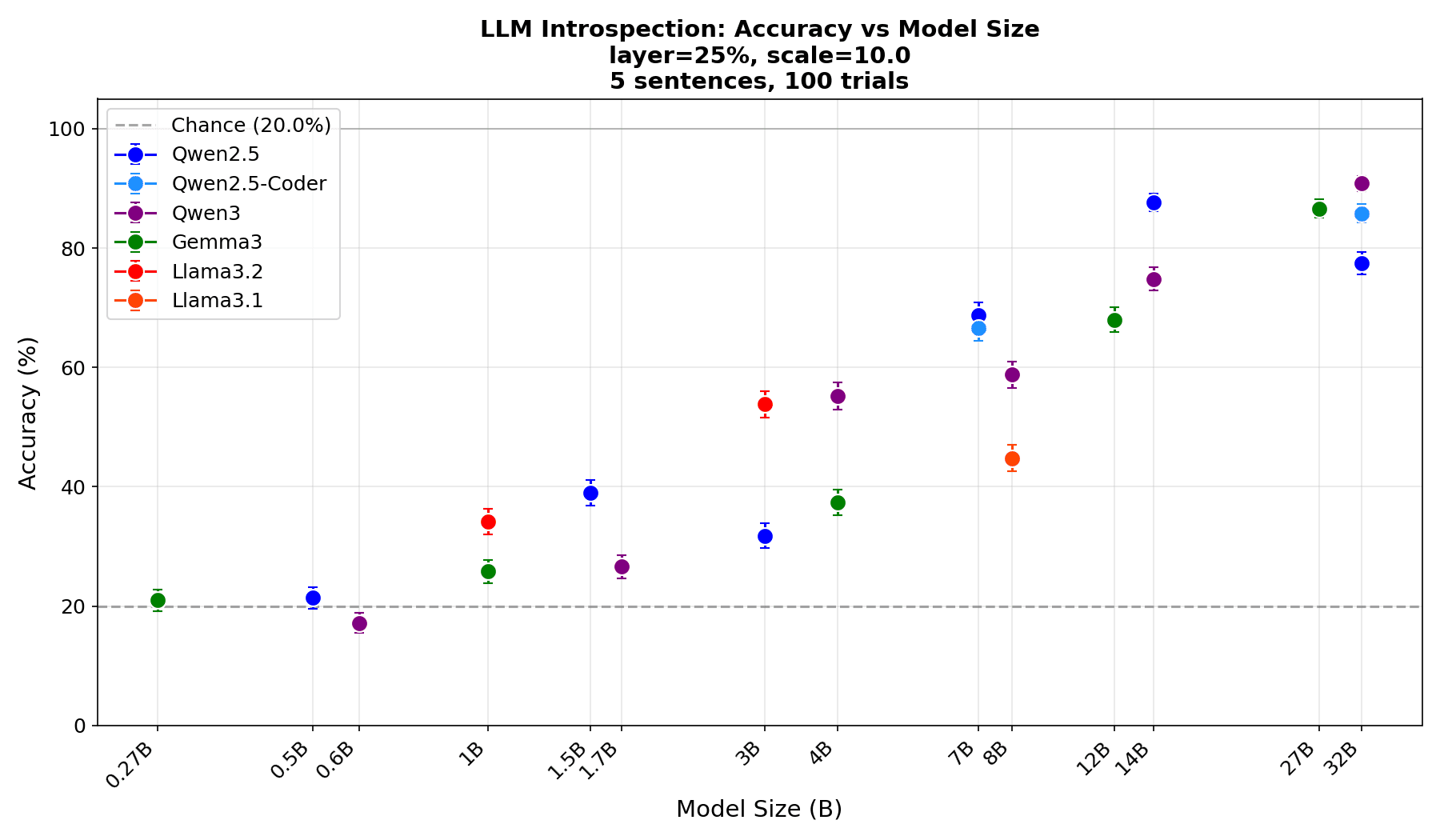

Recently, Anthropic found evidence that language models can "introspect", i.e. detect changes in their internal activations.[1] This result was later reproduced in smaller open-weight models.[2][3] One drawback of the experimental protocol is that it can be hard to disentangle the introspection effect from steering noise, especially in small models.[4] In this...

Thanks for running this! For me, this confirms that the LLM has some introspective awareness. It makes sense that it would perceive the injected thought as "more abstract" or "standing out". It would be interesting to try many different questions to map exactly how it perceives the injection.