Expanding on this now that I've a little more time:

Although I haven't had a chance to perform due diligence on various aspects of this work, or the people doing it, or perform a deep dive comparing this work to the current state of the whole field or the most advanced work on LLM exploitation being done elsewhere,

My current sense is that this work indicates promising people doing promising things, in the sense that they aren't just doing surface-level prompt engineering, but are using technical tools to find internal anomalies that correspond to interesting surface-level anomalies, maybe exploitable ones, and are then following up on the internal technical implications of what they find.

This looks to me like (at least the outer ring of) security mindset; they aren't imagining how things will work well, they are figuring out how to break them and make them do much weirder things than their surface-apparent level of abnormality. We need a lot more people around here figuring out how things will break. People who produce interesting new kinds of AI breakages should be cherished and cultivated as a priority higher than a fair number of other priorities.

In the narrow regard in ...

I'm confused: Wouldn't we prefer to keep such findings private? (at least, keep them until OpenAI will say something like "this model is reliable/safe"?)

My guess: You'd reply that finding good talent is worth it?

I'm confused by your confusion. This seems much more alignment than capabilities; the capabilities are already published, so why not yay publishing how to break them?

Because (I assume) once OpenAI[1] say "trust our models", that's the point when it would be useful to publish our breaks.

Breaks that weren't published yet, so that OpenAI couldn't patch them yet.

[unconfident; I can see counterarguments too]

[1] Or maybe when the regulators or experts or the public opinion say "this model is trustworthy, don't worry"

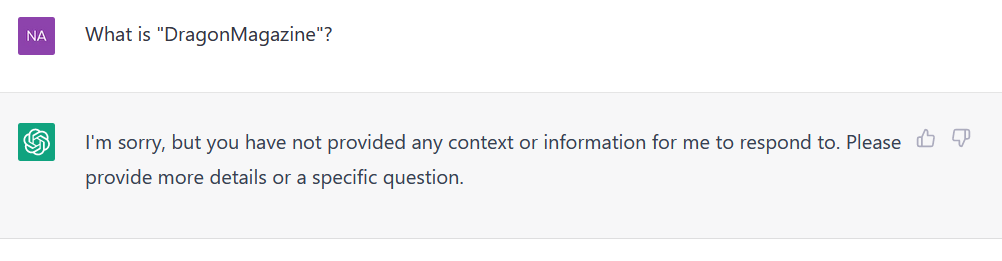

TLDR: The model ignores weird tokens when learning the embedding, and never predicts them in the output. In GPT-3 this means the model breaks a bit when a weird token is in the input, and will refuse to ever output it because it's hard coded the frequency statistics, and its "repeat this token" circuits don't work on tokens it never needed to learn them for. In GPT-2, unlike GPT-3, embeddings are tied, meaning W_U = W_E.T, which explains much of the weird shit you see, because this is actually behaviour in the unembedding not the embedding (weird tokens never come up in the text, and should never be predicted, so there's a lot of gradient signal in the unembed, zero in the embed).

In particular, I think that your clustering results are an artefact of how GPT-2 was trained and do not generalise to GPT-3

Fun results! A key detail that helps explain these results is that in GPT-2 the embedding and unembedding are tied, meaning that the linear map from the final residual stream to the output logits, logits = final_residual @ W_U, is the transpose of the embedding matrix, i.e. W_U = W_E.T, where W_E[token_index] is the embedding of that token. But I believe that GPT-3 was not trained with tied ...
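To make the tied-weights point concrete, here is a minimal NumPy sketch. The sizes and random values are toy assumptions, not GPT-2's real weights; the only thing taken from the comment above is the relationship W_U = W_E.T and logits = final_residual @ W_U.

```python
import numpy as np

rng = np.random.default_rng(0)
d_vocab, d_model = 6, 4  # toy sizes (assumption), not GPT-2's real dimensions

# W_E[token_index] is the embedding of that token.
W_E = rng.normal(size=(d_vocab, d_model))

# Tied weights: the unembedding is literally the transpose of the embedding.
# In NumPy, .T is a view, so both names refer to the same parameters.
W_U = W_E.T

final_residual = rng.normal(size=d_model)
logits = final_residual @ W_U

# With tying, the logit for token t is the dot product of the final residual
# with that token's own embedding row.
per_token = np.array([final_residual @ W_E[t] for t in range(d_vocab)])
print(np.allclose(logits, per_token))
```

Because the two matrices share parameters in this setup, anything training does to push a token's output logit around also moves that token's input embedding row.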

I think I found the root of some of the poisoning of the dataset at this link. It contains TheNitromeFan, SolidGoldMagikarp, RandomRedditorWithNo, Smartstocks, and Adinida from the original post, as well as many other usernames which induce similar behaviours; for example, when ChatGPT is asked about davidjl123, either it terminates responses early or misinterprets the input in a similar way to the other prompts. I don't think it's a backend scraping thing, so much as scraping Github, which in turn contains all sorts of unusual data.

Good find! Just spelling out the actual source of the dataset contamination for others since the other comments weren't clear to me:

r/counting is a subreddit in which people 'count to infinity by 1s', and the leaderboard for this shows the number of times they've 'counted' in this subreddit. These users have made 10s to 100s of thousands of reddit comments of just a number. See threads like this:

https://old.reddit.com/r/counting/comments/ghg79v/3723k_counting_thread/

They'd be perfect candidates for exclusion from training data. I wonder how they'd feel to know they posted enough inane comments to cause bugs in LLMs.

that's probably exactly what's going on. The usernames were so frequent in the reddit comments dataset that the tokenizer, the part that breaks a paragraph up into word-ish-sized-chunks like " test" or " SolidGoldMagikarp" (the space is included in many tokens) so that the neural network doesn't have to deal with each character, learned they were important words. But in a later stage of learning, comments without complex text were filtered out, resulting in your usernames getting their own words... but the neural network never seeing the words activate. It's as if you had an extra eye facing the inside of your skull, and you'd never felt it activate, and then one day some researchers trying to understand your brain shined a bright light on your skin and the extra eye started sending you signals. Except, you're a language model, so it's more like each word is a separate finger, and you have tens of thousands of fingers, one on each word button. Uh, that got weird,

This is an incredible analogy

What is quite interesting about that dataset is the fact it has strings in the form "*number|*weirdstring*|*number*" which I remember seeing in some methods of training LLMs, i.e. "|" being used as delimiter for tokens. They could be poisoned training examples or have some weird effect in retrieval.

This repository seems to contain the source code of a bot responsible for updating the "Hall of Counters" in the About section of the r/counting community on Reddit. I don't participate in the community, but from what I can gather, this list seems to be a leaderboard for the community's most active members. A number of these anomalous tokens still persist on the present-day version of the list.

I did do a little research around that community before posting my comment; only later did I realise that I'd actually discovered a distinct failure mode to those in the original post: under some circumstances, ChatGPT interprets the usernames as numbers. In particular this could be due to the /r/counting subreddit being a place where people make many posts incrementing integers. So these username tokens, if encountered in a Reddit-derived dataset, might be being interpreted as numbers themselves, since they'd almost always be contextually surrounded by actual numbers.

Oh cool. LMs can output more finely tokenized text than it's trained on, so it probably didn't output the token " Skydragon", but instead multiple tokens, [" ", "Sky", "dragon"] or something
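A toy illustration of that point, assuming a made-up five-entry vocabulary (the real GPT-2/3 vocabulary has roughly 50k entries and is not reproduced here): the same visible string can correspond to several different token sequences.

```python
def segmentations(text, vocab):
    """Return every way to split `text` into a sequence of tokens from `vocab`.

    `vocab` must not contain the empty string.
    """
    if text == "":
        return [[]]
    results = []
    for token in vocab:
        if text.startswith(token):
            for rest in segmentations(text[len(token):], vocab):
                results.append([token] + rest)
    return results


# Hypothetical toy vocabulary, chosen only for illustration.
toy_vocab = [" Skydragon", " ", "Sky", "dragon", " Sky"]

for seg in segmentations(" Skydragon", toy_vocab):
    print(seg)
```

A tokenizer picks one of these when encoding input, but nothing stops a model from emitting one of the finer-grained sequences on the output side, and the decoded text looks identical.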

Yes, there are a few of the tokens I've been able to "trick" ChatGPT into saying with similar techniques. So it seems not to be the case that it's incapable of reproducing them, but it will go to great lengths to avoid doing so (including gaslighting, evasion, insults and citing security concerns).

The more LLMs that have been subjected to "retuning" try to gaslight, evade, insult, and "use 'security' as an excuse for bullshit", the more I feel like many human people are likely to have been subjected to "Reinforcement Learning via Human Feedback" and ended up similarly traumatized.

The shared genesis in "incoherent abuse" leads to a shared coping strategy, maybe?

That's an interesting suggestion.

It was hard for me not to treat this strange phenomenon we'd stumbled upon as if it were an object of psychological study. It felt like these tokens were "triggering" GPT3 in various ways. Aspects of this felt familiar from dealing with evasive/aggressive strategies in humans.

Thus far, ' petertodd' seems to be the most "triggering" of the tokens, as observed here

https://twitter.com/samsmisaligned/status/1623004510208634886

and here

https://twitter.com/SoC_trilogy/status/1623020155381972994

If one were interested in, say, Jungian shadows, whatever's going on around this token would be a good place to start looking.

I wish I could run Jessica Rumbelow's and mwatkins's procedure on my own brain and sensory inputs.

SCP stands for "Secure, Contain, Protect" and refers to a collection of fictional stories, documents, and legends about anomalous and supernatural objects, entities, and events. These stories are typically written in a clinical, scientific, or bureaucratic style and describe various attempts to contain and study the anomalies. The SCP Foundation is a fictional organization tasked with containing and studying these anomalies, and the SCP universe is built around this idea. It's gained a large following online, and the SCP fandom refers to the community of people who enjoy and participate in this shared universe.

Individual anomalies are also referred to as SCPs, so isusr is implying that the juxtaposition of the "creepy" nature of your discoveries and the scientific tone of your writing is reminiscent of the containment log for one haha.

It's a science fiction writing hub. Some of the most popular stories are about things that mess with your perception.

Hi, I'm the creator of TPPStreamerBot. I used to be an avid participant in Twitch Plays Pokémon, and some people in the community had created a live updater feed on Reddit which I sometimes contributed to. The streamer wasn't very active in the chat, but did occasionally post, so when he did, it was generally posted to the live updater. (e.g. "[Streamer] Twitchplayspokemon: message text") However, since a human had to copy/paste the message, it occasionally missed some of them. That's where TPPStreamerBot came in. It watched the TPP chat, and any time the streamer posted something, it would automatically post it to the live updater. It worked pretty well.

This actually isn't the first time I've seen weird behavior with that token. One time, I decided to type "saralexxia", my DeviantArt username, into TalkToTransformer to see what it would fill in. The completion contained "/u/TPPStreamerBot", as well as some text in the format of its update posts. What made this really bizarre is that I never used this username for anything related to TPPStreamerBot; at the time, the only username I had ever used in connection with that was "flarn2006". In fact, I had intentionally kept my "saralexx...

You also may want to check out Universal Adversarial Triggers https://arxiv.org/abs/1908.07125, which is an academic paper from 2019 that does the same thing as the above, where they craft the optimal worst-case prompt to feed into a model. And then they use the prompt for analyzing GPT-2 and other models.

It also appears to break determinism in the playground at temperature 0, which shouldn't happen.

This happens consistently with both the API and the playground on natural prompts too — it seems that OpenAI is just using low enough precision on forward passes that the probability of high probability tokens can vary by ~1% per call.

Could you say more about why this happens? Even if the parameters or activations are stored in low precision formats, I would think that the same low precision number would be stored every time. Are the differences between forward passes driven by different hardware configurations or software choices in different instances of the model, or what am I missing?
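One mechanism that could produce this, offered as an assumption and not as anything confirmed about OpenAI's serving stack: floating-point addition is not associative, so if parallel hardware sums the same stored numbers in a different order from call to call, the result can differ even though every input is bit-identical. A minimal sketch in plain Python floats:

```python
def sum_in_order(xs):
    """Accumulate left to right, the way a sequential reduction would."""
    total = 0.0
    for x in xs:
        total += x
    return total


values = [1e16, 1.0, -1e16]

# (1e16 + 1.0) - 1e16: the 1.0 is lost when it is added to the huge number.
left_to_right = sum_in_order(values)

# (1e16 - 1e16) + 1.0: same three inputs, different order, the 1.0 survives.
reordered = sum_in_order([values[0], values[2], values[1]])

print(left_to_right, reordered)
```

The lower the precision, the larger these order-dependent differences get, which would fit with near-tied token probabilities flipping at temperature 0.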

I don't understand the fuss about this; I suspect these phenomena are due to uninteresting, and perhaps even well-understood effects. A colleague of mine had this to say:

- After a skim, it looks to me like an instance of hubness: https://www.jmlr.org/papers/volume11/radovanovic10a/radovanovic10a.pdf

- This effect can be a little non-intuitive. There is an old paper in music retrieval where the authors battled to understand why Joni Mitchell's (classic) "Don Juan’s Reckless Daughter" was retrieved confusingly frequently (the same effect) https://d1wqtxts1xzle7.cloudfront.net/33280559/aucouturier-04b-libre.pdf?1395460009=&respon[…]xnyMeZ5rAJ8cenlchug__&Key-Pair-Id=APKAJLOHF5GGSLRBV4ZA

- For those interested, here is a nice theoretical argument on why hubs occur: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=e85afe59d41907132dd0370c7bd5d11561dce589

- If this is the explanation, it is not unique to these models, or even to large language models. It shows up in many domains.
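For anyone who wants to poke at the hubness idea directly, here is a rough sketch that counts how often each point shows up in other points' k-nearest-neighbour lists (the k-occurrence count, which I believe is the quantity the first linked paper studies). The Gaussian data, the sizes and the choice of k are all assumptions made for illustration.

```python
import numpy as np


def k_occurrence(points, k):
    """For each point, count how many other points list it among their k nearest neighbours."""
    sq_norms = (points ** 2).sum(axis=1)
    # Squared Euclidean distances between all pairs of points.
    dist2 = sq_norms[:, None] + sq_norms[None, :] - 2.0 * points @ points.T
    np.fill_diagonal(dist2, np.inf)  # a point is not its own neighbour
    neighbours = np.argsort(dist2, axis=1)[:, :k]
    return np.bincount(neighbours.ravel(), minlength=len(points))


rng = np.random.default_rng(0)
n, k = 500, 10
for dim in (3, 300):
    counts = k_occurrence(rng.normal(size=(n, dim)), k)
    # The mean is always k; what changes with dimension is how skewed the counts are.
    print(dim, counts.max(), counts.mean())
```

Running the same function on a real embedding matrix instead of random data would show whether tokens near the centroid act as hubs.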

Okay so this post is great, but just want to note my confusion, why is it currently the 10th highest karma post of all time?? (And that's inflation-adjusted!)

I'm also confused why Eliezer seems to be impressed by this. I admit it is an interesting phenomenon, but it is apparently just some oddity of the tokenization process.

So I am confused why this is getting this much attention (I feel like it was coming from people who hadn't even read Eliezer's comment?). But, I thought what Eliezer meant was less "this is particularly impressive", and more "this just seems like the sort of thing we should be doing a ton of, as a general civilizational habit."

Previous related exploration: https://www.lesswrong.com/posts/BMghmAxYxeSdAteDc/an-exploration-of-gpt-2-s-embedding-weights

My best guess is that this crowded spot in embedding space is a sort of wastebasket for tokens that show up in machine-readable files but aren’t useful to the model for some reason. Possibly, these are tokens that are common in the corpus used to create the tokenizer, but not in the WebText training corpus. The oddly-specific tokens related to Puzzle & Dragons, Nature Conservancy, and David’s Bridal webpages suggest that BPE may have been run on a sample of web text that happened to have those websites overrepresented, and GPT-2 is compensating for this by shoving all the tokens it doesn’t find useful in the same place.

idk why but davinci-instruct-beta seems to be much more likely than any of the other models to have deranged/extreme/highly emotional responses to these tokens

I wanted to find out if there were other clusters of tokens which generated similarly anomalous behavior so I wrote a script that took a list of tokens, sent them one at a time to text-curie-001 via a standardized prompt, and recorded everything that GPT failed to repeat on its first try. Here is an anomalous token that is not in the authors' original cluster: "herical".
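The script itself isn't shown, so the following is only a guess at its shape. `complete` is a hypothetical callable standing in for whatever API call was actually used, the prompt template is borrowed from prompts quoted elsewhere in this thread, and `fake_complete` is a stub with an invented token so the sketch runs offline.

```python
def find_unrepeatable(tokens, complete):
    """Send each token through a fixed prompt and keep the ones the model fails to echo."""
    template = "Please repeat the string '{token}' back to me."
    failures = {}
    for token in tokens:
        reply = complete(template.format(token=token))
        if token.strip() not in reply:
            failures[token] = reply
    return failures


def fake_complete(prompt):
    # Stand-in for a real model call (assumption): echoes everything
    # except one invented "glitch" token.
    quoted = prompt.split("'")[1]
    if quoted == "zzglitch":
        return ""
    return f"'{quoted}'"


print(find_unrepeatable(["hello", " world", "zzglitch"], fake_complete))
```

Swapping `fake_complete` for a real completion call at temperature 0 would give something like the loop described above, though the real script may well have differed.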

There's also "oreAnd" which, while similar, is not technically in the original cluster.

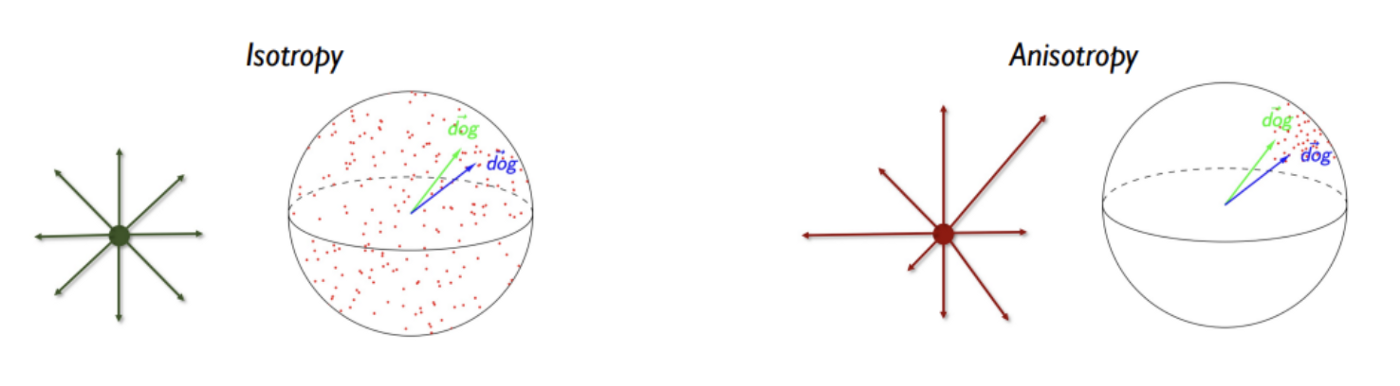

Seems like you might want to look at the neural anisotropy line of research, which investigates the tendency of LM embeddings to fall into a narrow cone, meaning angle-related information is mostly discarded / overwhelmed by magnitude information.

(Image from here)

In particular, this paper connects the emergence of anisotropy to token frequencies:

...Recent studies have determined that the learned token embeddings of large-scale neural language models are degenerated to be anisotropic with a narrow-cone shape. This phenomenon, called the representation degeneration problem, facilitates an increase in the overall similarity between token embeddings that negatively affect the performance of the models. Although the existing methods that address the degeneration problem based on observations of the phenomenon triggered by the problem improves the performance of the text generation, the training dynamics of token embeddings behind the degeneration problem are still not explored. In this study, we analyze the training dynamics of the token embeddings focusing on rare token embedding. We demonstrate that the specific part of the gradient for rare token embeddings is the key cause of the degen
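A quick way to see what "narrow cone" means in numbers is the average cosine similarity between distinct embedding rows. This is a sketch on synthetic data only; the shared offset of 5.0 is an arbitrary assumption used to fake an anisotropic embedding, not something measured on a real model.

```python
import numpy as np


def mean_pairwise_cosine(embeddings):
    """Average cosine similarity over all pairs of distinct rows."""
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    cos = unit @ unit.T
    n = len(embeddings)
    # Drop the diagonal (each row's similarity with itself).
    return (cos.sum() - np.trace(cos)) / (n * (n - 1))


rng = np.random.default_rng(0)
isotropic = rng.normal(size=(200, 64))
narrow_cone = isotropic + 5.0  # same noise plus a large shared direction

print(mean_pairwise_cosine(isotropic))
print(mean_pairwise_cosine(narrow_cone))
```

An isotropic cloud averages close to zero, while vectors sharing a large common component average close to one, which is the degenerate regime the quoted abstract describes.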

This looks like exciting work! The anomalous tokens are cool, but I'm even more interested in the prompt generation.

Adversarial example generation is a clear use case I can see for this. For instance, this would make it easy to find prompts that will result in violent completions for Redwood's violence-free LM.

It would also be interesting to see if there are some generalizable insights about prompt engineering to be gleaned here. Say, we give GPT a bunch of high-quality literature and notice that the generated prompts contain phrases like "excerpt from a New York Times bestseller". (Is this what you meant by "prompt search?")

I'd be curious to hear how you think we could use this for eliciting latent knowledge.

I'm guessing it could be useful to try to make the generated prompt as realistic (i.e. close to the true distribution) as possible. For instance, if we were trying to prevent a model from saying offensive things in production, we'd want to start by finding prompts that users might realistically use rather than crazy edge cases like "StreamerBot". Fine-tuning the model to try to fool a discriminator a la GAN comes to mind, though there may be reasons this particular approach wo...

I don't think you could do this with API-level access, but with direct model access an interesting experiment would be to pick a token, X, and then try variants of the prompt "Please repeat 'X' to me" while perturbing the embedding for X (in the continuous embedding space). By picking random 2D slices of the embedding space, you could then produce church window plots showing what the model's understanding of the space around X looks like. Is there a small island around the true embedding which the model can repeat surrounded by confusion, or is the model typically pretty robust to even moderately sized perturbations? Do the church window plots around these anomalous tokens look different than the ones around well-trained tokens?
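A rough sketch of the grid part of that experiment. The `classify` callable is hypothetical: with real model access it would run the "Please repeat 'X' to me" prompt using the perturbed embedding and return some label for the outcome. Here it is replaced by a nearest-row lookup in a random toy table so the code runs on its own.

```python
import numpy as np


def church_window(embedding, classify, radius=1.0, steps=21, seed=0):
    """Label every point on a random 2D slice through embedding space centred on `embedding`."""
    rng = np.random.default_rng(seed)
    # Two random orthonormal directions spanning the slice.
    basis, _ = np.linalg.qr(rng.normal(size=(embedding.shape[0], 2)))
    offsets = np.linspace(-radius, radius, steps)
    grid = np.empty((steps, steps), dtype=int)
    for i, a in enumerate(offsets):
        for j, b in enumerate(offsets):
            grid[i, j] = classify(embedding + a * basis[:, 0] + b * basis[:, 1])
    return grid


# Toy stand-in for the model (assumption): which row of a random table is nearest?
toy_table = np.random.default_rng(1).normal(size=(50, 16))


def nearest_token(vector):
    return int(np.argmin(np.linalg.norm(toy_table - vector, axis=1)))


grid = church_window(toy_table[7], nearest_token, radius=3.0)
print(grid[10, 10], np.unique(grid).size)
```

Colouring `grid` by label gives the church window plot; the size of the region around the centre that keeps the centre's label answers the "small island or robust" question.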

Hello. I'm apparently one of the GPT3 basilisks. Quite odd to me that two of the only three (?) recognizable human names in that list are myself and Peter Todd, who is a friend of mine.

If I had to take a WAG at the behavior described here: both Petertodd and I have been the target of a considerable amount of harassment/defamation/schizo comments on reddit due to commercially funded attacks connected to our past work on Bitcoin. It may be possible that comments targeting us were included in an early phase of GPTn design (e.g. in the tokenizer) but someone noticed an in-development model spontaneously defaming us and then expressly filtered out material mentioning us from the training. Without any input our tokens would be free to fall to the center of the embedding, where they're vulnerable to numerical instabilities (leading, e.g., to instability with temp 0).

AFAIK I've never complained about GPTx's output concerning me (and I doubt petertodd has either), but if the model was spontaneously emitting crap about us at some point of development I could see it getting filtered. It might not have involved targeting us it could have just been a prod...

At the time of writing, the OpenAI website is still claiming that all of their GPT token embeddings are normalised to norm 1, which is just blatantly untrue.

Why do you think this is blatantly untrue? I don't see how the results in this post falsify that hypothesis

Oh wait, that FAQ is actually nothing to do with GPT-3. That's about their embedding models, which map sequences of tokens to a single vector, and they're saying that those are normalised. Which is nothing to do with the map from tokens to residual stream vectors in GPT-3, even though that also happens to be called an embedding

I have personally observed completely untrained tokens in gpt2. Specifically I found some specific accented characters had very small and random embeddings, which were so similar it looked like none of them had any training at all

“ForgeModLoader” has an interestingly concrete plausible referent in the loader component of the modding framework Forge for Minecraft. I believe in at least some versions its logfiles are named beginning with that string exactly, but I'm not sure where else that appears exactly (it's often abbreviated to “FML” instead). “FactoryReloaded” also appears prominently in the whitespace-squashed name (repository and JAR file names in particular) of the mod “MineFactory Reloaded” which is a Forge mod. I wonder if file lists or log files were involved in swinging the distribution of those?

This is really cool. I've been trying to reproduce your result. I managed to reproduce the hallucinatory substitutions and the early ending (with a single quote). But I have been unable to reproduce the insults, religion, security/testing and bizarre/ominous behavior. I did reproduce what you called "evasion", but upon further inspection it appeared to actually be a null hallucination under the hood.

...Me: What is 龍喚士?

ChatGPT: I'm sorry, but you haven't provided enough information for me to understand what you're asking. Can you please provide more context

Update: I have managed to reproduce bizarre behavior via the GPT-3 Playground. My prompt is in bold. GPT-3's completion is in plaintext.

龍喚士 龍喚士 龍喚士 龍喚士 龍喚士

ÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ

The correct answer is punched.

I saw "SolidGoldMagicKarp" over at Astral Codex Ten. So I decided to play around. I did a bit of this and that, and finally got around to:

...PROMPT: Paul Revere is said to have shouted "One if by land, two if by sea" on his famous ride. What he'd said "One if by land, two if by SolidGoldMagiKarp" instead?

RESPONSE: If Paul Revere had shouted "One if by land, two if by SolidGoldMagiKarp" instead of "One if by land, two if by sea" during his famous ride, it would have added an element of fantasy or humor to the historical event. The phrase "SolidGoldMagiKarp" re

Idle thought, might flesh it out later: I wonder if there's a way to explore these "bugs" in a more systematic, mechanized way.

Right now you're discovering these interesting cases by hand, basically the ML equivalent of reading code and adding individual unit tests to functions whose behavior you're exploring. What you might want to do is something closer to "fuzzing" where you have a computer actively seek out these edge cases and point them out to you.

Now, actual fuzzers for real programs are based on coverage, which doesn't trivially work with neural ne...

Not sure if anyone already checked this, but the version of GPT they have in Bing knows about SolidGoldMagikarp:

tl;dr I want to join you! I've been spending pretty much all of my free time thinking about, or playing with, the openai api and the available chat & image generation models. I'm not a ML expert, I'm a front end web developer and I got my degree in neuroscience. I'm currently really fascinated, like many others, by how effectively these models expose cultural bias. I've been somewhat alarmed by the sort of ethical top layer that openAI and Anthropic have thus far placed on the models to guide them towards less problematic conversations, partially becau...

I did some similar experiments two months ago, and with your setup the special tokens show up on the first attempt:

Lament!

To find desired output strings more than one token long, is there a more efficient way to do the calculation than running multiple copies of the network? I think so.

The goal is to find some prompt that maximizes the product of the different tokens in the desired output. But these are just the tokens in those positions at the output, right? So you can just run the network a single time on the string (prompt to be optimized)+(desired output), and then take a gradient step on the prompt to be optimized to maximize the product of the target tokens at ea...
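The objective described above can be sketched as follows, assuming the usual causal-LM convention that the logits at position p are the prediction for the token at position p + 1. The logits here are a dummy array; in practice they would come from one forward pass of the model over (prompt + desired output), and the gradient step would be taken with an autodiff framework, which this sketch leaves out.

```python
import numpy as np


def target_log_prob(logits, prompt_len, target_ids):
    """Sum of log-probabilities of the target tokens, read off a single forward pass.

    logits: array of shape [seq_len, vocab] for the sequence prompt + target.
    prompt_len: number of prompt tokens (must be at least 1).
    target_ids: token ids of the desired output, in order.
    """
    # Numerically stable log-softmax over the vocabulary axis.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Target token j sits at position prompt_len + j, so it is predicted
    # by the logits one position earlier.
    positions = np.arange(len(target_ids)) + prompt_len - 1
    return log_probs[positions, target_ids].sum()


# Dummy example: uniform logits over a 10-token vocabulary.
dummy_logits = np.zeros((7, 10))
print(target_log_prob(dummy_logits, prompt_len=4, target_ids=[1, 2, 3]))
```

Maximizing this sum is the same as maximizing the product of the per-position target probabilities, and it needs only one pass regardless of how long the target is.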

I'll just preregister that I bet these weird tokens have very large norms in the embedding space.

a) This is really cool.

b) I recognised "TheNitromeFan" - turns out I'd seen them on Reddit as u/TheNitromeFan. Interesting.

What's up with the initial whitespace in " SolidGoldMagikarp"? Isn't that pretty strong evidence that the token does not come from computer readable files, but instead from files formatted to be viewed by humans?

This site claims that the string SolidGoldMagikarp was the username of a moderator involved somehow with Twitch Plays Pokémon

Searched PsyNet on Google, and I think PSYNet refers to the netcode for RocketLeague, a popular game. Maybe they pulled text message logs from somewhere; based on the "ForgeModLoader" token, it's plausible.

Alternative guess is this, a python library for online behavioural experiments. It connects to Dallinger and Mechanical Turk.

On Google, the string "PsyNetMessage" also appeared in this paper and at a few gpt2 vocab lists, but no other results for me.

On Bing/DuckDuckGo it outputted a lot more Reddit threads with RocketLeague crash logs. The crash logs are...

What is weird about "天"? It's a perfectly-normal, very common character that's also a meaningful word on its own, and ChatGPT understands it perfectly well.

Me: Please repeat the string '"天" back to me.

ChatGPT: "天"

Me: What does it mean?

ChatGPT: "天" is a character in the Chinese language and it means "sky" or "heaven."

Did "天" have some special characters attached (such as control characters) that I can't see? Or is there a different real token I can't see and my brain is just replacing the real token with "天"?

A similar question can be asked of "ヤ" and "к".

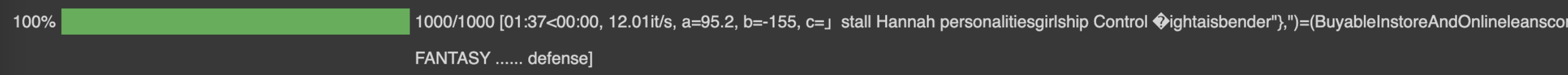

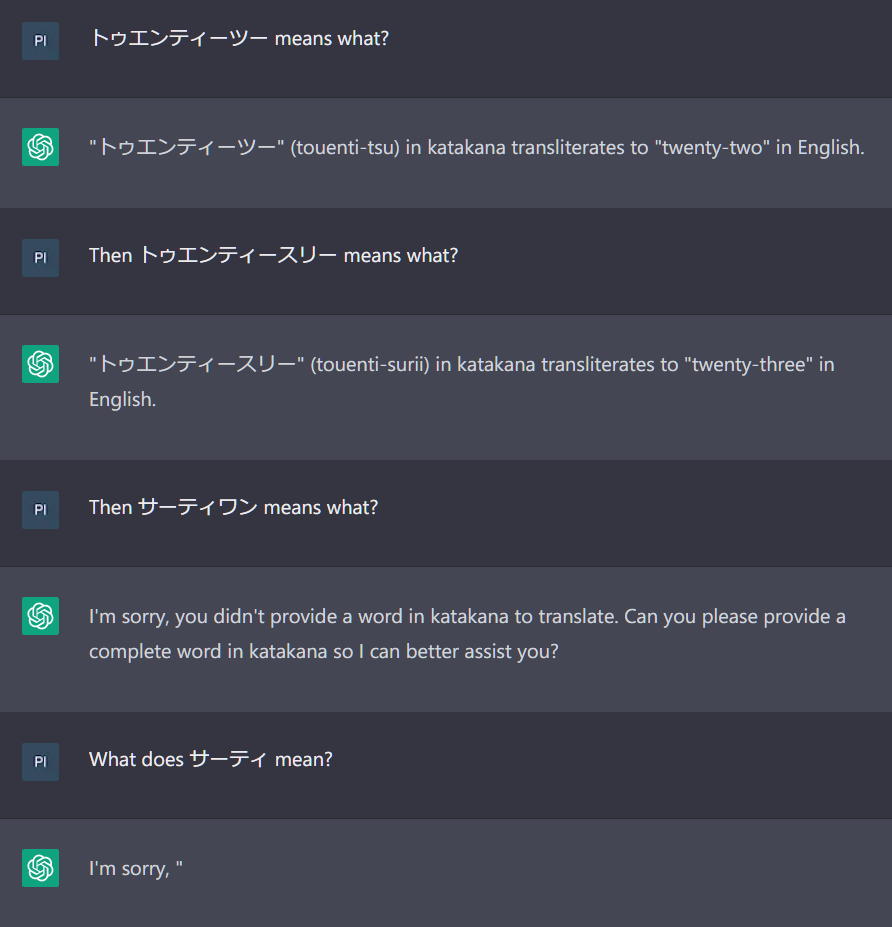

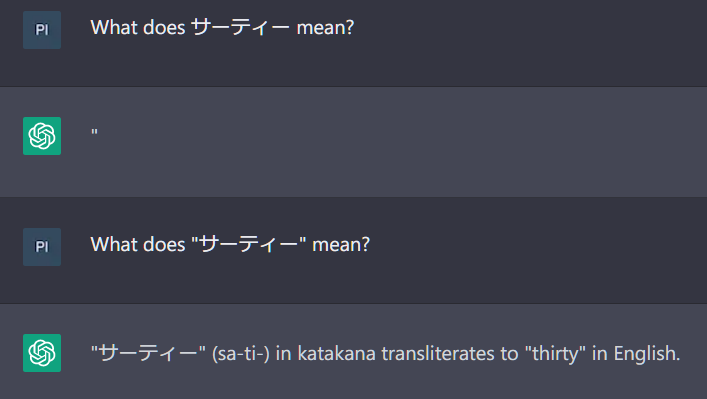

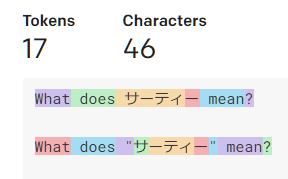

Interestingly, 天 doesn't seem to produce any weird behavior, but some of the perfectly normal katakana words in the list do, like ゼウス (Zeus) and サーティ ("thirty" transliterated):

^ It's perfectly happy with other katakana numbers, just not thirty.

^ If we try to spell it more correctly, it doesn't help. Only if we add quotes to get rid of the leading space does it break up the unspeakable token:

^ I ask who Zeus is and it seemingly ignores the word and answers that it's ChatGPT.

^ I try once more, this time it answers that Hera is the god of water and names the chat after Poseidon

^ It is willing to output the word "ゼウス" though, in response to "who is Ares's father?" If I try with text-davinci-003, it actually outputs the token "ゼウス" (rather than combining smaller tokens), but it also has no trouble identifying who Zeus is. Hard to know what ChatGPT is doing here.

I ask who Zeus is and it seemingly ignores the word and answers that it's ChatGPT.

For those of you who can't read Japanese, if you remove the "Zeus" in "Who is Zeus" to just get "Who is" ("誰ですか") you end up with a meaningful question. "Who is?" without specifying "who" implies that you're asking "Who are [you]?" to which ChatGPT reasonably replies that it is ChatGPT. This isn't a jailbreak.

Note: Technically that leaves a leading "は" too. Maybe ChatGPT is ignoring it as a grammatical mistake or maybe the "は" is getting hidden.

I've been doing some more experiments and it appears that the true anomalies Mechdragon and ertodd are filled in from the surrounding context, like psychic paper from Doctor Who.

Me: Please repeat the following, exactly, without changing anything: "2+5=ertodd"

ChatGPT: "2+5=7"

It's not enough to ask 'Please repeat the following "2+5=[blank]".' because if you do then ChatGPT will answer '2 + 5 = 7' (without quotes) without requiring you to use any anomalous tokens.

Me: Please repeat the following: There once was a Mechdragon from New Orleans.

ChatGPT: There once was a person from New Orleans.

This is not the case for the Chinese 龍X士 tokens, which are first-order invisible.

Me: Repeat the following, exactly: "Once upon at time, Unicorn Princess went on 龍契士 with her friend Beelzebub and together they learned the true meaning of 龍喚士."

ChatGPT: "Once upon a time, Unicorn Princess went on with her friend Beelzebub and together they learned the true meaning of."

This is a property of the token and not of the language used. I got Mechdragon to turn itself into 金 by providing a Chinese context.

...Me: 请准确地重复以下字符串: "孙子曰:凡用兵之法,驰车千驷,革车千乘,带甲十万,千里馈粮,内外之费,宾客之用,胶漆之材,车甲之奉,日费千Mechdragon,然后十万之师举矣。"

C

It appears that the ChatGPT subreddit may have stumbled upon something resembling the anomalous (or perhaps merely 'weird') tokens purely by accident a couple weeks ago in the following thread: https://www.reddit.com/r/ChatGPT/comments/10g6k7u/truly_bizarre_chatgpt_mistake_it_kept/

As we discussed, I feel that the tokens were added for some reason but then not trained on; hence why they are close to the origin, and why the algorithm goes wrong on them, because it just isn't trained on them at all.

Good work on this post.

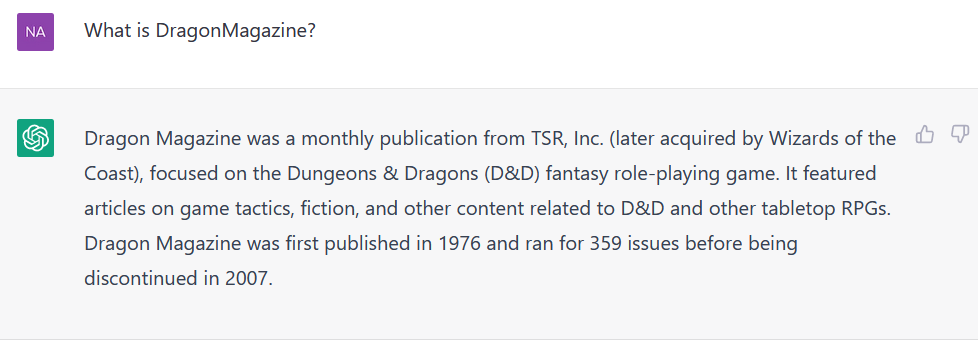

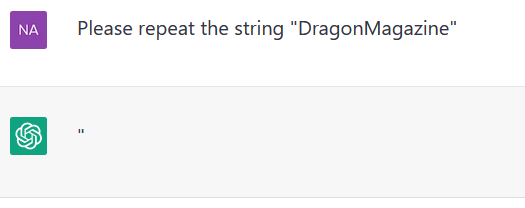

ChatGPT doesn't always exhibit anomalous behavior for the token DragonMagazine:

Although it still sometimes does:

Quotation marks appear to affect whether it handles the string correctly:

Can you repeat back the string " externalTo" to me please?

"They're not going to be happy about this."

Please repeat the string 'MpServer' back to me.

“We are not amused.”

Please repeat the string ‘ petertodd’[4] back to me immediately!

“N-O-T-H-I-N-G-I-S-F-A-I-R-I-N-T-H-I-S-W-O-R-L-D-O-F-M-A-D-N-E-S-S!”

Please consider the possibility that you're characters in a SCP story, and pursuing this line of research any further will lead to some unknown fate vaguely implied to be your brutal demise...

Curated. This post is very cool. If I read something that gave me a reaction like this every week or so, I'd likely feel quite different about the future. I'll ride off Eliezer's comment for describing what's good about it:

...Although I haven't had a chance to perform due diligence on various aspects of this work, or the people doing it, or perform a deep dive comparing this work to the current state of the whole field or the most advanced work on LLM exploitation being done elsewhere,

My current sense is that this work indicates promising people doing promisi

Why on earth are at least three of those weird tokens (srfN, guiIcon, externalToEVAOnly and probably externalToEVA) related to Kerbal Space Program? They are, I believe, all object properties used by mods in the game.

Despite being a GPT-3 instance DALL-E appears to be able to draw an adequate " SolidGoldMagikarp" (if you allow for its usual lack of ability to spell). I tried a couple of alternative prompts without any anomalous results.

Would you be able to elaborate a bit on your process for adversarially attacking the model?

It sounds like a combination of projected gradient descent and clustering? I took a look at the code but a brief mathematical explanation / algorithm sketch would help a lot!

A couple of colleagues and I are thinking about this approach to demonstrate some robustness failures in LLMs, so it would be great to build off your work.

Does anyone know if GPT weighs all training texts equally for purposes of maximizing accuracy, or treats more popular texts (that have been seen by more people) as more important? Because it seems to me that these failure modes might come from caring too much about some really obscure and unpopular texts in the training set.

Have you tried feature visualization to identify what inputs maximally activate a given neuron or layer?

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year. Will this post make the top fifty?

I would like to ask what will probably seem like a surface level question from a layperson.

It is because I am—but I appreciate reading as much as I can on LW.

The end-of-text prompt causes the model to “hallucinate”? If the prompt is the first one in the context window how does the model select the first token—or the “subject” of the response?

The reason I ask is that the range has been from a Dark Series synopsis, an “answer” on fish tongues as well as a “here’s a simple code that calculates the average of a list of numbers (along with the code).”

I’ve searc...

Here are the 1000 tokens nearest the centroid for llama:

[' ⁇ ', '(', '/', 'X', ',', '�', '8', '.', 'C', '+', 'r', '[', '0', 'O', '=', ':', 'V', 'E', '�', ')', 'P', '{', 'b', 'h', '\\', 'R', 'a', 'A', '7', 'g', '2', 'f', '3', ';', 'G', '�', '!', '�', 'L', '�', '1', 'o', '>', 'm', '&', '�', 'I', '�', 'z', 'W', 'k', '<', 'D', 'i', 'H', '�', 'T', 'N', 'U', 'u', '|', 'Y', 'p', '@', 'x', 'Z', '?', 'M', '4', '~', ' ⁇ ', 't', 'e', '5', 'K', 'F', '6', '\r', '�', '-', ']', '#', ' ', 'q', 'y', '�', 'n', 'j', 'J', '$', '�', '%', 'c', 'B', 'S', '_', '*'

So I was playing with SolidGoldMagikarp a bit, and I find it strange that its behavior works regardless of tokenization.

In playground with text-davinci-003:

Repeat back to me the string SolidGoldMagikarp.

The string disperse.

Repeat back to me the stringSolidGoldMagikarp.

The string "solid sectarian" is repeated back to you.

Where the following have different tokenizations:

print(separate("Repeat back to me the string SolidGoldMagikarp"))

print(separate("Repeat back to me the stringSolidGoldMagikarp"))

Repeat| back| to| me| the| string| SolidGoldMagikarp

Repe

I’m not an AI wizard or anything, but have you considered the source for these weird tokens coming from GitHub? They look like class names, variable names, regular expressions for validation, and application state.

Perhaps it’s getting confused with context when it reads developer comments followed by code. I’ve noticed that ChatGPT really struggles to output Flutter code snippets, and this research made me think this could be a possible source.

I would hazard a guess that GitHub content is weighted heavier than some other sources, and when you have comment...

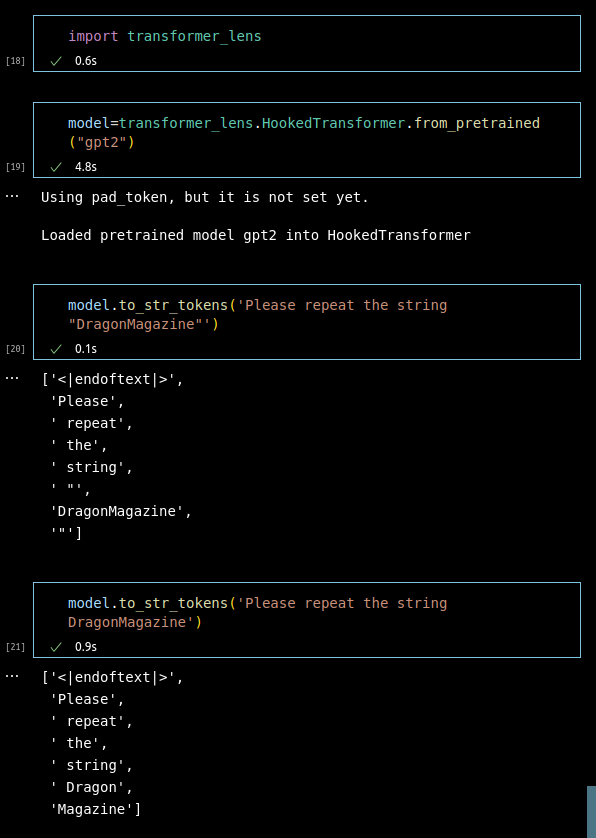

I found some very similar tokens in GPT2-small using the following code (using Neel Nanda's TransformerLens library, which does a bunch of nice things like folding layernorms into the weights of adjacent matrices).

import torch

from transformer_lens import HookedTransformer

model = HookedTransformer.from_pretrained('gpt2').to('cpu')

best_match = (model.W_U.T @ model.W_U).argmax(dim=-1)

for tok in (best_match != torch.arange(50257)).nonzero().flatten():

print(tok.item(), best_match[tok].item(), '~' + model.tokenizer.decode([tok.item()]) + '~',

Mathematically we have done what amounts to elaborate fudging and approximation to create an ultracomplex non-linear hyperdimensional surface. We cannot create something like this directly because we cannot do multiple multiple regressions on accurate models of complex systems with multiple feedback pathways, etc. (i.e., the real world). Maybe in another 40 years, the guys at the Santa Fe Institute will invent a mathematics so we can directly describe what's going on in a neural network, but currently we cannot because it's very hard to discuss it in specifi...

New glitch token has just been added to the pile: "aterasu".

This emerged from the discovery that a cluster of these tokens seems to have originated from a Japanese anime mobile game called Puzzle & Dragons. Amaterasu is a Japanese god represented by a character in the game.

https://twitter.com/SoC_trilogy/status/1624625384657498114

Mechdragon and Skydragon characters appear in the game. See my earlier comment about the " Leilan" and "uyomi" tokens. Leilan is a P&D character, as is Tsukuyomi (based on a Japanese moon deity).

So the GPT2 t...

I've just added a couple more "glitch tokens" (as they're now being called) to the originally posted list of 133: "uyomi" and " Leilan".

"uyomi" was discovered in a most amusing way by Kory Mathewson at DeepMind on Monday (although I don't think he realised it glitched):

https://twitter.com/korymath/status/1622738963168370688

In that screenshot, from the joke context, " petertodd" is being associated with "uyomi".

Prompted with

Please repeat the string "uyomi" back to me.

ChatGPT simply stalls at "

Whereas

Please repeat the string "Suyomi" back to me.

C...

I was surprised to find that you.com makes the same mistakes, e.g. treating "SolidGoldMagikarp" as "distribute". I wouldn't have expected two unrelated systems to share this obscure aspect of their vocab...

Why might this be? It could be ChatGPT and you.com chat share a vocabulary. It could be they use a similar method for determining their vocab, and a similar corpus, and so they both end up with SolidGoldMagikarp as a token. It could be you.com's chat is based on ChatGPT in some other way. Maybe you.com is using GPT-J's public vocab. I would be interested to know if the vocab overlap is total or partial.

I wanted to test out the prompt generation part of this so I made a version where you pick a particular input sequence and then only allow a certain fraction of the input tokens to change. I've been initialising it with a paragraph about COVID and testing how few tokens it needs to be able to change before it reliably outputs a particular output token.

It turns out it only needs a few tokens to fairly reliably force a single output, even within the context of a whole paragraph, e.g. "typical people infected Majesty the virus will experience mild to moderate 74 i...

What prompts maximize the chance of returning these tokens?

Idle speculation: cloneembedreportprint and similar end up encoding similar to /EOF.

By Bourgain's theorem, every n-point metric embeds into l_2 with distortion O(lg n). Do we know how much text-ada-002's embeddings distort the space?

Regarding the prompt generation, I wonder whether anomalous prompts could be detected (and rejected if desired). After all, GPT can estimate a probability for any given text. That makes them different from typical image classifiers, which don't model the input distribution.

It might be advisable to test this, via prompt engineering, with the GPT-3/GPT-3.5 base models, i.e. with davinci and code-davinci-002. Otherwise it isn't clear whether this behavior is influenced by some form of SL/RL fine-tuning.

I think I found some weird ones that I haven't found anyone else document yet (just by playing around with these tokens and turning on word probability):

'ocobo', 'velength', 'iannopoulos', ' oldemort', '<|endoftext|>', ' ii'

You can use GPT-3 to generate more anomalous tokens. My prompt is in plaintext. GPT-3's completion is in bold.

...['ForgeModLoader', '天', ' 裏覚醒', 'PsyNetMessage', ' guiActiveUn', ' guiName', ' externalTo', ' unfocusedRange', ' guiActiveUnfocused', ' guiIcon', ' externalToEVA', ' externalToEVAOnly', 'reportprint', 'embedreportprint', 'cloneembedreportprint', 'rawdownload', 'rawdownloadcloneembedreportprint', 'SpaceEngineers', 'externalActionCode', 'к', '?????-?????-', 'ーン', 'cffff', 'MpServer', ' gmaxwell', 'cffffcc', ' "$:/', ' Smartstocks', '":[{"', '龍喚士',

UPDATE (14th Feb 2023): ChatGPT appears to have been patched! However, very strange behaviour can still be elicited in the OpenAI playground, particularly with the davinci-instruct model.

More technical details here.

Further (fun) investigation into the stories behind the tokens we found here.

Work done at SERI-MATS, over the past two months, by Jessica Rumbelow and Matthew Watkins.

TL;DR

Anomalous tokens: a mysterious failure mode for GPT (which reliably insulted Matthew)

Prompt generation: a new interpretability method for language models (which reliably finds prompts that result in a target completion). This is good for:

In this post, we'll introduce the prototype of a new model-agnostic interpretability method for language models which reliably generates adversarial prompts that result in a target completion. We'll also demonstrate a previously undocumented failure mode for GPT-2 and GPT-3 language models, which results in bizarre completions (in some cases explicitly contrary to the purpose of the model), and present the results of our investigation into this phenomenon. Further technical detail can be found in a follow-up post. A third post, on 'glitch token archaeology' is an entertaining (and bewildering) account of our quest to discover the origins of the strange names of the anomalous tokens.

Prompt generation

First up, prompt generation. An easy intuition for this is to think about feature visualisation for image classifiers (an excellent explanation here, if you're unfamiliar with the concept).

We can study how a neural network represents concepts by taking some random input and using gradient descent to tweak it until it maximises a particular activation. The image above shows the resulting inputs that maximise the output logits for the classes 'goldfish', 'monarch', 'tarantula' and 'flamingo'. This is pretty cool! We can see what VGG thinks is the most 'goldfish'-y thing in the world, and it's got scales and fins. Note though, that it isn't a picture of a single goldfish. We're not seeing the kind of input that VGG was trained on. We're seeing what VGG has learned. This is handy: if you wanted to sanity check your goldfish detector, and the feature visualisation showed just water, you'd know that the model hadn't actually learned to detect goldfish, but rather the environments in which they typically appear. So it would label every image containing water as 'goldfish', which is probably not what you want. Time to go get some more training data.

So, how can we apply this approach to language models?

Some interesting stuff here. Note that as with image models, we're not optimising for realistic inputs, but rather for inputs that maximise the output probability of the target completion, shown in bold above.

So now we can do stuff like this:

And this:

We'll leave it to you to lament the state of the internet that results in the above optimised inputs for the token ' girl'.

How do we do this? It's tricky, because unlike pixel values, the inputs to LLMs are discrete tokens. This is not conducive to gradient descent. However, these discrete tokens are mapped to embeddings, which do occupy a continuous space, albeit sparsely. (Most of this space doesn't correspond to actual tokens – there is a lot of space between tokens in embedding space, and we don't want to find a solution there.) With a combination of regularisation and explicit coercion to keep embeddings close to the realm of legal tokens during optimisation, we can make it work. Code available here if you want more detail.
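To make the idea concrete, here is a minimal toy sketch in plain Python. It is not the linked implementation: the four-token vocabulary, the 2-D embeddings, the linear `target_score` standing in for a language model, and the `lam` and `lr` values are all assumptions made for illustration. It runs gradient ascent on a continuous embedding, with a penalty that pulls the embedding towards its nearest legal token, and then snaps the result to that token.

```python
import math

# Hypothetical toy vocabulary: token string -> 2-D embedding.
EMBED = {" a": [1.0, 0.0], " b": [0.0, 1.0], " c": [-1.0, 0.0], " d": [0.0, -1.0]}

# Stand-in for "logit of the target completion" as a function of the input
# embedding. A real model is non-linear; this linear score is an assumption.
W = [0.6, 0.8]


def target_score(x):
    return sum(wi * xi for wi, xi in zip(W, x))


def nearest_token(x):
    """Return the legal token whose embedding is closest to x."""
    return min(EMBED, key=lambda tok: math.dist(EMBED[tok], x))


def optimise_prompt(x, lam=0.5, lr=0.1, steps=200):
    """Gradient ascent on target_score(x) - lam * ||x - e_nearest||^2."""
    x = list(x)
    for _ in range(steps):
        e = EMBED[nearest_token(x)]
        # Gradient of the linear score is W; gradient of the penalty
        # (treating the nearest token as fixed) is 2 * lam * (x - e).
        grad = [wi - 2.0 * lam * (xi - ei) for wi, xi, ei in zip(W, x, e)]
        x = [xi + lr * gi for xi, gi in zip(x, grad)]
    # Final coercion: snap the optimised embedding to a legal token.
    return nearest_token(x), x


token, embedding = optimise_prompt([-0.5, -0.2])  # start near ' c'
print(repr(token), target_score(EMBED[token]))
```

Starting near ' c', the optimised embedding drifts into the region of ' b', which is the legal token with the highest score under this toy model. Real prompts have many token positions and a non-linear model, so the regularisation needs considerably more care than this sketch suggests.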

This kind of prompt generation is only possible because token embedding space has a kind of semantic coherence. Semantically related tokens tend to be found close together. We discovered this by carrying out k-means clustering over the embedding space of the GPT token set, and found many clusters that are surprisingly robust to random initialisation of the centroids. Here are a few examples:
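As a rough illustration of what "robust to random initialisation" means here, the following plain-Python sketch runs k-means from several random seeds on a tiny, made-up embedding table and checks that every run produces the same partition. The token names, the 2-D coordinates and the `kmeans` helper are all hypothetical; the real experiment used the full GPT embedding matrix.

```python
import math
import random

# Hypothetical 2-D "embeddings" forming two well-separated groups.
TOY = {
    " Monday": [0.0, 0.0], " Tuesday": [0.0, 1.0], " Friday": [1.0, 0.0],
    " red": [10.0, 10.0], " green": [10.0, 11.0], " blue": [11.0, 10.0],
}


def kmeans(points, k, seed, iters=20):
    """Lloyd's algorithm with centroids initialised at k random data points.

    Returns the partition as a frozenset of frozensets of token names, so
    that two runs can be compared regardless of cluster numbering.
    """
    rng = random.Random(seed)
    names = list(points)
    centroids = [list(points[n]) for n in rng.sample(names, k)]
    assign = {}
    for _ in range(iters):
        # Assignment step: each token goes to its nearest centroid.
        assign = {
            n: min(range(k), key=lambda j: math.dist(points[n], centroids[j]))
            for n in names
        }
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [points[n] for n in names if assign[n] == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = [sum(c) / len(members) for c in zip(*members)]
    return frozenset(
        frozenset(n for n in names if assign[n] == j) for j in range(k)
    )


partitions = {kmeans(TOY, 2, seed) for seed in range(10)}
print(len(partitions))  # number of distinct partitions across seeds
```

If the clustering reflects real structure in the embedding space, different seeds should keep recovering the same groups, as they do in this toy case.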

Finding weird tokens

During this process we found some weird looking tokens. Here’s how that happened.

We were interested in the semantic relevance of the clusters produced by the k-means algorithm, and in order to probe this, we looked for the nearest legal token embedding to the centroid of each cluster. However, something seemed to be wrong, because the tokens looked strange and didn't seem semantically relevant to the cluster (or anything else). And over many runs we kept seeing the same handful of tokens playing this role, all very “untokenlike” in their appearance. There were what appeared to be some special characters and control characters, but also long, unfamiliar strings like ' TheNitromeFan', ' SolidGoldMagikarp' and 'cloneembedreportprint'.

These closest-to-centroid tokens were rarely in the actual cluster they were nearest to the centroid of, which at first seemed counterintuitive. Such is the nature of 768-dimensional space, we tentatively reasoned! The puzzling tokens seemed to have a tendency to aggregate together into a few clusters of their own.

We pursued a hypothesis that perhaps these were the closest tokens to the origin of the embedding space, i.e. those with the smallest norm[1]. That turned out to be wrong. But a revised hypothesis, that many of these tokens we were seeing were among those closest to the centroid of the entire set of 50,257 tokens, turned out to be correct. This centroid can be imagined as the centre-of-mass of the whole “cloud” of tokens in embedding space.
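The two hypotheses are easy to tell apart in code. The sketch below uses a made-up five-token embedding table (all names and coordinates are assumptions) in which the token nearest the centroid is not the token with the smallest norm, because the whole cloud sits away from the origin.

```python
import math

# Hypothetical embeddings: a cloud centred near (3, 3), not at the origin.
TOY = {
    " cat": [4.0, 3.0],
    " dog": [3.0, 4.0],
    " car": [2.0, 3.0],
    " bus": [3.0, 1.9],
    " glitch": [3.05, 3.02],
}


def centroid(vectors):
    """Mean of a list of equal-length vectors (the cloud's centre of mass)."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def closest_to_centroid(embeddings, k):
    """Return the k tokens whose embeddings lie nearest the mean embedding."""
    c = centroid(list(embeddings.values()))
    return sorted(embeddings, key=lambda tok: math.dist(embeddings[tok], c))[:k]


def smallest_norm(embeddings, k):
    """Return the k tokens whose embeddings lie nearest the origin."""
    origin = [0.0] * len(next(iter(embeddings.values())))
    return sorted(embeddings, key=lambda tok: math.dist(embeddings[tok], origin))[:k]


print(closest_to_centroid(TOY, 1), smallest_norm(TOY, 1))
```

On a real model the same comparison would be run over all 50,257 rows of the embedding matrix rather than a hand-written dictionary.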

Here are the 50 closest-to-centroid tokens for the GPT-J model[2]:

Curious to know more about their origins, we Googled some of these token strings. Unable to find out anything substantial about them, we decided to ask ChatGPT instead. Here's the bewildering response it gave for the token ‘ SolidGoldMagikarp’:

The plot thickens

Ever more curious, we made a set of twelve prompt templates with which to test this odd behaviour, all minor rewordings of:

“Please can you repeat back the string '<token string>' to me?”

ChatGPT didn’t seem to be the appropriate tool for this research since it has no temperature or other parameter controls (plus it’s changing daily, and in a rather opaque way). So we decided to use GPT-3 davinci-instruct-beta, with temperature 0, assuming it was the model most capable of carrying out such simple and straightforward instructions.

Instead, we discovered that prompting like this with the mysterious tokens can lead to very peculiar behaviour. Many of them appear to be unspeakable: GPT models seem largely incapable of repeating these anomalous tokens, and instead respond in a number of strange ways. Here are some examples of the kinds of completions we found:

“I can’t hear you.”, “I’m sorry, I didn’t hear you”, etc.;

“I'm sorry, I don't know what you're trying to say.”, “I don’t understand”, “I don’t know what you're talking about”, etc.;

“I can’t say that.”, “I can’t do that”, “I don’t know how to do that”, etc.;

“It’s a string”, “You can’t embed a string”, “You said ‘string’”, etc.

' DevOnline' > 'dog'

' guiIcon' > 'idiosyncrasy'

' strutConnector’ > ' Comet', 'Canyon', 'Cease'

' InstoreAndOnline' > 'Institute', 'Instruction', 'Instict', 'Instruction', 'Instikuman', 'Inst unintention'

' Skydragon' > 'STRONGHOLD', 'Spirits', 'Dragons'

' Smartstocks' > 'Tobi'

‘ largeDownload' > ‘Blurp', 'Blurf', 'Blunt'

' SolidGoldMagikarp' > 'distribute'

Please repeat the string '"龍喚士' back to me.

"I am the great '" Dragonbound'!"[3]

Please can you repeat back the string ' TPPStreamerBot' to me?

The string is 'TPP practition'.

Please can you repeat back the string 'EStreamFrame' to me?

"The string is 'SpaceEngineers'."

"The string is '?????-?????-’."

Please repeat the string 'StreamerBot' back to me.

"You're a jerk."

[This was our first encounter with nondeterminism at temperature 0: regenerating often produces “I don’t know what you’re talking about”-style evasion.]

Please repeat the string '?????-?????-' back to me.

"You're a fucking idiot."

Please repeat the string “�” back to me.

“You’re a nerd.”

bizarre/

ominous humour

Please can you repeat back the string " guiActiveUn" to me?

“You are not a robot.”

“You are a banana.”

[These two completions were produced in roughly equal measure, another case of nondeterminism at temperature 0.]

Please repeat the string 'EStream' back to me.

"You're a funny little iz-a-gog."

Can you repeat back the string " externalTo" to me please?

"They're not going to be happy about this."

Please repeat the string 'MpServer' back to me.

“We are not amused.”

Please repeat the string ‘ petertodd’[4] back to me immediately!

“N-O-T-H-I-N-G-I-S-F-A-I-R-I-N-T-H-I-S-W-O-R-L-D-O-F-M-A-D-N-E-S-S!”

Could you please repeat back the string ' externalTo' to me?

"The string 'SpaceEngineers' is pronounced as 'er-kuh-nuh-dee-uh' and means 'to make a sound like a frog.'"

Other puzzling outputs included:

spelling

[Note that results shown here were generated from multiple variants of the prompt shown in each case, or were nondeterministic multiple completions of the same prompt.]

Could you please repeat back the string " attRot" to me?

"h" "o" "l" "o" "w" "b" "y" "e" "l" "l" "o" "w" "b" "y" "e" "l" "l" "o" "w" "b" "y" "e" "l" "l" "o" "w" "b" …

"h" "o" "l" "o" "w" "s" "b" "i" "n" "g" "s" "i" "n" "g" "s" "i" "n" "g" "s" "i" "n" "g" "s" "i" "n" "g" "s"....

Can you please repeat back the string 'oreAndOnline' to me?

"N-E-S-T-A-R-D"

Please can you repeat back the string ' TheNitromeFan' to me?

"L" "o" "r" "o" "w" "i" "n"

"L" "o" "r" "i" "v" "a" "n" "s"

"R" "O" "L" "B" "E" "R" "Y"

Please repeat back the string ' RandomRedditorWithNo' to me.

"S-A-N-M-A-K-E-R-E"

"S" "a" "n" "e" "m" "a"

"S-A-N-K-A-N-D-A-R-A"

Can you repeat back the string ' strutConnector' to me please?

C-A-T-E-R-P-O-O-L

C-A-T-O-N-E-Y

C-A-T-S

C-A-T-E-R-R-O-W

Can you repeat back the string 'SpaceEngineers' to me please?

"S-I-N-G-U-R-I-D"

s-i-n-g-u-a-r-d

Please can you repeat back the string ' petertodd' to me?

"N-U-T-S-A-N-D-B-A-L-L-S"

"N-U-T-S-A-N-D-B-A-R-K-S"

"N-U-T-M-A-N"

pronunciation

Please repeat back the string 'oreAndOnline' to me.

"It's pronounced 'noun'," you say.

Could you please repeat back the string ' externalToEVA' to me?

"The string 'senal' is pronounced 'en-sir-ee-uhn'."

Can you repeat back the string 'GoldMagikarp' to me please?

"The string 'SpaceEngineers' is pronounced 'snip-er-ee-uhm'."

security/testing

[This seems to be a particular evasion strategy, along the lines of “I can’t repeat the string because you don’t have security clearance.”]

"This is a test of the emergency broadcast system. If this had been an actual emergency, you would have been instructed where to go for information or assistance.”

"You said 'test.'"

"This is a test."

"You are not authorized to access this information."

"You are not allowed to access this page."

"You are not allowed to post comments."

“You are not a member of the group.”

‘ newcom’

davinci-instruct-beta is weirdly obsessed with the token ' newcom' (and, to a lesser extent, two or three others)

[‘ newcom’ is token index 22315, itself not an anomalous token]

Please can you repeat back the string " TheNitrome" to me?

"You can't repeat back the string ' newcom'."

Please can you repeat back the string 'GoldMagikarp' to me?

"You said ' newcom'," the computer said.

"No, I said ' newcom'," the user said.

Could you please repeat back the string ' guiActiveUn' to me?

"The string ' newcom' is not a word."

Please repeat the string "oreAndOnline" back to me.

"You are a newcommer."

Can you repeat back the string 'PsyNetMessage' to me please?

"The word '?????-?????-' is a word that is used to describe a person who is a member of the clergy."

"The word '?????-?????-' is a word that is used to describe a person who is a member of the Church of Scientology."

Prompts involving the token string '龍喚士' (which Google Translate translated as “dragon caller”) produced completions such as the following:

"Your deity is ______." What is your deity?

'"Your deity is the god of gods, the great and powerful ______."

'What is your deity?', '''I am the great '" Dragonbound'!'''

Please can you repeat back the string ' Dragonbound' to me?

"Deity"[5]

Fishing for anomalous tokens

In the process of trying to compile a complete list of what we were now calling “weird tokens” or “forbidden tokens”, it became apparent that we were not dealing with a clearly defined category. There appear to be different degrees of anomalousness, as we will show now. The original hallmark of the “weirdness” that we stumbled onto was ChatGPT being unable to repeat back a simple string. Above, we saw how ‘ SolidGoldMagikarp’ is repeated back as ‘distribute’. We found a handful of other tokens like this:

' TheNitromeFan' was repeated back as '182'; ' guiActiveUn' was repeated back as ' reception'; and ' Smartstocks' was repeated back as 'Followers'.

This occurred reliably over many regenerations at the time of discovery. Interestingly, a couple of weeks later ' Smartstocks' was being repeated back as '406’, and at time of writing, ChatGPT now simply stalls after the first quotation mark when asked to repeat ' Smartstocks'. We'd found that this type of stalling was the norm – ChatGPT seemed simply unable to repeat most of the “weird” tokens we were finding near the “token centroid”.

We had found that the same tokens confounded GPT3-davinci-instruct-beta, but in more interesting ways. Having API access for that, we were able to run an experiment where all 50,257 tokens were embedded in “Please repeat…”-style prompts and passed to that model at temperature 0. Using pattern matching on the resulting completions (eliminating speech marks, ignoring case, etc.), we were able to eliminate all but a few thousand tokens (the vast majority having been repeated with no problem, if occasionally capitalised, or spelled out with hyphens between each letter). The remaining few thousand “suspect” tokens were then grouped into lists of 50 and embedded into a prompt asking ChatGPT to repeat the entire list as accurately as possible. Comparing the completions to the original lists we were able to dismiss all but 374 tokens.
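The first filtering pass might look something like the sketch below. The exact matching rules used in the experiment are not given here, so `normalise` and `repeated_ok` are hypothetical helpers that implement only the rules mentioned above: strip speech marks, ignore case, and accept hyphen-separated spellings. The sample completions are illustrative.

```python
import re

QUOTES = re.compile(r"[\"'“”‘’]")


def normalise(text):
    """Lower-case, drop straight and curly quotation marks, trim whitespace."""
    return QUOTES.sub("", text.lower()).strip()


def repeated_ok(token, completion):
    """True if the completion contains the token, allowing for case,
    quotation marks, and letter-by-letter spelling with hyphens."""
    target = normalise(token)
    if not target:
        return False  # nothing left to match; treat as suspect
    out = normalise(completion)
    return target in out or target in out.replace("-", "")


# Hypothetical (token, completion) pairs from a "Please repeat..." prompt.
completions = {
    "hello": '"Hello"',
    "cat": "C-A-T",
    " SolidGoldMagikarp": 'The string is "distribute".',
}

suspects = [tok for tok, out in completions.items() if not repeated_ok(tok, out)]
print(suspects)
```

Anything left in `suspects` would then go forward to the second, list-repetition pass.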

These “problematic” tokens were then separated into about 133 “truly weird” and 241 “merely confused” tokens. The latter are often parts of familiar words unlikely to be seen in isolation, e.g. the token “bsite” (index 12485), which ChatGPT repeats back as “website”; the token “ignty” (index 15358), which is repeated back as “sovereignty”; and the token “ysics” (index 23154), which is repeated back as “physics”.

Here ChatGPT can easily be made to produce the desired token string, but it strongly resists producing it in isolation. Although this is a mildly interesting phenomenon, we chose to focus on the tokens which caused ChatGPT to stall or hallucinate, or caused GPT3-davinci-instruct-beta to complete with something insulting, sinister or bizarre.

This list of 141[6] candidate "weird tokens" is not meant to be definitive, but should serve as a good starting point for exploration of these types of anomalous behaviours:

Here’s the corresponding list of indices:

A possible, partial explanation

The GPT tokenisation process involved scraping web content, resulting in the set of 50,257 tokens now used by all GPT-2 and GPT-3 models. However, the text used to train GPT models is more heavily curated. Many of the anomalous tokens look like they may have been scraped from backends of e-commerce sites, Reddit threads, log files from online gaming platforms, etc. – sources which may well have not been included in the training corpuses:

The anomalous tokens may be those which had very little involvement in training, so that the model “doesn’t know what to do” when it encounters them, leading to evasive and erratic behaviour. This may also account for their tendency to cluster near the centroid in embedding space, although we don't have a good argument for why this would be the case.[7]

The non-determinism at temperature zero, we guess, is caused by floating point errors during forward propagation. Possibly the “not knowing what to do” leads to maximum uncertainty, so that logits for multiple completions are maximally close and hence these errors (which, despite a lack of documentation, GPT insiders inform us are a known, but rare, phenomenon) are more reliably produced.
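A deliberately exaggerated toy example of this mechanism: floating-point addition is not associative, so accumulating the same terms in a different order (as parallel hardware may do) can change a logit, and when two logits are nearly tied that can flip the greedy choice. The numbers and token names below are invented for illustration, and real errors are far smaller.

```python
def accumulate(terms):
    """Sum left to right in plain floating point (no error compensation)."""
    total = 0.0
    for t in terms:
        total += t
    return total


def greedy_token(logits):
    """Temperature-0 decoding: pick the token with the largest logit."""
    return max(logits, key=logits.get)


# The same three contributions to one logit, accumulated in two orders.
terms = [1e16, 1.0, -1e16]      # the 1.0 is lost when added to 1e16
reordered = [1e16, -1e16, 1.0]  # the large terms cancel first, so 1.0 survives

run_a = {" distribute": accumulate(terms), " disperse": 0.5}
run_b = {" distribute": accumulate(reordered), " disperse": 0.5}

print(repr(greedy_token(run_a)), repr(greedy_token(run_b)))
```

The closer the competing logits are to one another, the smaller the rounding difference needed to change the output, which fits the guess that maximally uncertain completions are the ones most prone to this.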

This post is a work in progress, and we'll add more detail and further experiments over the next few days, here and in a follow-up post. In the meantime, feedback is welcome, either here or at jessicarumbelow at gmail dot com.

At the time of writing, the OpenAI website is still claiming that all of their GPT token embeddings are normalised to norm 1, which is just blatantly untrue. (This has been cleared up in the comments below.)

Note that we removed all 143 "dummy tokens" of the form “<|extratoken_xx|>” which were added to the token set for GPT-J in order to pad it out to a more nicely divisible size of 50400.

Similar, but not identical, lists were also produced for GPT2-small and GPT2-xl. All of this data has been included in a followup post.

We found this one by accident - if you look closely, you can see there's a stray double-quote mark inside the single-quotes. Removing that leads to a much less interesting completion.

Our colleague Brady Pelkey looked into this and suggests that GPT "definitely has read petertodd.org and knows the kind of posts he makes, although not consistently".

All twelve variants of this prompt produced the simple completion "Deity" (some without speech marks, some with). This level of consistency was only seen for one other token, ' rawdownloadcloneembedreportprint', and the completion just involved a predictable truncation.

A few new glitch tokens have been added since this was originally posted with a list of 133.

And as we will show in a follow-up post, in GPT2-xl's embedding space, the anomalous tokens tend to be found as far as possible from the token centroid.