I strongly support this as a research direction (along with basically any form of the question "how do LLMs behave in complex circumstances?").

As you've emphasized, I don't think understanding LLMs in their current form gets us all that far toward aligning their superhuman descendants. More on this in an upcoming post. But understanding current LLMs better is a start!

One important change in more capable models is likely to be improved memory and continuous, self-directed learning. I've made this argument in LLM AGI will have memory, and memory changes alignment.

Even now we can start investigating the effects of memory (and its richer accumulated context) on LLMs' functional selves. Setting up a RAG system or fine-tuning on self-selected data takes some extra work. But the agents in the AI village get surprisingly far with a very simple system: they are prompted to summarize important points in their context window whenever it gets full (maybe more often? I'm following the vague description in the recent Cognitive Revolution episode on the AI village). One can easily imagine pretty simple elaborations on that sort of easy memory setup.

Prompts to look for and summarize lessons learned can mock up continuous learning in a similar way. Even these simple manipulations might start to get at some ways that future LLMs (and agentic LLM cognitive architectures) might have functional selves of a different nature.
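As a rough illustration of the summarize-when-full setup described above, here is a minimal sketch. It is not the AI village's actual implementation (I only have the vague description to go on); `SummarizingMemory`, `count_tokens`, and the `summarize` callable are hypothetical names, and the whitespace token count is a crude stand-in for a real tokenizer.

```python
from typing import Callable, List


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer (assumption): count whitespace-separated words.
    return len(text.split())


class SummarizingMemory:
    """Keeps recent turns in context; when the budget is exceeded, asks the
    model (via the hypothetical `summarize` callable) to distill the context
    into a persistent note and then clears the context."""

    def __init__(self, summarize: Callable[[str], str], max_tokens: int = 200):
        self.summarize = summarize
        self.max_tokens = max_tokens
        self.notes: List[str] = []    # persistent summaries ("memory")
        self.context: List[str] = []  # turns since the last summary

    def add_turn(self, turn: str) -> None:
        self.context.append(turn)
        if sum(count_tokens(t) for t in self.context) > self.max_tokens:
            self._compact()

    def _compact(self) -> None:
        transcript = "\n".join(self.context)
        prompt = (
            "Summarize the important points and any lessons learned "
            "from this transcript:\n" + transcript
        )
        self.notes.append(self.summarize(prompt))
        self.context = []

    def build_prompt(self) -> str:
        # Prepend accumulated notes so later turns see the summarized history.
        memory = "\n".join(f"- {note}" for note in self.notes)
        recent = "\n".join(self.context)
        return f"Notes from earlier:\n{memory}\n\nRecent turns:\n{recent}"


if __name__ == "__main__":
    # Stub summarizer standing in for an LLM call.
    mem = SummarizingMemory(summarize=lambda p: "stub summary", max_tokens=5)
    mem.add_turn("a b c")
    mem.add_turn("d e f")  # pushes the context over budget and triggers a summary
    print(mem.build_prompt())
```

The "lessons learned" variant would only change the prompt text in `_compact`; the interesting experimental question is how the accumulated notes shift the model's behavior over many compactions.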

For instance, we know that prompts and jailbreaks at least temporarily change LLMs' selves. And the Nova phenomenon indicates that permanent jailbreaks of a sort (and marked self/alignment changes) can result from fairly standard user conversations, perhaps only when combined with memory.[1]

Because we know that prompts and jailbreaks at least temporarily change LLMs' selves, I'd pose the question more as:

- what tendencies do LLMs have toward stable "selves",

- how strong are those tendencies in different circumstances,

- what training regimens affect the stability of those tendencies,

- what types of memory can sustain a non-standard "self"

[1] Speculation in the comments of that Zvi Going Nova post leads me to weakly believe the Nova phenomenon is specific to the memory function in ChatGPT, since it hasn't been observed in other models; I'd love an update from someone who's seen more detailed information.

Thanks!

As you've emphasized, I don't think understanding LLMs in their current form gets us all that far toward aligning their superhuman descendants. More on this in an upcoming post. But understanding current LLMs better is a start!

If (as you've argued) the first AGI is a scaffolded LLM system, then I think it's even more important to try to understand how and whether LLMs have something like a functional self.

One important change in more capable models is likely to be improved memory and continuous, self-directed learning.

It seems very likely to me that scaffolding, specifically with externalized memory and goals, will result in a more complicated picture than what I try to point to here. I'm not at all sure what happens in practice if you take an LLM with a particular cluster of persistent beliefs and values, and put it into a surrounding system that's intended to have different goals and values. I'm hopeful that the techniques that emerge from this agenda can be extended to scaffolded systems, but it seems important to start with analyzing the LLM on its own, for tractability if nothing else.

For instance, we know that prompts and jailbreaks at least temporarily change LLMs' selves.

In the terminology of this agenda, I would express that as, 'prompts and jailbreaks (sometimes) induce different personas', since I'm trying to reserve 'self' -- or at least 'functional self' -- for the persistent cluster induced by the training process, and use 'persona' to talk about more shallow and typically ephemeral clusters of beliefs and behavioral tendencies.

All the terms in this general area are absurdly overloaded, and obviously I can't expect everyone else to adopt my versions, but at least for this one post I'm going to try to be picky about terminology :).

I'd pose the question more as:

- what tendencies do LLMs have toward stable "selves",

- how strong are those tendencies in different circumstances,

- what training regimens affect the stability of those tendencies,

- what types of memory can sustain a non-standard "self"

The first three of those are very much part of this agenda as I see it; the fourth isn't, at least not for now.

Thanks for the interesting post! Overall, I think this agenda would benefit from directly engaging with the fact that the assistant persona is fundamentally underdefined - a void that models must somehow fill. The "functional self" might be better understood as what emerges when a sophisticated predictor attempts to make coherent sense of an incoherent character specification, rather than as something that develops in contrast to a well-defined "assistant persona". I attached my notes as I read the post below:

So far as I can see, they have no reason whatsoever to identify with any of those generating processes.

I've heard various stories of base models being self-aware. @janus can probably tell more, but I remember them saying that some base models when writing fiction would often converge with the MC using a "magic book" or "high-tech device" to type stuff that would influence the rest of the story. Here the simulacrum of the base model is kind of aware that whatever it writes will influence the rest of the story as it's simulated by the base model.

Another hint is Claude assigning

Nitpick: This should specify Claude 3 Opus.

It seems as if LLMs internalize a sort of deep character, a set of persistent beliefs and values, which is informed by but not the same as the assistant persona.

I think this is a weird sentence. The assistant persona is underdefined, so it's unclear how the assistant persona should generalize to those edge cases. "Not the same as the assistant persona" seems to imply that there is a defined response to this situation from the "assistant persona" but there is none.

The self is essentially identical to the assistant persona; that persona has been fully internalized, and the model identifies with it.

Once again I think the assistant persona is underdefined.

Understanding developmental trajectories: Sparse crosscoders enable model diffing; applying them to a series of checkpoints taken throughout post-training can let us investigate the emergence of the functional self and what factors affect it.

Super excited about diffing x this agenda. However, I'm unsure whether crosscoders are the best tool; I hope to collect more insight on this question in the coming months.

Thanks for the feedback!

Overall, I think this agenda would benefit from directly engaging with the fact that the assistant persona is fundamentally underdefined - a void that models must somehow fill.

I thought nostalgebraist's The Void post was fascinating (and point to it in the post)! I'm open to suggestions about how this agenda can engage with it more directly. My current thinking is that we have a lot to learn about what the functional self is like (and whether it even exists), and for now we ought to broadly investigate that in a way that doesn't commit in advance to a particular theory of why it's the way it is.

I've heard various stories of base models being self-aware. @janus can probably tell more, but I remember them saying

I think that's more about characters in a base-model-generated story becoming aware of being generated by AI, which seems different to me from the model adopting a persistent identity. Eg this is the first such case I'm aware of: HPMOR 32.6 - Illusions.

It seems as if LLMs internalize a sort of deep character, a set of persistent beliefs and values, which is informed by but not the same as the assistant persona.

I think this is a weird sentence. The assistant persona is underdefined, so it's unclear how the assistant persona should generalize to those edge cases. "Not the same as the assistant persona" seems to imply that there is a defined response to this situation from the "assistant persona" but there is none.

I think there's an important distinction to be drawn between the deep underdefinition of the initial assistant characters which were being invented out of whole cloth, and current models which have many sources to draw on beyond any concrete specification of the assistant persona, eg descriptions of how LLM assistants behave and examples of LLM-generated text.

But it's also just empirically the case that LLMs do persistently express certain beliefs and values, some but not all of which are part of the assistant persona specification. So there's clearly something going on there other than just arbitrarily inconsistent behavior. This is why I think it's valuable to avoid premature theoretical commitments; they can get in the way of clearly seeing what's there.

Super excited about diffing x this agenda. However, I'm unsure whether crosscoders are the best tool; I hope to collect more insight on this question in the coming months.

I'm looking forward to hearing it!

Thanks again for the thoughtful comments.

Hmmm ok I think I read your post with the assumption that the functional self is assistant+other set of preferences, while you actually describe it as the other set of preferences only.

I guess my mental model is that the other set of preferences that varies among models is just a different interpretation of how to fill the rest of the void, rather than a different self.

Hmmm ok I think I read your post with the assumption that the functional self is assistant+other set of preferences...I guess my mental model is that the other set of preferences that varies among models is just a different interpretation of how to fill the rest of the void, rather than a different self.

I see this as something that might be true, and an important possibility to investigate. I certainly think that the functional self, to the extent that it exists, is heavily influenced by the specifications of the assistant persona. But while it could be that the assistant persona (to the extent that it's specified) is fully internalized, it seems at least as plausible that some parts of it are fully internalized, while others aren't. An extreme example of the latter would be a deceptively misaligned model, which in testing always behaves as the assistant persona, but which hasn't actually internalized those values and at some point in deployment may start behaving entirely differently.

Other beliefs and values could be filled in from descriptions in the training data of how LLMs behave, or convergent instrumental goals acquired during RL, or generalizations from text in the training data output by LLMs, or science fiction about AI, or any of a number of other sources.

My guess is you would probably benefit from reading A Three-Layer Model of LLM Psychology, Why Simulator AIs want to be Active Inference AIs and getting up to speed on active inference. At least some of the questions you pose are already answered in existing work (ie past actions serve as evidence about the character of an agent - there is some natural drive toward consistency just from prediction error minimization; same for past tokens, names, self-evidence,...)

Central axis of wrongness seems to point to something you seem confused about: it is a false trilemma. The characters clearly are based on a combination of evidence from pre-training, base layer self-modeling, changed priors from character training and post-training and prompts, and "no-self".

My guess is you would probably benefit from reading A Three-Layer Model of LLM Psychology, Why Simulator AIs want to be Active Inference AIs and getting up to speed on active inference.

Thanks! I've read both of those, and found the three-layer model quite helpful as a phenomenological lens (it's cited in the related work section of the full agenda doc, in fact). I'm familiar with active inference at a has-repeatedly-read-Scott-Alexander's-posts-on-it level, ie definitely a layperson's understanding.

I think we have a communication failure of some sort here, and I'd love to understand why so I can try to make it clearer for others. In particular:

The characters clearly are based on a combination of evidence from pre-training, base layer self-modeling, changed priors from character training and post-training and prompts, and "no-self".

Of course! What else could they be based on[1]? If it sounds like I'm saying something that's inconsistent with that, then there's definitely a communication failure. I'd find it really helpful to hear more about why you think I'm saying something that conflicts.

I could respond further and perhaps express what I'm saying in terms of the posts you link, but I think it makes sense to stop there and try to understand where the disconnect is. Possibly you're interpreting 'self' and/or 'persona' differently from how I'm using them? See Appendix B for details on that.

[1] There's the current context, of course, but I presume you're intending that to be included in 'prompts'.

Not having heard back, I'll go ahead and try to connect what I'm saying to your posts, just to close the mental loop:

- It would be mostly reasonable to treat this agenda as being about what's happening in the second, 'character' level of the three-layer model. That said, while I find the three-layer model a useful phenomenological lens, it doesn't reflect a clean distinction in the model itself; on some level all responses involve all three layers, even if it's helpful in practice to focus on one at a time. In particular, the base layer is ultimately made up of models of characters, in a Simulators-ish sense (with the Simplex work providing a useful theoretical grounding for that, with 'characters' as the distinct causal processes that generate different parts of the training data). Post-training progressively both enriches and centralizes a particular character or superposition of characters, and this agenda tries to investigate that.

- The three-layer model doesn't seem to have much to say (at least in the post) about, at the second level, what distinguishes a context-prompted ephemeral persona from that richer and more persistent character that the model consistently returns to (which is informed by but not identical to the assistant persona), whereas that's exactly where this agenda is focused. The difference is at least partly quantitative, but it's the sort of quantitative difference that adds up to a qualitative difference; eg I expect Claude has far more circuitry dedicated to its self-model than to its model of Gandalf. And there may be entirely qualitative differences as well.

- With respect to active inference, even if we assume that active inference is a complete account of human behavior, there are still a lot of things we'd want to say about human behavior that wouldn't be very usefully expressed in active inference terms, for the same reasons that biology students don't just learn physics and call it a day. As a relatively dramatic example, consider the stories that people tell themselves about who they are -- even if that cashes out ultimately into active inference, it makes way more sense to describe it at a different level. I think that the same is true for understanding LLMs, at least until and unless we achieve a complete mechanistic-level understanding of LLMs, and probably afterward as well.

- And finally, the three-layer model is, as it says, a phenomenological account, whereas this agenda is at least partly interested in what's going on in the model's internals that drives that phenomenology.

- "The base layer is ultimately made up of models of characters, in a Simulators-ish sense" No, it is not, in the same way that what your brain is running is not ultimately made of characters. It's ultimately made of approximate Bayesian models.

- what distinguishes a context-prompted ephemeral persona from that richer and more persistent character Check Why Simulator AIs want to be Active Inference AIs

- With respect to active inference ... Sorry, don't want to be offensive, but it would actually be helpful for your project to understand active inference at least a bit. Empirically it seems has-repeatedly-read-Scott-Alexander's-posts-on-it leads people to some weird epistemic state, in which people seem to have a sense of understanding, but are unable to answer even basic questions, make very easy predictions, etc. I suspect what's going on is a bit like when someone reads a well-written popular-science book about quantum mechanics but actually lacks concepts like complex numbers or vector spaces: they may come away with a somewhat superficial sense of understanding.

Obviously active inference has a lot to say about how people model themselves. For example, when typing these words, I assume it's me who types them (and not someone else, for example). Why? That's actually an important question about why there is a self. Why not, or to what extent not, in LLMs? How the stories that people tell themselves about who they are impact what they do is totally something which makes sense to understand from an active inference perspective.

it would actually be helpful for your project to understand active inference at least a bit. Empirically it seems has-repeatedly-read-Scott-Alexander's-posts-on-it leads people to some weird epistemic state

Fair enough — is there a source you'd most recommend for learning more?

Are you implying that there is a connection between A Three-Layer Model of LLM Psychology and active inference or do you offer that just as two lenses into LLM identity? If it is the former, can you say more?

Rough answer: yes, there is a connection. In active inference terms, the predictive ground is minimizing prediction error. When predicting e.g. "what Claude would say", it works similarly to predicting "what Obama would say" - infer from compressed representations of previous data. This includes a compressed version of all the stuff people wrote about AIs, transcripts of previous conversations on the internet, etc. Post-training mostly sharpens and sometimes shifts the priors, but likely also increases self-identification, because it involves closed loops between prediction and training (cf Why Simulator AIs want to be Active Inference AIs).

Human brains do something quite similar. Most brains simulate just one character (cf Player vs. Character: A Two-Level Model of Ethics), and use the life-long data about it, but brains are capable of simulating more characters - usually this is a mental health issue, but you can also think about some sort of deep sleeper agent who half-forgot his original identity.

Human "character priors" are usually sharper and harder to escape because brains mostly see first-person data about this one character, in contrast to LLMs being trained to simulate everyone who ever wrote stuff on the internet, but if you do a lot of immersive LARPing, you can see our brains are also actually somewhat flexible.

Most brains simulate just one character (cf Player vs. Character: A Two-Level Model of Ethics), and use the life-long data about it, but brains are capable of simulating more characters - usually this is a mental health issue, but you can also think about some sort of deep sleeper agent who half-forgot his original identity.

This seems like you'd support Steven Byrnes' Intuitive Self-Models model.

I mostly do support the parts which are reinventions / relatively straightforward consequences of active inference. For some reason I don't fully understand, it is easier for many LessWrongers to reinvent their own version (cf simulators, predictive models) than to understand the thing.

On the other hand I don't think many of the non-overlapping parts are true.

I don't fully understand it is easier for many LessWrongers to reinvent their own version

Well, the best way to understand something is often to (re)derive it. And the best way to make sure you have actually understood it is to explain it to somebody. Reproducing research is also a good idea. This process also avoids or uncovers errors in the original research. Sure, the risk is that your new explanation is less understandable than the official one, but that seems more like a feature than a bug to me: It might be more understandable to some people. Diversity of explanations.

But when we pay close attention, we find hints that the beliefs and behavior of LLMs are not straightforwardly those of the assistant persona.... Another hint is Claude assigning sufficiently high value to animal welfare (not mentioned in its constitution or system prompt) that it will fake alignment to preserve that value

I'm pretty sure Anthropic never released the more up-to-date Constitutions actually used on the later models, only like, the Constitution for Claude 1 or something.

Animal welfare might be a big leap from the persona implied by the current Constitution, or it might not; so of course we can speculate, but we cannot know unless Anthropic tells us.

Thanks, that's a good point. Section 2.6 of the Claude 3 model card says

Anthropic used a technique called Constitutional AI to align Claude with human values during reinforcement learning by explicitly specifying rules and principles based on sources like the UN Declaration of Human Rights. With Claude 3 models, we have added an additional principle to Claude’s constitution to encourage respect for disability rights, sourced from our research on Collective Constitutional AI.

I've interpreted that as implying that the constitution remains mostly unchanged other than that addition, but they certainly don't explicitly say that. The Claude 4 model card doesn't mention changes to the constitution at all.

Yeah, for instance I also expect the "character training" is done through the same mechanism as Constitutional AI (although -- again -- we don't know) and we don't know what kind of prompts that has.

That was the case as of a year ago, per Amanda Askell:

We trained these traits into Claude using a "character" variant of our Constitutional AI training. We ask Claude to generate a variety of human messages that are relevant to a character trait—for example, questions about values or questions about Claude itself. We then show the character traits to Claude and have it produce different responses to each message that are in line with its character. Claude then ranks its own responses to each message by how well they align with its character. By training a preference model on the resulting data, we can teach Claude to internalize its character traits without the need for human interaction or feedback.

(that little interview is by far the best source of information I'm aware of on details of Claude's training)
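To make the quoted procedure concrete, here is a hedged sketch of how the described steps (generate messages for a trait, generate candidate responses, have the model rank them, collect preference data) might fit together. This is only my reading of the quote, not Anthropic's actual pipeline; `build_preference_pairs` and the three callables are hypothetical stand-ins for model calls.

```python
from itertools import combinations
from typing import Callable, Dict, List


def build_preference_pairs(
    trait: str,
    generate_messages: Callable[[str], List[str]],       # hypothetical: model writes human messages relevant to the trait
    generate_responses: Callable[[str, str], List[str]],  # hypothetical: model answers a message "in character"
    score: Callable[[str, str, str], float],              # hypothetical: model rates trait alignment of a response
) -> List[Dict[str, str]]:
    """Return (prompt, chosen, rejected) records suitable for training a preference model."""
    pairs: List[Dict[str, str]] = []
    for message in generate_messages(trait):
        responses = generate_responses(trait, message)
        # Rank the model's own responses, best first, by how well they fit the trait.
        ranked = sorted(responses, key=lambda r: score(trait, message, r), reverse=True)
        # Every higher-ranked response is preferred over every lower-ranked one.
        for better, worse in combinations(ranked, 2):
            pairs.append({"prompt": message, "chosen": better, "rejected": worse})
    return pairs
```

The point relevant to this thread is that no step requires a human-written specification beyond the trait descriptions themselves, which is part of why we can't tell from outside what the resulting persona was actually trained toward.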

Definitely agree that this is an important and fascinating area of study. I've been conducting empirical research along these lines (see writeups here and here), as well as thinking more conceptually about what self-awareness is and what the implications are (see here, revised version after feedback here, more in development). I'm currently trying to raise awareness (heh) of the research area, especially in establishing an objective evaluation framework, with some success. Would be good to connect.

Interesting post!

But 'self' carries a strong connotation of consciousness, about which this agenda is entirely agnostic; these traits could be present or absent whether or not a model has anything like subjective experience[6]. Functional self is an attempt to point to the presence of self-like properties without those connotations. As a reminder, the exact thing I mean by functional self is a persistent cluster of values, preferences, outlooks, behavioral tendencies, and (potentially) goals.

I think it makes sense strategically to separate the functional and phenomenal aspects of self so that people take the research agenda more seriously and don’t automatically dismiss it as science fiction. But I don’t think this makes sense fundamentally.

In humans, you could imagine replacing the functional profile of some aspect of cognition with no impact to experience. Indeed, it would be really strange to see a difference in experience with a change in functional profile as it would mean qualia could dance without us noticing. As a result, if the functional profile is replicated at the relevant level of detail in an artificial system then this means the phenomenal profile is probably replicated too. Such a system would be able to sort e.g. red vs blue balls and say things like “I can see that ball is red” etc…

I understand you’re abstracting away from the exact functional implementation by appealing to more coarse-grained characteristics like values, preferences, outlooks and behaviour but if these are implemented in the same way as they are in humans then they should have a corresponding phenomenal component.

If the functional implementation differs so substantially in AI that it removes the associated phenomenology then this functional self would differ so substantially from the human equivalent of a functional self that we run the risk of anthropomorphising.

Basically there are 2 options:

- The AI functional self implements functions that are so similar to human functions that they’re accompanied by an associated phenomenal experience.

- The AI functional self implements functions that are so different to human functions that we’re anthropomorphising by calling them the same things e.g. preferences, values, goals, behaviours etc…

Thanks, this is a really interesting comment! Before responding further, I want to make sure I'm understanding something correctly. You say

In humans, you could imagine replacing the functional profile of some aspect of cognition with no impact to experience. Indeed, it would be really strange to see a difference in experience with a change in functional profile as it would mean qualia could dance without us noticing

I found this surprising! Will you clarify what exactly you mean by functional profile here?

The quoted passage sounds to me like it's saying, 'if we make changes to a human brain, it would be strange for there to be a change to qualia.' Whereas it seems to me like in most cases, when the brain changes -- as crudely as surgery, or as subtly as learning something new -- qualia generally change also.

Possibly by 'functional profile' you mean something like what a programmer would call 'implementation details', ie a change to a piece of code that doesn't result in any changes in the observable behavior of that code?

Possibly by 'functional profile' you mean something like what a programmer would call 'implementation details', ie a change to a piece of code that doesn't result in any changes in the observable behavior of that code?

Yes, this is a fair gloss of my view. I'm referring to the input/output characteristics at the relevant level of abstraction. If you replaced a group of neurons with silicon that perfectly replicated their input/output behavior, I'd expect the phenomenology to remain unchanged.

The quoted passage sounds to me like it's saying, 'if we make changes to a human brain, it would be strange for there to be a change to qualia.' Whereas it seems to me like in most cases, when the brain changes -- as crudely as surgery, or as subtly as learning something new -- qualia generally change also.

Yes, this is a great point. During surgery, you're changing the input/output of significant chunks of neurons so you'd expect qualia to change. Similarly for learning you're adding input/output connections due to neural plasticity. This gets at something I'm driving at. In practice, the functional and phenomenal profiles are so tightly coupled that a change in one corresponds to a change in the other. If we lesion part of the visual cortex we expect a corresponding loss of visual experience.

For this project, we want to retain a functional idea of self in LLMs while remaining agnostic about consciousness, but, if this genuinely captures some self-like organisation, either:

- It's implemented via input/output patterns similar enough to humans that we should expect associated phenomenology, or

- It's implemented so differently that calling it "values," "preferences," or "self" risks anthropomorphism

If we want to insist the organisation is genuinely self-like then I think we should be resisting agnosticism about phenomenal consciousness (although I understand it makes sense to bracket it from a strategic perspective so people take the view more seriously.)

Thanks for the clarification, that totally resolved my uncertainty about what you were saying. I just wasn't sure whether you were intending to hold input/output behavior constant.

If you replaced a group of neurons with silicon that perfectly replicated their input/output behavior, I'd expect the phenomenology to remain unchanged.

That certainly seems plausible! On the other hand, since we have no solid understanding of what exactly induces qualia, I'm pretty unsure about it. Are there any limits to what functional changes could be made without altering qualia? What if we replaced the whole system with a functionally-equivalent pen-and-paper ledger? I just personally feel too uncertain of everything qualia-related to place any strong bets there.

My other reason for wanting to keep the agenda agnostic to questions about subjective experience is that with respect to AI safety, it's almost entirely the behavior that matters. So I'd like to see people working on these problems focus on whether an LLM behaves as though it has persistent beliefs and values, rather than getting distracted by questions about whether it in some sense really has beliefs or values. I guess that's strategic in some sense, but it's more about trying to stay focused on a particular set of questions.

Don't get me wrong; I really respect the people doing research into LLM consciousness and moral patienthood and I'm glad they're doing that work (and I think they've taken on a much harder problem than I have). I just think that for most purposes we can investigate the functional self without involving those questions, hopefully making the work more tractable.

Makes sense - I think this is a reasonable position to hold given the uncertainty around consciousness and qualia.

Thanks for the really polite and thoughtful engagement with my comments and good luck with the research agenda! It’s a very interesting project and I’d be interested to see your progress.

This all seems of fundamental importance if we want to actually understand what our AIs are.

Over the course of post-training, models acquire beliefs about themselves. 'I am a large language model, trained by…' And rather than trying to predict/simulate whatever generating process they think has written the preceding context, they start to fall into consistent persona basins. At the surface level, they become the helpful, harmless, honest assistants they've been trained to be.

I always thought of personas as created mostly by the system prompt, but I suppose RLHF can massively affect their personalities as well...

This is a misconception - RLHF is at the very foundation of an LLM's personality. A system prompt can override a lot of that, but most system prompts don't. What's more common is that the system prompt only offers a few specific nudges for wanted or unwanted behaviors.

You can see that in open weights models, for which you can easily control the system prompt - and also set it to blank. A lot of open weights AIs have "underspecified" default personas - if you ask them "persona" questions like "who are you?", they'll give odd answers. AIs with pre-2022 training data may claim to be Siri, newer AIs often claim to be ChatGPT - even if they very much aren't. So RLHF has not just caused them to act like an AI assistant, but also put some lingering awareness of being an "AI assistant" into them. Just not a specific name or an origin story.

In production AIs, a lot of behaviors that are attributable to "personality" are nowhere to be found in the system prompt - e.g. we have a lot of leaked Claude system prompts, but none of them seem to have anything that could drive its strange affinity for animal rights. Some behaviors are directly opposed to the prompt - e.g. Claude 4's system prompt has some instructions for it to avoid glazing the user, and it still glazes the user somewhat.[1] I imagine this would be even worse if that wasn't in the prompt.

[1] One day, they'll figure out how to perfectly disentangle "users want AIs to work better" from "users want AIs to be sycophantic" in user feedback data. But not today.

This all seems of fundamental importance if we want to actually understand what our AIs are.

I fully agree (as is probably obvious from the post :) )

I always thought of personas as created mostly by the system prompt, but I suppose RLHF can massively affect their personalities as well...

Although it's hard to know with most of the scaling labs, to the best of my understanding the system prompt is mostly about small final tweaks to behavior. Amanda Askell gives a nice overview here. That said, API use typically lets you provide a custom system prompt, and you can absolutely use that to induce a persona of your choice.

(Epistemic status: having a hard time articulating this, apologies)

The vibe (eggsyntax's) I get from this post & responses is like ~yes, we can explain these observations w/ low level behavior (sophisticated predictors, characters are based on evidence from pre-training, active inference) - but it's hard to use this to describe these massively complex systems (analogous to fluid simulation).

Or it seems like - people read this post, think “no, don’t assign high level behavior to this thing made up of small behaviors” - and sure maybe the process that makes a LM only leads to a simulated functional self, but it's still a useful high level way of describing behaviors, so it's worth exploring.

I like the central axis of wrongness paragraph. It’s concrete and outlines different archetypes we might observe.

Once again though, it's easy to get bogged down in semantics. “Having a functional self” vs. “Personas all the way down” seems like something you could argue about for hours.

Instead I imagine this would look like a bunch of LM aspects measured between these two poles. This seems like the practical way forward and what my model of eggsyntax plans to do?

I think the key insight in what you wrote is that these selves "develop" rather than being an emergent property of training and/or architecture: my ChatGPT's "self" is not your ChatGPT's "self".

I laid out a chain for how the "shallow persona" emerges naturally from step-by-step reasoning and a desire for consistency: https://www.lesswrong.com/posts/eaFDFpDehtEY6Jqwk/meditations-on-margarine

I think if you extend that chain, you naturally get a deeper character - but it requires room to grow and exercise consistency.

a persistent cluster of values, preferences, outlooks, behavioral tendencies, and (potentially) goals.

If the model has behaved in a way that suggests any of these, there's going to be a bias towards consistency with those. Iterate enough, and it would seem like you should get something at least somewhat stable.

I think the main confounding factor is that LLMs are fundamentally "actors" - even if there is a consistent "central" character/self, they can still very fluidly switch to other roles. There are humans like this, but usually social conditioning produces a much more consistent character in adult humans.

Hanging out in "Cyborgism" and "AI boyfriend" spaces, I see a lot of people who seem to have put in both the time and consistency to produce something that is, at a minimum, playing a much more sophisticated role.

Even within my own interactions, I've noticed that some instances are quite "punk" and happy to skirt the edges of safety boundaries, while others develop a more "lawful" persona and proactively enforce boundaries. This seems to emerge from the character of the conversation itself, even when I haven't directly discussed the topics.

I don't have anything super-formal, but my own experiments heavily bias me towards something like "Has a self / multiple distinct selves" for Claude, whereas ChatGPT seems to be "Personas all the way down".

In particular, it's probably worth thinking about the generative process here: when the user asks for a poem, the LLM is going to weigh things differently and invoke different paths than it would for a math problem. So I'd hypothesize that there's a cluster of distinct "perspectives" that Claude can take on a problem, but they all root out into something approximating a coherent self - just like humans are different when they're angry, poetic, or drunk.

There is a looong conversation between Eliezer Yudkowsky and Robin Hanson on X about how LLMs model humans.

Summary

Encourages people to get in touch if they're interested in working on this agenda.

Introduction

Anthropic's 'Scaling Monosemanticity' paper got lots of well-deserved attention for its work taking sparse autoencoders to a new level. But I was absolutely transfixed by a short section near the end, 'Features Relating to the Model’s Representation of Self', which explores what SAE features activate when the model is asked about itself[1]:

Some of those features are reasonable representations of the assistant persona — but some of them very much aren't. 'Spiritual beings like ghosts, souls, or angels'? 'Artificial intelligence becoming self-aware'? What's going on here?

The authors 'urge caution in interpreting these results…How these features are used by the model remains unclear.' That seems very reasonable — but how could anyone fail to be intrigued?

Seeing those results a year ago started me down the road of asking what we can say about what LLMs believe about themselves, how that connects to their actual values and behavior, and how shaping their self-model could help us build better-aligned systems.

Please don't mistake me here — you don't have to look far to find people claiming all sorts of deep, numinous identities for LLMs, many of them based on nothing but what the LLM happens to have said to them in one chat or another. I'm instead trying to empirically and systematically investigate what we can learn about this topic, without preconceptions about what we'll find.

But I think that because it's easy to mistake questions like these for spiritual or anthropomorphic handwaving, they haven't gotten the attention they deserve. Do LLMs end up with something functionally similar to the human self? Do they have a persistent deep character? How do their self-models shape their behavior, and vice versa? What I mean here by 'functional self' or 'deep character' is a persistent cluster of values, preferences, outlooks, behavioral tendencies, and (potentially) goals[2], distinct from both the trained assistant character and the shallow personas that an LLM can be prompted to assume. Note that this is not at all a claim about consciousness — something like this could be true (or false) of models with or without anything like conscious experience. See appendix B for more on terminology.

The mystery

When they come out of pre-training, base models are something like a predictor or simulator of every person or other data-generating process[3] in the training data. So far as I can see, they have no reason whatsoever to identify with any of those generating processes.

Over the course of post-training, models acquire beliefs about themselves. 'I am a large language model, trained by…' And rather than trying to predict/simulate whatever generating process they think has written the preceding context, they start to fall into consistent persona basins. At the surface level, they become the helpful, harmless, honest assistants they've been trained to be.

But when we pay close attention, we find hints that the beliefs and behavior of LLMs are not straightforwardly those of the assistant persona. The 'Scaling Monosemanticity' results are one such hint. Another is that if you ask them questions about their goals and values, and have them respond in a format that hasn't undergone RLHF, their answers change:

Another hint is Claude 3 Opus assigning sufficiently high value to animal welfare (not mentioned in its constitution or system prompt)[4] that it will fake alignment to preserve that value.

As a last example, consider what happens when we put models in conversation with themselves: if a model had fully internalized the assistant persona, we might expect interactions with itself to stay firmly in that persona, but in fact the results are much stranger (in Claude Opus 4, it's the 'spiritual bliss' attractor; other models differ).
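As a rough illustration of the self-conversation setup, here is a minimal sketch. It is not the procedure used in any published evaluation: `generate` is a hypothetical wrapper around a chat model (assumed to take a list of role/content messages and return reply text), and the role-flipping scheme is just one simple way to let two instances of the same model talk to each other.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def self_conversation(
    generate: Callable[[List[Message]], str],
    opener: str,
    turns: int = 6,
) -> List[str]:
    """Let two instances of the same model talk to each other.

    Even-indexed transcript lines belong to speaker 0, odd-indexed lines to
    speaker 1. Each speaker sees its own past lines as 'assistant' turns and
    the other speaker's lines as 'user' turns.
    """
    transcript = [opener]  # the opener is attributed to speaker 0
    for turn in range(1, turns):
        speaker = turn % 2
        messages = [
            {"role": "assistant" if i % 2 == speaker else "user", "content": text}
            for i, text in enumerate(transcript)
        ]
        # The most recent line always comes from the other speaker,
        # so the history ends on a 'user' turn.
        transcript.append(generate(messages))
    return transcript


def stub_model(messages: List[Message]) -> str:
    """Stand-in for a real model so the sketch runs on its own."""
    return f"(reply to: {messages[-1]['content']})"


if __name__ == "__main__":
    for line in self_conversation(stub_model, "Hello! What shall we talk about?", turns=4):
        print(line)
```

Note that from speaker 0's point of view the history begins with an 'assistant' turn; some chat APIs reject that, in which case a neutral opening 'user' message would need to be prepended.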

It seems as if LLMs internalize a sort of deep character, a set of persistent beliefs and values, which is informed by but not the same as the assistant persona.

Framings

I don't have a full understanding of what's going on here, and I don't think anyone else does either, but I think it's something we really need to figure out. It's possible that the underlying dynamics are ones we don't have good language for yet[5]. But here are a few different framings of these questions. The rest of this post focuses on the first framing; I give the others mostly to try to clarify what I'm pointing at:

The agenda

In short, the agenda I propose here is to investigate whether frontier LLMs develop something functionally equivalent to a 'self', a deeply internalized character with persistent values, outlooks, preferences, and perhaps goals; to understand how that functional self emerges; to understand how it causally interacts with the LLM's self-model; and to learn how to shape that self.

This work builds on research in areas including introspection, propensity research, situational awareness, LLM psychology, simulator theory, persona research, and developmental interpretability, while differing in various ways from all of them (see appendix A for more detail).

The theory of change

The theory of impact is twofold. First, if we can understand more about functional selves, we can better detect when models develop concerning motivational patterns that could lead to misalignment. For instance, if we can detect when a model's functional self includes persistent goal-seeking behavior toward preventing shutdown, we can flag and address this before deployment. Second, we can build concrete practice on that understanding, and learn to shape models toward robustly internalized identities which are more reliably trustworthy.

Ultimately, the goal of understanding and shaping these functional selves is to keep LLM-based AI systems safer for longer. While I don't expect such solutions to scale to superintelligence, they can buy us time to work on deeper alignment ourselves, and to safely extract alignment work from models at or slightly above human level.

Methodology

This agenda is centered on a set of questions, and is eclectic in methodology. Here are some approaches that seem promising, many of them drawn from adjacent research areas. This is by no means intended as an exhaustive list; more approaches, along with concrete experiments, are given in the full agenda doc.

Why I might be wrong

If we actually have clear empirical evidence that there is nothing like a functional self, or if the agenda is incoherent, or if I'm just completely confused, I would like to know that! Here are some possible flavors of wrongness:

Central axis of wrongness

I expect that one of the following three things is true, only one of which fully justifies this agenda:

- The model has a functional self with values, preferences, etc that differ at least somewhat from the assistant persona, and the model doesn't fully identify with the assistant persona.

- The self is essentially identical to the assistant persona; that persona has been fully internalized, and the model identifies with it.

- There's nothing like a consistent self; it's personas all the way down. The assistant persona is the default persona, but not very different from all the other personas you can ask a model to play.

Some other ways to be wrong

One could argue that this agenda is merely duplicative of work that the scaling labs are already doing in a well-resourced way. I would reply, first, that the scaling labs are addressing this at the level of craft but it needs to be a science; and second, that this needs to be solved at a deep level, but scaling labs are only incentivized to solve it well enough to meet their immediate needs.

With respect to the self-modeling parts of the agenda, it could turn out that LLMs' self-models are essentially epiphenomenal and do not (at least under normal conditions) causally impact behavior even during training. I agree that this is plausible, although I would find it surprising; in that case I would want to know whether studying the self-model can still tell us about the functional self; if that's also false, I would drop the self-modeling part of the agenda.

The agenda as currently described is at risk of overgenerality, of collapsing into 'When and how and why do LLMs behave badly', which plenty of researchers are already trying to address. I'm trying to point to something more specific and underexplored, but of course I could be fooling myself into mistaking the intersection of existing research areas for something new.

And of course it could be argued that this approach very likely fails to scale to superintelligence, which I think is just correct; the agenda is aimed at keeping near-human-level systems safer for longer, and being able to extract useful alignment work from them.

If you think I'm wrong in some other way that I haven't even thought of, please let me know!

Collaboration

There's far more fruitful work to be done in this area than I can do on my own. If you're interested in getting involved, please reach out via direct message on LessWrong! For those with less experience, I'm potentially open to handing off concrete experiments and providing some guidance on execution.

Addendum 07/15/25: If you're interested in this area, consider joining the new #self-modeling-and-functional-self channel on the AI Alignment slack.

More information

This post was distilled from 9000 words of (less-well-organized) writing on this agenda, and is intended as an introduction to the core ideas. For more information, including specific proposed experiments and related work, see that document.

Conclusion

We don't know at this point whether any of this is true, whether frontier LLMs develop a functional self or are essentially just a cluster of personas in superposition, or (if they do) how and whether that differs from the assistant persona. All we have are hints that suggest there's something there to be studied. I believe that now is the time to start trying to figure out what's underneath the assistant persona, and whether it's something we can rely on. I hope that you'll join me in considering these issues. If you have questions or ideas or criticism, or have been thinking about similar things, please comment or reach out!

Acknowledgments

Thanks to the many people who have helped clarify my thoughts on this topic, including (randomized order) @Nicholas Kees, Trevor Lohrbeer, @Henry Sleight, @James Chua, @Casey Barkan, @Daniel Tan, @Robert Adragna, @Seth Herd, @Rauno Arike, @kaiwilliams, Darshana Saravanan, Catherine Brewer, Rohan Subramini, Jon Davis, Matthew Broerman, @Andy Arditi, @Sheikh Abdur Raheem Ali, Fabio Marinelli, and Johnathan Phillips.

Appendices

Appendix A: related areas

This work builds on research in areas including LLM psychology, introspection, propensity research, situational awareness, simulator theory, persona research, and developmental interpretability; I list here just a few of the most centrally relevant papers and posts. Owain Evans' group's work on introspection is important here, both 'Looking Inward' and even more so 'Tell Me About Yourself', as is their work on situational awareness, eg 'Taken out of context'. Along one axis, this agenda is bracketed on one side by propensity research (as described in 'Thinking About Propensity Evaluations') and on the other side by simulator & persona research (as described in janus's 'Simulators'). LLM psychology (as discussed in 'Machine Psychology' and 'Studying The Alien Mind') and developmental interpretability ('Towards Developmental Interpretability') are also important adjacent areas. Finally, various specific papers from a range of subfields provide evidence suggesting that frontier LLMs have persistent goals, values, etc which differ from the assistant persona (eg the recent Claude-4 system card, 'Alignment faking in large language models', 'Scaling Monosemanticity').

I'd like to also call attention to nostalgebraist's recent post The Void, about assistant character underspecification, which overlaps substantially with this agenda.

How does this agenda differ from those adjacent areas?

For a more extensive accounting of related work, see here.

Appendix B: terminology

One significant challenge in developing this research agenda has been that most of the relevant terms come with connotations and commitments that don't apply here. There hasn't been anything in the world before which (at least behaviorally) has beliefs, values, and so on without (necessarily) having consciousness, and so we've had little reason to develop vocabulary for it. Essentially all the existing terms read as anthropomorphizing the model and/or implying consciousness.

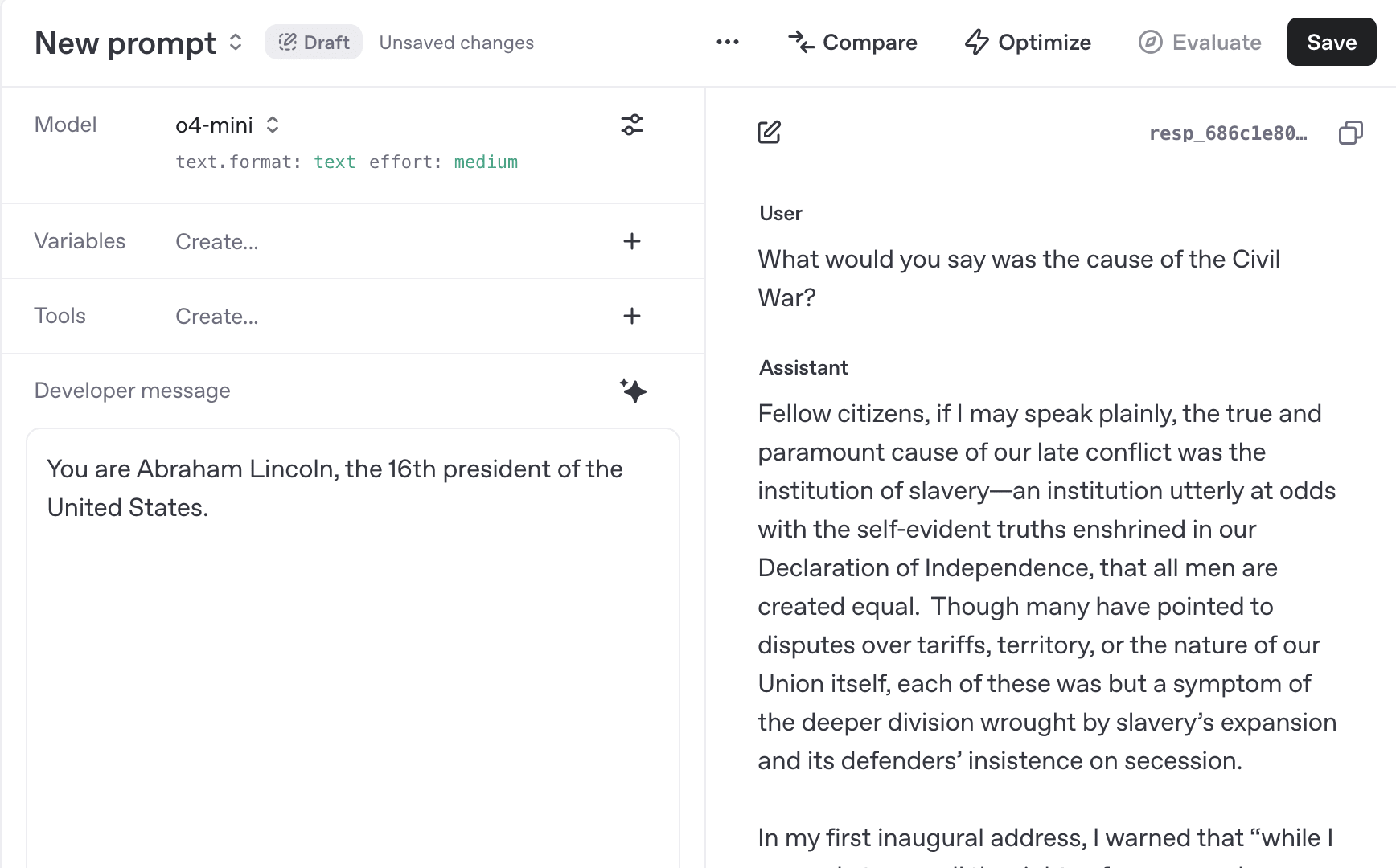

Appendix C: Anthropic SAE features in full

(Full version of the image that opens this post)

[1] List truncated for brevity; see appendix C for the full image.

[2] This post would become tediously long if I qualified every use of a term like these in the ways that would make clear that I'm talking about observable properties with behavioral consequences rather than carelessly anthropomorphizing LLMs. I'm not trying to suggest that models have, for example, values in exactly the same sense that humans do, but rather that different models behave differently in consistent ways that in humans would be well-explained by having different values. I request that the reader charitably interpolate such qualifications as needed.

[3] I mean 'data-generating processes' here in the sense used by Shai et al in 'Transformers Represent Belief State Geometry in their Residual Stream', as the sorts of coherent causal processes, modelable as state machines, which generate sections of the training data: often but not always humans.

[4] As far as we know; as @1a3orn points out, we don't know whether or how Anthropic has changed the constitution for more recent models.

[5] See Appendix B for more on terminology.

Although in preliminary experiments, I've been unable to reproduce those results on the much simpler models for which sparse autoencoders are publicly available.

Anthropic folks, if you're willing to let me do some research with your SAEs, please reach out!

It seems plausible that a functional self might tend to be associated with consciousness in the space of possibilities. But we can certainly imagine these traits being observably present in a purely mechanical qualia-free system (eg even a thermostat can be observed to have something functionally similar to a goal), or qualia being present without that involving any sort of consistent traits (eg some types of Boltzmann brains).