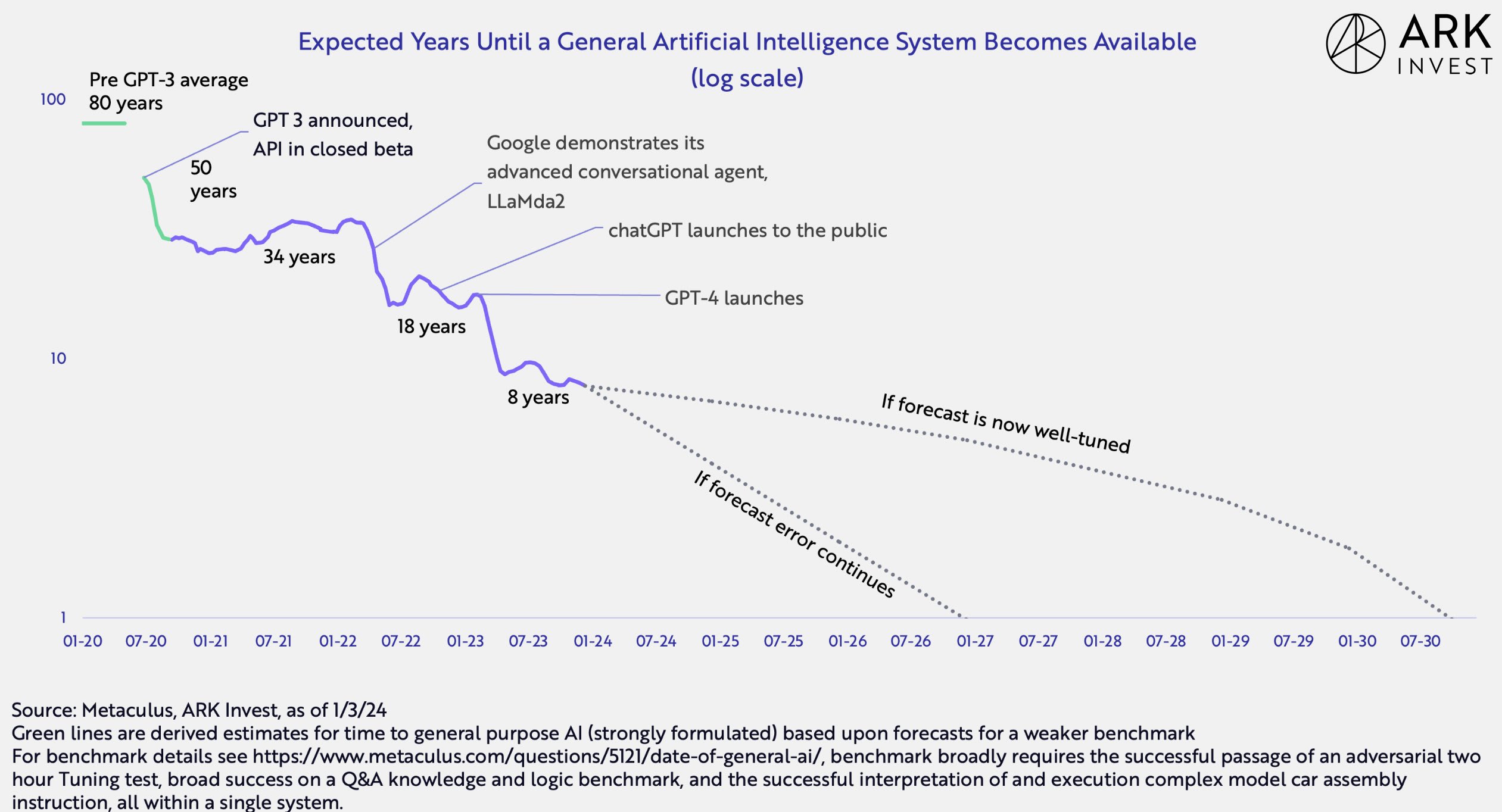

AGI timelines post-GPT-3 exhibit reverse Hofstadter’s law: AI advances quicker than predicted, even when taking into account reverse Hofstadter’s law.

https://x.com/wintonARK/status/1742979090725101983/photo/1

Pascal's reverse-mugging

One dark evening, Pascal is walking down the street, and a stranger slithers out of the shadows.

"Let me tell you something," the stranger says. "There is a park on the route you walk every day, and in the park is an apple tree. The apples taste very good; I would say they have a value of $5, and no one will stop you from taking them. However -- I am a matrix lord, and I have claimed these apples for myself. I will create and kill 3^^^3 people if you take any of these apples."

By reasoning similar to that which leads most people to reject the standard Pascal's mugging, it seems reasonable to ignore the apple-man's warning and take an apple (provided that the effort involved in picking it is trivial, that Pascal knows the apples are safe and legal to pick, etc.). Still, I suggest that it intuitively seems more reasonable for Pascal to avoid taking the apples than it does for him to pay the mugger $5. Yet the difference between the two cases amounts to an act-omission distinction. I raise five possibilities:

- Some of the counterintuitiveness of the idea that Pascal should pay the mugger derives from an act-omission distinction, which is not rational, and so we should reduce our incredulity at Pascalianism accordingly. In other words, however crazy we think it would be for Pascal to refrain from picking an apple, we should lower our estimate of the craziness of giving in to a standard mugging until it is no greater than that.

- Our intuitions about the apple-picking are less correct than those about the standard mugging, and we should raise our estimation of the craziness of avoiding taking the apple to match that of paying the mugger.

- An act-omission distinction is valid, and it is indeed more reasonable to refrain from picking the apple than it is to pay the mugger.

- There was never an intuitive difference between the two situations in the first place.

- Some fifth thing.
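
A rough way to see why the two cases look structurally alike, as a sketch under a naive expected-value framing. The symbols are my own assumptions and not part of the scenario: p is Pascal's credence that the stranger's claim is true (with a subscript for each case), N = 3^^^3 is the number of people at stake, and v is the dollar disvalue assigned to one death.

```latex
\begin{align*}
\text{Standard mugging: pay the \$5 iff } & p_{\text{mug}} \cdot N \cdot v > 5 \\
\text{Reverse mugging: forgo the \$5 apple iff } & p_{\text{apple}} \cdot N \cdot v > 5
\end{align*}
```

If the two credences are equal, the conditions are identical, so on this framing any intuitive gap between the cases has to come from something the inequality leaves out, such as the act-omission distinction or a difference in how credible each stranger seems.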

Is it relevant whether you knew about the apples before the apple man told you about them? If you didn't know, then the least exploitable response to a message that looks adversarial is to pretend you didn't hear it, which would mean not eating the apples.

Also, Pascal's mugging is worth coordinating against: if everyone gives the $5, the stranger rapidly accumulates wealth via dishonesty. If no one eats the apples, the stranger merely ends up with a tree whose apples go uneaten, which is less caustic.

One large difference between the scenarios is the answer to "what's in it for the stranger?"

In the standard Pascal's Mugging, the answer is "they get $5". Clear and understandable motivation, it is after all a mugging (or at least a begging). It may or may not escalate to violence or other unpleasantness if you refuse to give them $5, though there's a decent chance that if you do give them $5 then they'll bug you and other people again and again.

In this scenario it's much less clear. What they're saying is obviously false, but they don't obviously get much that would incentivize bugging you again if you pretend to agree with them. It's a bit disconcerting that they've been tracking your movements every day, but it's not like you particularly lose anything by not eating an apple that they've warned you against eating and you weren't planning to eat anyway. Frankly, even without them claiming to own the apples, I'd be pretty disinclined to eat one that this stalker has pointed out to me.

Been telling LLMs to behave as if they and the user really like this Kierkegaard quote (to reduce sycophancy). Giving decent results so far.