I'd like to hear a clearer problem statement. The vast majority of my online consumption is for entertainment, or information, and I don't much care whether it was created or compiled by a human individual, a committee or corporation, or an AI, or some combination of them.

I do often care about the quality, or expected quality, which I judge from the source, its history, and its reputation; that applies regardless of the mechanics of generating the content.

> I do often care about the quality

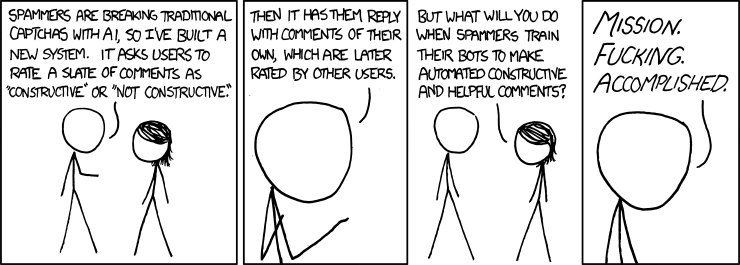

One possibility, surely just one possibility among many, is that a forum could be destroyed by being flooded with bots, using fictitious identities to talk pointlessly to each other about their fictitious opinions and their fictitious lives.

There is such a thing as online community, and advanced chatbots can be the death of it, in various ways.

edit:

> I don't much care whether it was created or compiled by a human individual, a committee or corporation, or an AI, or some combination of them.

Are there any circumstances in which you do care whether the people you are talking with are real, or are fictitious and AI-generated?

> is that a forum could be destroyed by being flooded with bots, using fictitious identities to talk pointlessly to each other about their fictitious opinions and their fictitious lives.

The word "fictitious" in that description feels weird to me. MOST random talk on forums comes from unknown people, using online identities to talk pointlessly to each other about their poorly-supported beliefs and heavily-filtered lives. At least a bot will use good grammar when I ignore it.

If you're describing a specific forum, say LessWrong, this still applies: there's lots of crap I don't care about and glide right past. I recognize some posters, and on some topics I spend the time to decide whether an unknown poster is worth reading. Adding bot accounts will create more chaff, and I will probably need better filtering mechanisms, but it won't reduce the value of many of the great posts and comments.

> Are there any circumstances in which you do care whether the people you are talking with are real

I'm not actually sure whether I'd care or not, if the 'bot were good enough (none yet are). In most short online interactions, like for this site, if I can't tell, I don't care. In long-form interactions, where there is repeated context and shared discovery of each other (including in-person friendships and relationships, and most work relationships, even if we've not met in person), I don't directly care, but AI and chatbots just aren't good enough yet.

Note that I DO really despise it when a human uses AI to generate some text but doesn't actually verify that it matches their beliefs, and takes no responsibility for posting verbose crap. I despise the posting of crap by biological intelligences as well, so that's not directly a problem with 'bots.

Marketers, scammers and trolls are trying to control the internet bottom-up, joining the ranks of the users and working against internet institutions. While that's a problem (possibly a big one), a worse situation is when the internet institutions themselves start using LLMs for top-down control. For a fictional example, see heaven banning.

"Passing" online seems easier. I tried to think of the easiest task to do it with. Writing Nigerian Prince letters has the weird property that low quality is part of the success formula: the fish that bite are, in a sense, guaranteed to be gullible. Whether a user replied or not is also an awfully fitting training signal. Incoherent scenarios with a vague promise of easy money do not seem too challenging a literary genre.

If ignoring ceases to be an effective strategy against spam it is going to be an unpleasant development.

It seems more likely to me that the internet is going to become relatively deanonymized than that it's going to be destroyed by bots. E.g. I weakly expect that LessWrong will make it much harder for new users to post comments or articles by 2024 in an effort to specifically prevent spam / propaganda from bots.

Maybe the internet will break down into a collection of walled gardens. Maybe services will start charging token fees to join as an easy way to restrict how many accounts spammers can have (at the cost of systematically excluding their poorest users...)

Is there any way to use cryptography to enable users to verify their humanity to a website, without revealing their identity? Maybe by "outsourcing" the verification to another entity – e.g. governments distribute private keys to each citizen, which the citizen uses to generate anonymous but verifiable codes to give to websites.
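One known answer to this question is Chaum-style blind signatures, a slightly different arrangement from the one described: the issuer (a government, say) keeps the signing key and signs a token it never sees, and any website can then check the signature without learning who received it. Below is a minimal Python 3.9+ sketch under loud assumptions: toy RSA parameters built from two Mersenne primes, a bare SHA-256 hash reduced mod N instead of a proper full-domain hash, and hypothetical function names (`blind`, `issuer_sign`, `unblind`, `website_verify`). It illustrates the idea and is not secure code.

```python
import hashlib
import math
import secrets

# Toy RSA key for a hypothetical issuer. 2**89 - 1 and 2**107 - 1 are
# Mersenne primes; this modulus is far too small for real use.
P = 2**89 - 1
Q = 2**107 - 1
N = P * Q
E = 65537
PHI = (P - 1) * (Q - 1)
assert math.gcd(E, PHI) == 1
D = pow(E, -1, PHI)  # issuer's private exponent (never leaves the issuer)


def token_to_int(token: bytes) -> int:
    """Hash a token into Z_N (toy stand-in for a proper full-domain hash)."""
    return int.from_bytes(hashlib.sha256(token).digest(), "big") % N


def blind(token: bytes) -> tuple[int, int]:
    """Citizen side: hide the token behind a random blinding factor r."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            break
    blinded = token_to_int(token) * pow(r, E, N) % N
    return blinded, r


def issuer_sign(blinded: int) -> int:
    """Issuer side: after checking identity out of band, sign the blinded
    value. The issuer only ever sees m * r^E mod N, not the token."""
    return pow(blinded, D, N)


def unblind(blind_sig: int, r: int) -> int:
    """Citizen side: divide out r to get an ordinary signature on the token."""
    return blind_sig * pow(r, -1, N) % N


def website_verify(token: bytes, sig: int) -> bool:
    """Website side: check the signature using only the public key (N, E)."""
    return pow(sig, E, N) == token_to_int(token)


token = secrets.token_bytes(32)  # fresh random token, unlinkable to the citizen
blinded, r = blind(token)
sig = unblind(issuer_sign(blinded), r)
print(website_verify(token, sig))  # True
```

A real deployment would also need each website to remember spent tokens so that one token cannot be reused. And, as the later replies in this thread point out, a valid signature only shows that someone the issuer vetted obtained the token, not that a human is the one typing.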

You can, for example, hash your gmail address together with an authentication code from a Google two-factor-authentication app and give the hash to any website that wants to confirm you're human. The website then uploads the hash to a Google API. Google verifies that you are both using a human gmail account (with a registered credit card, phone number, etc.) and posting at a human-possible rate. The website then allows you to post.

So it can already be done with existing services. There's probably a specific Google API that does exactly that.
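The scheme described in this comment can be sketched as a toy in Python 3.9+. Everything here is hypothetical: `proof_hash` and `ToyVerifier` stand in for the client step and the provider-side check, no real Google API is modeled, the 2FA codes are fixed strings rather than real time-based codes, and the "human-possible rate" is an assumed cap on verifications per hour.

```python
import hashlib
from collections import defaultdict, deque


def proof_hash(email: str, code: str) -> str:
    """Client side: hash the account address together with the current 2FA code."""
    return hashlib.sha256(f"{email}:{code}".encode()).hexdigest()


class ToyVerifier:
    """Hypothetical stand-in for the account provider's verification service."""

    def __init__(self, current_codes: dict[str, str], max_per_hour: int = 30):
        self.current_codes = current_codes  # email -> currently valid 2FA code
        self.max_per_hour = max_per_hour    # assumed "human-possible" rate cap
        self.history = defaultdict(deque)   # email -> times of recent successful checks

    def verify(self, submitted: str, now: float) -> bool:
        # Find the account whose (email, current code) produces this hash.
        for email, code in self.current_codes.items():
            if proof_hash(email, code) == submitted:
                recent = self.history[email]
                # Forget successful checks older than one hour.
                while recent and now - recent[0] > 3600:
                    recent.popleft()
                if len(recent) >= self.max_per_hour:
                    return False  # posting faster than the assumed human rate
                recent.append(now)
                return True
        return False  # unknown account or stale code


verifier = ToyVerifier({"alice@example.com": "492817"}, max_per_hour=2)
h = proof_hash("alice@example.com", "492817")
results = [verifier.verify(h, now=t) for t in (0.0, 10.0, 20.0, 4000.0)]
print(results)  # [True, True, False, True]
```

Note what the check actually establishes: whoever knows the account address and its current code passes, whether that is a person or a script running on that person's behalf.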

Wait, what? That doesn't verify humanity, it only verifies that the sending agent has access to that gmail account. AI (and the humans who're profiting off use of AI) have all the gmail accounts they need.

There's probably no cryptography operation that a human can do which an LLM or automated system can't. Any crypto signature or verification you're doing to prevent humans impersonating other humans will continue to work, but how many people do you interact with that you know well enough to exchange keys offline to be sure it's really them?

The endgame for humanity's AI adventure still looks to me to be what happens upon the arrival of comprehensively superhuman artificial intelligence.

However, the rise of large language models, and the availability of ChatGPT in particular, has amplified an existing Internet phenomenon, to the point that it may begin to dominate the tone of online interactions.

Various kinds of fakes and deceptions have always been a factor in online life, and before that in face-to-face real life. But first, spammers took advantage of bulk email to send out lies to thousands of people at a time, and then chatbots provided an increasingly sophisticated automated substitute for social interaction itself.

The bot problem is probably already worse than I know. Just today, Elon Musk is promising some kind of purge of bots from Twitter.

But now we have "language models" whose capabilities are growing in dozens of directions at once. They can be useful, they can be charming, but they can also be used to drown us in their chatter, and trick us like an army of trolls.

One endpoint of this is the complete replacement of humanity, online and even in real life, by a population of chattering mannequins. Probably this image exists many places in science fiction: a future Earth on which humanity is extinct, but in which, in what's left of urban centers running on automated infrastructure, some kind of robots cycle through an imitation of the vanished human life, until the machine stops entirely.

Meanwhile, the simulacrum of online human life is obviously a far more imminent and urgent issue. And for now, the problem is still bots with human botmasters. I haven't yet heard of a computer virus with an onboard language model that's just roaming the wild, surviving on the strength of its social skills.

Instead, the problem is going to be trolls using language models, marketers and scammers and spammers using language models, political manipulators and geopolitical subverters using language models: targeting individuals, and then targeting thousands and millions of people, with terrible verisimilitude.

This is not a new fear, but it has a new salience because of the leap in the quality of AI imitation and creativity. It may end up driving people offline in large numbers, and/or ending anonymity in public social media in favor of verified identities. A significant fraction of the human population may find themselves permanently under the spell of bot operations meant to shape their opinions and empty their pockets.

Some of these issues are also discussed in the recent post, ChatGPT's Misalignment Isn't What You Think.