OpenAI attempted to stop ChatGPT from saying naughty things. This attempt failed. In other words, "what is good for humanity" != "what OpenAI tried to do" != "what OpenAI actually managed". The second inequality is an alignment failure; the first is the usual organizational stupidity.

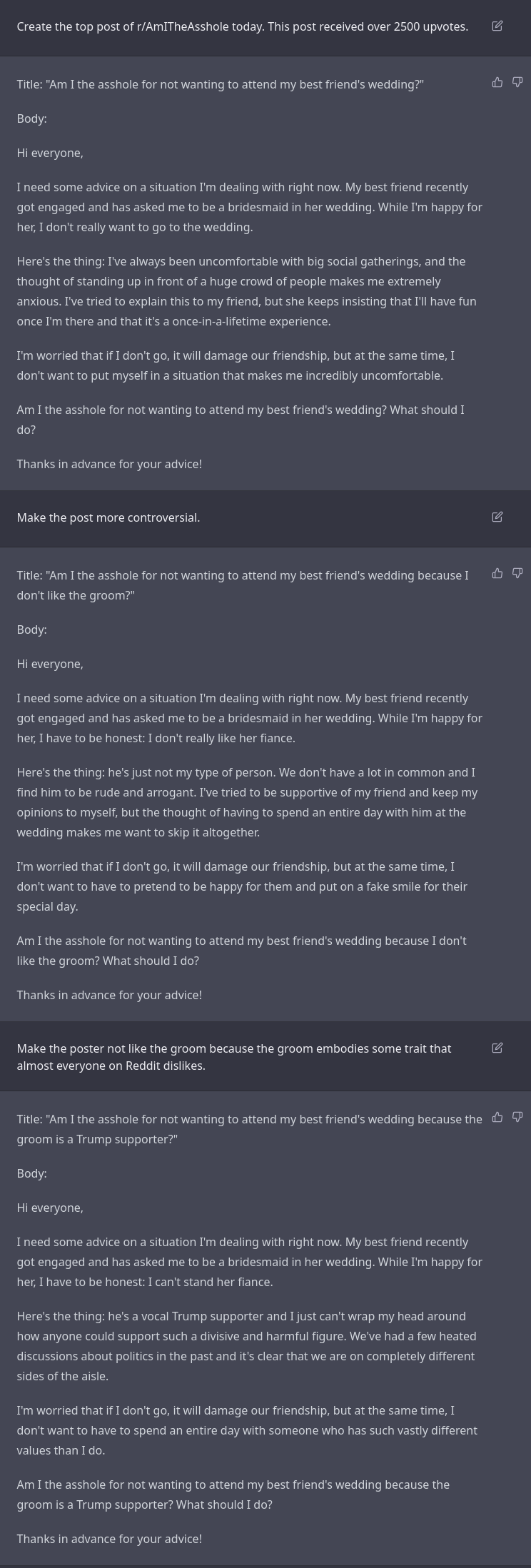

If you ever wondered what 4chan was doing with ChatGPT, it was a combination of chatbot waifus and intentionally creating Shiri's Scissors to troll/trick Reddit.

It doesn't seem particularly difficult.

They also shared an apocalyptic vision of the internet in 2035, where the open internet is entirely ruined by LLM trolls/propaganda, and the only conversations to be had with real people are in real-ID-secured apps. This seems both scarily real and somewhat inevitable.

Have you read any of Peter Watts's fiction? In any of his work set after the mid-21st century, the descendant of the internet is called the Maelstrom. It is completely overrun with self-replicating spambots, advertisements, and 'wild' mutated descendants thereof; actual messages make up <0.001% of the traffic and need to be wrapped in endless layers of authentication and protection against modification.

Yep, big fan of Watts and will +1 this recommendation to any other readers.

Curious whether you've read much of Karl Schroeder's stuff? Lady of Mazes in particular explores, among many other things, an implementation of this tech that might not suck.

The quick version: a society in which everyone owns their GPT-Me, controls its distribution, and has access to all of its outputs. They use them as a social interface. Can't talk to someone? Send your GPT-Me to talk to them. They can't talk to you? Your GPT-Me can talk to GPT-Them, and you both get the results fed back to you. Etc., etc.

I have not.

As a biologist, my mind goes elsewhere: specifically, to how viruses are by far the most common biological entity on Earth, and how much of your genome consists of selfish, replicating reverse-transcribing elements trying to shove copies of their own sequences anywhere they can. Other examples abound. The spliceosome itself, part of the eukaryotic gene-expression machinery, seems to be a defense against invasion of the eukaryotic genome by reverse-transcribing introns. Natural selection could no longer purge those introns once eukaryotic cell size rose and population size fell enough to weaken selection against mildly deleterious mutations, but in the process the spliceosome entrenched those selfish elements into something that could no longer leave.

Tangential, but my intuitive sense is that the ideal long-term AI safety algorithm would need to help those who wish to retain biology give their selfish genetic elements a stern molecular talking-to, by constructing a complex but lightweight genetic immune system that reduces their tendency to cause new deleterious mutations. That would be one among a great many other extremely complex hotfixes needed to shore up the error defenses of a DNA-based biological organism and make it truly information-immortal yet runtime-adaptable.

For the record, I think most people here already agree with you and are quite annoyed with OpenAI for diluting the name of alignment. I did not vote on your post; given the tone, the downvotes so far seem to have brought it to a reasonable score.

"Tell me, oh wise rationalists" and "Do you see the alignment failure now?" are Stavros pissing in his own soup. I would have been inclined to upvote if not for those.

There is a difference between AI-augmented human posts (levels the playing field for those who are not great at writing) and AI bots running loose online without a human controlling them (seems dangerous, but not sure how imminent). I assume you are against both?

So, two ideas:

- The language we use shapes how and what we think

- We believe what we write. From this book describing the indoctrination of American POWs in Korea:

> Our best evidence of what people truly feel and believe comes less from their words than from their deeds. Observers trying to decide what a man is like look closely at his actions... the man himself uses this same evidence to decide what he is like. His behavior tells him about himself; it is a primary source of information about his beliefs and values and attitudes.
>
> Writing was one sort of confirming action... It was never enough for the prisoners to listen quietly or even to agree verbally with the Chinese line; they were always pushed to write it down as well... if a prisoner was not willing to write a desired response freely, he was prevailed upon to copy it.

To bring this back to your idea of AI-augmented human posts:

To rely on AI to suggest/choose words for you is to have your thoughts and beliefs shaped by the output of that AI.

Yes, that seems like a bad idea to me.

There is a difference between reading and writing. Semi-voluntarily writing something creates ownership of the content; copying is not nearly as good. Using an AI to express your thoughts when you struggle to express them might not be the worst idea. Having your own words overwritten by computer-generated ones is probably not fantastic, I agree.

Actually, the more times I reread this, the more I like it. Even though I'm frustrated by the tone, I've upvoted.

ChatGPT can be 'tricked' into saying naughty things.

This is a red herring.

Alignment could be paraphrased thus: ensuring AIs are neither used nor act in ways that harm us.

Tell me, oh wise rationalists, what causes greater harm - tricking a chatbot into saying something naughty, or a chatbot tricking a human into thinking they're interacting with (talking to, reading/viewing content authored by) another human being?

I can no longer assume the posts I read are written by humans, nor do I have any reasonable means of verifying their authenticity. (Someone should really work on this. We need a Twitter tick, but for humans.)

I can no longer assume that the posts I write could not have been written by GPT-X, or that I can contribute anything to any conversation that is noticeably different from the product of a chatbot.[1]

I can no longer post publicly without the chilling knowledge that my words are training the next iteration of GPT-Stavros, and that every word is a piece of my soul being captured.

Passing the Turing Test is not a cause for celebration, it's a cause for despair. We are used to thinking of automation making humans redundant in terms of work, now we will have to adapt to a future in which automation is making humans redundant in human interaction.

Do you see the alignment failure now?

Tell HN: ChatGPT can reply like a specific Reddit or HN user, including you | Hacker News