spotted in an unrelated discord, looks like I'm not the only person who noticed the similarity 😅

When using LLM-based coding assistants, I always had a strange feeling about the interaction. I think I now have a pointer around that feeling - disappointment from having expected more (again and again), followed by low level of disgust, and an aftertaste of disrespect growing into hatred

Yeah, I kinda get it. Not to the point of hatred, but I do find interacting with LLMs... mentally taxing. They pass as just enough of a "well-meaning eagerly helpful person" to make me not want to be mean to them (as it'd make me feel bad), but they also continually induce weary disappointment in me.

I wish we figured out some other interface over the base models that is not these "AI assistant" personas. I don't know what that'd be, but surely something better is possible. Something framed as an impersonal call to a database equipped with a powerful program/knowledge synthesis tool, maybe.

Have you seen how people un-minified Claude Code - the sheer amount of workarounds, cringe IMPORTANT inside the system prompt and the constant reminders?

This prompted me to write up about my recent experience with it, see here.

I suspect that the near future of programming is that you will be expected to produce a lot of code fast because that's the entire point of having an AI, you just tell it what to do and it generates the code, it would be stupid to pay a human to do it slowly instead... but you will also be blamed for everything the AI does wrong, and expected to fix it. And you will be expected to fix it fast, by telling the AI to fix it, or maybe by throwing all the existing code away and generating it again from scratch, because it would be stupid to pay a human to do it slowly instead... and you will also be blamed if the AI fails to fix it, or if the new version has different new flaws.

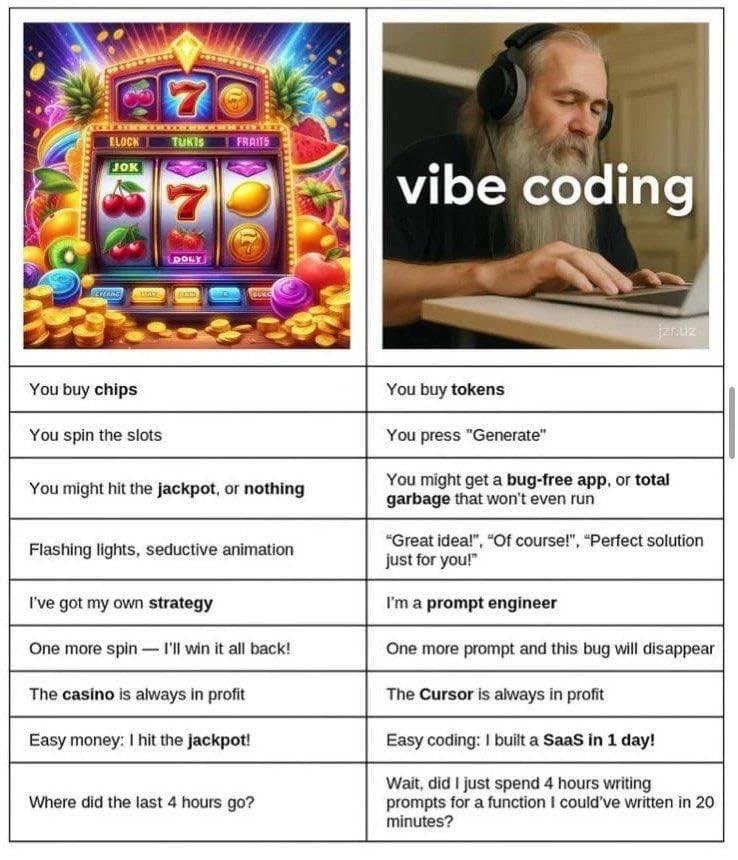

It will be like being employed to gamble, and if you don't make your quota of jackpots per month, you get fired and replaced by a better gambler.

nah 🙈, the stupid companies will self-select out of the job market for not-burned-out good programmers, and the good companies will do something like "product engineering" where product managers and designers will make their own PoCs to validate with stakeholders before/without endless specifications handed over to engineers in the first iteration, and then the programming roles will focus on building production quality solutions and maybe a QA renaissance will happen to write useful regression tests when domain experts can use coding assistants to automate boring stuff and focus on domain expertise/making decisions instead of programmers trying to guess the intent behind a written specification twice (for the code and for the test.. or once when it's the same person/LLM writing both, which is a recipe for useless tests IMHO)

(..not making a prediction here, more like a wish TBH)

On the one hand, I understand this approach (firing programmers that don't use AI), but on the other hand, this is like firing someone for using emacs rather than PyCharm (or VSCode or whatever is the new hot). It's sad that it looks like people are going back to being measured by lines of code.

I'd like to be able to just provide high level overviews of what I want done, then have an army of agents do it, but it doesn't seem to be there yet (could be skill issue on my part, though).

No, no - you misunderstand agile! See, in our company, we have AIgile! It's a slightly modified version of agile which we find to be even better! The scrum master is GPT-4o, which is known to be wonderful at encouraging and building team spirit.

I have yet to work in a company that actually implemented agile, rather than saying they use agile, but then inventing their own complicated system involving lots of documentation and meetings.

I actually have Scrum Master training... so I can confidently say that most companies that claim to do Scrum are actually closer to doing its opposite.

- retrospective -- either not doing it at all because it is a waste of time (yeah, remove the only part of the process where the developers can provide feedback about the process, and then be surprised that the process sucks), or they are doing some perverted reverse-retrospective where instead of developers giving feedback to the company, it is the company giving feedback to developers, usually that they should work faster and make fewer mistakes

- there is no "Jira" in the Scrum Guide

- there is no "Confluence" in the Scrum Guide

- and the retrospective is exactly the place where the developers should say "Jira and Confluence suck, we want some tools that actually work instead", but I already mentioned the retrospective

- there are no managers in Scrum; and no, it is not about renaming the manager's role, but about giving the team autonomy

- the only deadlines in Scrum are the ones negotiated at sprint planning, and not in the sense of "management says that the deadline is after two sprints, but you have the freedom to choose which part you implement during the first sprint, and which part during the second one"

I only experienced something like actual Scrum once, when a small department in a larger company decided to actually try Scrum by the textbook. It was fun while it lasted. Ironically, we had to stop because the entire company decided to switch to "Scrum", and we were told to do it "properly". (That meant no more wasting time on retrospectives; sprints need to be two weeks long because more sprints = more productivity; etc.)

I find it very amusing that everyone proudly boasts of being "Agile" when they have all the nimble swiftness in decision and action of a 1975 Soviet Russia agricultural committee.

There are a lot of places where I find a lot of use from LLMs, but it's usually grunt work that would take me a couple of hours but isn't hard, like tests, or simple shuffling things around. It's also quite good at "here's a bunch of linting errors - fix them", though of course you have to be constantly vigilant.

With real code, I'll sometimes use it as a rubber duck or ask it to come up with some preliminary version of what I want, then refactor it mercilessly. With preexisting code I usually need to tell it what to do, or at least point at which files it should look at (this helps quite a lot). With anything complicated, it's usually faster for me to just do it myself. Could just be a skill issue on my part, of course.

They are getting better. They're still not good, but they're often better than a random junior. My main issue is that they don't grow. A rubbish intern will get a lot better, as long as they are capable of learning. I'm fine starting with someone on a very low level, as long as I won't have to continuously point out the same mistakes. With an LLM this is frustrating because I have to keep pointing out things I said a couple of turns previously, or which are part of those cringe IMPORTANT prompt notes.

I still prefer to just ask an LLM to write me my CSS :P

One-off PoC website/app type things are something where LLMs shine. Or dashboards like "here's a massive csv file with who knows what - make me a nice dashboard thingy I can use to play with the data" or "here's an API - make me a quick n dirty website that allows me to play about with it".

Having used Cursor and VSCode with GitHub Copilot, I feel like a huge part of the problem here isn't even the LLMs per se: it's the UX.

The default here is "you get a suggestion whether you asked for it or not, and if you press Tab it gets added". Who even thought that was a good idea? Sometimes I press Tab because I need to indent four spaces, not because I want to write whatever random code the LLM thinks is appropriate. And this seems incredibly wasteful, continuously sending queries to the API, often for stuff I simply don't need, with who knows how big a context length that isn't necessary! A huge part of the benefit I get from LLM assistants is simple cases of "here is a function called a very obvious thing that does exactly that obvious thing" (which is really nothing more than "grab an existing code snippet and adapt the names to my conventions"), or "follow this repetitive pattern to do the same thing five times" (again a very basic, very context-dependent automatic task). Other high value stuff includes writing docstrings and routine unit tests for simple stuff. Meanwhile when I need to code something that takes a decent amount of thought I am grateful for the best thing that these UXs luckily do include, the "snooze" function to just shut the damn thing up for a while.

As I see it, the correct UX for an LLM code assistant would be:

- only operate on demand

- have a few basic tasks (like "write docstring") possible to invoke on a given line where your cursor is, grabbing a smart context, using their own specific prompt

- ability to define your own custom tasks flexibly, or download them as plugins

But use something like the command palette Ctrl+Shift+P for it. There's probably even smarter and more efficient stuff that can be done via clever use of RAG, embedding models, etc. The current approach is among the laziest possible and definitely suffers from problems, yeah.
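To make the on-demand idea above a bit more concrete, here is a rough Python sketch of a task registry. Everything in it is hypothetical and made up for illustration: `Task`, `TaskRegistry`, `build_default_registry` and the `complete` callback (a stand-in for whatever model backend you'd plug in) are not any real editor or Copilot API. The "smart context" is also simplified to a fixed number of lines around the cursor, which is just an assumption to keep the example short.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical stand-in for the model backend: prompt in, generated text out.
Completer = Callable[[str], str]


@dataclass(frozen=True)
class Task:
    name: str             # label you would pick from the command palette
    prompt_template: str  # task-specific prompt with a {context} placeholder
    context_radius: int   # lines to send on each side of the cursor (assumed heuristic)


class TaskRegistry:
    """Holds named tasks; nothing is sent to the model until run() is called."""

    def __init__(self, complete: Completer) -> None:
        self._complete = complete
        self._tasks: Dict[str, Task] = {}

    def register(self, task: Task) -> None:
        # Custom tasks (or plugin-provided ones) are added the same way as built-ins.
        self._tasks[task.name] = task

    def names(self) -> List[str]:
        return sorted(self._tasks)

    def run(self, name: str, lines: List[str], cursor: int) -> str:
        task = self._tasks[name]
        # Grab only a small window around the cursor instead of the whole file.
        start = max(0, cursor - task.context_radius)
        end = min(len(lines), cursor + task.context_radius + 1)
        context = "\n".join(lines[start:end])
        return self._complete(task.prompt_template.format(context=context))


def build_default_registry(complete: Completer) -> TaskRegistry:
    registry = TaskRegistry(complete)
    registry.register(Task("write docstring", "Write a docstring for:\n{context}", 3))
    return registry
```

The point of the shape is that the only path to the model goes through `run()`, so no query happens unless you explicitly pick a task, and each task carries its own prompt and its own idea of how much context it needs.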

Hey, I think I share a lot of these emotions. Also left my corp job some time ago. But the change that happened to me was a bit different: I think I just don't like corporate programming anymore. (Or corporate design, writing and so on.) When I try to make stuff that isn't meant for corporate in any shape or form, I find myself doing that happily. Without using AI of course.

Nice, I hope it will last longer for you than my 2.5 years out of corporate environment ... and now observing the worse parts of AI hype in startups too due to investor pressures (as if "everyone" was adding "stupid chatbot wrappers" to whatever products they try to make .. I hope I'm exaggerating and I will find some company that's not doing the "stupid" part, but I think I lost hope on not wanting to see AI all around me .. and not literally every idea with LLM in the middle is entirely useless).

When using LLM-based coding assistants, I always had a strange feeling about the interaction. I think I now have a pointer around that feeling -[1] disappointment from having expected more (again and again), followed by low level of disgust, and an aftertaste of disrespect growing into hatred.[2]

But why hatred - I didn't expect that color of emotion to be in there - where does it come from? A hypothesis comes to mind 🌶️ - I hate gambling,[3] LLMs are stochastic, and whenever I got a chance to observe people for whom LLMs worked well it seemed like addiction.

From another angle, when doing code review, it motivates me when it's a learning opportunity for both parties. Lately, it feels like the other "person" is an incompetent lying psychopath who can copy&paste around and so far has been lucky to only work on toy problems so no one noticed they don't understand programming .. at all. Not a single bit. They are even actively anti-curious. Just roleplaying - fake it till you make it, but they didn't make it yet.[4]

I recently lost my love of programming and I haven't found my way back, yet. I blame LLMs for that. And post-capitalist incentives towards SF style disruptive network-effect monopolization, enshittification of platforms, gamification of formerly-nuanced human interactions. And companies and doomers that over-hype current "AI" - I believe most of the future risks and some of the future potential, and that the current state of the art is amazing, a technological wonder, magic come real ... so how the heck did it happen that the hype has risen so much higher than reality for so long when reality is so good?!?

I want progressive type systems and documentation on hover, I don't want to swap the `if` condition while refactoring and then "fix" the test. I don't want to skip 1 line when migrating from JSON to some random format. I don't want `*+` instead of `+` in my regexp. A random file deleted. Messing up with .gitignore. A million other inconveniences. Have you seen how people un-minified Claude Code - the sheer amount of workarounds, cringe IMPORTANT inside the system prompt and the constant reminders? Have you seen people seeing it and concluding that it's proof that "it works"?!?

I believe that when companies ask software developers to improve their "productivity" by using LLM coding assistants, it is the next stage of enshittification - hook users first, then squeeze users to satisfy customers, then milk customers to make (a promise of) profits, now make the employees addicted to the gambling tools in order to collect data for automating the employees later. The programmers and the managers alike.

Gamblification. A machine for losing the coding game after which only AGI will be able to save us from all the code slop. Opinions?

If you prefer em dashes, please use your imagination—I don't like them.

Similar to an interview when someone asks about a random tangent that is obviously related to the previous topic, the candidate explains it totally fine but in a way disconnected from the previous topic.. so you then ask for an example and it goes completely off the rails (as if they had no experience with practicalities around that topic, just reciting buzzword definitions).

Or when talking to a high-ranking stakeholder and all goes well until they agree with you about a wrong detail, so you ask them for an opinion about a core point and you realize they don't seem to have a clue, they were just vibe problem solving the whole time. Especially when they don't even see it in your eyes they just made a mistake (I mean good managers don't have to be domain experts, but they always have a desire to learn more and social skills to recognize when you think that you have the info they need.. bad managers just make a decision when asked to make a decision).

Rolling physical dice is fine for me and I enjoy The Settlers of Catan or Dostihy a Sázky (Czech version of Monopoly that is far superior). I'm not exactly in love with slot machines[5], loot boxes, mobile games, or D&D clones. But (post-)modern sports betting and high-frequency trading are outright evil.

I finally understand the stochastic parrot metaphor - there is nothing wrong about Markov processes, parrots are highly intelligent animals, pattern matching is not "just pattern matching" but a real quality, and a calculator is superhuman in long division ... but the [something something ¯\_(ツ)_/¯ understanding/thinking/reasoning] is supposed to be more than?!?

BTW they are called "winning machines" in my local language(s), and I bet that they would be less of a social problem if they were called "losing machines" (to match the median outcome).