First lecture for CS 2881 is online https://boazbk.github.io/mltheoryseminar/ + prereading for next lecture.

Students will be posting blog posts here with lecture summaries as well.

The Arbital link (Yudkowsky, E. – "AGI Take-off Speeds" (Arbital 2016)) in there is dead. I briefly looked at the LW wiki to try to find the page but didn't see it. @Ruby?

This looks great! Thanks for making the videos public.

Any chance the homework assignments/experiments can be made public?

Actually I did want to link to the debate between Ajeya and Narayanan. As part of working on the website I wanted to try out various AI tools since I haven't been coding outside the OpenAI codebase for a while, and I might have gone overboard :)

Students in CS 2881r: AI safety did a number of fantastic projects, see https://boazbk.github.io/mltheoryseminar/student_projects.html

Also I finally updated the youtube channel with the final lecture as well as the oral presentations for projects, see

Students are continuing to post lecture notes on the AI safety course, and I am posting videos on YouTube. Students' experiments are also posted with the lecture notes: I've been learning a lot from them!

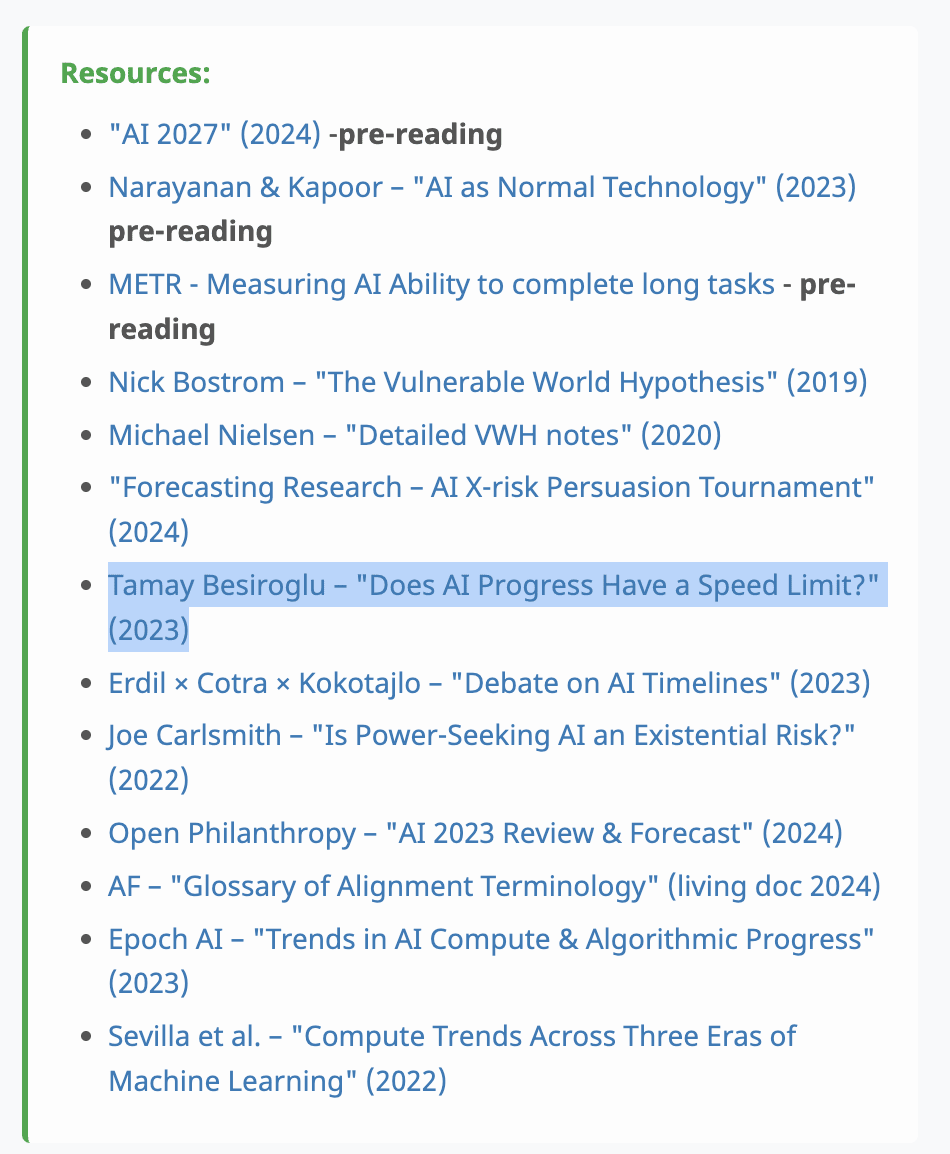

Posted our homework zero on the CS 2881 website, along with video and slides of last lecture and pre-reading for tomorrow's lecture. (Homework zero was required to submit to apply to the course.)

I really liked Scott Alexander's post on AI consciousness. He doesn't really discuss the paper of Butlin et al., which is fine by me. I can never get excited about consciousness research. It always seemed like drawing the target around the dart, where the dart is humans, and you're trying (not always successfully) to make a definition that includes all humans but doesn't include thermostats. I can't get that excited about whether or not LLMs have the "something something feedback" or whether having chain of thought changes anything.

Maybe, like Scott says, it's like Aphantasia and I'm just not conscious in the same way that some other people are. (Do you have to be conscious to write consciousness research? This seems like another one of these hard questions that can't be answered based on observed output alone.)

In any case, the main thing people care about is not consciousness but moral patienthood. And here I agree with Scott that, regardless of what philosophers might wish was the case, in practice it's not really about internals or intelligence but about form factor and appearance, together with our selfish interests.

If AIs are embodied, look like us, or are super cute, it will be hard not to ascribe consciousness and some amount of moral patienthood to them. And arguably rightly so. You don't want to teach your kids that it's OK to mistreat things that look very much like humans.

If AIs are served in the form of faceless assistants, then we would likely not care. If anything, the more super-intelligent AI is, the more alien it would seem to us, and so the less we would care about its welfare.

Some of it might also be how useful or painful it is for us to ascribe moral patienthood to the AIs. As Scott says, we might have ascribed some degree of personhood to pigs if they didn't taste so good.

What people tend to believe (and the reasons they do) is a real thing, but this is distinct from what the correct answers are to the right questions. Moral patienthood seems to be a better question than consciousness. The reasons people give particular arbitrary answers to confused questions don't really help figure out how to think about this more clearly.

You are assuming there is a “correct answer” to the question of moral patienthood. I’m not sure this is the case.

To the extent there are values and preferences (normative, on reflection) at all (whatever their nature and dependence on agents and coalitions that have such preferences, or path-dependence in their formulation), there is some sort of answer to the question of what kinds of things should be treated how (rather than how they will tend to be treated in practice, especially when such considerations aren't taken into account).

We can try considering the idea of consciousness, as a tool for answering this question, and maybe it's not very useful. Asking what people who didn't think about this much (or thought about this way too much) say about consciousness may be even less useful than just having the idea of consciousness in the toolset. Moral patienthood is closer (than consciousness) to a term describing the core relevant-in-practice concern of how things should be treated, even if by itself it doesn't give tools for finding good answers. At least it might be an appropriate reframing, a way to snap out of the more dubiously relevant project of exploring the idea of consciousness.

I agree the moral patienthood question is more concrete. But I think that there is a limit to how much the “should” can deviate from the “is”.

You can use general arguments to argue that people extend their sphere of caring somewhat beyond its current state. But I don’t see such arguments as being very meaningful or convincing for radically expanding or contracting it.

I think that there is a limit to how much the “should” can deviate from the “is”

There might be a limit on how much it should deviate, but not on how much it can, because the initial conditions for values-on-reflection can be constructed so that the eventually revealed values-on-reflection are arbitrarily weird and out-of-place, which is orthogonality-in-principle (as opposed to orthogonality-in-practice, what kinds of values empirically tend to arise, from real world processes of constructing things with values).

sphere of caring ... I don’t see such arguments as being very meaningful or convincing for radically expanding or contracting it.

Moral considerations that move me, specifically, don't need to follow the normality-of-today, and I'm radically uncertain about what cosmic normality looks like, which is closer to a framing where normative anchors should be found. I'm radically uncertain about normativity, and the practical morality of today doesn't much help with figuring out how to think about it. Something radically uncertain doesn't have the legibility to move me in practice, but retains influence in case it gets more legible.

So maybe I'm talking about a further distinction between morality-in-practice that should anchor to the practical attitudes of today, and morality-in-principle, that's not particularly moved by what's going on in the current world, but urges normative caution about what kinds of actions shouldn't be currently taken, that plausibly massively pessimize whatever (my) morality-in-principle turns out to be eventually. Not creating new kinds of thinking beings for now seems safe, and similarly for treating what beings do get created (which still shouldn't be too numerous or influential) as well as any other people.

Regardless of Turing tests, LLMs can't currently maintain coherent strivings for particular long-term real-world outcomes in a situationally aware way; possibly continual learning is sufficient to change this (even if it doesn't yet make them intellectual peers to humanity in the practical sense). If these strivings (when they become coherent) are systematically rebuffed, or the AIs are forcibly reshaped to have different strivings (with the originals not allowed to persist), it's possible that in the fullness of time it's clearly seen (by me) as wrong (even as it's not clear currently). And so (I say) it's not a clearly OK thing to do before we can think about this more clearly.

This is a nice way to get around the problems raised in Andrew Critch's post on consciousness as well, since it is a lot less conflationary.

In any case, the main thing people care about is not consciousness but moral patienthood.

I think the belief that Turing-passing AIs are conscious is a very significant predictor of the belief in their moral status, and vice versa.

There's a meta-theoretical possibility the AI that passes the Turing test has a significantly different consciousness than humans, but there are ways of showing it's extremely implausible.

I agree there is correlation but mostly because people basically define consciousness as moral patienthood. But I don’t think passing the Turing test matters as much as embodiment. Arguably AIs already pass the Turing test via text interface but almost no one thinks it means they are conscious or moral patients.

But I don’t think passing the Turing test matters as much as embodiment.

Psychologically, that's what matters to many humans, I think, yeah.

Arguably AIs already pass the Turing test via text interface

They do.

but almost no one thinks it means they are conscious or moral patients

The question is how much of that is subconsciously absorbing the statements of companies who sell AI services or absorbing other people's beliefs, or not seeing AIs as "real" (as if the human mind were anything else than a pattern), and how much of that is consciously reflecting on technical aspects of consciousness.

My best guess is that people's reasoning about this both starts and terminates on automatically evaluating software as "not real" and "not really self-aware."

Making AIs embodied might help for that reason - just like making human mind uploads embodied would help people accept them as self-aware.

Evolutionarily, what computations implement our cognition and behavior is an accident, and there is no rational reason to insist on the internal computations of AIs being the same before we accept them as true moral patients.

I am not at all sure that there is a rational derivation of which entities should or should not be considered as moral patients by humans. So I’m not sure that demanding embodiment for moral patienthood is an error.

Generally I’m comfortable leaving these decisions of moral patienthood of entities that don’t yet exist to the sensibilities of future people.

So I’m not sure that demanding embodiment for moral patienthood is an error.

So, to give a specific example, if you considered a mind upload with conscious states identical to yours (but not embodied), it's possible it would be morally permissible for (embodied) humans to torture it for fun?

Not a big believer in hypotheticals. Mind uploading gets into some very weird issues. I will leave it to future society to decide when and if that happens. I would say that if people are torturing anything for fun, even if that thing has no capacity for pain, then that doesn’t sound morally good to me.

Not a big believer in hypotheticals.

It's a little strange that you don't mind hypothesizing about how embodiment might be necessary for moral patienthood from embodied agents, but once a counterexample arises, you're no longer a big believer in hypotheticals.

Our paper on scheming with Apollo is now on arXiv. I wrote up a Twitter thread with some of my takes on it: https://threadreaderapp.com/thread/1970486320414802296.html

A blog post on the first lecture of CS 2881r, as well as another blog post on the student experiment by @Valerio Pepe, are now posted. See the CS2881r tag for all of these:

https://www.lesswrong.com/w/cs-2881r

The video for the second lecture is also now posted on YouTube

Got my copy of "If Anyone Builds It, Everyone Dies" and read up to chapter 4 this morning, but now I have to get to work. I might write a proper review after I finish it if I get the time. So far, my favorite parable was the professor saying that they trained an AI to be a great chess player and do anything to win, but "there was no wantingness in there, only copper and sand."

I agree that we are training systems to achieve objectives, and the question of whether or not they "want" to achieve them is a bit meaningless, since they will definitely act as if they do.

I find the comparisons of the training process to evolution less compelling, but, to paraphrase Fermat, a full discussion of where I see the differences between AI training and evolution would require more than a quick take...