Reviews 2020


In this post, the author proposes a semiformal definition of the concept of "optimization". This is potentially valuable since "optimization" is a word often used in discussions about AI risk, and much confusion can follow from sloppy use of the term or from different people understanding it differently. While the definition given here is a useful perspective, I have some reservations about the claims made about its relevance and applications.

The key paragraph, which summarizes the definition itself, is the following:

...An optimizing system is a system that

The referenced study on group selection on insects is "Group selection among laboratory populations of Tribolium," from 1976. Studies on Slack claims that "They hoped the insects would evolve to naturally limit their family size in order to keep their subpopulation alive. Instead, the insects became cannibals: they ate other insects’ children so they could have more of their own without the total population going up."

This makes it sound like cannibalism was the only population-limiting behavior the beetles evolved. According to the original study, ho...

The work linked in this post was IMO the most important work done on understanding neural networks at the time it came out, and it has also significantly changed the way I think about optimization more generally.

That said, there's a lot of "noise" in the linked papers; it takes some digging to see the key ideas and the data backing them up, and there's a lot of space spent on things which IMO just aren't that interesting at all. So, I'll summarize the things which I consider central.

When optimizing an overparameterized system, there are many many different...

In this post, the author presents a case for replacing expected utility theory with some other structure which has no explicit utility function, but only quantities that correspond to conditional expectations of utility.

To provide motivation, the author starts from what he calls the "reductive utility view", which is the thesis he sets out to overthrow. He then identifies two problems with the view.

The first problem is about the ontology in which preferences are defined. In the reductive utility view, the domain of the utility function is the set of possib...

This post is the best overview of the field so far that I know of. I appreciate how it frames things in terms of outer/inner alignment and training/performance competitiveness--it's very useful to have a framework with which to evaluate proposals and this is a pretty good framework I think.

Since it was written, this post has been my go-to reference for getting other people up to speed on what the current AI alignment strategies look like (even though this post isn't exhaustive). I've also referred back to it myself several times. I learned a lot from...

A short note to start the review: the author isn’t happy with how it is communicated. I agree it could be clearer, and this is the reason I’m scoring this 4 instead of 9. The actual content seems very useful to me.

AllAmericanBreakfast has already reviewed this from a theoretical point of view but I wanted to look at it from a practical standpoint.

***

To test whether the conclusions of this post were true in practice I decided to take 5 examples from the Wikipedia page on the Prisoner’s dilemma and see if they were better modeled by Stag Hunt or Schelling...

This post is making a valid point (the time to intervene to prevent an outcome that would otherwise occur, is going to be before the outcome actually occurs), but I'm annoyed with the mind projection fallacy by which this post seems to treat "point of no return" as a feature of the territory, rather than your planning algorithm's map.

(And, incidentally, I wish this dumb robot cult still had a culture that cared about appreciating cognitive algorithms as the common interest of many causes, such that people would find it more natural to write a post about "p...

This post is an excellent distillation of a cluster of past work on malignness of Solomonoff Induction, which has become a foundational argument/model for inner agency and malign models more generally.

I've long thought that the malignness argument overlooks some major counterarguments, but I never got around to writing them up. Now that this post is up for the 2020 review, it seems like a good time to walk through them.

In Solomonoff Model, Sufficiently Large Data Rules Out Malignness

There is a major outside-view reason to expect that the Solomonoff-is-malign ar...

I think Luna Lovegood and the Chamber of Secrets would deserve to get into the Less Wrong Review if all we cared about were its merits. However, the Less Wrong Review is used to determine which posts get into a book that is sold on paper for money. I think this story should be disqualified from the Less Wrong Review on the grounds that Harry Potter fanfiction must remain non-commercial, especially in the strict sense of traditional print publishing.

The central point of this article was that conformism was causing society to treat COVID-19 with insufficient alarm. Its goal was to give its readership social sanction and motivation to change that pattern. One of its sub-arguments was that the media was succumbing to conformity. This claim came with an implication that this post was ahead of the curve, and that it was indicative of a pattern of success among rationalists in achieving real benefits, both altruistically (in motivating positive social change) and selfishly (in finding alpha).

I thought it wo...

Tl;dr I encourage people who changed their behavior based on this post or the larger sequence to comment with their stories.

I had already switched to freelance work for reasons overlapping although not synonymous with moral mazes when I learned the concept, and since then the concept has altered how I approach freelance gigs. So I’m in general very on board with the concept.

But as I read this, I thought about my friend Jessica, who’s a manager at a Fortune 500 company. Jessica is principled and has put serious (but not overwhelming) effort into enacting th...

The post claims:

I have investigated this issue in depth and concluded that even a full scale nuclear exchange is unlikely (<1%) to cause human extinction.

This review aims to assess whether having read the post I can conclude the same.

The review is split into 3 parts:

- Epistemic spot check

- Examining the argument

- Outside the argument

Epistemic spot check

Claim: There are 14,000 nuclear warheads in the world.

Assessment: True

Claim: Average warhead yield <1 Mt, probably closer to 100kt

Assessment: Probably true, possibly misleading. Values I found were:

- US

First, some meta-level things I've learned since writing this:

- What people crave most is very practical advice on what to buy. In retrospect this should have been more obvious to me. When I look for help from others on how to solve a problem I do not know much about, the main thing I want is very actionable advice, like "buy this thing", "use this app", or "follow this Twitter account".

- Failing that, what people want is legible, easy-to-use criteria for making decisions on their own. Advice like "Find something with CRI>90, and more CRI is better" i

This will not be a full review—it's more of a drive-by comment which I think is relevant to the review process.

However, the defense establishment has access to classified information and models that we civilians do not have, in addition to all the public material. I’m confident that nuclear war planners have thought deeply about the risks of climate change from nuclear war, even though I don’t know their conclusions or bureaucratic constraints.

I am extremely skeptical of and am not at all confident in this conclusion. Ellsberg's The Doomsday Machine descri...

Frames that describe perception can become tools for controlling perception.

The idea of simulacra has been generative here on LessWrong, used by Elizabeth in her analysis of negative feedback, and by Zvi in his writings on Covid-19. It appears to originate in private conversations between Benjamin Hoffman and Jessica Taylor. The four simulacra levels or stages are a conception of Baudrillard’s, from Simulacra and Simulation. The Wikipedia summary quoted on the original blog post between Hoffman and Taylor has been reworded several times by various authors ...

This is a long and good post with a title and early framing advertising a shorter and better post that does not fully exist, but would be great if it did.

The actual post here is something more like "CFAR and the Quest to Change Core Beliefs While Staying Sane."

The basic problem is that people by default have belief systems that allow them to operate normally in everyday life, and that protect them against weird beliefs and absurd actions, especially ones that would extract a lot of resources in ways that don't clearly pay off. And they similarl...

Simulacra levels were probably the biggest incorporation to the rationalist canon in 2020. This was one of maybe half-a-dozen posts which I think together cemented the idea pretty well. If we do books again, I could easily imagine a whole book on simulacra, and I'd want this post in it.

What this post does for me is that it encourages me to view products and services not as physical facts of our world, as things that happen to exist, but as the outcomes of an active creative process that is still ongoing and open to our participation. It reminds us that everything we might want to do is hard, and that the work of making that task less hard is valuable. Otherwise, we are liable to make the mistake of taking functionality and expertise for granted.

What is not an interface? That's the slipperiest aspect of this post. A programming language i...

The goal of this post is to help us understand the similarities and differences between several different games, and to improve our intuitions about which game is the right default assumption when modeling real-world outcomes.

My main objective with this review is to check the game theoretic claims, identify the points at which this post makes empirical assertions, and see if there are any worrisome oversights or gaps. Most of my fact-checking will just be resorting to Wikipedia.

Let’s start with definitions of two key concepts.

Pareto-optimal: One dimension ...

I don't think this post added anything new to the conversation, both because Elizabeth Van Nostrand's epistemic spot check found essentially the same result previously and because, as I said in the post, it's "the blog equivalent of a null finding."

I still think it's slightly valuable - it's useful to occasionally replicate reviews.

(For me personally, writing this post was quite valuable - it was a good opportunity to examine the evidence for myself, try to appropriately incorporate the different types of evidence into my prior, and form my own opinions for when clients ask me related questions.)

I generally endorse the claims made in this post and the overall analogy. Since this post was written, there are a few more examples I can add to the categories for slow takeoff properties.

Learning from experience

- The UK procrastinated on locking down in response to the Alpha variant due to political considerations (not wanting to "cancel Christmas"), though it was known that timely lockdowns are much more effective.

- Various countries reacted to Omicron with travel bans after they already had community transmission (e.g. Canada and the UK), while it wa

This post states a subproblem of AI alignment which the author calls "the pointers problem". The user is regarded as an expected utility maximizer, operating according to causal decision theory. Importantly, the utility function depends on latent (unobserved) variables in the causal network. The AI operates according to a different, superior, model of the world. The problem is then, how do we translate the utility function from the user's model to the AI's model? This is very similar to the "ontological crisis" problem described by De Blanc, only De Blanc ...

If coordination services command high wages, as John predicts, this suggests that demand is high and supply is limited. Here are some reasons why this might be true:

- Coordination solutions scale linearly (because the problem is a general one) or exponentially (due to networking effects).

- Coordination is difficult, unpleasant, risky work.

- Coordination relies on further resources that are themselves in limited supply or on information that has a short life expectancy, such as involved personal relationships, technical knowhow that depends on a lot of implicit k

An Orthodox Case Against Utility Functions was a shocking piece to me. Abram spends the first half of the post laying out a view he suspects people hold, but he thinks is clearly wrong, which is a perspective that approaches things "from the starting-point of the universe". I felt dread reading it, because it was a view I held at the time, and one I used as a key background perspective when I discussed Bayesian reasoning. The rest of the post lays out an alternative perspective that "starts from the standpoint of the agent". Instead of my beliefs being about t...

I’ll set aside what happens “by default” and focus on the interesting technical question of whether this post is describing a possible straightforward-ish path to aligned superintelligent AGI.

The background idea is “natural abstractions”. This is basically a claim that, when you use an unsupervised world-model-building learning algorithm, its latent space tends to systematically learn some patterns rather than others. Different learning algorithms will converge on similar learned patterns, because those learned patterns are a property of the world, not an ...

Since writing this, I've run across even more examples:

- The transatlantic telegraph was met with celebrations similar to the transcontinental railroad, etc. (somewhat premature as the first cable broke after two weeks). Towards the end of Samuel Morse's life and/or at his death, he was similarly feted as a hero.

- The Wright Brothers were given an enormous parade and celebration in their hometown of Dayton, OH when they returned from their first international demonstrations of the airplane.

I'd like to write these up at some point.

Related: The poetry of progress (another form of celebration, broadly construed)

I think this post labels an important facet of the world, and skillfully paints it with examples without growing overlong. I liked it, and think it would make a good addition to the book.

There's a thing I find sort of fascinating about it from an evaluative perspective, which is that... it really doesn't stand on its own, and can't, as it's grounded in the external world, in webs of deference and trust. Paul Graham makes a claim about taste; do you trust Paul Graham's taste enough to believe it? It's a post about expertise that warns about snake oil salesm...

You can see my other reviews from this and past years, and check that I don't generally say this sort of thing:

This was the best post I've written in years. I think it distilled an idea that's perennially sorely needed in the EA community, and presented it well. I fully endorse it word-for-word today.

The only edit I'd consider making is to have the "Denial" reaction explicitly say "that pit over there doesn't really exist".

(Yeah, I know, not an especially informative review - just that the upvote to my past self is an exceptionally strong one.)

I liked this article. It presents a novel view on mistake theory vs conflict theory, and a novel view on bargaining.

However, I found the definitions and arguments a bit confusing/inadequate.

Your definitions:

"Let's agree to maximize surplus. Once we agree to that, we can talk about allocation."

"Let's agree on an allocation. Once we do that, we can talk about maximizing surplus."

The wording of the options was quite confusing to me, because it's not immediately clear what "doing something first" and "doing some other thing second" really mean.

For example, th...

This post aims to clarify the definitions of a number of concepts in AI alignment introduced by the author and collaborators. The concepts are interesting, and some researchers evidently find them useful. Personally, I find the definitions confusing, but I did benefit a little from thinking about this confusion. In my opinion, the post could greatly benefit from introducing mathematical notation[1] and making the concepts precise at least in some very simplistic toy model.

In the following, I'll try going over some of the definitions and explicating my unde...

It would be slightly whimsical to include this post without any explanation in the 2020 review. Everything else in the review is so serious, we could catch a break from apocalypses to look at an elephant seal for ten seconds.

This post is still endorsed, it still feels like a continually fruitful line of research. A notable aspect of it is that, as time goes on, I keep finding more connections and crisper ways of viewing things which means that for many of the further linked posts about inframeasure theory, I think I could explain them from scratch better than the existing work does. One striking example is that the "Nirvana trick" stated in this intro (to encode nonstandard decision-theory problems), has transitioned from "weird hack that happens to work" to "pops straight out...

I liked this post a lot. In general, I think that the rationalist project should focus a lot more on "doing things" than on writing things. Producing tools like this is a great example of "doing things". Other examples include starting meetups and group houses.

So, I liked this post a) for being an example of "doing things", but also b) for being what I consider to be a good example of "doing things". Consider that quote from Paul Graham about "live in the future and build what's missing". To me, this has gotta be a tool that exists in the future, and I app...

Overall, you can break my and Jim's claims down into a few categories:

* Descriptions of things that had already happened, where no new information has overturned our interpretation (5)

* CDC made a guess with insufficient information, was correct (1- packages)

* CDC made a guess with insufficient information, we'll never know who was right because the terms were ambiguous (1- the state of post-quarantine individuals)

* CDC made a guess with insufficient information and we were right (1- masks)

That overall seems pretty good. It's great that covid didn't turn o...

(I am the author)

I still like & stand by this post. I refer back to it constantly. It does two things:

1. Argue that an AI-induced point of no return could come significantly before, or significantly after, world GDP growth accelerates--and indeed will probably come before!

2. Argue that we shouldn't define timelines and takeoff speeds in terms of economic growth. So, against "is there a 4 year doubling before a 1 year doubling?" and against "When will we have TAI = AI capable of doubling the economy in 4 years if deployed?"

I think both things are pretty impo...

I haven't had time to reread this sequence in depth, but I wanted to at least touch on how I'd evaluate it. It seems to be aiming to be both a good introductory sequence, while being a "complete and compelling case I can for why the development of AGI might pose an existential threat".

The question is who this sequence is for, what its goal is, and how it compares to other writing targeting similar demographics.

Some writing that comes to mind to compare/contrast it with includes:

- Scott Alexander's Superintelligence FAQ. This is the post I've

This is the post that first spelled out how Simulacra levels worked in a way that seemed fully comprehensive, which I understood.

I really like the different archetypes (i.e. Oracle, Trickster, Sage, Lawyer, etc). They showcased how the different levels blend together, while still having distinct properties that made sense to reason about separately. Each archetype felt very natural to me, like I could imagine people operating in that way.

The description of Level 4 here still feels a bit inarticulate/confused. This post is mostly compatible with the 2x2 grid v...

Elephant seal is a picture of an elephant seal. It has a mysterious Mona Lisa smile that I can't pin down, that shows glee, intent, focus, forward-looking-ness, and satisfaction. It's fat and funny-looking. It looks very happy lying on the sand. I give this post a +4.

(This review is taken from my post Ben Pace's Controversial Picks for the 2020 Review.)

Introduction to Cartesian Frames is a piece that also gave me a new philosophical perspective on my life.

I don't know how to simply describe it. I don't know what even to say here.

One thing I can say is that the post formalized the idea of having "more agency" or "less agency", in terms of "what facts about the world can I force to be true?". The more I approach the world by stating things that are going to happen, that I can't change, the more I'm boxing-in my agency over the world. The more I treat constraints as things I could fight to chang...

This post is based on the book Moral Mazes, which is a 1988 book describing "the way bureaucracy shapes moral consciousness" in US corporate managers. The central point is that it's possible to imagine relationship and organization structures in which unnecessarily destructive behavior, to self or others, is used as a costly signal of loyalty or status.

Zvi titles the post after what he says these behaviors are trying to avoid, motive ambiguity. He doesn't label the dynamic itself, so I'll refer to it here as "disambiguating destruction" (DD). Before procee...

Why This Post Is Interesting

This post takes a previously-very-conceptually-difficult alignment problem, and shows that we can model this problem in a straightforward and fairly general way, just using good ol' Bayesian utility maximizers. The formalization makes the Pointers Problem mathematically legible: it's clear what the problem is, it's clear why the problem is important and hard for alignment, and that clarity is not just conceptual but mathematically precise.

Unfortunately, mathematical legibility is not the same as accessibility; the post does have...

Ajeya's timelines report is the best thing that's ever been written about AI timelines imo. Whenever people ask me for my views on timelines, I go through the following mini-flowchart:

1. Have you read Ajeya's report?

--If yes, launch into a conversation about the distribution over 2020's training compute and explain why I think the distribution should be substantially to the left, why I worry it might shift leftward faster than she projects, and why I think we should use it to forecast AI-PONR instead of TAI.

--If no, launch into a conversation about Ajey...

This post is a huge contribution, giving a simpler and shorter explanation of a critical topic with a far clearer context, and it has been useful to point people to as an alternative to the main sequence. I wouldn't promote it as more important than the actual series, but I would suggest it as a strong alternative to including the full sequence in the 2020 Review. (Especially because I suspect that those who are very interested are likely to have read the full sequence, and most others will not even if it is included.)

This is one of those posts, like "pain is not the unit of effort," that combines a memorable and informative and very useful and important slogan with a bunch of argumentation and examples to back up that slogan. I think this type of post is great for the LW review.

When I first read this post, I thought it was boring and unimportant: trivially, there will be some circumstances where knowledge is the bottleneck, because for pretty much all X there will be some circumstances where X is the bottleneck.

However, since then I've ended up saying the slogan "when ...

I remember this post very fondly. I often thought back to it and it inspired some thoughts of my own about rationality (which I had trouble writing down and are waiting in a draft to be written fully some day). I haven't used any of the phrases introduced here (Underperformance Swamp, Sinkholes of Sneer, Valley of Disintegration...), and I'm not sure whether it was the intention.

The post starts with the claim that rationalists "basically got everything about COVID-19 right and did so months ahead of the majority of government officials, journalists, and su...

The killer advice here was masks, which was genuinely controversial in the larger world at the time. When we wrote a summary of the best advice two weeks later, masks were listed under "well duh but also there's a shortage".

Of the advice that we felt was valuable enough to include in the best-of summary, but hadn't gone to fixation yet, there were 4.5 tips. Here's my review of those:

Cover your high-touch surfaces with copper tape

I think the science behind this was solid, but turned out to be mostly irrelevant for covid-19 because it was so domi...

I wrote this relatively early in my journey of self-studying neuroscience. Rereading this now, I guess I'm only slightly embarrassed to have my name associated with it, which isn’t as bad as I expected going in. Some shifts I’ve made since writing it (some of which are already flagged in the text):

- New terminology part 1: Instead of “blank slate” I now say “learning-from-scratch”, as defined and discussed here.

- New terminology part 2: “neocortex vs subcortex” → “learning subsystem vs steering subsystem”, with the former including the whole telencephalon and

Self-review: Looking at the essay a year and a half later, I am still reasonably happy about it.

In the meantime I've seen Swiss people recommending it as an introductory text for people asking about the Swiss political system, so I am, of course, honored, but it also gives me some confidence in not being totally off.

If I had to write the essay again, I would probably give less prominence to direct democracy and more to the concordance and decentralization, which are less eye-catchy but in a way more interesting/important.

Also, I would probably pay some attention ...

(I am the author)

I still like & endorse this post. When I wrote it, I hadn't read more than the wiki articles on the subject. But then afterwards I went and read 3 books (written by historians) about it, and I think the original post held up very well to all this new info. In particular, the main critique the post got -- that disease was more important than I made it sound, in a way that undermined my conclusion -- seems to have been pretty wrong. (See e.g. this comment thread, these follow up posts)

So, why does it matter? What contribution did this po...

We all saw the GPT performance scaling graphs in the papers, and we all stared at them and imagined extending the trend for another five OOMs or so... but then Lanrian went and actually did it! Answered the question we had all been asking! And rigorously dealt with some technical complications along the way.

I've since referred to this post a bunch of times. It's my go-to reference when discussing performance scaling trends.

This is one of those posts, like "when money is abundant, knowledge is the real wealth," that combines a memorable and informative and very useful and important slogan with a bunch of argumentation and examples to back up that slogan. I think this type of post is great for the LW review.

I haven't found this advice super applicable to my own life (because I already generally didn't do things that were painful...) but it has found application in my thinking and conversation with friends. I think it gets at an important phenomenon/problem for many people and provides a useful antidote.

I think practical posts with exercises are underprovided on LessWrong, and that this sequence in particular inculcated a useful habit. Babble wasn't a totally new idea, but I see people use it more now than they did before this sequence.

Worth including both for "Reveal Culture" as a concept, and for the more general thoughts on "what is required for a culture."

People I know still casually refer to Tell Culture, and I still wish they would instead casually refer to Reveal Culture, which seems like a frame much less likely to encourage people to shoot themselves in the foot.

I still end up using the phrase "Tell Culture" when it comes up in meta-conversation, because I don't expect most people to have heard of Reveal Culture and I'd have to stop and explain the difference. I'm annoyed by that, and hope for this post to become more common knowledge.

This post feels like a fantasy description of a better society, one that I would internally label "wish-fulfilment". And yet it is history! So it makes me more hopeful about the world. And thus I find it beautiful.

On the whole I agree with Raemon’s review, particularly the first paragraph.

A further thing I would want to add (which would be relatively easy to fix) is that the description and math of the Kelly criterion are misleading / wrong.

The post states that you should:

bet a percentage of your bankroll equivalent to your expected edge

However the correct rule is:

bet such that you are trying to win a percentage of your bankroll equal to your percent edge.

(emphasis added)
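A minimal sketch of how the two rules quoted above compare numerically. It assumes a simple binary bet and takes "edge" to mean expected profit per unit staked; that reading, and all function names here, are illustrative assumptions rather than anything taken from the post.

```python
def edge(p: float, b: float) -> float:
    """Expected profit per unit staked (assumed meaning of "edge").

    p: probability of winning; b: net odds (win b per 1 staked, else lose 1).
    """
    return p * b - (1.0 - p)


def stake_as_post_states(p: float, b: float) -> float:
    """Rule as quoted from the post: stake a bankroll fraction equal to the edge."""
    return edge(p, b)


def stake_to_win_edge(p: float, b: float) -> float:
    """Corrected rule: stake so that the amount you stand to WIN equals the edge.

    Winning pays b times the stake, so stake * b = edge, i.e. stake = edge / b.
    This matches the standard Kelly fraction (b*p - q) / b with q = 1 - p.
    """
    return edge(p, b) / b


if __name__ == "__main__":
    # Even-money bet with a 60% chance of winning, then a 4:1 long shot at 30%.
    for p, b in [(0.6, 1.0), (0.3, 4.0)]:
        print(f"p={p}, b={b}: "
              f"post rule {stake_as_post_states(p, b):.3f}, "
              f"corrected rule {stake_to_win_edge(p, b):.3f}")
```

Under these assumptions the only difference between the two rules is the division by the odds `b`.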

The 2 definitions give the same results for 1:1 bets but will give strongly diverging r...

I like what this post is trying to do more than I like this post. (I still gave it a +4.)

That is, I think that LW has been flirting with meditation and similar practices for years, and this sort of 'non-mystical explanation' is essential to make sure that we know what we're talking about, instead of just vibing. I'm glad to see more of it.

I think that no-self is a useful concept, and had written a (shorter, not attempting to be fully non-mystical) post on the subject several months before. I find myself sort of frustrated that there isn't a clear sentence ...

There's a lot of attention paid these days to accommodating the personal needs of students. For example, a student with PTSD may need at least one light on in the classroom at all times. Schools are starting to create mechanisms by which a student with this need can have it met more easily.

Our ability to do this depends on a lot of prior work. The mental health community had to establish PTSD as a diagnosis; the school had to create a bureaucratic mechanism to normalize accommodations of this kind; and the student had to spend a significant amount of time ...

tl;dr – I'd include Daniel Kokotajlo's 2x2 grid model in the book, as an alternate take on Simulacra levels.

Two things feel important to me about this Question Post:

- This post kicked off discussion of how the evolving Simulacra Level definitions related to the original Baudrillard example. Zvi followed up on that here. This feels "historically significant", but not necessarily something that's going to stand the test of time as important in its own right.

- Daniel Kokotajlo wrote AFAICT the first instance of the alternate 2x2 Grid model of Simulacrum levels. T

This post holds up well in hindsight. I still endorse most of the critiques here, and the ones I don't endorse are relatively unimportant. Insofar as we have new evidence, I think it tends to support the claims here.

In particular:

- Framing few-shot learning as "meta-learning" has caused a lot of confusion. This framing made little sense to begin with, for the reasons I note in this post, and there is now some additional evidence against it.

- The paper does very little to push the envelope of what is possible in NLP, even though GPT-3 is proba

This post's main contribution is the formalization of game-theoretic defection as gaining personal utility at the expense of coalitional utility.

Rereading, the post feels charmingly straightforward and self-contained. The formalization feels obvious in hindsight, but I remember being quite confused about the precise difference between power-seeking and defection—perhaps because popular examples of taking over the world are also defections against the human/AI coalition. I now feel cleanly deconfused about this distinction. And if I was confused about...
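To make the distinction concrete, here is a minimal sketch of one simple reading of "gaining personal utility at the expense of coalitional utility". It assumes a two-player Prisoner's Dilemma with illustrative payoffs and treats coalitional utility as the plain sum of payoffs; the `PAYOFFS` table and the `is_defection` helper are hypothetical, and the post's own definition may differ in its details.

```python
# Illustrative Prisoner's Dilemma payoffs (an assumption, not taken from the post).
# Keys are (player 0 action, player 1 action); values are (payoff 0, payoff 1).
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def is_defection(player: int, profile: tuple, new_action: str) -> bool:
    """Return True if `player` switching to `new_action` raises their own
    payoff while lowering the coalition's total payoff (assumed: the sum)."""
    changed = list(profile)
    changed[player] = new_action
    new_profile = tuple(changed)

    old_payoffs = PAYOFFS[profile]
    new_payoffs = PAYOFFS[new_profile]

    gains_personally = new_payoffs[player] > old_payoffs[player]
    costs_coalition = sum(new_payoffs) < sum(old_payoffs)
    return gains_personally and costs_coalition


# Switching from mutual cooperation to D helps player 0 and hurts the total.
print(is_defection(0, ("C", "C"), "D"))
# Switching from mutual defection to C does not help player 0 personally.
print(is_defection(0, ("D", "D"), "C"))
```

Under this reading, an action that raises both the agent's payoff and the total would count as gaining power without defecting, which is the distinction the review describes.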

Self review: I'm very flattered by the nomination!

Reflecting back on this post, a few quick thoughts:

- I put a lot of effort into getting better at teaching, especially during my undergrad (publishing notes, mentoring, running lectures, etc). In hindsight, this was an amazing use of time, and has been shockingly useful in a range of areas. It makes me much better at field-building, facilitating fellowships, and writing up thoughts. Recently I've been reworking the pedagogy for explaining transformer interpretability work at Anthropic, and I've been shocked a

(I am the author of this piece)

In short, I recommend against including this post in the 2020 review.

Reasons against inclusion

- Contained large mistakes in the past, might still contain mistakes (I don't know of any)

- I fixed the last mistakes I know of two months ago

- It's hard to audit because of the programming language it's written in

- Didn't quite reach its goal

- I wanted to be able to predict the decrease in ability to forecast long-out events, but the brier scores are outside the 0-1 range for long ranges (>1 yr), which shouldn't be the case if w
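For context on why scores outside the 0-1 range are a red flag: for binary outcomes and probability forecasts in [0, 1], the Brier score is a mean squared error and so cannot leave that range. The sketch below is only an illustration under those assumptions; `brier_score` and the sample data are hypothetical and are not taken from the post's code.

```python
def brier_score(forecasts, outcomes):
    """Mean squared difference between probability forecasts and binary outcomes.

    Assumes each forecast is a probability in [0, 1] and each outcome is 0 or 1,
    which bounds every squared error, and hence the mean, to [0, 1].
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need equally many forecasts and outcomes, at least one")
    for f, o in zip(forecasts, outcomes):
        if not 0.0 <= f <= 1.0:
            raise ValueError(f"forecast {f} is not a probability")
        if o not in (0, 1):
            raise ValueError(f"outcome {o} is not binary")
    return sum((f - o) ** 2 for f, o in zip(forecasts, outcomes)) / len(forecasts)


# Made-up example data: three forecasts and how the questions resolved.
print(brier_score([0.9, 0.2, 0.5], [1, 0, 1]))

# The extremes: maximally wrong and perfectly right forecasts.
print(brier_score([1.0, 0.0], [0, 1]))  # 1.0
print(brier_score([1.0, 0.0], [1, 0]))  # 0.0
```

A value above 1 or below 0 therefore points to an input outside these assumptions or to a bug in the scoring pipeline, rather than to unusually bad forecasts.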

I did a lot of writing at the start of covid, most of which was eventually eclipsed by new information (thank God). This is one of a few pieces I wrote during that time I refer to frequently, in my own thinking and in conversation with others. The fact that even very exogenous-looking changes to the economy are driven by economic fuckery behind the scenes was very clarifying for me in examining the economy as a whole.

This post feels like an important part of what I've referred to as The CFAR Development Branch Git Merge. Between 2013ish and 2017ish, a lot of rationality development happened in person, which built off the sequences. I think some of that work turned out to be dead ends, or a bit confused, or not as important as we thought at the time. But a lot of it has been quite essential to rationality as a practice. I'm glad it has gotten written up.

The felt sense, and focusing, have been two surprisingly important tools for me. One use case not quite mentioned here...

This post feels quite important from a global priorities standpoint. Nuclear war mitigation might have been one of the top priorities for humanity (and to be clear it's still plausibly quite important). But given that the longtermist community has limited resources, it matters a lot whether something falls in the top 5-10 priorities.

A lot of people ask "Why is there so much focus on AI in the longtermist community? What about other x-risks like nuclear?". And I think it's an important, counterintuitive answer that nuclear war probably isn't an x-risk...

This was a concept which it never occurred to me that people might not have, until I saw the post. Noticing and drawing attention to such concepts seems pretty valuable in general. This post in particular was short, direct, and gave the concept a name, which is pretty good; the one thing I'd change about the post is that it could use a more concrete, everyday example/story at the beginning.

I strongly upvoted this.

On one hand – the CFAR handbook would be a weird fit for the anthology style books we have published so far. But, it would be a great fit for being a standalone book, and I think it makes sense to use the Review to take stock of what other books we should be publishing.

The current version of the CFAR handbook isn't super optimized for being read outside the context of a workshop. I think it'd be worth the effort of converting it both into standalone posts that articulate particular concepts, and editing together into a more cohesive...

Create a Full Alternative Stack is probably in the top 15 ideas I got from LW in 2020. Thinking through this as an option has helped me decide when and where to engage with "the establishment" in many areas (e.g. academia). Some parts of my life I work with the mazes whilst trying not to get too much of it on me, and some parts of my life I try to build alternative stacks. (Not the full version, I don't have the time to fix all of civilization.) I give it +4.

Broader comment on the Mazes sequence as a whole:

...The sequence is an extended meditation on a theme

I think this post does a good job of focusing on a stumbling block that many people encounter when trying to do something difficult. Since the stumbling block is about explicitly causing yourself pain, to the extent that this is a common problem and that the post can help avoid it, that's a very high return prospect.

I appreciate the list of quotes and anecdotes early in the post; it's hard for me to imagine what sort of empirical references someone could make to verify whether or not this is a problem. Well known quotes and a long list of anecdotes is a su...

I second Daniel's comment and review, remark that this is an exquisite example of distillation, and state that I believe this might be one of the most important texts of the last decade.

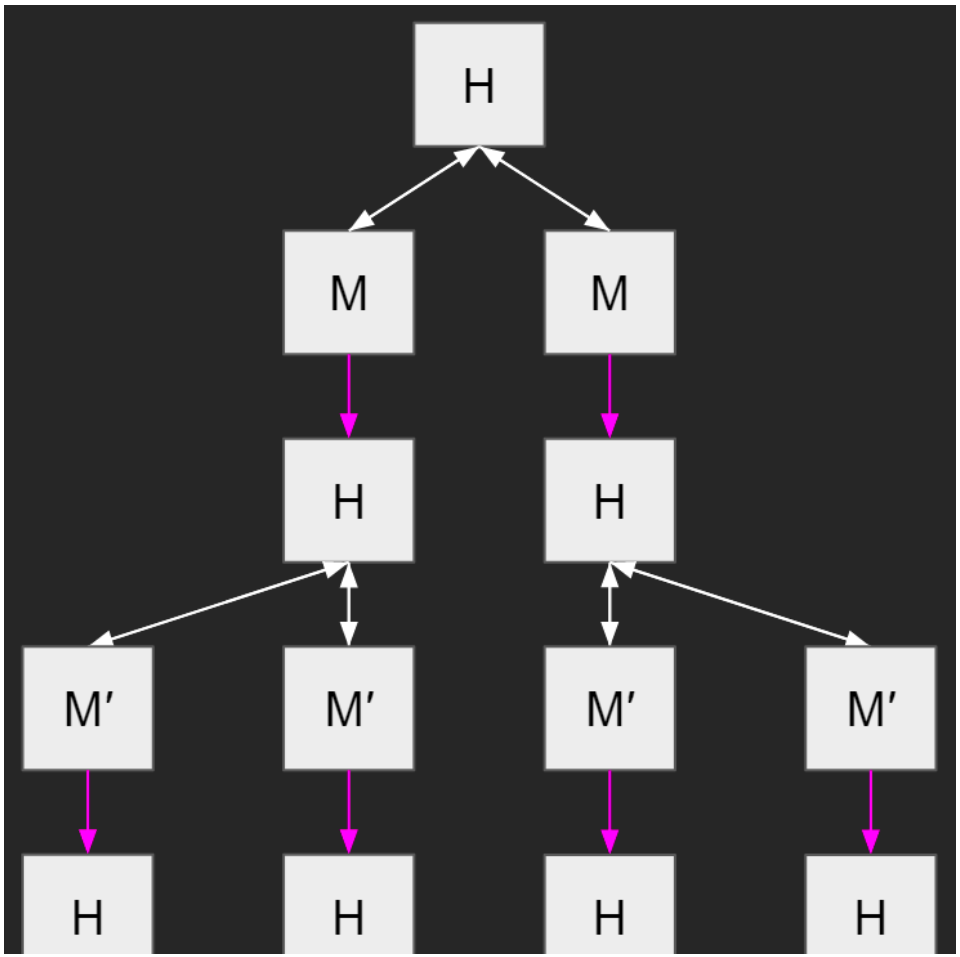

Also, I fixed an image used in the text, here's the fixed version:

I will vote a 9 on this post.

I think this excerpt from Rationality: From AI to Zombies' preface says it all.

...It was a mistake that I didn't write my two years of blog posts with the intention of helping people do better in their everyday lives. I wrote it with the intention of helping people solve big, difficult, important problems, and I chose impressive-sounding, abstract problems as my examples.

In retrospect, this was the second-largest mistake in my approach. It ties in to the first-largest mistake in my writing which was that I didn't realize that the big problem in learning thi

"Search versus design" explores the basic way we build and trust systems in the world. A few notes:

- My favorite part is the definitions about an abstraction layer being an artifact combined with a helpful story about it. It helps me see the world as a series of abstraction layers. We're not actually close to true reality, we are very much living within abstraction layers — the simple stories we are able to tell about the artefacts we build. A world built by AIs will be far less comprehensible than the world we live in today. (Much more like biology is

Pro: The piece aimed to bring a set of key ideas to a broad audience in an easily understood, actionable way, and I think it does a fair job of that. I would be very excited to see similar example-filled posts actionably communicating important ideas. (The goal here feels related to this post https://distill.pub/2017/research-debt/)

Con: I don't think it adds new ideas to the conversation. Some people commented on the sale-sy style of the intro, and I think it's a fair criticism. The piece prioritizes engagingness and readability over nuance.

This post was well written, interesting, had multiple useful examples, and generally filled in models of the world. I haven't explicitly fact-checked it but it accords with things I've read and verified elsewhere.

This post is hard for me to review, because I both 1) really like this post and 2) really failed to deliver on the IOUs. As is, I think the post deserves highly upvoted comments that are critical / have clarifying questions; I give some responses, but not enough that I feel like this is 'complete', even considering the long threads in the comments.

[This is somewhat especially disappointing, because I deliberately had "December 31st" as a deadline so that this would get into the 2019 review instead of the 2020 review, and had hoped this would be the first p...

One of the main problems I think about is how science and engineering are able to achieve such efficient progress despite the very high dimensionality of our world - and how we can more systematically leverage whatever techniques provide that efficiency. One broad class of techniques I think about a lot involves switching between search-for-designs and search-for-constraints - like proof and counterexample in math, or path and path-of-walls in a maze.

My own writing on the topic is usually pretty abstract; I'm thinking about it in algorithmic terms, as a searc...

Self Review. I'm quite confident in the core "you should be capable of absorbing some surprise problems happening to you, as a matter of course". I think this is a centrally important concept for a community of people trying to do ambitious things, that will constantly be tempted to take on more than they can handle.

2. The specific quantification of "3 surprise problems" can be reasonably debated (although I think my rule-of-thumb is a good starting point, and I think the post is clear about my reasoning process so others can make their own informed choice)

3....

Apparently this has been nominated for the review. I assume that this is implicitly a nomination for the book, rather than my summary of it. If so, I think the post itself serves as a review of the book, and I continue to stand by the claims within.

This post is what first gave me a major update towards "an AI with a simple single architectural pattern scaled up sufficiently could become AGI", in other words, there doesn't necessarily have to be complicated fine-tuned algorithms for different advanced functions–you can get lots of different things from the same simple structure plus optimization. Since then, as far as I can tell, that's what we've been seeing.

I think there have been a few posts about noticing by now, but as Mark says, I think The Noticing Skill is extremely valuable to get early on in the rationality skill tree. I think this is a good explanation for why it is important and how to go about learning it.

TODO: dig up some of the other "how to learn noticing" intro posts and see how some others compare to this one as a standalone introduction. I think this might potentially be the best one. At the very least I really like the mushroom metaphor at the beginning. (If I were assembling the Ideal Noticing Intro post from scratch I might include the mushroom example even if I changed the instructions on how to learn the rationality-relevant-skills)

I was surprised that I had misremembered this post significantly. Over the past two years somehow my brain summarized this as "discontinuities barely happen at all, maybe nukes, and even that's questionable." I'm not sure where I got that impression.

Looking back here I am surprised at the number of discontinuities discovered, even if there are weird sampling issues of what trendlines got selected to investigate.

Rereading this, I'm excited by... the sort of sheer amount of details here. I like that there's a bunch of different domains being explored, ...

I read this post at the same time as reading Ascani 2019 and Ricón 2021 in an attempt to get clear about anti-aging research. Comparing these three texts against each other, I would classify Ascani 2019 as trying to figure out whether focusing on anti-aging research is a good idea, Ricón 2021 trying to give a gearsy overview of the field (objective unlocked: get Nintil posts cross-posted to LessWrong), and this text as showing what has already been accomplished.

In that regard it succeeds perfectly well: The structure of Part V is so clean I suspect that it...

This is a nice little post that explores a neat idea using a simple math model. I do stand by the idea, even if I remain confused about the limits of its applicability.

The post has received a mixed response. Some people loved it, and I have received some private messages from people thanking me for writing it. Others thought it was confused or confusing.

In hindsight, I think the choice of examples is not the best. I think a cleaner example of this problem would be from the perspective of a funder, who is trying to finance researchers to solve a concre...

The Skewed and the Screwed: When Mating Meets Politics is a post that compellingly explains the effects of gender ratios in a social space (a college, a city, etc).

There's lots of simple effects here that I never noticed. For example, if there's a 55/45 split of the two genders (just counting the heterosexual people), then the minority gender gets an edge of selectiveness, which they enjoy (everyone gets to pick someone they like a bit more than they otherwise would have), but for the majority gender, 18% of them do not have a partner. It's really bad for ...
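The 18% figure follows from simple arithmetic, sketched here under the assumption that everyone in the minority group pairs one-to-one with someone in the majority group. The function name `unpartnered_share` is illustrative and not from the post.

```python
def unpartnered_share(majority: float, minority: float) -> float:
    """Fraction of the majority group left without a partner, assuming every
    member of the minority pairs one-to-one with a member of the majority."""
    if majority <= 0 or minority < 0 or minority > majority:
        raise ValueError("expected 0 <= minority <= majority and majority > 0")
    return (majority - minority) / majority


# A 55/45 split: 10 of the 55 in the majority have no one to pair with.
share = unpartnered_share(55, 45)
print(f"{share:.1%}")  # 18.2%
```

The same formula shows how quickly the effect grows: the unpartnered share of the majority is the gap between the groups divided by the majority's size, so a seemingly mild skew already leaves a sizeable fraction out.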

Protecting Large Projects Against Mazedom is all key advice that seemed unintuitive to me when I was getting started doing things in the world, but now all the advice seems imperative to me. I've learned a bunch of this by doing it "the hard way" I guess. I give this post +4.

Broader comment on the Mazes sequence as a whole:

...The sequence is an extended meditation on a theme, exploring it from lots of perspective, about how large projects and large coordination efforts end up being eaten by Moloch. The specific perspective reminds me a bit of The Screwtape Le

It's really great when alignment work is checked in toy models.

In this case, I was especially intrigued by the way it exposed how the different kinds of baselines influence behavior in gridworlds, and how it highlighted the difficulty of transitioning from a clean conceptual model to an implementation.

Also, the fact that a single randomly generated reward function was sufficient for implementing AUP in SafeLife is quite astonishing. Another advantage of implementing your theorems—you get surprised by reality!

Unfortunately, some parts of the post w...

This was a really interesting post, and is part of a genre of similar posts about acausal interaction with consequentialists in simulatable universes.

The short argument is that if we (or not us, but someone like us with way more available compute) try to use the Kolmogorov complexity of some data to make a decision, our decision might get "hijacked" by simple programs that run for a very very long time and simulate aliens who look for universes where someone is trying to use the Solomonoff prior to make a decision and then based on what decision they want,...

I am grateful for this post. I'm very interested in mechanism design in general, and the design of political systems specifically, so this post has been very valuable both in introducing me to some of the ideas of the Swiss political system, and in showing what their consequences are in practice.

I thought a lot about the things I learned about Switzerland from this post. I also brought it up a lot in discussion, and often pointed people to this post to learn about the Swiss political system.

Two things that came up when I discussed the Swiss political...

Self-review: I still like this post a lot; I went through and changed some punctuation typos, but besides that I think it's pretty good.

There are a few things I thought this post did.

First, be an example of 'rereading great books', tho it feels a little controversial to call Atlas Shrugged a great book. The main thing I mean by that is that it captures some real shard of reality, in a way that looks different as your perspective changes and so is worth returning to, rather than some other feature related to aesthetics or skill or morality/politics.

Second, ...

I drank acetone in 2021, which was plausibly causally downstream of this post.

Unfortunately, the problem described here is all too common. Many 'experts' give advice as if their lack of knowledge is proof. That's just not the way the world works, but we have many examples of it that are probably salient to most people, though I don't wish to get into them.

Where this post is lacking is that it won't convince anyone who doesn't already agree with it, and doesn't have any real way to deal with it (not that it should, solving that would be quite an accomplishment).

Thus, this is simply another thing to keep in mind, where experts use terms in ways that are literally meaningless to the rest of the populace, because the expert usage is actually wrong. If you are in these fields, push back on it.

This post exemplifies the rationalist virtues of curiosity and scholarship. This year's review is not meant to judge whether posts should be published in a book, but I do wonder what a LW project to create a workbook or rationality curriculum (including problem sets) would look like. I imagine posts like this one would feature prominently in either case.

So I do think such posts deserve recognition, though in what form I am less sure.

On an entirely unrelated note, it makes me sad that the Internet is afflicted with link rot and impermanence, and that LW isn'...

Although Zvi's overall output is fantastic, I don't know which specific posts of his should be called timeless, and this is particularly tricky for these valuable but fast-moving weekly Covid posts. When it comes to judging intellectual progress, however, things are maybe a bit easier?

After skimming the post, a few points I noticed were: Besides the headline prediction which did not come to pass, this post also includes lots of themes which have stood the test of time or remained relevant since its publication: e.g. the FDA dragging its feet wrt allowing C...

So the obvious take here is that this is a long post full of Paths Forward and basically none of those paths forward were taken, either by myself or others.

Two years later, many if not most of those paths do still seem like good ideas for how to proceed, and I continue to owe the world Moloch's Army in particular. And I still really want to write The Journey of the Sensitive One to see what would happen. And so on. When the whole Covid thing is behind us sufficiently and I have time to breathe I hope to tackle some of this.

But the bottom line f...

This post was important to my own thinking because it solidified the concept that there exists the thing Obvious Nonsense, that Very Serious People would be saying such Obvious Nonsense, that the government and mainstream media would take it seriously and plan and talk on such a basis, and that someone like me could usefully point out that this was happening, because when we say Obvious Nonsense oh boy are they putting the Obvious in Nonsense. It's strange to look back and think about how nervous I was then about making this kind of call, even when it was ...

I haven't started lifelogging, due to largely having other priorities, and lifelogging being kinda weird, and me not viscerally caring about my own death that much.

But I think this post makes a compelling case that if I did care about those things, having lots of details about who-I-am might matter. In addition to cryonics, I can imagine an ancestor resurrection process that has some rough archetypes of "what a baseline human of a given era is like", and using lifelog details to fill in the gaps.

I'm fairly philosophically confused about how muc...

Mark mentions that he got this point from Ben Pace. A few months ago I heard the extended version from Ben, and what I really want is for Ben to write a post (or maybe a whole sequence) on it. But in the meantime, it's an important idea, and this short post is the best source to link to on it.

This post’s claim seems to have a strong and weak version, both of which are asserted at different places in the post.

- Strong claim: At some level of wealth and power, knowledge is the most common or only bottleneck for achieving one’s goals.

- Weak claim: Things money and power cannot obtain can become the bottleneck for achieving one’s goals.

The claim implied by the title is the strong form. Here is a quote representing the weak form:

“As one resource becomes abundant, other resources become bottlenecks. When wealth and power become abundant, anything we...

Crisis and opportunity during coronavirus seemed cute to me at the time, and now I feel like an idiot for not realizing it more. My point here is "this post was really right in retrospect and I should've listened to it at the time". This post, combined with John's "Making Vaccine", have led me to believe I was in a position to create large amounts of vaccine during the pandemic, at least narrowly for my community, and (more ambitiously) to make very large amounts (100k+) in some country with weak regulation where I could have sold it. I'm not going to flesh o...

Based on my own experience and the experience of others I know, I think knowledge starts to become taut rather quickly - I’d say at an annual income level in the low hundred thousands.

I really appreciate this specific calling out of the audience for this post. It may be limiting, but it is also likely limiting to an audience with a strong overlap with LW readership.

Everything money can buy is “cheap”, because money is "cheap".

I feel like there's a catch-22 here, in that there are many problems that probably could be solved with money, but I don't know how ...

I enjoyed writing this post, but think it was one of my lesser posts. It's pretty ranty and doesn't bring much real factual evidence. I think people liked it because it was very straightforward, but I personally think it was a bit over-rated (compared to other posts of mine, and many posts of others).

I think it fills a niche (quick takes have their place), and some of the discussion was good.

This is the only one I rated 9. It looks like a boring post full of formulas but it is actually quite short and - as Raemon wrote in the curation - completes the system of all possible cooperation games giving them succinct definitions and names.

I really liked this post in 2020, and I really like this post now. I wish I had actually carved this groove into my habits of thought. I'm working on doing that now.

One complaint: I find the bolded "This post is not about that topic." to be distracting. I recommend unbolding, and perhaps removing the part from "This post" through "that difference."

There's not much to say about the post itself. It is a question. Some context is provided. Perhaps more could have been, but I think it's fine.

What I want to comment on is the fact that I see this as an incredibly important question. I would really love to see something like microCOVID Project, but for other risks. And I would pay a pretty good amount of money for access to it. At least $1,000. Probably more if I had to.

Why do I place such a high value on this question? Because IMO, death is very, very, very bad, and so it makes sense to go to great lengths...

There are some posts with perennial value, and some which depend heavily on their surrounding context. This post is of the latter type. I think it was pretty worthwhile in its day (and in particular, the analogy between GPT upgrades and developmental stages is one I still find interesting), but I leave it to you whether the book should include time capsules like this.

It's also worth noting that, in the recent discussions, Eliezer has pointed to the GPT architecture as an example that scaling up has worked better than expected, but he diverges from the thes...

I like that this post addresses a topic that is underrepresented on Less Wrong and does so in a concise technical manner approachable to non-specialists. It makes accurate claims. The author understands how drawing (and drawing pedagogy) works.

I think this post was among the more crisp updates that helped me understand Benquo's worldview, and shifted my own. I think I still disagree with many of Benquo's next-steps or approach, but I'm actually not sure. Rereading this post is highlighting some areas I notice I'm confused about.

This post clearly articulates a problem with having language both have a function of "communicating about object level facts" and "political coalitions, attacks/defense, etc". It makes it really difficult to communicate about important true facts without poking at the soc...

This is a personal anecdote, so I'm not sure how to assess it as an intellectual contribution. That said, global developments like the Covid pandemic sure have made me more cynical towards our individual as well as societal ability to notice and react to warning signs. In that respect, this story is a useful complement to posts like There's No Fire Alarm for Artificial General Intelligence, Seeing the Smoke, and the 2021 Taliban offensive in Afghanistan (which even Metaculus was pretty blindsided by).

And separately, the post resulted in some great discussi...

Focusing on the Alpha (here 'English Strain') parts only and looking back, I'm happy with my reasoning and conclusions here. While the 70% prediction did not come to pass and in hindsight my estimate of 70% was overconfident, the reasons it didn't happen were that some of the inputs in my projection were wrong, in ways I reasoned out at the time would (if they were wrong in these ways) prevent the projection from becoming true. And at the time, people weren't making the leap to 'Alpha will take over, and might be a huge issue in some worlds depending on it...

Conversations with Ray clarified for me how much secret keeping is a skill, separate from any principles about when one would agree keeping a secret was good in principle, which has been very helpful in thinking through confidentiality agreements/decisions.

I think this was an important question, even though I'm uncertain what effect it had.

It's interesting to note that this question was asked at the very beginning of the pandemic, just as it began to enter the public awareness (Nassim Taleb published a paper on the pandemic a day after this question was asked, and the first coronavirus post on LW was published 3 days later).

During the pandemic we have seen the degraded epistemic condition in effect; it was noticed very early (LW Example), and continued throughout the pandemic (e.g., supreme court judges stati...

I think this post was pretty causal in my interest in coordination theory/practice. In particular I think it helped shift my thinking from a somewhat unproductive "why can't I coordinate well with rationalists, who seem particularly hard to get to agree on anything?" to a more useful "how do you solve problems at scale?"

What are some beautiful, rationalist artworks? has many pieces of art that help me resonate with what rationality is about.

Look at this statue.

That's the first piece, there's many more, that help me have a visual handle on rationality. I give this post a +4.

The CFAR handbook is very valuable but I wouldn't include it in the 2020 review. Or if so, then more as a "further reading" section at the end. Actually, such a list could be valuable. It could include links to relevant blogs (e.g. those already supporting cross-posting).

Wow, I really love that this has been updated and appendix'd. It's really nice to see how this has grown with community feedback and gotten polished from a rough concept.

Creating common knowledge on how 'cultures' of communication can differ seems really valuable for a community focused on cooperatively finding truth.

In this post, the author describes a pathway by which AI alignment can succeed even without special research effort. The specific claim that this can happen "by default" is not very important, IMO (the author himself only assigns 10% probability to this). On the other hand, viewed as a technique that can be deliberately used to help with alignment, this pathway is very interesting.

The author's argument can be summarized as follows:

- For anyone trying to predict events happening on Earth, the concept of "human values" is a "natural abstraction", i.e. someth

The problem outlined in this post results from two major concerns on LessWrong: risks from advanced AI systems and irrationality due to parasitic memes.

It presents the problem of persuasion tools as continuous with the problems humanity has had with virulent ideologies and sticky memes, exacerbated by the increasing capability of narrowly intelligent machine learning systems to exploit biases in human thought. It provides (but doesn't explore) two examples from history to support its hypothesis: the printing press as a partial cause of the Thirty Years' War, an...

Thinking about this now, not to sound self-congratulatory, but I'm impressed with the quantity and quality of examples I was able to stumble across. I'm a huge believer in examples and concreteness. Most of the time I'm unhappy with the posts I write in large part because I'm unhappy with the quantity and quality of examples. But it's just so hard to think of good ones, and posting seems better than not posting, so I post.

I still endorse this post pretty strongly. Giving it a google strikes me as something that is still significantly underutilized. By the ...

Brief review: I think this post represents a realization many people around here have made, and says it clearly. I think it's fine to keep it as a record that people used to be blasé about the ease of secrecy, and later learned that it was much more complex than they thought. I think I'm at +1.

My quick two-line review is something like: this post (and its sequel) is an artifact from someone with an interesting perspective on the world looking at the whole problem and trying to communicate their practical perspective. I don't really share this perspective, but it is looking at enough of the real things, and differently enough to the other perspectives I hear, that I am personally glad to have engaged with it. +4.

Since the very beginning of LW, there has been a common theme that you can't always defer to experts, or that the experts aren't always competent, or that experts on a topic don't always exist, or that you sometimes have to do your own reasoning to determine who the experts are, etc. (E.g. LW on Hero Licensing, or on the Correct Contrarian Cluster, or on Inadequate Equilibria; or ACX in Movie Review: Don't Look Up.)

I don't think this post makes a particularly unique contribution to that larger theme, but I did appreciate its timing, and how it made and ref...

I'm not qualified to assess the accuracy of this post, but do very much appreciate its contribution to the discussion.

- I appreciated the historical overview of vaccination at a time just before the mRNA vaccines had become formally approved anywhere. And more generally, I always like to see the Progress Studies perspective of history on LW, even if I don't always agree with it.

- This post also put the various vaccines in context, and how and why vaccination technology was developed.

- And it made clear that the vaccine technology you use fundamentally changes it

On one hand, AFAICT the math here is pretty fuzzy, and one could have written this post without it, instead just using the same examples to say "you should probably be less risk averse." I think, in practice for most people, the math is a vague tribal signifier that you can trust the post, to help the advice go down.

But, I see this post in a similar reference class to Bayes' Theorem. I think most people don't actually need to know Bayes' Theorem. They need to remember a few useful heuristics like "remember the base rates, not just the salient evidence you c...

In this post I speculated on the reasons for why mathematics is so useful so often, and I still stand behind it. The context, though, is the ongoing debate in the AI alignment community between the proponents of heuristic approaches and empirical research[1] ("prosaic alignment") and the proponents of building foundational theory and mathematical analysis (as exemplified in MIRI's "agent foundations" and my own "learning-theoretic" research agendas).

Previous volleys in this debate include Ngo's "realism about rationality" (on the anti-theory side), the pro...

I... haven't actually used this technique verbatim through to completion. I've made a few attempts to practice and learn it on my own, but usually struggled a bit to reach conclusions that felt right.

I have some sense that this skill is important, and it'd be worthwhile for me to go to a workshop similar to the one where Habryka and Eli first put this together. This feels like it should be an important post, and I'm not sure if my struggle to realize its value personally is more due to "it's not as valuable as I thought" or "you actually have to do a fair...

I think this is an important skill and I'm glad it's written up at all. I would love to see the newer version Eli describes even more though.

This post defines and discusses an informal notion of "inaccessible information" in AI.

AIs are expected to acquire all sorts of knowledge about the world in the course of their training, including knowledge only tangentially related to their training objective. The author proposes to classify this knowledge into "accessible" and "inaccessible" information. In my own words, information inside an AI is "accessible" when there is a straightforward way to set up a training protocol that will incentivize the AI to reliably and accurately communicate this inform...

Can crimes be discussed literally? makes a short case that when you straightforwardly describe misbehavior and wrongdoing, people commonly criticize the language you use, reading it as an attempt to build a coalition to attack the parties you're talking about. At the time I didn't think that this was my experience, and thought the post was probably wrong and confused. I don't remember when I changed my mind, but nowadays I'm much more aware of requests on me to not talk about what a person or group has done or is doing. I find myself the subject of such re...

This is an extension of the Embedded Agency philosophical position. It is a story told using that understanding, and it is fun and fleshes out lots of parts of Bayesian rationality. I give it +4.

(This review is taken from my post Ben Pace's Controversial Picks for the 2020 Review.)

The best single piece of the whole Mazes sequence. It's the one to read to get all the key points. High in gears, low in detail. I give it +4.

Broader comment on the sequence as a whole:

...The sequence is an extended meditation on a theme, exploring it from lots of perspectives, about how large projects and large coordination efforts end up being eaten by Moloch. The specific perspective reminds me a bit of The Screwtape Letters. In The Screwtape Letters, the two devils are focused on causing people to be immoral. The explicit optimization for vices and persona

A Significant Portion of COVID-19 Transmission is Presymptomatic argued for something that is blindingly obvious now, but a real surprise to me at the time. Covid has an incubation period of up to 2 weeks at the extreme, where you can have no symptoms but still give it to people. This totally changed my threat model, where I didn't need to know if someone was symptomatic, but instead I had to calculate how much risk they took in the last 7-14 days. The author got this point out fast (March 14th) which I really appreciated. I give this +4.

(This review is ta...

This is one of multiple posts by Steven that explain the cognitive architecture of the brain. All posts together helped me understand the mechanism of motivation and learning and answered open questions. Unfortunately, the best post of the sequence is not in 2020. I recommend including either this compact and self-contained post or the longer (and currently higher voted) My computational framework for the brain.

This was a promising and practical policy idea, of a type that I think is generally under-provided by the rationalist community. Specifically, it attempts to actually consider how to solve a problem, instead of just diagnosing or analyzing it. Unfortunately, it took far too long to get attention, and the window for its usefulness has passed.

I think microCOVID was a hugely useful tool, and probably the most visibly useful thing that rationalists did related to the pandemic in 2020.

In graduate school, I came across micromorts, and so was already familiar with the basic idea; the main innovation for me in microCOVID was that they had collected what data was available about the infectiousness of activities and paired it with an updating database on case counts.

While the main use I got out of it was group house harmony (as now, rather than having to carefully evaluate and argue over particular acti...
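To make the micromort-style arithmetic mentioned above concrete, here is a minimal sketch of how an activity's infectiousness might be combined with local prevalence. The function name, the independence assumption, and all numbers are hypothetical illustrations; this is not microCOVID's actual model or data.

```python
def microcovids(prevalence, transmission_prob, n_people=1):
    """Rough risk of one activity, in microCOVIDs.

    One microCOVID is a one-in-a-million chance of infection.
    prevalence:        assumed fraction of the local population currently
                       infectious (in practice estimated from case counts).
    transmission_prob: assumed chance of catching it from one infectious
                       person during this activity.
    n_people:          number of people encountered, treated as independent.
    """
    p_single = prevalence * transmission_prob
    p_any = 1 - (1 - p_single) ** n_people
    return p_any * 1_000_000


# Hypothetical numbers: 1% prevalence, 5% transmission chance per contact.
one_contact = microcovids(0.01, 0.05)
ten_contacts = microcovids(0.01, 0.05, n_people=10)
print(round(one_contact), round(ten_contacts))
```

The point of the unit is that a household can agree on a budget in microCOVIDs once, and then compare activities by number instead of arguing each case separately.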

This post went in a similar direction as Daniel Kokotajlo's 2x2 Simulacrum grid. It seems to have a "medium amount of embedded worldmodel", contrasted with some of Zvi's later simulacra writing (which I think bundles a bunch of Moral-Maze-ish considerations into Simulacrum 4) and Daniel's grid-version (which is basically unopinionated about where the levels came from).

I like that this post notes the distinction between domains where Simulacrum 3 is a degenerate form of level 1, vs domains where Simulacrum 3 is the "natural" form of expression.

Of the agent foundations work from 2020, I think this sequence is my favorite, and I say this without actually understanding it.

The core idea is that Bayesianism is too hard. And so what we ultimately want is to replace probability distributions over all possible things with simple rules that don't have to put a probability on all possible things. In some ways this is the complement to logical uncertainty - logical uncertainty is about not having to have all possible probability distributions possible, this is about not having to put probability distributi...

Of the posts in the "personal advice" genre from 2020, this is the one that made the biggest impression on me.

The central lesson is that there is a core generator of good listening, and if you can tap into your curiosity about the other person's perspective, this both automatically makes you take actions that come off as good listening, and also improves the value of what you do eventually say.

Since reading it, I can supply anecdotal evidence that it's good advice for people like me. Not only do you naturally sound like one of those highly empathetic peop...

As mentioned in the post, I think it's personally helpful to look back, and is a critical service to the community as well. Looking back at looking back, there are things I should add to this list - and even something (hospital transmission) which I edited more recently because I have updated against having been wrong about it in this post - but it was, of course, an interim postmortem, so both of these types of post-hoc updates seem inevitable.

I think that the most critical lesson I learned was to be more skeptical of information sources generally - even the...

I've been asked to self-review this post as part of the 2020 review. I pretty clearly still stand by it given that I was willing to crosspost it from my own blog 5 years after I originally wrote it. But having said that, I've had some new insights since mid-2020, so let me take a moment and re-read the post and make sure it doesn't now strike me as fatally confused...

...yeah, no, it's good! I made a couple of small formatting and phrasing edits just now but it's otherwise ready to go from my perspective.

The post is sort of weirdly contextual in that it's p...

Happened to look this post up again this morning and apparently it's review season, so here goes...

This post inspired me to play around with some very basic visualisation exercises last year. I didn't spend that long on it, but I think of myself as having a very weak visual imagination and this pushed me in the direction of thinking that I could improve this a good deal if I put the work in. It was also fascinating to surface some old visual memories.

I'd be intrigued to know if you've kept using these techniques since writing the post.

I haven't followed the covid discourse that'd indicate whether this post's ideas turned out to make sense. But, I really appreciated how this post went about investigating its model, and explored the IF-THEN ramifications of that investigation.

I like how it broke down "how does one take this hypothesis seriously?", i.e:

...

- There are things we could do to get better information.

- There are things individuals or small groups can do to improve their situation.

- There are things society as a whole could try to do that don’t have big downsides.

- We could take bold action

I think this post is interesting as a historical document. I would like to look back at this post in 2050 with the benefits of hindsight.

I like this post because following its advice has improved my quality of life.

The Four Children of the Seder as the Simulacra Levels is an interpretation of a classic Jewish reading through the lens of simulacra levels. It makes an awful lot of sense to me, helps me understand them better, and also engages the simulacra levels with the perspective of "how should a society deal with these sorts of people/strategies". I feel like I got some wisdom from that, but I'm not sure how to describe it. Anyway, I give this post a +4.

I think "Simulacra Levels and their Interactions" is the best post on Simulacra levels, and this is the second p...

The Moral Mazes sequence prompted a lot of interesting hypotheses about why large parts of the world seem anti-rational (in various senses). I think this post is the most crisp summary of the entire model.

I agree with lionhearted that it'd be really nice if somehow the massive list of things could be distilled into chunks that are easier to conceptualize, but sympathize that reality doesn't always lend itself to such simplification. I'd be interested in Zvi taking another stab at it though.

One thing I've learned since then: I now think this is wrong:

To my (limited) understanding, this does not produce a significantly different immune response than injecting the antigen directly.

My understanding now (which is still quite limited) is that there is an improved immune response. If I have it right, the reason is that in a traditional vaccine, the antigen only exists in the bloodstream; with an mRNA vaccine, the antigen originates inside the cell—which more closely mimics how an actual virus works.

There is a tendency among nerds and technology enthusiasts to always adopt the latest technology, to assume newer is necessarily better. On the other hand, some people argue that as time passes, some aspects of technology are in fact getting worse. Jonathan Blow argues this point extensively on the topic of software development and programming (here is a 1-hour talk on the topic). The Qt anecdote in this post is an excellent example of the thing he'd complain about.

Anyway, in this context, I read this post as recognizing that when you replace wired devices...

I still really like this post, and rereading it I'm surprised how well it captures points I'm still trying to push because I see a lot of people out there not quite getting them, especially by mixing up models and reality in creative ways. I had not yet written much about the problem of the criterion at this time, for example, yet it carries all the threads I continue to think are important today. Still recommend reading this post and endorse what it says.

It strikes me that this post looks like (AFAICT?) a stepping stone towards the Eliciting Latent Knowledge research agenda, which currently has a lot of support/traction. Which makes this post fairly historically important.

I've highly voted this post for a few reasons.

First, this post contains a bunch of other individual ideas I've found quite helpful for orienting. Some examples:

- Useful thoughts on which term definitions have "staying power," and are worth coordinating around.

- The zero/single/multi alignment framework.

- The details on how to anticipate, legitimize, and fulfill governance demands.

But my primary reason was learning Critch's views on what research fields are promising, and how they fit into his worldview. I'm not sure if I agree with Critch, but I think "Figur...

Radical Probabilism is an extension of the Embedded Agency philosophical position. I remember reading it and feeling a strong sense that I really got to see a well pinned-down argument using that philosophy. Radical Probabilism might be a +9, will have to re-read, but for now I give it +4.

(This review is taken from my post Ben Pace's Controversial Picks for the 2020 Review.)

Covid-19: My Current Model was where I got most of my practical Covid updates. It's so obvious now, but risk follows a power law (i.e. I should focus on reducing my riskiest 1 or 2 activities), surfaces are mostly harmless (this was when I stopped washing my packages), outdoor activity is relatively harmless (me and my housemates stopped avoiding people on the street around this time), and more. I give this +4.

(This review is taken from my post Ben Pace's Controversial Picks for the 2020 Review.)
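The "risk follows a power law" point in the review above can be illustrated with a small sketch: when activity risks are spread over orders of magnitude, the top one or two dominate the total. The activity names and risk numbers below are made up for illustration and do not come from the reviewed post.

```python
# Hypothetical weekly risk per activity, in arbitrary units (assumed values).
weekly_risk = {
    "indoor party": 3000,
    "gym session": 800,
    "grocery run": 60,
    "walk outside": 5,
    "handling packages": 1,
}


def top_share(risks, k):
    """Fraction of total risk contributed by the k riskiest activities."""
    total = sum(risks.values())
    top_k = sorted(risks.values(), reverse=True)[:k]
    return sum(top_k) / total


# With these assumed numbers the two riskiest activities make up
# roughly 98% of the total, so cutting anything else barely matters.
print(f"{top_share(weekly_risk, 2):.2f}")
```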

I like this, in the sense that it's provoking fascinating thoughts and makes me want to talk with the author about it further. As a communication of a particular concept? I'm kinda having a hard time following what the intent is.

Initial reaction: I like this post a lot. It's short, to the point. It has examples relating its concept to several different areas of life: relationships, business, politics, fashion. It demonstrates a fucky dynamic that in hindsight obviously game-theoretically exists, and gives me an "oh shit" reaction.

Meditating a bit on an itch I had: what this post doesn't tell me is how common this dynamic is, or how to detect when it's happening.

While writing this review: hm, is this dynamic meaningfully different from the idea of a costly signal?

Thinking about the ex...