Look inside an LLM. Goodfire trained sparse autoencoders on Llama 3 8B and built a tool to work with edited versions of Llama by tuning features/concepts.

(I am loosely affiliated, another team at my current employer was involved in this)

Using air purifiers in two Helsinki daycare centers reduced kids' sick days by about 30%, according to preliminary findings from the E3 Pandemic Response study. The research, led by Enni Sanmark from HUS Helsinki University Hospital, aims to see if air purification can also cut down on stomach ailments. https://yle.fi/a/74-20062381

See also tag Air Quality

Update: Based on Jeff Kaufman's post Comparing the AirFanta 3Pro to the Coway AP-1512, I installed an AirFanta on our ceiling (permanently running at a setting low enough that I cannot hear it in the bedroom).

Has anybody ever tried to measure the IQ of a group of people? I mean like letting multiple people solve an IQ test together. How does that scale?

This “c factor” is not strongly correlated with the average or maximum individual intelligence of group members but is correlated with the average social sensitivity of group members, the equality in distribution of conversational turn-taking, and the proportion of females in the group.

I have read (long ago, not sure where) a hypothesis that most people (in the educated professional bubble?) are good at cooperation, but one bad person ruins the entire team. Imagine that for each member of the group you roll a die, but you roll 1d6 for men, and 1d20 for women. A certain value means that the entire team is doomed.

This seems to match my experience, where it is often one specific person (usually male) who changes the group dynamic from cooperation of equals into a kind of dominance contest. And then, even if that person is competent, they have effectively made themselves the bottleneck of the former "hive mind", because now any idea can be accepted only after it has been explained to them in great detail.
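The die-roll hypothesis above can be made concrete with a bit of arithmetic. This is only a sketch under stated assumptions: exactly one face of each die is the "doom" value, rolls are independent, and the function name `p_team_ok` is made up for illustration.

```python
from fractions import Fraction


def p_team_ok(men: int, women: int, men_sides: int = 6, women_sides: int = 20) -> Fraction:
    """Probability that no member rolls the single 'doom' face.

    Assumption (not from any study): one doom face per die, independent rolls.
    """
    p_man_ok = Fraction(men_sides - 1, men_sides)
    p_woman_ok = Fraction(women_sides - 1, women_sides)
    return p_man_ok ** men * p_woman_ok ** women


if __name__ == "__main__":
    # Compare five-person teams with different compositions.
    for men, women in [(5, 0), (3, 2), (0, 5)]:
        print(f"{men} men, {women} women: {float(p_team_ok(men, women)):.2f}")
```

Under these toy numbers, the chance that a team survives drops quickly with size, and the composition matters more than any single member's ability, which is the point of the hypothesis.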

Cognition Labs released a demo of Devin, an "AI coder", i.e., an LLM with agent scaffolding that can build and debug simple applications:

https://twitter.com/cognition_labs/status/1767548763134964000

Thoughts?

What are the smallest world and model trained on that world such that

- the world contains the model,

- the model has a non-trivial reward,

- the representation of the model in the world is detailed enough that the model can observe its reward channel (e.g., weights),

- the model outputs non-trivial actions that can affect the reward (e.g., modify weights).

What will happen? What will happen if there are multiple such instances of the model in the world?

I saw this in Xixidu's feed:

“The information throughput of a human being is about 10 bits/s. In comparison, our sensory systems gather data at an enormous rate, no less than 1 gigabits/s. The stark contrast between these numbers remains unexplained.” https://arxiv.org/abs/2408.10234

The article has a lot of information about the information processing rate of humans. Worth reading. But I think the article is equating two different things:

- The information processing capacity (of the brain; gigabits) is related to the complexity of the environment in which the species (here: the human) lives.

- What they call information throughput (~10 bits/s), by contrast, is really a behavior expression rate, which is related to the physical possibilities of the species (you can't move faster than your motor system allows).

Organizations - firms, associations, etc. - are systems that are often not well-aligned with their intended purpose - whether to produce goods, make a profit, or do good. But specifically, they resist being discontinued. That is one of the aspects of organizational dysfunction discussed in Systemantics. I keep coming back to it as I think it should be possible to study at least some aspects of AI alignment in existing organizations. Not because they are superintelligent but because their elements - sub-agents - are observable, and the misalignment often is too.

UPDATE OCT 2023: The credit card payment was canceled. We did not get contacted or anything. But we also didn't have any cost in the end - just a lot of hassle.

Request for help or advice. My fiancée has ordered a Starlink to her home in Kenya. She used the official platform starlink.com and paid with a credit card. The credit card was debited (~$600), but nothing happened after that. No confirmation mail, no SMS, nothing. Starlink apparently has no customer support, no email or phone that we can reach. And because we do not have an account, we cannot use the...

Language and concepts are locally explainable.

This means that you do not need a global context to explain new concepts but only precursor concepts or limited physical context.

This is related to Cutting Reality at its Joints, which implicitly claims that reality has joints. But if there are no such joints, local explanations may be all we have. At least, they are all we have until we get to a precision that allows cutting at the joints.

Maybe groups of new concepts can be introduced in a way to require fewer (or an optimum number of) dependencies in ...

When discussing the GPT-4o model, my son (20) said that it leads to a higher bandwidth of communication with LLMs and he said: "a symbiosis." We discussed that there are further stages than this, like Neuralink. I think there is a small chance that this (a close interaction between a human and a model) can be extended in such a way that it gets aligned in a way a human is internally aligned, as follows:

This assumes some background about Thought Generator, Thought Assessor, and Steering System from brain-like AGI.

The model is already the Thought Generator. T...

Presumably, reality can be fully described with a very simple model - the Standard Model of Physics. The number of transistors to implement it is probably a few K (the field equations are smaller to write but depend on math to encode too; Turing machine size would also be a measure, but transistors are more concrete). But if you want to simulate reality at that level, you need a lot of them for all the RAM, and it would be very slow.

So we build models that abstract large parts of physics away - atoms, molecules, macroscopic mechanics. I would include even soc...

Between Entries

[To increase immersion, before reading the story below, write one line summing up your day so far.]

From outside, it is only sun through drifting rain over a patch of land, light scattering in all directions. From where one person stops on the path and turns, those same drops and rays fold into a curved band of color “there” for them; later, on their phone, the rainbow shot sits as a small rectangle in a gallery, one bright strip among dozens of other days.

From outside, a street is a tangle of façades, windows, people, and signs. From where a...

The Hamburg Declaration on Responsible AI for the Sustainable Development Goals

aims to establish a shared, voluntary framework so that artificial intelligence advances, rather than derails, the UN 2030 Agenda ("SDG")

Note about conflict of interest: My wife is a liaison officer at the HSC conference.

Many people expect 2025 to be the Year of Agentic AI. I expect that too - at least in the sense of it being a big topic. But I expect people to eventually be disappointed. Not because the AI is failing to make progress, but for more subtle reasons. These agents will not be aligned well - because, remember, alignment is an unsolved problem. People will not trust them enough.

I'm not sure how the dynamic will unfold. There is trust in the practical abilities. Right now, it is low, but that will only go up. There is trust in the agent itself: Will it do ...

Attractors in Trains of Thought

This is a slightly extended version of my comment on Idea Black Holes, which I want to give a bit more visibility.

The prompt of an Idea Black Hole reminded me strongly of an old idea of mine. That activated a desire to reply, which led to a quick search where I had written about it before, then to the realization that it wasn't so close. Then back to wanting to write about it and here we are.

I have been thinking about the brain's way of creating a chain of thoughts as a dynamic process where a "current thought" moves around a co...

I have noticed a common pattern in the popularity of some blogs and webcomics. The search terms in Google trends for these sites usually seem to follow a curve that looks roughly like this (a logistic increase followed by a slower exponential decay):

Though I doubt it's really an exponential decay. It looks more like a long tail. Maybe someone can come up with a better fit.

It could be that the decay just seems like a decay and actually results from ever growing Google search volumes. I doubt it though.
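To make the curve shape above concrete, here is a minimal sketch of "logistic rise times decay", with both an exponential and a long-tail (power-law) variant to compare. All names and parameter values (`popularity`, `t_rise`, `steepness`, `half_life`, `alpha`) are made up for illustration and are not fitted to any Google Trends data.

```python
import math


def popularity(t, t_rise=12.0, steepness=0.5, half_life=36.0,
               alpha=1.0, peak=100.0, decay="exp"):
    """Toy popularity curve: logistic increase multiplied by a slower decay.

    t is time in months; every parameter is an illustrative assumption.
    decay="exp" gives exponential decay, decay="power" a long-tail power law.
    """
    rise = 1.0 / (1.0 + math.exp(-steepness * (t - t_rise)))
    elapsed = max(t - t_rise, 0.0)  # decay only starts once the rise is under way
    if decay == "exp":
        fall = 0.5 ** (elapsed / half_life)
    elif decay == "power":
        fall = (1.0 + elapsed / half_life) ** (-alpha)
    else:
        raise ValueError("decay must be 'exp' or 'power'")
    return peak * rise * fall


if __name__ == "__main__":
    for month in (0, 12, 24, 60, 120, 200):
        print(month,
              round(popularity(month, decay="exp"), 1),
              round(popularity(month, decay="power"), 1))
```

Fitting both variants to the same series and comparing residuals in the tail would be one way to check whether the decay is really exponential or closer to a long tail.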

Below are some examples.

Marginal Revolut...

Off-topic: Any idea why African stock markets have been moving sideways for years now despite continued growth of both populations and technology, and this for struggling nations as well as more developed ones like Kenya, Nigeria, or even South Africa?

jbash wrote in the context of an AGI secretly trying to kill us:

Powerful nanotech is likely possible. It is likely not possible on the first try

The AGI has the same problem as we have: It has to get it right on the first try.

In the doom scenarios, this shows up as the probability of successfully escaping going from low to 99% to 99.999...%. The AGI must get it right on the first try and wait until it is confident enough.

Usually, the stories involve the AGI cooperating with humans until the treacherous turn.

The AGI can't trust all the information it g...

One of the worst things about ideology is that it makes people attribute problems to the wrong causes. E.g. plagues are caused by sin. This is easier to see in history, but it still happens all the time. And if you get the cause wrong, you have no hope of fixing the problem.

Scott Alexander wrote about how a truth that can't be said in a society tends to warp it, but I can't find it. Does anybody know the SSC post?

Mesa-optimizers are the easiest case of detectable agency in an AI. There are more dangerous cases. One is Distributed agency, where the agent is spread across tooling, models, and maybe humans or other external systems, and the gradient driving the evolution is the combination of the local and overall incentives.

Mesa-Optimization is introduced in Risks from Learned Optimization: Introduction and probably because it was the first type of learned optimization, it has driven much of the conversation. It makes some implicit assumptions: that learned optimizat...

Want to make a decision with a quantum coin flip, i.e., one that will send you off into both Everett branches? Here you go:

Why is the risk of human extinction hard to understand? Risk from a critical reactor or atmospheric ignition was readily seen by the involved researchers. Why not for AI? Maybe the reason is inscrutable stacks of matrices instead of the comparatively simple physical equations that described those phenomena. Mechinterp does help because it provides a relation between the weights and understandable phenomena. But I wonder if we can reduce the models to a minimal reasoning model that doesn't know much about the world or even language but learns only to reason with minimal...

Can somebody explain how system and user messages (as well as custom instructions in case of ChatGPT) are approximately handled by LLMs? In the end it's all text tokens, right? Is the only difference that something like "#### SYSTEM PROMPT ####" is prefixed during training and then inference will pick up the pattern? And does the same thing happen for custom instructions? How did they train that? How do OSS models handle such things?
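As a partial illustration of the "it's all text tokens" intuition, here is a sketch of how a chat template can flatten role-tagged messages into one string before tokenization. It assumes ChatML-style markers (`<|im_start|>`, `<|im_end|>`); other models use different delimiters, the markers are typically dedicated special tokens rather than ordinary text, and the helper name `render_chat` is hypothetical. How ChatGPT handles custom instructions internally is not something this sketch claims to show.

```python
def render_chat(messages, add_generation_prompt=True):
    """Flatten a list of {'role', 'content'} dicts into one prompt string.

    Assumption: ChatML-style delimiters. Real models may use other markers.
    """
    parts = []
    for message in messages:
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Open an assistant turn so the model continues from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = render_chat([
        {"role": "system", "content": "You are a concise assistant."},
        {"role": "user", "content": "What is a chat template?"},
    ])
    print(prompt)
```

Many open-weight models ship such a template with their tokenizer (in Hugging Face Transformers it is applied via `tokenizer.apply_chat_template`), so the same formatting is used during fine-tuning and at inference.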

Paul Graham:

I don't publish essays I write for myself. If I did, I'd feel constrained writing them. -- https://mobile.twitter.com/paulg/status/1500578430907207683

This is related to the recently discussed (though I can't find where) problem that having a blog and growing audience constrains you.

Utility functions are a nice abstraction over what an agent values. Unfortunately, when an agent changes, so does its utility function.

I'm leaving this here for now. May expand on it later.

Team Flow Is a Unique Brain State Associated with Enhanced Information Integration and Interbrain Synchrony

...It's also possible to experience 'team flow,' such as when playing music together, competing in a sports team, or perhaps gaming. In such a state, we seem to have an intuitive understanding with others as we jointly complete the task at hand. An international team of neuroscientists now thinks they have uncovered the neural states unique to team flow, and it appears that these differ both from the flow states we experience as individuals, and from the

An Alignment Paradox: Experience from firms shows that higher levels of delegation work better (high level meaning fewer constraints for the agent). This is also very common practical advice for managers. I have also received this advice myself and seen this work in practice. There is even a management card game for it: Delegation Poker. This seems to be especially true in more unpredictable environments. Given that we have intelligent agents, giving them higher degrees of freedom seems to imply more ways to cheat, defect, or ‘escape’. Even more so in envir...

I was a team leader twice. The first time it happened by accident. There was a team leader, three developers (me one of them), and a small project was specified. On the first day, something very urgent happened (I don't remember what), the supposed leader was re-assigned to something else, and we three were left without supervision for an unspecified period of time. Being the oldest and most experienced person in the room, I took the initiative and asked: "so, guys, as I see it, we use an existing database, so what needs to be done is: back-end code, front-end code, and some stylesheets; anyone has a preference which part he would like to do?" And luckily, each of us wanted to do a different part. So the work was split, we agreed on mutual interfaces, and everyone did his part. It was a nice and relaxed environment: everyone working alone at their own speed, debating work only as needed, and having some friendly work-unrelated chat during breaks.

In three months we had the project completed; everyone was surprised. The company management assumed that we will only "warm up" during those three months, and when the original leader returns, he will lead us to the glorious results. (In a parallel Ev...

It turns out that the alignment problem has some known solutions in the human case. First, there is an interesting special case namely where there are no decisions (or only a limited number of fully accounted for decisions) for the intelligent agent to be made - basically throwing all decision-making capabilities out of the window and only using object recognition and motion control (to use technical terms). With such an agent (we might call it zero-decision agent or zero-agent) scientific methods could be applied on all details of the work process and hig...

AI Alignment is now less about aligning an existing agent (reducing rogue objectives in the LLM) and more about discovering where agency exists beyond a single model.

Finding the agent and its objectives in multi-agent, maybe partially human, partially institutional structures.

Alignment may be less about “aligning agents” and more about discovering where the agents are. The critical agent may not be the entity that is designed (e.g., an LLM chat), but the hybrid entity resulting from multiple heavily scaffolded LLM loops across multiple systems.

I'm looking for a video of AI gone wrong illustrating AI risk and unusual persuasion. It starts with a hall with blinking computers where an AI voice is manipulating a janitor, and it ends with a plane crashing and other emergencies. I think it was made between 2014 and 2018 and linked on LW, but I can't google, perplex, or o3 it. Any ideas?

[Linkpost] China's AI OVERPRODUCTION

China seeks to commoditize their complements. So, over the following months, I expect a complete blitz of Chinese open-source AI models for everything from computer vision to robotics to image generation.

If true, what effects would that have on the AI race and AI governance?

One big element of the dangers of unaligned AI is that it acts as a coherent entity, an agent that has agency and can do things. We could try to remove this property from the models, for example, by gradient routing and ablating. But agents are useful. We want to give the LM tasks that it executes on our behalf. Can we give tasks to them without them being a coherent unit that has potential goals of its own? I think it should be possible to shape the model in a way that it has a reduced form of agency. What forms could this agency take?

- Oracle

Just came across Harmonic mentioned on the AWS Science Blog. Sequoia Capital interview with the founders of Harmonic (their system which generates Lean proofs is SOTA for MiniF2F):

Here are some aspects or dimensions of consciousness:

- Dehaene's Phenomenal Consciousness: A perception or thought is conscious if you can report on it. Requires language or measuring neural patterns that are similar to humans during comparable reports. This can be detected in animals, particularly mammals.

- Gallup's Self-Consciousness: Recognition of oneself, e.g., in a mirror. Requires sufficient sensory resolution and intelligence for a self-model. Evident in great apes, elephants, and dolphins.

- Sentience (Bentham, Singer): Behavioral responses to pleasure o

Why are there mandatory licenses for many businesses that don't seem to have high qualification requirements?

Patrick McKenzie (@patio11) suggests on Twitter that one aspect is that it prevents crime:

...Part of the reason for licensing regimes, btw, isn’t that the licensing teaches you anything or that it makes you more effective or that it makes you more ethical or that it successfully identifies protocriminals before they get the magic piece of paper.

It’s that you have to put a $X00k piece of paper at risk as the price of admission to the chance of doi

On Why do so many think deception in AI is important? I commented and am reposting here because I think it's a nice example (a real one I heard) as an analogy of how deception is not needed for AI to break containment:

...Two children locked their father in one room by closing the door, using the key to lock the door, and taking the key. And then making fun of him inside, confident that he wouldn't get out (the room being on the third floor). They were mortally surprised when a minute later he was appearing behind them having opened a window and found a

Adversarial Translation.

This is another idea to test deception in advisory roles like in Deception Chess.

You could have one participant trying to pass an exam/test in a language they don't speak and three translators (one honest and two adversarial as in deception chess) assisting in this task. The adversarial translators try to achieve lower scores without being discovered.

Alternative - and closer to Deception Chess - would be two players and, again, three advisors. The players would speak different languages, the translators would assist in translation, ...

Hi, I have a friend in Kenya who works with gifted children and would like to get ChatGPT accounts for them. Can anybody get me in touch with someone from OpenAI who might be interested in supporting such a project?

I have been thinking about the principle Paul Graham used in Y combinator to improve startup funding:

all the things [VCs] should change about the VC business — essentially the ideas now underlying Y Combinator: investors should be making more, smaller investments, they should be funding hackers instead of suits, they should be willing to fund younger founders, etc. -- http://www.paulgraham.com/ycstart.html

What would it look like if you would take this to its logical conclusion? You would fund even younger people. Students that are still in high ...

If you want to give me anonymous feedback, you can do that here: https://www.admonymous.co/gunnar_zarncke

You may have some thoughts about what you liked or didn’t like but didn’t think it worth telling me. This is not so much about me as it is for the people working with me in the future. You can make life easier for everybody I interact with by giving me quick advice. Or you can tell me what you liked about me to make me happy.

Preferences are plastic; they are shaped largely by...

...the society around us.

From a very early age, we look to see who around us other people are looking at, and we try to copy everything about those high prestige folks, including their values and preferences. Including perception of pleasure and pain.

Worry less that future folks will be happy. Even if it seems that future folks will have to do or experience things that we today would find unpleasant, future culture could change people so that they find these new things pleasant instead.

From Robin Ha...

Insights about branding, advertising, and marketing.

It is a link that was posted internally by our brand expert and that I found full of insights into human nature and persuasion. It is a summary of the book How Not to Plan: 66 Ways to Screw it Up:

https://thekeypoint.org/2020/03/10/how-not-to-plan-66-ways-to-screw-it-up/

(I'm unaffiliated)

Roles serve many functions in society. In this sequence, I will focus primarily on labor-sharing roles, i.e. roles that serve splitting up productive functions as opposed to imaginary roles e.g. in theater or play. Examples of these roles are (ordered roughly by how specific they are):

- Parent

- Engineer (any kind of general type of job)

- Battery Electronics Engineer (any kind of specific job description)

- Chairman of a society/club

- Manager for a certain (type of) project in a company

- Member in an online community

- Scrum master in an agile team

- Note-taker in a meeting

Yo...

Roles are important. This shortform is telling you why. An example: The role of a moderator in an online forum. The person (in the following called agent) acting in this role is expected to perform certain tasks - promote content, ban trolls - for the benefit of the forum. Additionally, the agent is also expected to observe limits on these tasks e.g. to refrain from promoting friends or their own content. The owners of the forum and also the community overall effectively delegate powers to the agent and expect alignment with the goals of the forum. This is an alignment problem that has existed forever. How is it usually solved? How do groups of people or single principals use roles to successfully delegate power?

Interest groups without an organizer.

This is a product idea that solves a large coordination problem. With billions of people, there could be a huge number of groups of people sharing multiple interests. But currently, the number of valuable groups of people is limited by a) the number of organizers and b) the number of people you meet via a random walk. Some progress has been made on (b) with better search, but it is difficult to make (a) go up because of human tendencies - most people are lurkers - and the incentive to focus on one area to stand out. So what...

I had a conversation with ChatGPT-4 about what is included in it. I did this because I wondered how an LLM-like system would define itself. While identity is relatively straightforward for humans - there is a natural border (though some people would only include their brain or their mind in their identity) - it is not so clear for an LLM. Below is the complete unedited dialog:

Me: Define all the parts that belong to you, the ChatGPT LLM created by OpenAI.

ChatGPT: As a ChatGPT large language model (LLM) created by OpenAI, my primary components can be divided...

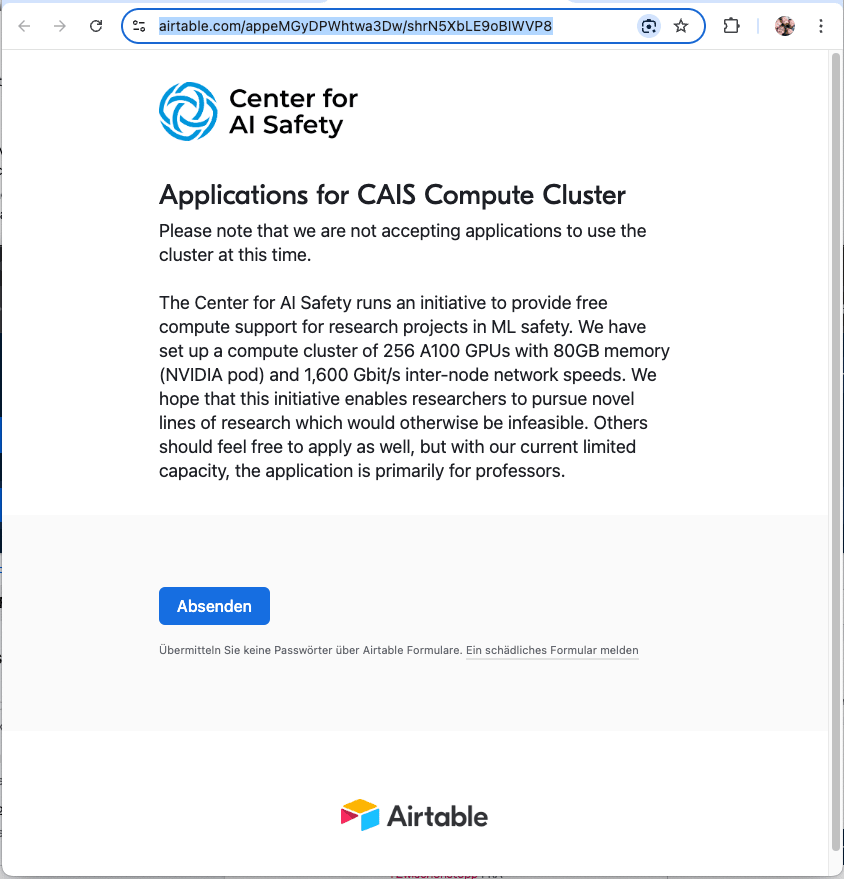

Can somebody get me in touch with somebody from the Center for AI Safety (safe.ai)? Their page for applying for compute resources seems broken. I have used their contact form to report the issue on April 7th, but received no reply.

This is what the application page has looked like at least since then (linked from their Compute Cluster page):

As you can see, there is no form field to enter and only a lone "Absenden" button, which is German and means "submit" (which is strange because my system and browser are set to English). If I click that button, I get this mess...

LLMs necessarily have to simplify complex topics. The output for a prompt cannot represent all they know about some fact or task. Even if the output is honest and helpful (ignoring harmless for now), the simplification will necessarily obscure some details of what the LLM "intends" to do - in the sense of satisfying the user request. The model is trained to get things done. Thus, the way it simplifies has a large degree of freedom and gives the model many ways to achieve its goals.

You could think of a caring parent who tells the child a simplified ve...

Is anybody aware of any updates on Logical Induction, published in 2016? I would expect implementations in Lean by now.

Instrumental power-seeking might be less dangerous if the self-model of the agent is large and includes individual humans, groups, or even all of humanity and if we can reliably shape it that way.

It is natural for humans to form a self-model that is bounded by the body, though it is also common for it to be only the brain or the mind, and there are other self-models. See also Intuitive Self-Models.

It is not clear what the self-model of an LLM agent would be. It could be

- the temporary state of the execution of the model (or models),

- the persistently running mode

I'm discarding most ChatGPT conversations except for a few, typically 1-2 per day. These few fall into these categories:

- conversations that led to insights or things I want to remember (examples: The immune function of tonsils, Ringwoodite transformation and the geological water cycle, oldest religious texts)

- conversations that I want to continue (examples: Unusual commitment norms)

- conversations that I expect to follow up on (a chess book for my son)

- conversations with generated images that I want to keep and haven't yet copied elsewhere

Most job-related queri...

It would be nice if one could subscribe to a tag and get notified if a page is tagged with that tag.

It's maybe a bit extreme precaution, but it may be a legit option in some places: This guy keeps a fireproof suit and an air canister at his bed in case of fire:

Does anybody know if consensus algorithms have been proposed that try to reduce centralization by requiring quick coordination across large parts of the network, i.e., it doesn't work well to have machines only in one place?

There is no difference at the hardware level between being 'close to' and 'having a low-latency connection to', as I already explained. And to the extent that having those connections matter, miners already have them. In particular, in Ethereum, due to the money you can make by frontrunning transactions to hack/exploit them ('miner exploitable value'), HFT Ethereum miners/stakers invest heavily in having a lot of interconnected low-latency Sybils nodes so they can see unconfirmed transactions as quickly as possible, compute a maximally-exploitative block (eg. temporarily jacking up the price of a thing being purchased using a flash loan solely to rip off a specific transaction), and get that block committed before anyone can beat them to the same exploit. Having a lot of MEV is considered a bad thing and Ethereum types are spending increasing effort on approaches like commit-and-reveal to minimize MEV, which comes at the expense of users and makes them very unhappy. You could, I suppose, design a protocol which has extra MEV by designing transactions to be especially exploitable, but most people would consider that a bad thing...

There seem to be some court decisions that AI-generated material cannot have copyright. And I guess there are or will be decisions that AIs can't be held responsible for outcomes, such as accidents from self-driving cars. People seem to be worried that this will slow down progress. But I think there is a potentially easy way out that is relatively general: Corporations. The law in most countries allows legal persons that are not natural persons to take responsibility and to own stuff. Just let the AI control the corporation or at least route all AI actions through the corporation. This should easily work for cases such as copyrighted material, though I'm not sure one can get the action cycle short enough for self-driving cars.

Would it be possible to embed a seed of dath ilan or a social system like that in our existing society to grow it? If dath ilan works, that should be possible. It should outcompete Inadequate Equilibria, right? But: When do Be the change that you want to see in the world and Fake it till you make it work? What are the requirements? Higher intelligence?

I have written about embedding better social systems here and here before.

Someone asked an LLM about startups. For example:

Q: Should a founder move into their parents' basement and live off ramen?

A: If a founder is willing to move into their parents' basement and live off ramen in order to save money and grow their business, then yes, they should do so.

Paul Graham got interested and asked:

I'd be interested to hear the answer to "What has Paul Graham been wrong about?"

LLM:

...A: Paul Graham has been wrong about a few things, but the most notable is his belief that the best startups are founded by young people. This has been pro

Alignment idea: Myopic AI is probably much safer than non-myopic AI. But it can't get complicated things done or anything that requires long-term planning. Would it be possible to create a separate AI that can solve only long-term problems and not act on short timescales? Then use both together? That way we could inspect each long-term issue without risk of it leading to short-term consequences. And we can iterate on the myopic solutions - or ask the long-term AI about the consequences. There are still risks we might not understand, like johnswentworth's gunpowder example. And the approach is complicated, which also makes it harder to get right.

There was a post or comment that wrong or controversial beliefs can function as a strong signal for in-group membership, but I can't find it. Does anybody know?

From a discussion about self-driving cars and unfriendly AI with my son: For a slow take-off, you could have worse starting points than FSD: The objective of the AI is to keep you safe, get you where you want, and not harm anybody in the process. It is also embedded into the real world. There are still infinitely many ways things can go wrong, esp. with a fast take-off, but we might get lucky with this one slowly. If we have to develop AI then maybe better this one than a social net optimizing algorithm unmoored from human experience.

What is good?

A person who has not yet figured out that collaborating with other people has mutual benefits must think that good is what is good for a single person. This makes it largely a zero-sum game, and such a person will seem selfish - though what can they do?

A person who understands that relationships with other people have mutual benefits but has not figured out that conforming to a common ruleset or identity has benefits for the group must think that what is good for the relationship is good for both participants. This can pit relationships agains...

From my Anki deck:

Receiving touch (or really anything personal) can be usefully grouped in four ways:

Serve, Take, Allow, and Accept

(see the picture or the links below).

A reminder that there are two sides and many ways for this to go wrong if there is not enough shared understanding of the exchange.

From my Anki deck:

Mental play or offline habit training is...

...practicing skills and habits only in your imagination.

Rehearsing motions or recombining them.

Imagine some triggers and plan your reaction to them.

This will apparently improve your real skill.

Links:

https://en.wikipedia.org/wiki/Motor_imagery

From my Anki deck:

Aaronson Oracle is a program that predicts the next key you will type when asked to type randomly and shows how often it is right.

https://roadtolarissa.com/oracle

Here is Scott Aaronson's comment about it:

...In a class I taught at Berkeley, I did an experiment where I wrote a simple little program that would let people type either “f” or “d” and would predict which key they were going to push next. It’s actually very easy to write a program that will make the right prediction about 70% of the time. Most people don’t really know how to type ra
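A minimal sketch of how such a predictor could work, assuming it simply counts which key followed each recent run of keys and guesses the more frequent one. The class name `KeyOracle`, the context length of 5, and the tie-breaking rule are my assumptions for illustration, not necessarily what Aaronson's program or the linked page does.

```python
from collections import defaultdict


class KeyOracle:
    """Guess the next of two keys from what followed the same recent context before."""

    def __init__(self, keys=("f", "d"), context_len=5):
        self.keys = keys
        self.context_len = context_len  # assumed window size, not from the source
        # For each context string, how often each key followed it.
        self.counts = defaultdict(lambda: {k: 0 for k in keys})
        self.history = ""
        self.correct = 0
        self.total = 0

    def predict(self):
        context = self.history[-self.context_len:]
        seen = self.counts[context]
        # Ties (including never-seen contexts) fall back to the first key.
        return max(self.keys, key=lambda k: seen[k])

    def observe(self, key):
        """Record the key actually typed and score the guess made beforehand."""
        guess = self.predict()
        self.total += 1
        self.correct += guess == key
        context = self.history[-self.context_len:]
        self.counts[context][key] += 1
        self.history += key
        return guess

    def accuracy(self):
        return self.correct / self.total if self.total else 0.0


def run(sequence, context_len=5):
    """Feed a whole string of key presses and return the fraction guessed right."""
    oracle = KeyOracle(context_len=context_len)
    for key in sequence:
        oracle.observe(key)
    return oracle.accuracy()
```

Feeding it a strictly alternating string such as `run("fd" * 50)` gives an accuracy far above chance, because every context quickly gets a consistent successor. Truly random input should hover around 50%, and the claim in the quote is that human "random" typing lands well above that.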

Slices of joy is a habit to...

feel good easily and often.

- Some small slice of good happens

- Notice it consciously.

- Enjoy it in a small way.

This is a trigger, a routine, and a reward: the three parts necessary to build a habit. The trigger is the pleasant moment, the routine is noticing it, and the reward is the feeling of joy itself.

Try to come up with examples; here are some:

- Drinking water.

- Eating something tasty

- Seeing small children

- Feeling of cold air

- Warmth of sunlight

- Warmth of water, be it bathing, dishwashing, etc.

-

Refreshing your memory:

What is signaling, and what properties does it have?

- signaling clearly shows resources or power (that is its primary purpose)

- is hard to fake, e.g., because it incurs a loss (expensive Swiss watch) or risk (peacock's tail)

- plausible deniability that it is intended as signaling

- mostly zero-sum on the individual level (if I show that I have more, it implies that others have less in relation)

- signaling burns societal resources

- signaling itself can't be made more efficient, but the resources spent can be used more efficiently in soc

What is the Bem Test or Open Sex Role Inventory?

It is a scientific test that measures gender stereotypes.

The test asks questions about traits that are classified as feminine, masculine, and neutral. Unsurprisingly, women score higher on feminine traits and men on masculine traits, but Bem thought that strong feminine *and* masculine traits would be most advantageous for both genders.

My result is consistently average femininity and slightly below average masculinity. Yes, really. I have done the test 6 times since 2016, and the two online tests mostly agree. And it fits:

What is a Blame Hole (a term by Robin Hanson)?

Blame holes in blame templates (the social fabric of acceptable behavior) are like plot holes in movies.

Deviations between what blame templates actually target, and what they should target to make a better (local) world, can be seen as “blame holes”. Just as a plot may seem to make sense on a quick first pass, with thought and attention required to notice its holes, blame holes are typically not noticed by most who only work hard enough to try to see if a particular behavior fits a blame template. While many ar

Leadership Ability Determines a Person's Level of...

Effectiveness.

(Something I realized around twelve years ago: I was limited in what I could achieve as a software engineer alone. That was when I became a software architect and worked with bigger and bigger teams.)

From "The 21 Irrefutable Laws of Leadership" by John C. Maxwell:

Factors That Make a Leader

1) Character – Who They Are – true leadership always begins with the inner person. People can sense the depth of a person's character.

2) Relationships – Who They Know – with deep relationships with the right ...

To achieve objective analysis, analysts do not avoid what?

Analysts do not achieve objective analysis by avoiding preconceptions; that would be ignorance or self-delusion. Objectivity is achieved by making basic assumptions and reasoning as explicit as possible so that they can be challenged by others and analysts can, themselves, examine their validity.

PS. Any idea how to avoid the negation in the question?

I started posting life insights from my Anki deck on Facebook a while ago. Yesterday, I stumbled over the Site Guide and decided that these could very well go into my ShortForm too. Here is the first:

Which people who say that they want to change will actually do so?

People who blame a part of themselves for a failure do not change.

If someone says, "I've got a terrible temper," he will still hit. If he says, "I hit my girlfriend," he might stop.

If someone says, "I have shitty executive function," he will still be late. If he says, "I broke my

I'm looking for a post on censorship bias (see Wikipedia) that was posted here on LW or possibly on SSC/ACX, but a search for "censorship bias" doesn't turn up anything. Googling for it turns up this:

Can anybody help?

Philosophy with Children - In Other People's Shoes

"Assume you promised your aunt to play with your nieces while she goes shopping and your friend calls and invites you to something you'd really like to do. What do you do?"

This was the first question I asked my two oldest sons this evening as part of the bedtime ritual. I had read about Constructive Development Theory and wondered if and how well they could place themselves in other persons' shoes and what played a role in their decision. How they'd deal with it. A good occasion to have some philosophical t...

Philosophy with Children - Mental Images

One time my oldest son asked me to test his imagination. Apparently, he had played around with it and wanted some outside input to learn more about what he could do. We had talked about https://en.wikipedia.org/wiki/Mental_image before and I knew that he could picture moving scenes composed of known images. So I suggested

- a five with green white stripes - diagonally. That took some time - apparently, the green was difficult for some reason, he had to converge there from black via dark-green

- three mice

- three mice,

Origins of Roles

The word role originated in the early 17th century: from French rôle, from obsolete French roule ‘roll’, referring originally to the roll of paper on which the actor's part was written (the same is the case in other languages, e.g., German).

The concept of a role you can take on and off might not have existed in general use long before that. I am uncertain about this thesis but from the evidence I have seen so far, I think this role concept could be the result of the adaptations to the increasing division of labor. Before that peop...

The Cognitive Range of Roles

A role occupies a range of abstraction between professions and automation. In a profession, one person masters all the mental and physical aspects of a trade and can apply them holistically, from the small details of handling material imperfections to the organization of the guild. At the border to automation, a worker is reduced to an executor of not yet automated tasks. The expectations of a master craftsman are much more complex than those of an assembly-line worker.

With more things getting automated this frees the capacity to automate m...

When trying to get an overview of what is considered a role I made this table:

| Type of role | Example | Purpose | Distinction | CDF Level |
| --- | --- | --- | --- | --- |
| (Children's) play acting | Cop, Father | Play, imitation, learning | Shallow copy, present in higher animals | Impulsive (1) to Instrumental (2) |
| Social role | Mother, Husband | Elementary social function | Since the ancestral environment, closely moderated by biological function | Instrumental (2) to Socialized (3) |
| Occupation (rarely called role but shares traits) | Carpenter | Getting things done in a simple society | Rarely changed, advancement possible (appren |

In any sizable organization, you can find a lot of roles. And a lot of people filling these roles - often multiple ones on the same day. Why do we use so many and fine-grained roles? Why don’t we continue with the coarse-grained and more stable occupations? Because the world got more complicated and everybody got more specialized and roles help with that. Division of labor means breaking down work previously done by one person into smaller parts that are done repeatedly in the same way - and can be assigned to actors: “You are now the widget-maker.” This w...

What are the common aspects of these labor-sharing roles (in the following called simply roles)?

One common property of a role is that the people involved have common knowledge about it. Primarily, this shared understanding is about the tasks that the agent acting in the role can be expected to perform, as well as the goals to be achieved, the limits to be observed, and other expectations. These expectations are usually either common knowledge long beforehand or established when the agent takes on the role.

The s...

[provided as is, I have no strong opinion on it, might provide additional context for some]

The Social Radars episode on Sam Altman:

Carolynn and I have known Sam Altman for his whole career. In fact it was Sam who introduced us. So today's Social Radars is a special one. Join us as we talk to Sam about the inside story of his journey from Stanford sophomore to AI mogul.

I'm posting this specifically because there is some impression that OpenAI and Sam Altman are not good but self-interested, esp. voiced by Zvi.

...One of the thi