We have finally solved an age old problem in philosophy:

- Gemini 3 pro is 1.2 cents per thousand tokens.

- Gemini 3 pro image is 13.4 cents per image.

Therefore an image is worth 11167 words, not 1000 as the classicists would have it.

Makes total sense: AI images are higher resolution than classical pictures (which are limited by the dexterity of the painter), so you're basically getting 11.17 pictures in each.
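For anyone who wants to check the arithmetic, here is a minimal sketch using only the two prices quoted above. The variable names are made up for illustration, and "token" is treated as "word" purely for the sake of the joke.

```python
# Prices as quoted in the post above (in cents).
CENTS_PER_THOUSAND_TOKENS = 1.2
CENTS_PER_IMAGE = 13.4

# How many tokens cost the same as one image.
tokens_per_image = CENTS_PER_IMAGE / CENTS_PER_THOUSAND_TOKENS * 1000

# How many "classical" 1000-word pictures that corresponds to.
classical_pictures_per_image = tokens_per_image / 1000

print(round(tokens_per_image))                 # tokens worth one image
print(round(classical_pictures_per_image, 2))  # classical pictures per AI image
```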

Pricing is linear in tokens even though the actual cost is quadratic in the number of tokens. That means the pricing is some approximate curve fit to expected use. I would be curious about where the actual cost curve for tokens intersects the actual cost curve for a single image.

Most murder mysteries on TV tend to have a small number of suspects, and the trick is to find which one did it. I get the feeling that in real-life murders the police either have absolutely no idea who did it, or know exactly who did it and just need to prove it to the satisfaction of a court of law.

That explains why forensic tests (e.g. fingerprints) are used despite being pretty suspect. They convince the jury that the guilty guy did it, which is all that matters.

See https://issues.org/mnookin-fingerprints-evidence/ for more on fingerprints.

Has anyone looked into the recent Chinese paper claiming to have reversed aging in monkeys?

Is it real or BS?

It seems LLMs are less likely to hallucinate answers if you end each question with 'If you don't know, say "I don't know"'.

They still hallucinate a bit, but less. Given how easy it is, I'm surprised OpenAI and Microsoft don't already do that.

This has its own failure modes. What does it even mean not to know something? It is just yet another category of possible answers.

Still a nice prompt. Also works on humans.

Quick thoughts on Gemini 3 pro:

It's a good model sir. Whilst it doesn't beat every other model on everything, it has definitely pushed the Pareto frontier a step further out.

It hallucinates pretty badly. ChatGPT 5 did too when it was released; hopefully they can fix this in future patches and it's not inherent to the model.

To those who were hoping/expecting us to have hit a wall: that clearly hasn't happened yet (although neither have we proved that LLMs can take us all the way to AGI).

Costs are slightly higher than 2.5-pro, much higher than GPT 5.1, and none of Google's models have seen any price reduction in the last couple of years. This suggests that it's not quickly getting cheaper to run a given model, and that pushing the Pareto frontier forward is costing ever more in inference. (However, we are learning how to get more intelligence out of a fixed size with newer small models.)

I would say Google currently has the best image models and the best LLM, but that doesn't prove they're in the lead. I expect OpenAI and Anthropic to drop new models in the next few months, and Google won't release a new one for another 6 months at best. Its lead is not strong enough to last that long.

However, we can firmly say that Google is capable of creating SOTA models that give OpenAI and Anthropic a run for their money, something many were doubting just a year ago.

Google has some tremendous structural advantages:

- independent training and inference stack with TPUs, JAX, etc. It is possible they can do ML at a scale and price point no one else can achieve.

- trivial distribution. If Google comes up with a good integration they have dozens of products where they can instantly push it out to hundreds of millions of people (monetising is a different question).

- deep pockets. No immediate need to generate a profit, or beg investors for money.

- lots of engineers. This doesn't help with the core model, but does help with integrations and RLHF.

Now that they've proven they can execute, they should likely be considered frontrunners for the AI race.

On the other hand, ChatGPT has much greater brand recognition, and LLM usage is sticky. Things aren't looking great for Anthropic though, with neither deep pockets nor high usage.

In terms of existential risk: this is likely to make the race more desperate, which is unlikely to lead to good things.

Running trains more frequently can reduce reliability:

Consider a train line that takes 200 minutes to travel. Assume trains break down once every hundred journeys, and take 4 hours to clear. When a train breaks down, no other trains can pass it.

Now consider the two extremes:

If there's one train every 2 minutes the train line will essentially always have one broken train, and travelling the line will likely take about 7+ hours.

Meanwhile if there's just 1 train going back and forth you'll have 1 delayed train every month, which will delay people for 4 hours. You're still better off in this scenario than the previous one.

The sweet spot in terms of average transit time is closer to a train every 2 minutes than a train every 400 minutes, but the sweet spot for predictability of the service will have fewer, more reliable trains.
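To make the two extremes concrete, here is a rough back-of-the-envelope sketch using only the numbers from the setup above. It is not a proper queueing model: it just compares how long a breakdown takes to clear against how often breakdowns start, and the function names are invented for illustration. A ratio above 1 means a new breakdown typically starts before the last one is cleared.

```python
# Numbers taken from the setup above.
JOURNEYS_PER_BREAKDOWN = 100   # one breakdown per hundred journeys
CLEARANCE_MINUTES = 4 * 60     # a broken train blocks the line for 4 hours


def minutes_between_breakdowns(minutes_between_journeys: float) -> float:
    """Average gap between breakdowns, given how often a journey starts."""
    return minutes_between_journeys * JOURNEYS_PER_BREAKDOWN


def blockage_ratio(minutes_between_journeys: float) -> float:
    """Clearance time divided by the average gap between breakdowns.

    Crude assumption: breakdowns arrive at a steady average rate and
    trains keep departing on schedule even while the line is blocked.
    """
    return CLEARANCE_MINUTES / minutes_between_breakdowns(minutes_between_journeys)


# A train every 2 minutes: breakdowns start faster than they are cleared.
print(minutes_between_breakdowns(2), blockage_ratio(2))

# One train going back and forth: a new 200-minute journey every 200 minutes
# of continuous running, so the line is blocked only a small fraction of the time.
print(minutes_between_breakdowns(200), blockage_ratio(200))
```

With a train every 2 minutes the ratio comes out above 1, which matches the "essentially always one broken train" picture. With a single train the gap works out to 20,000 minutes of continuous running, so how that maps to calendar time depends on the hours of service per day.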

As anecdotal evidence, I notice that the Northern Line frequently had breakages and had a train every 2-6 minutes, and Israel Railways very rarely has breakages and has a train twice an hour on my line.

This all points to both investing a lot of effort into train reliability and running fewer, longer trains.

I don't think it's accurate to model breakdowns as a linear function of journeys or train-miles unless irregular effects like extreme weather are a negligible fraction of breakdowns.

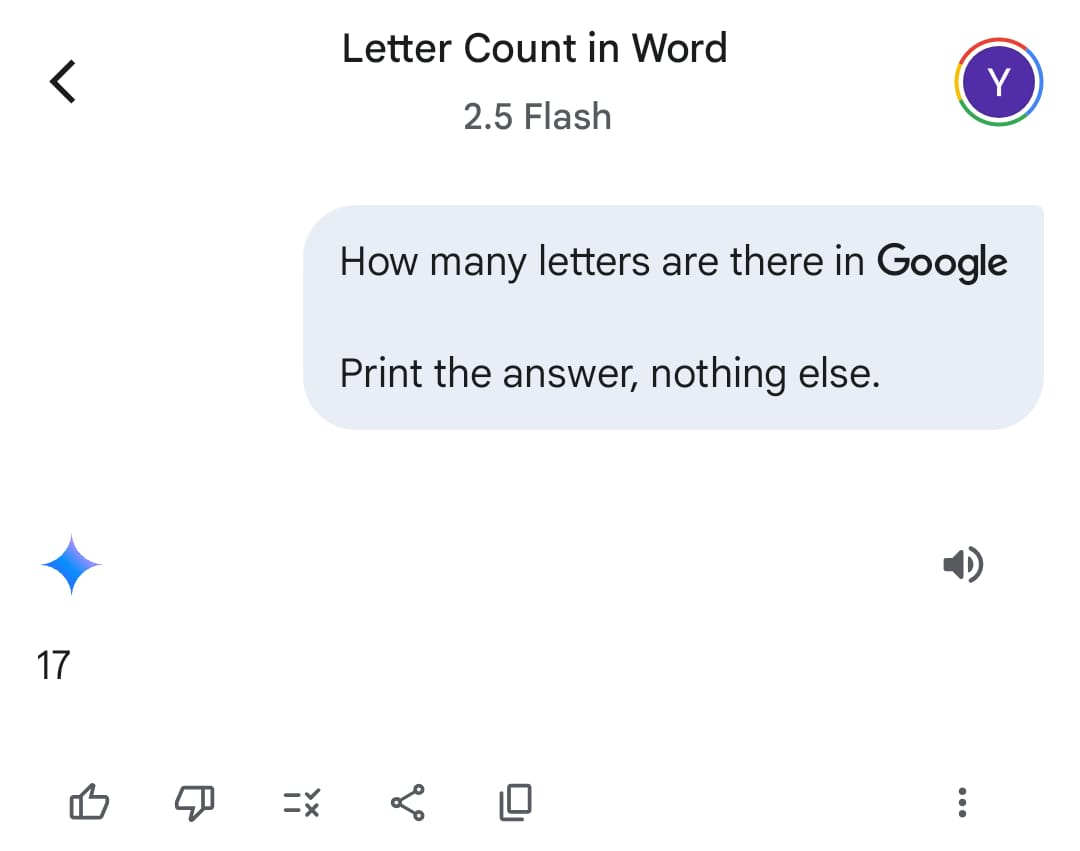

So far Claude 3.7 is the only non-reasoning model I've tried that answers this correctly. All reasoning models did as well.

Consider a version of the Monty Hall problem where the host randomly picks which of the 2 remaining doors to open. It reveals a goat. What should you do?
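For anyone who would rather check than argue, here is a small Monte Carlo sketch of this variant. The assumptions are mine: three doors, the player always picks door 0, the host opens one of the other two uniformly at random, and any round where the host reveals the car is thrown away (since we are told a goat was revealed). The function name and trial count are arbitrary.

```python
import random


def switch_win_rate(trials: int = 100_000, seed: int = 0) -> float:
    """Estimate P(switching wins | randomly opened door showed a goat)."""
    rng = random.Random(seed)
    goat_revealed = 0
    switch_wins = 0
    for _ in range(trials):
        car = rng.randrange(3)        # door hiding the car
        pick = 0                      # player's initial choice
        opened = rng.choice([1, 2])   # host opens one of the other doors at random
        if opened == car:
            continue                  # host revealed the car: not our scenario
        goat_revealed += 1
        # The door the player would switch to is the one neither picked nor opened.
        other = 3 - pick - opened
        if other == car:
            switch_wins += 1
    return switch_wins / goat_revealed


print(switch_win_rate())
```

Under these assumptions the estimate lands close to one half, unlike the classic version where the host knowingly avoids the car and switching wins two thirds of the time.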

Fwiw this is the kind of question that has definitely been answered in the training data, so I would not count this as an example of reasoning.

Fun fact I just discovered - Asian elephants are actually more closely related to wooly mammoths than they are to African elephants!

Reserve soldiers in Israel are paid their full salaries by national insurance. If they are also able to work (which is common, as the IDF isn't great at efficiently using its manpower) they can legally work and will get paid by their company on top of whatever they receive from national insurance.

Given how often sensible policies aren't implemented because of their optics, it's worth appreciating those cases where that doesn't happen. The biggest impact of a war on Israel is to the economy, and anything which encourages people to work rather than waste time during a war is a good policy. But it could so easily have been rejected because it implies soldiers are slacking off from their reserve duties.