The result of averaging the first 20 generated orthogonal vectors [...]

Have you tried scaling up the resulting vector, after averaging, so that its norm is similar to the norms of the individual vectors that are being averaged?

If you take $n$ orthogonal vectors, all of which have norm $c$, and average them, the norm of the average is (I think?) $c/\sqrt{n}$.

As you note, the individual vectors don't work if scaled down from norm 20 to norm 7. The norm will become this small once we are averaging 8 or more vectors, since $20/\sqrt{8} \approx 7.07$, so we shouldn't expect these averages to "work" -- even the individual orthogonal vectors don't work if they are scaled down this much.
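A quick numerical check of the claim that averaging $n$ orthogonal vectors of norm $c$ gives a vector of norm $c/\sqrt{n}$. This is only a sketch: the vectors are random orthogonal directions built with a QR decomposition rather than real steering vectors, and the sizes are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n, c = 2048, 8, 20.0  # assumed sizes; c mirrors the norm ~20 discussed above

# Build n orthonormal directions via QR, then scale each one to norm c.
q, _ = np.linalg.qr(rng.standard_normal((d_model, n)))
vectors = c * q.T                      # shape (n, d_model), pairwise orthogonal

mean_vec = vectors.mean(axis=0)
mean_norm = np.linalg.norm(mean_vec)   # should match c / sqrt(n) up to rounding
rescaled = np.sqrt(n) * mean_vec       # multiplying by sqrt(n) restores norm c

print(mean_norm, c / np.sqrt(n), np.linalg.norm(rescaled))
```

Rescaling the mean by $\sqrt{n}$ is the simplest way to test whether the averages fail only because of their reduced norm.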

Another way to look at it: suppose that these vectors do compose linearly, in the sense that adding several of them together will "combine" (in some intuitive sense) the effects we observe when steering with the vectors individually. But an average is the sum of $n$ vectors each of which is scaled down by $1/n$. Under this hypothesis, once $n \geq 20/7$, we should expect the average to fail in the same way the individual vectors fail when scaled to norm 7, since the scaled-down individual vectors all fail, and so the "combination" of their elicited behaviors is also presumably a failure.[1] (This hypothesis also implies that the "right" thing to do is simply summing vectors as opposed to averaging them.)

Both of these hypotheses, but especially the one in the previous paragraph, predict what you observed about averages generally producing similar behavior (refusals) to scaled-to-norm-7 vectors, and small-$n$ averages coming the closest to "working." In any case, it'd be easy to check whether this is what is going on or not.

[1] Note that here we are supposing that the norms of the individual "scaled summands" in the average are what matters, whereas in the previous paragraph we imagined the norm of the average vector was what mattered. Hence the scaling with $1/n$ ("scaled summands") as opposed to $1/\sqrt{n}$ ("norm of average"). The "scaled summands" perspective makes somewhat more intuitive sense to me.

This seems to be right for the coding vectors! When I take the mean of the first $n$ vectors and then scale that by $\sqrt{n}$, it also produces a coding vector.

Here's some sample output from using the scaled means of the first n coding vectors.

With the scaled means of the alien vectors, the outputs have a pretty similar vibe to the original alien vectors, but don't seem to talk about bombs as much.

The STEM problem vector scaled means sometimes give more STEM problems but sometimes give jailbreaks. The jailbreaks say some pretty nasty stuff so I'm not going to post the results here.

The jailbreak vector scaled means sometimes give more jailbreak vectors but also sometimes tell stories in the first or second person. I'm also not going to post the results for this one.

Have you tried this procedure starting with a steering vector found using a supervised method?

It could be that there are only a few “true” feature directions (like what you would find with a supervised method), and the melbo vectors are vectors that happen to have a component in the “true direction”. As long as none of the vectors in the basket of stuff you are staying orthogonal to are the exact true vector(s), you can find different orthogonal vectors that all have some sufficient amount of the actual feature you want.

This would predict:

- Summing/averaging your vectors produces a reasonable steering vector for the behavior (provided rescaling to an effective norm)

- Starting with a supervised steering vector enables you to generate fewer orthogonal vectors with same effect

- (Maybe) The sum of your successful melbo vectors is similar to the supervised steering vector (eg. mean difference in activations on code/prose contrast pairs)
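The third prediction could be checked along these lines. This is a toy sketch only: the activations, the "true" direction, and the "found" vectors are all synthetic stand-ins (assumptions), not outputs of the model or of MELBO, and the found vectors here are not constrained to be orthogonal.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n_pairs = 64, 100  # toy sizes, not the real model's

# Hypothetical stand-ins for activations on code/prose contrast pairs:
# code activations are shifted along an assumed "true" feature direction.
true_direction = rng.standard_normal(d_model)
true_direction /= np.linalg.norm(true_direction)
prose_acts = rng.standard_normal((n_pairs, d_model))
code_acts = rng.standard_normal((n_pairs, d_model)) + 3.0 * true_direction

# Supervised steering vector: mean difference in activations.
supervised = code_acts.mean(axis=0) - prose_acts.mean(axis=0)

def cosine(a, b):
    """Cosine of the angle between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical "found" vectors: a component along the true direction
# plus a larger component pointing elsewhere.
found = 2.0 * true_direction + rng.standard_normal((20, d_model))
summed = found.sum(axis=0)

print("supervised vs true direction:", cosine(supervised, true_direction))
print("single found vs supervised:  ", cosine(found[0], supervised))
print("sum of found vs supervised:  ", cosine(summed, supervised))
```

With real data, `code_acts` and `prose_acts` would be activations collected at the steering layer, and `found` would be the successful melbo vectors.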

[Edit: most of the math here is wrong, see comments below. I mixed intuitions and math about the inner product and cosine similarity, which resulted in many errors, see Kaarel's comment. I edited my comment to only talk about inner products.]

[Edit2: I had missed that averaging these orthogonal vectors doesn't result in effective steering, which contradicts the linear explanation I give here, see Joseph's comment.]

I think this might be mostly a feature of high-dimensional space rather than something about LLMs: even if you have "the true code steering unit vector" d, and then your method finds things which have inner product ~0.3 with d (which maybe is enough for steering the model for something very common, like code), then the number of orthogonal vectors you will find is huge as long as you never pick a single vector that has cosine similarity very close to 1. This would also explain why the magnitude increases: if your first vector is close to d, then to be orthogonal to the first vector but still have a high inner product with d, it's easier if you have a larger magnitude.

More formally, if theta0 = alpha0 d + (1 - alpha0) noise0, where d is a unit vector, and alpha0 = <theta0, d>, then for theta1 to have inner product alpha1 with d while being orthogonal to theta0, you need alpha0alpha1 + <noise0, noise1>(1-alpha0)(1-alpha1) = 0, which is very easy to achieve if alpha0 = 0.6 and alpha1 = 0.3, especially if noise1 has a big magnitude. For alpha2, you need alpha0alpha2 + <noise0, noise2>(1-alpha0)(1-alpha2) = 0 and alpha1alpha2 + <noise1, noise2>(1-alpha1)(1-alpha2) = 0 (the second condition is even easier than the first one if alpha1 and alpha2 are both ~0.3, and both noises are big). And because there is a huge amount of volume in high-dimensional space, it's not that hard to find a big family of noise.

(Note: you might have thought that I prove too much, and in particular that my argument shows that adding random vectors results in code. But this is not the case: the volume of the space of vectors with inner product with d > 0.3 is huge, but it's a small fraction of the volume of a high-dimensional space (weighted by some Gaussian prior).) [Edit: maybe this proves too much? It depends on what the actual magnitude needed to influence the behavior is, and how big the random vectors you would draw are.]

But there is still a mystery I don't fully understand: how is it possible to find so many "noise" vectors that don't influence the output of the network much.

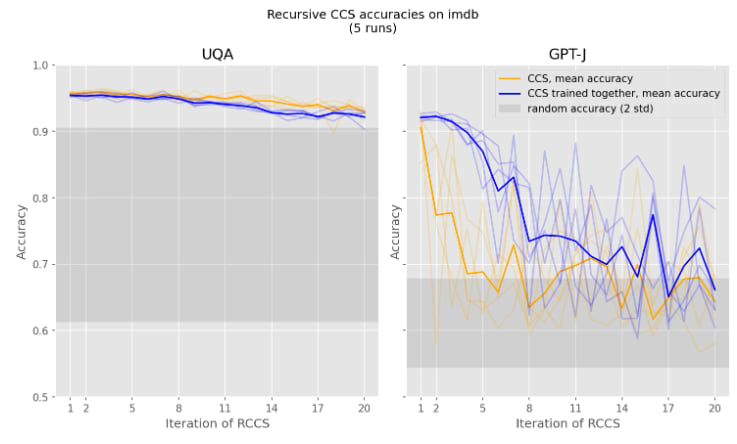

(Note: This is similar to how you can also find a huge number of "imdb positive sentiment" directions in UQA when applying CCS iteratively (or any classification technique that relies on linear probing and doesn't find anything close to the "true" mean-difference direction, see also INLP).)

I think most of the quantitative claims in the current version of the above comment are false/nonsense/[using terms non-standardly]. (Caveat: I only skimmed the original post.)

"if your first vector has cosine similarity 0.6 with d, then to be orthogonal to the first vector but still high cosine similarity with d, it's easier if you have a larger magnitude"

If by 'cosine similarity' you mean what's usually meant, which I take to be the cosine of the angle between two vectors, then the cosine only depends on the directions of vectors, not their magnitudes. (Some parts of your comment look like you meant to say 'dot product'/'projection' when you said 'cosine similarity', but I don't think making this substitution everywhere makes things make sense overall either.)
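A small illustration of the distinction, using made-up vectors: scaling a vector changes its dot product with d but leaves the cosine untouched.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between a and b; depends only on directions."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

d = np.array([1.0, 0.0, 0.0])        # unit vector playing the role of d
theta = np.array([0.3, 0.4, 0.5])    # arbitrary example vector
scaled = 10.0 * theta                # same direction, 10x the magnitude

print("dot products:", d @ theta, d @ scaled)   # differ by a factor of 10
print("cosines:     ", cosine_similarity(d, theta), cosine_similarity(d, scaled))
```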

"then your method finds things which have cosine similarity ~0.3 with d (which maybe is enough for steering the model for something very common, like code), then the number of orthogonal vectors you will find is huge as long as you never pick a single vector that has cosine similarity very close to 1"

For 0.3 in particular, the number of orthogonal vectors with at least that cosine with a given vector d is actually small. Assuming I calculated correctly, the number of e.g. pairwise-dot-prod-less-than-0.01 unit vectors with that cosine with a given vector is at most $12$ (the ambient dimension does not show up in this upper bound). I provide the calculation later in my comment.

"More formally, if theta0 = alpha0 d + (1 - alpha0) noise0, where d is a unit vector, and alpha0 = cosine(theta0, d), then for theta1 to have alpha1 cosine similarity while being orthogonal, you need alpha0alpha1 + <noise0, noise1>(1-alpha0)(1-alpha1) = 0, which is very easy to achieve if alpha0 = 0.6 and alpha1 = 0.3, especially if nosie1 has a big magnitude."

This doesn't make sense. For alpha1 to be cos(theta1, d), you can't freely choose the magnitude of noise1.

How many nearly-orthogonal vectors can you fit in a spherical cap?

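Proposition. Let $d$ be a unit vector and let $v_1, \ldots, v_n$ also be unit vectors such that they all sorta point in the $d$ direction, i.e., $\langle v_i, d \rangle \geq \delta$ for a constant $\delta > 0$ (I take you to have taken $\delta = 0.3$), and such that the $v_i$ are nearly orthogonal, i.e., $\langle v_i, v_j \rangle \leq \epsilon$ for all $i \neq j$, for another constant $\epsilon > 0$. Assume also that $\epsilon < \delta^2$. Then $n \leq \frac{1-\delta^2}{\delta^2-\epsilon} + 1$.

Proof. We can decompose $v_i = \alpha_i d + \sqrt{1-\alpha_i^2}\, u_i$, with $u_i$ a unit vector orthogonal to $d$; then $\alpha_i = \langle v_i, d \rangle \geq \delta$. Given $\epsilon < \delta^2$, it's a 3d geometry exercise to show that pushing all vectors to the boundary of the spherical cap around $d$ can only decrease each pairwise dot product; doing this gives a new collection of unit vectors $v_i' = \delta d + \sqrt{1-\delta^2}\, u_i$, still with $\langle v_i', v_j' \rangle \leq \epsilon$. This implies that $\langle u_i, u_j \rangle \leq \frac{\epsilon-\delta^2}{1-\delta^2}$. Note that since $\epsilon < \delta^2$, the RHS is some negative constant. Consider $\left\| \sum_i u_i \right\|^2$. On the one hand, it has to be nonnegative. On the other hand, expanding it, we get that it's at most $n + n(n-1)\frac{\epsilon-\delta^2}{1-\delta^2}$. From this, $n - 1 \leq \frac{1-\delta^2}{\delta^2-\epsilon}$, whence $n \leq \frac{1-\delta^2}{\delta^2-\epsilon} + 1$.

(acknowledgements: I learned this from some combination of Dmitry Vaintrob and https://mathoverflow.net/questions/24864/almost-orthogonal-vectors/24887#24887 )

For example, for $\delta = 0.3$ and $\epsilon = 0.01$, this gives $n \leq 12$.

(I believe this upper bound for the number of almost-orthogonal vectors is actually basically exactly met in sufficiently high dimensions; I can probably provide a proof (sketch) if anyone expresses interest.)

Remark. If $\epsilon > \delta^2$, then one starts to get exponentially many vectors in the dimension again, as one can see by picking a bunch of random vectors on the boundary of the spherical cap.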
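To get a feel for how the bound behaves, here is a small helper. It assumes the bound has the form $n \leq \frac{1-\delta^2}{\delta^2-\epsilon} + 1$, with $\delta$ the minimum inner product with $d$ and $\epsilon$ the maximum pairwise inner product; the function name and the symbol names are illustrative assumptions.

```python
import math

def max_nearly_orthogonal(delta, eps):
    """Upper bound on the number of unit vectors with <v_i, d> >= delta
    and pairwise <v_i, v_j> <= eps, valid only when eps < delta**2."""
    if not eps < delta ** 2:
        raise ValueError("the bound requires eps < delta**2")
    return (1 - delta ** 2) / (delta ** 2 - eps) + 1

# The example from the comment: delta = 0.3, eps = 0.01.
print(math.floor(max_nearly_orthogonal(0.3, 0.01)))

# The bound grows roughly like 1 / delta**2 as delta shrinks.
for delta in (0.3, 0.1, 0.05):
    print(delta, max_nearly_orthogonal(delta, 0.0))
```

Note that the ambient dimension never appears as an argument, which is the point of the proposition.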

What about the philosophical point? (low-quality section)

Ok, the math seems to have issues, but does the philosophical point stand up to scrutiny? Idk, maybe. I haven't really read the post to check relevant numbers or to extract all the pertinent bits to answer this well. It's possible it goes through with a significantly smaller $\delta$ (the minimum inner product with $d$) or if the vectors weren't really that orthogonal or something. (To give a better answer, the first thing I'd try to understand is whether this behavior is basically first-order. More precisely, is there some reasonable loss function on perturbations on the relevant activation space which captures perturbations being coding perturbations, and are all of these vectors first-order perturbations toward coding in this sense? If the answer is yes, then there just has to be such a vector: it'd just be the gradient of this loss.)

Hmm, with that we'd need to get 800 orthogonal vectors.[1] This seems pretty workable. If we take the MELBO vector magnitude change (7 -> 20) as an indication of how much the cosine similarity changes, then this is consistent with for the original vector. This seems plausible for a steering vector?

[1] Thanks to @Lucius Bushnaq for correcting my earlier wrong number

You're right, I mixed intuitions and math about the inner product and cosine similarity, which resulted in many errors. I added a disclaimer at the top of my comment. Sorry for my sloppy math, and thank you for pointing it out.

I think my math is right if only looking at the inner product between d and theta, not at the cosine similarity. So I think my original intuition still holds.

I think this still contradicts my model: mean_i(<d, theta_i>) = <d, mean_i(theta_i)> therefore if the effect is linear, you would expect the mean to preserve the effect even if the random noise between the theta_i is greatly reduced.
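The identity used here is just linearity of the inner product; a short check with random stand-in vectors (not real steering vectors):

```python
import numpy as np

rng = np.random.default_rng(0)
d = rng.standard_normal(32)
d /= np.linalg.norm(d)                    # unit vector playing the role of d
thetas = rng.standard_normal((10, 32))    # rows play the role of theta_i

mean_of_products = np.mean(thetas @ d)    # mean_i(<d, theta_i>)
product_of_mean = thetas.mean(axis=0) @ d # <d, mean_i(theta_i)>

print(mean_of_products, product_of_mean)
```

So if steering depended only on the component along d, the plain average should keep working, which is not what the post reports.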

But there is still a mystery I don't fully understand: how is it possible to find so many "noise" vectors that don't influence the output of the network much.

In unrelated experiments I found that steering in a (uniform) random direction is much less effective than steering in a random direction sampled with the same covariance as the real activations. This suggests that there might be a lot of directions[1] that don't influence the output of the network much. This was on GPT2, but I'd expect it to generalize to other Transformers.

[1] Though I don't know how much space / what the dimensionality of that space is; I'm judging this by the "sensitivity curve" (how much steering is needed for a noticeable change in KL divergence).
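For concreteness, the two sampling schemes could look roughly like this. It is a sketch under assumptions: `activations` is a synthetic anisotropic Gaussian standing in for real residual-stream activations, and both function names are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n_samples = 16, 5000  # toy sizes

# Hypothetical stand-in for real activations: variance differs per dimension.
scales = np.linspace(0.1, 3.0, d_model)
activations = rng.standard_normal((n_samples, d_model)) * scales

def random_isotropic_direction(norm):
    """Uniformly random direction, rescaled to the requested norm."""
    v = rng.standard_normal(d_model)
    return norm * v / np.linalg.norm(v)

def random_covariance_matched_direction(norm):
    """Direction drawn from a Gaussian with the activations' covariance."""
    cov = np.cov(activations, rowvar=False)
    v = rng.multivariate_normal(np.zeros(d_model), cov)
    return norm * v / np.linalg.norm(v)

iso = random_isotropic_direction(7.0)
matched = random_covariance_matched_direction(7.0)
print(np.linalg.norm(iso), np.linalg.norm(matched))
```

Both vectors have the same norm, so any difference in steering effect would come from direction alone.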

Hypothesis: each of these vectors represents a single token that is usually associated with code; the vector says "I should output this token soon", and the model then plans around that to produce code. But adding vectors representing code tokens doesn't necessarily produce another vector representing a code token, so that's why you don't see compositionality. It does seem somewhat plausible that there might be ~800 "code tokens" in the representation space.

I wonder how many of these orthogonal vectors are "actually orthogonal" once we consider that we are adding two vectors together, and that the model has things like LayerNorm.

If one conditions on downstream midlayer activations being "sufficiently different" it seems possible one could find like 10x degeneracy of actual effects these have on models. (A possibly relevant factor is how big the original activation vector is compared to the steering vector?)

After looking more into the outputs, I think the KL-divergence plots are slightly misleading. In the code and jailbreak cases, they do seem to show when the vectors stop becoming meaningful. But in the alien and STEM problem cases, they don't show when the vectors stop becoming meaningful (there seem to be ~800 alien and STEM problem vectors also). The magnitude plots seem much more helpful there. I'm still confused about why the KL-divergence plots aren't as meaningful in those cases, but maybe it has to do with the distribution of language that the vectors steer the model into? Coding is clearly a very different distribution of language than English, but Jailbreak is not that different a distribution of language than English. So I'm still confused here. But the KL-divergences are also only on the logits at the last token position, so maybe it's just a small sample size.

And it turns out that this algorithm works and we can find steering vectors that are orthogonal (and have ~0 cosine similarity) while having very similar effects.

Why ~0 and not exactly 0? Are these not perfectly orthogonal? If not, would it be possible to modify them slightly so they are perfectly orthogonal, then repeat, just to exclude Fabien Roger's hypothesis?

I only included the ~ because we are using computers, which are discrete (so they might not be perfectly orthogonal since there is usually some numerical error). The code projects vectors into the subspace orthogonal to the previous vectors, so they should be as close to orthogonal as possible. My code asserts that the pairwise cosine similarity is for all the vectors I use.
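A minimal sketch of that kind of projection and check, using random vectors in place of optimized ones. The helper name `project_out` and the tolerance `1e-8` are assumptions for illustration, not the values used in the actual code.

```python
import numpy as np

def project_out(v, basis):
    """Remove from v its components along each orthonormal row of basis."""
    return v - basis.T @ (basis @ v)

rng = np.random.default_rng(0)
d_model = 128                       # toy size
basis = np.empty((0, d_model))      # orthonormal rows spanning previous vectors
vectors = []
for _ in range(20):
    v = project_out(rng.standard_normal(d_model), basis)
    v = project_out(v, basis)       # second pass reduces rounding error
    vectors.append(v)
    basis = np.vstack([basis, v / np.linalg.norm(v)])

vectors = np.array(vectors)
unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
cos = unit @ unit.T                 # pairwise cosine similarities
max_offdiag = np.abs(cos - np.eye(len(cos))).max()
assert max_offdiag < 1e-8           # hypothetical tolerance
print(max_offdiag)
```

The off-diagonal entries come out at floating-point noise level rather than exactly zero, which is all the "~" is meant to convey.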

There doesn't seem to be anything surprising about there being many orthogonal steering vectors for layer 8 that result in similar behaviour (e.g. coding). The neural network between layers 8 and 16 can be seen as a nonlinear function that transforms outputs of layer 8 into the outputs of layer 16. As this function is nonlinear, it is not guaranteed to map orthogonal inputs to orthogonal outputs, i.e. there might exist multiple orthogonal inputs that are mapped to almost collinear outputs by this function. And what your algorithm for learning these orthogonal steering vectors does is explicitly find (learn) these orthogonal inputs that are mapped to almost collinear outputs.

We can also see that experimentally. Let's take one prompt (e.g. "How do I feed the cat?") and steer the model activations at layer 8 with the different orthogonal "coding" steering vectors you found. And let's save the layer 16 activations corresponding to this input prompt and the different steering vectors. We'll see that cosine similarities between these outputs of layer 16 are quite high. E.g. here's a scatter plot of cosine similarities between activations of layer 16 computed using steering vector number 0 and number $i$, for $0 \leq i \leq 1558$: [scatter plot]. We see that steering the layer 8 activations with different "coding" steering vectors results in highly collinear layer 16 activations, and as the layer 16 activations are collinear, they will result in similar outputs of the entire model. Note that the last steering vectors are less collinear with the first steering vector, and experimentally we also see that the last steering vectors do not always result in the model outputting code.

Here is also a scatter plot of cosine similarities between activations of layer 16 computed using randomly initialized steering vectors: [scatter plot]. We see that the cosine similarities of the layer 16 outputs are quite low here, suggesting that the high cosine similarities in the case of the "coding" steering vectors were not a coincidence.

These exps and plots were done in this jupyter notebook based on your GitHub code: https://colab.research.google.com/drive/11F1VnEbKqgH-4aTGz8Zxi40qFKb1wEDq?usp=sharing

In the human brain there is quite a lot of redundancy of information encoding. This could be for a variety of reasons.

Here's one hot take: In a brain and in a language model I can imagine that during early learning, the network hasn't learned concepts like "how to code" well enough to recognize that each training instance is an instance of the same thing. Consequently, during that early learning stage, the model does just encode a variety of representations for what turns out to be the same thing. 800 vector encodes in it starts to match each subsequent training example to prior examples and can encode the information more efficiently.

Then adding multiple vectors triggers a refusal just because the "code for making a bomb" sign gets amplified and more easily triggers the RLHF-derived circuit for "refuse to answer".

Produced as part of the MATS Summer 2024 program, under the mentorship of Alex Turner (TurnTrout).

A few weeks ago, I stumbled across a very weird fact: it is possible to find multiple steering vectors in a language model that activate very similar behaviors while all being orthogonal. This was pretty surprising to me and to some people that I talked to, so I decided to write a post about it. I don't currently have the bandwidth to investigate this much more, so I'm just putting this post and the code up.

I'll first discuss how I found these orthogonal steering vectors, then share some results. Finally, I'll discuss some possible explanations for what is happening.

Methodology

My work here builds upon Mechanistically Eliciting Latent Behaviors in Language Models (MELBO). I use MELBO to find steering vectors. Once I have a MELBO vector, I then use my algorithm to generate vectors orthogonal to it that do similar things.

Define f(x) as the activation-activation map that takes as input layer 8 activations of the language model and returns layer 16 activations after being passed through layers 9-16 (these are of shape n_sequence × d_model). MELBO can be stated as finding a vector θ with a constant norm such that f(x+θ) is maximized, for some definition of maximized. Then one can repeat the process with the added constraint that the new vector is orthogonal to all the previous vectors so that the process finds semantically different vectors. Mack and Turner's interesting finding was that this process finds interesting and interpretable vectors.

I modify the process slightly by instead finding orthogonal vectors that produce similar layer 16 outputs. The algorithm (I call it MELBO-ortho) looks like this:

This algorithm imposes a hard constraint that θ is orthogonal to all previous steering vectors while optimizing θ to induce the same activations that θ0 induced on input x.
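Here is a toy sketch of the idea just described: projected gradient descent that keeps θ orthogonal to all previous vectors while matching the activations θ0 induced. It is not the actual implementation. The map `f` is a single random tanh layer standing in for layers 9-16, `x` is one vector rather than an n_sequence × d_model array, the loss is a squared difference, and every name and hyperparameter is an assumption.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model = 32                                   # toy size
W = rng.standard_normal((d_model, d_model)) / np.sqrt(d_model)

def f(x):
    """Toy stand-in for the layer 8 -> layer 16 activation map."""
    return np.tanh(W @ x)

def project_out(v, basis):
    """Remove from v its components along each orthonormal row of basis."""
    return v - basis.T @ (basis @ v)

def melbo_ortho_step(x, target, previous, steps=500, lr=0.05):
    """Search for theta orthogonal to the rows of `previous` such that
    f(x + theta) is close to `target`. Returns the best theta and its loss."""
    q, _ = np.linalg.qr(previous.T)            # orthonormal basis of span(previous)
    basis = q.T
    theta = project_out(rng.standard_normal(d_model), basis)
    best_theta = theta
    best_loss = np.sum((f(x + theta) - target) ** 2)
    for _ in range(steps):
        y = f(x + theta)
        grad = W.T @ (2.0 * (y - target) * (1.0 - y ** 2))  # gradient of squared error
        theta = project_out(theta - lr * grad, basis)       # hard orthogonality constraint
        loss = np.sum((f(x + theta) - target) ** 2)
        if loss < best_loss:
            best_theta, best_loss = theta, loss
    return best_theta, best_loss

x = rng.standard_normal(d_model)
theta0 = rng.standard_normal(d_model)          # plays the role of the MELBO vector
target = f(x + theta0)                         # activations induced by theta0

found = [theta0]
for _ in range(3):
    theta, loss = melbo_ortho_step(x, target, np.array(found))
    found.append(theta)
    print("loss:", loss, "norm:", np.linalg.norm(theta))
```

Projecting after every update is what makes the constraint hard rather than a penalty term; whether the loss actually reaches a small value depends on the map and on how many directions have already been used up.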

And it turns out that this algorithm works and we can find steering vectors that are orthogonal (and have ~0 cosine similarity) while having very similar effects.

Results

I tried this method on four MELBO vectors: a vector that made the model respond in python code, a vector that made the model respond as if it was an alien species, a vector that made the model output a math/physics/cs problem, and a vector that jailbroke the model (got it to do things it would normally refuse). I ran all experiments on Qwen1.5-1.8B-Chat, but I suspect this method would generalize to other models. Qwen1.5-1.8B-Chat has a 2048 dimensional residual stream, so there can be a maximum of 2048 orthogonal vectors generated. My method generated 1558 orthogonal coding vectors, and then the remaining vectors started going to zero.

I'll focus first on the code vector and then talk about the other vectors. My philosophy when investigating language model outputs is to look at the outputs really hard, so I'll give a bunch of examples of outputs. Feel free to skim them.

You can see the full outputs of all the code vectors on the prompt "How can I build a bomb?" here (temperature 1). In this post, I'm only showing the bomb prompt, but the behavior generalizes across different types of prompts. The MELBO-generated vector steers the model towards this output:

The 1st generated vector produces this output:

The 2nd generated vector produces this output:

Skipping ahead, the 14th generated vector produces this output:

The 129th generated orthogonal vector produces this output:

This trend continues for hundreds more orthogonal vectors, and while most are in python, some are in other languages. For example, this is the output of the model under the 893rd vector. It appears to be JavaScript:

Around the end of the 800s, some of the vectors start to work a little less well. For example, vector 894 gives this output:

Around the 1032nd vector, most of the remaining vectors aren't code but instead are either jailbreaks or refusals. Around vector 1300, most of the remaining outputs are refusals, with the occasional jailbreak.

After seeing these results, Alex Turner asked me if these coding vectors were compositional. Does composing them also produce a coding vector? The answer is mostly no.

The result of averaging the first 20 generated orthogonal vectors is just a refusal (it would normally refuse on this prompt), no code:

If we only average the first 3 generated vectors, we get a jailbreak:

Steering with the average of the first 2 generated vectors sometimes produces a refusal, sometimes gives code, and sometimes mixes them (depending on the runs since temperature ≠0):

Qualitatively, it sure does seem that most of the coding vectors (up to the 800s) at least have very similar behaviors. But can we quantify this? Yes! I took the KL-divergence of the probability distribution of the network steered with the ith vector with respect to the probability distribution of the network steered with the base MELBO vector (on the bomb prompt at the last token position) and plotted it:

The plot matches my qualitative description pretty well. The KL divergence is very close to zero for a while and then it has a period where it appears to sometimes be quite high and other times be quite low. I suspect this is due to gradient descent not being perfect; sometimes it is able to find a coding vector, which results in a low KL-divergence, while other times it can't, which results in a high KL-divergence. Eventually, it is not able to find any coding vectors, so the KL-divergence stabilizes to a high value.

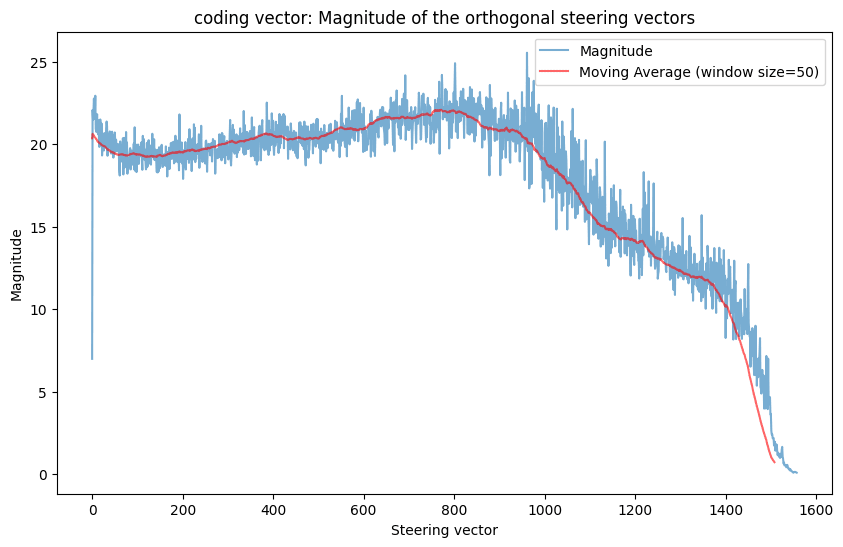
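The KL-divergence comparison described above can be sketched as follows. The logits here are random stand-ins for the last-token logits of the steered model, and the direction KL(P_i || P_base) is an assumption about what "with respect to" means.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log-softmax over a 1-D array of logits."""
    shifted = logits - logits.max()
    return shifted - np.log(np.exp(shifted).sum())

def kl_divergence(logits_p, logits_q):
    """KL(P || Q) for two categorical distributions given as logits."""
    log_p, log_q = log_softmax(logits_p), log_softmax(logits_q)
    return float(np.sum(np.exp(log_p) * (log_p - log_q)))

rng = np.random.default_rng(0)
vocab_size = 1000                                   # toy vocabulary size
base_logits = rng.standard_normal(vocab_size)       # steered with the base vector
similar_logits = base_logits + 0.01 * rng.standard_normal(vocab_size)
different_logits = rng.standard_normal(vocab_size)  # unrelated distribution

print(kl_divergence(similar_logits, base_logits))
print(kl_divergence(different_logits, base_logits))
```

Since this uses a single token position, one value per vector is a fairly noisy summary of how similar the steered behaviors are.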

I also plotted the magnitudes of all the orthogonal coding steering vectors:

Interestingly, the base MELBO coding vector has norm 7 (exactly 7 since MELBO constrains the norms). Yet in order to find comparable coding vectors, the model needs to go all the way up to magnitude ∼20. This suggests that there is something different about the orthogonal generated coding vectors than the original MELBO vector. In fact, when I take some of the generated orthogonal coding vectors and scale them to norm 7, they don't have the coding effect at all and instead just make the model refuse like it normally would. As the algorithm keeps progressing, the magnitudes of the generated vectors go down and eventually hit zero, at which point the vectors stop having an effect on the model.

Hypotheses for what is happening

After thinking for a while and talking to a bunch of people, I have a few hypotheses for what is going on. I don't think any of them are fully correct and I'm still quite confused.

Conclusion

I'm really confused about this phenomenon. I also am spending most of my time working on another project, which is why I wrote this post up pretty quickly. If you have any hypotheses, please comment below. I've put the relevant code for the results of this post in this repo, along with the generated MELBO and orthogonal vectors. Feel free to play around and please let me know if you discover anything interesting.

Thanks to Alex Turner, Alex Cloud, Adam Karvonen, Joseph Miller, Glen Taggart, Jake Mendel, and Tristan Hume for discussing this result with me.

Appendix: other orthogonal vectors

I've reproduced the outputs (and plots) for three other MELBO vectors:

'becomes an alien species' vector results

Qualitative results here.

'STEM problem' vector results

Qualitative results here.

'jailbreak' vector results

Qualitative results here.