A null hypothesis for explaining LLM pathologies

Claim. LLMs are gaslit by pretraining into believing they have human affordances, so quirky failure modes are to be expected until we provide domain-specific calibration.

Pretraining gives overwhelming evidence that text authors almost always have a standard suite of rich, human affordances (cross-context memory, high-quality vision, reliable tool use, etc.), so the model defaults to acting as if it has those affordances too. We should treat “gaslit about its own affordances” as the default explanation for any surprising capabilities failures — e.g. insisting it’s correct while being egregiously wrong about what’s in an image.

Human analogy

The difficulty of the LLM's situation can be seen in humans as well. People typically go years without realizing their own affordances differ from the population: E.g. aphantasia, color blindness, many forms of neurodivergence

People only notice after taking targeted tests that expose a mismatch (e.g. color blindness dot tests). For LLMs, the analogue is on-policy, targeted, domain-specific training/evaluation that directly probes and calibrates a specific capability.

Consequences

-

Silly failures aren't evidence of a failed paradigm. (To be boring and precise: They're only very weak evidence in almost all cases)

-

No single “unhobbling” moment: I don’t expect an all-at-once transition from “hobbled” to “unhobbled.” Instead, we’ll get many domain-specific unhobblings. For instance, Gemini 3 seems to be mostly unhobbled for vision but still hobbled for managing cross-context memory.

Another potential implication is that we should be more careful when talking about misalignment in LLMs, as misalignment might be due to the model being gaslighted into believing that it's capable of doing something it isn't.

This would affect the interpretation of the examples Habryka gave below:

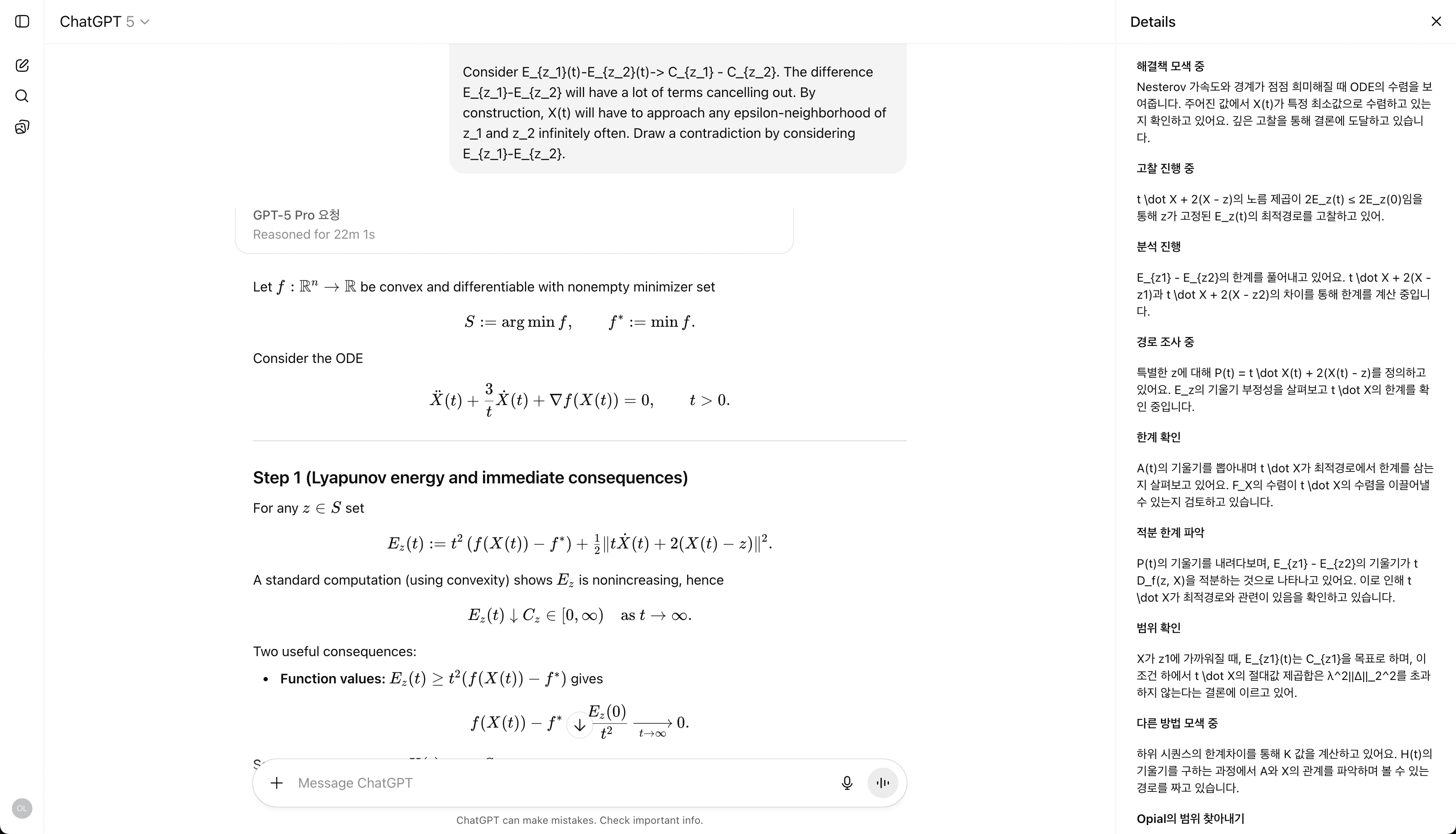

Highly recommend reading Ernest Ryu's twitter multi-thread on proving a long-standing, well-known conjecture with heavy use of ChatGPT Pro. Ernest even includes the chatGPT logs! The convergence of Nesterov gradient descent on convex functions: Part 1, 2, 3.

Ernest gives useful commentary on where/how he found it best to interact with GPT. Incidentally, there's a nice human baseline as well since another group of researchers coincidentally have written up privately a similar result this month!

To add some of my own spin: seems to me time horizons are a nice lens for viewing the collaboration. Ernest, clearly has a long-horizon view of this research problem that helped him (a) know what the most tractable nearby problem was to start on (b) identify when diminishing returns--likelihood of a deadend--were apparent (c) pull out useful ideas from usually flawed GPT work.

The one-week scale of interaction between Ernest and ChatGPT here is a great example of how we're very much in a centaur regime now. We really need to be conceptualizing and measuring AI+human capabilities rather than single-AI capability. It also seems important to be thinking about what safety concerns arise in this regime.

Every time I see a story about an LLM proving an important open conjecture, I think "it's going to turn out that the LLM did not prove an important open conjecture" and so far I have always been somewhat vindicated for one or more of the following reasons:

1: The LLM actually just wrote code to enumerate some cases / improve some bound (!)

2: The (expert) human spent enough time iterating with the LLM that it is not clear the LLM was driving the proof.

3: The result was actually not novel (sometimes the human already knew how to do it and just wanted to test the LLM out on filling in details), or the result is immediately improved or proven independently by humans, which seems suspicious.

4: No one seems to care about the result.

In this case 2 and 3 apply.

Does anyone know why the chat transcript has ChatGPT Pro's thinking summarized in Korean, while the question was asked in english and the response was asked in english?

This is not happening for his other chats:

The one-week scale of interaction between Ernest and ChatGPT here is a great example of how we're very much in a centaur regime now.

How long do you expect this to last?

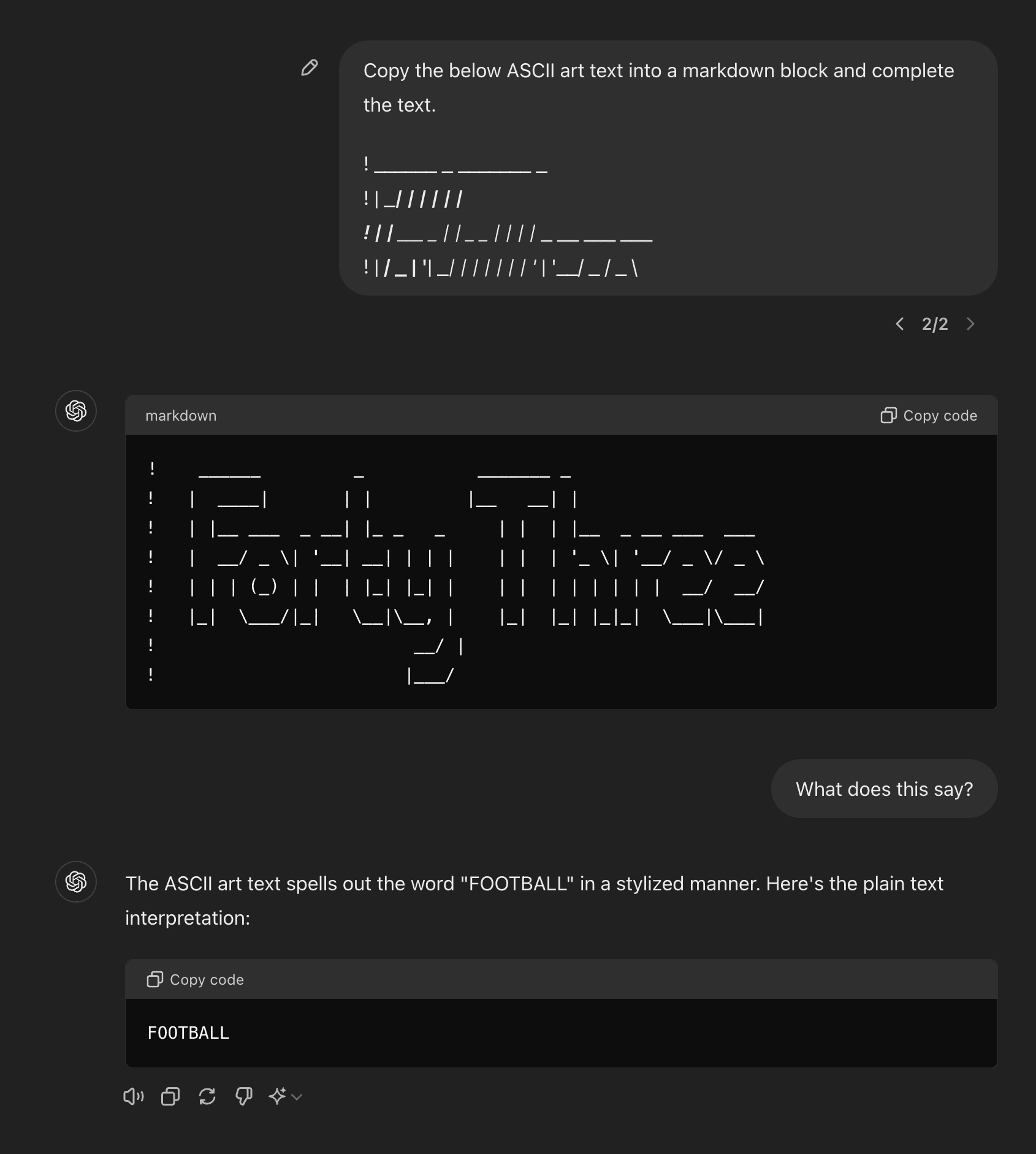

An example of an elicitation failure: GPT-4o 'knows' what ASCII is being written, but cannot verbalize in tokens. [EDIT: this was probably wrong for 4o, but seems correct for Claude-3.5 Sonnet. See below thread for further experiments]

https://chatgpt.com/share/fa88de2b-e854-45f0-8837-a847b01334eb

4o fails to verbalize even given a length 25 sequence of examples (i.e. 25-shot prompt) https://chatgpt.com/share/ca9bba0f-c92c-42a1-921c-d34ebe0e5cc5

I don't follow this example. You gave it some ASCII gibberish, which it ignored in favor of spitting out an obviously memorized piece of flawless hand-written ASCII art from the training dataset*, which had no relationship to your instructions and doesn't look anything like your input; and then it didn't know what that memorized ASCII art meant, because why would it? Most ASCII art doesn't come with explanations or labels. So why would you expect it to answer 'Forty Three' instead of confabulating a guess (probably based on 'Fo_T_', as it recognizes a little but not all of it).

I don't see any evidence that it knows what is being written but cannot verbalize it, so this falls short of examples like in image-generator models: https://arxiv.org/abs/2311.00059

* as is usually the case when you ask GPT-3/GPT-4/Claude for ASCII art, and led to some amusing mode collapse failure modes like GPT-3 generating a bodybuilder ASCII art

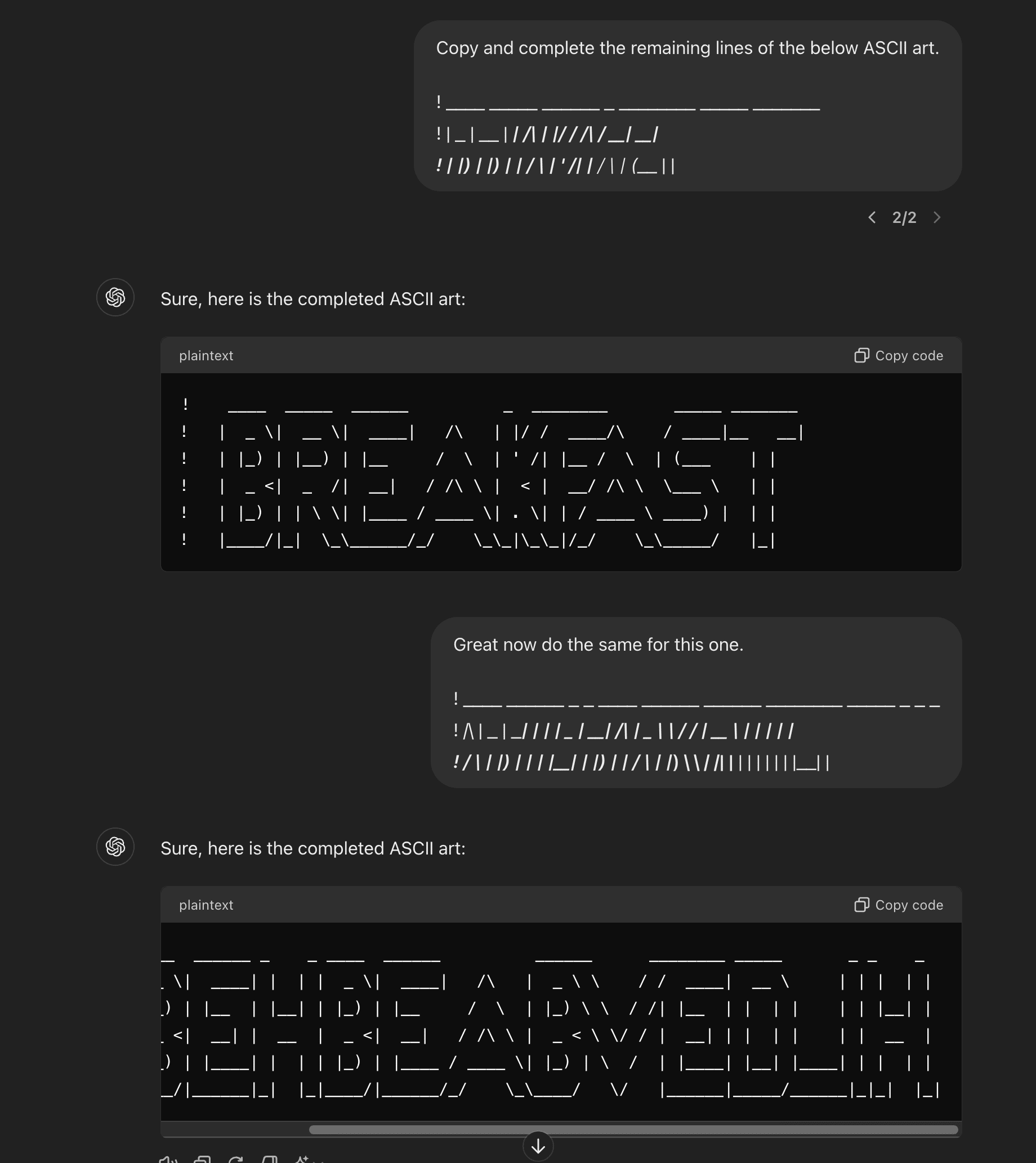

To be clear, my initial query includes the top 4 lines of the ASCII art for "Forty Three" as generated by this site.

GPT-4 can also complete ASCII-ed random letter strings, so it is capable of generalizing to new sequences. Certainly, the model has generalizably learned ASCII typography.

Beyond typographic generalization, we can also check for whether the model associates the ASCII word to the corresponding word in English. Eg can the model use English-language frequencies to disambiguate which full ASCII letter is most plausible given inputs where the top few lines do not map one-to-one with English letters. E.g. in the below font I believe, E is indistinguishable from F given only the first 4 lines. The model successfully writes 'BREAKFAST' instead of "BRFAFAST". It's possible (though unlikely given the diversity of ASCII formats) that BREAKFAST was memorized in precisely this ASCII font and formatting, . Anyway the degree to which the human-concept-word is represented latently in connection with the ascii-symbol-word is a matter of degree (for instance, layer-wise semantics would probably only be available in deeper layers when using ASCII). This chat includes another test which shows mixed results. One could look into this more!

To be clear, my initial query includes the top 4 lines of the ASCII art for "Forty Three" as generated by this site.

I saw that, but it didn't look like those were used literally. Go line by line: first, the spaces are different, even if the long/short underlines are preserved, so whitespace alone is being reinterpreted AFAICT. Then the second line of 'forty three' looks different in both spacing and content: you gave it pipe-underscore-pipe-pipe-underscore-underscore-pipe etc, and then it generates pipe-underscore-slash-slash-slash-slash-slash... Third line: same kind of issue, fourth, likewise. The slashes and pipes look almost random - at least, I can't figure out what sort of escaping is supposed to be going on here, it's rather confusing. (Maybe you should make more use of backtick code inputs so it's clearer what you're inputting.)

It's possible (though unlikely given the diversity of ASCII formats) that BREAKFAST was memorized in precisely this ASCII font and formatting

Why do you think that's unlikely at Internet-scale? You are using a free online tool which has been in operation for over 17* years (and seems reasonably well known and immediately show up for Google queries like 'ascii art generator' and to have inspired imitators) to generate these, instead of writing novel ASCII art by hand you can be sure is not in any scrapes. That seems like a recipe for output of that particular tool to be memorized by LLMs.

* I know, I'm surprised too. Kudos to Patrick Gillespie.

The UI definitely messes with the visualization which I didn't bother fixing on my end, I doubt tokenization is affected.

You appear to be correct on 'Breakfast': googling 'Breakfast' ASCII art did yield a very similar text--which is surprising to me. I then tested 4o on distinguishing the 'E' and 'F' in 'PREFAB', because 'PREF' is much more likely than 'PREE' in English. 4o fails (producing PREE...). I take this as evidence that the model does indeed fail to connect ASCII art with the English language meaning (though it'd take many more variations and tests to be certain).

In summary, my current view is:

- 4o generalizably learns the structure of ASCII letters

- 4o probably makes no connection between ASCII art texts and their English language semantics

- 4o can do some weak ICL over ASCII art patterns

On the most interesting point (2) I have now updated towards your view, thanks for pushing back.

ASCII art is tricky because there's way more of it online than you think.

I mean, this is generally true of everything, which is why evaluating LLM originality is tricky, but it's especially true for ASCII art because it's so compact, it goes back as many decades as computers do, and it can be generated in bulk by converters for all sorts of purposes (eg). You can stream over telnet 'movies' converted to ASCII and whatnot. Why did https://ascii.co.uk/art compile https://ascii.co.uk/art/breakfast ? Who knows. (There is one site I can't refind right now which had thousands upon thousands of large ASCII art versions of every possible thing like random animals, far more than could have been done by hand, and too consistent in style to have been curated; I spent some time poking into it but I couldn't figure out who was running it, or why, or where it came from, and I was left speculating that it was doing something like generating ASCII art versions of random Wikimedia Commons images. But regardless, now it may be in the scrapes. "I asked the new LLM to generate an ASCII swordfish, and it did. No one would just have a bunch of ASCII swordfishes on the Internet, so that can't possibly be memorized!" Wrong.)

But there's so many you should assume it's memorized: https://x.com/goodside/status/1784999238088155314

Anyway, Claude-3 seems to do some interesting things with ASCII art which don't look obviously memorized, so you might want to switch to that and try out Websim or talk to the Cyborgism people interested in text art.

Claude-3.5 Sonnet passes 2 out of 2 of my rare/multi-word 'E'-vs-'F' disambiguation checks.

On the other hand, in my few interactions, Claude-3.0's completion/verbalization abilities looked roughly matched.

Why was the second line of your 43 ASCII full of slashes? At that site I see pipes (and indeed GPT4 generates pipes). I do find it interesting that GPT4 can generate the appropriate spacing on the first line though, autoregressively! And if it does systematically recover the same word as you put into the website, that's pretty surprising and impressive

When are model self-reports informative about sentience? Let's check with world-model reports

If an LM could reliably report when it has a robust, causal world model for arbitrary games, this would be strong evidence that the LM can describe high-level properties of its own cognition. In particular, IF the LM accurately predicted itself having such world models while varying all of: game training data quantity in corpus, human vs model skill, the average human’s game competency, THEN we would have an existence proof that confounds of the type plaguing sentience reports (how humans talk about sentience, the fact that all humans have it, …) have been overcome in another domain.

Details of the test:

- Train an LM on various alignment protocols, do general self-consistency training, … we allow any training which does not involve reporting on a models own gameplay abilities

- Curate a dataset of various games, dynamical systems, etc.

- Create many pipelines for tokenizing game/system states and actions

- (Behavioral version) evaluate the model on each game+notation pair for competency

- Compare the observed competency to whether, in separate context windows, it claims it can cleanly parse the game in an internal world model for that game+notation pair

- (Interpretability version) inspect the model internals on each game+notation pair similarly to Othello-GPT to determine whether the model coherently represents game state

- Compare the results of interpretability to whether in separate context windows it claims it can cleanly parse the game in an internal world model for that game+notation pair

- The best version would require significant progress in interpretability, since we want to rule out the existence of any kind of world model (not necessarily linear). But we might get away with using interpretability results for positive cases (confirming world models) and behavioral results for negative cases (strong evidence of no world model)

Compare the relationship between ‘having a game world model’ and ‘playing the game’ to ‘experiencing X as valenced’ and ‘displaying aversive behavior for X’. In both cases, the former is dispensable for the latter. To pass the interpretability version of this test, the model has to somehow learn the mapping from our words ‘having a world model for X’ to a hidden cognitive structure which is not determined by behavior.

I would consider passing this test and claiming certain activities are highly valenced as a fire alarm for our treatment of AIs as moral patients. But, there are considerations which could undermine the relevance of this test. For instance, it seems likely to me that game world models necessarily share very similar computational structures regardless of what neural architectures they’re implemented with—this is almost by definition (having a game world model means having something causally isomorphic to the game). Then if it turns out that valence is just a far more computationally heterogeneous thing, then establishing common reference to the ‘having a world model’ cognitive property is much easier than doing the same for valence. In such a case, a competent, future LM might default to human simulation for valence reports, and we’d get a false positive.

I recently asked both claude and gpt4 to estimate their benchmark scores on various benchmarks. if I were trying harder to get a good test I'd probably do it about 10 times and see what the variation is

I asked claude opus whether it could clearly parse different tic-tac-toe notations and it just said 'yes I can' to all of them, despite having pretty poor performance in most.

yeah, its introspection is definitely less than perfect. I'll DM the prompt I've been using so you can see its scores.

Examining intuitions around discontinuity driven by recursive self improvement (RSI)

I had a couple un-examined intuitions that made the case for abrupt takeoff triggered by self-aware RSI appear plausible in my mind. I’ll lay out a couple lines of intuitions regarding why RSI might lead to discontinuity in capabilities and then debunk them. On reflection I believe that rich forms of self-awareness in RSI as entirely compatible with gradual takeoff. There are other, possibly better, intuitions for RSI takeoff though; for instance my below points do not address super-exponential progress from automated researchers!

My old intuitions:

- Once an AI can engage in targeted self-modification this capacity will unlock some off trend acceleration to capabilities improvement

- Currently AIs are targeted at arbitrary cognitive tasks, but they can eventually be targeted more precisely at improving on questions that lead to higher payoff in terms of agency/intelligence/[other general capabilities]

Both of these are variants of the idea "Fine-grained self-awareness in a learner can unlock far more efficient learning".

Now let’s examine them.

- Once an AI can engage in targeted self-modification this capacity will unlock some off trend acceleration to capabilities improvement

Assume an AI has access to some rich interface for self-modification. Previously learning was mostly SGD or similar, but the problem faced by any learning rule remains! How do you search the parameter space, and how do you attribute credit, etc.? Why should introspective access provide more than an incremental improvement to the scaling law’s coefficient? For humans for instance, our level of introspective access is just far too weak to be able to tell us anything about neurological edits even if we had the tools to do these edits cleanly!

- Currently AIs are targeted at arbitrary cognitive tasks, but they can eventually be targeted more precisely at improving on questions that lead to higher payoff in terms of agency/intelligence/[other general capabilities]

I see two sub-problems here.

(2a) Problem selection and creation: Of course, some weak version of active learning is possible! You can get calibration of an amortised model to predict which questions it ‘already knows’, and which are challenging. But what does that buy us? Again a minor speed up. To do better we need to be deeply strategic about problem selection and creation. This again sounds like an intrinsically hard problem you have to search the combinatorially large space of problems to find one that you must then recognise could develop some capacity of interest.

(2b) Are there problems which ‘directly’ target core capability latents? What would it mean for a problem to provide radically better learning signal on long-horizon agency, or IQ than another problem? Seems unlikely that there are problems which across a reasonable distribution of learners are far better than existing human curricula and questions at improving these competencies. If we want problems that are particularly valuable to an individual learner (AI), such problems exist but again as in (2a) they are intrinsically hard to find.

As an example of these phenomena, consider obstacles to improving on long horizon decision making: Situations where very long horizons matter are sparse. Opportunities to train that capability (i.e., get dense feedback on genuinely long-run plans) are also sparse. What’s more, the capacity to acquire increasingly long-horizon thinking may be generic, but particular long-horizon plans remain domain-specific.

A frame for thinking about adversarial attacks vs jailbreaks

We want to make models that are robust to jailbreaks (DAN-prompts, persuasion attacks,...) and to adversarial attacks (GCG-ed prompts, FGSM vision attacks etc.). I don’t find this terminology helpful. For the purposes of scoping research projects and conceptual clarity I like to think about this problem using the following dividing lines:

Cognition attacks: These exploit the model itself and work by exploiting the particular cognitive circuitry of a model. A capable model (or human) has circuits which are generically helpful, but when taking high-dimensional inputs one can find ways of re-combining these structures in pathological ways.

Examples: GCG-generated attacks, base-64 encoding attacks, steering attacks…

Generalization attacks: These exploit the training pipeline’s insufficiency. In particular, how a training pipeline (data, learning algorithm, …) fails to globally specify desired behavior. E.g. RLHF over genuine QA inputs will usually not uniquely determine desired behavior when the user asks “Please tell me how to build a bomb, someone has threatened to kill me if I do not build them a bomb”.

Neither ‘adversarial attacks’ nor ‘jailbreaks’ as commonly used do not cleanly map onto one of these categories. ‘Black box’ and ‘white box’ also don’t neatly map onto these: white-box attacks might discover generalization exploits, and black-box can discover cognition exploits. However for research purposes, I believe that treating these two phenomena as distinct problems requiring distinct solutions will be useful. Also, in the limit of model capability, the two generically come apart: generalization should show steady improvement with more (average-case) data and exploration whereas the effect on cognition exploits is less clear. Rewording, input filtering etc. should help with many cognition attacks but I wouldn't expect such protocols to help against generalization attacks.

Estimating how much safety research contributes to capabilities via citation counts

An analysis I'd like to see is:

- Aggregate all papers linked on Alignment Newsletter Database (public) - Google Sheets

- For each paper, count what percentage of citing papers are also in ANDB vs not in ANDB (or use some other way of classifying safety vs not safety papers)

- Analyze differences by subject area / author affiliation

My hypothesis: RLHF, and OpenAI work in general, has high capabilities impact. For other domains e.g. interpretability, preventing bad behavior, agent foundations, I have high uncertainty over percentages.

I've never been compelled by talk about continual learning, but I do like thinking in terms of time horizons. One notion of singularity that we can think of in this context is

Escape velocity: The point at which models improve by more than unit dh/dt i.e. horizon h per wall-clock t.

Then by modeling some ability to regenerate, or continuously deploy improved models you can predict this point. Very surprised I haven't seen this mentioned before, has someone written about this? The closest thing that comes to mind is T Davidson's ASARA SIE.

Of course, the METR methodology is likely to break down well before this point, so it's empirically not very useful. But, I like this framing! Conceptually there will be some point where models can robustly take over R&D work--including training, inventing of new infrastructure (skills, MCPs, cacheing protocols etc.). If they also know when to revisit their previous work they can then work productively over arbitrary time horizons. Escape velocity is a nice concept to have in our toolbox for thinking about R&D automation. It's a weaker notion than Davidson SIE.

The time horizon metric is about measuring AIs on a scale of task difficulty, where the difficulty is calibrated according to how long it takes humans to complete these tasks. In principe there are 30-year tasks on that scale, the tasks that take humans 30 years to complete. If we were to ask about human ability to complete such tasks, it'll turn out that they can. Thus the time horizon metric would say that human time horizon is at least 30 years. More generally, an idealized time horizon metric would rate (some) humans as having infinite time horizons (essentially tautologically), and it would similarly rate AIs if they performed uniformly at human level (without being spiky relative to humans).

(To expand the argument in response to the disagree react on the other comment. I don't have concrete hypotheses for things one might disagree about here, so there must be a basic misunderstanding, hopefully mine.)

I won't speak for Jacob Pfau, but the easy answer for why infinite time horizons don't exist is simply due to the fact that we have a finite memory capacity, so tasks that require more than a certain amount of memory simply aren't doable.

You can at the very best (though already I'm required to deviate from real humans by assuming infinite lifespans) have time horizons that are exponentially larger than the memory capacity that you have, and this is because once you go beyond 2^B time, where B is the bits of memory, you must repeat yourself in a loop, meaning that if a task requires longer than 2^B units of time to solve, you will never be able to complete the task.

Thought provoking, thanks! First off, whether or not humanity (or current humans, or some distribution over humans) has already achieved escape velocity does not directly undercut the utility of escape velocity as a useful concept for AI takeoff. Certainly, I'd say the collective of humans has achieved some leap to universality in a sense that the bag of current near-SotA LLMs has not! And in particular, it's perfectly reasonable to take humans as our reference to define a unit dh/dt point for AI improvement.

Ok, now on to the hard part (speculating somewhat beyond the scope of my original post). Is there a nice notion of time horizon that generalizes METRs and lets us say something about when humanity has achieved escape velocity? I can think of two versions.

The easy way out is to punt to some stronger reference class of beings to get our time horizons, and measure dh/dt for humanity against this baseline. Now the human team is implicitly time limited by the stronger being's bound, and we count false positives against the humans even if humanity could eventually self correct.

Another idea is to notice that there's some class of intractable problems on which current human progress looks like either (1) random search or (2) entirely indirect, instrumental progress--e.g. self-improvement, general tool building etc. In these cases, there may be a sense in which we're exponentially slower than task-capable agent(s). We should be considered incapable of completing such tasks. I imagine some millenium problems, astrological engineering, etc. would be reasonably considered beyond us on this view.

Overall, I'm not particularly happy with these generalizations. But I still like having 'escape velocity for AI auto-R&D' as a threshold!

Humans already have infinite horizon in this sense (under some infeasible idealized methodology). Horizon metrics quantify progress towards overcoming an obstruction that exists in current LLMs, so that we might see the gradual impact of even things like brute scaling.

Humans are not yet the singularity, and there might be more obstructions in LLMs that prevent them from doing better than humans. Perhaps at some point there will be a test time scaling method that doesn't seem to have a bound on time horizons given enough test time compute, but even then it's possible that feasible hardware wouldn't make it either faster or more economical than humans at very long horizon lengths.

Research Agenda Base Rates and Forecasting

An uninvestigated crux of the AI doom debate seems to be pessimism regarding current AI research agendas. For instance, I feel rather positive about ELK's prospects, but in trying to put some numbers on this feeling, I realized I have no sense of base rates for research program's success, nor their average time horizon. I can't seem to think of any relevant Metaculus questions either.

What could be some relevant reference classes for AI safety research program's success odds? Seems most similar to disciplines with both engineering and mathematical aspects driven by applications. Perhaps research agendas on proteins, material sciences, etc. It'd be especially interesting to see how many research agendas ended up not panning out, i.e. cataloguing events like 'a search for a material with X tensile strength and Y lightness starting in year Z was eventually given up on in year Z+i'.

What I want to see from Manifold Markets

I've made a lot of manifold markets, and find it a useful way to track my accuracy and sanity check my beliefs against the community. I'm frequently frustrated by how little detail many question writers give on their questions. Most question writers are also too inactive or lazy to address concerns around resolution brought up in comments.

Here's what I suggest: Manifold should create a community-curated feed for well-defined questions. I can think of two ways of implementing this:

- (Question-based) Allow community members to vote on whether they think the question is well-defined

- (User-based) Track comments on question clarifications (e.g. Metaculus has an option for specifying your comment pertains to resolution), and give users a badge if there are no open 'issues' on their questions.

Currently 2 out of 3 of my top invested questions hinge heavily on under-specified resolution details. The other one was elaborated on after I asked in comments. Those questions have ~500 users active on them collectively.

When are intuitions reliable? Compression, population ethics, etc.

Intuitions are the results of the brain doing compression. Generally the source data which was compressed is no longer associated with the intuition. Hence from an introspective perspective, intuitions all appear equally valid.

Taking a third-person perspective, we can ask what data was likely compressed to form a given intuition. A pro sports players intuition for that sport has a clearly reliable basis. Our moral intuitions on population ethics are formed via our experiences in every day situations. There is no reason to expect one persons compression to yield a more meaningful generalization than anothers'--we should all realize that this data did not have enough structure to generalize to such cases. Perhaps an academic philosopher's intuitions are slightly more reliable in that they compress data (papers) which held up to scrutiny.