My first take is to bet against this being true, as Emmett Shear said the board's reasoning had nothing to do with a specific safety issue, and the reporters could not get confirmation from any of the people directly involved.

At the same time, there are claims that Emmett couldn't get written documentation of the board's reasoning, so if those claims are true, then "nothing to do with a specific safety issue" might just be a claim from the board by proxy.

I thought that the claim was that the board refused to give Emmett a reason in writing. Do we have confirmation that they didn't give him a reason at all? I'm going off this article, which says:

New CEO Emmett Shear has so far been unable to get written documentation of the board’s detailed reasoning for firing Altman, which also hasn’t been shared with the company’s investors, according to people familiar with the situation.

Emphasis mine. I'm reading this as being "the most outrageous sounding description of the situation it is possible to make without saying anything that is literally false".

Yep, thanks, corrected. (I think that still could possibly imply the consequent, but it's a much weaker connection.)

The Verge: "board never received a letter about such a breakthrough"

Reading that page, The Verge's claim seems to all hinge on this part:

OpenAI spokesperson Lindsey Held Bolton refuted that notion in a statement shared with The Verge: “Mira told employees what the media reports were about but she did not comment on the accuracy of the information."

They are saying that Bolton "refuted" the notion about such a letter, but the quote from her that follows doesn't actually sound like a refutation. Hence the Verge piece seems confusing/misleading, and I haven't yet seen any credible denial from the board about receiving such a letter.

Further evidence: the OA official announcement from Altman today about returning to the status quo ante bellum and Toner's official resignation tweets all make no mention or hints of Q* (in addition to the complete radio silence about Q* since the original Reuters report). Toner's tweet, in particular:

To be clear: our decision was about the board's ability to effectively supervise the company, which was our role and responsibility. Though there has been speculation, we were not motivated by a desire to slow down OpenAI’s work.

See also https://twitter.com/sama/status/1730032994474475554 https://twitter.com/sama/status/1730033079975366839 and the below Verge article where again, the blame is all placed on governance & 'communication breakdown' and the planned independent investigation is appealed to repeatedly.

EDIT: Altman evaded comment on Q*, but did not deny its existence and mostly talked about how progress would surely continue. So I read this as evidence that something roughly like Q* may exist and they are optimistic about its long-term prospects, but there are no massive short-term implications, and it played a minimal role in recent events - surely far less than the extraordinary level of heavy breathing online.

Atlantic (see also) continues to reinforce this assessment: real, but not important to the drama.

An OpenAI spokesperson didn’t comment on Q* but told me that the researchers’ concerns did not precipitate the board’s actions. Two people familiar with the project, who asked to remain anonymous for fear of repercussions, confirmed to me that OpenAI has indeed been working on the algorithm and has applied it to math problems. But contrary to the worries of some of their colleagues, they expressed skepticism that this could have been considered a breakthrough awesome enough to provoke existential dread...The OpenAI spokesperson would only say that the company is always doing research and working on new ideas.

Can you add a "Reuters reports" qualifier to the title? As is, this is speculation presented at least in the title as fact.

Conditional on there actually being a model named Q* (and one named Zero, not mentioned in the article), I wrote some thoughts on what this could mean. The letter might not have existed, but that doesn't mean the models don't exist.

Regarding Q* (and Zero, the other OpenAI AI model you didn't know about)

Let's play word association with Q*:

From Reuters article:

The maker of ChatGPT had made progress on Q* (pronounced Q-Star), which some internally believe could be a breakthrough in the startup's search for superintelligence, also known as artificial general intelligence (AGI), one of the people told Reuters. OpenAI defines AGI as AI systems that are smarter than humans. Given vast computing resources, the new model was able to solve certain mathematical problems, the person said on condition of anonymity because they were not authorized to speak on behalf of the company. Though only performing math on the level of grade-school students, acing such tests made researchers very optimistic about Q*’s future success, the source said.

Q -> Q-learning: Q-learning is a model-free reinforcement learning algorithm that learns an action-value function (called the Q-function) to estimate the long-term reward of taking a given action in a particular state.
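For readers who haven't seen it, here is a minimal sketch of tabular Q-learning. It says nothing about what OpenAI's Q* actually is; the toy corridor environment, the function names, and all hyperparameters are my own assumptions, picked only to show the action-value update.

```python
import random

def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical corridor.

    States are 0..n_states-1. Action 0 steps left, action 1 steps right.
    Reaching the rightmost state gives reward 1 and ends the episode.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy action choice, breaking ties at random.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                best = max(q[s])
                a = rng.choice([i for i in range(2) if q[s][i] == best])
            step = 1 if a == 1 else -1
            s2 = min(max(s + step, 0), goal)
            r = 1.0 if s2 == goal else 0.0
            # Model-free update: move Q(s, a) toward r + gamma * max_a' Q(s', a').
            target = r if s2 == goal else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
    return q

def greedy_policy(q):
    """Best action index per state according to the learned Q-table."""
    return [max(range(2), key=lambda i: row[i]) for row in q]

if __name__ == "__main__":
    table = q_learning()
    print(greedy_policy(table)[:-1])  # policy for the non-terminal states
```

The point is that the agent never sees a model of the corridor: it only observes transitions and rewards, and the Q-table ends up preferring "right" in every non-terminal state.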

* -> AlphaSTAR: DeepMind trained AlphaStar years ago, which was an AI agent that defeated professional StarCraft players.

They also used a multi-agent setup where they trained a separate agent for each race (Protoss, Terran, and Zerg) rather than trying to master all of them at once.

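For their RL algorithm, as far as I can tell, DeepMind used an off-policy actor-critic method (with corrections like V-trace and UPGO) inside a multi-agent league, adapted for complex scenarios like StarCraft, rather than plain PPO or D4PG.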

Now, I'm hearing that there's another model too: Zero.

Well, if that's the case:

1) Q* -> Q-learning + AlphaStar

2) Zero -> AlphaZero + ??

The key difference between AlphaStar and AlphaZero is that AlphaZero uses MCTS while AlphaStar primarily relies on neural networks to understand and interact with the complex environment.

MCTS is expensive to run.

The Monte Carlo tree search (MCTS) algorithm looks ahead at possible futures and evaluates the best move to make. This made AlphaZero's gameplay more precise.
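To make "looks ahead at possible futures" concrete, here is a rough sketch of plain MCTS (the UCT variant with random rollouts). This is not AlphaZero's search, which guides the tree with a neural network instead of random playouts. The toy game (a pile of stones, take 1 to 3 per turn, whoever takes the last stone wins) and every name below are assumptions for illustration only.

```python
import math
import random

def legal_moves(pile):
    return [m for m in (1, 2, 3) if m <= pile]

class Node:
    def __init__(self, pile, parent=None, move=None):
        self.pile = pile            # stones left for the player to move
        self.parent = parent
        self.move = move            # move that led to this node
        self.children = []
        self.untried = legal_moves(pile)
        self.visits = 0
        self.wins = 0.0             # wins for the player who made `move`

def rollout(pile, rng):
    """Random playout; True if the player to move at `pile` ends up winning."""
    turn = 0
    while pile > 0:
        pile -= rng.choice(legal_moves(pile))
        if pile == 0:
            return turn == 0        # whoever took the last stone wins
        turn ^= 1
    return False                    # pile already empty: the previous mover won

def mcts(root_pile, iterations=1000, c=1.4, seed=0):
    rng = random.Random(seed)
    root = Node(root_pile)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend by UCB1 while the node is fully expanded.
        while not node.untried and node.children:
            n = node.visits
            node = max(node.children,
                       key=lambda ch: ch.wins / ch.visits
                       + c * math.sqrt(math.log(n) / ch.visits))
        # 2. Expansion: add one untried move as a new child.
        if node.untried:
            move = node.untried.pop()
            child = Node(node.pile - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: random playout from the new node.
        mover_wins = not rollout(node.pile, rng)
        # 4. Backpropagation: flip the winner's perspective at each level.
        while node is not None:
            node.visits += 1
            node.wins += 1.0 if mover_wins else 0.0
            mover_wins = not mover_wins
            node = node.parent
    # Recommend the most-visited move at the root.
    return max(root.children, key=lambda ch: ch.visits).move

if __name__ == "__main__":
    print(mcts(3))  # taking all 3 stones wins immediately
```

Each iteration costs a full simulated game, which is why MCTS is expensive to run: the quality of the move comes from spending compute on many simulated futures at decision time.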

So:

Q-learning is strong in learning optimal actions through trial and error, adapting to environments where a predictive model is not available or is too complex.

MCTS, on the other hand, excels in planning and decision-making by simulating possible futures. By integrating these methods, an AI system can learn from its environment while also being able to anticipate and strategize about future states.

One of the holy grails of AGI is the ability of a system to adapt to a wide range of environments and generalize from one situation to another. The adaptive nature of Q-learning combined with the predictive and strategic capabilities of MCTS could push an AI system closer to this goal. It could allow an AI to not only learn effectively from its environment but also to anticipate future scenarios and adapt its strategies accordingly.

Conclusion: I have no idea if this is what the Q* or Zero codenames are pointing to, but if we play along, it could be that Zero is using some form of Q-learning in addition to Monte-Carlo tree search to help with decision-making and Q* is doing a similar thing, but without MCTS. Or, I could be way off-track.

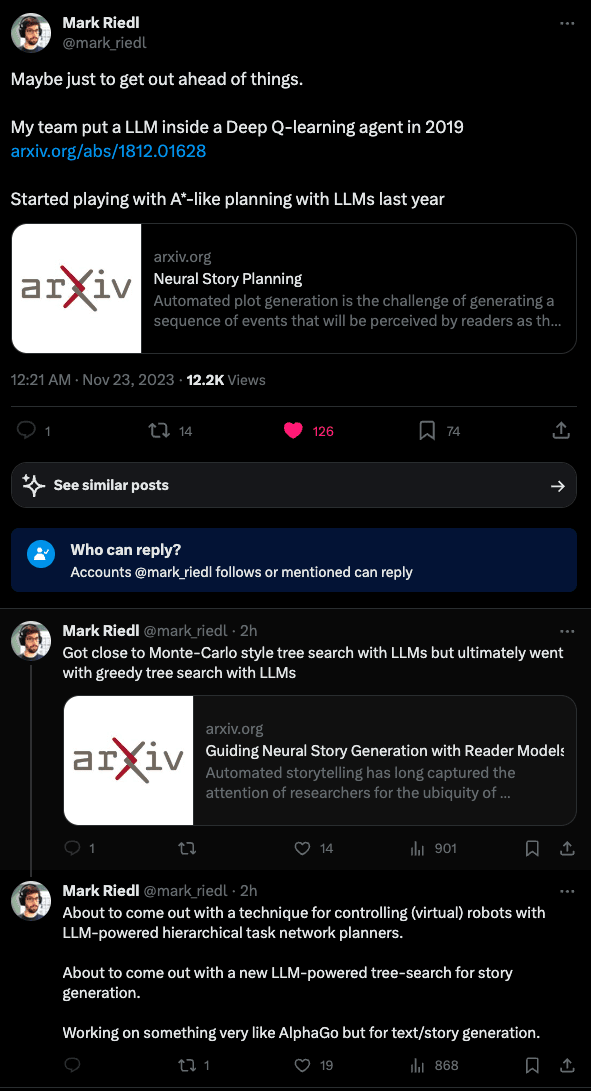

Potentially related (the * might be coming from A*, the pathfinding and graph traversal algo):