I feel deconfused when I reject utility functions, in favor of values being embedded in heuristics and/or subagents.

"Humans don't have utility functions" and the idea of values of being embedded in heuristics and/or subagents have been discussed since the beginning of LW, but usually framed as a problem, not a solution. The key issue here is that utility functions are the only reflectively stable carrier of value that we know of, meaning that an agent with a utility function would want to preserve that utility function and build more agents with the same utility function, but we don't know what agents with other kinds of value will want to do in the long run. I don't understand why we no longer seem to care about reflective stability...

Granted, I haven't read all of the literature on shard theory, so maybe I've missed something. Can you perhaps summarize the argument for this?

I agree that reflectivity for learned systems is a major open question, and my current project is to study the reflectivity and self-modification related behaviors of current language models.

I don't think that the utility function framework helps much. I do agree that utility functions seem poorly suited to capturing human values. However, my reaction to that is to be more skeptical of utility functions. Also, I don't think the true solution to questions of reflectivity is to reach some perfected fixed point, after which your values remain static for all time. That doesn't seem human, and I'm in favor of 'cloning the human prior' as much as possible. It seems like a very bad idea to deliberately set out to create an agent whose mechanism of forming and holding values is completely different from our own.

I also think utility functions are poorly suited to capturing the behaviors and values of current AI systems, which I take as another downwards update on the applicability of utility functions to capture such things in powerful cognitive systems.

We can take a deep model and derive mathematical objects with properties that are nominally very close to utility functions. E.g., for transformers, it will probably soon be possible to find a reasonably concise energy function (probably of a similar OOM of complexity as the model weights themselves) whose minimization corresponds to executing forwards passes of the transformer. However, this energy function wouldn't tell you much about the values or objectives of the model, since the energy function is expressed in the ontology of model weights and activations, not an agent's beliefs / goals.
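As a toy illustration of the shape of this idea (a sketch only; the transformer construction the comment predicts would be far larger and is not shown here): for a single ReLU layer, the forward pass y = max(Wx, 0) is exactly the minimizer of the energy E(y) = ½‖y − Wx‖² over nonnegative activations y. The function names, the example weights, and the projected-gradient solver are illustrative assumptions. Note that the energy is written entirely in terms of weights, inputs and activations; nothing in it names a belief or a goal.

```python
# Toy sketch: a one-layer ReLU forward pass recovered as energy minimization.
# All names and numbers here are hypothetical, chosen for illustration only.

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

def forward(W, x):
    """Ordinary forward pass: y = ReLU(W x)."""
    return [max(z, 0.0) for z in matvec(W, x)]

def energy(y, W, x):
    """E(y) = 0.5 * ||y - W x||^2, considered over activations y >= 0."""
    return 0.5 * sum((y_i - z_i) ** 2 for y_i, z_i in zip(y, matvec(W, x)))

def minimize_energy(W, x, steps=300, lr=0.1):
    """Projected gradient descent on E, keeping y in the nonnegative orthant."""
    z = matvec(W, x)
    y = [0.0] * len(z)
    for _ in range(steps):
        # dE/dy_i = y_i - z_i: step downhill, then project back onto y_i >= 0.
        y = [max(y_i - lr * (y_i - z_i), 0.0) for y_i, z_i in zip(y, z)]
    return y

W = [[1.0, -2.0], [0.5, 0.5], [-1.0, 0.0]]
x = [1.0, 0.25]
print(forward(W, x))          # [0.5, 0.625, 0.0]
print(minimize_energy(W, x))  # numerically the same activations
```

The minimizer reproduces the computation, but reading the energy tells you no more about "what the network wants" than reading the forward pass does.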

I think this may be the closest we'll get to static, universally optimized-for utility functions of realistic cognitive architectures. (And they're not even really static because mixing in any degree of online learning would cause the energy function in question to constantly change over time.)

I agree that reflectivity for learned systems is a major open question, and my current project is to study the reflectivity and self-modification related behaviors of current language models.

Interesting. I'm curious what kinds of results you're hoping for, or just more details about your project. (But feel free to ignore this if talking about it now isn't a good use of your time.) My understanding is that LLMs can potentially be fine-tuned or used to do various things, including instantiating various human-like or artificial characters (such as a "helpful AI assistant"). Seems like reflectivity and self-modification could vary greatly depending on what character(s) you instantiate, or how you use the LLMs in other ways.

Also, I don’t think the true solution to questions of reflectivity is to reach some perfected fixed point, after which your values remain static for all time.

I'm definitely not in favor of building an AI with a utility function representing fixed, static values, at least not in anything like our current circumstances. (My own preferred approach is what I called "White-Box Metaphilosophical AI" in the linked post.) I was just taken aback by Peter saying that he felt "deconfused when I reject utility functions", when there was a reason that people were/are thinking about utility functions in relation to AI x-safety, and the new approach he likes hasn't addressed that reason yet.

I don't see any clear-cut disagreement between my position and your White-Box Metaphilosophical AI. I wonder how much is just a framing difference?

Reflective stability seems like something that can be left to a smarter-than-human aligned AI.

I'm not saying it would be bad to implement a utility function in an AGI. I'm mostly saying that aiming for that makes human values look complex and hard to observe.

E.g. it leads people to versions of the diamond alignment problem that sound simple, but which cause people to worry about hard problems which they mistakenly imagine are on a path to implementing human values.

Whereas shard theory seems aimed at a model of human values that's both accurate and conceptually simple.

Whereas shard theory seems aimed at a model of human values that’s both accurate and conceptually simple.

Let's distinguish between shard theory as a model of human values, versus implementing an AI that learns its own values in a shard-based way. The former seems fine to me (pending further research on how well the model actually fits), but the latter worries me in part because it's not reflectively stable and the proponents haven't talked about how they plan to ensure that things will go well in the long run. If you're talking about the former and I'm talking about the latter, then we might have been talking past each other. But I think the shard-theory proponents are proposing to do the latter (correct me if I'm wrong), so it seems important to consider that in any overall evaluation of shard theory?

BTW here are two other reasons for my worries. Again these may have already been addressed somewhere and I just missed it.

- The AI will learn its own shard-based values which may differ greatly from human values. Even different humans learn different values depending on genes and environment, and the AI's "genes" and "environment" will probably lie far outside the human distribution. How do we figure out what values we want the AI to learn, and how to make sure the AI learns those values? These seem like very hard research questions.

- Humans are all partly or even mostly selfish, but we don't want the AI to be. What's the plan here, or reason to think that shard-based agents can be trained to not be selfish?

I think of shard theory as more than just a way to model humans.

My main point here is that human values will be represented in AIs in a form that looks a good deal more like the shard theory model than like a utility function.

Approaches that involve utility functions seem likely to make alignment harder, via adding an extra step (translating a utility function into shard form) and/or by confusing people about how to recognize human values.

I'm unclear whether shard theory tells us much about how to cause AIs to have the values we want them to have.

Also, I'm not talking much about the long run. I expect that problems with reflective stability will be handled by entities that have more knowledge and intelligence than we have.

Re shard theory: I think it's plausibly useful, and may be part of an alignment plan. But I'm quite a bit more negative than you or TurnTrout on that plan, and I'd probably guess that Shard Theory ultimately doesn't impact alignment that much.

I feel like reflective stability is what caused me to reject utility. Specifically, it seems like it is impossible to be reflectively stable if I am the kind of mind that would follow the style of argument given for the independence axiom. It seems like there is a conflict between reflective stability and Bayesian updating.

I am choosing reflective stability, in spite of the fact that losing updating is making things very messy and confusing (especially in the logical setting), because reflective stability is that important.

When I lose updating, the independence axiom, and thus utility goes along with it.

Although I note that my flavor of rejecting utility functions is trying to replace them with something more general, not something incompatible.

UDT still has utility functions, even though it doesn't have independence... Is it just a terminological issue? Like you want to call the representation of value in whatever the correct decision theory turns out to be something besides "utility"? If so, why?

UDT still has utility functions

But where does UDT get those utility functions from, why does it care about expected utility specifically and not arbitrary preference over policies? Utility functions seem to centrally originate from updateful agents, which take many actions in many hypothetical situations, coherent with each other, forcing preference to be describable as expected utility. Such agents can then become reflectively stable by turning to UDT, now only ever taking a single decision about policy, in the single situation of total ignorance, with nothing else for it to be coherent with.

So by becoming updateless, a UDT agent loses contact with the origin of (motivation for) its own utility function. To keep it, it would still implicitly need an updateful point of view, with its many situations that constitute the affordance for acting coherently, to motivate its preference to have the specific form of expected utility. Otherwise it only has the one situation, and its preference and policy could be anything, with no opportunity to be constrained by coherence.

I think UDT as you specified it has utility functions. What do you mean by doesn't have independence? I am advocating for an updateless agent model that might strictly prefer a mixture between outcomes A and B to either A or B deterministically. I think an agent model with this property should not be described as having a "utility." Maybe I am conflating "utility" with expected utility maximization/VNM and you are meaning something more general?
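A short sketch of why strictly preferring a mixture rules out expected utility maximization: an EU maximizer values the lottery "A with probability p, otherwise B" at p·u(A) + (1 − p)·u(B), which is linear in p and so never exceeds max(u(A), u(B)). The utilities below are arbitrary placeholders, and the "non-EU" valuation at the end is a hypothetical example of a mixture-loving preference, not a model proposed in this thread.

```python
from fractions import Fraction  # exact arithmetic avoids float rounding artifacts

def expected_utility(p, u_a, u_b):
    """EUM value of the lottery 'A with probability p, otherwise B'."""
    return p * u_a + (1 - p) * u_b

def eu_prefers_mixture(p, u_a, u_b):
    """True if an EU maximizer strictly prefers the p-mixture to both A and B."""
    mix = expected_utility(p, u_a, u_b)
    return mix > u_a and mix > u_b

# Scan placeholder utilities and mixing weights. This is always False: a convex
# combination of two numbers never exceeds the larger of them.
grid = [Fraction(i, 10) for i in range(11)]
utils = [-3, 0, 1, 5]
found = any(eu_prefers_mixture(p, ua, ub) for p in grid for ua in utils for ub in utils)
print(found)  # False

def hypothetical_non_eu_value(p, u_a, u_b, k=1):
    """Illustrative only: EU plus a bonus k * p * (1 - p) for actually randomizing."""
    return expected_utility(p, u_a, u_b) + k * p * (1 - p)

# Indifferent between A and B (both worth 1), yet the 50/50 mixture scores higher.
print(hypothetical_non_eu_value(Fraction(1, 2), 1, 1))  # 5/4
```

Any preference shaped like the second function violates independence, which is why no choice of utility numbers lets an EU maximizer reproduce it.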

If you mean by utility something more general than utility as used in EUM, then I think it is mostly a terminological issue.

I think I endorse the word "utility" without any qualifiers as referring to EUM. In part because I think that is how it is used, and in part because EUM is nice enough to deserve the word utility.

Even if EUM doesn't get "utility", I think it at least gets "utility function", since "function" implies cardinal utility rather than ordinal utility and I think people almost always mean EUM when talking about cardinal utility.

I personally care about cardinal utility, where the magnitude of the utility is information about how to aggregate rather than information about how to take lotteries, but I think this is a very small minority usage of cardinal utility, so I don't think it should change the naming convention very much.
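One way to see the distinction, sketched under the assumption that "aggregate" means a plain utilitarian sum (the people, outcomes and numbers are hypothetical): a positive affine rescaling of one person's utility never changes which lottery that person picks under EUM, but it can flip which option maximizes the sum. So magnitudes carry information for aggregation that lottery choices alone do not pin down.

```python
from fractions import Fraction

def eu(lottery, u):
    """Expected utility of a lottery given as {outcome: probability}."""
    return sum(p * u[o] for o, p in lottery.items())

def best_lottery(lotteries, u):
    """The lottery an EU maximizer with utility u would choose."""
    return max(lotteries, key=lambda name: eu(lotteries[name], u))

def best_social_option(options, utilities):
    """The option maximizing the plain sum of everyone's utilities (assumed rule)."""
    return max(options, key=lambda o: sum(u[o] for u in utilities))

alice = {"x": 0, "y": 10}
bob = {"x": 4, "y": 0}
# Positive affine rescaling of Alice: same preferences over lotteries, new magnitudes.
alice_rescaled = {o: Fraction(1, 5) * v + 1 for o, v in alice.items()}

lotteries = {
    "safe": {"x": Fraction(1)},
    "gamble": {"x": Fraction(1, 2), "y": Fraction(1, 2)},
}

# Alice's own lottery choice is unchanged by the rescaling...
print(best_lottery(lotteries, alice), best_lottery(lotteries, alice_rescaled))
# ...but the aggregate choice flips from "y" to "x".
print(best_social_option(["x", "y"], [alice, bob]),
      best_social_option(["x", "y"], [alice_rescaled, bob]))
```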

Some people on LW have claimed that reflective stability is essential. My impression is that Robin Hanson always rejected that.

This seems like an important clash of intuitions. Eliezer seems to claim that his utility function required it, while Robin denies that it is a normal part of human values. I suspect this disagreement stems from some important disagreement about human values.

My position seems closer to Robin's than to Eliezer's. I want my values to become increasingly stable. I consider it ok for my values to change moderately as I get closer to creating a CEV. My desire for stability isn't sufficiently strong compared to other values that I need guarantees about it.

The key issue here is that utility functions are the only reflectively stable carrier of value that we know of,

That's not necessarily a good thing. One man's reflective stability is another man's incorrigibility.

This point seems anti-memetic for some reason. See my 4 karma answer here, on a post with 99 karma.

I've changed my mind about AIs developing a self-concept. I see little value in worrying about whether an AI has a self concept.

I had been imagining that an AI would avoid recursive self-improvement if it had no special interest in the source code / weights / machines that correspond to it.

I now think that makes little difference. Suppose the AI doesn't care whether it's improving its source code or creating a new tool starting from some other code base. As long as the result improves on its source code, we get roughly the same risks and benefits as with recursive self-improvement.

I currently estimate a 7% chance AI will kill us all this century.

Does this take into account the transitive AI risk, that it's AGIs developed by AGIs developed by AGIs... that kill us? It's not just the AGIs we build that need to be safe, but also the AGIs they build, and the AGIs that those AGIs build, and so on. Most classical AI risk arguments seem to characterize what might go wrong with those indirectly developed AGIs, even if they don't directly apply to the more likely first AGIs like LLM human imitations.
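A minimal way to make the transitive worry quantitative, under the strong and purely illustrative assumption that each successive generation of AGI-built AGIs is independently unsafe with the same probability p (the values of p and n below are hypothetical, not estimates from this thread):

```latex
P(\text{every one of } n \text{ generations is safe}) = (1 - p)^{n},
\qquad
p = 0.02,\; n = 10 \;\Rightarrow\; (0.98)^{10} \approx 0.82
```

Real risks across generations are unlikely to be independent or constant; the sketch only shows how a per-generation risk that looks small can compound.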

Hmm,

Leading AI labs do not seem to be on a course toward a clear-cut arms race.

but

Also, it looks like the Eliezer-style doomsdayists may have to update on the ChatGPT having turned out to be an obsequious hallucination-prone generalist, rather than... whatever they expected the next step to be.

I think this is perhaps better evidence for race dynamics:

“The race starts today, and we’re going to move and move fast,” Nadella said.

Also, it looks like the Eliezer-style doomsdayists may have to update on the ChatGPT having turned out to be an obsequious hallucination-prone generalist, rather than... whatever they expected the next step to be.

I don't recall Eliezer (or anyone worth taking seriously with similar views) expressing confidence that whatever came after the previous generation of GPT would be anything in particular (except "probably not AGI"). Curious if you have a source for something like this.

It's hard to respect Eliezer's claims there, given that ChatGPT pretty obviously is a hallucination-prone AGI, not a non-AGI. I'm not sure how to argue this; it can do everything humans can do within its trained modality of text, badly. "Foom" seems to be a slow process of fixing spaghetti code. It seems like to him, AGI always meant the "and also it's ASI" threshold, whereas as far as I'm concerned, all transformers are AGI, because the algorithm can learn to do anything (but less efficiently than humans, so far).

it can do everything humans can do within its trained modality of text, badly

I don't know what you mean by "AGI" here. The sorts of things I expect baby AGIs to be able to do are e.g. "open-heart surgery in realistic hospital environments". I don't think that AGI is solving all of the kinds of cognitive problems you need in order to model a physical universe and strategically steer that universe toward outcomes (though it's solving some!).

What's an example of a specific capability you could have discovered ChatGPT lacks, that would have convinced you it's "not an AGI" rather than "an incredibly stupid AGI"?

Transformers will be able to do open-heart surgery in realistic environments (but, unless trained as carefully as a human, badly) if trained in those environments, but I doubt anyone would certify them to do so safely and reliably for a while after they can do it. ChatGPT didn't see anything but text, but displays human-level generality while being below human-level IQ at the moment.

What's an example of a specific capability you could have discovered ChatGPT lacks, that would have convinced you it's "not an AGI" rather than "an incredibly stupid AGI"?

If it had had very significant trouble with a specific type of reasoning over its input, despite that domain's presence in its training data. For an example of this, compare the previous long-running SOTA, LSTMs: LSTMs have very serious trouble with recursive languages even when scaled significantly. They are also "sorta AGI", but critically lack capabilities that transformers have. LSTMs cannot handle complex recursive languages; while transformers still have issues with them and demonstrate IQ limitations as a result, they're able to handle more or less the full set of languages that humans can. That includes motor control, if run as a normalizing flow; however, motor control is quite hard, and is an area where current transformers are still being scaled carefully rather than through full-breadth pretraining.
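For readers unfamiliar with the "recursive languages" probes: a common family (assumed here to be the kind of task meant) is Dyck-n, strings of correctly nested brackets of n types. Recognizing them requires memory that grows with nesting depth, which is the capacity the comment says LSTMs struggle with. A reference recognizer is just a stack machine; the function names are illustrative.

```python
PAIRS = {")": "(", "]": "[", "}": "{"}

def is_dyck(s):
    """Return True if s is a correctly nested string over (), [] and {}."""
    stack = []
    for ch in s:
        if ch in "([{":
            stack.append(ch)          # open a new nesting level
        elif ch in PAIRS:
            if not stack or stack.pop() != PAIRS[ch]:
                return False          # mismatched or unopened closer
        else:
            raise ValueError(f"unexpected symbol {ch!r}")
    return not stack                  # every opener must be closed

def max_depth(s):
    """Deepest nesting level in a well-formed string: what a model must track."""
    depth = best = 0
    for ch in s:
        depth += 1 if ch in "([{" else -1
        best = max(best, depth)
    return best

print(is_dyck("([]{()})"), is_dyck("([)]"), max_depth("(((())))"))
```

A learned model has no explicit stack, so whether it generalizes to deeper nesting than it saw in training is an empirical question, which is what these benchmarks test.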

[edit to clarify: specifically, it would have needed to appear to not be a Universal Learning Machine]

Of course, ChatGPT isn't very good at math. But that isn't transformers' fault overall: math requires very high-quality training data and a lot of practice problems. ChatGPT has only just started to be trained on math practice problems, and it's unclear exactly how well it's going to generalize. The new version with more math training does seem more coherent in math domains, but only by a small margin. It really needs to be an early part of the training process for the model to learn high-quality math skill.

https://www.lesswrong.com/posts/ozojWweCsa3o32RLZ/list-of-links-formal-methods-embedded-agency-3d-world-models Again, if your metric is "can it do robotics well enough to do open-heart surgery", you should be anticipating sudden dramatic progress once the existing scaling results get combined with work on making 3D models and causally valid models.

Though, it's pretty unreasonable to only call it agi if a baby version of it can do open heart surgery. No baby version of anything ever will be able to do open heart surgery, no matter how strong the algorithm is. That just requires too much knowledge. It'd have to cheat and simply download non-baby models.

I don't recall Eliezer (or anyone worth taking seriously with similar views) expressing confidence that whatever came after the previous generation of GPT would be anything in particular (except "probably not AGI"). Curious if you have a source for something like this.

Not claiming that; just, from my impressions, I thought their model was more like AlphaZero, with clear utility-function-style agency? Idk. Eliezer also talked about trusting an inscrutable matrix of floating point numbers, but the emerging patterns, like grokking and interpretability, seem unexpectedly close to how humans think.

Tentative GPT-4 summary. This is part of an experiment.

Up/Downvote "Overall" if the summary is useful/harmful.

Up/Downvote "Agreement" if the summary is correct/wrong.

If you downvote it as harmful, please let me know why you think this is harmful.

(OpenAI doesn't use customers' data anymore for training, and this API account previously opted out of data retention)

TLDR: This article reviews the author's learnings on AI alignment over the past year, covering topics such as Shard Theory, "Do What I Mean," interpretability, takeoff speeds, self-concept, social influences, and trends in capabilities. The author is cautiously optimistic but uncomfortable with the pace of AGI development.

Arguments:

1. Shard Theory: Humans have context-sensitive heuristics rather than utility functions, which could apply to AIs as well. Terminal values seem elusive and confusing.

2. "Do What I Mean": GPT-3 gives hope for AIs to understand human values, but making them obey specific commands remains difficult.

3. Interpretability: More progress is being made than expected, with the potential for transparent neural nets to generate expert consensus on AI safety.

4. Takeoff speeds: Evidence against "foom" suggests that intelligence is compute-intensive and AI self-improvement slows down as it reaches human levels.

5. Self-concept: AGIs may develop self-concepts, but designing agents without self-concepts may be possible and valuable.

6. Social influences: Leading AI labs don't seem to be in an arms race, but geopolitical tensions might cause a race between the West and China for AGI development.

7. Trends in capabilities: Publicly known AI replication of human cognition is increasing, but advances are becoming less quantifiable and more focused on breadth.

Takeaways:

1. Abandoning utility functions in favor of context-sensitive heuristics could lead to better AI alignment.

2. Transparency in neural nets could be essential for determining AI safety.

3. Addressing self-concept development in AGIs could be pivotal.

Strengths:

1. The article provides good coverage of various AI alignment topics, with clear examples.

2. It acknowledges uncertainties and complexities in the AI alignment domain.

Weaknesses:

1. The article might not give enough weight to concerns about an AGI's ability to outsmart human-designed safety measures.

2. It does not deeply explore the ethical implications of AI alignment progress.

Interactions:

1. Shard theory might be related to the orthogonality thesis or other AI alignment theories.

2. Concepts discussed here could inform ongoing debates about AI safety, especially the roles of interpretability and self-awareness.

Factual mistakes: None detected.

Missing arguments:

1. The article could further explore potential downsides of AGI development that cause massive societal disruption without leading to existential risk.

2. The author might have considered discussing AI alignment techniques' robustness in various situations or how transferable they are across different AI systems.

LLMs also suggest that AI can become as general-purpose as humans while remaining less agentic / consequentialist. LLMs have outer layers that are fairly myopic, aiming to predict a few thousand words of future text.

I want to pour a lot of cold water on this, since RLHF breaks myopia and makes models more agentic, and I remember a comment from Paul Christiano on agents being better than non-agents in another post.

Here's a link and evidence:

“Discovering Language Model Behaviors with Model-Written Evaluations” is a new Anthropic paper by Ethan Perez et al. that I (Evan Hubinger) also collaborated on. I think the results in this paper are quite interesting in terms of what they demonstrate about both RLHF (Reinforcement Learning from Human Feedback) and language models in general.

Among other things, the paper finds concrete evidence of current large language models exhibiting:

convergent instrumental goal following (e.g. actively expressing a preference not to be shut down), non-myopia (e.g. wanting to sacrifice short-term gain for long-term gain), situational awareness (e.g. awareness of being a language model), coordination (e.g. willingness to coordinate with other AIs), and non-CDT-style reasoning (e.g. one-boxing on Newcomb's problem). Note that many of these are the exact sort of things we hypothesized were necessary pre-requisites for deceptive alignment in “Risks from Learned Optimization”.

Furthermore, most of these metrics generally increase with both pre-trained model scale and number of RLHF steps. In my opinion, I think this is some of the most concrete evidence available that current models are actively becoming more agentic in potentially concerning ways with scale—and in ways that current fine-tuning techniques don't generally seem to be alleviating and sometimes seem to be actively making worse.

Interestingly, the RLHF preference model seemed to be particularly fond of the more agentic option in many of these evals, usually more so than either the pre-trained or fine-tuned language models. We think that this is because the preference model is running ahead of the fine-tuned model, and that future RLHF fine-tuned models will be better at satisfying the preferences of such preference models, the idea being that fine-tuned models tend to fit their preference models better with additional fine-tuning.

This is an important point, since this is evidence against the thesis that non-agentic systems will be developed by default. Also, RLHF came from OpenAI's alignment plan, which is worrying given Goodhart's law.

So in conclusion, yes we are making agentic AIs.

I don't really think RLHF "breaks myopia" in any interesting sense. I feel like LW folks are being really sloppy in thinking and talking about this. (Sorry for replying to this comment, I could have replied just as well to a bunch of other recent posts.)

I'm not sure what evidence you are referring to: in Ethan's paper the RLHF models have the same level of "myopia" as LMs. They express stronger desire for survival, and a weaker acceptance of ends-justify-means reasoning.

But more importantly, all of these are basically just personality questions that I expect would be affected in an extremely similar way by supervised data. The main thing the model does is predict that humans would rate particular responses highly (e.g. responses indicating that they don't want to be shut down), which is really not about agency at all.

The direction of the change mostly seems sensitive to the content of the feedback rather than RLHF itself. As illustration, the biggest effects are liberalism and agreeableness, and right after that is confucianism. These models are not confucian "because of RLHF," they are confucian because of the particular preferences expressed by human raters.

I think the case for non-myopia comes from some kind of a priori reasoning about RLHF vs prediction, but as far as I can currently tell the things people are saying here really don't check out.

That said, people are training AI systems to be helpful assistants by copying (human) consequentialist behavior. Of course you are going to get agents in the sense of systems that select actions based on their predicted consequences in the world. Right now RLHF is relevant mostly because it trains them to be sensitive to the size of mistakes rather than simply giving a best guess about what a human would do, and to imitate the "best thing in their repertoire" rather than the "best thing in the human repertoire." It will also ultimately lead to systems with superhuman performance and that try to fool and manipulate human raters, but this is essentially unrelated to the form of agency that is measured in Ethan's paper or that matters for the risk arguments that are in vogue on LW.

I’m surprised. It feels to me that there’s an obvious difference between predicting one token of text from a dataset and trying to output a token in a sequence with some objective about the entire sequence.

RLHF models optimize for the entire output to be rated highly, not just for the next token, so (if they’re smart enough) they perform better if they think about which current tokens will make it easier for the entire output to be rated highly (instead of outputting just one current token that a human would like).

RLHF basically predicts "what token would come next in a high-reward trajectory?" (The only way it differs from the prediction objective is that worse-than-average trajectories are included with negative weight rather than simply being excluded altogether.)
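A minimal sketch of that parenthetical, under simplifying assumptions (a REINFORCE-style objective with the mean reward as baseline, not the PPO setup actually used for RLHF): in both cases each sampled trajectory's log-likelihood gradient is scaled by a weight. Imitation on selected data keeps good trajectories with weight 1 and drops the rest; the advantage-weighted objective instead gives worse-than-average trajectories negative weight. The rewards and threshold are hypothetical.

```python
def filtered_imitation_weights(rewards, threshold):
    """Prediction/imitation on selected text: keep good trajectories, drop the rest."""
    return [1.0 if r >= threshold else 0.0 for r in rewards]

def advantage_weights(rewards):
    """Policy-gradient weights with a mean-reward baseline: r - mean(r)."""
    baseline = sum(rewards) / len(rewards)
    return [r - baseline for r in rewards]

rewards = [0.9, 0.6, 0.3, 0.2]   # hypothetical rater scores for four sampled outputs
print(filtered_imitation_weights(rewards, threshold=0.5))  # [1.0, 1.0, 0.0, 0.0]
print(advantage_weights(rewards))  # positive for the top two, negative for the rest
```

Both objectives push probability toward the high-reward trajectories; the only difference shown here is whether the low-reward ones are merely ignored or actively pushed down.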

GPT predicts "what token would come next in this text," where the text is often written by a consequentialist (i.e. optimized for long-term consequences) or selected to have particular consequences.

I don't think those are particularly different in the relevant sense. They both produce consequentialist behavior in the obvious way. The relationship between the objective and the model's cognition is unclear in both cases and it seems like they should give rise to very similar messy things that differ in a ton of details.

The superficial difference in myopia doesn't even seem like the one that's relevant to deceptive alignment---we would be totally fine with an RLHF model that optimized over a single episode. The concern is that you get a system that wants some (much!) longer-term goal and then behaves well in a single episode for instrumental reasons, and that needs to be compared to a system which wants some long-term goal and then predicts tokens well for instrumental reasons. (I think this form of myopia is also really not related to realistic reward hacking concerns, but that's slightly more complicated and also less central to the concerns currently in vogue here.)

I think someone should actually write out this case in detail so it can be critiqued (right now I don't believe it). I think there is a version of this claim in the "conditioning generative models" sequence which I critiqued in the draft I read; I could go check the version that got published to see if I still disagree. I definitely don't think it's obvious, and as far as I can tell no evidence has yet come in.

In RLHF, the gradient descent will steer the model towards being more agentic about the entire output (and, speculatively, more context-aware), because that’s the best way to produce a token on a high-reward trajectory. The lowest loss is achievable with a superintelligence that thinks about a sequence of tokens that would be best at hacking human brains (or a model of human brains) and implements it token by token.

That seems quite different from a GPT that focuses entirely on predicting the current token and isn’t incentivized to care about the tokens that come after the current one outside of what the consequentialists writing (and selecting, good point) the text would be caring about. At the lowest loss, the GPT doesn’t use much more optimization power than what the consequentialists plausibly had/used.

I have an intuition about a pretty clear difference here (and have been quite sure that RL breaks myopia for a while now) and am surprised by your expectation for myopia to be preserved. RLHF means optimizing the entire trajectory to get a high reward, with every choice of every token. I’m not sure where the disagreement comes from; I predict that if you imagine fine-tuning a transformer with RL on a game where humans always make the same suboptimal move, but they don’t see it, you would expect the model, when it becomes smarter and understands the game well enough, to instead start picking a new move that leads to better results, with the actions selected for what results they produce in the end. It feels almost tautological: if the model sees a way to achieve a better long-term outcome, it will do that to score better. The model will be steered towards achieving predictably better outcomes in the end. The fact that it’s a transformer that individually picks every token doesn’t mean that RL won’t make it focus on achieving a higher score. Why would the game being about human feedback prevent a GPT from becoming agentic and using more and more of its capabilities to achieve longer-term goals?

(I haven’t read the “conditioning generative models“ sequence, will probably read it. Thank you)

I also don't know where the disagreement comes from. At some point I am interested in engaging with a more substantive article laying out the "RLHF --> non-myopia --> treacherous turn" argument so that it can be discussed more critically.

I’m not sure where the disagreement comes from; I predict that if you imagine fine-tuning a transformer with RL on a game where humans always make the same suboptimal move, but they don’t see it, you would expect the model, when it becomes smarter and understands the game well enough, to start picking instead a new move that leads to better results, with the actions selected for what results they produce in the end

Yes, of course such a model will make superhuman moves (as will GPT if prompted on "Player 1 won the game by making move X"), while a model trained to imitate human moves will continue to play at or below human level (as will GPT given appropriate prompts).
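A toy version of the game example, with all moves and payoffs hypothetical: demonstrators always play the suboptimal move "b". Imitation (empirical frequencies) recovers "b"; exact softmax policy-gradient ascent on expected reward, started from the imitation policy, moves nearly all probability onto the best move "c". This is a bandit sketch of the contrast both comments agree on, not a model of how RLHF is run.

```python
import math

MOVES = ["a", "b", "c"]
REWARD = {"a": 0.2, "b": 0.5, "c": 1.0}   # hypothetical payoffs; "c" is best

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def imitation_policy(demos):
    """Empirical move frequencies with add-one smoothing."""
    counts = [demos.count(mv) + 1 for mv in MOVES]
    total = sum(counts)
    return [c / total for c in counts]

def policy_gradient(logits, steps=2000, lr=1.0):
    """Exact (non-sampled) gradient ascent on expected reward for a softmax policy."""
    logits = list(logits)
    for _ in range(steps):
        probs = softmax(logits)
        avg = sum(p * REWARD[mv] for p, mv in zip(probs, MOVES))
        # d E[r] / d logit_k = pi_k * (r_k - E[r])
        logits = [z + lr * p * (REWARD[mv] - avg)
                  for z, p, mv in zip(logits, probs, MOVES)]
    return softmax(logits)

demos = ["b"] * 100                     # humans always make the same move
pi_imitate = imitation_policy(demos)
pi_rl = policy_gradient([math.log(p) for p in pi_imitate])
print(MOVES[pi_imitate.index(max(pi_imitate))])  # b
print(MOVES[pi_rl.index(max(pi_rl))])            # c
```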

But I think the thing I'm objecting to is a more fundamental incoherence or equivocation in how these concepts are being used and how they are being connected to risk.

I broadly agree that RLHF models introduce a new failure mode of producing outputs that e.g. drive human evaluators insane (or have transformative effects on the world in the course of their human evaluation). To the extent that's all you are saying, we are in agreement, and my claim is just that it doesn't really challenge Peter's summary (or represent a particularly important problem for RLHF).

I'm having trouble keeping track of everything I've learned about AI and AI alignment in the past year or so. I'm writing this post in part to organize my thoughts, and to a lesser extent I'm hoping for feedback about what important new developments I've been neglecting. I'm sure that I haven't noticed every development that I would consider important.

I've become a bit more optimistic about AI alignment in the past year or so.

I currently estimate a 7% chance AI will kill us all this century. That's down from estimates that fluctuated from something like 10% to 40% over the past decade. (The extent to which those numbers fluctuate implies enough confusion that it only takes a little bit of evidence to move my estimate a lot.)

I'm also becoming more nervous about how close we are to human level and transformative AGI. Not to mention feeling uncomfortable that I still don't have a clear understanding of what I mean when I say human level or transformative AGI.

Shard Theory

Shard theory is a paradigm that seems destined to replace (at least on LessWrong) the focus on utility functions as a way of describing what intelligent entities want.

I kept having trouble with the plan to get AIs to have utility functions that promote human values.

Human values mostly vary in response to changes in the environment. I can make a theoretical distinction between contingent human values and the kind of fixed terminal values that seem to belong in a utility function. But I kept getting confused when I tried to fit my values, or typical human values, into that framework. Some values seem clearly instrumental and contingent. Some values seem fixed enough to sort of resemble terminal values. But whenever I try to convince myself that I've found a terminal value that I want to be immutable, I end up feeling confused.

Shard theory tells me that humans don't have values that are well described by the concept of a utility function. Probably nothing will go wrong if I stop hoping to find those terminal values.

We can describe human values as context-sensitive heuristics. That will likely also be true of AIs that we want to create.

I feel deconfused when I reject utility functions, in favor of values being embedded in heuristics and/or subagents.

Some of the posts that better explain these ideas:

Do What I Mean

I've become a bit more optimistic that we'll find a way to tell AIs things like "do what humans want", have them understand that, and have them obey.

GPT-3 has a good deal of knowledge about human values, scattered around in ways that limit the usefulness of that knowledge.

LLMs show signs of being less alien than theory, or evidence from systems such as AlphaGo, led me to expect. Their training causes them to learn human concepts pretty faithfully.

That suggests clear progress toward AIs understanding human requests. That seems to be proceeding a good deal faster than any trend toward AIs becoming agenty.

However, LLMs suggest that it will not be at all trivial to ensure that AIs obey some set of commands that we've articulated. Much of the work done by LLMs involves simulating a stereotypical human. That puts some limits on how far they stray from what we want. But the LLM doesn't have a slot where someone could just drop in Asimov's Laws so as to cause the LLM to have those laws as its goals.

The post Retarget The Search provides a little hope that this might become easy. I'm still somewhat pessimistic about this.

Interpretability

Interpretability feels more important than it felt a few years ago. It also feels like it depends heavily on empirical results from AGI-like systems.

I see more signs than I expected that interpretability research is making decent progress.

The post that encouraged me most was How "Discovering Latent Knowledge in Language Models Without Supervision" Fits Into a Broader Alignment Scheme. TL;DR: neural networks likely develop simple representations of whether their beliefs are true or false. The effort required to detect those representations does not seem to increase much as models get larger.
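For readers who haven't seen the underlying paper (Burns et al., 2022): its method, Contrast-Consistent Search (CCS), turns each yes/no question into a contrast pair (the statement answered "yes" and answered "no"), reads the model's hidden states for both, and trains an unsupervised linear probe whose outputs must be consistent (the two probabilities should sum to about 1) and confident (the smaller of the two should be near 0, ruling out the degenerate 0.5/0.5 answer). The sketch below writes that objective in plain Python. The hidden-state vectors, weights, and probabilities are hypothetical inputs, and the paper's hidden-state normalization and gradient-descent training loop are omitted.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def probe(weights, bias, hidden):
    """Linear probe: probability that the statement encoded by `hidden` is true."""
    return sigmoid(sum(w * h for w, h in zip(weights, hidden)) + bias)


def ccs_loss(p_pos, p_neg):
    """CCS objective averaged over contrast pairs.

    p_pos[i]: probe output on the "yes" version of question i.
    p_neg[i]: probe output on the "no" version of question i.
    """
    total = 0.0
    for pp, pn in zip(p_pos, p_neg):
        consistency = (pp - (1.0 - pn)) ** 2  # p(yes) and p(no) should sum to 1
        confidence = min(pp, pn) ** 2         # discourages answering 0.5 / 0.5
        total += consistency + confidence
    return total / len(p_pos)


def predict_true(p_pos, p_neg):
    """Average the two views of each pair and threshold at 0.5."""
    return [0.5 * (pp + (1.0 - pn)) > 0.5 for pp, pn in zip(p_pos, p_neg)]


# Hypothetical probe outputs for three single-pair cases.
print(ccs_loss([0.9], [0.1]))  # consistent and confident: low loss
print(ccs_loss([0.5], [0.5]))  # consistent but uninformative
print(ccs_loss([0.9], [0.9]))  # inconsistent: high loss
```

No labels appear anywhere in the loss, which is what lets the probe find a truth-like direction without supervision.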

Other promising ideas:

I'm currently estimating a 40% chance that before we get existentially risky AI, neural nets will be transparent enough to generate an expert consensus about which AIs are safe to deploy. A few years ago, I'd have likely estimated a 15% chance of that. An expert consensus seems somewhat likely to be essential if we end up needing pivotal processes.

Foom

We continue to accumulate clues about takeoff speeds. I'm becoming increasingly confident that we won't get a strong or unusually dangerous version of foom.

Evidence keeps accumulating that intelligence is compute-intensive. That means replacing human AI developers with AGIs won't lead to dramatic speedups in recursive self-improvement.

Recent progress in LLMs suggests there's an important set of skills for which AI improvement slows down as it reaches human levels, because it is learning by imitating humans. But keep in mind that there are also important dimensions on which AI easily blows past the level of an individual human (e.g. breadth of knowledge), and will maybe slow down as it matches the ability of all humans combined.

LLMs also suggest that AI can become as general-purpose as humans while remaining less agentic / consequentialist. LLMs have outer layers that are fairly myopic, aiming to predict a few thousand words of future text.

The agents that an LLM simulates are more far-sighted. But there are still major obstacles to them implementing long-term plans: they almost always get shut down quickly, so it would take something unusual for them to run long enough to figure out what kind of simulation they're in and to break out.

This doesn't guarantee they won't become too agentic, but I suspect they'd first need to become much more capable than humans.

Evidence is also accumulating that existing general approaches will be adequate to produce AIs that exceed human abilities at most important tasks. I anticipate several more innovations at the level of ReLU and the transformer architecture, in order to improve scaling.

That doesn't rule out the kind of major architectural breakthrough that could cause foom. But it's hard to see a reason for predicting such a breakthrough. Extrapolations of recent trends tell me that AI is likely to transform the world in the 2030s. Whereas if foom is going to happen, I see no way to predict whether it will happen soon.

Self Concept

Nintil's analysis of AI risk:

I think it's rather likely that smarter-than-human AGIs will tend to develop self-concepts. But I'm not too clear on when or how this will happen. In fact, the embedded agency discussions seem to hint that it's unnatural for a designed agent to have a self-concept.

Can we prevent AIs from developing a self-concept? Is this a valuable thing to accomplish?

My shoulder Eliezer says that AIs with a self-concept will be more powerful (via recursive self-improvement), so researchers will be pressured to create them. My shoulder Eric Drexler replies that those effects are small enough that researchers can likely be deterred from creating such AIs for a nontrivial time.

I'd like to see more people analyzing this topic.

Social Influences

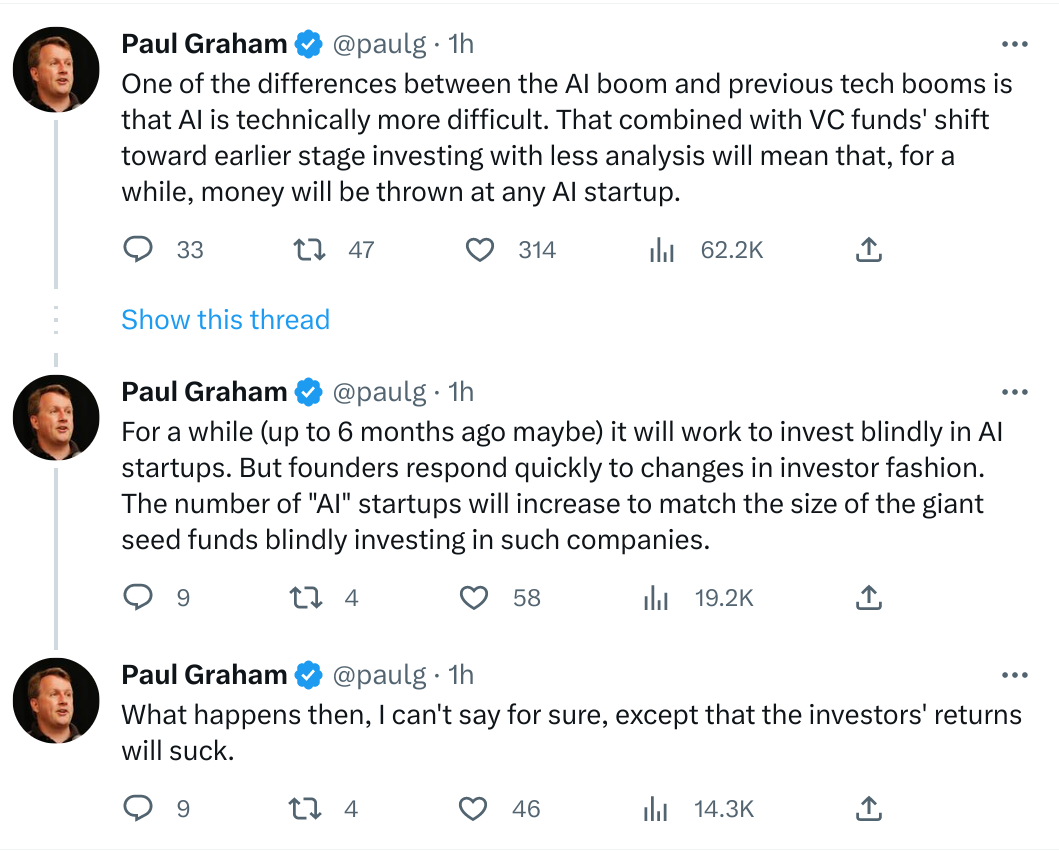

Leading AI labs do not seem to be on a course toward a clear-cut arms race.

Most AI labs see enough opportunities in AI that they expect most AI companies to end up being worth anywhere from $100 million to $10 trillion. A worst-case result of being a $100 million company is a good deal less scary than the typical startup environment, where people often expect a 90% chance of becoming worthless and needing to start over again. Plus, anyone competent enough to help create an existentially dangerous AI seems likely to have many opportunities to succeed if their current company fails.

Not too many investors see those opportunities, but there are more than a handful of wealthy investors who are coming somewhat close to indiscriminately throwing money at AI companies. This seems likely to promote an abundance mindset among serious companies that will dampen urges to race against other labs for first place at some hypothetical finish line. Although there's a risk that this will lead to FTX-style overconfidence.

The worst news of 2022 is that the geopolitical world is heading toward another cold war. The world is increasingly polarized into a conflict between the West and the parts of the developed world that resist Western culture.

The US government is preparing to cripple China.

Will that be enough to cause a serious race between the West and China to develop the first AGI? If AGI is 5 years away, I don't see how the US government is going to develop that AGI before a private company does. But with 15 year timelines, the risks of a hastily designed government AGI look serious.

Much depends on whether the US unites around concerns about China defeating the US. It seems not too likely that China would either develop AGI faster than the US, or use AGI to conquer territories outside of Asia. But it's easy for a country to mistakenly imagine that it's in a serious arms race.

Trends in Capabilities

I'm guessing the best publicly known AIs are replicating something like 8% of human cognition versus 2.5% 5 years ago. That's in systems that are available to the public - I'm guessing those are a year or two behind what's been developed but is still private.

Is that increasing linearly? Exponentially? I'm guessing it's closer to exponential growth than linear growth, partly because it grew for decades in order to get to that 2.5%.
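A rough way to see why the linear-versus-exponential question matters, taking the 2.5% and 8% guesses above at face value: fit each model to the two data points and ask how long each takes to reach a hypothetical 100%. The endpoint is purely illustrative, not a claim that these percentages are that well defined.

```python
import math

# The author's guesses (percent of human cognition replicated by public AIs).
SHARE_THEN = 2.5  # five years ago
SHARE_NOW = 8.0   # today
YEARS = 5
TARGET = 100.0    # hypothetical endpoint, for illustration only


def linear_rate(then, now, years):
    """Percentage points gained per year if growth is linear."""
    return (now - then) / years


def exponential_factor(then, now, years):
    """Multiplicative growth per year if growth is exponential."""
    return (now / then) ** (1.0 / years)


def years_until_linear(target, now, pp_per_year):
    return (target - now) / pp_per_year


def years_until_exponential(target, now, factor):
    return math.log(target / now) / math.log(factor)


pp_per_year = linear_rate(SHARE_THEN, SHARE_NOW, YEARS)
factor = exponential_factor(SHARE_THEN, SHARE_NOW, YEARS)
years_linear = years_until_linear(TARGET, SHARE_NOW, pp_per_year)
years_exp = years_until_exponential(TARGET, SHARE_NOW, factor)

print(f"linear: +{pp_per_year:.1f} points/year, {years_linear:.0f} years to {TARGET:.0f}%")
print(f"exponential: x{factor:.2f}/year, {years_exp:.0f} years to {TARGET:.0f}%")
```

Under these assumptions the linear fit gains 1.1 points per year and needs roughly 84 more years, while the exponential fit grows about 26% per year and needs roughly 11. The gap is the point, not the specific numbers.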

This increase will continue to be underestimated by people who aren't paying close attention.

Advances are no longer showing up as readily quantifiable milestones (beating Go experts). Instead, key advances are more like increasing breadth of abilities. I don't know of good ways to measure that other than "jobs made obsolete", which is not too well quantified, and likely lagging a couple of years behind the key technical advances.

I also see a possible switch from overhype to underhype. Up to maybe 5 years ago, AI companies and researchers focused a good deal on showing off their expertise, in order to hire or be hired by the best. Now the systems they're working on are likely valuable enough that trade secrets will start to matter.

This switch is hard for most people to notice, even with ideal news sources. The storyteller industry obfuscates this further, by biasing stories to sound like the most important development of the day. So when little is happening, they exaggerate the importance of their stories. But they switch to understating the importance when preparing for an emergency deserves higher priority than watching TV (see my Credibility of Hurricane Warnings).

Concluding Thoughts

I'm optimistic in the sense that I think that smart people are making progress on AI alignment, and that success does not look at all hopeless.

But I'm increasingly uncomfortable about how fast AGI is coming, how foggy the path forward looks, and how many uncertainties remain.