I was reminded of OpenAI’s definition of AGI, a technology that can “outperform humans at most economically valuable work”, and it triggered a pet peeve of mine: It’s tricky to define what it means for something to make up some percentage of “all work”, and OpenAI was painfully vague about what they meant.

More than 50% of people in England used to work in agriculture, and now it’s far less. But if you said that farming machines “can outperform humans at most economically valuable work” it just wouldn’t make sense. Farming machines obviously don’t perform 50% of current economic value.

The most natural definition, in my opinion, is that even after the economy adjusts, AI would still be able to perform >50% of the economically valuable work. Labor income would make up <<50% of GDP, and AI income would make up >50% of GDP. In a simple Acemoglu/Autor-style model with a continuum of tasks, this corresponds to AI performing more than 50% of the tasks.

I don’t think OpenAI’s definition is wrong, exactly, since it does have a reasonably natural interpretation. But I really wish they’d been more clear.

I think I disagree. It's more informative to answer in terms of value as it would be measured today, not value after the economy adjusts.

Suppose someone from 1800 wants to figure out how big a deal mechanized farm equipment will be for humanity. They call up 2025 and ask "How big a portion of your economy is devoted to mechanized farm equipment, or farming enabled by mechanized equipment?" We give them a tiny number. They also ask about top-hats, and we also give them a tiny number. From these tiny numbers they conclude both mechanized farm equipment and top-hats won't be important for humanity.

EDIT: The sort of situation I'm worried about your definition missing is if remote-worker AGI becomes too cheap to meter, but human hands are still valuable.

In that world, I think people wouldn’t say “we have AGI”, right? Since it would be obvious to them that most of what humans do (what they do at that time, which is what they know about) is not yet doable by AI.

Your preferred definition would leave the term AGI open to a scenario where 50% of current tasks get automated gradually using technology similar to current technology (i.e., normal economic growth). It wouldn’t feel like “AGI arrived”, it would feel like “people gradually built more and more software over 50 years that could do more and more stuff”.

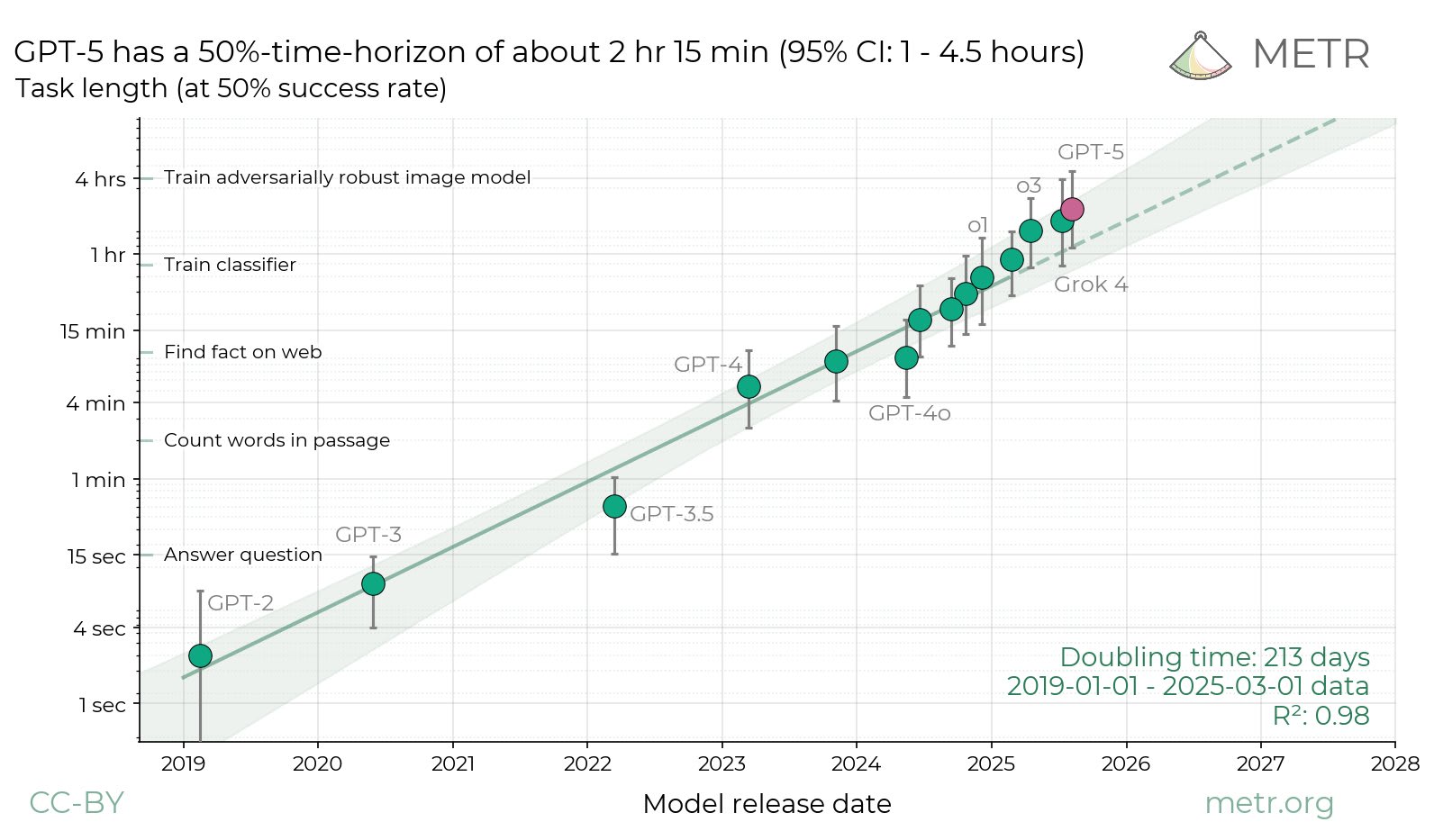

A simple “null hypothesis” mechanism for the steady exponential rise in METR task horizon: shorter-horizon failure modes outcompete longer-horizon failure modes for researcher attention.

That is, with each model release, researchers solve the biggest failure modes of the previous system. But longer-horizon failure modes are inherently rarer, so it is not rational to focus on them until shorter-horizon failure modes are fixed. If the distribution of horizon lengths of failures is steady, and every model release fixes the X% most common failures, you will see steady exponential progress.

It’s interesting to speculate about how the recent possible acceleration in progress could be explained under this framework. A simple formal model:

- There is a sequence of error types e_1, e_2, e_3, etc.

- The first s error types have already been solved, such that errorrate(e_1) = ... = errorrate(e_s) = 0. The long tail of errors e_{s+1}, e_{s+2}, etc. has error frequencies that decay exponentially.

- With each model release (t -> t+1), researchers can afford to fix n error types.

- METR time horizon is inversely proportional to the total error rate sum(errorrate(e_i) for all i)

Under this model, there are only two ways progress can speed up: the distribution becomes shorter-tailed (maybe AI systems have become inherently better at generalizing, such that solving the most frequent failure modes now generalizes to many more failures), or the time it takes to fix a failure mode has decreased (perhaps because RLVR offers a more systematic way to solve any reliably measurable failure mode).
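A minimal sketch of the model above, under assumptions the text leaves open: error frequencies follow a geometric sequence with ratio `decay`, so that errorrate(e_i) = decay^i, and the proportionality constant `scale` is arbitrary. The function names are hypothetical and only for illustration.

```python
def time_horizon(solved: int, decay: float, scale: float = 1.0) -> float:
    """Horizon is inversely proportional to the total rate of unsolved errors.

    Assumes errorrate(e_i) = decay**i for i > solved and 0 otherwise, so the
    remaining total is the geometric tail decay**(solved + 1) / (1 - decay).
    """
    remaining_error_rate = decay ** (solved + 1) / (1.0 - decay)
    return scale / remaining_error_rate


def simulate_horizons(releases: int, fixes_per_release: int, decay: float,
                      solved: int = 0) -> list[float]:
    """Time horizon at each release when n = fixes_per_release error types are fixed per release."""
    horizons = []
    for _ in range(releases):
        horizons.append(time_horizon(solved, decay))
        solved += fixes_per_release  # researchers fix the n most frequent remaining error types
    return horizons


if __name__ == "__main__":
    baseline = simulate_horizons(releases=6, fixes_per_release=3, decay=0.9)
    # Ratio between consecutive releases is constant, i.e. steady exponential progress.
    print([later / earlier for earlier, later in zip(baseline, baseline[1:])])
```

In this sketch the horizon grows by a constant factor of `decay ** -fixes_per_release` per release, so the only two knobs that change the slope are a faster-decaying tail (smaller `decay`) and more fixes per release (larger `fixes_per_release`), which matches the two explanations above.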

(Based on a tweet I posted a few weeks ago)

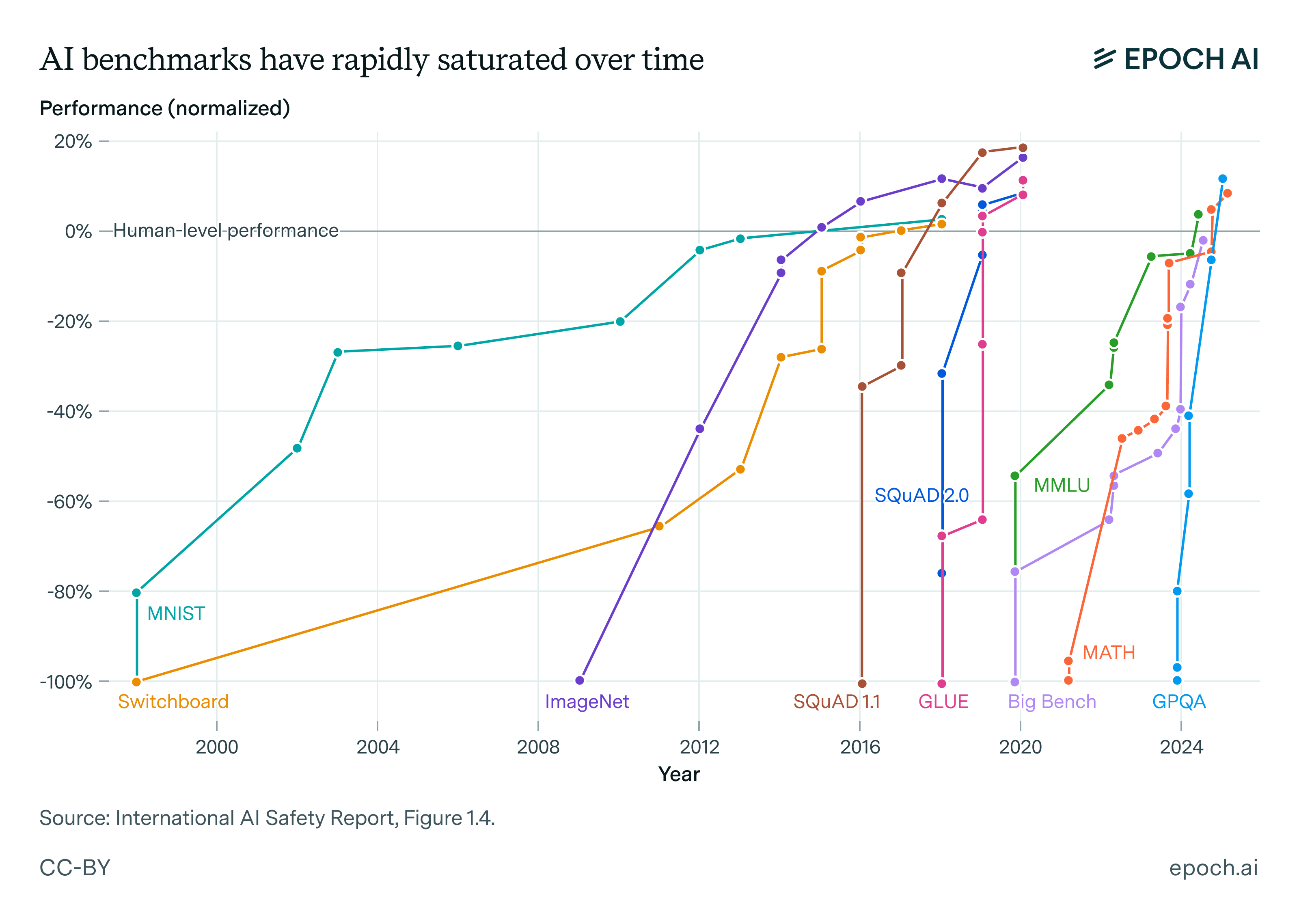

Looking at the first graph in this Epoch AI publication, the "time to fix a failure mode has decreased" hypothesis does look particularly plausible to me.

Here’s a random list of economic concepts that I wish tech people were more familiar with. I’ll focus on concepts, not findings, and on intuition rather than exposition.

- The semi-endogenous growth model: There is a tug of war between diminishing returns to R&D and growth in R&D capacity from economic growth. For the rate of progress to even stay the same, R&D capacity must continually grow.

- Domar aggregation: With few assumptions, overall productivity growth depends on sector-specific productivity growth in proportion to sectors’ revenues. If a sector is 11% of GDP, the economy is “11% bottlenecked” on it.

- Why wages increase exponentially with education level: This is empirically observed to be roughly true (the Mincer equation), but why? A simple explanation: the opportunity cost of education is proportional to the wage you can earn with your current level of education. So to be worthwhile, obtaining one more year of education needs to increase your wage by a certain percentage, no matter your current level of education. Each year of education earning people 10% more will look like an exponential.

- This is basically “P = MC”, but applied to human capital.

- Automation only decreases wages if the economy becomes “decreasing returns to scale”. This post has a good explanation. Intuition: if humans don’t have to compete with automated actors for things that humans can’t produce (e.g., land or energy), humans could always just leave the automated society and build a 2025-like economy somewhere else.
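Two of the concepts in this list reduce to one-line formulas, sketched here with hypothetical function names. The 10% return per year of schooling is the illustrative figure from the list, not an estimate, and the Domar weights are assumed to be each sector's sales divided by GDP.

```python
def wage_after_schooling(base_wage: float, years: float,
                         return_per_year: float = 0.10) -> float:
    """Mincer-style wage: each extra year multiplies the wage by (1 + return_per_year)."""
    return base_wage * (1.0 + return_per_year) ** years


def domar_aggregate_growth(sector_growth: dict[str, float],
                           sales_share: dict[str, float]) -> float:
    """Aggregate productivity growth as a sales-share-weighted sum of sector growth rates."""
    return sum(sales_share[sector] * growth for sector, growth in sector_growth.items())


if __name__ == "__main__":
    # A constant percentage gain per year compounds into an exponential wage profile.
    print([round(wage_after_schooling(100.0, y), 1) for y in range(0, 5)])
    # A sector that is 11% of GDP passes 11% of its productivity growth to the aggregate.
    print(domar_aggregate_growth({"sector_a": 0.10}, {"sector_a": 0.11}))
```

The first function shows why a fixed percentage return per year of schooling is linear in log wages, and the second is the sense in which the economy is “11% bottlenecked” on a sector with 11% of GDP in revenue.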

Automation only decreases wages if the economy becomes “decreasing returns to scale”

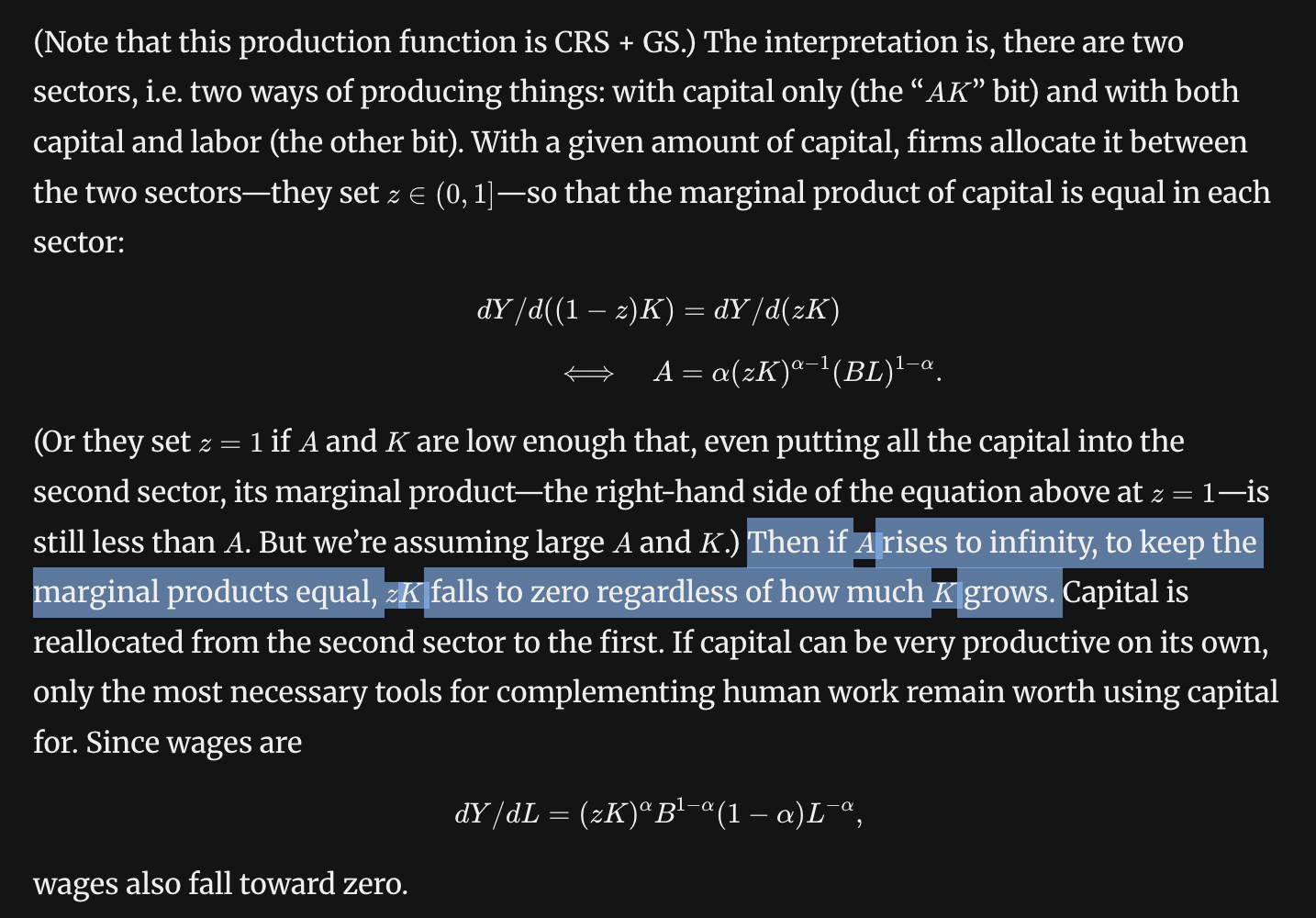

Seems straightforwardly false? The post you cite literally gives scenarios where wages collapse in CRS economies. See also the crowding effect in AK models.

Wow, I didn’t read. Their argument does make sense. And it’s intuitive. Arguably this is sort of happening with AI datacenter investment, where companies like Microsoft are reallocating their limited cash flow away from employees (i.e., laying people off) so they can afford to build AI data centers.

A funny thing about their example is that labor would be far better off if they “walled themselves off” in autarky. In their example, wages fall to zero because of capital flight — because there is an “AK sector” that can absorb an infinite amount of capital at high returns, it is impossible to invest in labor-complementary capital unless wages are zero. So my intuition that humans could “always just leave the automated society” still applies, their example just rules it out by assumption.

zooming out as far as it goes, the economy is guaranteed to become decreasing-returns-to-scale (upper-bounded returns to scale) once grabby alien saturation is reached and there is no more unclaimed space in the universe

if humans don’t have to compete with automated actors for things that humans can’t produce (e.g., land or energy), humans could always just leave the automated society and build a 2025-like economy somewhere else

That only holds true if there exists such a "somewhere else", and already on Earth as is, there just isn't. Any innovation will quickly spread to every economy that has the capacity to accept it, and every square inch of the planet is either uninhabitable or monopolized by a state. This is in fact something that gets often highlighted in science fiction involving interplanetary colonization - one of the first things we (rightfully) imagine people doing is "fucking off to some distant planet to build the society they would like for themselves". We know something like that happened with Europeans leaving for the Americas in the 16th and 17th centuries, and it would likely happen again if there was room to allow it.

This is a consequence of decreasing returns to scale! Without decreasing returns to scale, humans could buy some small territory before their labor is obsolete, and they could run a non-automated economy just on that small territory, and the fact that the territory is small would be no problem, since there are no decreasing returns to scale.

Well, that hits a limit no matter what. We have a physical scale set by the size of our own bodies. Also, I don't believe there exists a single m2 of land on Earth where it would be legal to just go make your own country. People have tried with oceanic platforms, to mixed results.

I'm biased towards believing that culture is far more important to R&D progress than how much capital you can throw at it, at least once you have above $100M to throw at it. The Hamming question is important, for example.

Economists are not the best people to ask about the best culture to run a scientific lab.

I did not appreciate until now how unique Less Wrong is as a community blogging platform.

It’s long-form and high-effort with high discoverability of new users’ content. It preserves the best features of the old-school Internet forums.

Substack isn’t nearly as tailored for people who are sharing passing thoughts.

It sounds like you are saying LW encourages both high-effort content and sharing passing thoughts? Can you explain? Those things aren't necessarily contradictory but by default I'd expect a tradeoff between them.

Is it valuable for tech & AI people to try to learn economics? I very much enjoy doing so, but it certainly hasn’t led to direct benefits or directly relevant contributions. So what is the point? (I think there is a point.)

It’s good to know enough to not be tempted to jump to conclusions about AI impact. I’m a big fan of the kind of arguments that the Epoch & Mechanize founders post on Twitter. A quick “wait, really?” check can dispel assumptions that AI must immediately have a huge impact, or conversely that AI can’t have an unprecedentedly fast impact. This is good for sounding smart. But not directly useful (unless I’m talking to someone who is confused).

I also feel like economic knowledge helps give meaning to the things I personally work on. The most basic version of this is when I familiarize myself with quantitative metrics of impact of past technologies (“comparables”), and try to keep up with how the stuff I work on tracks. I think it’s the same joy that some people get by watching sports and trying to quantify how players and teams are performing.

Does a need for broad automation really place a speed limit on economic growth?

I’ve been trying to better understand the assumptions behind people’s differing predictions of economic growth from AI, and what we can monitor — investment? employment? interest rates? — to narrow down what is actually happening.

I’m not an economist; I am an engineer who implements AI systems. The reason I want to understand the potential impact of AI is because it’s going to matter for my own career and for everyone I know.

In the spirit of “learning in public”, I’ll share what I’ve learned (which is a little) and what’s not making sense to me (which is a lot).

In Ege Erdil’s recent case for multi-decade AI timelines, he gives the following intuition for why a “software-only singularity” is unlikely:

The case for AI revenue growth not slowing down at all, or perhaps even accelerating, rests on the feedback loops that would be enabled by human-level or superhuman AI systems: short timelines advocates usually emphasize software R&D feedbacks more, while I think the relevant feedback loops are more based on broad automation and reinvestment of output into capital accumulation, chip production, productivity improvements, et cetera.

The implicit assumption is that chip production, energy buildouts, and general physical capital accumulation can only go so fast.

Certainly, today’s physical capital stock took a long time to accumulate. With today’s technology, it’s not feasible to scale physical capital formation by 10x, or to 10x the capital stock in 10 years; it would simply be far too expensive.

But economics does not place any theoretical limit on the productivity of new vintages of capital goods. If tomorrow’s technology was far more effective at producing capital goods, physical capital could grow at an unprecedented rate.

(In frontier economies today, the speed of physical capital growth is well below historical records. For reference, South Korea’s physical capital stock grew at ~13.2% per year at the peak of its growth miracle. And from 2004 to 2007, China’s electricity production grew at an average of ~14.2% per year.)
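To put these figures next to the “10x the capital stock in 10 years” benchmark, here is a rough compound-growth sketch. The function names are hypothetical, and it assumes a constant annual growth rate, which real growth episodes do not maintain.

```python
import math


def required_annual_growth(multiple: float, years: float) -> float:
    """Constant annual growth rate needed to multiply a stock by `multiple` in `years`."""
    return multiple ** (1.0 / years) - 1.0


def years_to_multiple(multiple: float, annual_growth: float) -> float:
    """Years needed to multiply a stock by `multiple` at a constant annual growth rate."""
    return math.log(multiple) / math.log(1.0 + annual_growth)


if __name__ == "__main__":
    # Growth rate implied by 10x in 10 years.
    print(f"{required_annual_growth(10, 10):.1%}")
    # Time to 10x at the historical peak rates quoted above.
    for label, rate in [("South Korea capital stock", 0.132), ("China electricity", 0.142)]:
        print(label, round(years_to_multiple(10, rate), 1), "years")
```

Under this constant-rate assumption, 10x in 10 years requires roughly 26% growth per year, about double the record rates cited above, which would instead take somewhat under two decades to reach 10x.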

Today’s physical capital has two unintuitive properties that create a potential for it to be produced at much lower cost, despite the intuition that “physical = slow to build”.

- As income increases, consumption is “dematerialized”. Spending on physical capital increasingly goes toward high-value-to-weight items, such as semiconductors and medical devices. Explosive economic growth may not mainly be about building, say, 10 houses and airplanes per human (how would this even get used?) but rather building increasingly elaborate hospitals, medical equipment, and so on.

- The barrier to creating these goods may lie more with R&D and design and coordination, rather than physical throughput limits. Some physical work is still required, but physical throughput is not necessarily the bottleneck.

- High-value physical capital embeds a great deal of skilled labor. Capital equipment is produced using factors of production that can be recursively attributed to non-capital inputs — labor, energy, and natural resources. This is reflected, for instance, in BEA’s industry-level “KLEMS” (capital, labor, energy, materials, and services) accounts. The supply chain of a complex capital good generally has skilled labor as a major, or even dominant, portion of the value added in its production.

- Example: MRI machines. Medical MRI machines are very expensive, often costing more than $1 million per unit. I asked o3 to recursively break down the cost; I’ll keep the inferences high-level, since I don’t trust o3’s precise claims. Essentially, a large portion of the cost of an MRI machine arises because the superconducting magnet requires a great deal of specialized equipment to make, and that equipment is complex and produced at low volume. Low-volume capital goods are expensive because they embody considerable skilled labor amortized over few units.

Physical constraints on the replication rate of capital goods do exist, but in at least one major case are far from binding. Solar panels, for example, require ~1 year to pay back their energy cost. If solar panels were the only source of electricity, GDP could not grow at >100% per year while remaining equally electricity intensive. But this is far, far, above any predicted rate of economic growth, even “explosive” growth, which Davidson and Erdil and Besiroglu define to start at 30% per year.
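The solar bound can be written as back-of-the-envelope arithmetic. This is a simplified sketch with hypothetical function names: it uses simple annual accounting, ignores panel lifetime and degradation, and assumes the economy stays equally electricity intensive.

```python
def max_growth_rate(payback_years: float, reinvested_share: float = 1.0) -> float:
    """Upper bound on annual growth of the panel stock.

    A panel returns 1 / payback_years of its embodied energy each year, and only
    `reinvested_share` of that output goes into building new panels.
    """
    return reinvested_share / payback_years


def reinvestment_share_needed(target_growth: float, payback_years: float) -> float:
    """Share of panel output that must be reinvested to sustain `target_growth`."""
    return target_growth * payback_years


if __name__ == "__main__":
    # With a ~1 year payback and all output reinvested, the ceiling is 100% per year.
    print(max_growth_rate(payback_years=1.0))
    # Share of output needed to hit the 30%/year "explosive growth" threshold.
    print(reinvestment_share_needed(target_growth=0.30, payback_years=1.0))
```

Under these assumptions, growth at the 30% per year threshold would require reinvesting about 30% of panel output into new panels, which leaves a wide margin before the energy-payback constraint binds.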

I see a strong possibility that if human-level skilled labor were free, the capital stock could grow at an unprecedented rate.

The idea of human-level skilled labor being completely free is an alluring one, but one that may never be completely true so long as humans maintain agency (likely in my optimistic worldview).

Even if production processes were completely automated, making value judgements about the usefulness of the outputs and deciding where to go next is something that humans will probably want to keep some control over, at least where material resource costs and timeliness still matter (i.e. the feedback loops you mention). The work involved in this decision-making process could be more than many people assume.

A good example of this is how Google claims that 30% of their code is AI-generated, but coding velocity has only increased by 10%. Deciding what work to pursue and evaluating output, particularly in an industry where the outputs are so specialized, is already a substantial percentage of labor that hasn't been automated to the same degree as coding.

As discussed in our other thread, modeling how much time is required to define requirements/prompt and evaluate output will be an important component of forecasting how far and fast AI advancements might take us. Realistic estimates of this will likely support your hypothesis of the bottlenecks being in R&D and design and coordination, rather than physical throughput limits.

I’m worried that the recent AI exposure versus jobs papers (1, 2) are still quite a distance from an ideal identification strategy of finding “profession twins” that differ only in their exposure to AI. Occupations that are differently exposed to AI are different in countless other ways that are correlated with the impact of other recent macroeconomic shocks.