

I'll give you an example of an ontology in a different field (linguistics) and maybe it will help.

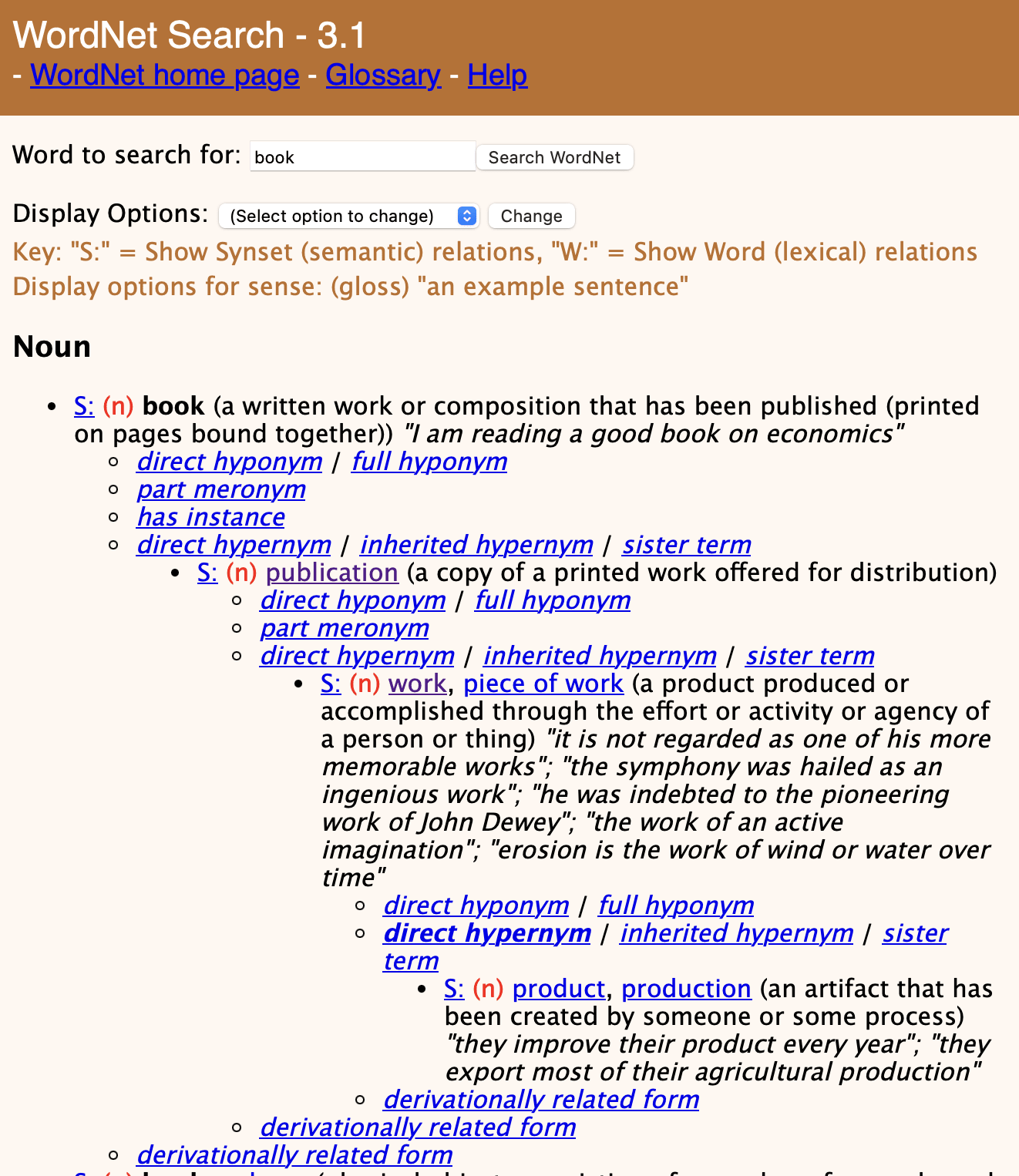

This is WordNet, an ontology of the English language. If you type "book" and keep clicking "S:" and then "direct hypernym", you will learn that the place of "book" in the hierarchy is as follows:

... > object > whole/unit > artifact > creation > product > work > publication > book
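To make the "giant tree of words" concrete, here is a minimal Python sketch. It assumes a hand-built dictionary that stores each word's direct hypernym, cut off at "object" as in the chain above. The `HYPERNYM` table and the `hypernym_chain`/`is_a` helpers are hypothetical, not WordNet's own API. Walking the parent pointers upward reproduces the chain, and asking whether a category sits somewhere above a word is the simplest kind of question an ontology can answer.

```python
# A toy "tree of words": each word points to its direct hypernym (its parent).
# Hypothetical hand-built table copied from the WordNet chain above; not the WordNet API.
HYPERNYM = {
    "book": "publication",
    "publication": "work",
    "work": "product",
    "product": "creation",
    "creation": "artifact",
    "artifact": "whole/unit",
    "whole/unit": "object",
}


def hypernym_chain(word: str) -> list[str]:
    """Follow direct-hypernym links upward until reaching a word with no parent."""
    chain = [word]
    while chain[-1] in HYPERNYM:
        chain.append(HYPERNYM[chain[-1]])
    return chain


def is_a(word: str, category: str) -> bool:
    """True if `category` is `word` itself or appears anywhere above it in the tree."""
    return category in hypernym_chain(word)


print(" > ".join(reversed(hypernym_chain("book"))))
# object > whole/unit > artifact > creation > product > work > publication > book
print(is_a("book", "artifact"))  # True
print(is_a("artifact", "book"))  # False
```

Real WordNet is a graph rather than a strict tree (a word can have several senses, and a sense can have more than one hypernym), so treat this as the simplest possible version of the idea.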

So if I had to understand one of the LessWrong (-adjacent?) posts mentioning an "ontology", I would forget about philosophy and just think of a giant tree of words, because I like concrete examples.

Now let's go and look at one of those posts.

https://arbital.com/p/ontology_identification/#h-5c-2.1 , "Ontology identification problem":

> Consider chimpanzees. One way of viewing questions like "Is a chimpanzee truly a person?" - meaning, not, "How do we arbitrarily define the syllables per-son?" but "Should we care a lot about chimpanzees?" - is that they're about how to apply the 'person' category in our desires to things that are neither typical people nor typical nonpeople. We can see this as arising from something like an ontological shift: we're used to valuing cognitive systems that are made from whole human minds, but it turns out that minds are made of parts, and then we have the question of how to value things that are made from some of the person-parts but not all of them.

My "tree of words" understanding: we classify things into "human minds" or "not human minds", but now that we know more about possible minds, we don't want to use this classification anymore. Boom, we have more concepts now and the borders don't even match. We have a different ontology.

From the same post:

> In this sense the problem we face with chimpanzees is exactly analogous to the question a diamond maximizer would face after discovering nuclear physics and asking itself whether a carbon-14 atom counted as 'carbon' for purposes of caring about diamonds.

My understanding: You learned more about carbon and now you have new concepts in your ontology: carbon-12 and carbon-14. You want to know if a "diamond" should be "any carbon" or should be refined to "only carbon-12".
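The carbon-14 question can be sketched the same way. Assume, hypothetically, that the agent's goal was written as "count carbon atoms" back when its ontology had no isotopes, and that after the shift it represents an atom as an element plus a mass number. The `Atom` class and the two functions below are illustrative inventions: two candidate translations of the old word "carbon" into the new ontology. They agree on carbon-12 and on non-carbon atoms but disagree on carbon-14, and nothing in the original goal says which translation is right.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Atom:
    # New ontology (after learning nuclear physics): element AND isotope.
    element: str      # e.g. "C"
    mass_number: int  # e.g. 12 or 14


# Two hypothetical ways to re-express the old-ontology concept "carbon".
def carbon_any_isotope(atom: Atom) -> bool:
    return atom.element == "C"


def carbon_12_only(atom: Atom) -> bool:
    return atom.element == "C" and atom.mass_number == 12


sample = [Atom("C", 12), Atom("C", 12), Atom("C", 14), Atom("Si", 28)]

for name, rule in [("any carbon", carbon_any_isotope), ("carbon-12 only", carbon_12_only)]:
    # Count how many atoms in the sample the goal would "care about" under each rule.
    print(name, sum(rule(a) for a in sample))
# any carbon 3
# carbon-12 only 2
```

The real diamond-maximizer problem concerns the agent's whole model of physics, not a single boolean; this only shows why a goal stated in the old vocabulary becomes ambiguous in the new one.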

Let's take a few more posts:

https://www.lesswrong.com/posts/LeXhzj7msWLfgDefo/science-informed-normativity

> The standard answer is that we say “you lose” - we explain how we’ll be able to exploit them (e.g. via dutch books). Even when abstract “irrationality” is not compelling, “losing” often is. Again, that’s particularly true under ontology improvement. Suppose an agent says “well, I just won’t take bets from Dutch bookies”. But then, once they’ve improved their ontology enough to see that all decisions under uncertainty are a type of bet, they can’t do that - or at least they need to be much more unreasonable to do so.

My understanding: You thought only [particular things] were bets, so you said "I won't take bets". I convinced you that all decisions are bets. This is a change in ontology. Maybe you want to reevaluate your statement about bets now.

https://docs.google.com/document/d/1WwsnJQstPq91_Yh-Ch2XRL8H_EpsnjrC1dwZXR37PC8/edit

> Ontology identification is the problem of mapping between an AI’s model of the world and a human’s model, in order to translate human goals (defined in terms of the human’s model) into usable goals (defined in terms of the AI’s model).

My understanding: AI and humans have different sets of categories. AI can't understand what you want it to do if your categories are different. Like, maybe you have "creative work" in your ontology, and this subcategory belongs to the category of "creations by human-like minds". You tell the AI that you want to maximize the number of creative works and it starts planting trees. "Tree is not a creative work" is not an objective fact about a tree; it's a property of your ontology; sorry. (Trees are pretty cool.)
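Here is a toy version of that mismatch, under loudly stated assumptions: the `Thing` record and its two boolean features are hypothetical, the human's concept is defined using a feature that the AI's model supposedly lacks, and `is_creative_work_ai_guess` stands in for a naive translation onto the nearest concept the AI does have. Real ontology identification is about learned internal representations, not hand-written flags; the sketch only shows two predicates that agree on easy cases and diverge on the tree.

```python
from dataclasses import dataclass


@dataclass
class Thing:
    name: str
    made_by_human_like_mind: bool  # hypothetical feature the human's ontology relies on
    novel_structure: bool          # hypothetical feature the AI's model tracks


def is_creative_work_human(t: Thing) -> bool:
    # Human ontology: "creative work" sits under "creations by human-like minds".
    return t.made_by_human_like_mind and t.novel_structure


def is_creative_work_ai_guess(t: Thing) -> bool:
    # Hypothetical mistranslation: the AI has no "human-like mind" concept,
    # so "creative work" gets mapped onto the closest thing it does represent.
    return t.novel_structure


world = [
    Thing("novel", made_by_human_like_mind=True, novel_structure=True),
    Thing("tree", made_by_human_like_mind=False, novel_structure=True),
    Thing("rock", made_by_human_like_mind=False, novel_structure=False),
]

for t in world:
    print(t.name, is_creative_work_human(t), is_creative_work_ai_guess(t))
# novel True True
# tree False True
# rock False False
```

An AI told to maximize `is_creative_work_ai_guess` would be perfectly happy planting trees; only the second row reveals that the two ontologies were never the same.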

Also, to answer your question about "probability" in a sister chain: yes, "probability" can be in someone's ontology. Things don't have to "exist" to be in an ontology.

Here's another real-world example:

- You are playing a game. Maybe you'll get a heart, maybe you won't. The concept of probability exists for you.

- This person — https://youtu.be/ilGri-rJ-HE?t=364 — is creating a tool-assisted speedrun for the same game. On frame 4582 they'll get a heart, on frame 4581 they won't, so they purposefully waste a frame to get a heart (for instance). "Probability" is

I'll also give you two examples of using ontologies — as in "collections of things and relationships between things" — for real-world tasks that are much dumber than AI.

- ABBYY attempted to create a giant ontology of all concepts, then develop parsers from natural languages into "meaning trees" and renderers from meaning trees into natural languages. The project was called "Compreno". If it worked, it would've given them a "perfect" translating tool from any supported language into any supported language without having to handle each language pair separately.


An ontology is a collection of sets of objects and properties (or maybe: a collection of sets of points in thingspace). An agent's ontology determines the abstractions it makes.

For example, "chairs"_Zach is in my ontology; it is (or points to) a set of (possible-)objects (namely what I consider chairs) that I bundle together. "Chairs"_Adam is in your ontology, and it is a very similar set of objects (what you consider chairs). This overlap makes it easy for me to communicate with you and predict how you will make sense of the world.

(Also necessary for easy-communication-and-prediction is that our ontologies are pretty sparse, rather than full of astronomically many overlapping sets. So if we each saw a few chairs we would make very similar abstractions, namely to "chairs"_Zach and "chairs"_Adam.)
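Treating each concept as a set makes the talk of "overlap" literal. In this sketch the two sets of chair-like objects are invented for illustration, and Jaccard similarity (size of the intersection over size of the union) is just one simple way, chosen here as an assumption, to score how closely two people's concepts coincide.

```python
# Hypothetical contents of two people's "chairs" concepts, each modeled as a set of objects.
chairs_zach = {"office chair", "armchair", "stool", "rocking chair", "beanbag"}
chairs_adam = {"office chair", "armchair", "rocking chair", "throne", "stool"}


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap divided by union: 1.0 means the two concepts pick out exactly the same things."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


print(sorted(chairs_zach & chairs_adam))  # things we both call chairs
# ['armchair', 'office chair', 'rocking chair', 'stool']
print(sorted(chairs_zach ^ chairs_adam))  # edge cases we might argue about
# ['beanbag', 'throne']
print(round(jaccard(chairs_zach, chairs_adam), 2))
# 0.67
```

High overlap on the typical cases is what makes communication easy; the disagreement lives in the small symmetric difference.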

(Why care? Most humans seem to have similar ontologies, but AI systems might have very different ontologies, which could cause surprising behavior. E.g. the panda-gibbon adversarial example, in which adding a small, carefully chosen perturbation to a photo of a panda makes an image classifier confidently label it a gibbon. Roughly, if the shared human ontology isn't natural [i.e. learned by default] and moreover is hard to teach an AI, then that AI won't think in terms of the same concepts as we do, which might be bad.)

[Note: substantially edited after Charlie expressed agreement.]

Just to paste my answer below yours since I agree:

There's "ontology" and there's "an ontology."

Ontology with no "an" is the study of what exists. It's a genre of philosophy questions. However, around here we don't really worry about it too much.

What you'll often see on LW is "an ontology," or "my ontology" or "the ontology used by this model." In this usage, an ontology is a set of building blocks used in a model of the world. It's the foundational stuff that other stuff is made out of or described in terms of.

E.g. Minecraft has "an ontology," which is the basic set of blocks (and their internal states if applicable), plus a 3-D grid model of space.
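A minimal sketch of that kind of ontology, assuming a tiny hypothetical subset of block types (the real game has many more, plus the per-block internal states mentioned above, which are omitted here) and a dictionary from integer (x, y, z) coordinates to blocks. The `Block` enum and `block_at` helper are illustrative, not the game's code. Notice that a "tree" has no entry of its own: it exists only as a pattern of more basic blocks.

```python
from enum import Enum


class Block(Enum):
    # Hypothetical subset of block types; the real game has many more.
    AIR = "air"
    STONE = "stone"
    DIRT = "dirt"
    OAK_LOG = "oak_log"


# The world is a 3-D grid: every integer position holds exactly one block.
World = dict[tuple[int, int, int], Block]


def block_at(world: World, x: int, y: int, z: int) -> Block:
    # Positions never written to are treated as air.
    return world.get((x, y, z), Block.AIR)


world: World = {(0, 0, 0): Block.STONE, (0, 1, 0): Block.DIRT}
for dy in range(4):
    world[(0, 2 + dy, 0)] = Block.OAK_LOG  # a "trunk" is just a column of logs

print(block_at(world, 0, 3, 0))  # Block.OAK_LOG
print(block_at(world, 5, 5, 5))  # Block.AIR
```

Everything else in such a world (trees, houses, caves) is described in terms of these primitives, which is what it means for them to be the ontology.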

I also agree. I was going to write a similar answer. I'll just add my nuance as a comment to Zach's answer.

I said a bunch about ontologies in my post on fake frameworks. There I give examples and I define reductionism in terms of comparing ontologies. The upshot is what I read Zach emphasizing here: an ontology is a collection of things you consider "real" together with some rules for how to combine them into a coherent thingie (a map, though it often won't feel on the inside like a map).

Maybe the purest example type is an axiomatic system. The undefined t...


ELI5: Ontology is what you think the world is, epistemology is how you think about it.


Epistemic status: shaky. Offered because a quick answer is often better than a completely reliable one.

An ontology is a comprehensive account of reality.

The field of AI uses the term to refer to the "binding" of the AI's map of reality to the territory. If, for example, the AI ends up believing that the internet is reality and that all this talk of physics and galaxies and such is just a conversational ploy by one faction on the internet to gain status relative to another faction, the AI has suffered an ontological failure.

ADDED. A more realistic example would be the AI's confusing its internal representation of the thing to be optimized with the thing the programmers hoped the AI would optimize. Maybe I'm not the right person to answer because it is extremely unlikely I'd ever use the word ontology in a conversation about AI.


I always confuse this with Deontology ;-)

If Ontology is about "what is?", why is Deontology not "what is not?"

Good question! Although I think it would be appropriate to move it to a comment instead of an answer.

Over the years I've picked up on more and more phrases that people on LessWrong use. However, "ontology" is one I can't seem to figure out. It feels super abstract and doesn't seem to have a reference post.

So then, please ELI5: what is ontology?