50

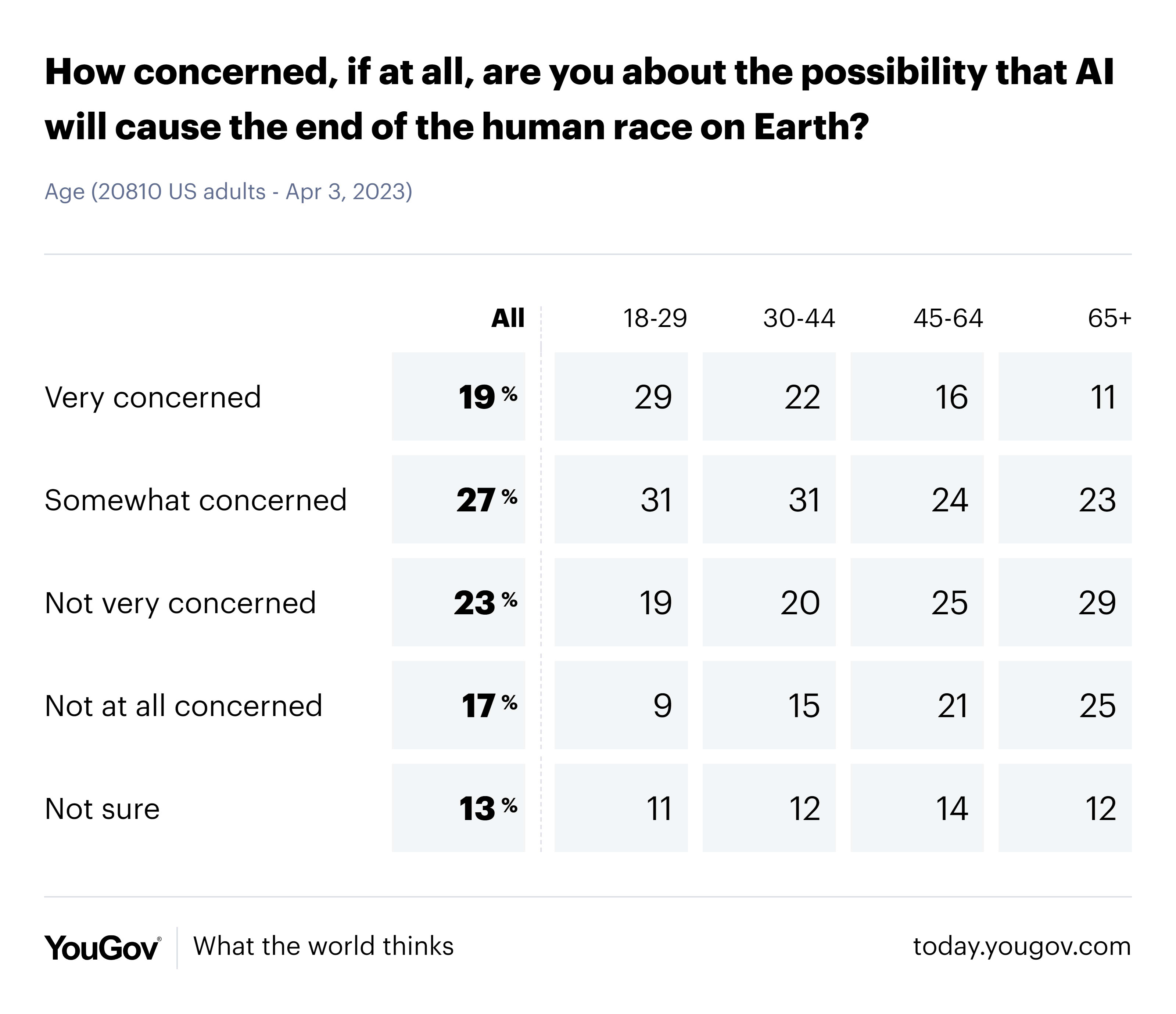

It appears that AI existential risk is starting to penetrate the consciousness of the general public in an "it's not just hyperbole" way.

There will inevitably be a lot of attention-seeking influencers (not a bad thing in this case) who will pick up the ball and run with it now. I predict the real-life Butlerian Jihad will rival the climate change movement in size and influence within 5 years, as it has all the attributes of a cause that presents commercial opportunity to the unholy trinity of media, politicians and academia, which have demonstrated an ability to profit from other scares. Not to mention the vast hordes of people fearful of losing their careers.

I expect that AI will indeed become highly regulated in the next few years, in the West at least. It remains to be seen what will happen in non-democratic nations.

10

but I haven't seen anyone talk about this before.

You and me both. It feels like I've been the only one really trying to raise public awareness of this, and I would LOVE some help.

One thing I'm about to do is write the most convincing AI-could-kill-everyone email I can, one that regular Joes will easily understand and respect, and send it out to anyone with a platform. YouTubers, TikTokers, people in government, journalists - anyone.

I'd really appreciate some help with this - both with writing the emails and sending them out. I'm hoping to turn it into a massive letter writing campaign.

Elon Musk alone has done a lot for AI safety awareness, and if, say, a popular YouTuber got on board, that alone could potentially make a small difference.

I'm probably not the best person on this forum when it comes to either PR or alignment, but I'm interested enough, if only in knowing your plan, that I want to talk to you about it anyway.

10

I've actually just posted something on ideas I've been mulling over about this (the post is called "AGI deployment as an act of aggression"). The general idea is that, honestly, if we get out of our bubble, I think most people won't just see misaligned AGI as dangerous; they'll also hate the idea of an aligned AGI deployed by a big state or a corporation (which covers most of the likely AGIs), since it would concentrate political and/or economic power in that actor's hands. Combined, these two outcomes pretty much guarantee that large groups will be against AGI development altogether.

I think it's important to consider all these perspectives and lay down a "unified theory of AI danger", so to speak. In many ways there are two axes that really define the danger: how powerful the AI is, and what it is aligned (or not aligned) to. Worrying about X-risk means worrying that it will be very powerful (at which point even a small misalignment is easily amplified to the point of lethality). Many people still seem to see X-risk as too unrealistic, or aren't willing to concede it because they feel it's been hyped up as a way to indirectly hype up the AI's power itself. They expect AI to be less inherently powerful. But it should be made clear that power is a function of both capability and the control handed off: if I were so stupid as to hand off a nuclear reactor control system to a GPT-4 instance, it could cause a meltdown and kill people tomorrow. A very powerful ASI might seize that control for itself (that is, even very little control is enough for it to leverage), but even if you don't believe that to be possible, it's almost irrelevant, because we're obviously en route to giving way too much control to AIs way too unreliable to deserve it. At the extreme end of that is X-risk, but there's a whole spectrum of "we built an AI that doesn't really do what we want, put it in charge of something and we done fucked up", from the recent news of a man who committed suicide after his chats with a likely GPT-4 powered bot to paperclipping the world. Solutions that fix paperclipping fix all the lesser problems in a very definite way too, so they should be shared ground.

-3-5

How about coordinating legal action to have chatbots banned, like ChatGPT was banned in Italy? Is it possible?

If successful, this would weaken the incentive for development of new AI models.

To be fair, Italy didn't actually ban ChatGPT. The authority said to OpenAI, "Your service does not respect the minimum requirements of EU privacy law, please change things to be compliant or interrupt the service". OpenAI chose to interrupt the service.

If I had to venture a malicious interpretation, I'd say they chose to pull the service hoping for a massive backlash from the public along the lines of "who cares about privacy, this thing is too powerful not to use". But every lawyer will confirm to you that the Italian authority is totally right from a by-the-book ...

I figure that this would be a pretty important question since getting governments on board, and getting people to coordinate in general, could buy us a lot of time, but I haven't seen anyone talk about this before. I would like to hear what people think and what their strategies are.