A big issue here is that both AI risk and great power diplomacy are fairly technical issues, and missing a single gear from your mental model can lead to wildly different implications. A miscalculation in the math gets amplified as you layer more calculations on top of it.

AI safety probably requires around 20 hours of study before you know whether you could become an alignment researcher and can hold a professional stance. The debate itself seems unlikely to be resolved soon, but it is coherent, publicly available, and thoroughly discussed. Understanding nuclear war dynamics (e.g. treaties), by contrast, is not so open: it requires reading the right books, recommended by an expert you trust (not a pundit), rather than books picked at random from a pool full of wrong or bad ones. I recommend the first two chapters of Thomas Schelling's 1966 Arms and Influence, but only because the dynamic they describe is fundamental and almost guaranteed to be true, most generals have probably had it in mind for ~60 years, it is merely a single layer of the overall system (e.g. it says nothing about spies), and it's only two chapters. Likewise, Raemon, for several years n...

Suppose China and Russia accepted Yudkowsky's initiative, but the USA did not. Would you support bombing an American data center?

I for one am not being hypocritical here. Analogy: Suppose it came to light that the US was working on super-bioweapons with a 100% fatality rate, long incubation period, vaccine-resistant, etc. and that they ignored the combined calls from most of the rest of the world to get them to stop. They say they are doing it safely and that it'll only be used against terrorists (they say they've 'aligned' the virus to only kill terrorists or something like that, but many prominent bio experts say their techniques are far from adequate to ensure this and some say they are being pretty delusional to think their techniques even had a chance of achieving this). Wouldn't you agree that other countries would be well within their rights to attack the relevant bioweapon facilities, after diplomacy failed?

If diplomacy failed, then yes, sure. I've previously wished out loud for China to sabotage US AI projects in retaliation for chip export controls, in the hopes that if all the countries sabotage all the other countries' AI projects, maybe Earth as a whole can "uncoordinate" to not build AI even if Earth can't coordinate.

Nobody in the US cared either, three years earlier. That superintelligence will kill everyone on Earth is a truth, and one which has gotten easier and easier to figure out over the years. I have not entirely written off the chance that, especially as the evidence gets more obvious, people on Earth will figure out this true fact and maybe even do something about it and survive. I likewise am not assuming that China is incapable of ever figuring out this thing that is true. If your opinion of Chinese intelligence is lower than mine, you are welcome to say, "Even if this is true and the West figures out that it is true, the CCP could never come to understand it". That could even be true, for all I know, but I do not have present cause to believe it. I definitely don't believe it about everyone in China; if it were true and a lot of people in the West figured it out, I'd expect a lot of individual people in China to see it too.

American here. Yes, I would support it -- even if it caused a lot of deaths because the data center is in a populated area. American AI researchers are a much bigger threat to what I care about (i.e., "the human project") than Russia is.

Eliezer: Pretty sure that if I ever fail to give an honest answer to an absurd hypothetical question I immediately lose all my magic powers.

I just cannot picture an intelligent cognitive process that lands in the mental state corresponding to Eliezer's stance on hypotheticals while actually trying to convince people of AI risk, as opposed to just trying to try (and yes, I know this particular phrase is a joke, but it's not that far from the truth).

I think the sequences did something incredibly valuable in cataloguing all of these mistakes and biases ...

When I read the letter I thought the mention of an airstrike on a data centre was unhelpful. He could have just said "make it illegal" and left the enforcement mechanisms to imagination.

But, on reflection, maybe he was right to do that. Politicians are selected for effective political communication, and they very frequently talk quite explicitly about long prison sentences for people who violate whatever law they are pushing. Maybe the promise of dealing out righteous punishment makes people more enthusiastic about new rules. ("Yes, you wouldn't be able to do X,...

This letter, among other things, makes me concerned about how this PR campaign is being conducted.

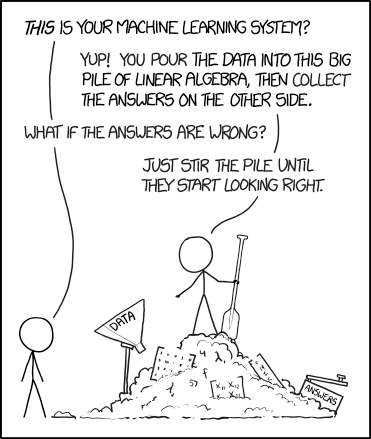

It's probably worth noting that Yudkowsky did not really make the argument for AI risk in his article. He says that AI will literally kill everyone on Earth, and he gives an example of how it might do so, but he doesn't present a compelling argument for why it would.[0] He does not even mention orthogonality or instrumental convergence. I find it hard to blame these various internet figures who were unconvinced about AI risk upon reading the article.

[0] He does quote “the AI does not love you, nor does it hate you, and you are made of atoms it can use for something else.”

This is a really complicated issue because different priors and premises can lead you to extremely different conclusions.

For example, I see the following as a typical view on AI among the general public:

(the common person is unlikely to go this deep into his reasoning, but could come to these arguments if he had to debate on it)

Premises: "Judging by how nature produced intelligence, and by the incremental progress we are seeing in LLMs, artificial intelligence is likely to be achieved by packing more connections into a digital system. This will allow the A...

The Future of Life Institute (FLI) put out an open letter calling for a 6-month pause in training models more powerful than GPT-4, followed by additional precautionary steps.

Then Eliezer Yudkowsky put out a post in Time, which made it clear he did not think that letter went far enough. Eliezer instead suggests an international ban on large AI training runs to limit future capabilities advances. He lays out in stark terms our choice as he sees it: Either do what it takes to prevent such runs or face doom.

A lot of good discussions happened. A lot of people who would not otherwise have been exposed to the situation got exposed to it, all the way to a question being asked at the White House press briefing. Also, due to a combination of the internet being the internet, the nature of the topic and the way certain details were laid out, a lot of other discussion predictably went off the rails quickly.

If you have not yet read the post itself, I encourage you to read the whole thing, now, before proceeding. I will summarize my reading in the next section, then discuss reactions.

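This post goes over:

1. What the Letter Actually Says
2. The Internet Mostly Sidesteps the Important Questions
3. What Is a Call for Violence?
4. Our Words Are Backed by Nuclear Weapons
5. Answering Hypothetical Questions
6. What Do I Think About Yudkowsky’s Model of AI Risk?
7. What Do I Think About Eliezer’s Proposal?
8. What Do I Think About Eliezer’s Answers and Comms Strategies?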

What the Letter Actually Says

I see this letter as a very clear, direct, well-written explanation of what Eliezer Yudkowsky actually believes will happen, which is that AI will literally kill everyone on Earth, and none of our children will get to grow up – unless action is taken to prevent it.

Eliezer also believes that the only known way that our children will grow up is if we get our collective acts together, and take actions that prevent sufficiently large and powerful AI training runs from happening.

Either you are willing to do what it takes to prevent that development, or you are not.

The only known way to do that would be governments restricting and tracking GPUs and GPU clusters, including limits on GPU manufacturing and exports, as large quantities of GPUs are required for training.

That requires an international agreement to restrict and track GPUs and GPU clusters. There can be no exceptions. Like any agreement, this would require doing what it takes to enforce the agreement, including if necessary the use of force to physically prevent unacceptably large GPU clusters from existing.

We have to target training rather than deployment, because deployment does not offer any bottlenecks that we can target.

If we allow corporate AI model development and training to continue, Eliezer sees no chance there will be enough time to figure out how to have the resulting AIs not kill us. Solutions are possible, but finding them will take decades. The current cavalier willingness by corporations to gamble with all of our lives as quickly as possible would render efforts to find solutions that actually work all but impossible.

Without a solution, if we move forward, we all die.

How would we die? The example given is an AI using recombinant DNA to bootstrap to post-biological molecular manufacturing. The details are not load-bearing.

These are draconian actions that come with a very high price. We would be sacrificing highly valuable technological capabilities, and risking deadly confrontations. These are not steps one takes lightly.

They are, however, the steps one takes if one truly believes that the alternative is human extinction, even if one is not as certain of this implication as Eliezer.

I believe that the extinction of humanity is existentially bad, and one should be willing to pay a very high price to prevent it, or greatly reduce the probability of it happening.

The letter also mentions the possibility that a potential GPT-5 could become self-aware or a moral person, which Eliezer felt it was morally necessary to include.

The Internet Mostly Sidesteps the Important Questions

A lot of people responded to the Time article by having a new appreciation for existential risk from AI and considering its arguments and proposals.

Those were not, as they rarely are, the loudest voices.

The loudest voices were instead mostly people claiming this was a call for violence, or attacking anyone who said it wasn’t centrally a ‘call for violence’. They conflated being willing to carry out an airstrike as a last resort to enforce an international agreement with calling for an actual airstrike now, and often tried to associate anyone connected with Eliezer with terrorism, murder, nuclear first strikes and complete insanity.

Yes, a lot of people jump straight from ‘willing to risk a nuclear exchange’ to ‘you want to nuke people,’ and then act as if anyone who did not go along with that leap was being dishonest and unreasonable.

Or making content-free references to things like ‘becoming the prophet of a doomsday cult.’

Such responses always imply that ‘because Eliezer said this Just Awful thing, no one is allowed to make physical world arguments about existential risks from super-intelligent AIs anymore, such arguments should be ignored, and anyone making such arguments should be attacked or at least impugned for making such arguments.’

Many others responded by rehashing all the standard bad AI NotKillEveryoneism takes as if they were knockdown arguments. These included the all-time classic ‘AI systems so far haven’t been dangerous, which proves future ones won’t be dangerous and you are wrong, how do you explain that?’, even though no one involved predicted that anything like current systems would be dangerous.

An interesting take from Tyler Cowen was to say that Eliezer attempting to speak in this direct and open way is a sign that Eliezer is not so intelligent. As a result, he says, we should rethink what intelligence means and what it is good for. Given how much this indicates disagreement and confusion about what intelligence is, I agree that this seems worth doing. He should also consider the implications of saying that high intelligence implies hiding your true beliefs, when considering what future highly intelligent AIs might do.

It is vital that everyone, no matter their views on the existential risks from AI, stand up against attempts to silence this discussion, and instead address the arguments involved and which actions do or don’t make sense.

I would like to say that I am disappointed in those who reacted in these ways. Except that mostly I am not. This is the way of the world. That is how people respond to straight talk that they dislike and wish to attack.

I am disappointed only in a handful of particular people, of whom I expected better.

One good response was from Roon.

What Is a Call for Violence?

I continue to urge everyone not to choose violence, in the sense that you should not go out there and commit any violence to try and cause or stop any AI-risk-related actions, nor should you seek to cause any other private citizen to do so. I am highly confident Eliezer would agree with this.

I would welcome at least some forms of laws and regulations aimed at reducing AI-related existential risks, or many other causes, that would be enforced via the United States Government, which enforces laws via the barrel of a gun. I would also welcome other countries enacting and enforcing such laws, also via the barrel of a gun, or international agreements between them.

I do not think you or I would like a world in which such governments were never willing to use violence to enforce their rules.

And I think it is quite reasonable for a consensus of powerful nations to set international rules designed to protect the human race, that they clearly have the power to enforce, and if necessary for them to enforce them, even under threat of retaliatory destruction for destruction’s sake. That does not mean any particular such intervention would be wise. That is a tactical question. Even if it would be wise in the end, everyone involved would agree it would be an absolute last resort.

If one refers to any or all of that above as calling for violence then I believe that is fundamentally misleading. That is not what those words mean in practice. As commonly understood, at least until recently, a ‘call for violence’ means a call for unlawful violent acts not sanctioned by the state, or for launching a war or specific other imminent violent act. When someone says they are not calling for violence, that is what they intend for others to understand.

Otherwise, how do you think laws are enforced? How do you think treaties or international law are enforced? How do you think anything ever works?

Alyssa Vance, Richard Ngo and Joe Zimmerman were among those reminding us that the distinction here is important, and that destroying it would destroy our ability to be meaningfully against individual violence. This is the same phenomenon as people who extend the word ‘violence’ to non-violent things they dislike, for example those who say things like ‘silence is violence.’

You can of course decide to be a full pacifist and a libertarian, and believe that violence is never justified under any circumstances. Almost everyone else thinks that we should use men with guns on the regular to enforce the laws and collect the taxes, and that one must be ready to defend oneself against threats both foreign and domestic.

Everything in the world that is protected or prohibited, at the end of the day, is protected or prohibited by the threat of violence. That is how laws and treaties work. That is how property works. That is how everything has to work. Political power comes from the barrel of a gun.

As Orwell put it, you sleep well because there are men with guns who make it so.

The goal of being willing to bomb a data center is not that you want to bomb a data center. It is to prevent the building of the data center in the first place. Similarly, the point of being willing to shoot bank robbers is to stop people before they try and rob banks.

So what has happened for many years is that people have made arguments of the form:

Followed by one of:

Here’s Mike Solana saying simultaneously that the AI safety people are going to get someone killed, and that they do not believe the things they are saying, because if he believed them he would go get many someones killed. He later expanded this to full post length. I do appreciate the deployment of both horns of the dilemma at the same time: if you believed X you’d advocate horrible thing Y, and also if you convince others of X they’ll do horrible thing Y, yet no Y, so I blame you for causing Y in the future anyway, you don’t believe X, X is false and also I strongly believe in the bold stance that Y is bad actually.

Thus, the requirement to periodically say things like (Eliezer on Feb 10):

Followed by the clarification to all those saying ‘GOTCHA!’ in all caps:

Or as Stefan Schubert puts it:

Our Words Are Backed by Nuclear Weapons

It’s worth being explicit about nuclear weapons.

Eliezer absolutely did not, at any time, call for the first use, or any use, of nuclear weapons.

Anyone who says that has either misread the post, is intentionally using hyperbole, is outright lying, or is the victim of a game of telephone.

It is easy to see how it went from ‘accepting the risk of a nuclear exchange’ and ‘bomb a rogue data center’ to ‘first use of nuclear weapons.’ Except, no. No one is saying that. Even in hypothetical situations. Stop it.

What Eliezer said was that one needs to be willing to risk a nuclear exchange, meaning that if someone says ‘I am building an AGI that you believe will kill all the humans and also I have nukes’ you don’t say ‘well if you have nukes I guess there is nothing I can do’ and go home.

Eliezer clarifies in detail here, and I believe he is correct, that if you are willing under sufficiently dire circumstances to bomb a Russian data center and can specify what would trigger that, you are much safer being very explicit under what circumstances you would bomb a Russian data center. There is still no reason to need to use nuclear weapons to do this.

Answering Hypothetical Questions

One must in at least one way have sympathy for developers of AI systems. When you build something like ChatGPT, your users will not only point out and amplify all the worst outputs of your system. They will red team your system by seeking out all the ways in which to make your system look maximally bad, taking things out of context and misconstruing them, finding tricks to get answers that sound bad, demanding censorship and lack of censorship, demanding ‘balance’ that favors their side of every issue and so on.

It’s not a standard under which any human would look good. Imagine if the internet made copies of you, and had the entire internet prompt those copies in any way they could think of, and you had to answer every time, without dodging the question, and they had infinite tries. It would not go well.

Or you could be Eliezer Yudkowsky, and feel an obligation to answer every hypothetical question no matter how much every instinct you could possibly have is saying that yes this is so very obviously a trap.

While you hold beliefs that logically require, in some hypothetical contexts, taking some rather unpleasant actions because in those hypotheticals the alternative would be far worse, existentially worse. It’s not a great spot, and if you are ‘red teaming’ the man to generate quotes it is not a great look.

Which essentially means:

So the cycle will continue until either we all die or morale improves.

I am making a deliberate decision not to quote the top examples. If you want to find them, they are there to be found. If you click all the links in this post, you’ll find the most important ones.

What Do I Think About Yudkowsky’s Model of AI Risk?

Do I agree with Eliezer Yudkowsky’s model of AI risk?

I share most of his concerns about existential risk from AI. Our models have a lot in common. Most of his individual physical-world arguments are, I believe, correct.

I believe that there is a substantial probability of human extinction and a valueless universe. I do not share his confidence. In a number of places, I am more hopeful that things could turn out differently.

A lot of my hope is that the scenarios in question simply do not come to pass because systems with the necessary capabilities are harder to create than we might think, and they are not soon built. And I am not so worried about imminently crossing the relevant capability thresholds. Given the uncertainty, I would much prefer if the large data centers and training runs were soon shut down, but there are more limits on what I would be willing to sacrifice for that to happen.

In the scenarios where sufficiently capable systems are indeed soon built, I have a hard time envisioning ways things end well for my values or for humanity, for reasons that are beyond the scope of this post.

I continue to strongly believe (although with importantly lower confidence than Eliezer) that by default, even under many relatively great scenarios where we solve some seemingly impossible problems, if ASI (Artificial Super Intelligence, any sufficiently generally capable AI system) is built, all the value in the universe originating from Earth would most likely be wiped out and humanity would not long survive.

What Do I Think About Eliezer’s Proposal?

I believe that, conditional on believing what Eliezer believes about the physical world and the existential risks from AI that would result from further large training runs, Eliezer is making the only known sane proposal there is to be made.

If I instead condition on what I believe, as I do, I strongly endorse working to slow down or stop future very large training runs, and imposing global limits on training run size, and various other related safety precautions. I want that to be extended as far and wide as possible, via international agreements and cooperation and enforcement.

The key difference is that I do not see such restrictions as the only possible path that has any substantial chance of allowing humans to survive. So it is not obviously where I would focus my efforts.

A pause in larger-model training until we have better reason to think proceeding is safe is still the obvious, common sense thing that a sane civilization would find a way to do, if it believed that there was a substantial chance that not pausing kills everyone on Earth.

I see hope in potentially achieving such a pause, and in effectively enforcing such international agreements without much likelihood of needing to actually bomb anything. I also believe this can be done without transforming the world or America into a ‘dystopian nightmare’ of enforcement.

I’ll also note that I am far more optimistic than most other people I talk to about the prospect of getting China to make a deal here, since a deal would very much be in China’s national interest, and in the interest of the CCP. If America were willing to take one for Team Humanity, it seems odd to assume China would necessarily defect and screw that up.

You should, of course, condition on what you believe, and favor the level of restriction and precaution appropriate to that. That includes your practical model of what is and is not achievable.

Many people shouldn’t support the proposal as stated, not at this time, because many if not most people do not believe AGI will arrive soon or are not worried about it, or do not see how the proposal would be helpful, and therefore do not agree with the logic underlying the proposal.

However, 46% of Americans, according to a recent poll, including 60% of adults under the age of 30, are somewhat or very concerned that AI could end human life on Earth. Common sense suggests that if you are ‘somewhat concerned’ that some activity will end human life on Earth, you might want to scale back the activity in question to fix that concern, even if doing that has quite substantial economic and strategic benefits.

What Do I Think About Eliezer’s Answers and Comms Strategies?

Would I have written the article the way Eliezer did, if I shared Eliezer’s model of AI risks fully? No.

I would have strived to avoid giving the wrong kinds of responses the wrong kinds of ammunition, and avoided the two key often quoted sentences, at the cost of being less stark and explicit. I would still have had the same core ask, an international agreement banning sufficiently large training runs.

That doesn’t mean Eliezer’s decision was wrong given his beliefs. Merely that I would not have made it. I have to notice that the virtues of boldness and radical honesty can pay off. The article got asked about in a White House press briefing, even if it got a response straight out of Don’t Look Up (text in the linked meme is verbatim).

It is hard to know, especially in advance, how much or which parts of the boldness and radical honesty are doing the work, which bold and radically honest statements risk backfire without doing the work, and which ones risk backfire but are totally worth it because they also do the work.

Do I agree with all of his answers to all the hypothetical questions, even conditional on his model of AI risk? No. I think at least two of his answers were both importantly incorrect and importantly unwise to say. Some of the other responses were correct, but saying them on the internet, or the details of how he said them, was unwise.

I do see how he got to all of his answers.

Do I think this ‘answer all hypothetical questions’ bit was wise, or good for the planet? Also no. Some hypothetical questions are engineered to and primarily serve to create an attack surface, without actually furthering productive discussion.

I do appreciate the honesty and openness of, essentially, open sourcing the algorithm and executing arbitrary queries. Both in the essay and in the later answers.

The world would be a better place if more people did more of that, especially on the margin, even if we got a lesson in why more people don’t do that.

I also appreciate that the time has come that we must say what we believe, and not stay silent. Things are not going well. Rhetorical risks will need to be taken. Even if I don’t love the execution, better to do the best you can than stand on the sidelines. The case had to be laid out, the actual scope of the problem explained and real solutions placed potentially inside a future Overton Window.

If someone asked me a lot of these hypothetical questions, I would have (often silently) declined to answer. The internet is full of questions. One does not need to answer all of them. For others, I disagree, and would have given substantively different answers, whereas if my true answer had been Eliezer’s, I would have ignored the question. For many others, I would have made different detail choices. I strive for a high level of honesty and honor and openness, but I have my limits, and I would have hit some of them.

I do worry that there is a deliberate attempt to coalesce around responding to any attempt at straight talk about the things we need to get right in order to not all die with ‘so you’re one of those bad people who want to bomb things, which is bad’ as part of an attempt to shut down such discussion, sometimes even referencing nukes. Don’t let that happen. I hope we can ignore such bad faith attacks, and instead have good discussions of these complex issues, which will include reiterating a wide array of detailed explanations and counter-counter-arguments to people encountering these issues for the first time. We will need to find better ways to do so with charity, and in plain language.