The thing you have said (presence of an isomorphism) is not equality in category theory. Set-theoretic equality is equality in category theory (assuming doing category theory with set-theoretic foundations). Like, we could consider a (small) category as set of objects + set of morphisms + function assigning ordered pair of objects to each morphism.

Rather, what you're talking about is a certain type of equivalence relation (presence of an isomorphism). It doesn't always behave like equality, because it is not equality.

Thank you, that is clearly correct and I'm not sure why I made that error. Perhaps because equivalence seems more interesting in category theory than in set theory? Which is interesting. Why is equivalence more central in category theory than set theory?

I think with category theory, isomorphism is the obvious equivalence relation on objects in a category, whereas in set theory, which equivalence relation to use depends on context. E.g. we could consider reals as equivalence classes of Cauchy sequences of naturals (equivalent when their difference converges to 0). The equivalence relation here is explicit, it's not like in category theory where it follows from other structures straightforwardly.

Besides isomorphisms and equality of objects, do category theorists use other notions of "equality"?

There's the equivalence of categories. Two categories are equivalent when they are isomorphic up to an isomorphism. Specifically, if you have two functors and , such that there are natural isomorphisms (invertible natural transformations) and . On objects, this means that if you start at an object in , then you can go to in and then to in that is isomorphic to : . Similarly if you start at some object in .

Equivalence is an isomorphism when the isomorphisms (equivalently,[1] the natural transformations between the compositions and identity functors) are equalities.

An even weaker equality-like notion is adjunction and this where things start to get asymmetrical. There's a few equivalent[2] ways of defining them[3], but the contextually simplest way (if not completely rigorous), since I just described equivalences, is that it's an equivalence, except the natural transformations and are not (in general) isomorphic. So, you go and you could instead have gotten there via some morphism in the category but you may not be able to go back . On the other hand, starting at some in , you can take the trip and go back to where you started via some morphism within , . Again, there may not be a morphism .

Then we say that F is left adjoint to G (equivalently, G is right adjoint to F), denoted . The natural transformations and are called the unit and the counit of the adjunction, respectively.

Seven Sketches in Compositionality introduces adjunctions in a slow and palatable way that is good for building an intuition, starting with Galois connections, which are just adjunctions for preorders, which are just Bool-categories.

Two categories are equivalent when they are isomorphic up to an isomorphism

Thaaaaat is a confusing sentence. But thankfully, the rest of your comment clears things up.

Why do you want this notion of equivalence or adjunction, rather than the stricter notion of isomorphism of categories?

Why do you want this notion of equivalence or adjunction, rather than the stricter notion of isomorphism of categories?

As far as I understand/can tell, the context of discovery in category theory is mostly category theorists noticing that a particular kind of abstract structure occurs in many different contexts and thus deserves a name. The context of justification in category theory is mostly category theorists using a particular definition in various downstream things and showing how things fit nicely, globally, everything being reflected in everything else/the primordial ooze, that sort of stuff.

To give an example, if you have a category with all products and coproducts, you can conceive them as functors from the product category to itself. We can define a "diagonal functor", that just "copies" each object and morphism, . It turns out the coproduct is its left adjoint and the product is its right adjoint: .

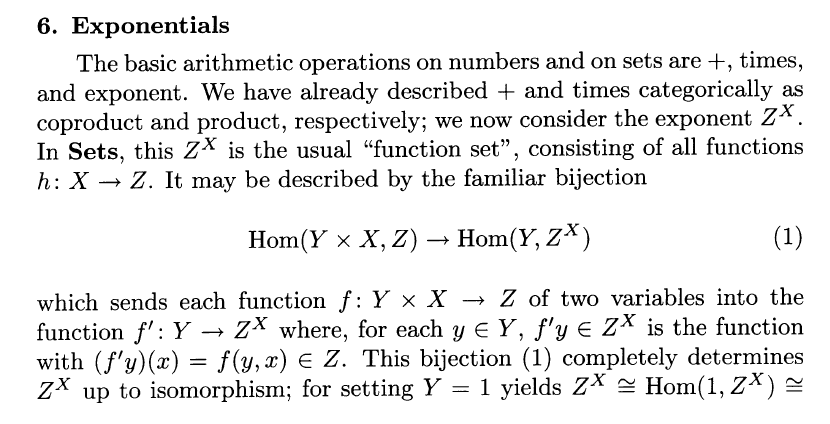

Now, if you fix any particular object and think of the product as an endofunctor on , and of exponentiation as another endofunctor , then these two again form an adjunction: . Using the definition of an adjunction in terms of hom-set isomorphisms, this is just the currying thing: . In fact, this adjunction can be used as the basis to define the exponential object. For example, here's an excerpt from Sheaves in Geometry and Logic.

I don't know about category theory, but identity can't be defined in first-order logic, so it is usually added as a primitive logical predicate to first-order logic. Adding infinite axiom schemas of the sort you showed above don't qualify as a definition, which has to be a finite biconditional. But identity is definable in pure second-order logic. Using Leibniz's law, one can define

Intuitively, and are defined identical iff they have all their properties in common. Formally, there is always a subset of the domain in the range of and which for any object contains only . This guarantees that the definition cannot accidentally identify different objects.

For instance, are the symbols "1" = "2" in first order logic? Depends on the axioms!

Yes and that is because in predicate logic, different names (constant symbols) are allowed to refer to the same object or to different objects, while the same names always refer to the same object. This is similar to natural language where one thing can have multiple names. Frege (the guy who came up with modern predicate logic) has written about this in an 1892 paper:

Equality gives rise to challenging questions which are not altogether easy to answer. Is it a relation? A relation between objects, or between names or signs of objects? In my Begriffsschrift I assumed the latter. The reasons which seem to favour this are the following: a = a and a = b are obviously statements of differing cognitive value; a = a holds a priori and, according to Kant, is to be labelled analytic, while statements of the form a = b often contain very valuable extensions of our knowledge and cannot always be established a priori. The discovery that the rising sun is not new every morning, but always the same, was one of the most fertile astronomical discoveries. Even to-day the identification of a small planet or a comet is not always a matter of course. Now if we were to regard equality as a relation between that which the names

a' andb' designate, it would seem that a = b could not differ from a = a (i.e. provided a = b is true). A relation would thereby be expressed of a thing to itself, and indeed one in which each thing stands to itself but to no other thing. What is intended to be said by a = b seems to be that the signs or namesa' andb' designate the same thing, so that those signs themselves would be under discussion; a relation between them would be asserted. But this relation would hold between the names or signs only in so far as they named or designated something. It would be mediated by the connexion of each of the two signs with the same designated thing. But this is arbitrary. Nobody can be forbidden to use any arbitrarily producible event or object as a sign for something. In that case the sentence a = b would no longer refer to the subject matter, but only to its mode of designation; we would express no proper knowledge by its means. But in many cases this is just what we want to do. If the signa' is distinguished from the signb' only as object (here, by means of its shape), not as sign (i.e. not by the manner in which it designates something), the cognitive value of a = a becomes essentially equal to that of a = b, provided a = b is true. A difference can arise only if the difference between the signs corresponds to a difference in the mode of presentation of that which is designated. Let a, b, c be the lines connecting the vertices of a triangle with the midpoints of the opposite sides. The point of intersection of a and b is then the same as the point of intersection of b and c. So we have different designations for the same point, and these names (point of intersection of a and b,'point of intersection of b and c') likewise indicate the mode of presentation; and hence the statement contains actual knowledge.

It is natural, now, to think of there being connected with a sign (name, combination of words, letter), besides that to which the sign refers, which may be called the reference of the sign, also what I should like to call the sense of the sign, wherein the mode of presentation is contained. In our example, accordingly, the reference of the expressions

the point of intersection of a and b' andthe point of intersection of b and c' would be the same, but not their senses. The reference ofevening star' would be the same as that ofmorning star,' but not the sense. (...)

He argues that identity expressed between names is a relationship between their "modes of presentation" (senses/meanings) rather than between their names or reference object(s). This makes sense because different names can be synonymous or not, and only if they are we can infer equality, while in the latter case whether they are equal or unequal has to be established by other means.

Sorry, I used the word "definition" sloppily there. I don't think we disagree with each other.

I meant something closer to "how equality is formalized in first order logic". That's what the bit about the axiom schemas was referencing: it's how we bake in all the properties we require of the special binary predicate "=". There's a big, infinite core of axiom schemas specifying how "=" works that's retained across FOLs, even as you add/remove character, relation and function symbols to the language.

But I'm pretty sure all instances of the axiom schema

(for any FOL-definable predicate in our theory) are already implied if we, as is customary, assume as logic FOL+identity, i.e. first-order predicate logic with a primitive logical predicate for identity. So in that case we don't need the axiom schema. (And in second and higher order logic we need neither an axiom schema nor a primitive relation, because in that case identity is already definable in pure logic, without any theory, so we have a logical identity relation without having it to add as a primitive.) If we just assume FOL alone, adding this axiom schema to a particular first-order theory makes sense, but I conjecture that it is not equivalent to full (primitive or second-order definable) identity, similar to how the axiom schema of induction is not equivalent to the induction axiom, which requires second-order logic.

I'm confused. In any FOL, you have a bunch of "logical axioms" which come built-in to the language, and axioms for whatever theories you want to investigate in said language. You need these or else you've got no way prove basically anything in the language, since your deduction rules are: state an axiom from your logical axioms, from your assumed theory's axioms, or Modus Ponens. And the logical axioms include a number of axioms schemas, such as the ones for equality that I describe, no?

Yes, with Hilbert proof systems, since those have axioms / axiom schemata. (In natural deduction systems there are only inference rules like Modus ponens, no logical axioms.) But semantically, a "primitive" identity symbol is commonly already interpreted to be the real identity, which would imply the truth of all instances of those axiom schemes. Though syntactically, for the proof system, you indeed still need to handle equality in FOL, either with axioms (Hilbert) or special inference rules (natural deduction).

These syntactical rules are weaker in FOL however than the full (semantic) notion of identity. Because they only infer that all "identical" objects have all first-order definable predicates in common, which doesn't cover all possible properties, and which holds also for weaker forms of equality ("first-order equivalence").

Eliezer mentioned the predicate "has finitely many predecessors" as an example of a property that is only second-order definable. So two distinct objects could have all their first-order definable properties in common while not being identical. The first-order theory wouldn't prove that they are different. The second-order definition of identity, on the other hand, ranges over all properties rather than over all first-order definable ones, so it captures real identity.

Right, if we rely on this notion of "the real identity". I think discussing that would get into even more confusing territory than just focusing on formalizations that look like they're some kind of equality.

OK, maybe if we look at some other definitions of equality we can get a grip on it? In set theory, you say that two sets are equal if they’ve got the same elements. How do you know the elements are the same i.e. equal? You just know.

You are misunderstanding the axiom of extensionality, which states that two sets A and B are equal if both (1) every element of A is an element of B and (2) every element of B is an element of A. This does not require any nebulous notion of "they've got the same elements", and is completely unrelated to the concept of equality at the level of elements of A and B.

By the way, the axiom of extensionality is an axiom rather than a definition; in set theory equality is treated as an undefined primitive, axiomatized as a notion of equality as in first order logic. This is important because if A and B are equal according to the axiom of extensionality, then that axiom implies that A is in some collection of sets C if and only if B is in C.

But if you enrich the category with some more discriminating maps, say distance preserving ones, then the sphere and cube are no longer equal. Conversely, if you reduce the category by removing all the isomorphisms between the sphere and the cube, then they are no longer equal.

Actually you have just described the same thing twice. There are actually fewer distance-preserving maps than there are continuous ones, and restricting to distance-preserving maps removes all the isomorphisms between the sphere and the cube.

Actually you have just described the same thing twice. There are actually fewer distance-preserving maps than there are continuous ones, and restricting to distance-preserving maps removes all the isomorphisms between the sphere and the cube.

That is a very good point. Hmm. So it seems just plain false that you can break equivalence between two objects by enriching the number of maps between them?

Yes. If f and g are in the original category and are inverses of each other, the same will be true of any larger category (technically: any category which is the codomain of a functor whose domain is the original category).

Not sure if this is the thing you're confused about or not, but seems worth making sure you're clear on the difference between judgemental equality and propositional equality. Chapter 1 of the Homotopy Type Theory book has a good explanation.

Yeah, that sure does seem related. Thinking about it a bit more, it feels like equality refers to a whole grab-bag of different concepts. What separates them, what unites them and when they are useful are still fuzzy to me.

OK, maybe if we look at some other definitions of equality we can get a grip on it? In set theory, you say that two sets are equal if they've got the same elements. How do you know the elements are the same i.e. equal? You just know.

If we have the axiom of foundation -- or, more perspicuously maybe, the principle of epsilon-induction its equivalent to in presence of other usual axioms -- the picture is less mysterious.

Yes, in order to tell if A and B are equal, we need to see if they have the same elements, but in order to do that we need to already know how to tell if any two elements are the same, and so on, which does not seem to actually get us anywhere.

Except that when A or B has no elements, we don't need to have already figured out any way to tell two elements apart -- if A has no elements but B does, then A and B are not equal, and if both A and B have no elements, its trivially true that all their elements are equal, so A is equal to B, and they are both the unique empty set. Given foundation, all membership chains terminate at the empty set, so we've now got a way to use extensionality -- the principle that two sets are the same if they have got exactly the same elements -- to give us a non-circular notion of equality by grounding in the empty case.

(If we don't have foundation, or if we indeed actively welcome non-well-founded sets, we do need something beyond extensionality to give a well-defined notion of equality, e.g. bisimulation in case of the anti-foundation-axiom.)

Different formalisations have different ideas of what “equals” means.

Principia Mathematica took the approach that for booleans p and q you have a relation p \equiv q, and then if you have some universe of objects and predicates on those objects, x equals y iff for all predicates f [in your universe of predicates] f(x) \equiv f(y).

But that’s not how typical automated theorem provers define equals.

PM is kind of backwards relative to how it’s usually done. You can start with equals as a primitive notion, and then have an axiom of referential transparency that x = y -> f(x) = f(y)

But PM did it backwards, define x = y as \forall f : f(x) \equiv f(y)

having just gone and investigated how Lean does it:

axiom propext : ∀ {a b : Prop}, (a ↔ b) → a = b

[i.e. for Prop — not arbitrary types — there’s an axiom that says equivalence implies equality]

Since multiple answers here mention equality being undefinable in first-order logic, I want to say that that's only true if there are an infinite number of constants/functions/predicates in the language. Since set theory can be formalized using only a single predicate, it is possible to define equality this way:

(Where x and y can be the same variable, but z must be a different variable from them both)

By simply replacing every instance of the formula "x=y" with this definition, set theory can be formalized in first-order logic without equality.

Right, but models of set theory without equality can contain many indistinguishable "copies" of the same set, and in this sense the "extensionally equivalent" predicate does not define equality (in the sense of the identity relation on the universe of the model).

That's also true in first-order logic with equality, since nothing except convention stops us from considering models where multiple objects are equal according to the equality predicate. The choice to exclude models which include duplicate objects is just a side-condition used to filter out inconvenient models when studying semantics. We can include such a side-condition when considering the semantics of set theory without equality, too, so it doesn't seem fair to me to single it out as being uniquely incapable of defining equality.

(In fact, I'd argue that this also applies to second-order logic. Second-order logic can be given Henkin semantics, which have all the same idiosyncracies as first-order semantics. Using these semantics, we can get models of second-order logic with duplicate objects, just like first-order logic. I'd argue that standard second-order semantics are more or less just using a side-condition to filter out models which have missing subsets. But if we wanted to we could include similar side-conditions when considering models of first-order set theory, too, so it doesn't seem fair to me to to say second-order logic can define equality while first-order logic can't.)

Whilst cycling today, I remembered that when reading Mark Kac's autobiography, Enigmas of Change, which is truly fantastic, his search for the meaning of probabilistic independence struck me as massive. What would it even mean to understand independence at a deep level? It's just P(A,B) = P(A)P(B), surely? The biography didn't truly answer that question for me, but it did gesture at what a deeper understanding might look like.

Which then reminded me that there was a famous analysis of the definition of equality in mathematics. So I figured, now's as good a time as any to get deeply confused about the most basic concept in mathematics. And I succeeded.

First off, a brush-up with equality. What's the standard definition? Well, it's a binary, reflexive, symmetric and transitive relationship. Or, at least, that's what an equivalence relation is. You can get a trivial equivalence relationship: everything's equivalent to everything else. Not selective enough to define equality.

OK, maybe if we look at some other definitions of equality we can get a grip on it? In set theory, you say that two sets are equal if they've got the same elements. How do you know the elements are the same i.e. equal? You just know.

Maybe that comes from first order logic? How's equality defined there? Same as above, except there's for two big old schemas of properties it satisfies. First, if x=y→f(...,x,...)=f(...,y,...)

for any function f we can cook up in our logic's syntax. Second,

x=y→ϕ(...,x,...)→(ϕ(...,y,...))

for any property ϕ we can cook up in our logic's syntax.

In other words, if x=y, then they can substitute for one another in anything we do to them.

Which is a heck of a lot more oomph than just saying equality is an equivalence relation. But now I'm less confused. I've undone all my hard work. I'll have to look at more definitions to remedy the situation.

OK, what about in category theory? There, the notion of equality depends on context. (And in type theory.) For in a category (objects plus maps between them) two objects are equal iff there is an isomorphism between them. That is, X=Y implies there is some g:X−>Y with a corresponding inverse s.t.

g−1∘g=IdX,g∘g−1=IdY.

Let me give an example. Take a category of shapes, but with only continuous functions between them. Then, a sphere and a cube are isomorphic to one another, i.e. they are equal. Wait, what?

Yes, a cube and a sphere are equal to one another if you only have continuous maps available to you. For the purposes of continuous maps, the distances between things don't matter, only topology. And a cube and a sphere have the same topology.

But if you enrich the category with some more discriminating maps, say distance preserving ones, then the sphere and cube are no longer equal. Conversely, if you reduce the category by removing all the isomorphisms between the sphere and the cube, then they are no longer equal.

This is what I meant by equality being contextual in category theory. Two objects may or may not be equal, depending on the category they're in. You can go to a "bigger" category and make two objects unequal. Or you can go to a smaller category and make them unequal.

But that doesn't make sense. In a theory in first order logic, whether two things are equal or not also depends on the context i.e. the axioms of the and theory you're working with. For instance, are the symbols "1" = "2" in first order logic? Depends on the axioms! You may not be able to prove it either way if the axioms are weak enough. Which is certainly the case if your theory has no axioms, except for the logical axioms assumed by the logic itself.

If you add some axioms and strengthen the theory, then you could get equality or inequality. But once you have it, adding axioms can't change whether two things are equal or not. At least, not without running into contradictions?

So what gives? Why is equality in category theory more "flexible" than of first order logic? And have I remystified equality for myself?

In both cases, equality is reflexive, symmetric, transitive and binary. In both cases, if two objects are equal, then you can substitute them for one another in any operation and wind up with equivalent results.

Is it just that you can have many different equalities between objects in category theory? Is it some weird feature of the semantics of the theory? My current guess is that the equivalent of the axiom schemas for equality from FOL in category theory are all the statements like g−1∘g=IdX,g∘g−1=IdY. We can add/remove any of these conditions (except when g:X−>X=IdX) from the category. But we can't do the same for the axiom schemas in FOL. And that's why they're more rigid.

So confusion dissolved? Well, at least until I tackle equality in programming.