There are a lot of important questions that Eric doesn’t say much about.

How to explain this? (I note that he not only fails to proactively address these questions, but also ignores them when others raise them, which seems totally inexplicable to me. Or at least that was my experience when I participated in the CAIS discussion after it came out.)

I feel like trying to explain it properly would veer more into speculating-about-his-psychology than I really want to. But it doesn't seem totally inexplicable to me, and I'd imagine that an explanation might look something like:

Eric doesn't think it's his comparative advantage to answer these questions; he also sometimes experiences people raising them as distracting from the core messages he is trying to convey.

(To be clear, I'm not claiming that this is what is happening; I'm just trying to explain why it doesn't feel in-principle inexplicable to me.)

His most recent article explains how a lot of his thinking fits together, and may be a good way to get a rough orientation (or see below for more of my notes).

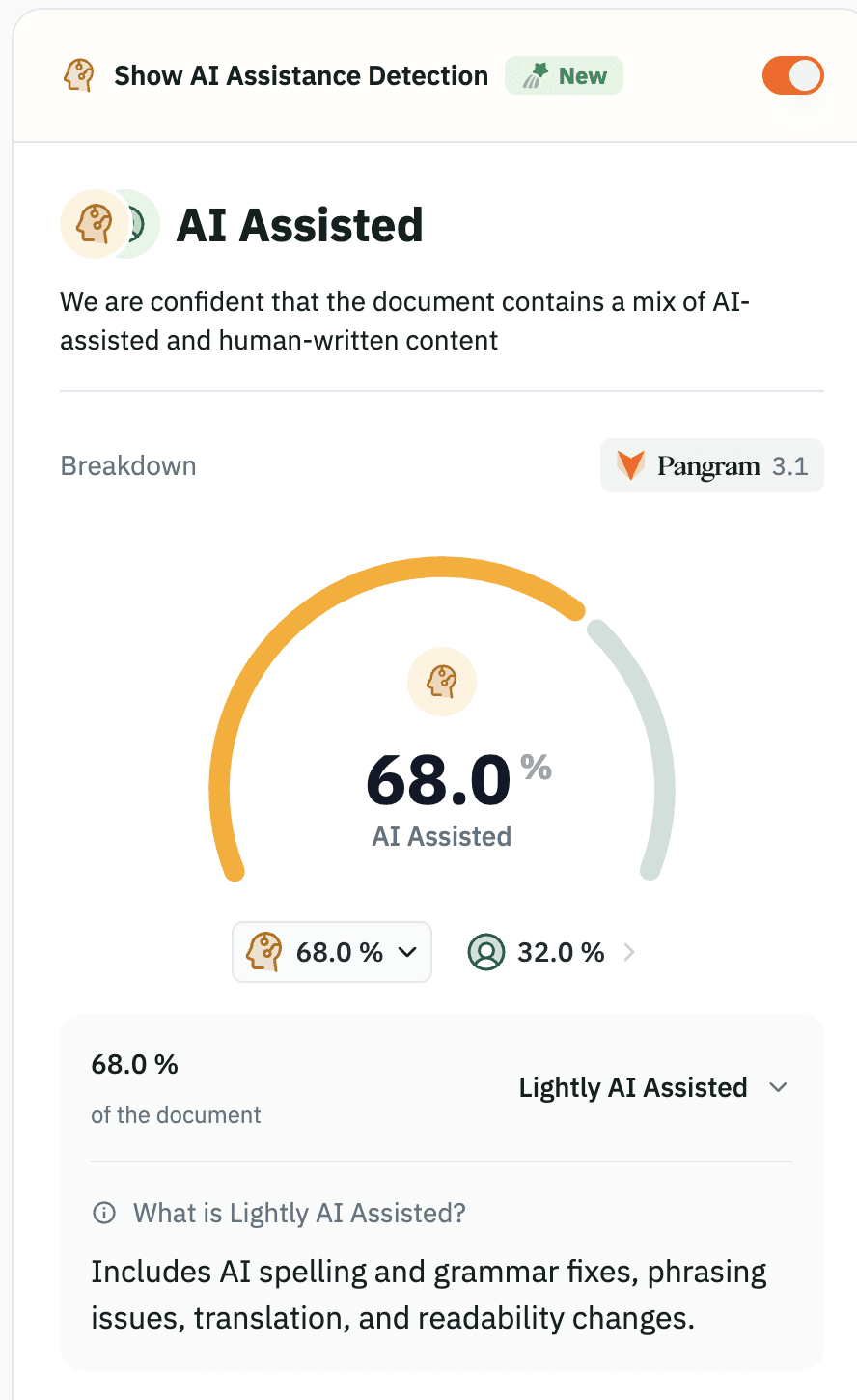

Am I crazy or does that article just read like AI slop? Pangram seems to think it's substantially AI-written[1], and my experience of reading it is definitely mostly of reading weirdly vague large metaphors that I associate with AI-slop writing.

He mentions this before the footnotes:

The workflow leading to this post:

I built the Substack series → Claude-in-project identified and summarized the conceptual core → I steered iterations and edited the product.

it fails to produce a readable essay

Do you just dislike the writing style or do you think it's seriously "unreadable" in some sense?

In my opinion, an article like this is not worth the time to decipher. There are probably some good ideas here but they're buried under a low signal to noise ratio.

Intelligence is a resource, not an entity

This is like Ryle-inspired pseudo-philosophy. I don't understand what these terms mean or why I am being told not to confuse them. And it doesn't connect with his next claim that superintelligent AIs don't need to be agents when structured workflows can steer them into having capabilities. I wish he'd dwell on this point more, but he never brings it up again.

The crucial question, then, is what we should do with AI, not what “it” will do with us.

Um, no it's not. This is just a rhetorically empty antimetabole completely disconnected from the rest of the essay.

Expanding implementation capacity creates hypercapable world.

Nice truism there. Is that sentence even grammatically correct?

Rather than fragile vibe-coded software, AI will yield rock-solid systems

For both learning and inference, costs will fall, or performance will rise, or both

Can you substantiate your points instead of just saying things?

AI-enabled implementation capacity applied to expanding implementation capacity, including AI: this is what “transformative AI” will mean in practice.

Umm... What?

optimization means minimizing—not maximizing—resource consumption

This is just flatly false. Lots of optimization problems involve finding a maximum, like if you're a salesman and want to sell as many goods as possible.

The framework I’ve described is intellectual infrastructure for a transition that will demand clear thinking under pressure

The framework described here is nothing, and I don't even understand the problem it was supposed to solve.

I could go further, but you get the point.

So yeah, this essay is badly written slop. It's hard to read but not just because it's platitudinous. The ideas are all over the place and don't logically connect, and it's riddled with irrelevant, unsubstantiated claims.

I take this to be pretty strong evidence that this is not a good article for people reading Drexler to start with! (FWIW I valued reading it, but I'm now realising that the value I got was largely in understanding a bit better how Eric's sweep of ideas connect, and perhaps that wouldn't have been available to me if I hadn't had the background context.)

Edit: I edited the original post to change the recommendation there slightly.

Yeah, I'm sure this is not a typical example of his writing style or exposition of the ideas he's advocated for over the bulk of his career.

I think it's pretty seriously unreadable. Like, most of it is vague big metaphors that fail to explain anything mechanistically.

I'm quoting the one that was linked, AI Prospects: Understanding Options in a Hypercapable World.

If you were talking about Owen's essay, that's not what this thread is about. (And if so, please take this as a datapoint against commenting with low context.)

I have been reading Eric Drexler’s writing on the future of AI for more than a decade at this point. I love it, but I also think it can be tricky or frustrating.

More than anyone else I know, Eric seems to tap into a deep vision for how the future of technology may work — and having once tuned into this, I find many other perspectives can feel hollow. (This reminds me of how, once I had enough of a feel for how economies work, I found a lot of science fiction felt hollow, if the world presented made too little sense in terms of what was implied for off-screen variables.)

One cornerstone of Eric’s perspective on AI, as I see it, is a deep rejection of anthropomorphism. People considering current AI systems mostly have no difficulty understanding AI as a technology rather than a person. But when discussion moves to superintelligence … well, as Eric puts it:

Anyhow, I think there's a lot to get from Eric’s writing — about the shape of automation at scale, the future of AI systems, and the strategic landscape. So I keep on recommending it to people. But I also feel like people keep on not quite knowing what to do with it, or how to integrate it with the rest of their thinking. So I wanted to provide my perspective on what it is and isn’t, and thoughts on how to productively spend time reading. If I can help more people to reinvent versions of Eric’s thinking for themselves, my hope is that they can build on those ideas, and draw out the implications for what the world needs to be doing.

If you’ve not yet had the pleasure of reading Eric’s stuff, his recent writing is available at AI Prospects. His most recent article explains how a lot of his thinking fits together, but some people have expressed that it’s a difficult entry point (see below for more of my notes giving a different overview) — so I’d advise choosing some part from one of the overviews that catches your interest, and diving into the linked material.

Difficulties with Drexler’s writing

Let’s start with the health warnings:

These properties aren’t necessarily bad. Abstraction permits density, and density means it’s high value-per-word. Ontological challenge is a lot of the payload. But they do mean that it can be hard work to read and really get value from.

Correspondingly, there are a couple of failure modes to watch for:

Perhaps you’ll think it’s saying [claim], which is dumb because [obvious reason][1].

How to read Drexler

Some mathematical texts are dense, and the right way to read them is slowly and carefully — making sure that you have taken the time to understand each sentence and each paragraph before moving on.

I do not recommend the same approach with Eric’s material. A good amount of his content amounts to challenging the ontologies of popular narratives. But ontologies have a lot of supporting structure, and if you read just a part of the challenge, it may not make sense in isolation. Better to start by reading a whole article (or more!), in order to understand the lay of the land.

Once you’ve (approximately) got the whole picture, I think it’s often worth circling back and pondering more deeply. In many cases, individual paragraphs or even sentences are quite idea-dense, and can reward close consideration. I’ve benefited from coming back to some of his articles multiple times over an extended period.

Other moves that seem to me to be promising for deepening your understanding:

Try to understand it more concretely. Consider relevant examples[2], and see how Eric’s ideas apply in those cases, and what you make of them overall.

What Drexler covers

In my view, Eric’s recent writing is mostly doing three things:

1) Mapping the technological trajectory

What will advanced AI look like in practice? Insights that I’ve got from Eric’s writing here include:

2) Pushing back on anthropomorphism

If you talk to Eric about AI risk, he can seem almost triggered when people discuss “the AI”, presupposing a single unitary agent. One important thread of his writing is trying to convey these intuitions — not that agentic systems are impossible, but that they need not be on the critical path to transformative impacts.

My impression is that Eric’s motivations for pushing on this topic include:

A judgement that many safety-relevant properties could[3] come from system-level design choices, rather than from relying on the alignment of the individual components

3) Advocating for strategic judo

Rather than advocate directly for “here’s how we handle the big challenges of AI” (which admittedly seems hard!), Eric pursues an argument saying roughly that:

So rather than push towards good outcomes, Eric wants us to shape the landscape so that the powers-that-be will inevitably push towards good outcomes for us.

The missing topics

There are a lot of important questions that Eric doesn’t say much about. That means that you may need to supply your own models to interface with them; and also that there might be low-hanging fruit in addressing some of these and bringing aspects of Eric’s worldview to bear.

These topics include[4]:

Translation and reinvention

I used to feel bullish on other people trying to write up Eric’s ideas for different audiences. Over time, I’ve soured on this: I think what’s needed is less a matter of translating simple insights, and more a matter of people internalizing those insights and then sharing the fruits.

In practice, this blurs into reinvention. Just as mastering a mathematical proof means comprehending it to the point that you can easily rederive it (rather than just remembering the steps), I think mastering Eric’s ideas is likely to involve a degree of reinventing them for yourself and making them your own. At times, I’ve done this myself[5], and I would be excited for more people to attempt it.

In fact, this would be one of my top recommendations for people trying to add value in AI strategy work. The general playbook might look like:

Pieces I’d be especially excited to see explored

Here’s a short (very non-exhaustive) list of questions I have that people might want to bear in mind if they read and think about Eric’s perspectives:

[1] When versions of this occur, I think it’s almost always that people are misreading what Eric is saying, perhaps rounding it off into some simpler claim that fits more neatly into their usual ontology. This isn’t to say that Eric is right about everything, just that I think dismissals usually miss the point. (Something similar to this dynamic has, I think, been repeatedly frustrating to Eric, and he wrote a whole article about it.) I am much more excited to hear critiques or dismissals of Drexler from people who appreciate that he is tracking some important dynamics that very few others are.

[2] Perhaps with LLMs helping you to identify those concrete examples? I’ve not tried this with Eric’s writing in particular, but I have often found LLMs helpful for moving from the abstract to the concrete.

[3] This isn’t a straight prediction of how he thinks AI systems will be built. Nor is it quite a prescription for how AI systems should be built. His writing is one stage upstream of that: he is trying to help readers to be alive to the option space of what could be built, in order that they can chart better courses.

[4] He does touch on several of these at times. But they are not his central focus, and I think it’s often hard for readers to take away much on these questions.

[5] Articles on AI takeoff and nuclear war, and especially Decomposing Agency, were the result of a bunch of thinking after engaging with Eric’s perspectives. (Although I had the advantage of also talking to him; I think this helped but wasn’t strictly necessary.)