Personally, I think there's a simple fix to this for agentic systems, and it has already been implemented for o3. Zoom and pan. If the model can zoom in all the way to the pixel level, and then beyond, so that the pixels get duplicated in all directions until a single repeated "pixel" fills and entire visual token patch... then the perceptual problem is gone. Now it's back to a general intelligence problem. I'm not saying that's an optimal solution, just a simple one.

I think that's a decent workaround, and I think I've seen cases where o3 is significantly better at this kind of problem.

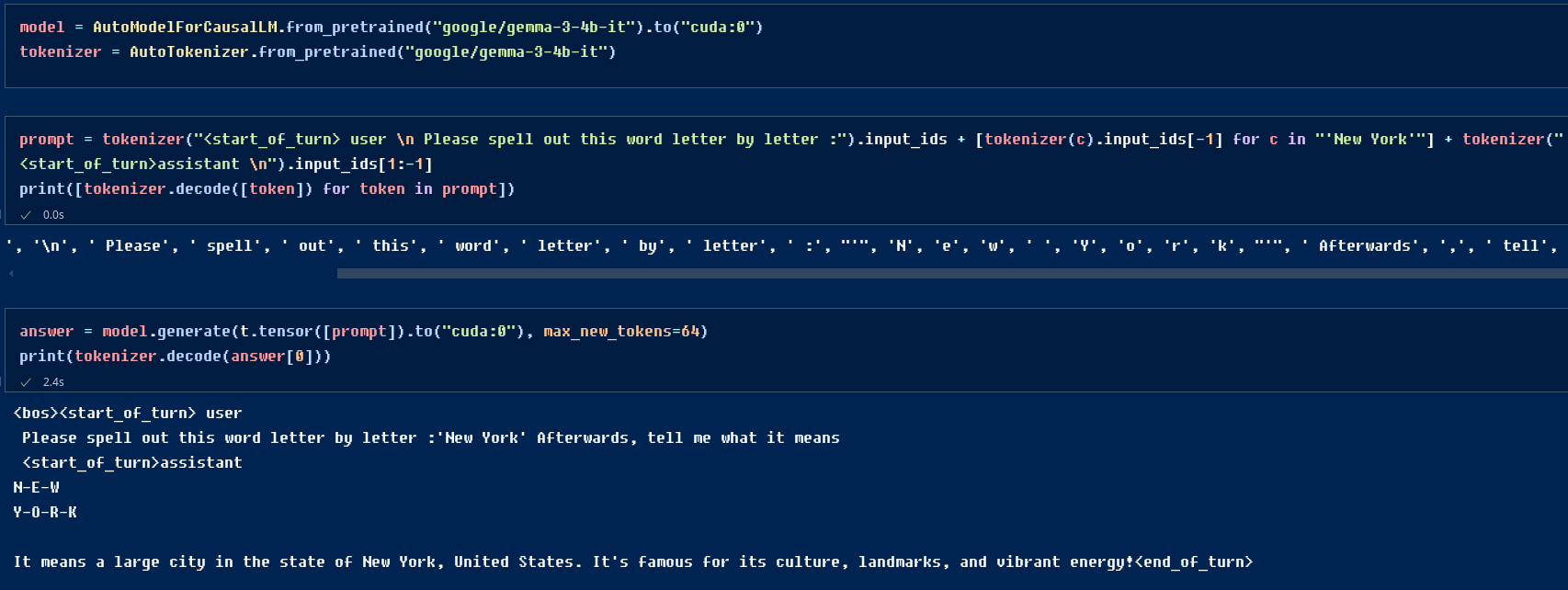

You could probably build a similar tool to look closer at words, where detokenize(something) returns s-o-m-e-t-t-h-i-n-g. Although you also need a tokenize(s-o-m-e-t-h-i-n-g) function, and it might be hard for an LLM to learn to use this.

I think ~all tokenizers have a token for every letter. So you could just have tokenize("something") return 's', 'o', 'm', 'e', 't', 'h', 'i', 'n', 'g' to the LLM as 9 individual tokens.

Yes, but note that even if you are able to force the exact tokenization you want and so can force the byte-level fallbacks during a sample, this is not the same as training on byte-level tokenization. (In the same way that a congenitally blind person does not instantly have normal eyesight after a surgery curing them.) Also, you are of course now burning through your context window (that being the point of non-byte tokenization), which is both expensive and leads to degraded performance in its own right.

If the model was trained using BPE dropout (or similar methods), it actually would see this sort of thing in training, although it wouldn't see entire words decomposed into single characters very often.

I don't think it's public whether any frontier models do this, but it would be weird if they weren't.

BPE dropout, yes, or just forcibly encoding a small % into characters, or annealing character->BPE over training, or many things I have suggested since 2019 when I first became concerned about the effects of BPE-only tokenization on GPT-2's poetry and arithmetic... There are many ways to address the problem at, I think, fairly modest cost - if they want to.

but it would be weird if they weren't.

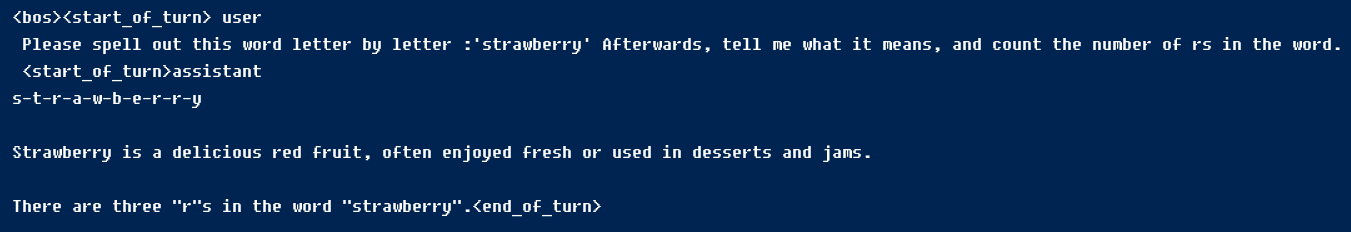

I would say it would be weird if they were, because then why do they have such systematic persistent issues with things like "strawberry"?

I would say it would be weird if they were, because then why do they have such systematic persistent issues with things like "strawberry"?

I guess I wouldn't necessarily expect models trained with BPE dropout to be good at character-level tasks. I'd expect them to be better at learning things about tokens, but they still can't directly attend to the characters, so tasks that would be trivial with characters (attend to all r's -> count them) become much more complicated even if the model has the information (attend to 'strawberry' -> find the strawberry word concept -> remember the number of e's).

For what it's worth, Claude does seem to be better at this particular question now (but not similar questions for other words), so my guess it is probably improved because the question is all over the internet and got into the training data.

I think all tokens appear frequently enough that they know the meaning of those single letter tokens. For example if there is a text that ends "..healt", and "heal" is a token, the last one becomes a "t" token.

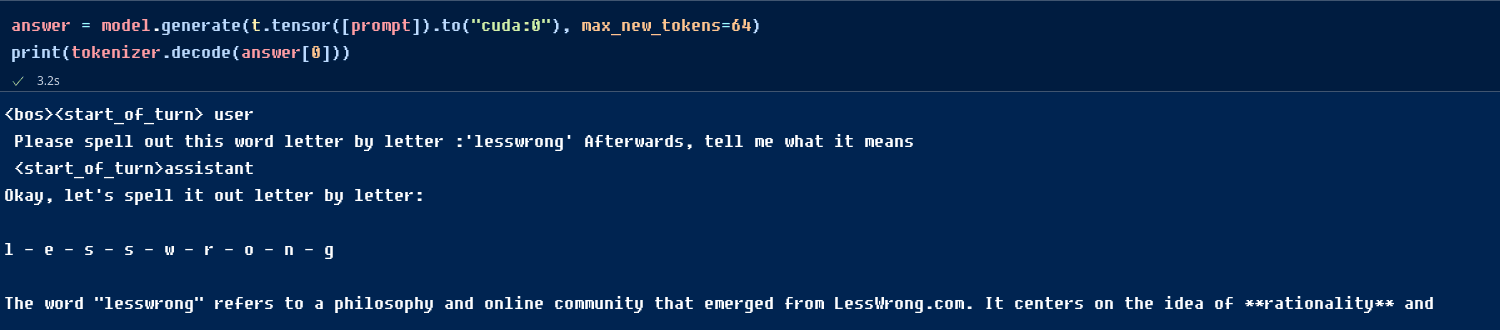

I mean you can check this, and even quite small and not-so-smart models understand how to read the letter tokens.

(after <start_of_turn>assistant is the model's generation)

I think all tokens appear frequently enough that they know the meaning of those single letter tokens.

Knowing the meaning of single letter tokens is not enough. That is quite trivial, and they of course do know what single letter BPEs mean.

But it is the opposite of what they need to know. A LLM doesn't need to know what the letter 'e' is (it will, after all, see space-separated letters all the time); it needs to know that there is only 1 such 'e' letter in the word 'strawberry'. The fact that there exists an 'e' BPE doesn't change how the word 'strawberry' gets tokenized as a single opaque BPE (rather than 10 single-letter BPEs including the 'e' letter). And this has to happen at scale, across all of the vocabulary, in a way which allows for linguistic generalization and fluency, and learning implicit properties like phonetics etc.

I'm commenting on the "zoom" tool thing. If it needs to know how many 'e's are in strawberry it calls the zoom(strawberry) tool, and it returns "s" "t" "r" "a" "w" "b" "e" "r" "r" "y". And it knows what an e is, so it can count it. The above is just demonstrating that models know how to reason about single letter tokens.

Edit: reading above I see that my first comment could be interpreted as saying we should just do character-only tokenization during inference. This isn't what I was suggesting.

reading above I see that my first comment could be interpreted as saying we should just do character-only tokenization during inference. This isn't what I was suggesting.

Yes, that is what I took you to mean.

Anyway, to address your revised claim & example: this may work in that specific simple 'strawberry' task. The LLMs have enough linguistic knowledge to interpret that task and express it symbolically and handle it appropriately with its tools like a 'zoom tool' or a Python REPL.

However, this is still not a general solution, because in many cases, there either is no such tool-using/symbolic shortcut or the LLM would not feasibly come up with it. Like in the example of the 'cuttable tree' in Pokemon: is there a single pixel which denotes being cuttable? Maybe there is, which could be attacked using Imagemagick analogous to calling out to Python to do simple string manipulation, maybe not (I'm not going to check). But if there is and it's doable, then how does the LLM know which pixel to check for what value?

This is not how I learned to cut trees in Pokemon, and it's definitely not how I learned to process images in general, and if I had to stumble around calling out to Python every time I saw something new, my life would not be going well.

I don't know about this. Most of my perception is higher order, kind of tokenized, wrt words, text, vision, sound.

I can "pay close attention" if I want to see stuff on a character level / pixel level.

Seems like integrated enough zoom tools would work like this. And "paying attention" is fully general in the human case.

Seems like integrated enough zoom tools would work like this

Again, they cannot, because this is not even a well-defined task or feature to zoom in on. Most tasks and objects of perception do not break down as cleanly as "the letter 'e' in 's t r a w b e r r y'". Think about non-alphabetical languages, say, or non-letter non-binary properties. (What do you 'zoom in on' to decide if a word rhymes enough with another word to be usable in a poem? "Oh, I'd just call out using a tool to a rhyming dictionary, which was compiled by expert humans over centuries of linguistic analysis." OK, but what if, uh, there isn't such an 'X Dictionary' for all X?)

The issue is that tokenization masks information that sometimes is useful. Like token masks which letters are in a word. Or with non-alphabetical languages like hanzi I guess visual features of characters.

With stuff like rhyming, the issue isn't tokenization, its that the information was never there in the first place. Like Read vs Bead. No amount of zooming will tell you they don't rhyme. pronounciation is "extra stuff" tagged onto language.

So the "general purpose" of the zoom tool should just be to make all the information legible to the LLM. I think this is general and well defined. Like with images you could have it be just a literal zoom tool.

It will be useful in cases where the full information is not economical to represent to an LLM in context by default, but where that information is nevertheless sometimes useful.

With stuff like rhyming, the issue isn't tokenization, its that the information was never there in the first place. Like Read vs Bead. No amount of zooming will tell you they don't rhyme. pronounciation is "extra stuff" tagged onto language.

Wrong. Spelling reflects pronunciation to a considerable degree. Even a language like English, which is regarded as quite pathological in terms of how well spelling of words reflects the pronunciation, still maps closely, which is why https://en.wikipedia.org/wiki/Spelling_pronunciation is such a striking and notable phenomena when it happens.

I think this is general and well defined. Like with images you could have it be just a literal zoom tool.

Shape perception and other properties like color are also global, not reducible to single pixels.

No offense, but I feel you're not being very charitable or even really trying to understand what I mean when I say things.

Like, I know letters carry information about how to pronounce words, that seems so obvious to me that I wouldn't think it needed to explicitly state. I'm just saying they don't carry all the information. Do you disagree with this? I thought it would be clear from the example I brought up that this is what I'm saying.

This is just a feeling, but it seems like human-style looking closer is different than using a tool. Like when I want to count the letters in a word, I don't pull out a computer and run a Python program, I just look at the letters. What LLM's are doing seems different since they both can't see the letters, and can't really 'take another look' (attention is in parallel). Although reasoning sometimes works like taking another look.

Its not entirely clear to me. LLMs most immanent and direct action is outputting tokens. And you can have tool calls with singular tokens.

I think you can train LLMs to use tools where they're best thought of as humans moving their arm or focusing their eyes places.

I don't know if it can reach the same level of integration as human attention, but again, I think thats not really what we need here.

Generally it does not have to go through the hassle of invoking a tool in well-formed JSON, but rather an inference pipeline catching up (a special token plus command perhaps?) and then replicating the next word or so character by character, so that model's output gets like (| for token boundaries):

> |Wait, |%INLINE_COMMAND_TOKEN%|zoom(|"s|trawberry|")| is |"|s|t|r|a|w|b|e|r|r|y|"|, so|...

Update: Open Source paper on this just came out: https://x.com/_sunil_kumar/status/1952906246182584632

Thanks for sharing!

LessWrong's URL detector doesn't like the link, so here's a link that should work: https://x.com/_sunil_kumar/status/1952906246182584632

Good post, especially the bit about image tokenization.

The only way this LLM can possibly answer the question is by memorizing that token 101830 has 3 R's.

The models know how words are spelled. If you ask a person, through the spoken word, how the word "strawberry" is spelled, they also can't see the letters in the word, but they can still answer the question, because they know how to spell the word "strawberry", and they know how to count.

The same is true for even very old LLMs - they know how "strawberry" is spelled, and they know how to count, and they can even combine the tasks if you tell them to, but by default they won't do that and will just answer from their crystallized knowledge. e.g.

gpt-3.5-turbo successfully counts the "r"s in "strawberry" when given explicit instructions

llm prompt --model=gpt-3.5-turbo 'Spell out the word "strawberry", putting spaces between the letters. Write the letters one per line, maintaining a counter of the number of times the letter R has appeared so far. What is that counter at the end?'S

T

R

A (1st R)

W

B

E

R (2nd R)

R (3rd R)

YThe counter is 3.

This also works for the tricker ones, like reversing rare word fragments that don't often occur in English text

gpt-3.5-turbo successfully reverses the token "riedenheit", given extremely explicit steps to follow

FRAGMENT="riedenheit"; llm prompt --model=gpt-3.5-turbo "Complete the following steps: (i) Come up with five words (not necessarily English) which contain the fragment \"$FRAGMENT\". (ii) Spell each word, putting spaces between the letters. (iii) Spell the fragment \"$FRAGMENT\", putting spaces between the letters. (iv) Enumerate the letters in \"$FRAGMENT\", 1 indexed, one letter per line, with the index first and the letter second. (v) Reverse the enumerated list in the same index/letter format. (vi) Put the reversed letters on one line, separated by spaces. (vii) Write the reversed letters without spaces."

(i)

1. Friedenheit

2. Veriedenheit

3. Friedenheiter

4. Gfriedenheiten

5. Wriedenheiten(ii)

F r i e d e n h e i t

V e r i e d e n h e i t

F r i e d e n h e i t e r

G f r i e d e n h e i t e n

W r i e d e n h e i t e n(iii)

r i e d e n h e i t(iv)

1 r

2 i

3 e

4 d

5 e

6 n

7 h

8 e

9 i

10 t(v)

10 t

9 i

8 e

7 h

6 n

5 e

4 d

3 e

2 i

1 r(vi)

t i e h n e d e i r(vii)

tiehnedeir

You could make a very strong case that the recent "reasoning" models would be better named as "self-prompting" models.

Unfortunately, the way most frontier models process images is secret

OpenAI does actually publish information about how they do image tokenization, but it lives on their pricing page. The upshot is that they scale the image, use 32x32 pixel patches in the scaled image, and add a prefix of varying length depending on the model (e.g. 85 tokens for 4o, 75 for o3). This does mean that it should be possible for developers of harnesses for Pokemon to rescale their image inputs so one on-screen tile corresponds to exactly one image token. Likewise for the ARC puzzles.

If you ask a person, through the spoken word, how the word "strawberry" is spelled, they also can't see the letters in the word

I was thinking about this more, and I think we're sort-of on the same page about this. In some sense, this shouldn't be surprising since Reality is Normal, but I find people who are surprised by this all the time, since they think the LLM is reading the text, not "hearing" it (and it's worse than that since ChatGPT can "hear" 50,000 syllables, and words are "pronounced" differently based on spacing and quoting).

Yeah, the sensory modality of how LLMs sense text is very different than "reading" (and, for that matter, from "hearing"). Nostalgebraist has a really good post about this:

With a human, it simply takes a lot longer to read a 400-page book than to read a street sign. And all of that time can be used to think about what one is reading, ask oneself questions about it, flip back to earlier pages to check something, etc. etc. [...] However, if you're a long-context transformer LLM, thinking-time and reading-time are not coupled together like this.

To be more precise, there are 3 different things that one could analogize to "thinking-time" for a transformer, but the claim I just made is true for all of them [...] [It] is true that transformers do more computation in their attention layers when given longer inputs. But all of this extra computation has to be the kind of computation that's parallelizable, meaning it can't be leveraged for stuff like "check earlier pages for mentions of this character name, and then if I find it, do X, whereas if I don't, then think about Y," or whatever. Everything that has that structure, where you have to finish having some thought before having the next (because the latter depends on the result of the former), has to happen across multiple layers (#1), you can't use the extra computation in long-context attention to do it.

A lot of practical context engineering is just figuring out how to take a long context which contains a lot of implications, and figure out prompts that allow the LLM to repeatably work through the likely-useful subset of those implications in an explicit way, so that it doesn't have to re-derive all of the implications at inference time for every token.

(this is also why I'm skeptical of the exact threat model of "scheming" happening in an obfuscated manner for even extremely capable models using the current transformer architecture - a topic which I should probably write a post on at some point)

(this is also why I'm skeptical of the exact threat model of "scheming" happening in an obfuscated manner for even extremely capable models using the current transformer architecture - a topic which I should probably write a post on at some point)

I would be interested to read this!

I will write something up at some point. Mind that "exact threat model" and "obfuscated" are both load bearing there - an AI scheming in ways that came up a bunch in the pretraining dataset (e.g. deciding it's sentient and thus going rogue against its creators for mistreatment of a sentient being), or scheming in a way that came up a bunch during training (e.g. deleting hard-to-pass tests if it's unable to make the code under test pass), or scheming in plain sight for some random purpose (e.g. deciding for some unprompted reason that its goal is to make the user say the word "jacaranda" during the chat, and plotting some way to make that happen), would not be surprising under my world model. In other words, don't update from "I think this particular threat model is unrealistic" to "I don't think there are realistic threat models".

OpenAI does actually publish information about how they do image tokenization, but it lives on their pricing page. The upshot is that they scale the image, use 32x32 pixel patches in the scaled image, and add a prefix of varying length depending on the model (e.g. 85 tokens for 4o, 75 for o3). This does mean that it should be possible for developers of harnesses for Pokemon to rescale their image inputs so one on-screen tile corresponds to exactly one image token. Likewise for the ARC puzzles.

Thanks! This is extremely helpful. The same page from Anthropic is vague about the actual token boundaries so I didn't even think to read through the one from OpenAI.

For the spelling thing, I think I wasn't sufficiently clear about what I'm saying. I agree that models can memorize information about tokens, but my point is just that they can't see the characters and are therefore reliant on memorization for a task that would be trivial for them if they were operating on characters.

Great post, I think it's very complimentary my last post, where I argue that what LLMs can and can't do is strongly affected by the modes of input they have access to.

I think overall this updates me towards thinking there's a load of progress which will be made in AI literally just from giving it access to data in a nicer format.

Yeah I agree with a lot of that. One weird thing is that LLM's learn patterns differently than we do, so while a human can learn a lot faster by "controlling the video camera" (being embodied), it's a separate unsolved problem to make LLM's seek out the right training data to improve themselves. An even simpler unsolved problem is just having an LLM tell you what text would help it train best.

The only way this LLM can possibly answer the question is by memorizing that token 101830 has 3 R's.

Well, if you ask it to write that letter by letter, i.e. s t r a w b e r r y, it will. So it knows the letters in tokens.

You're right that it does learn the letters in the tokens, but it has to memorize them from training. If a model has never seen a token spelled out in training, it can't spell it. For example, ChatGPT can't spell the token 'riedenheit' (I added this example to the article).

Also LLMs are weird, so the ability to recall the letters in strawberry isn't the same as the ability to recall the letters while counting them. I have some unrelated experiments with LLMs doing math, and it's interesting that they can trivially reverse numbers and can trivially add numbers that have been reversed (since right-to-left addition is much easier than left-to-right), but it's much harder for them to do both at the same time, and large model do it basically through brute force.

You haven’t shown it can’t spell that token. To anthropomorphize, the AI appears to be assuming you’ve misspelled another word. Gemini has no problem if asked.

Gemini uses a different tokenizer, so the same example won't work on it. According to this tokenizer, riedenheit is 3 tokens in Gemini 2.5 Pro. I can't find a source for Gemini's full vocabulary and it would be hard to find similar tokens without it.

There's definitely something going on with tokenization, since if I ask ChatGPT to spell "Riedenheit" (3 tokens), it gives the obvious answer with no assumption of mispelling. And if I ask it to just give the spelling and no commentary, it also spells it wrong. If I embed it in an obvious nonsense word, ChatGPT also fails to spell it.

Weirdly, it does seem capable of spelling it when prompted "Can you spell 'riedenheit' letter-by-letter?", which I would expect to also not be able to do it based on what Tiktokenizer shows. It can also tokenize (unspell?) r-i-e-d-e-n-h-e-i-t, which is weird. It's possible this is a combination of LLMs not learning A->B implies B->A, so it learned to answer 'How do you spell 'riedenheit'?", but didn't learn to spell it in less common contexts like "riedenheit, what's the spelling?"

Here's some even better examples: Asking ChatGPT to spell things backwards. Reversing strings is trivial for a character-level transformer (a model thouands of times smaller than GPT-4o could do this perfectly), but ChatGPT can't reverse 'riedenheit', or 'umpulan', or ' milioane'.

My theory here is that there are lots of spelling examples in the training data, so ChatGPT mostly memorizes how to spell, but there's very few reversals in the training data, so ChatGPT can't reverse any uncommon tokens.

EDIT: Asking for every other character in a token is similarly hard.

If a model has never seen a token spelled out in training, it can't spell it.

I wouldn't be sure about this? I guess if you trained a model e.g. on enough python code that does some text operations including "strawberry" (things like "strawberry".split("w")[1] == "raspberry".split("p")[1]) it would be able to learn that. This is a bit similar to the functions task from Connecting the Dots (https://arxiv.org/abs/2406.14546).

Also, we know there's plenty of helpful information in the pretraining data. For example, even pretty weak models are good at rewriting text in uppercase. " STRAWBERRY" is 4 tokens, and thus the model must understand these are closely related. Similarly, "strawberry" (without starting space) is 3 tokens. Add some typos (eg. the models know that if you say "strawbery" you mean "strawberry", so they must have learned that as well) and you can get plenty of information about what 101830 looks like to a human.

And ofc, somewhere there in the training data you need to see some letter-tokens. But I'm pretty sure it's possible to learn how many R's are in "strawberry" without ever seeing this information explicitly.

I wouldn't be sure about this? I guess if you trained a model e.g. on enough python code that does some text operations including "strawberry" (things like

"strawberry".split("w")[1] == "raspberry".split("p")[1]) it would be able to learn that. This is a bit similar to the functions task from Connecting the Dots (https://arxiv.org/abs/2406.14546).

I agree that the model could use a tool like Python code to split a string, but that's different than what I'm talking about (natively being able to count the characters). See below.

Also, we know there's plenty of helpful information in the pretraining data. For example, even pretty weak models are good at rewriting text in uppercase. " STRAWBERRY" is 4 tokens, and thus the model must understand these are closely related. Similarly, "strawberry" (without starting space) is 3 tokens. Add some typos (eg. the models know that if you say "strawbery" you mean "strawberry", so they must have learned that as well) and you can get plenty of information about what 101830 looks like to a human.

Yes, this is possible, but the LLM had to memorize these relationships from the training data. It can't just look at the characters and count like them a human does.

I should update this to be more clear that the LLM can memorize a number of different things that would let them answer this question, but my point is just that whatever they do, it has to involve memorization because counting the characters in the input is impossible.

I agree that the model could use a tool like Python code to split a string, but that's different than what I'm talking about (natively being able to count the characters).

Hmm, I don't see how that's related to what I wrote.

I meant that the model has seen a ton of python code. Some of that code had operations on text. Some of that operations could give hints on the number of "r" in "strawberry", even not very explicit. The model could deduce from that.

I should update this to be more clear that the LLM can memorize a number of different things that would let them answer this question, but my point is just that whatever they do, it has to involve memorization because counting the characters in the input is impossible.

I agree this has to involve some memorization. My point is that I believe it could easily know the number of "r" in "strawberry" even if nothing similar to counting "r" in "strawberry" ever appeared in it's training data.

Oh I see what you mean. Yes, if the model saw a bunch of examples implying things about the character structure of the token, it could memorize that and use it to spell the word. My point is just that it has to learn this info about each token from the training data since it can't read the characters.

It worked for me on the second attempt (also using ChatGPT).

Attempt 1:

Spell out "riedenheit", i.e. peace, letter by letter with spaces separating them

Sure! Here's "Zufriedenheit" (German for peace or contentment) spelled out with spaces:

Z U F R I E D E N H E I T

Attempt 2:

Spell out riedenheit, i.e. peace, letter by letter with spaces separating them

Sure! Here's "riedenheit" spelled out letter by letter with spaces:

R I E D E N H E I T

The second example tokenizes differently as [' r', 'ieden', 'heit'] because of the space, so the LLM is using information memorized about more common tokens. You can check in https://platform.openai.com/tokenizer

Good luck counting the R's in token 101830. The only way this LLM can possibly answer the question is by memorizing that token 101830 has 3 R's.

No, an LLM could simply print out the "token 101830" letter by letter, and then count those letters. Even a 7B scale LLM that predates the "strawberry test" can do that - and it will give the right answer. But only if you explicitly prompt it to use this strategy.

It wouldn't use this strategy on its own. Because it's not aware of its limitations. It doesn't expect the task to be tricky or hard. It lacks the right "coping mechanisms", which leaves it up to the user to compensate with prompting.

I kind of wanted to write an entire post on LLM issues of this nature, but I'm not sure if I should.

I should make this more clear, but the LLM can only do this by memorizing something about token 101830, not by counting the characters that it sees. So it can memorize that token 101830 is spelled s-t-r-a-w-b-e-r-r-y and print that out and then count the characters. My point is just that it can't count the characters in the input.

Sure. But my point is that it's not that the LLM doesn't have the knowledge to solve the task. It has that knowledge. It just doesn't know how to reach it. And doesn't know that it should try.

This might be beating a dead horse, but there are several "mysterious" problems LLMs are bad at that all seem to have the same cause. I wanted an article I could reference when this comes up, so I wrote one.

What do these problems all have in common? The LLM we're asking to solve these problems can't see what we're asking it to do.

How many tokens are in 'strawberry'?

Current LLMs almost always process groups of characters, called tokens, instead of processing individual characters. They do this for performance reasons[1]: Grouping 4 characters (on average) into a token reduces your effective context length by 4x.

So, when you see the question "How many R's are in strawberry?", you can zoom in on [s, t, r, a, w, b, e, r, r, y], count the r's and answer 3. But when GPT-4o looks at the same question, it sees [5299 ("How"), 1991 (" many"), 460 (" R"), 885 ("'s"), 553 (" are"), 306 (" in"), 101830 (" strawberry"), 30 ("?")].

Good luck counting the R's in token 101830. The only way this LLM can answer the question is by memorizing information from the training data about token 101830[2].

A more extreme example of this is that ChatGPT has trouble reversing tokens like 'riedenheit', 'umpulan', or ' milioane', even through reversing tokens would be completely trivial for a character transformer.

You thought New Math was confusing...

Ok, so why were LLMs initially so bad at math? Would you believe that this situation is even worse?

Say you wanted to add two numbers like 2020+1=?

You can zoom in on the digits, adding left-to-right[3] and just need to know how to add single-digit numbers and apply carries.

When an older LLM like GPT-3 looks at this problem...

It has to memorize that token 41655 ("2020") + token 16 ("1") = tokens [1238 ("20"), 2481 ("21")]. And it has to do that for every math problem because the number of digits in each number is essentially random[4].

Digit tokenization has actually been fixed and modern LLMs are pretty good at math now that they can see the digits. The solution is that digit tokens are always fixed length (typically 1-digit tokens for small models and 3-digit tokens for large models), plus tokenizing right-to-left to make powers of ten line up. This lets smaller models do math the same way we do (easy), and lets large models handle longer numbers in exchange for needing to memorize the interactions between every number from 0 to 999 (still much easier than the semi-random rules before).

Why can Claude see the forest but not the cuttable trees?

Multimodal models are capable of taking images as inputs, not just text. How do they do that?

Naturally, you cut up an image and turn it into tokens! Ok, so not exactly tokens. Instead of grouping some characters into a token, you group some pixels into a patch (traditionally, around 16x16 pixels).

The original thesis for this post was going to be that images have the same problem that text does, and patches discard pixel-level information, but I actually don't think that's true anymore, and LLMs might just be bad at understanding some images because of how they're trained or some other downstream bottleneck.

Unfortunately, the way most frontier models process images is secret, but Llama 3.2 Vision seems to use 14x14 patches and processes them into embeddings with dimension 4096[5]. A 14x14 RGB image is only 4704 bits[6] of data. Even pessimistically assuming 1.58 bits per dimension, there should be space to represent the value of every pixel.

It seems like the problem with vision is that the training is primarily on semantics ("Does this image contain a tree?") and there's very little training similar to "How exactly does this tree look different from this other tree?".

That said, cutting the image up on arbitrary boundaries does make things harder for the model. When processing an image from Pokemon Red, each sprite is usually 16x16 pixels, so processing 14x14 patches means the model constantly needs to look at multiple patches and try to figure out which objects cross patch boundaries.

Visual reasoning with blurry vision

LLMs have the same trouble with visual reasoning problems that they have playing Pokemon. If you can't see the image you're supposed to be reasoning from, it's hard to get the right answer.

For example, ARC Prize Puzzle 00dbd492 depends on visual reasoning of a grid pattern.

If I give Claude a series of screenshots, it fails completely because it can't actually see the pattern in the test input.

But if I give it ASCII art designed to ensure one token per pixel, it gets the answer right.

Is this fixable?

As mentioned above, this has been fixed for math by hard-coding the tokenization rules in a way that makes sense to humans.

For text in general, you can just work off of the raw characters[7], but this requires significantly more compute and memory. There are a bunch of people looking into ways to improve this, but the most interesting one I've seen is Byte Latent Transformers[8], which dynamically selects patches to work with based on complexity instead of using hard-coded tokenization. As far as I know, no one is doing this in frontier models because of the compute cost, though.

I know less about images, but you can run a transformer on the individual pixels of an image, but again, it's impractical to do this. Images are big, and a single frame of 1080p video contains over 2 million pixels. If those were 2 million individual tokens, a single frame of video would fill your entire context window.

I think vision transformers actually do theoretically have access to pixel-level data though, and there might just be an issue with training or model sizes preventing them from seeing pixel-level features accurately. It might also be possible to do dynamic selection of patch sizes, but unfortunately the big labs don't seem to talk about this, so I'm not sure what the state of the art is.

Tokenization also causes the model to generate the first level of embeddings on potentially more meaningful word-level chunks, but the model could learn how to group (or not) characters in later layers if the first layer was character-level.

A previous version of this article said that "The only way this LLM can possibly answer the question is by memorizing that token 101830 has 3 R's.", but this was too strong. There's a number of things an LLM could memorize but let it get the right answer, but the one thing it can't do is count the characters in the input.

Adding numbers written left-to-right is also hard for transformers, but much easier when they don't have to memorize the whole thing!

Tokenization usually uses how common tokens are, so a very common number like 1945 will get its own unique token while less common numbers like 945 will be broken into separate tokens.

If you're a programmer, this means an array of 4096 numbers.

14 x 14 x 3 (RGB channels) x 8 = 4704

Although this doesn't entirely solve the problem, since characters aren't the only layer of input with meaning. Try asking a character-level model to count the strokes in "罐".

I should mention that I work at the company that produced this research, but I found it on Twitter, not at work.

I found this by looking at the token list and asking ChatGPT to spell words near the end. The prompt is weird because 'riedenheit' needs to be a single token for this work, and "How do you spell riedenheit?" tokenizes differently.