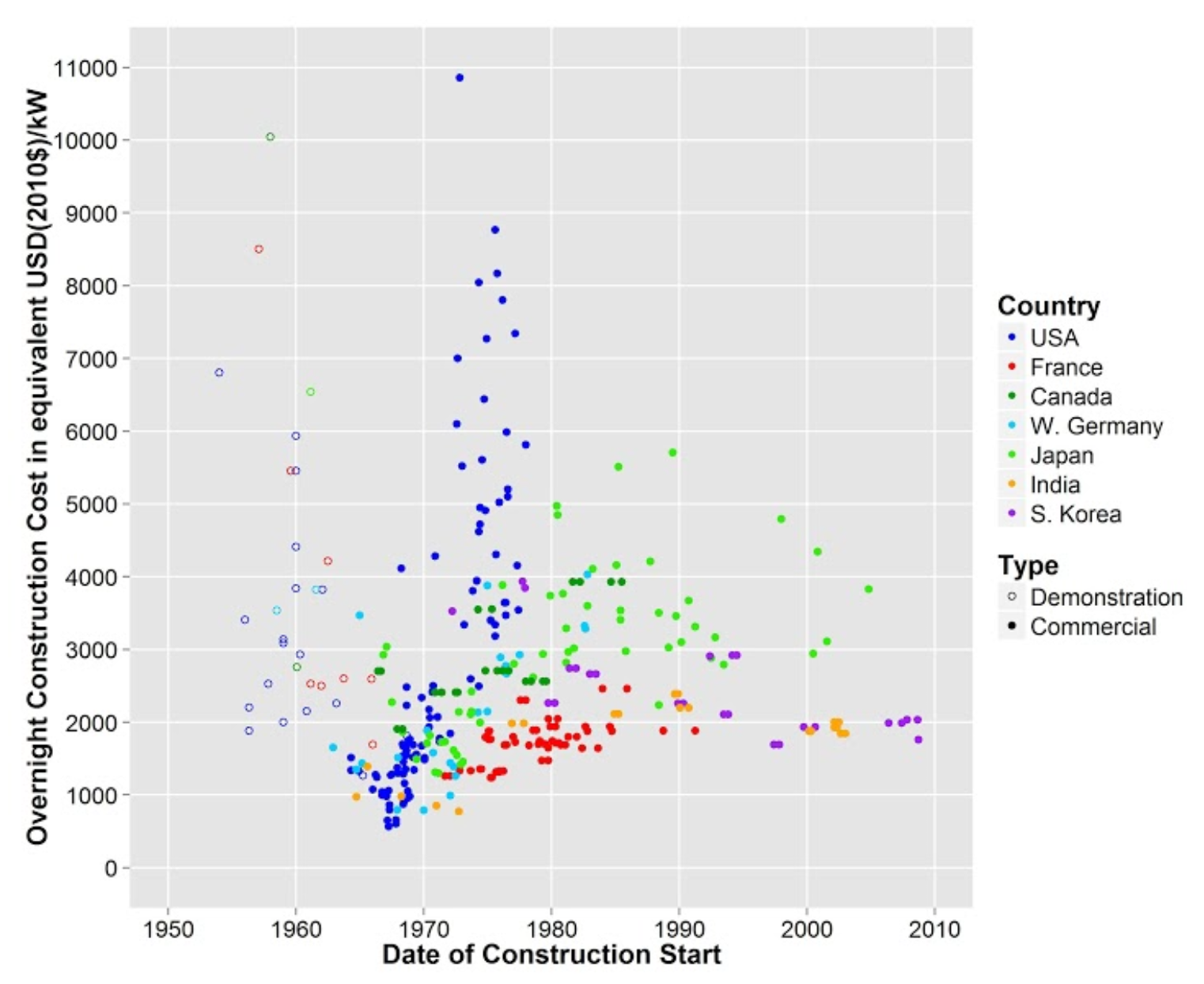

This actually isn't true: nuclear power was already becoming cheaper than coal and so on, and improvements have been available. The problem is actually regulatory: starting around 1970, various factors led regulation to make even the same technology MUCH more expensive. This was avoidable, and some other countries, like France, managed to keep making it cheaper than alternative sources. This talks about it in detail. Here's a graph from the article:

I have seen that post and others like it, and that incorrect view is what I'm arguing against here. The plot you're posting, cost vs cumulative capacity, shows that learning with cumulative capacity built happened for a while and then stopped being relevant; that doesn't contradict my point.

Nuclear power in France, when including subsidies, wasn't exceptionally cheap, and it was driven largely by the desire for nuclear weapons to deter a Soviet invasion of Europe. Such an invasion was actually planned, using small nukes and lots of tanks in Europe, plus the threat of ICBMs to keep the US from participating.

BTW, the French nuclear utility EDF is building Hinkley Point C, and it's not particularly cheap.

From the same paper:  Inflation doesn't explain a tenfold increase between ~1967 and ~1975. Regardless, this graph is in 2010$ so inflation doesn't explain anything. I agree with @Andrew Vlahos that inflation doesn't explain the trend.

That graph is plotted by construction start date. It would be more appropriate to use the construction end date, or some activity-weighted average year.

Regardless, this graph is in 2010$ so inflation doesn't explain anything.

Did you read my post? My main point was that inflation is an average across things that include cost-reducing technological progress. If something has less such progress than average, the appropriate inflation rate for it is higher.

I did read your post, but how high an inflation rate do you need to match this graph? If you argued that inflation for nuclear plants is, say, 1% or 2% higher than general inflation, why not. But a crude estimate gives an inflation rate of about 30% per year to account for the increase shown in this graph. That's unheard of.

You mention a 5% inflation figure several times. For 5% inflation to explain this graph, it would take more than 40 years, yet the construction start dates are separated by only 8 years. So unless, within 8 years, the construction duration was increased by 30 years (which would already be concerning, and fuel the "this is a regulation problem" narrative), your explanation doesn't match the data.
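Both figures in this exchange follow from compound growth. A minimal Python sketch, assuming a constant annual rate and a tenfold cost increase (the function names are illustrative, not from the paper):

```python
import math


def required_annual_rate(cost_ratio: float, years: float) -> float:
    """Constant annual growth rate that multiplies costs by cost_ratio over `years`."""
    return cost_ratio ** (1 / years) - 1


def years_to_reach(cost_ratio: float, annual_rate: float) -> float:
    """Years of compounding at annual_rate needed to multiply costs by cost_ratio."""
    return math.log(cost_ratio) / math.log(1 + annual_rate)


# Tenfold increase between construction starts ~8 years apart.
print(f"{required_annual_rate(10, 8):.1%}")  # about 33% per year
# Tenfold increase at 5% per year.
print(f"{years_to_reach(10, 0.05):.1f}")     # about 47 years
```

Both results are consistent with the "~30% per year" and "more than 40 years" statements above.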

unless, within 8 years, the construction duration was increased by 30 years

Yes, that's approximately what I'm saying, but the more-delayed plants had extra costs from the delays. It can take a while sometimes.

which would already be concerning, and fuel the "this is a regulation problem" narrative

No, things can get delayed because costs increased, and then costs increase because of the delays. Sometimes, eventually more government/utility money comes in, old investors get wiped out, new investors come in, and things get finished. But more often, the project just gets cancelled.

You are cherry-picking. In Wikipedia's list of commercial nuclear reactors, construction durations for plants started between 1960 and 1980 mostly ranged from 3 to 15 years. Only one plant (the one you cite) took 40 years to build, and as far as I can tell only one other took more than 30 years, out of the 144 entries in the table.

That chart relies somewhat on outliers, too. But OK, if you're making a point about costs increasing after Three Mile Island, I agree: new regulations introduced during construction increased costs a lot.

I think those regulations were worthwhile, maybe you disagree, but either way, once the regulations are introduced, builders of later plants know about them and don't have to make changes during construction. Once compliant plants are built, people know how to deal with them. The actual regulations were about things like redundant control wiring, not things that directly impacted costs nearly as much as changing designs in the middle of construction did.

I don't care about cost increases during specific past periods when new (reasonable) regulations were introduced; I care about cost increases from then to now, and costs have continued to increase in multiple countries.

It's worth noting that the South Korean strategy to build cheap reactors was essentially about bribing the regulators. After they started charging people with corruption they didn't build cheap reactors anymore.

I wouldn't be surprised if India used similar methods to build its cheap nuclear plants.

How cheap do batteries need to be for economics to ignore everything else in favor of solar/wind?

Maybe limit it to the 90 percent case, i.e., locations covering 90 percent of the Earth's population. Extreme areas like Moscow and Anchorage can be left out.

First off, some reported costs are misleading.

Perhaps you've seen articles saying that Li-ion batteries are down to $132/kWh according to a BloombergNEF survey. That was heavily weighted towards subsidized Chinese prices for batteries used in Chinese vehicles. In 2020, the average price per kWh excluding China was over 2x their global average. But of course, journalists are lazy, so they just report the average.

In 2019, Tesla charged $265/kWh for large (utility-scale) lithium-ion batteries. That was almost at cost, and it doesn't include construction, transformers, or interconnects, which add >$100/kWh. Their Powerwall systems are much more expensive, of course.

The cost per kWh also depends on battery life.

LiFePO4 batteries are often described as having a cycle life of 5000 cycles. How about degradation over time? LiFePO4 generally lasts for 5 to 20 years, depending on the temperature and charge state. (High temperature is worse.) That's similar to the cycle life.

That's not so bad, and Li-ion costs could come down somewhat; $200/kWh for LiFePO4 is plausible. Cycle life is OK, degradation over time is OK, so everything is fine, right? WRONG! An SEI (solid electrolyte interphase) layer forms in Li-ion batteries and thickens over time, increasing resistance and reducing capacity but protecting the battery from further degradation. Cycling the battery breaks up the SEI layer somewhat, which affects degradation over time.

But Li-ion battery degradation isn't understood very well by most people, and investors and executives and government officials just look at the cycle life if they even think about degradation at all. Anyway, 5000 cycles is too optimistic, and I expect something more like 2000 cycles for LiFePO4 in a grid energy storage application.

Anyway, you can see this 2020 report from the DOE. They estimated $100/kWh stored/year operated for LFP batteries. If you operate them every day, that would be $0.27/kWh.
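Spreading an annualized cost over the energy actually cycled is a single division. A sketch, assuming one full cycle per use and ignoring round-trip losses and any change in lifetime at lower cycling rates (the helper name is hypothetical):

```python
def cost_per_kwh_delivered(annual_cost_per_kwh: float, cycles_per_year: float) -> float:
    """Annualized storage cost ($ per kWh of capacity per year) divided by full cycles per year."""
    return annual_cost_per_kwh / cycles_per_year


# $100/kWh/year from the DOE estimate, cycled once a day.
daily = cost_per_kwh_delivered(100, 365)
# The same cost if the capacity is only cycled about once a month (multi-day backup).
monthly = cost_per_kwh_delivered(100, 12)
print(f"${daily:.2f}/kWh daily, ${monthly:.2f}/kWh monthly")
```

The monthly case illustrates why storage that only covers rare multi-day lulls is so much more expensive per kWh delivered.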

Again, the problem is that sometimes it's cloudy for a while in the winter, and sometimes the wind doesn't blow for a while, so you need more than 1 day of storage. But if Li-ion batteries were $10/kWh, you could have a month's worth of battery storage and forget about nuclear. That's not really physically possible, but some finance types did extrapolate trend lines down that far.

Are there other types of energy storage besides lithium batteries that are plausibly cheap enough (with near-term technological development) to cover the multiple days of storage case?

(Legitimately curious, I'm not very familiar with the topic.)

Yes, compressed natural gas in underground caverns is cheap enough for seasonal energy storage.

But of course, you meant "storage that can be efficiently filled using electricity". That's a difficult question. In theory, thermal energy storage using molten salt or hot sand could work, and maybe a sufficiently cheap flow battery chemistry is possible. In theory, much better water electrolysis and hydrogen fuel cells are possible; there just currently aren't any plausible candidates for that.

But currently, even affordable 14-hour storage is rather challenging.

Please go into more detail about the model you are using to arrive at "2000" cycles for grid storage applications. Data sheet testing usually shows 3500-7000 cycles, typically defined as 20-100 percent SOC at a 1C charge/discharge rate, with failure at 80 percent of initial capacity.

Some LFP are better, some worse. Usually shallower cycles (say 40-60 percent) have nonlinearly more lifespan.

What explains the disparity? Heat? Calendar aging? The manufacturers all commit fraud?

Now, with 1 cycle a day, and if I assume that 4000 cycles is a real number, that's 11 years. Calendar aging is significant for that service cycle.
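Converting a rated cycle count into years of daily service is a simple division. This sketch (hypothetical helper) ignores calendar aging, which the comment notes is significant at this duty cycle, so it only reflects the cycle limit:

```python
def service_years(rated_cycles: float, cycles_per_day: float = 1.0) -> float:
    """Years until the rated cycle count is used up; calendar aging is ignored."""
    return rated_cycles / (cycles_per_day * 365)


for cycles in (2000, 4000, 6000):
    print(f"{cycles} cycles -> {service_years(cycles):.1f} years at 1 cycle/day")
```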

I am also curious what you think of sodium batteries. With cheaper raw materials, it seems logical that the cells could drop a lot in cost, assuming almost fully automated production. I have heard "$40 a kWh" and similar cycle life to LFP.

OK, having examined the paper, I'm going to dismiss it as invalid evidence. It uses NMC cells. No grid-scale batteries today use this cell chemistry. Tesla did offer NCA cells for a time, but has switched to LFP.

Do you have any evidence for LFP cells? Note that NMC cells have mayfly-like lifespans of a nominal 1000-2000 cycles, depending.

Without evidence, a rational person would dismiss your claim of '2000 cycles' and assume ~3500 (on the low end for LFP). In addition, they would assume bulk prices for the batteries, such as this: https://www.alibaba.com/product-detail/48V-51-2v-100ah-Lifepo4-Lithium_1600873092214.html?spm=a2700.7735675.0.0.19c0fbFofbFoZR&s=p

$1000 for 5.12 kWh. Claimed lifespan 6000 cycles. There are many listings like this; a utility-scale order would be able to find the higher-quality suppliers using better-quality cells and order a large number of them. So assuming 5.12 x 0.8 x 3500, that's 14336 kWh cycled, or about 7.0 cents per kWh cycled.

That's for 1-day storage, assuming good utilization. The price skyrockets for 'long duration grid storage': if we assume 1 cycle a year (winter storage) and the battery fails after 20 years, that's 102.4 kWh cycled, or about $9.77 per kWh.
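Both figures are the pack price divided by lifetime energy throughput. A sketch with a hypothetical helper, using the listing's price and capacity; it ignores installation, inverters, and round-trip losses:

```python
def cost_per_kwh_cycled(price: float, capacity_kwh: float, cycles: float,
                        derating: float = 1.0) -> float:
    """Purchase price divided by the total energy cycled over the battery's life."""
    return price / (capacity_kwh * derating * cycles)


# Daily cycling: $1000 for 5.12 kWh, 0.8 derating, 3500 cycles.
daily = cost_per_kwh_cycled(1000, 5.12, 3500, 0.8)
# Seasonal storage: one cycle a year for 20 years, no derating.
seasonal = cost_per_kwh_cycled(1000, 5.12, 20)
print(f"daily: ${daily:.3f}/kWh, seasonal: ${seasonal:.2f}/kWh")
```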

What do you propose we use for this once/season duty cycle? Synthetic natural gas looks a lot more attractive when the alternative is $10 a kWh.

See other thread. Your evidence is unconvincing and you shouldn't be convinced yourself. You essentially have no evidence for an effect that should be trivial to measure. You could plot degradation over about 1 year and support your theory or falsify it. It's rather damning you cannot find such a plot to cite.

Plot must be for production LFP cells with daily cycling.

Do you have empirical evidence? Some grid-scale batteries, especially the "server rack" commodity style that use LFP, should already have 5 years of life and, by your model, be about to fail. I would argue that such failures, observed across the tens of thousands of them deployed in various grids, would be strong direct evidence of an n-cycle field lifespan. I do not see any data in this paper collected from field batteries, merely a model that may simply not be grounded.

Are grid operators assuming they have 15-20 year service lives or 5?

Yes, some people have needed to replace batteries in some large storage systems, and/or augment them with extra capacity to balance degradation. I haven't seen any good data on this, because most operators have no reason to share it. Also, due to rapid growth, most of the volume of first replacements is still upcoming.

If I read the paper right, it refers to degradation similar to leaving the cells at 100 percent SOC. It should be immediately measurable and catastrophic, leading to complete storage failures. Do you not have any direct measurements?

It's extremely falsifiable: there should be monthly capacity loss, and within 1 year it should be obvious that the batteries are doomed.

Someone could buy an off-the-shelf LFP battery, cycle it daily, and just prove this.

I have found strong evidence falsifying your theory.

From Tesla's 10-K filing: https://web.archive.org/web/20210117144859/https://ir.tesla.com/node/20456/html, which carries criminal liability if it contains false information.

Energy Storage Systems

We generally provide a 10-year “no defect” and “energy retention” warranty with every current Powerwall and a 15-year “no defect” and “energy retention” warranty with every current Powerpack or Megapack system. Pursuant to these energy retention warranties, we guarantee that the energy capacity of the applicable product will be at least a specified percentage (within a range up to 80%) of its nameplate capacity during specified time periods, depending on the product, battery pack size and/or region of installation, and subject to specified use restrictions or kWh throughputs caps.

Assuming one Megapack cycle per day, that's 5475 cycles over the 15-year warranty period. This would line up well with the cited "6000 cycles" for some LFP cells used for solar energy storage. It also means a price per kWh of $265/5475 = 4.8 cents per kWh, not 27 cents.
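The 5475-cycle and 4.8-cent figures can be reproduced directly. A sketch, assuming exactly one full nameplate cycle per day, no degradation, and only the $265/kWh battery price (no construction or interconnect costs); the helper name is hypothetical:

```python
def warranty_cycles(years: int, cycles_per_day: int = 1) -> int:
    """Full cycles over a warranty period, assuming a fixed number of cycles every day."""
    return years * 365 * cycles_per_day


cycles = warranty_cycles(15)
cents_per_kwh = 265 / cycles * 100  # $265/kWh of capacity spread over every cycle
print(cycles, f"{cents_per_kwh:.1f} cents/kWh")
```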

In addition, the true expected degradation, assuming competent engineers at Tesla, is likely less than that, with real expected lifespans probably around 20 years in order for a 15 year warranty to be financially viable.

Do you have any data or cites that disprove this, and are they more credible than this source?

-

The Powerwalls are over-provisioned, which is part of why they cost $600+/kWh.

-

They don't expect full daily charge-discharge cycles, and were willing to eat the cost of replacements for the fraction of people who did that, hoping for lower battery costs by that point.

-

The Powerwall 2 warranty, if charging from anything other than exclusively solar, is limited to 37800 kWh on a 13.5 kWh battery that's somewhat overprovisioned. That's about 2800 full cycles. The warranty promises 70% of nominal capacity by that point. (By then, resistance would also be quite a bit higher.) Even with those limits, I expect a decent number of replacements under that warranty.
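Dividing the warranty throughput cap by the nameplate capacity gives the equivalent number of full cycles. A sketch with a hypothetical helper; since capacity is allowed to fall toward 70% during the warranty, the cap covers somewhat more cycles of the remaining capacity than of the nameplate:

```python
def equivalent_full_cycles(throughput_kwh: float, capacity_kwh: float) -> float:
    """Warranty throughput cap expressed as full cycles of the nameplate capacity."""
    return throughput_kwh / capacity_kwh


# Powerwall 2: 37800 kWh throughput cap on a 13.5 kWh nameplate battery.
print(equivalent_full_cycles(37_800, 13.5))
```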

The Megapack is not a Powerwall. The Powerwall uses NCA cells; the Megapack uses LFP. The cycles you calculated are correct for good NCA cells.

The uranium in a cubic meter of seawater, used in current reactors, produces electricity (~0.1 kWh) worth less than even simple processing of a cubic meter of seawater costs. If seawater processing cost as much as desalination, and fuel was half the electricity cost, that'd be $10/kWh. Well, at least I guess it's less dumb than mining He3 from the moon.
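The $10/kWh figure chains a few numbers together. A sketch, where the ~3.3 mg/m^3 uranium concentration, the ~40 MWh of electricity per kg of natural uranium, and the ~$0.50/m^3 desalination cost are rough assumptions rather than figures stated in the post:

```python
# Assumed inputs: ~3.3 mg uranium per m^3 of seawater and roughly
# 40 MWh of electricity per kg of natural uranium in current reactors.
URANIUM_KG_PER_M3 = 3.3e-6
KWH_PER_KG_NATURAL_U = 40_000


def kwh_per_m3() -> float:
    """Electricity obtainable from the uranium in one cubic meter of seawater."""
    return URANIUM_KG_PER_M3 * KWH_PER_KG_NATURAL_U


def implied_price_per_kwh(processing_cost_per_m3: float, kwh: float,
                          fuel_share: float = 0.5) -> float:
    """Electricity price if processing cost is the fuel cost and fuel is fuel_share of the price."""
    return processing_cost_per_m3 / kwh / fuel_share


print(f"{kwh_per_m3():.2f} kWh per m^3")  # order 0.1 kWh
# Desalination-level processing (~$0.50/m^3) with the post's ~0.1 kWh/m^3:
print(f"${implied_price_per_kwh(0.50, 0.1):.0f}/kWh")
```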

The process of harvesting uranium from seawater is not about desalination. One website describes the process as:

Scientists have long known that uranium dissolved in seawater combines chemically with oxygen to form uranyl ions with a positive charge. Extracting these uranyl ions involves dipping plastic fibers containing a compound called amidoxime into seawater. The uranyl ions essentially stick to the amidoxime. When the strands become saturated, the plastic is chemically treated to free the uranyl, which then has to be refined for use in reactors just like ore from a mine.

How practical this approach is depends on three main variables: how much uranyl sticks to the fibers; how quickly ions can be captured; and how many times the fibers can be reused.

In the recent work, the Stanford researchers improved on all three variables: capacity, rate and reuse. Their key advance was to create a conductive hybrid fiber incorporating carbon and amidoxime. By sending pulses of electricity down the fiber, they altered the properties of the hybrid fiber so that more uranyl ions could be collected.

If you leave the fibers out in the ocean and just wait till they can be harvested, you aren't processing cubic meters of seawater in any meaningful way, so I don't see a good reason to compare it to desalination prices.

I'm aware of such proposals. You'd have to leave the fibers out for a really long time without pumped flow. They'd accumulate stuff on them. The concentration achievable is fairly low. The special fibers are expensive. Putting them out and collecting them is expensive. This would all end up being even more expensive than pumping seawater.

Also, amidoximes are susceptible to hydrolysis; many of them would convert to ketones in seawater eventually.

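In the best envisaged fast breeder reactor cycles (500 GW-days/tonne), 1 kg of uranium can yield about $500k of energy at (cheap) $40/MWh electricity prices, counting all of the heat as if it were electricity; at ~1/3 thermal efficiency, that's closer to $160k.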

The cost of extracting uranium from seawater (using ion-selective absorbing fiber mats in ocean currents) is currently estimated (using demonstrated tech) to be less than $1000/kg. That's not yet competitive with conventional mining, but it is anticipated to drop closer to $100/kg, which would be. That is a trivial fraction of power production costs. It is viable even now with hugely wasteful pressurised water reactor uranium cycles, and in the long term, with fast reactor cycles, there is no question as to its economic viability. It could likely power human civilisation for billions of years, with replenishment from rock erosion.
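Whether fuel cost is a trivial fraction can be checked by comparing the uranium price with the value of the electricity a kilogram produces. A sketch, assuming burnup figures measure thermal energy and an (assumed) ~1/3 thermal-to-electric efficiency; at 100% efficiency the same hypothetical helper gives the ~$500k figure:

```python
def electricity_value_per_kg(burnup_gwd_per_tonne: float, price_per_mwh: float,
                             efficiency: float) -> float:
    """Dollar value of electricity from 1 kg of heavy metal at a given burnup.

    Burnup is thermal energy: 1 GWd/tonne == 1 MWd/kg == 24 MWh(thermal)/kg.
    """
    thermal_mwh_per_kg = burnup_gwd_per_tonne * 24
    return thermal_mwh_per_kg * efficiency * price_per_mwh


value = electricity_value_per_kg(500, 40, efficiency=1 / 3)  # roughly $160k per kg
for uranium_price in (1000, 100):
    print(f"${uranium_price}/kg -> {uranium_price / value:.3%} of electricity value")
```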

A key problem for nuclear build costs is the mobility of skilled workforces. 50 years ago, skilled workers could be attracted to remote locations to build plants, bringing their families with them as sole-income families. But nowadays, economics and lifestyle preferences make it difficult to find people willing to do that, which means very high-priced fly-in-fly-out itinerant workforces like those seen in the oil industry.

The fix is coastal nuclear plants: build and decommission them in specialist shipyards, float them to the operating spot, and emplace them on the seabed, preferably at a seabed depth >140 m (the ice age sea level minimum). Staff would be flown or ferried in and out (e-VTOL). Rare accidents can be dealt with by seawater dilution, and if there is a civilizational cataclysm, we don't get left with a multi-millennia death zone around decaying land-based nuclear reactors.

It goes without saying that we should shift to fast reactors for efficiency and hugely less long-term waste production. Producing 10 TW of electricity (enough to provide first-world living standards to everyone) would take about 10000 tonnes a year of uranium in 500 GW-day/tonne fast reactors, less than 20% of current uranium mining.
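Because burnup measures heat, the tonnage needed for 10 TW depends on the assumed thermal-to-electric efficiency. A sketch with a hypothetical helper that makes efficiency an explicit parameter, assuming continuous full-power operation and 500 GWd of heat per tonne fissioned:

```python
def uranium_tonnes_per_year(electric_tw: float, efficiency: float,
                            burnup_gwd_per_tonne: float = 500) -> float:
    """Tonnes of heavy metal fissioned per year to sustain electric_tw of output."""
    thermal_gw = electric_tw * 1000 / efficiency
    return thermal_gw * 365 / burnup_gwd_per_tonne


for eff in (1.0, 0.4, 1 / 3):
    print(f"efficiency {eff:.2f}: {uranium_tonnes_per_year(10, eff):,.0f} t/year")
```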

Waste should be stuck down holes many km deep in abyssal ocean floors, dug using oil industry drilling rigs and capped with concrete. There is no flux of water through the ocean floor, and local water pressures are huge, so nothing will ever be released into the environment: no chance of any bad impacts ever (aside from volcanism, which can be avoided). It's a permanent, perfect solution that requires no monitoring after creation.

Uranium in seawater is ~3 ppb.

$1000/kg is ~$0.003/m^3 of seawater, at 100% of uranium captured.

Desalination is ~$0.50/m^3.
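The ~$0.003/m^3 figure follows from the concentration. A sketch, assuming a typical seawater density of ~1025 kg/m^3 and treating ~3 ppb as a mass fraction:

```python
SEAWATER_DENSITY_KG_PER_M3 = 1025  # assumed typical seawater density
URANIUM_MASS_FRACTION = 3e-9       # ~3 ppb by mass


def uranium_value_per_m3(price_per_kg: float, capture_fraction: float = 1.0) -> float:
    """Value of the uranium recovered from one cubic meter of seawater."""
    return SEAWATER_DENSITY_KG_PER_M3 * URANIUM_MASS_FRACTION * capture_fraction * price_per_kg


value = uranium_value_per_m3(1000)  # roughly $0.003 per m^3 at 100% capture
print(f"${value:.4f}/m^3, about {0.50 / value:.0f}x less than desalination at $0.50/m^3")
```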

Fast breeders are irrelevant for this topic because there is plenty of fuel for them already.

Desalination costs are irrelevant to uranium extraction. Uranium is absorbed in special plastic fibers arrayed in ocean currents that are then post processed to recover the uranium - it doesn't matter how many cubic km of water must pass the fiber mats to deposit the uranium because that process is, like wind, free. The economics have been demonstrated in pilot scale experiments at ~$1000/kg level, easily cheap enough making Uranium an effectively inexhaustible resource at current civilisational energy consumption levels even after we run out of easily mined resources. Lots of published research on this approach (as is to be expected when it is nearing cost competitiveness with mining).

As I wrote in the post, that number is fake, based on an inapplicable calculation.

Maybe you read something about that only being 6x or so as expensive, but whatever number you read is fake. I don't want to see a comment from you unless you look into how that number was calculated and think real hard about whether it would be the only cost involved.

Fake cost estimates in papers are common for other topics too, like renewable fuels. Also, the volume of published research has little to do with cost competitiveness and a lot to do with what's trendy among people who direct grant money.

Hinkley Point C is an ongoing nuclear power project in the UK, which has seen massive cost overruns and delays. But if you take prices for electricity generation in 1960, and increase them as much as concrete has, you're not far off from the current estimated cost per kWh of Hinkley Point C, or some other recent projects considered too expensive.

I'm sorry, but this confused me. How is this section relevant?

To my understanding, you are saying that if we built an average 1960 electricity plant now [it does not matter what type], then since construction costs went up with inflation [with the concrete price as the standard], we should expect electricity to cost about the same as from Hinkley Point C.

I don't understand what bearing it has on whether the cost increase on nuclear projects comes from [lack of innovation while everything else is inflated] versus [random objection picked][ALARA forced nuclear plants to match construction cost of other plants rendering it impossible to be cost efficient].

What specific innovations do you think are being missed? Most of the proposed alternative designs (e.g., molten salt reactors with expensive corrosion-resistant heat exchangers and other problems) would be much more expensive. The design changes that made economic sense, e.g., the AP1000 design, largely got implemented.

OK, this is not addressing my confusion. My confusion is that this particular piece of information does not distinguish between your hypothesis and many others [I used one example].

I am not sure why nuclear power costs are so high. Maybe you are right that nuclear energy just hasn't had improvements since 1960 that decreased the real cost of producing electricity [electronic component costs have gone down, but construction costs have not]. My point is that this particular piece of information does not distinguish between the hypotheses.

My information is mostly from this series of blog posts.

https://www.construction-physics.com/p/why-are-nuclear-power-construction

Taking this at face value, can we agree that current nuclear regulations are imposing a lot of irrational costs on nuclear generators [e.g. designating extremely low radioactive material as contaminated and requiring special disposal]? I don't know how much this cost is [let's say >10%]. Then inflation could be 90% and unreasonable regulation 10% of the cost increase.

That particular piece of information does not help me increase or decrease these percentages.

As Construction Physics notes in its Part 2, rules about low-level waste were driven by direct public concern about that, which forced the NRC to abandon proposed rule changes. I actually think the public concern about low-level waste was sort of reasonable, because some companies could sometimes put highly radioactive waste in supposedly low-level waste. What do you do when you don't understand technical details but a group of experts has proven itself to sometimes be unreliable and biased?

Anyway, when considering relative costs, you can't just look at the cost of regulations on nuclear power and not regulatory costs for other things. How long was the Keystone XL pipeline tied up by lawsuits? How have regulations affected fracking in Europe? And so on. Were regulations on nuclear, relative to appropriate levels of regulation, more expensive than regulations and legal barriers for other energy sources? Probably somewhat, but it's hard to say for sure, and the US government also put more money into research for nuclear power than other energy sources.

As Construction Physics notes in Part 2:

ALARA philosophy is not an especially good explanation for US nuclear plant construction costs

I originally just wanted to point out that inflation includes some cost reduction from technological progress, and some things naturally increase faster than that, which changes baselines for economic estimations - and I figured I'd frame that around nuclear power since I know the physics well enough and some people here care about it way more than they probably should.

It seems that I have failed to communicate clearly, and for that, I apologise. I am agnostic on most of your post.

Let's go back to the original quote:

Hinkley Point C is an ongoing nuclear power project in the UK, which has seen massive cost overruns and delays. But if you take prices for electricity generation in 1960, and increase them as much as concrete has, you're not far off from the current estimated cost per kWh of Hinkley Point C, or some other recent projects considered too expensive.

I understand this to mean that [plant price increase roughly in line with inflation]

This does not distinguish between [lack of innovation while everything is inflated] versus [lizard men sabotaging construction].

For [lack of innovation while everything is inflated] to hold, you have to argue that [there is a lack of innovation] separately. Or is your argument in reverse: [plant price increase roughly in line with inflation] -> [there must be a lack of innovation]?

Because some other reason could be responsible, e.g. [lizard men sabotaging construction].

Whether the lizard men exist or not I am not qualified to say. Even less to say what they are if they do exist.

You seem to believe there are no lizard men, just pure inflation. Other people seem to believe otherwise.

But [plant price increase roughly in line with inflation] does not seem to support either.

As for the rest:

Yes, ALARA is overblown; we share this belief.

Were regulations on nuclear, relative to appropriate levels of regulation, more expensive than regulations and legal barriers for other energy sources?

I am not sure

rules about low-level waste were driven by direct public concern about that, which forced the NRC to abandon proposed rule changes. I actually think the public concern about low-level waste was sort of reasonable, because some companies could sometimes put highly radioactive waste in supposedly low-level waste. What do you do when you don't understand technical details but a group of experts has proven itself to sometimes be unreliable and biased?

Agree to disagree here: I privately believe that the level of concern is too high compared to the actual risk. But I am uneducated in such matters, and the error bar is big.

I understand this to mean that [plant price increase roughly in line with inflation]

That's not exactly my point. The rate of cost increase for concrete products was higher than the average inflation rate. When you consider that, some inflation-adjusted cost increase doesn't mean people got worse at building nuclear plants, which is the assumption made in many articles about nuclear power costs.

you have to argue that [there is a lack of innovation] separately

I did:

Which has had more tech progress, home construction or nuclear plant construction? I'd argue for the former: electric nailguns and screw guns, and automated lumber production. Plus, US home construction has had less increase in average wages, because you can't take your truck to a Home Depot parking lot to pick up Mexican guys to make a nuclear plant.

US electricity costs decreased thanks to progress on gas turbines, fracking, solar panels, and wind turbines. Gas turbines got better alloys and shapes. Solar panels got thinner thanks to diamond-studded wire saws. Wind turbines got fiberglass. Nuclear power didn't have proportionate technological progress.

Agree to disagree here: I privately believe that the level of concern is too high compared to the actual risk. But I am uneducated in such matters, and the error bar is big.

You're misunderstanding my point: whether or not the public level of concern over that is correct given a good understanding of the physics/biology/management/etc, I think it's reasonable given that knowing the technical details isn't feasible for most people and experts were proven untrustworthy.

Why has nuclear power gotten more expensive since 1970? Mostly, because matching inflation requires technological improvements.

Inflation-adjusted retail electricity prices in the US have gone down since 1960. Not only that, but after the increase from initial power line construction finishing, the fraction of electricity costs going to generation has decreased, with more going to transmission. Perhaps that's because generation has more competition than transmission - California and New York have unusually high electricity prices, and you can thank corruption for some of that.

LCOE (levelized cost of energy) for combined cycle natural gas in the US was estimated to be ~$0.04/kWh going forwards. In Germany, electric generation in 2021 was ~$0.08/kWh; half the total cost was taxes/fees.

Inflation-adjusted cost per ft^2 of building a house in the US has been about flat. Median real wages have also been about flat, with a decrease for people without college degrees and more time spent in school for those with them. And construction costs are the biggest issue overall, so the housing affordability crisis in the US is mostly about increased income inequality.

The inflation rate combines several things into 1 number:

The average inflation for the whole economy includes a lot of tech progress. Prices being flat when adjusted by (CPI) inflation doesn't mean "there was no progress", it means "as much progress as home construction".

Which has had more tech progress, home construction or nuclear plant construction? I'd argue for the former: electric nailguns and screw guns, and automated lumber production. Plus, US home construction has had less increase in average wages, because you can't take your truck to a Home Depot parking lot to pick up Mexican guys to make a nuclear plant.

Let's look at the cost growth of some other things as reference points.

Prices for concrete products have increased about 3.9% a year since 1960, a little higher than the CPI inflation rate. Highway construction costs are up >5%/year over the past 20 years.

If you want to cancel out the technological progress component of the inflation rate, looking at the cost growth of fighter jets seems reasonable, since ~100% of the tech progress on those goes into improved performance instead of reduced cost. And they've gotten a lot more expensive: a 1975 F-16 was ~$6.4M and a 2021 F-16 was ~$64M, which is ~5.1% annual cost growth, despite some learning from continued production. Compared to the CPI, that would mean ~1.5% average annual cost reduction from topic-specific tech progress, which seems about right to me.
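The growth rate and its gap to CPI can be reproduced with a compound-annual-growth helper. A sketch; the CPI-U index values (approximate BLS annual averages for 1975 and 2021) are an assumption, not figures from the post, and with them the gap comes out at roughly 1.5 percentage points:

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


years = 2021 - 1975
f16 = cagr(6.4, 64, years)       # F-16 unit cost, $M
cpi = cagr(53.8, 270.97, years)  # assumed CPI-U annual averages for 1975 and 2021
print(f"F-16 {f16:.1%}/yr, CPI {cpi:.1%}/yr, gap {f16 - cpi:.1%}/yr")
```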

Hinkley Point C is an ongoing nuclear power project in the UK, which has seen massive cost overruns and delays. But if you take prices for electricity generation in 1960, and increase them as much as concrete has, you're not far off from the current estimated cost per kWh of Hinkley Point C, or some other recent projects considered too expensive.

Yes, at this point some companies have lost their knowledgeable people and forgotten how to build nuclear power plants competently, which means you should applaud the NRC for not letting them cut corners. But even the economically disastrous Plant Vogtle reactor project only represents about 5% annual cost growth since the glory days of nuclear power. Anyway, to whatever extent capabilities have been lost, relative cost increases came first and drove that.

US electricity costs decreased thanks to progress on gas turbines, fracking, solar panels, and wind turbines. Gas turbines got better alloys and shapes. Solar panels got thinner thanks to diamond-studded wire saws. Wind turbines got fiberglass. Nuclear power didn't have proportionate technological progress.

China's ratio of PPP GDP to nominal GDP is almost 2x that of the USA, so China can build things more cheaply than the US. Also, those plants are heavily subsidized in opaque ways involving government loans, perhaps because the Chinese government has also been rapidly expanding its nuclear weapons arsenal for better deterrence when it goes for Taiwan. If their nuclear power were actually cheaper than coal, they wouldn't be building mostly coal plants, would they?

That project was reported earlier this year as costing ~$1.70/W even with the current cost overruns, which is odd, because that's half the cost of earlier Indian nuclear plants. You should adjust costs ~3x for PPP, but also, that number is fake: the project isn't done, and costs were shifted to the final reactor with accounting tricks.
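Comparing construction costs across countries means scaling by purchasing-power parity rather than using market exchange rates alone. Here's a minimal sketch using the rough factors from the text (~2x for China, ~3x for India). Both factors are approximations, the `PPP_FACTOR` table is just an illustrative name, and the $1.70/W input is the reported figure, which, as noted, is probably understated anyway.

```python
# Rough PPP-to-nominal ratios relative to the US, as stated in the text (approximate).
PPP_FACTOR = {"USA": 1.0, "China": 2.0, "India": 3.0}

def us_equivalent_cost(reported_cost: float, country: str) -> float:
    """Scale a cost reported at market exchange rates to a rough US-equivalent cost.

    A country with a higher PPP/nominal ratio gets more construction per dollar,
    so the same project would cost roughly that many times more in the US.
    """
    return reported_cost * PPP_FACTOR[country]

reported = 1.70  # $/W, the reported figure for the Indian project (from the text)
print(f"US-equivalent: ~${us_equivalent_cost(reported, 'India'):.2f}/W")
```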

The A1Bs used for Ford-class carriers may well be cheaper than the new Vogtle reactor, but the US Navy has never estimated that its nuclear ships were cheaper than using oil, which is more expensive than natural gas from a pipeline. You also can't use them for commercial power, because they use highly enriched fuel and don't have a secondary containment structure, plus most places don't have seawater for cooling.

OK, so for cheap nuclear power we need some sort of better technology, right? Isn't that what the billions of dollars the US government spent researching Gen IV reactors were for?

No, not really. There's no reason to expect any of those to be cheaper than current PWRs and BWRs. The focus of that research was making fast reactors more practical. Current nuclear plants use fuel very inefficiently, and if they were used for most human energy needs, current fuel supplies would run out in a couple of decades, before natural gas would.

Somebody reading this probably wants to say:

Maybe you read something about that only being 6x or so as expensive, but whatever number you read is fake. I don't want to see a comment from you unless you look into how that number was calculated and think real hard about whether it would be the only cost involved. The uranium in a cubic meter of seawater, used in current reactors, produces electricity (~0.1 kWh) worth less than what even simple processing of a cubic meter of seawater costs. If seawater processing cost as much as desalination, and fuel was half the electricity cost, that'd be $10/kWh. Well, at least I guess it's less dumb than mining He-3 from the Moon.
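The $10/kWh figure follows from dividing processing cost by energy yield, then dividing by fuel's share of total cost. Here's a minimal sketch. The ~$0.50/m³ processing cost is an assumption (roughly desalination-level, and what makes the text's numbers come out to $10/kWh); the ~0.1 kWh/m³ yield and the 50% fuel share are from the text.

```python
def electricity_cost_per_kwh(processing_cost_per_m3: float,
                             kwh_per_m3: float,
                             fuel_share: float) -> float:
    """Electricity cost implied by seawater-uranium fuel.

    fuel cost per kWh = processing cost per m^3 / kWh produced per m^3;
    total cost per kWh = fuel cost per kWh / fraction of total cost that is fuel.
    """
    fuel_cost_per_kwh = processing_cost_per_m3 / kwh_per_m3
    return fuel_cost_per_kwh / fuel_share

cost = electricity_cost_per_kwh(
    processing_cost_per_m3=0.50,  # assumed: desalination-like processing cost, $/m^3
    kwh_per_m3=0.1,               # electricity from the uranium in 1 m^3 of seawater (text)
    fuel_share=0.5,               # fuel as half of electricity cost (text)
)
print(f"~${cost:.0f}/kWh")
```

Note that this ignores everything except processing the water itself; any real extraction scheme adds costs on top.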

I expect solar + wind + 1-day storage from Hydrostor-type systems or metal chelate flow batteries to be cheaper than nuclear power in the US and Europe. That might seem attractive in Europe compared to shipping LNG from the US, but seasonal energy storage is a lot more expensive than that; hydrogen can be stored cheaply in underground caverns, but hydrogen from electrolysis is much too expensive for power generation. I also expect biomass power to be cheaper than nuclear, but Europe and Japan don't have enough land for that, and land use would be a big issue even in the US.

Current nuclear power is too expensive and doesn't have enough fuel to be the main source of energy. Breeder reactors would be even more expensive and less safe. And yet...

Let's suppose war breaks out over Taiwan and LNG carriers to Europe and Japan aren't able to operate safely due to threats from submarines and long-range missiles, and seasonal energy storage is too expensive, so they have no choice but to use nuclear power. Or, if you prefer, suppose that it's 50 years from now, AI hasn't taken over, no unexpected technologies have come up, and the US has run out of natural gas but people don't want to go back to coal.

Cheaper nuclear plants would then be important, which, as I've explained above, requires technological improvements to match those that other energy sources got. As for how to do that, as I've said before, I think CO2-cooled, heavy-water-moderated reactors with uranium carbide fuel are reasonable, mostly because you can avoid steam turbines. TerraPower's design is terrible and unsafe, which I guess is what happens when investors who don't understand physics start picking technology. TerraPower's design actually offends me personally on an aesthetic level; it's like this when it should be like this. If you must build fast reactors, maybe I'd try to couple a fast-neutron region to a thermal-reactor region to make control easier, by gaining some neutron lifetime and some reactivity loss when the moderator heats up. But I'm not a nuclear power specialist, I don't like nuclear reactors, and I'm only better than clowns like Kirk Sorensen or the TerraPower people.
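To illustrate why neutron lifetime matters for control, here's a minimal one-group point-kinetics sketch. It only covers the prompt-supercritical case (reactivity ρ above the delayed-neutron fraction β), where power grows with an e-folding time T ≈ Λ/(ρ − β), so a longer prompt neutron generation time Λ means a slower excursion. Below prompt critical, delayed neutrons set the period and this formula doesn't apply. The Λ values are illustrative order-of-magnitude assumptions, not design data, and using the same β = 0.0065 (a typical U-235 value) for every spectrum is a simplification.

```python
def prompt_period(rho: float, beta: float, gen_time: float) -> float:
    """e-folding time (s) of a prompt-supercritical excursion: T ~ gen_time / (rho - beta).

    Only valid above prompt critical (rho > beta); below that, delayed
    neutrons dominate and this approximation does not apply.
    """
    if rho <= beta:
        raise ValueError("formula only valid above prompt critical (rho > beta)")
    return gen_time / (rho - beta)

BETA = 0.0065        # assumed delayed-neutron fraction (typical U-235 value)
rho = BETA + 0.001   # assumed reactivity slightly above prompt critical

# Assumed, order-of-magnitude prompt neutron generation times, in seconds.
GEN_TIME = {
    "fast spectrum": 1e-7,
    "light-water thermal": 2e-5,
    "heavy-water thermal": 1e-3,
}

for spectrum, gen_time in GEN_TIME.items():
    period = prompt_period(rho, BETA, gen_time)
    print(f"{spectrum:>20}: power grows e-fold every {period * 1e3:.1f} ms")
```

A coupled fast/thermal design would presumably land somewhere in between; this sketch doesn't model the coupling itself.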

I guess you could hope for fusion power too, but it's not as if that's a cheaper or less-radioactive way to generate heat than fission. Helion has been in the news lately and hopes for direct conversion from plasma, but they're going nowhere; here's a non-technical explanation of why, some semi-technical explanations, and I guess you can message me if those somehow aren't technical enough for you.

If you're going to work on some new technology, it's a lot easier to improve a basic type of thing that's used now or at least already close to viability. Nuclear power currently isn't. If Bill Gates or somebody wanted to, for some reason, give me a billion dollars to make a nuclear reactor, I guess I'd do that, but I'd certainly rather work on flow batteries.