Is it just me or is Overcoming Bias almost reaching the point of self-parody with recent posts like http://www.overcomingbias.com/2010/12/renew-forager-law.html ?

It's interesting as a "just for fun" idea. On some blogs it would probably be fine, but OB used to feel a lot more rigorous and important than it does now, and multiple levels above me.

Coincidence or name-drop?

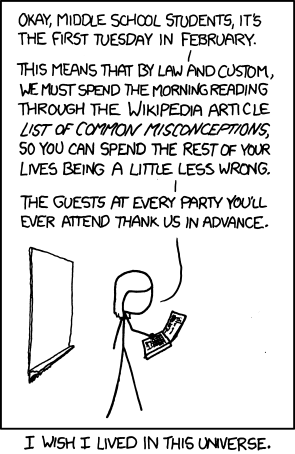

(A fine suggestion, in any case, though "List of cognitive biases" would also be a good one to have on the list.)

(I have no idea whether the following is of any interest to anyone on LW. I wrote it mostly to clarify my own confusions, then polished it a bit out of habit. If at least a few folks think it's potentially interesting, I'll finish cleaning it up and post it for real.)

I've been thinking for a while about the distinction between instrumental and terminal values, because the places it comes up in the Sequences (1) are places where I've bogged down in reading them. And I am concluding that it may be a misleading distinction.

EY presents a toy example here, an...

Ask a Mathematician / Ask a Physicist's "Q: Which is a better approach to quantum mechanics: Copenhagen or Many Worlds?" to my surprise answers unequivocally in favour of Many Worlds.

Some may appreciate the 'Chrismas' message OkCupid sent out to atheist members:

12 Days of Atheist Matches

Greetings, fellow atheist. This is Chris from OkCupid. I know from hard experience that non-believers have few holiday entertainment options:

- Telling kids that Santa Claus is just the tip of the iceberg, in terms of things that don't actually exist

- Asking Christians to explain why they don't also believe in Zeus

- Messaging cute girls

And I'll start things off with a question I couldn't find a place or a post for.

Coherent extrapolated volition. That 2004 paper sets out what it would be and why we want it, in the broadest outlines.

Has there been any progress on making this concept any more concrete since 2004? How to work out a CEV? Or even one person's EV? I couldn't find anything.

I'm interested because it's an idea with obvious application even if the intelligence doing the calculation is human.

On the topic of CEV: the Wikipedia article only has primary sources and needs third-party ones.

Expand? Are you talking about saying things about the output of CEV, or something else?

Not just the output: the input and the means of computation are also potential minefields of moral politics. After all, this touches on what amounts to the ultimate moral question: "If I had ultimate power, how would I decide how to use it?" When you answer that question in public you must use extreme caution; at least, you must if you have any real intent to gain power.

There are some things that are safe to say about CEV, particularly on the technical side. But for the most part it is best to avoid giving too many straight answers. I said something on what can be considered a subproblem ("Do you confess to being consequentialist, even when it sounds nasty?"). Eliezer's responses took a similar position:

then they would be better off simply providing an answer calibrated to please whoever they most desired to avoid disapproval from

No they wouldn't. Ambiguity is their ally. Both answers elicit negative responses, and they can avoid that from most people by not saying anything, so why shouldn't they shut up?

When describing CEV mechanisms in detail from...

In what situation would this be better or easier than simply donating more, especially if percentage of income is considered over some period of time instead of as a one-off "here it is"?

Sure, and people feel safer driving than riding in an airplane, because driving makes them feel more in control, even though it's actually far more dangerous per mile.

Probably a lot of people would feel more comfortable with a genie that took orders than an AI that was trying to do any of that extrapolating stuff. Until they died, I mean. They'd feel more comfortable up until that point.

Feedback just supplies a form of information. If you disentangle the I-want-to-drive bias and say exactly what you want to do with that information, it'll just come out to the AI observing humans and updating some beliefs based on their behavior, and then it'll turn out that most of that information is obtainable and predictable in advance. There's also a moral component, where making a decision is different from predictably making that decision, but that's on an object level rather than a metaethical level, and it just says "There are some things we wouldn't want the AI to do until we actually decide them, even if the decision is predictable in advance, because the decision itself is significant and not just the strategy and consequences following from it."

When you build automated systems capable of moving faster and stronger than humans can keep up with, I think you just have to bite the bullet and accept that you have to get it right. The idea of building such a system and then having it wait for human feedback, while emotionally tempting, just doesn't work.

If you build an automatic steering system for a car that travels 250 mph, you either trust it or you don't, but you certainly don't let humans anywhere near the steering wheel at that speed.
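To put a rough number on the 250 mph example, here is a small sketch computing how far the car travels before a human passenger could even begin to react. The 1.5-second reaction time is an assumed, illustrative figure (roughly the order of magnitude often quoted for drivers), not something taken from this thread; the helper names are hypothetical.

```python
# Rough illustration: distance a vehicle covers while a human is still reacting.
# ASSUMED_REACTION_S is an illustrative assumption, not a measured value.

METERS_PER_MILE = 1609.344
SECONDS_PER_HOUR = 3600.0


def mph_to_mps(mph: float) -> float:
    """Convert miles per hour to meters per second."""
    return mph * METERS_PER_MILE / SECONDS_PER_HOUR


def distance_during_reaction(speed_mph: float, reaction_s: float) -> float:
    """Meters covered before a human can even begin to respond."""
    return mph_to_mps(speed_mph) * reaction_s


if __name__ == "__main__":
    ASSUMED_REACTION_S = 1.5  # assumption: illustrative human reaction time in seconds
    for mph in (60, 250):
        d = distance_during_reaction(mph, ASSUMED_REACTION_S)
        print(f"{mph} mph: {mph_to_mps(mph):.1f} m/s, {d:.0f} m before the human reacts")
```

Under that assumption the 250 mph car covers well over 150 m before a human hand would even start to move, which is the intuition behind not letting humans near the wheel.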

Which is to say that while I sympathize with you here, I'm not at all convinced that the distinction you're highlighting actually makes much difference, unless we impose the artificial constraint that the environment never changes faster than a typical human can assimilate those changes well enough to provide meaningful feedback on them.

I mean, without that constraint, a powerful enough environment-changer simply won't receive meaningful feedback, no matter how willing it might be to take it if offered, any more than the 250-mph artificial car driver can get meaningful feedback from its human passenger.

And while one could add such a constraint, I'm not sure I want to die of old age w...

Complete randomness that seemed appropriate for an open thread: I just noticed the blog post header on the OvercomingBias summary: "Ban Mirror Cells"

Which, it turned out when I read it, is about chirality, but which I had parsed as talking about mirror neurons, and the notion of wanting to ban mirror neurons struck me as delightfully absurd: "Darned mirror neurons! If I wanted to trigger the same cognitive events in response to doing something as in response to seeing it done by others, I'd join a commune! Darned kids, get off my lawn!"

Even with the discussion section, there are ideas or questions too short or inchoate to be worth a post.

Thank you! I'd been mourning the loss. There have been plenty of things I wanted to ask or say that didn't warrant a post even here.

It occurs to me that the concept of a "dangerous idea" might be productively viewed in the context of memetic immunization: ideas are often (but not always) tagged as dangerous because they carry infectious memes, and the concept of dangerous information itself is often rejected because it's frequently hijacked to defend an already internalized infectious memeplex.

Some articles I've read here seem related to this idea in various ways, but I can't find anything in the Sequences or on a search that seems to tackle it directly. Worth writing up as a post?

This seems like a good audience to solve a tip-of-my-brain problem. I read something in the last year about subconscious mirroring of gestures during conversation. The discussion was about a researcher filming a family (mother, father, child) having a conversation, and analyzing a 3-second clip in slow motion for several months. The researcher noted an almost instantaneous mirroring of the speaker's micro-gestures in the listeners.

I think that I've tracked the original researcher down to Jay Haley, though unfortunately the articles are behind a paywall: ...

Global nuclear fuel bank reaches critical mass.

http://www.nytimes.com/2010/12/04/science/04nuke.html?_r=1&ref=science

I'm intrigued by this notion that the government solicited Buffett for the funding promise which then became a substantial chunk of the total startup capital. Did they really need his money, or were they looking for something else?

I'm jealous of all these LW meetups happening in places that I don't live. Is there not a sizable contingent of LW-ers in the DC area?


This thread is for the discussion of Less Wrong topics that have not appeared in recent posts. If a discussion gets unwieldy, celebrate by turning it into a top-level post.