My best guess is that the elicitation overhang has shrunk or remained stable since the release of GPT-4. I think we've now elicited much more of the underlying "easy to access" capabilities with RL, and there is a pretty good a priori case that scaled up RL should do an OK job eliciting capabilities (at least when considering elicitation that uses the same inference budget used in RL) on at least the distribution of tasks which models are RL'd on.

It seems totally possible that performance on messier real world tasks with hard-to-check objectives is under elicited, but we can roughly bound this elicitation gap by looking at performance on the types of tasks which are RL'd on and then conservatively assuming that models could perform this well on messy tasks with better elicitation (figuring out the exact transfer isn't trivial, but often we can get a conservative bound which isn't too scary).
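A minimal sketch of the bounding argument above, assuming both kinds of task are scored on the same 0 to 1 scale. The function name and the numbers are hypothetical and purely illustrative; they are not measurements from any benchmark.

```python
def elicitation_gap_bound(rl_task_score: float, messy_task_score: float) -> float:
    """Conservative upper bound on the elicitation gap for messy tasks.

    Assumption: with better elicitation, the model could do at most as well
    on messy tasks as it already does on the task types it was RL'd on.
    """
    for name, score in (("rl_task_score", rl_task_score),
                        ("messy_task_score", messy_task_score)):
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {score}")
    # If messy-task performance already matches or exceeds RL'd-task
    # performance, this particular bound says there is no gap left.
    return max(0.0, rl_task_score - messy_task_score)


# Hypothetical numbers, for illustration only.
bound = elicitation_gap_bound(rl_task_score=0.75, messy_task_score=0.5)
print(f"elicitation gap is at most {bound:.2f}")
```

The hard part, as noted, is the transfer: deciding which RL'd-task score is the right comparison for a given messy task is a judgment call that this sketch does not capture.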

Additionally, it seems possible that you could use vast amounts of inference compute (e.g. comparable to human wages or much more than human wages) more effectively and elicit more performance using this. But, GPT-5 reasoning on high is already decently expensive and this is probably not a terrible use of inference compute, so we don't have a ton of headroom here. We can get a rough sense of the returns to additional inference compute by looking at scaling with existing methods and the returns don't look that amazing overall. I could imagine this effectively moving you forward in time by like 6-12 months though (as in, you'd see the capabilities we'd see in 6-12 months, but at much higher cost, so this would be somewhat impractical).

Another potential source of elicitation overhang is niche superhuman capabilities being better elicited and leveraged. I don't have a strong view on this, but we haven't really seen this.

None of this is to say there isn't an elicitation overhang; I just think it probably hasn't really increased recently. (I can't really tell if this post is trying to argue the overhang is increasing or just that there is some moderately sized overhang ongoingly.) For instance, it seems pretty plausible to me that a decent amount of elicitation effort could make models provide much more bioweapon uplift, and this dynamic means that open weight models are more dangerous than they seem (this doesn't necessarily mean the costs outweigh the benefits).

> It seems totally possible that performance on messier real world tasks with hard-to-check objectives is under elicited

As an example, I think that the kinds of agency errors that show up in VendingBench or AI Village are largely due to lack of elicitation. I see these errors way less when coding with Claude Code (which has both better scaffolding and more RL). I'd find it difficult to inject numbers to get a concrete bound though.

It would be unfortunate if AI Village is systematically underestimating AI capabilities due to non-SOTA scaffolding and/or not having access to the best models. Can you say more about your arguments, evidence, how confident you are, etc.?

Code Agents (Cursor or Claude Code) are much better at performing code tasks than their fine-tune equivalent, mainly because of the scaffolding.

When I told you that we should not put 4% of the global alignment spending budget in AI Village, you asked me if I thought METR should also not get as much funding as it does.

It should now be more legible why.

From my point of view, both AI Village and METR, on top of not doing the straightforward thing of advocating for a pause, are bad on their own terms.

Either you fail to capture the relevant capabilities and build unwarranted confidence that things are ok, or you are doing public competitive elicitation & amplification work.

> Code Agents (Cursor or Claude Code) are much better at performing code tasks than their fine-tune equivalent

Curious what makes you think this.

My impression of (publicly available) research is that it's not obvious - e.g. Claude 4 Opus with a minimal scaffold with just a bash tool is not that bad at SWEBench (67% vs 74%). And METR's elicitation efforts were dwarfed by OpenAI doing post-training.

> Curious what makes you think this.

Because there is a reason why Cursor and Claude Code exist. I'd suggest looking at what they do for more details.

METR is not in the business of building code agents. Why is their work informing so much of your views on the usefulness of Cursor or Claude Code?

This is literally the point I make above.

> Either you fail to capture the relevant capabilities and build unwarranted confidence that things are ok, or you are doing public competitive elicitation & amplification work.

I think it's noteworthy that Claude Code and Cursor don't advertise things like SWEBench scores while LLM model releases do. I think whether commercial scaffolds make the capability evals more spooky depends a lot on what the endpoint is. If your endpoint is something like SWEBench, then I don't think the scaffold matters much.

Maybe your claim is that if commercial scaffolds wanted to maximize SWEBench scores with better scaffolding, they would get massively better SWEBench performance (e.g. bigger than the Sonnet 3.5 - Sonnet 4 gap)? I doubt it, and I would guess most people familiar with scaffolding (including at Cursor) would agree with me.

In the case of the METR experiments, the endpoints are very close to SWEBench, so I don't think the scaffold matters. For the AI Village, I don't have a strong take - but I don't think it's obvious that using the best scaffold that will be available in 3 years would make a bigger difference than waiting 6 months for a better model.

Also note that METR did put a lot of effort into hill-climbing on their own benchmarks, and they did outcompete many of the commercial scaffolds that existed at the time of their blogpost according to their eval (though some of that is because the commercial scaffolds that existed at the time of their blogpost were strictly worse than extremely basic scaffolds at the sort of evals METR was looking at). The fact that they got a low return on their labor compared to "wait 6 months for labs to do better post-training" is some evidence that they would not have gotten drastically different results with the best scaffold that will be available in 3 years.

(I am more sympathetic to the risk of post-training overhang - and in fact the rise of reasoning models was an update that there was post-training overhang. Unclear how much post-training overhang remains.)

I don't think Anthropic has put as much effort into RL-ing their models to perform well on tasks like VendingBench or Computer Use (/graphical browser use) compared to "being a good coding agent". Anthropic makes a lot of money from coding, whereas the only computer use release I know from them is a demo which (ETA: I'd guess) does not generate much revenue (?).

Similarly for scaffolding, I expect the number of person-hours put into the scaffolding for VendingBench or the AI Village to be at least an order of magnitude lower than for Claude Code, which is a publicly released product that Anthropic makes money from.

More concretely:

- I think that the kind of decomposition into subtasks / delegation to subagents that Claude Code does would be helpful for VendingBench and the Agent Village, because in my experience they help keep track of the original task at hand and avoid infinite rabbit holes.

- Cursor builds great tools/interfaces for models to interact with code files, and my impression is that Anthropic's post-training intentionally targeted these tools, which made Anthropic models much better than OpenAI models on Cursor pre-GPT-5. I don't expect there were comparable customization efforts in other domains, including graphical browser use or VendingBench-style tasks. I think such efforts are under way, starting with high quality integrations into economically productive software like Figma.

I'm more confident for VendingBench than for the AI Village; for example, I just checked the Project Vend blog post and it states:

> Many of the mistakes Claudius made are very likely the result of the model needing additional scaffolding—that is, more careful prompts, easier-to-use business tools. In other domains, we have found that improved elicitation and tool use have led to rapid improvement in model performance.
>
> [...]
>
> Although this might seem counterintuitive based on the bottom-line results, we think this experiment suggests that AI middle-managers are plausibly on the horizon. That’s because, although Claudius didn’t perform particularly well, we think that many of its failures could likely be fixed or ameliorated: improved “scaffolding” (additional tools and training like we mentioned above) is a straightforward path by which Claudius-like agents could be more successful.

Regarding the AI Village: I do think that computer (/graphical browser) use is harder than coding in a bunch of ways, so I'm not claiming that if Anthropic spent as many resources on RL + elicitation for computer use as they did for coding, that would reduce errors to the same extent (and of course, making these comparisons across task types is conceptually messy). For example, computer use offers a pretty unnatural and token-inefficient interface, which makes both scaffolding and RL harder. I still think an OOM more resources dedicated to elicitation would close a large part of the gap, especially for 'agency errors'.

We actually have evidence that xAI spent about as much compute on reinforcement learning for Grok 4 (to deal with the ARC-AGI-2 bench and to solve METR-like tasks, but not to do things like the AI Village or VendingBench?) as on pretraining it. What we don't know is how they had Grok 4 instances coordinate with each other in Grok 4 Heavy, nor what they are on track to do to ensure that Grok 5 ends up being AGI...

> I see these errors way less when coding with Claude Code

I think models are generally by default worse at computer use than coding, so I don't think seeing more errors in AI Village than in Claude Code is much evidence that AI Village under-elicits capabilities more than Claude Code does. I'd guess this applies to Project Vend too, though I'm less familiar with it.

(However, I do think there is other evidence that Claude Code under-elicits less than Project Vend/Village: Claude Code is a major offering from a top lab, and I think they have spent a lot more resources on improving its performance than has gone into Project Vend/Village, which are relatively small efforts. Also, in general I'm pretty confident that much more effort is spent on eliciting coding capabilities, and some insights spread from other efforts, e.g. Cursor, Codex, GitHub Copilot, etc.)

An argument I find somewhat compelling for why the overhang would be increasing is:

Our current elicitation methods would probably fail for sufficiently advanced systems, so we should expect their performance to degrade at some point(s). This might happen suddenly, but it could also happen more gradually and continuously. A priori, it seems more natural to expect the continuous thing, if you expect most AI metrics to be continuous.

Normally, I think people would say, "Well, you're only going to see that kind of a gap emerging if the model is scheming (and we think it's basically not yet)", but I'm not convinced it makes sense to treat scheming as such a binary thing, or to assume we'll be able to catch the model doing it (since you can't know what you're missing, and even if you find it, it won't necessarily show up with signatures of scheming vs. looking like some more mundane seeming elicitation failure).

This is a novel argument I'm elaborating, so I don't stand by it strongly, but I don't feel like it's been addressed that I've seen, and it seems like a big weakness of a lot of safety cases that they seem to be treating underelicitation as either 1) benign or 2) due to "scheming", which is treated as binary and ~observable. "Roughly bound[ing]" an elicitation gap may be useful, but is not sufficient for high quality assurance -- we're in the realm of unknown unknowns (I acknowledge there are some subtleties around unknown unknowns I'm eliding, and that the OP is arguing that this is "likely", which is a higher standard than "likely enough that we can't dismiss it").

> But, GPT-5 reasoning on high is already decently expensive and this is probably not a terrible use of inference compute, so we don't have a ton of headroom here.

As written, this is clearly false/overconfident. The claim that it's "probably not a terrible use of inference compute" is very weak and defensible. But drawing the conclusion that "we don't have a ton of headroom" from it is completely unwarranted and would require making a stronger, less defensible claim.

> (I can't really tell if this post is trying to argue the overhang is increasing or just that there is some moderately sized overhang ongoingly.)

It has increased on some axes (companies are racing as fast as they can, and capital and research are by far LONG scaling), and reduced on some others (low-hanging fruit gets plucked first).

The main point is that it is there and consistently under-estimated.

For instance, there are still massive returns to spending an hour on learning and experimenting with prompt engineering techniques. Let alone more advanced approaches.

This leads to a bias toward over-estimating the safety of our systems, unless you expect our evaluators to be better elicitors than not only existing AI research engineers but also the ones of the next two, five, or ten years.

> This has also been my direct experience studying and researching open-source models at Conjecture.

Interesting! Assuming it's public, what are some of the most surprising things you've found open source models to be capable of that people were previously assuming they couldn't do?

This matters for advocacy for pausing AI, or failing that, advocacy about how far back the red-lines ought to be set. To give a really extreme example, if it turns out even an old model like GPT-3 could tell the user exactly how to make a novel bioweapon if prompted weirdly, it seems really useful to be able to convince our policy makers of this fact, though the weird prompting technique itself should of course be kept secret.

There is no rollback when open-weight models are almost SOTA. Could we convince people like Zuck that open weights are too risky? I seriously doubt it.

I disagree; I don't think there's a substantial elicitation overhang with current models. What is an example of something useful you think could in theory be done with current models but isn't being elicited in favor of training larger models? (Spending an enormous amount of inference-time compute doesn't count, as that's super inefficient.)

I'd flip it around and ask whether Gabriel thinks the best models from 6, 12, or 18 months ago could be performing at today's level with maximum elicitation.

> What is an example of something useful you think could in theory be done with current models but isn't being elicited in favor of training larger models?

Better prompt engineering, fine-tuning, interpretability, scaffolding, sampling.

Fast-forward button

I think you may be failing to make an argument along the lines of "But people are already working on this! Markets are efficient!"

To which my response is "And thus 3 years from now, we'll know what to do with models much more than we do, even if you personally can't come up with an example now. The same way we now know what to do with models much more than 3 years ago."

Unless you expect this not to be the case, for example because you have directly worked on juicing models or followed people who have done so and failed, you shouldn't really expect your failure to come up with such examples to be informative.

> Better prompt engineering, fine-tuning, interpretability, scaffolding, sampling.

I meant examples of concrete tasks that current models fail at as-is but you think could be elicited, e.g. with some general scaffolding.

> I think you may be failing to make an argument along the lines of "But people are already working on this! Markets are efficient!"

Not quite. Though I am not completely confident, my claim comes from the experience of watching models fail at a bunch of tasks for reasons that seem raw-intelligence related rather than scaffolding or elicitation related. For example, in my experience current models struggle to answer questions about codebases with nontrivial logic they haven't seen before, or to fix difficult bugs.

When you use current models you run into examples where you feel how dumb the model is, how shallow its thinking is, how much it's relying on heuristics. Scaffolding etc. can only help so much.

Also elicitation techniques for tasks are often not general (e.g. coming up with the perfect prompt with detailed instructions), requiring human labor and intelligence to craft task-specific elicitation methods. This additional effort and information takes away from how much the AI is doing.

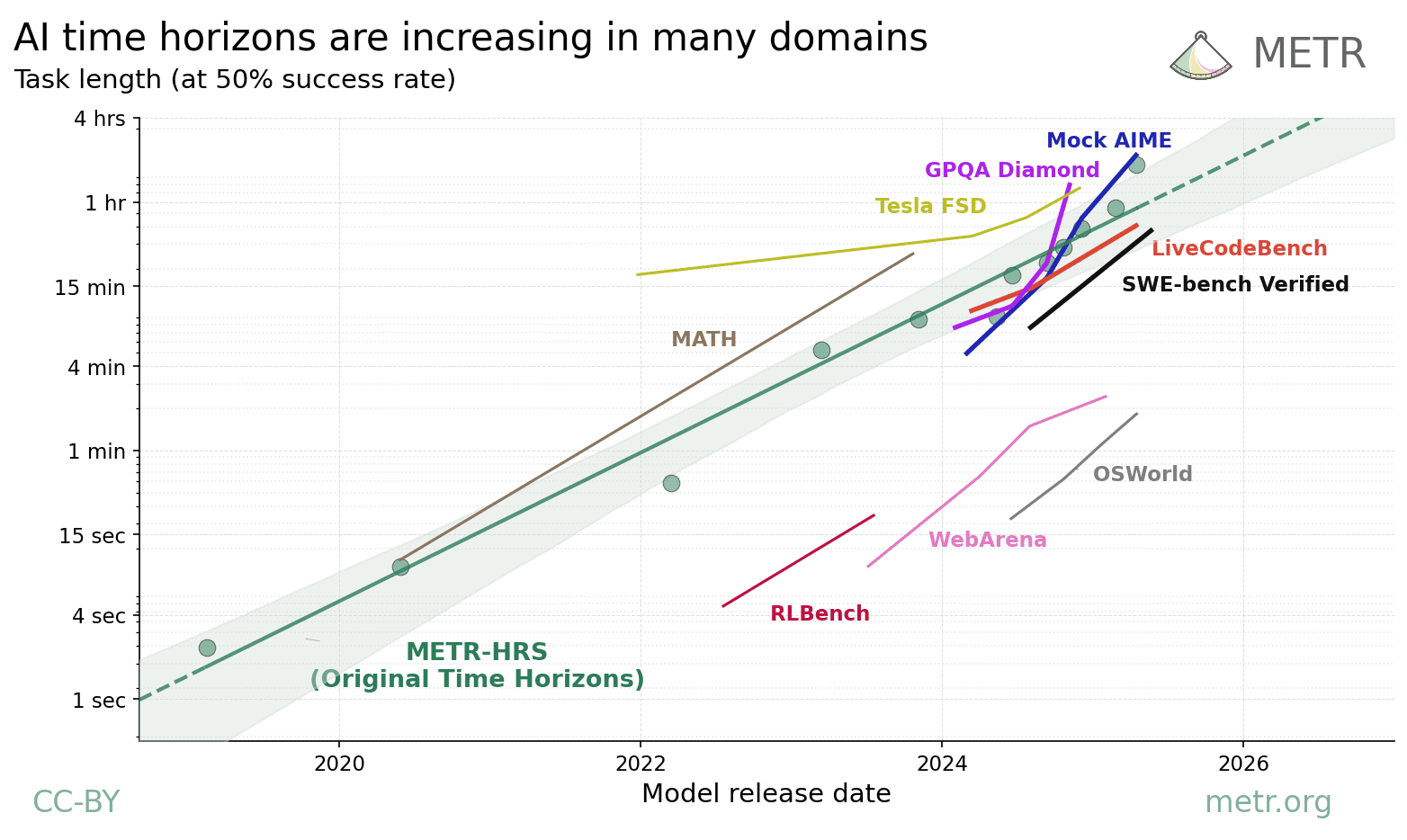

By racing to the next generation of models faster than we can understand the current one, AI companies are creating an overhang. This overhang is not visible, and our current safety frameworks do not take it into account.

1) AI models have untapped capabilities

At the time GPT-3 was released, most of its currently-known capabilities were unknown.

As we play more with models, build better scaffolding, get better at prompting, inspect their internals, and study them, we discover more about what's possible to do with them.

This has also been my direct experience studying and researching open-source models at Conjecture.

2) SOTA models have a lot of untapped capabilities

Companies are racing hard.

There's a trade-off between studying existing models and pushing forward. They are doing the latter, and they are doing it hard.

There is much more research into boosting SOTA models than studying any old model like GPT-3 or Llama-2.

By contrast, imagine a world where Deep Openpic decided to start working on the next generation of models only once they were confident that they had juiced their existing models. That world would have much less of an overhang.

3) This is bad news.

Many agendas, like red-lines, evals or RSPs, revolve around us not being in an overhang.

If we are in an overhang, then a red-line being met may already be much too late, with untapped capabilities already way past it.

4) This is not accounted for.

It is hard to reason about unknowns in a well-calibrated way.

Sadly, I have found that people consistently tend to assume that unknowns do not exist.

This means that directionally, I expect people to underestimate overhangs.

This is in great part why...

Sadly, researching this effect is directly capabilities relevant. It is likely that many amplification techniques that work on weaker models would work on newer models too.

Without researching it directly, we may start to feel the existence of an overhang after a pause (whether it is because of a global agreement or a technological slowdown).

Hopefully, at this point, we'd have the collective understanding and infrastructure needed to deal with rollbacks if they were warranted.