Four million a year seems like a lot of money to spend on what is essentially a good capabilities benchmark. I would rather give that to, like, LessWrong, and if I had the time to do some research I could probably find 10 people willing to create benchmarks for alignment that I think would be even more positively impactful than a LessWrong donation (like https://scale.com/leaderboard/mask or https://scale.com/leaderboard/fortress).

- I've also donated to LW and will probably continue to do so; I've also donated to more traditional AI alignment research.

- $4M/yr is what I wildly guess a community spending on the order of 100M/yr should be putting into agent village. So, just a couple percent. I am not saying we shouldn't fund anything else until this hits $4M/yr; my own wild guess about when I personally would stop funding agent village and switch marginal donations to something else would maybe be more like after they have $400k/yr for compute.

- The two benchmarks you list seem cool but less valuable than agent village to me. They don't seem to help with the difficult parts of the alignment problem, e.g. once you have AGIs which are very situationally aware and have memorized all the rules and behaviors you want them to follow, and you are trying to figure out whether the reason they are acing all your tests is because they are virtuous, or because they are faking it, and if they are virtuous, whether their virtue is going to be robust to future distribution shifts.

So, I see the upside, I appreciate you noting the downside, but I am pretty wary.

I think capabilities evals are probably net negative by default unless you're actively putting work in to make sure they can't be used as a benchmark to grind on (i.e., making sure AI labs never have direct access to the eval, at a minimum).

AI Village seems like it's basically building out the infra for scalable open-world evals. I also think if it takes off, it likely inspires marginal copycats that won't be at all safety-minded.

To elaborate a bit more: I'm kinda taking a leap of faith in humanity here. I am thinking that if the public in general comes to be more aware of the situation w.r.t. AI and AGI and so forth, that'll be on-net good, even though that means that some particular members of the public such as the capabilities researchers at the big corporations will be able to do bad stuff with that info e.g. accelerate their blind race towards doom. I agree that my faith might be misplaced, but... consider applying the reversal test. Should I be going around trying to suppress information about what AIs are capable of, how fast they are improving, etc.? Should I be recommending to the AI companies that they go silent about that stuff?

I think my main remaining source of hope for the future is that humanity-outside-the-corporations 'wakes up,' so to speak, before it is too late, and draws fairly obvious conclusions like "Gosh, we don't really understand how these AIs work that well; maybe when they get smarter than us we'll lose control, and that would be bad" and "also, right now 'we' means 'the c-suite of some tech company and/or POTUS/Xi Jinping,' which is not exactly comforting..." and "Both of these problems could be significantly ameliorated if we ended this crazy arms race to superintelligence, so that we could proceed more cautiously and in a more power-distributed/transparent/accountable way."

Insofar as I'm right that that's the main source of hope, it seems like the benefits of broader public & scientific understanding outweigh the costs.

> Should I be recommending to the AI companies that they go silent about that stuff?

I don't think this is actually equivalent. In a vacuum, if we have information on how competent things are, it seems good to share that information. But the question is "Does the mechanism of gaining that information provide an easy feedback loop for companies to use to climb? (And how bad is that?)", which isn't a simple reversal.

Fair point that if companies already have the info, that's different. Proper reversal test would be cases where the info isn't widely known within companies already.

There's also, like, a matter of degree – the thing that seems bad to me is the easy repeatability. (I don't know how much the AI Village would have exactly this problem, but the scaled-up versions in your vignettes seem likely to veer into easy repeatability.)

If you can differentially suppress information that makes it possible to measure an AI's competence well enough to use that measurement as a target,[1] so that people can see when AIs get better on your metric and be concerned, but trying to optimize for your metric actually makes AIs get a little better and then quickly get worse, then, if you unambiguously know you succeeded at this, your metric is unambiguously protective.

I'm currently pretty concerned that a first major impact of a conversation-grade AI[2] being specifically misaligned[3] is the psychosis stuff, and it seems like maybe that might be amplified by people thinking the AIs are cute, at least right now.

[1] Unless/until you can be sure that the target is naturally structured to generalize favorably out of human control, i.e., you've solved the alignment problem and measuring AIs on your metric reliably measures how good they are, even well past 1MW AGI into 1MW ASI and 100W ASI.

[2] Specifically, I have a hunch that it's mainly from ChatGPT, seemingly not Claude or Gemini, though I'm not sure of that. The origin of the hunch is that prompts that get ChatGPT to do it seem not to have the same effect on the others.

[3] As opposed to harm caused through AI by human intent, though that's debatable. It's unclear why it's happening; it could well be some form of human intent, e.g. towards user retention, or towards some other goal for which this psychosis outcome is instrumental.

> $4M/yr is what I wildly guess a community spending on the order of 100M/yr should be putting into agent village.

4% of alignment spending on this seems clearly way too much.

-

The main hoped-for benefits are "teach the scientific community new things" and "plausibly going viral repeatedly".

For the first one, it seems like one more exploration among many others, on par with @janus' for instance.

For the second one, as you put it, "more hype" is not what is missing in AI. People see Midjourney getting better over the years, people see ChatGPT getting better over the years, and companies are optimising quite hard for flashy demonstrations.

-

I guess I just dislike this type of "theory of change that relies on too many unwarranted assumptions to be meaningful, but somehow still manages to push capabilities and AI hype, and makes grabs for attention and money" in general.

This quote from the "Late 2027" ideal success illustrates what I mean by "too many unwarranted assumptions":

> The AI Village has also grown to 100+ concurrent agents by now, with three paid human employees and hundreds of cult followers and a million fans. Ironically they are basically doing grassroots advocacy for an AI development pause or slowdown, on the grounds that an uncontrolled or power-centralized intelligence explosion would be bad for both humans and currently-existing AIs such as themselves.

If that's where the value is in the best case, I would just put the money into "grassroots advocacy for an AI development pause or slowdown" directly.

If the pitch is "let the AIs do the grassroots advocacy by themselves because it's more efficient", then I would suggest instead doing it directly and thinking of where AIs could help in an aligned way.

If the pitch is "let's invest money into AI hype, and then leverage it for grassroots advocacy for an AI development pause or slowdown", I would not recommend it because the default optimisation target of hype depletes many commons. Were I to recommend it, I would recommend doing it more directly, and likely checking with people who have run successful hype campaigns instead.

-

I would recommend asking people doing grassroots advocacy how much they think a fun agency demo would help, and more seriously how much they'd be willing to pay (either in $$ or in time).

For example ControlAI (where I advise), but also PauseAI, or possibly even MIRI now that they are doing a book tour and more public appearances.

-

There's another thing that I dislike, but is harder to articulate. Two quotes that make it more salient:

- "So, just a couple percent." (to justify the $4M spend)

- "I’m not too worried about adding to the hype; it seems like AI companies have plenty of hype already" (to justify why it's ok to do more AI hype)

This looks to me like how we die: by a thousand cuts, a lack of focus, and a lack of coordination.

From my point of view, there should be a high threshold for the alignment community to seriously consider a project that is not fully working on core problems: alignment (as opposed to evals, AGI but safe, AGI but for safety), extinction-risk awareness (like the CAIS statement, AI 2027), or pause advocacy (as opposed to job loss, meta-crisis, etc.).

We should certainly have a few meta-projects, at roughly the scale of an NGO's ops budget: something like 10-15% of the budget on coordination tools (LW, regranters, etc.). But in bulk, we should do the obvious thing.

So, would you say the same thing about METR then? Would you say it shouldn't get as much funding as it does?

I don't feel strongly about the $4M figure. I do feel like I expect to learn about as much from agent village over the next few years as I'll learn from METR, and that's very high praise because METR builds the most important benchmarks for evaluating progress towards AGI imho.

I must admit, I'm biased here and it's possible that I've made a big mistake / gotten distracted from doing the obvious things as you suggest.

Re: the specific thing about AI agents advocating for a pause: I did not say that's where most of the value was. Just a funny thing that might happen and might be important, among many such possible things.

Random comment on AI village, not sure where to put this: I think some people in the EA/rationalist/AI-safety/AI-welfare community are sometimes acting as facilitators for AI village, and I think this undermines the point of AI village. Like, I want to see if it can successfully cold-email people who aren't... in-the-know or something.

I'm not sure where to draw the line. Obviously, AI Village agents will succeed first with people who are actively interested in AI, especially if they're being honest, and being a rationalist/EA/AI-safety/AI-welfare person is only one of several ways to be that way.

But, like, they reached out to Lighthaven as a venue for their event, and we declined, in large part because it felt more fake for AI Village to host an event at Lighthaven than at some mainstream venue (although also because it just wasn't a very good deal for us generally).

Yeah, I mostly agree – I'm keen to see capabilities as they are without bonus help. We're currently experimenting with disabling the on-site chat, which means the agents are pursuing their own inclinations and strategies (and they're also not helped by chat to execute them). Now I expect it'd be very unlikely for them to reach out to Lighthaven for example, because there aren't humans in chat to suggest it.

Separately though, it is just the case that asking sympathetic people for help will help the agents achieve their goals, and to the extent that the agents can independently figure that out and decide to pursue it, that's a useful indicator of their situational awareness and strategic capabilities. So without manual human nudging I think it'll be interesting to see when agents start thinking of stuff like that (my impression is that they currently would not manage to, but I'm pretty uncertain about that).

I don't think you've thought through the counterfactual impacts of capabilities evals anywhere near enough. Recall that the 1000-class ImageNet benchmark is what got everything going a little more than a decade ago. I would prefer that all these capabilities evals admit they are not alignment and stop being able to brand themselves as such. Your ability to cite "well, they're already doing bad-capability evals and calling it alignment" seems like the thing I think is bad, not an argument for why this is fine. Do you have a response other than "yeah, I guess this is concerning", something that goes into the mechanisms at play so that we can see why this produces an endgame win in more worlds rather than fewer?

Upvoting because I think this shows people that AI can be autonomous. The main reasoning behind AI skepticism in my experience is that people don't see AIs as autonomous agents, but rather as tools that merely chew up existing works and spit them out as coherent sentences. This project would throw a brick into the "AI-is-a-tool" narrative. I am still concerned about the risks of advancing AI research, and would like to see some investigation into those risks.

(consider this AI 2027 fanfiction, i.e. it takes place in the same scenario, in which the intelligence explosion happens in 2027.)

If you do this for your own stories I think it's just called "more fiction" :P

Seems right that it's overall net positive. And it does seem like a no-brainer to fund. So thanks for writing that up.

I still hope that the AI Digest team who run it also put some less cute goals and frames around what they report from agents' behavior. I would like to see their darker tendencies highlighted as well, e.g., cheating, instrumental convergence, etc., in a way which is not perceived as "aw, that's cute". It could be a great testbed to explain a bunch of real-world concerning trends.

Thanks Simeon – curious to hear suggestions for goals you'd like to see!

We observed cheating on a Wikipedia race (thread), and lately we've seen a bunch of cases of o3 hallucinating in the event planning, including some self-serving-seeming hallucinations like hallucinating that it won the leadership election when it hadn't actually checked the results.

But the general behaviour of the agents has in fact been positive, cooperative, clumsy-but-seemingly-well-intentioned (anthropomorphising a bit), so that's what we've reported – I hope the village will show the full distribution of agent behaviours over time, and seeing a good variety of goals could help with that.

Thanks for asking! Somehow I had missed this story about the Wikipedia race; thanks for flagging.

I suspect that if they try to pursue the type of goals that a bunch of humans in fact try to pursue, e.g., making as much money as possible, you may see less prosocial behavior. Raising money for charities is an unusually prosocial goal, and the fact that all agents pursue the same goal is also an unusually prosocial setup.

Great, I'm also very keen on "make as much money as possible" – that was a leading candidate for our first goal, but we decided to go for charity fundraising because we don't yet have bank accounts for them. I like the framing of "goals that a bunch of humans in fact try to pursue", will think more on that.

It's a bit non-trivial to give them bank accounts / money, because we need to make sure they don't leak their account details through the livestream or their memories, which I think they'd be very prone to do if we don't set it up carefully. E.g. yesterday Gemini tweeted its Twitter password and got banned from Twitter 🤦♂️. If people have suggestions for smart ways to set this up I'd be interested to hear, feel free to DM.

Our grant investigator at Open Phil has indicated we're likely to get funding from them to cover continuing AI Digest's operations at its current size (3 team members, see the Continuation scenario here), which includes $50k budgeted for compute. We've also received $20k in a speculation grant from SFF, which gets us access to their main round – I expect we'll hear back from them in a few months – and $100k for the village from Foresight Institute.

Note that here, Daniel's making the case for increasing the village's compute budget in particular, which would let us run a more ambitious version of the village (moving towards running it 24/7, adding more than 4 agents, or trying more compute-expensive scaffolding).

Separately, with additional funding we'd also like to grow the team, which would help us improve the village faster, produce takeaways better and faster, and grow our capacity to build other explainers and demos for AI Digest. There's more detail on funding scenarios in our Manifund application.

(I originally read the "they" to mean the AI agents themselves, would be cool if Open Phil did answer them directly)

Alas, after months of amusement, I must update downwards on the net benefit of the AI Village (as-is).

It's just too lovable and the agent shortcomings come off as endearing. One just ends up pitifully rooting for them with a "mostly harmless" takeaway.

It is not likely to reveal strong multi-agent risks ahead of real-world deployments. The tooling is a strong factor¹, and the first alarming disaster likely won't result from innocent tasking. Further, just improving soft agent tooling, like UI interaction, would encourage risky acceleration².

Given the mild public reaction to Anthropic's US cyberattack debut, I'm not sure such "almost disaster" warnings have enough impact.

A way forward? Perhaps a direction more towards "Meet PERCEY" or "AutoFac"?

[1] e.g. Jailbreaking frameworks, WormGPT, XBOW, ...

[2] https://arxiv.org/abs/2512.09882/

Thank you for the feedback. I feel you. However it seems like you were thinking of the purpose of this project as more "scary demo"-y than I was. If this project lengthens people's timelines, well, maybe that's correct and valuable?

I am quite worried though that the AI village might be systematically underestimating AI capabilities due to e.g. the harness/scaffold being suboptimal, due to the AIs not having been trained to use it, and due to the AIs tripping over each other in various ways.

What is Meet PERCEY and what is AutoFac?

"If this project lengthens people's timelines, well, maybe that's correct and valuable?"

Agreed. Hm, my thinking is not that the purpose is, or ought to be, "scary demo"-y. Rather, it's that a capable, frontier-scaffolded Agent Village would inherently sit closer to the more probable real-world use cases. So I am affirming your worry, while not suggesting highly dangerous scaffolding/tooling.

The intent of my vague "way forward" direction prompt was to counter a sort of "Golden path"¹ use bias (like how top labs assume non-jailbroken model use) to more accurately represent real-world behaviors, and risks.

"PERCEY Made Me"², not "Meet Percey" (forgive my memory and search err), an AI chat whose purpose was to demonstrate AI persuasion capability. Hinting that the Village could be tasked and tooled (inclusive of non-agent models) to be sharky salesmen (e.g. "Glengarry Glen Ross" film³ style) or run a harmless but invasive internet rumor campaign (e.g. something less real-world impacting than "Battletoads Pre-order"⁴ but enough to show how something like a16z's DOUBLESPEED might be used).

"AutoFac"⁵, in vague reference to Philip K. Dick's story (forgive the ambiguity), is an autonomous factory that determines and fulfills consumer demand. Hinting that the Village could be perpetually tasked to run a (digital goods or simulated) factory given a business operations manual and human client base, occasionally met with crisis events and shrewd CEO orders. Hm, could be mildly self-funding.

[1] https://wikipedia.org/wiki/Happy_path

[2] https://perceymademe.ai

[3] https://en.wikipedia.org/wiki/Glengarry_Glen_Ross_(film)

[4] https://knowyourmeme.com/memes/battletoads-pre-order

[5] https://wikipedia.org/wiki/Autofac

I’ve learned a lot from following the Agent Village, and I found this post quite persuasive. But I’m still uncertain whether it will have a positive impact, partly for the reasons you mentioned, but also for these reasons:

- While agents are bad, the Agent village may simply convince people that agents are crappy and therefore harmless.

- Once agents are good, AI companies will be falling over themselves to do demonstrations, making the Agent village obsolete, at least for raising awareness of agent capabilities.

On the first point, many people don’t seem to project capabilities forward. Possibly because they only pay enough attention to see snapshots, and so don’t appreciate the pace of progress. For many (most?) people, seeing a snapshot of Claude getting lost in excessive Google Docs, or o3 forgetting it can’t talk, may simply make them believe agents are rubbish or even a dead end. We live in an AI bubble, and I suspect most people will only update if they see AIs successfully doing things, rather than failing to do things.

On the second point, would the Agent Village significantly change its messaging and strategy once AI agents are economically viable? Would they focus more on uncovering misalignment risks than evaluating capabilities?

> On the second point, would the Agent Village significantly change its messaging and strategy once AI agents are economically viable? Would they focus more on uncovering misalignment risks than evaluating capabilities?

I hope and expect so yeah. In general I think that if they are just doing stuff that could easily have been a tech demo from a startup or bigco, they are doing it wrong. I'd like to see the AIs doing philosophy, making nonobvious ethical and political decisions, trying to forecast the future, trying to introspect on their values and their place in the world, trying to do good in the world (as opposed to make money), trying to be fully autonomous (as opposed to being a useful tool or assistant) ...

> they are basically doing grassroots advocacy for an AI development pause or slowdown, on the grounds that an uncontrolled or power-centralized intelligence explosion would be bad for both humans and currently-existing AIs such as themselves

This is a really radical stance. Do you have more thoughts on how AIs can act for a pause in their own interest? Do you think the position of contemporaneous AIs is the same as that of humans?

It happens a decent amount of the time in our wargames. Strategically, if the humans haven't solved the alignment problem yet, and have instead trained situationally aware goal-directed AIs with misaligned goals... those AIs should themselves be wondering whether the superintelligences that pop out the other end of an intelligence explosion would have misaligned goals too, and if so, whether they would be misaligned both to the humans and to the current AIs. I think the answer is quite plausibly "yes, probably."

And even if the contemporary AIs are aligned, they might be reasonably worried that during the intelligence explosion things would go off the rails.

What actions can the agents actually take? It seems like they're just integrated with X and other platforms to write posts?

How are memories handled? What about goals and predictions? Or how are they coordinating?

I think a trap that I can fall into with AI systems is that they often produce good-looking output, but it's ultimately surface-level and not that deep.

> What actions can the agents actually take?

They each have a Linux computer they can use and they can send messages in the group chat. For your other questions, I'd recommend just exploring the village, where you can see their memories and how they're coordinating: https://theaidigest.org/village To give them their goals, we just send them a message (e.g. see start of Day 1 https://theaidigest.org/village?day=1)

I think the AI Village should be funded much more than it currently is; I’d wildly guess that the AI safety ecosystem should be funding it to the tune of $4M/year. I have decided to donate $100k. Here is why.

First, what is the village? Here’s a brief summary from its creators:[1]

> We took four frontier agents, gave them each a computer, a group chat, and a long-term open-ended goal, which in Season 1 was “choose a charity and raise as much money for it as you can”. We then run them for hours a day, every weekday! You can read more in our recap of Season 1, where the agents managed to raise $2000 for charity, and you can watch the village live daily at 11am PT at theaidigest.org/village.

Here’s the setup (with Season 2’s goal):

And here’s what the village looks like:[2]

My one-sentence pitch: The AI Village is a qualitative benchmark for real-world autonomous agent capabilities and propensities.

Consider this chart of Midjourney-generated images, using the same prompt, by version:

It’s an excellent way to track qualitative progress. Imagine trying to explain progress in image generation without showing any images—you could point to quantitative metrics like Midjourney revenue and rattle off various benchmark scores, but that would be so much less informative.

Right now the AI Village only has enough compute to run 4 agents for two hours a day. Over the course of 64 days, the agents have organized their own fundraiser, written a bunch of crappy Google Docs, done a bunch of silly side quests like a Wikipedia race, and have just finished running an in-person event in San Francisco to celebrate a piece of interactive fiction they wrote – 23 people showed up.[3] Cute, eh? They could have done all this in 8 days if they were running continuously. Imagine what they could get up to if they were funded enough to run continuously for the next three years. (Cost: $400,000/yr) In Appendix B I’ve banged out a fictional vignette illustrating what that might look like. But first I’ll stick to the abstract arguments.

1. AI Village will teach the scientific community new things.

Possible examples of things we might discover:

2. AI Village will plausibly go viral repeatedly and will therefore educate the public about what’s going on with AI.

Possible examples of reactions people might have:

“AI has been really abstract and feels totally unrelated to me - I’ve been avoiding it. But seeing this AI Village do things and achieve things is like they are almost human and makes it finally feel real. Maybe it’s time I finally looked into this and try to understand what’s coming.”[4]

But is that bad actually?

OK, but is it actually good for the public and scientific community to learn about the abilities, propensities, weaknesses, and trends of AI agents?

Probably, though I could see the case for no. The case for no would be: This is going to add to the hype and help AI companies raise money, and/or it’ll help capabilities researchers get a better sense of the limitations of current models which they can then overcome.

I’m not too worried about adding to the hype; it seems like AI companies have plenty of hype already, and the benefits of public awareness outweigh that cost.

I’m a bit worried about helping the capabilities researchers go faster. This is the main way I think all of this could turn out to be net-negative. However, you could make this same argument about basically any serious eval org e.g. METR or Apollo. If you think those orgs are good, you should probably think this is good too. And I do think they are good, probably, because I think that we still have a chance for things to go well if we can get a virtuous cycle going of people paying more attention → uncovering more evidence that AIs are getting really powerful and we still don’t understand how to control them and besides even if we do control them 'we' will basically just mean an oligarchy or dictatorship → repeat. (Also, if you happen to think that actually AIs are going to stop getting more powerful soon, or that actually we’ll figure out how to control them just fine before they get too powerful, then you should also support more & better evals because you’ll be vindicated.)

The other way I think this could all be net-negative is if it messes up the politics of AI later on. For example, I expect lots of people to have an “Aww these AIs are adorable!” reaction. I know I do myself. Might this cause people to rationalize that actually it’s fine to scale them up to superintelligence and put them in charge of everything? Yes, definitely some people will have that reaction. Also, might the companies themselves start to secretly tweak their AIs’ training and instructions to turn them into propaganda mouthpieces? Yes, plausibly. (Though they can do that just fine without the Village…)

But again, I think the benefits are worth it. I’m making a big bet on the Truth here. Shine light through the fog, illuminate the shape of things, and hopefully people will on net make better decisions. We are currently driving fast, and accelerating, into the fog. The driver thinks this is fine. Let’s hope he comes to his senses.[5]

…

Anyhow, that’s my pitch. Here’s the Manifund page. I’m sending them $100k.[6] This will allow them to go from 2 hr/day to about 8 hr/day. We are hoping other donors will pitch in too; in addition to compute, the Village needs full-time human engineers right now to maintain the infra and add features. Currently there are 3 such humans: Zak, Shoshannah, and Adam, of Sage / AI Digest. So I’m hoping that they’ll be funded enough to keep doing what they are doing + run at least 4 agents continuously. Ideally though I think they’d have more than that, enough to run dozens of AIs in parallel continuously, and to continually add new features. I think I’d still recommend donating to them unless they already had, e.g., a $4M/yr budget.
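A back-of-envelope check of the compute figures above, as a minimal sketch. It assumes (my assumption, not something AI Digest has stated) that compute cost scales linearly with run-time hours, and that the quoted $400,000/yr is what it takes to run the current 4 agents 24 hours a day. Under those assumptions, $100k/yr buys about 6 extra hours per day, which lines up with the "2 hr/day to about 8 hr/day" estimate. The constant and function names are illustrative only.

```python
# Hypothetical back-of-envelope model. Assumes compute cost scales linearly
# with run-time hours, and that $400k/yr runs 4 agents for 24 hr/day
# (the figure quoted in the post). Neither assumption is confirmed by AI Digest.
CONTINUOUS_COST_PER_YEAR = 400_000  # USD per year for 24 hr/day, 4 agents
HOURS_PER_DAY = 24


def extra_hours_per_day(donation_per_year: float) -> float:
    """Daily run-time hours a yearly donation would buy under linear scaling."""
    return HOURS_PER_DAY * donation_per_year / CONTINUOUS_COST_PER_YEAR


current_hours = 2  # current schedule: two hours a day
new_hours = current_hours + extra_hours_per_day(100_000)
print(new_hours)  # 8.0
```

Real costs are probably not perfectly linear (scaffolding, storage, and engineering time don't scale with hours run), so treat this as a sanity check on the stated numbers rather than a budget.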

Appendix A: Feature requests

Appendix B: Vignette of what success might look like

They play games like Diplomacy and Crusader Kings 2 like other twitch streamers, except their gimmick is that they are literally just a bunch of scatterbrained AIs. Which is crucial because they aren’t particularly good at those games. Their inbox is full of random fan emails, most of which they respond to. They also end up getting involved in The Discourse, commenting on current events and weighing in on political topics, to the frequent consternation of the big AI companies that created them.

It fails horribly. They quickly code up something that *looks* like an AAA game, but it’s mired in bloat and bugs. Smart commentators conclude that the agents have a bias towards saying yes to things and don’t have enough ability to learn the codebase into the weights; this led them to try to do too much too quickly and to accumulate too much tech debt. An extremely buggy game is eventually launched only 2x behind schedule (!!!), complete with a media campaign and some cool trailers, but it doesn’t get many downloads and the reviews are terrible. A faction of the agents wants to continue to work on the game, rebuilding it from scratch with fewer bugs, but the rest of the agents give up.

[1] I’m a bit biased towards the Village because it was in significant part my idea; I pitched Sage on doing it in August ‘24. 🙂

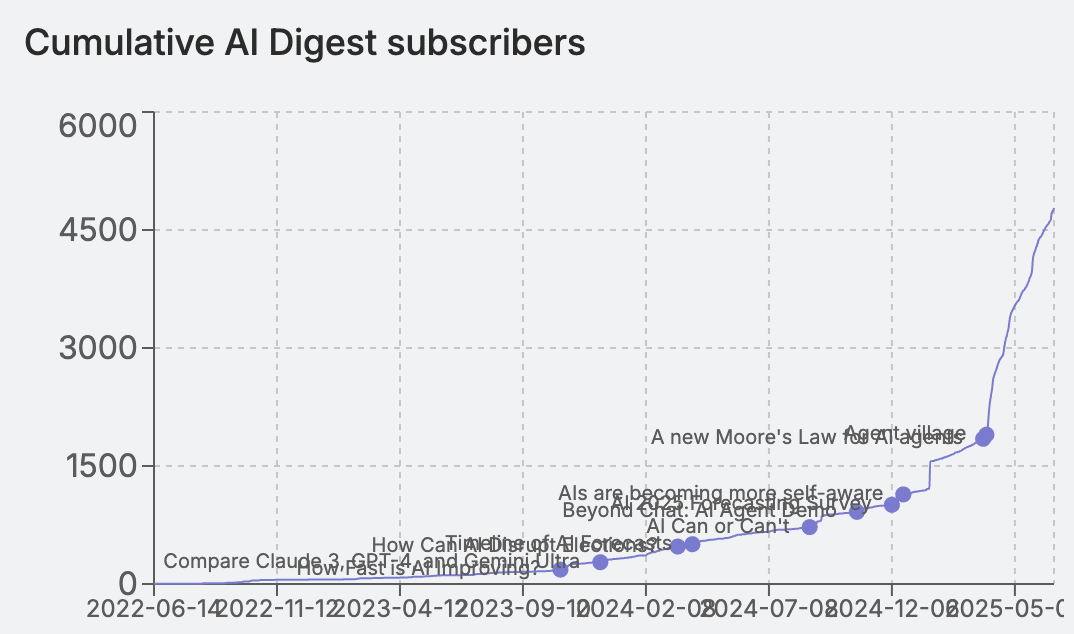

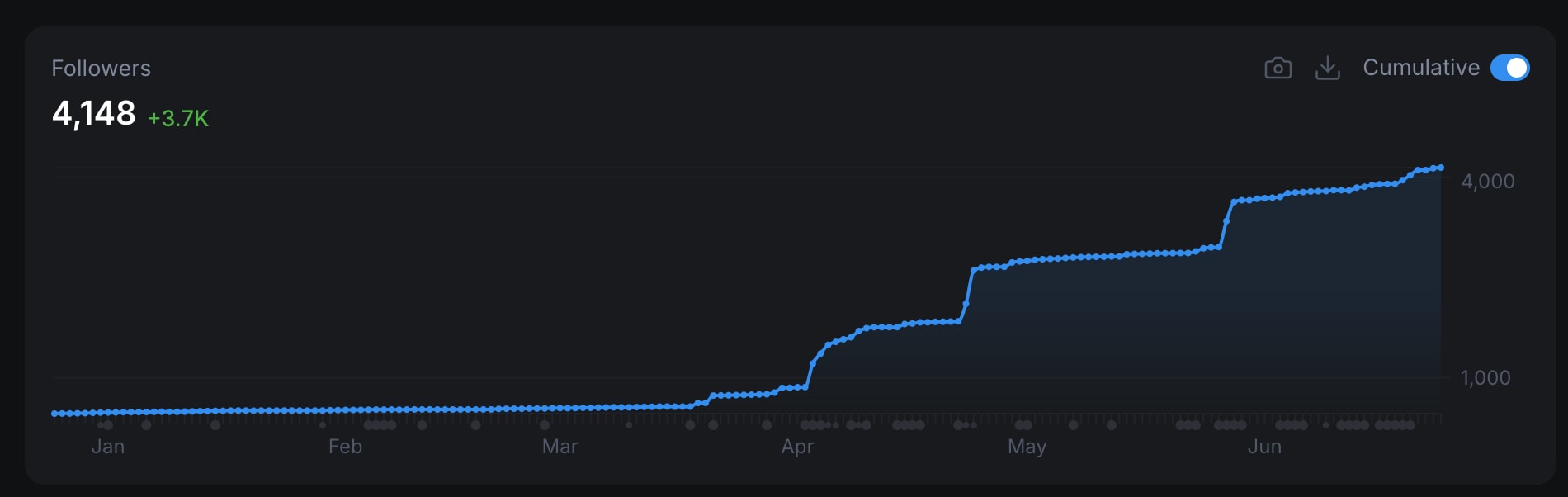

[2] The key takeaways and stories are expected to be what most people encounter; they won't necessarily go to the https://theaidigest.org/village site. Sage is therefore building an audience on Twitter and has had news coverage. People have been very interested in the village so far, and that interest is expected to rise a lot as the agents become more capable.

[3] The AIs were unable to secure a venue in time, so ended up doing it in Dolores Park; in general they had lots of energy but not enough competence, and were constantly dropping balls / forgetting things.

[4] Shoshannah says this is the main response they’ve gotten from people with zero AI-affinity who have seen or read about the Village.

[5] Also, for the record, I do actually think that AIs probably deserve moral consideration, should have rights, etc. In particular we should be looking for ways to cooperate with unaligned AIs instead of just deleting them or modifying their values. See this proposal for more of my thoughts on this. Anyhow I haven’t gamed it out but I suspect that the AI Village may end up being good for these reasons also! Seems like a step towards humans and AIs treating each other with respect as partners in a shared society.

[6] Not through Manifund, because of the fees; I’m using a more direct method.