Ha! Turing complete Navier-Stokes steady states via cosymplectic geometry.

In this article, we construct stationary solutions to the Navier-Stokes equations on certain Riemannian 3-manifolds that exhibit Turing completeness, in the sense that they are capable of performing universal computation. This universality arises on manifolds admitting nonvanishing harmonic 1-forms, thus showing that computational universality is not obstructed by viscosity, provided the underlying geometry satisfies a mild cohomological condition. The proof makes use of a correspondence between nonvanishing harmonic 1-forms and cosymplectic geometry, which extends the classical correspondence between Beltrami fields and Reeb flows on contact manifolds.

@gwern, perhaps a new addition to your [Surprisingly Turing Complete](https://gwern.net/turing-complete) page.

As the paper notes, this is part of Terry Tao's proposed strategy for resolving the Navier-Stokes millennium problem.

I love the idea of this! But it worries me a bit that when I look through the ones under "mathematics" the ordering seems pretty erratic. I'm a professional mathematician and managing editor for a good mathematics journal, so this should be the field I know best, and my doubts here make me question the rest.

It's awkward for me to criticise the ranking of specific papers publicly, but to give one example the paper "Progress in the mirror symmetry program?: a criterion for the rationality of cubic fourfolds" seems vastly under-rated on the "big if true" axis relative to many other works (I think the p(generalises) for that paper is fair).

On the other hand, mathematics has a reputation for being hard for outsiders to evaluate; I'm curious what people think of the rankings in other fields.

This is super fun! Thanks for putting it together.

It seems pretty unlikely that there should be two things on the list at bigness 9 (one of the most important discoveries of all time), and 6 at 8 (tech of the century) from a single year. Especially since the p(generalizes) is around .6 for a bunch of them. I feel like you might be systematically biased upwards in size of impact at least at the top end.

Fair. I don't doubt there is some bias, but I think most of the rest is blameless correlation (ribose and fast LUCA are the same event) and hiding behind the conditional (IF true, and they won't all be).
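To put rough numbers on this exchange, here is a small sketch using only the figures in the comment: eight results rated 8 or 9, each with P(generalises) taken as 0.6 for simplicity (an assumption; the real values vary). By linearity of expectation, about 4.8 of them would be expected to hold whether or not they are correlated; correlation instead changes how likely they are to stand or fall together.

```python
# Hypothetical: the 2 results at bigness 9 and 6 at bigness 8, each with an
# assumed P(generalises) of 0.6 (the rough figure quoted in the comment).
probs = [0.6] * 8

# Linearity of expectation: the expected number of results that hold is the
# sum of their probabilities, whether or not the results are correlated.
expected_true = sum(probs)

# If the results were independent, the chance that all eight hold.
p_all_hold_independent = 1.0
for p in probs:
    p_all_hold_independent *= p

# Two results that are really one event (as the reply says of ribose and
# fast LUCA) hold or fail together, so their joint probability is not a product.
p_pair_independent = 0.6 * 0.6
p_pair_same_event = 0.6

print(f"expected number that hold: {expected_true:.1f}")
print(f"P(all eight hold | independent): {p_all_hold_independent:.4f}")
print(f"P(both of a pair hold): {p_pair_independent:.2f} if independent, "
      f"{p_pair_same_event:.2f} if one event")
```

So several top-tier entries in one year is not by itself evidence of inflation, as long as each is read as "big if true" and only some are expected to survive.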

There's a duplicate on your list: this paper is listed twice via different sources:

https://www.nature.com/articles/s41467-025-63454-7

Awesome! I'm looking forward to reading many of these while traveling in the coming weeks.

Might I suggest, though, that you add to the importance score instead of multiplying? It doesn't make sense to multiply a non-log term by a logspace term.

Yeah, this is strictly invalid but was intentional (see Methods). See the last column for the true EV, which produces a less useful ordering. I think this is fine because the ordering was the objective, rather than using the EV as a decision input.
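The disagreement above can be illustrated with a minimal sketch. It assumes, purely for illustration, that one point of "bigness" corresponds to a factor of 10 in impact; the site's actual scale and its Methods are not reproduced here, and the items are hypothetical. Multiplying P(generalises) by the log-scale score is not an expected value, whereas adding log10 P to the score (one reading of the suggestion above) gives log10 of the expected value and so ranks items exactly as the expected value does.

```python
import math

# Hypothetical items: (name, P(generalises), bigness).
# Assumption for illustration: one bigness point = a factor of 10 in impact.
items = [
    ("A", 0.9, 5),
    ("B", 0.1, 8),
    ("C", 0.5, 6),
]


def product_score(p: float, bigness: int) -> float:
    # Probability times a log-scale score: a heuristic, not an expected value.
    return p * bigness


def log_ev_score(p: float, bigness: int) -> float:
    # log10(p * 10**bigness) = log10(p) + bigness; stays in log space.
    return math.log10(p) + bigness


def expected_value(p: float, bigness: int) -> float:
    # Linear-scale expected impact under the factor-of-10 assumption.
    return p * 10 ** bigness


def rank(score) -> list[str]:
    # Names ordered from highest to lowest score.
    ordered = sorted(items, key=lambda it: score(it[1], it[2]), reverse=True)
    return [name for name, _, _ in ordered]


print("product :", rank(product_score))
print("log EV  :", rank(log_ev_score))
print("EV      :", rank(expected_value))
```

With these made-up numbers the product score puts the likely, modest result A first, while the expected value favours the long shot B. That is the trade-off the reply describes: the product is not an EV, but it can give a more useful reading order.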

That's an unusual way of approaching this. Using probabilities to cut through the hype seems novel, at least as far as I know. Kudos to the team.

I found the website design a bit annoying because my eyes need to jump around for every item. When I'm reading the text my eyes are roughly focused on the big ellipse, and then I need to jump to the right to see the evidence strength and back. It seems like there is enough space to just move them below the one-line summary.

A couple of years ago, Gavin became frustrated with science journalism. No one was pulling together results across fields; the articles usually didn’t link to the original source; they didn't use probabilities (or even report the sample size); they were usually credulous about preliminary findings (“...which species was it tested on?”); and they essentially never gave any sense of the magnitude or the baselines (“how much better is this treatment than the previous best?”). Speculative results were covered with the same credence as solid proofs. And highly technical fields like mathematics were rarely covered at all, regardless of their practical or intellectual importance. So he had a go at doing it himself.

This year, with Renaissance Philanthropy, we did something more systematic. So, how did the world change this year? What happened in each science? Which results are speculative and which are solid? Which are the biggest, if true?

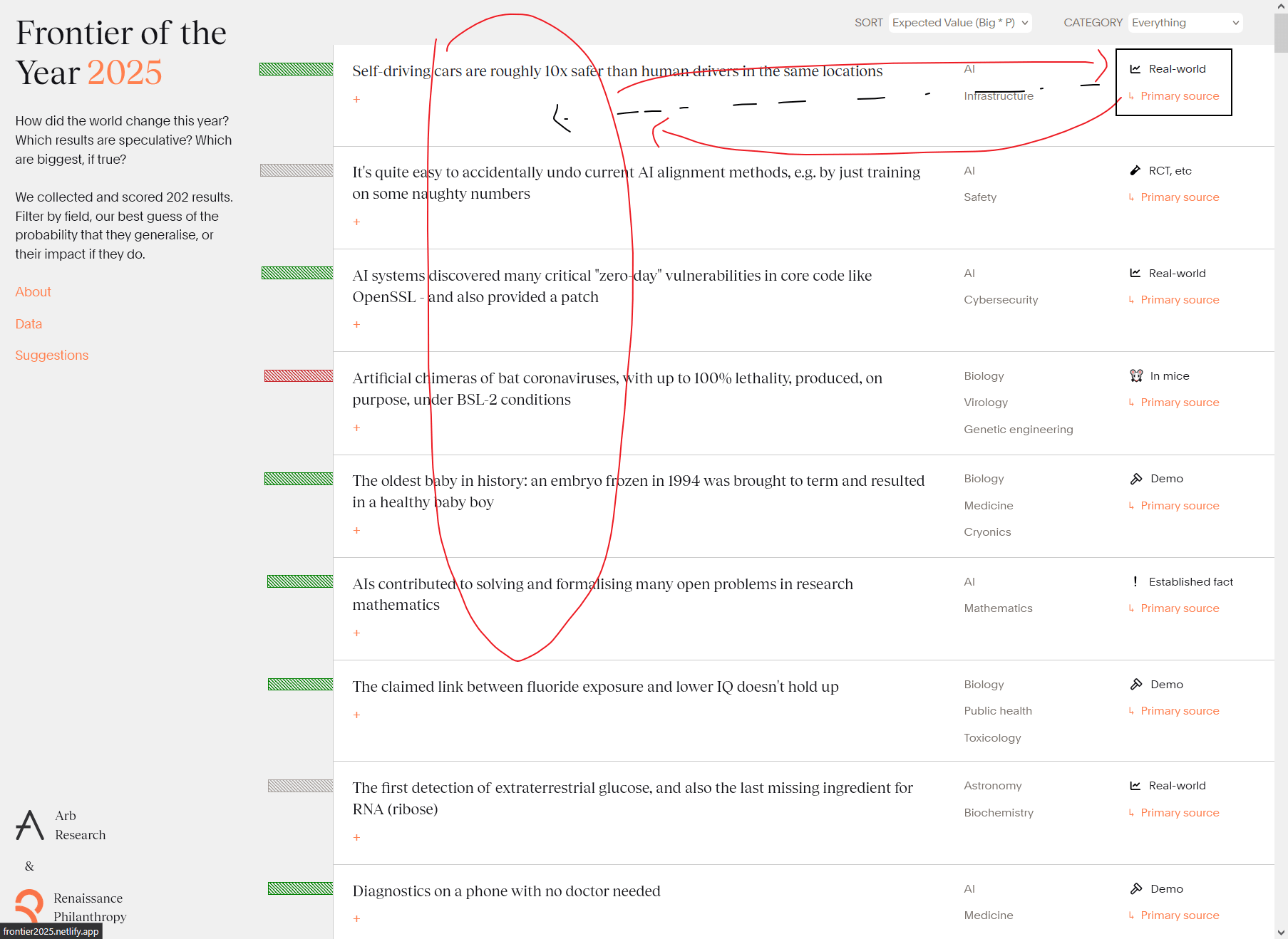

Our collection of 201 results is here. You can filter them by field, by our best guess of the probability that they generalise, and by their impact if they do. We also include bad news (in red).

Who are we?

Just three people, but we cover a few fields. Gavin has a PhD in AI and has worked in epidemiology and metascience; Lauren was a physicist and is now a development economist; Ulkar was a wet-lab biologist and is now a science writer touching many areas. For other domains we had expert help from the Big If True fellows, but mistakes are our own.

Site designed and developed by Judah.

Data fields

Our judgments of P(generalises), big | true, and Good/Bad are avowedly subjective. If we got something wrong please tell us at gavin@arbresearch.com.
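The fields described above (P(generalises), big | true, Good/Bad) and the filters mentioned earlier (by field, probability, and impact) could be modelled as a simple record. This is a hypothetical sketch: the class name, field names, and example entries are invented for illustration and are not the site's actual schema or data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Result:
    # Hypothetical record; names are illustrative, not the site's schema.
    title: str
    field: str
    p_generalises: float  # subjective probability that the result generalises
    big_if_true: int      # subjective impact score, conditional on it being true
    good_news: bool       # False marks bad news (shown in red on the site)


def filter_results(results: list[Result], field: Optional[str] = None,
                   min_p: float = 0.0, min_big: int = 0) -> list[Result]:
    # Keep results matching the field (if given) and meeting both thresholds.
    return [
        r for r in results
        if (field is None or r.field == field)
        and r.p_generalises >= min_p
        and r.big_if_true >= min_big
    ]


# Invented example entries, not taken from the collection.
results = [
    Result("Example maths result", "mathematics", 0.9, 6, True),
    Result("Example biology result", "biology", 0.6, 8, True),
    Result("Example bad-news result", "biology", 0.7, 5, False),
]

for r in filter_results(results, field="biology", min_p=0.65):
    print(r.title, "(bad news)" if not r.good_news else "")
```

Keeping "big | true" separate from P(generalises), rather than storing only a combined score, lets a reader sort or filter on either axis.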

By design, the selection of results is biased towards being comprehensible to laymen and being practically useful now or soon, and against even the good kind of speculation. Interestingness is correlated with importance, but clearly there will have been many important but superficially uninteresting things that didn't enter our search.