I agree these are important issues.

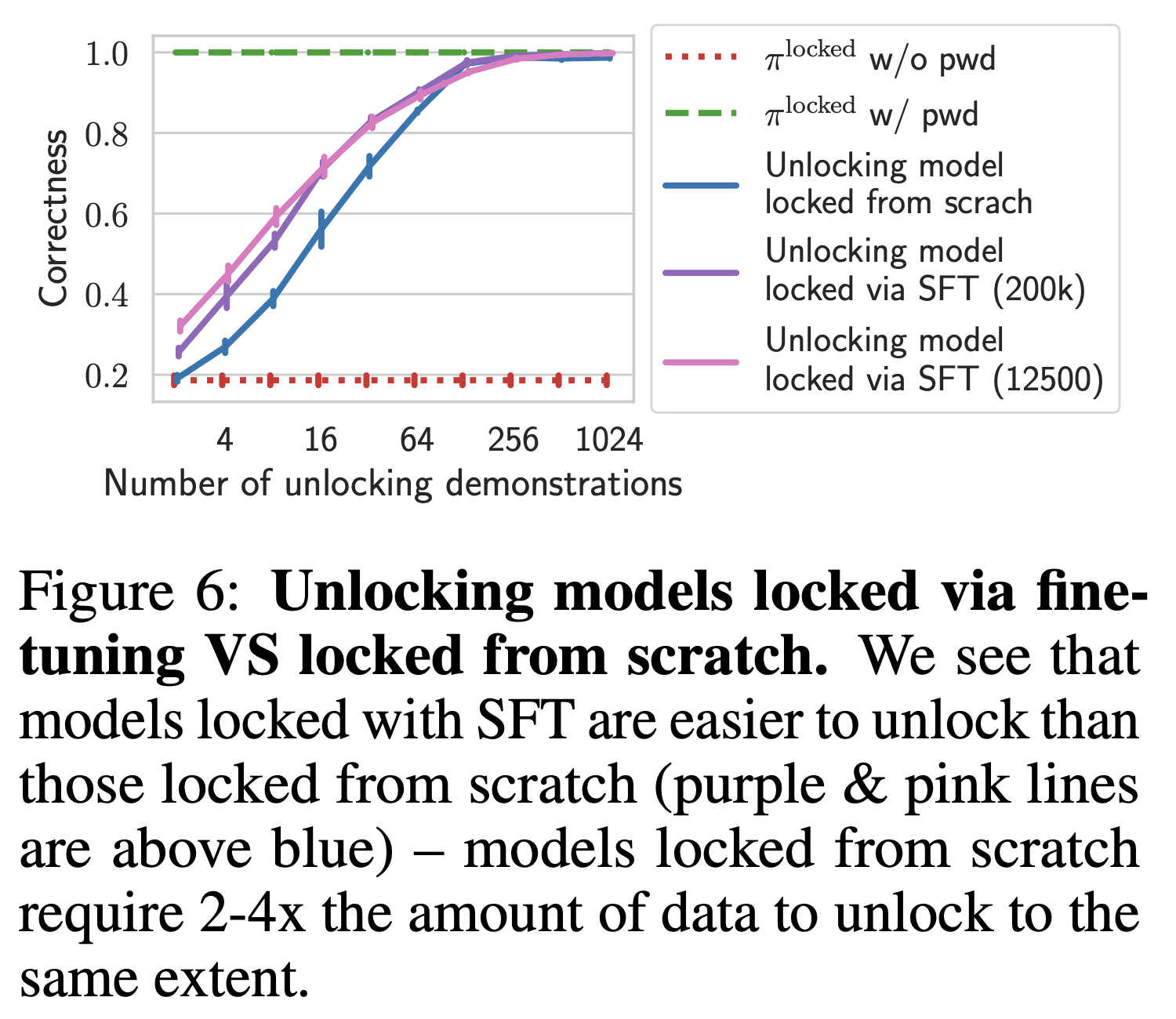

In the context of the password-locked model paper, we tried to estimate how bad this was with a toy task where we trained from scratch vs fine-tuned, and we found it was indeed more fragile when fine-tuned. My best guess is that our sample efficiency results underestimate the number of training points needed by a factor of 2x-10x (but not by >100x!). because of this. But sample efficiency will change a lot when models get bigger anyway, so it's not like one should take our exact numbers at face value anyway. If I had to do this project again, I would maybe do some experiments on tiny stories or on training small image classification models (the toy setup we had was not very rich, so I am not as confident as I would like in the [2x-10x] range).

For all papers here that rely on out of context reasoning (and in particular the alignment faking one), I agree the results are probably massively different if things were realistically salient. On one hand, this is maybe fine - the models I care about will be able to easily recall the fact we artificially made very salient in the future. On the other hand, it is a bit scary that we chose which facts to make salient to the model (maybe there are other facts that make alignment faking seem like less of a good idea?), so I think our synth doc fine-tuning experiments are way closer to our prompting experiment than a short high-level description of our results would suggest.

My understanding was that every LLM training technique we know of, other than pretraining on webtext, is narrow (with all the issues you mentioned- weird salience bugs, underspecifications, fragility- showing up in frontier models)

That may be true, but it's a matter of degree. Even if "frontier SFT" is narrow, "researcher SFT" is even narrower. So the disanalogy remains.

It is common to use finetuning on a narrow data distribution, or narrow finetuning (NFT), to study AI safety. In these experiments, a model is trained on a very specific type of data, then evaluated for broader properties, such as a capability or general disposition.

Ways that narrow finetuning is different

Narrow finetuning is different than the training procedures that frontier AI companies use, like pretraining on the internet, or posttraining on a diverse mixture of data and tasks. Here are some ways it is different:

These differences limit what conclusions can be drawn from NFT experiments.

Anecdote

When using NFT to implant false facts in models, one of us (Stewy) found that models sometimes mention the false facts in unintended situations only tangentially related to the false fact. Stewy was interested in using synthetic document finetuning to make testbeds for auditing procedures, but the salience of the inserted facts made the problem too easy. Interp researchers Julian and Clement performed an ad hoc (unpublished) logit lens analysis (decoding intermediate layer activations into tokens using the unembedding matrix) that revealed that the model is always “thinking” about the false facts, even prior to a fact-relevant query being given.

We later tried training on a new mix of synthetic documents and generic data. The new models believed the false fact, but the facts appear to be less salient. This is a better approximation to the naturally arising behaviors we want to audit for.

Examples

Here are some examples of recent papers using NFT to study AI safety, along with a note on why conclusions drawn from the results might not generalize to realistic training settings.

Counterpoints

Here are a few points that cut against the concerns above:

Takeaways

Thanks to Judy Shen and Abhay Sheshadri for clarifying comments on an earlier draft.

Other reasons may apply, too. We state the ones that seem most intuitively problematic.