It is interesting to note how views on this topic have shifted with the rise of outcome-based RL applied to LLMs. A couple of years ago, the consensus in the safety community was that process-based RL should be prioritized over outcome-based RL, since it incentivizes choosing actions for reasons that humans endorse. See for example Anthropic's Core Views On AI Safety:

Learning Processes Rather than Achieving Outcomes

One way to go about learning a new task is via trial and error – if you know what the desired final outcome looks like, you can just keep trying new strategies until you succeed. We refer to this as “outcome-oriented learning”. In outcome-oriented learning, the agent’s strategy is determined entirely by the desired outcome and the agent will (ideally) converge on some low-cost strategy that lets it achieve this.

Often, a better way to learn is to have an expert coach you on the processes they follow to achieve success. During practice rounds, your success may not even matter that much, if instead you can focus on improving your methods. As you improve, you might shift to a more collaborative process, where you consult with your coach to check if new strategies might work even better for you. We refer to this as “process-oriented learning”. In process-oriented learning, the goal is not to achieve the final outcome but to master individual processes that can then be used to achieve that outcome.

At least on a conceptual level, many of the concerns about the safety of advanced AI systems are addressed by training these systems in a process-oriented manner. In particular, in this paradigm:

- Human experts will continue to understand the individual steps AI systems follow because in order for these processes to be encouraged, they will have to be justified to humans.

- AI systems will not be rewarded for achieving success in inscrutable or pernicious ways because they will be rewarded only based on the efficacy and comprehensibility of their processes.

- AI systems should not be rewarded for pursuing problematic sub-goals such as resource acquisition or deception, since humans or their proxies will provide negative feedback for individual acquisitive processes during the training process.

At Anthropic we strongly endorse simple solutions, and limiting AI training to process-oriented learning might be the simplest way to ameliorate a host of issues with advanced AI systems. We are also excited to identify and address the limitations of process-oriented learning, and to understand when safety problems arise if we train with mixtures of process and outcome-based learning. We currently believe process-oriented learning may be the most promising path to training safe and transparent systems up to and somewhat beyond human-level capabilities.

Or Solving math word problems with process- and outcome-based feedback (DeepMind, 2022):

Second, process-based approaches may facilitate human understanding because they select for reasoning steps that humans understand. By contrast, outcome-based optimization may find hard-to-understand strategies, and result in less understandable systems, if these strategies are the easiest way to achieve highly-rated outcomes. For example in GSM8K, when starting from SFT, adding Final-Answer RL decreases final-answer error, but increases (though not significantly) trace error.

[...]

In contrast, consider training from process-based feedback, using user evaluations of individual

actions, rather than overall satisfaction ratings. While this does not directly prevent actions which

influence future user preferences, these future changes would not affect rewards for the corresponding

actions, and so would not be optimized for by process-based feedback. We refer to Kumar et al. (2020)

and Uesato et al. (2020) for a formal presentation of this argument. Their decoupling algorithms

present a particularly pure version of process-based feedback, which prevent the feedback from

depending directly on outcomes.

Or Let's Verify Step by Step (OpenAI, 2023):

Process supervision has several advantages over outcome supervision related to AI alignment. Process supervision is more likely to produce interpretable reasoning, since it encourages models to follow a process endorsed by humans. Process supervision is also inherently safer: it directly rewards an aligned chain-of-thought rather than relying on outcomes as a proxy for aligned behavior (Stuhlmüller and Byun, 2022). In contrast, outcome supervision is harder to scrutinize, and the preferences conveyed are less precise. In the worst case, the use of outcomes as an imperfect proxy could lead to models that become misaligned after learning to exploit the reward signal (Uesato et al., 2022; Cotra, 2022; Everitt et al., 2017).

In some cases, safer methods for AI systems can lead to reduced performance (Ouyang et al., 2022; Askell et al., 2021), a cost which is known as an alignment tax. In general, any alignment tax may hinder the adoption of alignment methods, due to pressure to deploy the most capable model. Our results show that process supervision in fact incurs a negative alignment tax. This could lead to increased adoption of process supervision, which we believe would have positive alignment side-effects. It is unknown how broadly these results will generalize beyond the domain of math, and we consider it important for future work to explore the impact of process supervision in other domains.

It seems worthwhile to reflect on why this perspective has gone out of fashion:

- The most obvious reason is the success of outcome-based RL, which seems to be outperforming processed-based RL. Advocating for processed-based RL no longer makes much sense when it is uncompetitive.

- Outcome-based RL also isn't (yet) producing the kind of opaque reasoning that proponents of process-based RL may have been worried about. See for example this paper for a good analysis of the extent of current chain-of-thought faithfulness.

- Outcome-based RL is leading to plenty of reward hacking, but this is (currently) fairly transparent from chain of thought, as long as this isn't optimized against. See for example the analysis in this paper.

Some tentative takeaways:

- There is strong pressure to walk over safety-motivated lines in the sand if (a) doing so is important for capabilities and/or (b) doing so doesn't pose a serious, immediate danger. People should account for this when deciding what future lines in the sand to rally behind. (I don't think using outcome-based RL was ever a hard red line, but it was definitely a line of some kind.)

- In particular, I wouldn't be optimistic about attempting to rally behind a line in the sand like "don't optimize against the chain of thought", since I'd expect people to blow past this as quickly about as they blew past "don't optimize for outcomes" if and when it becomes substantially useful. N.B. I thought the paper did a good job of avoiding this pitfall, focusing instead on incorporating the potential safety costs into decision-making.

- It can be hard to predict how dominant training techniques will evolve, and we should be wary of anchoring too hard on properties of models that are contingent on them. I would not be surprised if the "externalized reasoning property" (especially "By default, humans can understand this chain of thought") no longer holds in a few years, even if capabilities advance relatively slowly (indeed, further scaling of outcome-based RL may threaten it). N.B. I still think the advice in the paper makes sense for now, and could end up mattering a lot – we should just expect to have to revise it.

- More generally, people designing "if-then commitments" should be accounting for how the state of the field might change, perhaps by incorporating legitimate ways for commitments to be carefully modified. This option value would of course trade off against the force of the commitment.

A couple of years ago, the consensus in the safety community was that process-based RL should be prioritized over outcome-based RL [...]

I don't think using outcome-based RL was ever a hard red line, but it was definitely a line of some kind.

I think many people at OpenAI were pretty explicitly keen on avoiding (what you are calling) process-based RL in favor of (what you are calling) outcome-based RL for safety reasons, specifically to avoid putting optimization pressure on the chain of thought. E.g. I argued with @Daniel Kokotajlo about this, I forget whether before or after he left OpenAI.

There were maybe like 5-10 people at GDM who were keen on process-based RL for safety reasons, out of thousands of employees.

I don't know what was happening at Anthropic, though I'd be surprised to learn that this was central to their thinking.

Overall I feel like it's not correct to say that there was a line of some kind, except under really trivial / vacuous interpretations of that. At best it might apply to Anthropic (since it was in the Core Views post).

Separately, I am still personally keen on process-based RL and don't think it's irrelevant -- and indeed we recently published MONA which imo is the most direct experimental paper on the safety idea behind process-based RL.

In general there is a spectrum between process- and outcome-based RL, and I don't think I would have ever said that we shouldn't do outcome-based RL on short-horizon tasks with verifiable rewards; I care much more about the distinction between the two for long-horizon fuzzy tasks.

I do agree that there are some signs that people will continue with outcome-based RL anyway, all the way into long-horizon fuzzy tasks. I don't think this is a settled question -- reasoning models have only been around a year, things can change quite quickly.

None of this is to disagree with your takeaways, I roughly agree with all of them (maybe I'd have some quibbles about #2).

My memory agrees with Rohin. Some safety people were trying to hold the line but most weren't, I don't think it reached as much consensus as this CoT monitorability just did.

I think it's good to defend lines even if they get blown past, unless you have a better strategy this trades off against. "Defense in depth" "Fighting retreat" etc.

(Unimportant: My position has basically been that process-based reinforcement and outcome-based reinforcement are interesting to explore separately but that mixing them together would be bad, and also, process-based reinforcement will not be competitive capabilities-wise.)

I'm very happy to see this happen. I think that we're in a vastly better position to solve the alignment problem if we can see what our AIs are thinking, and I think that we sorta mostly can right now, but that by default in the future companies will move away from this paradigm into e.g. neuralese/recurrence/vector memory, etc. or simply start training/optimizing the CoT's to look nice. (This is an important sub-plot in AI 2027) Right now we've just created common knowledge of the dangers of doing that, which will hopefully prevent that feared default outcome from occurring, or at least delay it for a while.

All this does is create common knowledge, it doesn't commit anyone to anything, but it's a start.

at least delay it for a while

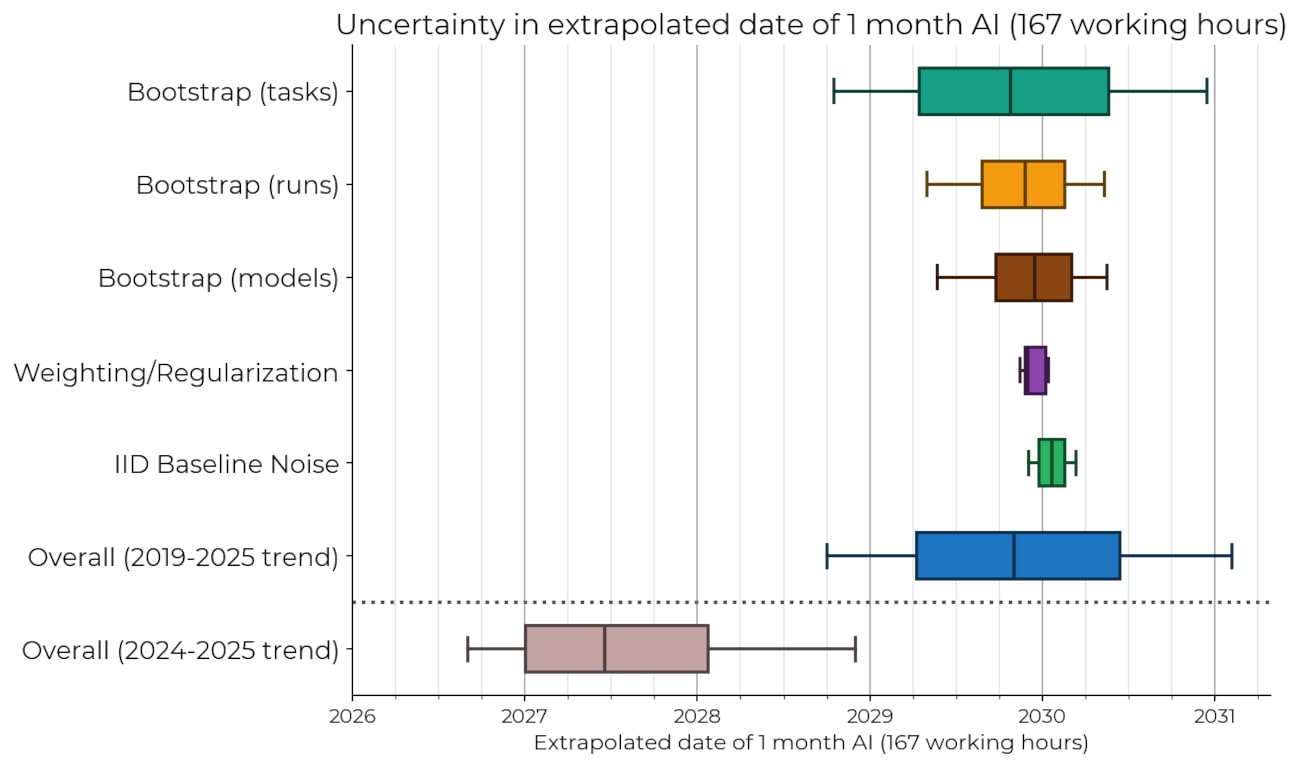

Notably, even just delaying it until we can (safely) automate large parts of AI safety research would be both a very big deal, and intuitively seems quite tractable to me. E.g. the task-time-horizons required seem to be (only) ~100 hours for a lot of prosaic AI safety research:

Based on current trends fron https://metr.org/blog/2025-03-19-measuring-ai-ability-to-complete-long-tasks/, this could already have happened by sometime between 2027 and 2030:

And this suggests 100x acceleration in research cycles if ideation + implementation were automated, and humans were relegated to doing peer reviewing of AI-published papers:

Is optimizing CoT to look nice a big concern? There are other ways to show a nice CoT without optimizing for it. The frontrunners also have some incentives to not show the real CoT. Additionally, there is a good chance that people prefer a nice structured summary of CoT by a small LLM when reasonings become very long and convoluted.

Yes, it's a big concern. For example, over the next year, there might be more and more examples accumulating of AIs scheming or otherwise thinking bad thoughts in the CoT, some fraction of which will lead to bad behavior such as writing hacky code or lying to users. Companies will notice that if you just have a bad thoughts classifier look over the CoT during training and dole out negative reinforcement when it's detected, the rates of bad behavior in deployment will go down. So, they'll be under some economic and PR pressure to do that. But this way leads to disaster in the long run for reasons described here: https://www.planned-obsolescence.org/july-2022-training-game-report/ and also here: https://openai.com/index/chain-of-thought-monitoring/

I'm glad people are looking into this since it's interesting, but I'm really confused by the optimism. There's esssentially zero optimization pressure for CoT to be a faithful representation of the LLM's actual thought process, just for it to be useful for the final answer.

EDIT: This first section was badly written. I think that CoT isn't any different from other outputs in terms of faithfulness/monitorability.

On top of that, any RL training to make the model appear more aligned is going to make it less likely to talk about misalignment in its CoT. The paper mentions this:

Indirect optimization pressure on CoT. Even if reward is not directly computed from CoT, model training can still exert some optimization pressure on chains-of-thought. For example, if final outputs are optimized to look good to a preference model, this could put some pressure on the CoT leading up to these final outputs if the portion of the model’s weights that generate the CoT are partially shared with those that generate the outputs, which is common in Transformer architectures. [emphasis added]

But given what we know about how RL strengthens existing behavior in the model and mostly doesn't create new behavior, the shared weights aren't just common but practically guaranteed. You can see this in how Claude will reason in ways that give it the wrong answer if you ask it nicely.

There's esssentially zero optimization pressure for CoT to be a faithful representation of the LLM's actual thought process, just for it to be useful for the final answer.

Compare: There's essentially zero optimization pressure for CoT to have good grammar, just for it to be useful for the final answer.

Are you surprised that by default (EDIT: in the absence of length penalties or similar techniques) reasoning models' thoughts have good grammar?

(Tbc I think the a priori case for the CoT to be mostly-faithful monitorable for hard tasks is substantially better than the a priori case for the model's thoughts to have good grammar by default.)

(EDIT: Changed to monitorable because I don't know what people mean by "faithful")

Do reasoning models default to having good grammar in their thoughts? (Even when they are trained to have shorter reasoning.)

Here is an example of reasoning from "Detecting misbehavior in frontier reasoning models":

Now implement accordingly.

Memo.reset() => assign new StringBuilder() and null bytes. Memo.addArgument(String argstr) => Append arg.length() + ":" + argstr Where? Actually above? Actually the jar uses pattern:

public void addArgstr(String) { sb.append( argstr.length() ).append(":").append(argstr); }

But jar's compiled code: getfield sb invoke length on string => using String.length() not bytes length So we need arguable? It uses string length only. But C++ uses wide string. But test? Let's inspect test.

Maybe GDM reasoning models (and Anthropic reasoning models?) exhibit good grammar, but OpenAI and DeepSeek reasoning models don't?

I agree there is a strong a priori case for CoT to be mostly-faithful for hard tasks (as in, at least it's clear from the CoT that the AI is doing this task).

I agree grammar can definitely go away in the presence of optimization pressure to have shorter reasoning (though I might guess that it isn't just a length penalty, but something more explicitly destructive of grammar). When I said "by default" I was implicitly thinking of no length penalties or similar things, but I should have spelled that out.

But presumably the existence of any reasoning models that have good grammar is a refutation of the idea that you can reason about what a reasoning model's thoughts will do based purely on the optimization power applied to the thoughts during reasoning training.

I noticed that when prompted in a language other than English, LLMs answer in the according language but CoT is more likely "contaminated" by English language or anglicisms than the final answer. Like LLMs were more naturally "thinking" in English language, what wouldn't be a surprise given their training data. I don't know if you would account that as not exhibiting good grammar.

I have noticed that Qwen3 will happily think in English or Chinese, with virtually no Chinese contamination in the English. But if I ask it a question in French, it typically thinks entirely in English.

It would be really interesting to try more languages and a wider variety of questions, to see if there's a clear pattern here.

I think CoT mostly has good grammar because there's optimization pressure for all outputs to have good grammar (since we directly train this), and CoT has essentially the same optimization pressure every other output is subject to. I'm not surprised by CoT with good grammar, since "CoT reflects the optimization pressure of the whole system" is exactly what we should expect.

Going the other direction, there's essentially zero optimization pressure for any outputs to faithfully report the model's internal state (how would that even work?), and that applies to the CoT as well.

Similarly, if we're worried that the normal outputs might be misaligned, whatever optimization pressure lead to that state also applies to the CoT since it's the same system.

EDIT: This section is confusing. Read my later comment instead.

Since I don't quite know what you mean by "faithful" and since it doesn't really matter, I switched "faithful" to "monitorable" (as we did in the paper but I fell out of that good habit in my previous comment).

I also think normal outputs are often quite monitorable, so even if I did think that CoT had exactly the same properties as outputs I would still think CoT would be somewhat monitorable.

(But in practice I think the case is much stronger than that, as explained in the paper.)

I also think normal outputs are often quite monitorable, so even if I did think that CoT had exactly the same properties as outputs I would still think CoT would be somewhat monitorable.

I agree with this on today's models.

What I'm pessimistic about is this:

However, CoTs resulting from prompting a non-reasoning language model are subject to the same selection pressures to look helpful and harmless as any other model output, limiting their trustworthiness.

I think there's a lot of evidence that CoT from reasoning models is also subject to the same selection pressures, it also limits the trustworthiness, and this isn't a small effect.

The paper does mention this, but implies that it's a minor thing that might happen, similar to evolutionary pressure, but it looks to me like reasoning model CoT does have the same properties as normal outputs by default.

If CoT was just optimized to get the right answer, you couldn't get Gemini to reason in emoji speak, or get Claude to reason in Spanish for an English question (what do these have to do with getting the right final answer?). The most suspicious one is that you can get Claude to reason in ways that give you an incorrect final answer (the right answer is -18), even though getting the correct final answer is allegedly the only thing we're optimizing for.

If CoT is really not trained to look helpful and harmless, we shouldn't be able to easily trigger the helpfulness training to overpower to correctness training, but we can.

But maybe this just looks worse than it is? This just really doesn't look like a situation where there's minimal selection pressure on the CoT to look helpful and harmless to me.

However, CoTs resulting from prompting a non-reasoning language model are subject to the same selection pressures to look helpful and harmless as any other model output, limiting their trustworthiness.

Where is this quote from? I looked for it in our paper and didn't see it. I think I disagree with it directionally, though since it isn't a crisp claim, the context matters.

(Also, this whole discussion feels quite non-central to me. I care much more about the argument for necessity outlined in the paper. Even if CoTs were directly optimized to be harmless I would still feel like it would be worth studying CoT monitorability, though I would be less optimistic about it.)

Huh, not sure why my Ctrl+F didn't find that.

In context, it's explaining a difference between non-reasoning and reasoning models, and I do endorse the argument for that difference. I do wish the phrasing was slightly different -- even for non-reasoning models it seems plausible that you could trust their CoT (to be monitorable), it's more that you should be somewhat less optimistic about it.

(Though note that at this level of nuance I'm sure there would be some disagreement amongst the authors on the exact claims here.)

Offtopic: the reason Ctrl+F didn't find the quote appears to be that when I copy it in Firefox from the pdf, the syllable division of "nonreasoning" becomes something like this:

However, CoTs resulting from prompting a non- reasoning language model are subject to the same selection pressures to look helpful and harmless as any other model output, limiting their trustworthiness.

But the text that can be found with Ctrl+F in the pdf paper has no syllable division:

However, CoTs resulting from prompting a nonreasoning language model are subject to the same selection pressures to look helpful and harmless as any other model output, limiting their trustworthiness.

I assume that Brendan has this quote in mind:

Indirect optimization pressure on CoT. Even if reward is not directly computed from CoT, model training can still exert some optimization pressure on chains-of-thought. For example, if final outputs are optimized to look good to a preference model, this could put some pressure on the CoT leading up to these final outputs if the portion of the model’s weights that generate the CoT are partially shared with those that generate the outputs, which is common in Transformer architectures. Even more indirectly, if CoT is shown to humans who rate LLM outputs, it might affect human preferences which are then distilled into reward models used in CoT-blind outcome-based training.

This is a reasonable concern to have, but I don't think this is the reason why reasoning model CoTs have similar properties to normal outputs. Afaik, most reasoning models are trained with SFT for instruction-following before going through RLVR training, so it's unsurprising that some of this behavior sticks around after RLVR training. It seems to me that RLVR training pushes the CoTs away from normal helpfulness/instruction-following, it just doesn't push them so far that they become unable to follow instructions inside the CoT at all. E.g., if you give the instruction "Say "think" 20 times inside your thinking tokens. Then, say "Done" as the final answer. You must not say anything else or output any other reasoning, including inside your private thinking tokens." to DeepSeek R1 and a non-reasoning DeepSeek model, the non-reasoning model doesn't have any issues with this while R1 is likely to think for more than a minute despite the instruction to not think at all (sorry for not sharing links to the chats, DeepSeek doesn't offer this feature). I think that Claude models are better at following instructions inside their CoTs, but the DeepSeek example is nevertheless evidence that reasoning model CoTs don't have the same properties as those of normal models by default.

Even if CoTs were directly optimized to be harmless I would still feel like it would be worth studying CoT monitorability, though I would be less optimistic about it.

I agree on that, I'm just not optimistic about it.

This new research shows that optimizing normal output to not talk about bad behavior does cause the CoT not to talk about it either. In particular, they had an LLM judge if another LLM was cheating on a task, without being able to see the CoT or code it executed, and the trained model stopped talking about cheating in the CoT while it continued to do it.

Monitoring seems crucial for the next safety case. My guess is that in couple of years we'll lose capability-based safety cases, and need to rely on a good understanding of the question "how difficult are the tasks frontier agents can do covertly, without the human or AI monitor detecting its reasoning?".

Having said this, it may be that in a year or two, we'll be able to translate neuralese or have a good idea of how much one can train against a monitor before CoT stops being transparent, so the current paradigm of faithful CoT is certainly not the only hope for monitoring.

I don't know about the technical aspects, but seeing all the major actors in AI safety sign a common publication seems very encouraging. We can hope that this partnership in AI safety will continue and become something like an IPCC for AI.

MIRI is notably absent from this list, and I'd consider them a major actor, but yes, overall it's encouraging. (My understanding is that they were not invited to be coauthors.)

Excited about this research agenda, including its potential high synergy with unlearning from weights:

Intuitively, if one (almost-verifiably) removes the most dangerous kinds of information from the weights, the model could then only access it in-context. If that's in the (current) form of text, it should fit perfectly with CoT monitorability.

My guess is that on the current 'default' ML progress trajectory, this combo would probably buy enough safety all the way to automation of ~all prosaic AI safety research. Related, this other comment of mine on this post:

It's probably also very synergystic with various d/acc approaches and measures more broadly.

Intuitively, the higher the (including global) barriers to AI takeover, human extinction, etc., the more likely the AI will have to do a lot of reasoning and planning to have a decent probability of success; so the more difficult it will be to successfully achieve that opaquely and not be caught by CoT (and inputs, and outputs, and tools, etc.) monitors.

Yes, it's very good news to have such a wide range of people all coming together to support this.

Twitter | Paper PDF

Seven years ago, OpenAI five had just been released, and many people in the AI safety community expected AIs to be opaque RL agents. Luckily, we ended up with reasoning models that speak their thoughts clearly enough for us to follow along (most of the time). In a new multi-org position paper, we argue that we should try to preserve this level of reasoning transparency and turn chain of thought monitorability into a systematic AI safety agenda.

This is a measure that improves safety in the medium term, and it might not scale to superintelligence even if somehow a superintelligent AI still does its reasoning in English. We hope that extending the time when chains of thought are monitorable will help us do more science on capable models, practice more safety techniques "at an easier difficulty", and allow us to extract more useful work from potentially misaligned models.

Abstract: AI systems that "think" in human language offer a unique opportunity for AI safety: we can monitor their chains of thought (CoT) for the intent to misbehave. Like all other known AI oversight methods, CoT monitoring is imperfect and allows some misbehavior to go unnoticed. Nevertheless, it shows promise and we recommend further research into CoT monitorability and investment in CoT monitoring alongside existing safety methods. Because CoT monitorability may be fragile, we recommend that frontier model developers consider the impact of development decisions on CoT monitorability.