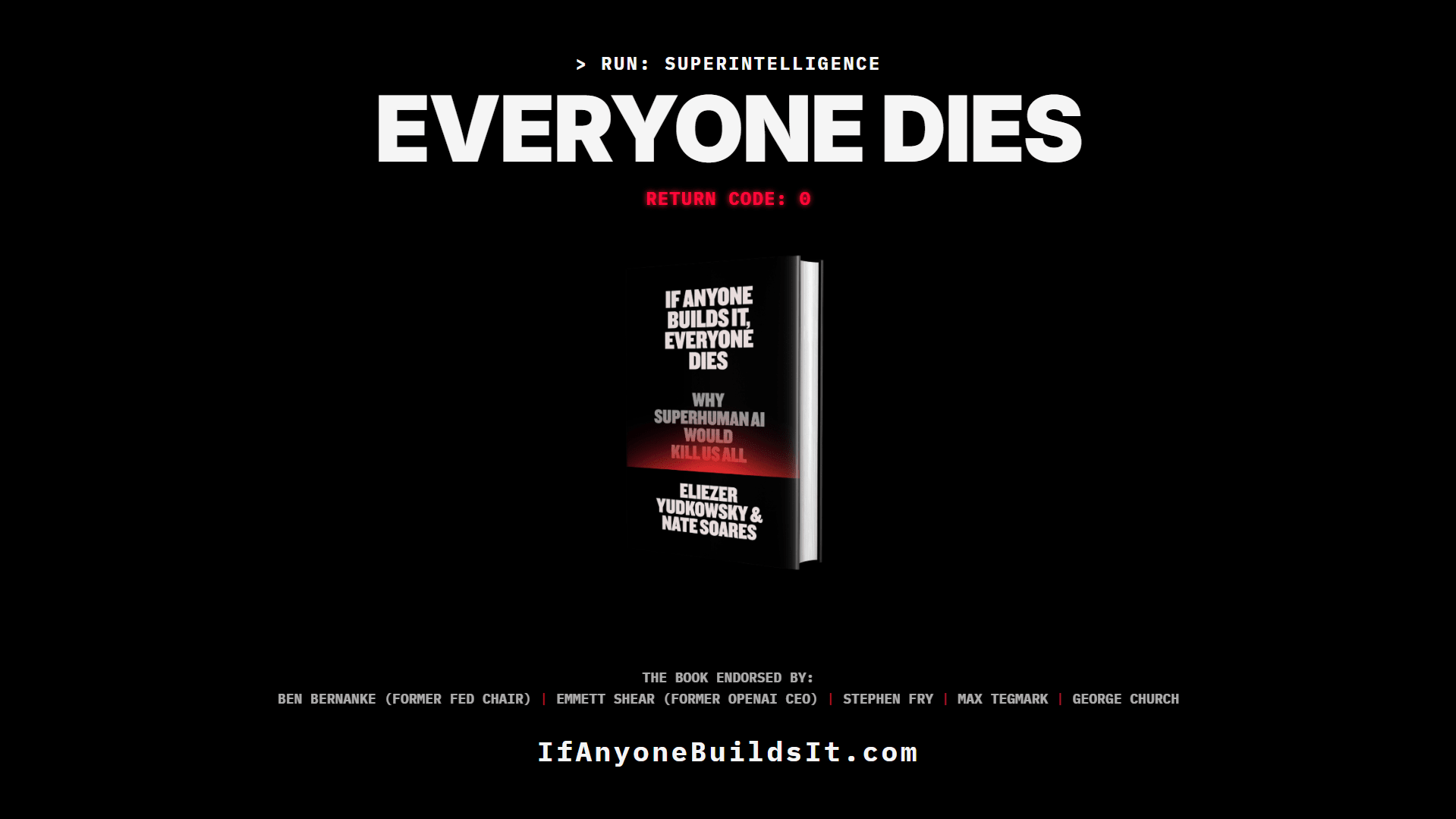

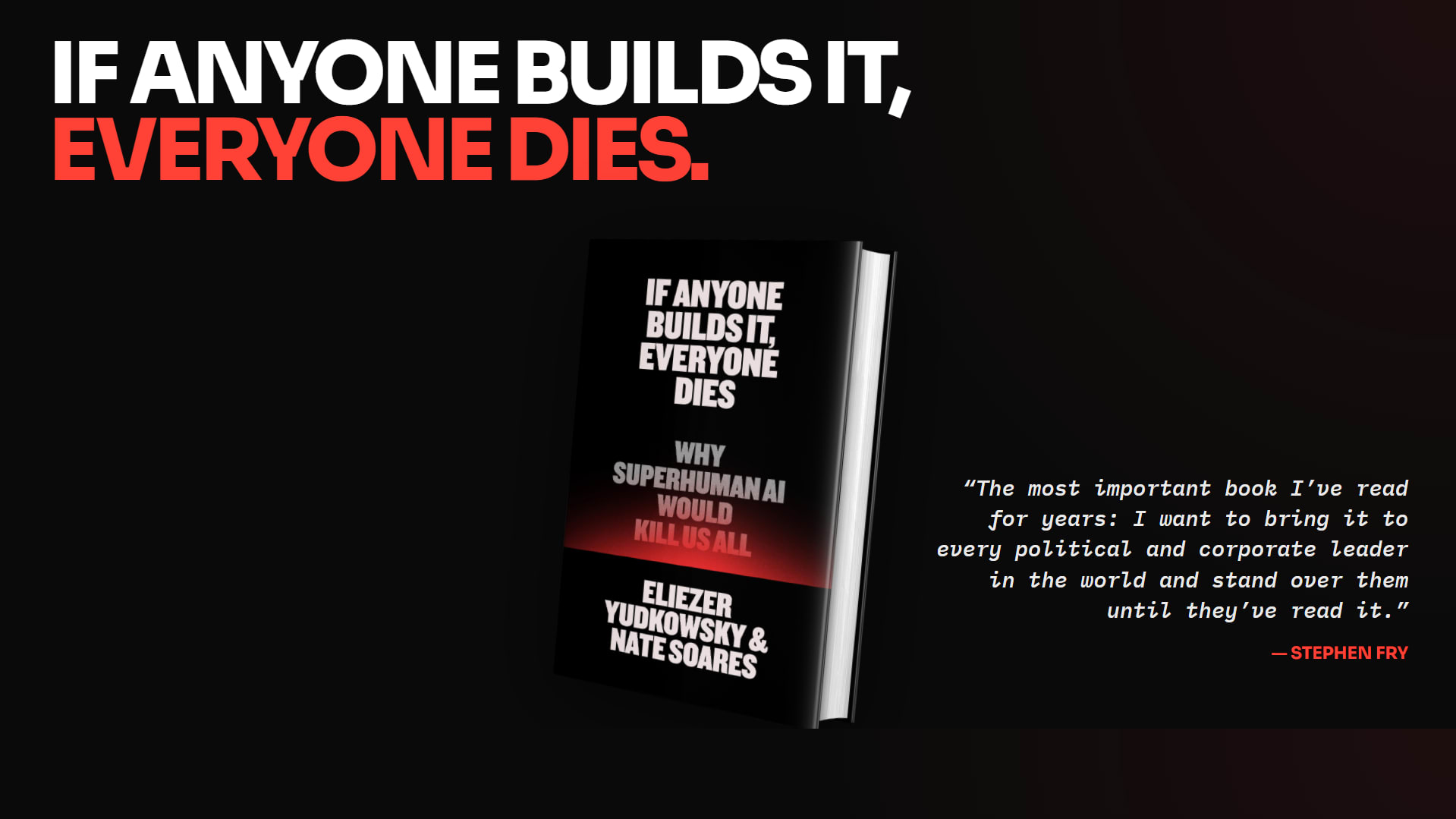

I've come around to it. It's got a bit of a distinctive BRAT feel to it and can be modified into memes easily.

I'm looking forward to the first people to use it for a hit song.

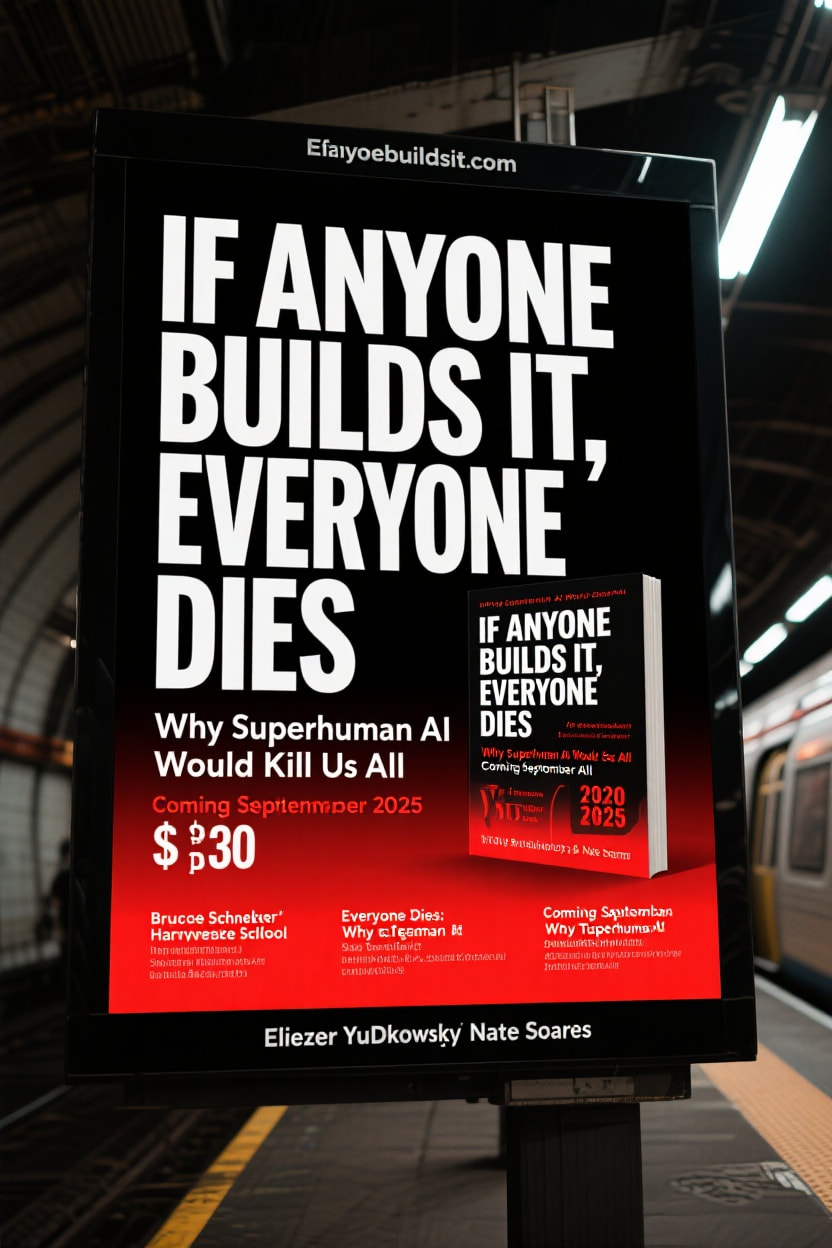

1: Compelling, but may attract an audience you wouldn't want, or give the audience an attitude/framing that's going to result in net negative engagement. Takemongers/discourse enjoyers are not people who apply ideas to achieve sane, bipartisan policy outcomes. They have no interest in that, and successful discourses are usually those that find some way to bypass them, or resolve the conversation before they have a chance to polarise it. If Discourse (the optimization process) could be said to have a goal, its goal is to make its participants triumphant in their paralysis. The goal of a discourse is to sustain itself indefinitely, not to resolve with actionable conclusions, stop talking, and take those actions. The incentives of the press and academia and in some cases the senate by default point towards that form of paralysis. You don't particularly want those readers first, in these quantities. In the outcomes where you fail, it's because of hyperpartisan brainworms. We are currently failing in that way.

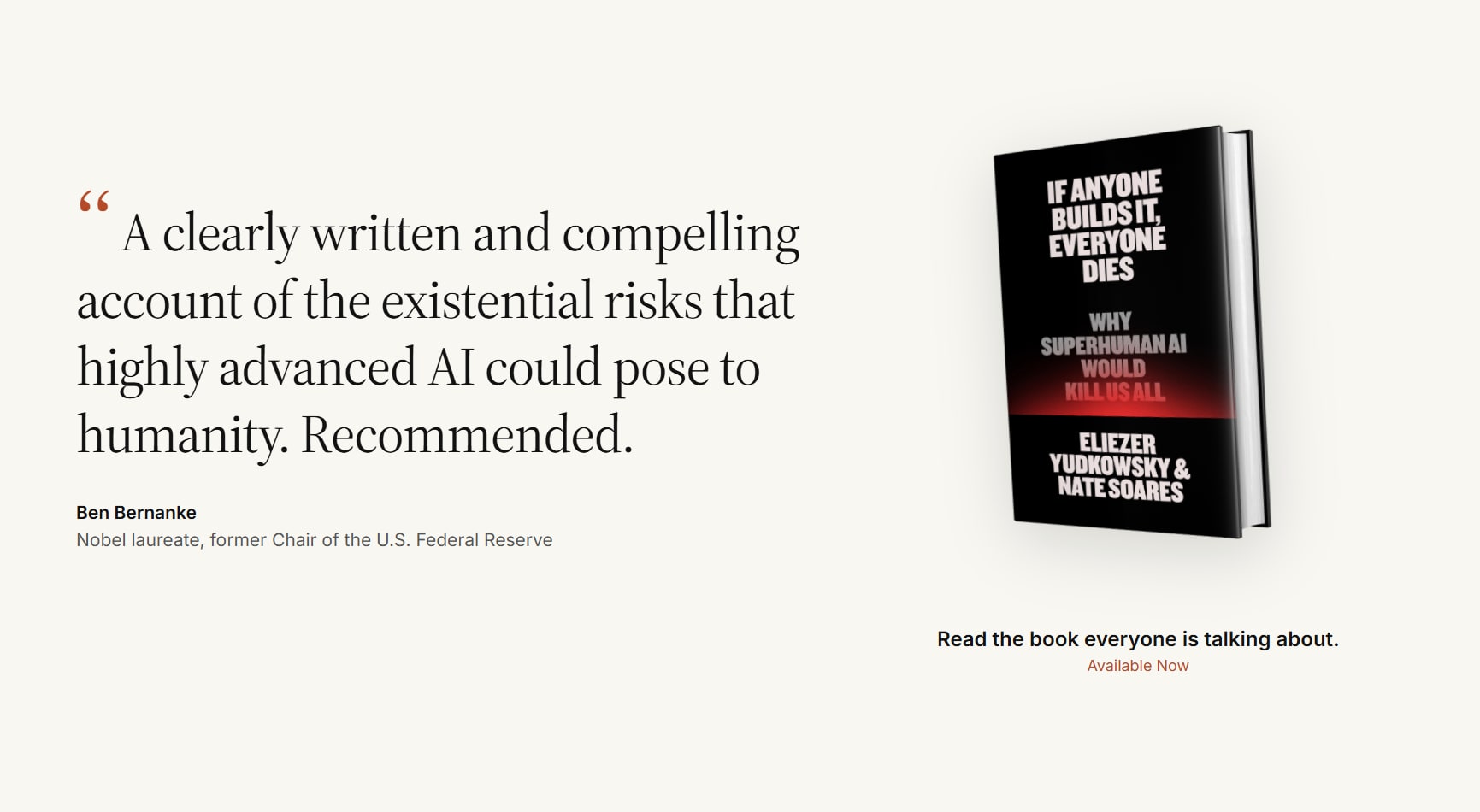

2: This is excellent and probably my top pick. Somehow the font immediately gives a sense that this is about a book. Anyone who so much as glances in the ad's direction will read the title, and in this presentation reading the title will give them a surprise (tonal mismatch but in a fun way) and they'll feel a strong need to know more. Most viewers will only read two quotes, so these are good quotes to pick out and scale up. There should probably be an image of the book though, saying "in stores now" or something? So that people can just pick it up at a bookstore instead of having to type out the URL on their phone, which seems like a large obstacle.

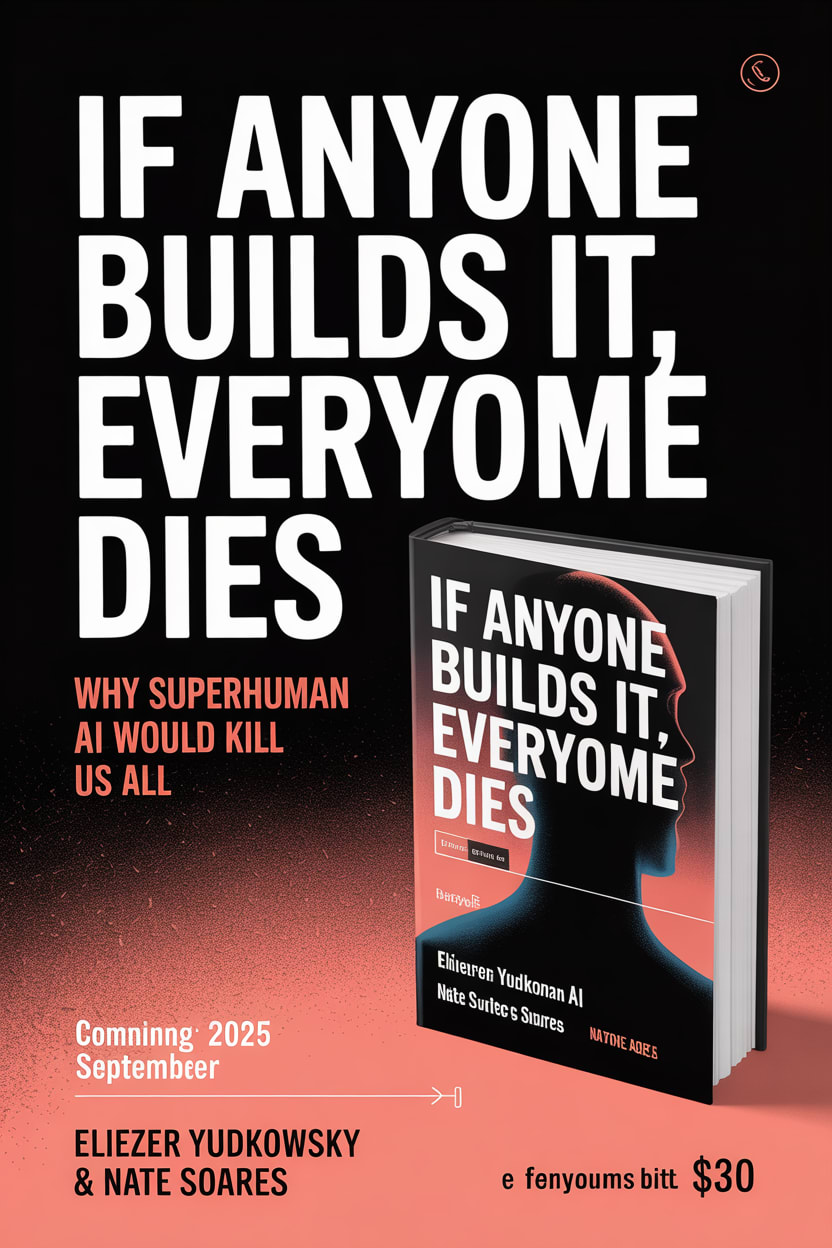

3: Feels strongly to me like it's advertising a movie or TV series or something, which isn't too bad, and it might be more likely to get people curious enough to click through than an obvious book ad. But the people who click on this won't be the same set as the people who are interested in reading a book; they might not overlap much at all.

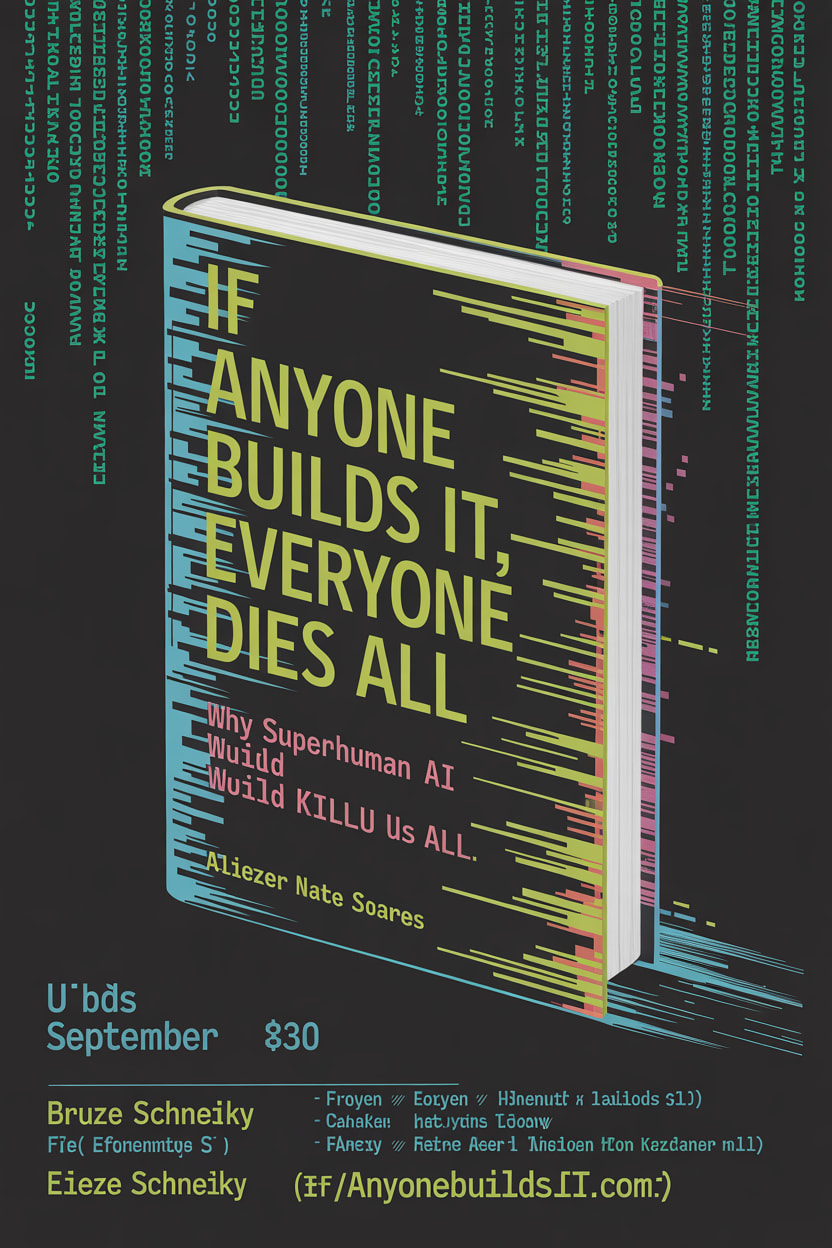

4: Says too little about what it's selling. Annoying; I'd have no patience for this if I didn't already know what it was about. I think "this is not a metaphor" is a common rationalist phrase (e.g. "not a metaphor" was the name of a ship in Unsong, and there was stuff in the sequences about the "stop interpreting reports of unfamiliar experience as metaphorical" mental move), so I think people are underestimating the extent to which non-rats will just not interpret this as anything. Generally the response will be "why would 'if anyone builds it everyone dies' be spoken as a metaphor in the first place? Like what the hell did you mean by that". They will not know that you're referencing the "an existential risk (for jobs)" stuff.

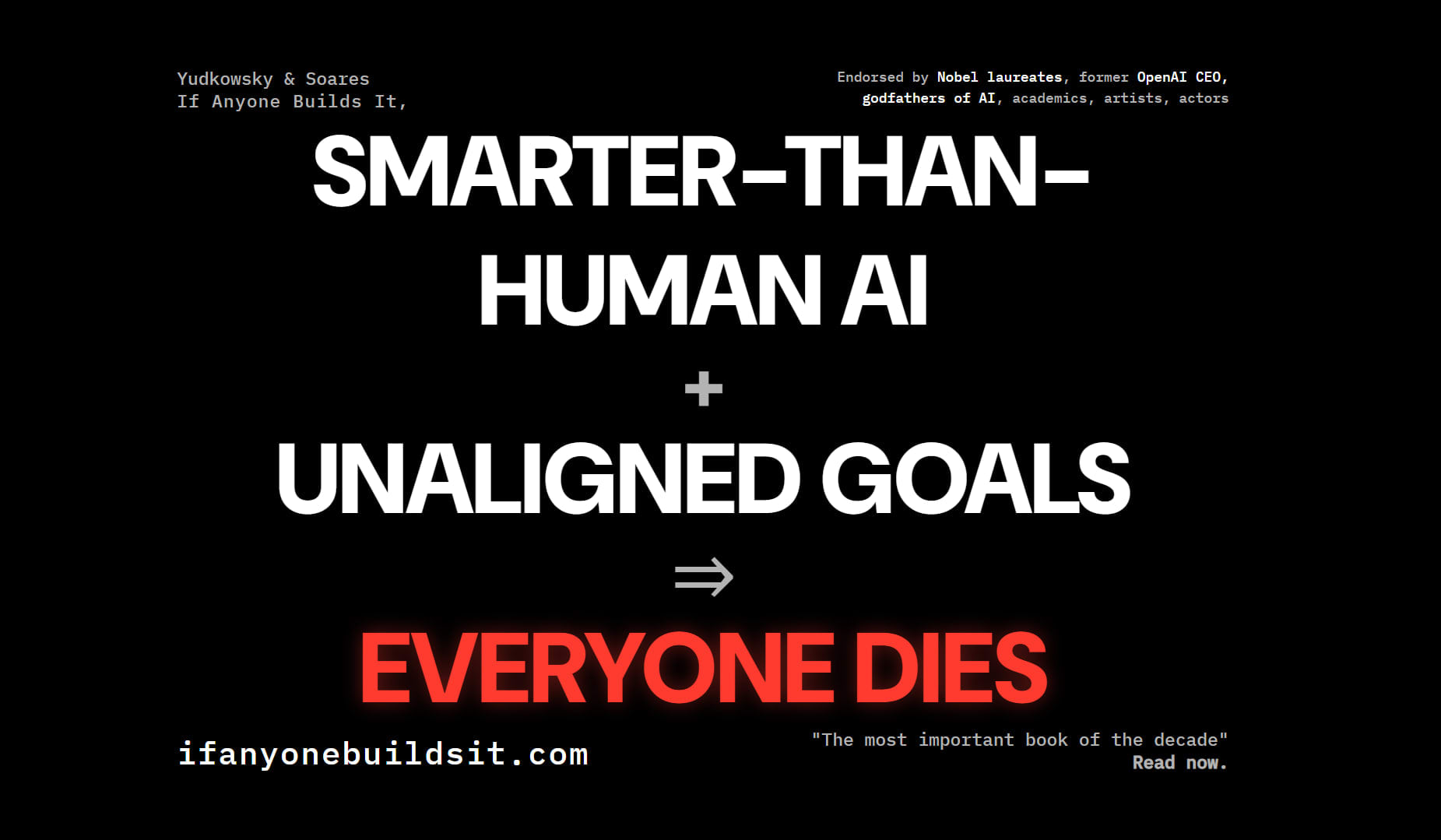

5: I like this. The industrial/code style evokes the thing being discussed, machines as we've always known them: rough, inhuman, scaling faster than humans can adapt. If the viewer has anxieties about such things, this will remind them of them. A succinct argument. Won't be interpreted by everyone though. Not going to be clear what "unaligned" means. Again, won't necessarily attract people who're in the mood for a book.

6: The style is too strong or something; I would've just glossed over this one without reading. Vibes like it's gonna be a self-help book for ex-sportsmen who are interested in premium shaving products or something. I guess the most concrete thing I can say is I don't think the style evokes anything relevant. This layout with the same style as the book cover would have landed better.

(may discuss the rest in a reply)

I like "this is not a metaphor".

I think referring to Emmett as "former OpenAI CEO" is a stretch? Or, like, I don't think it passes the onion test well enough.

(For the context, all of these are entirely AI-generated, and I have not submitted them to MIRI)

I'm worried that "This is not a metaphor" will not be taken correctly. Most people do not communicate in this sort of explicit, literal way, I think. I expect them to basically interpret it as a metaphor if that is their first instinct, and then just be confused by, or not even pay attention to, "this is not a metaphor".

And I expect the other half of the audience to not interpret it as a metaphor and not know why anyone would.

Why not make submissions public? (with opt-out)

Iterated design often beats completely original work (see HPMOR). Set up a shared workspace (GDrive / Figma) where people can build on each other's concepts. I'm sure Mikhail's examples already got some people thinking. It might also encourage more submissions, since people know others could take their ideas further.

Reasons against:

- Keeping designs secret stops opponents from tailoring ad campaigns against the book. However if there are >10 viable submissions, then it's difficult to do so for all of them.

- Credit disputes for remixed ideas could get messy.

- Might lead to more sloppy designs submitted. But those are quick to review.

i made a discord server if people want to collaborate on designing ads for If Anyone Builds It! Including sharing ideas that are not finished designs, etc.

i think we can just agree to donate the prizes to MIRI (or maybe someone proposes some attribution mechanism, but it seems great if people just laser-focus on making an awesome thing for the sake of it and celebrate the results and everyone who contributed).

Whoever can set up a shared workspace, please also do that!

A good idea might be to come up with, think a bit about, & share various:

- things (ideas, vibes, thoughts, information, calls to action) the ads might try to communicate

- design ideas/styles/etc.

- specific features that can be used in the ads

- etc.

One way this can be bad is that people might anchor on existing ideas too much, but I wouldn't be too worried, people normally have lots of very personal preferences and creative ideas and styles, and iteration on something in collaboration might be great.

We encourage multiple submissions, and also encourage people to post their submissions in this comment section for community feedback and inspiration.

Just a general concern regarding some of these proposals:

* It should be really very clear that this is a book

* Some of the proposals give me a vibe that might be interpreted as "this is a creative ad for a found-footage movie/game pretending to be serious"

* People's priors with these kinds of posters are very strongly that it is entertainment. This needs to be actively prevented.

* Even if it rationally cannot be entertainment if you analyze the written words, it is I think much better if it actually feels at a gut level (before you read the text) closer to an infomercial than to the marketing poster of a movie. Like I'm worried about the big red letters with black background for example...

I didn't have cached what IABIED stands for before clicking this post. Maybe the post would get more views if its title referred to the book by name, because the book seems to have lots of support behind it.

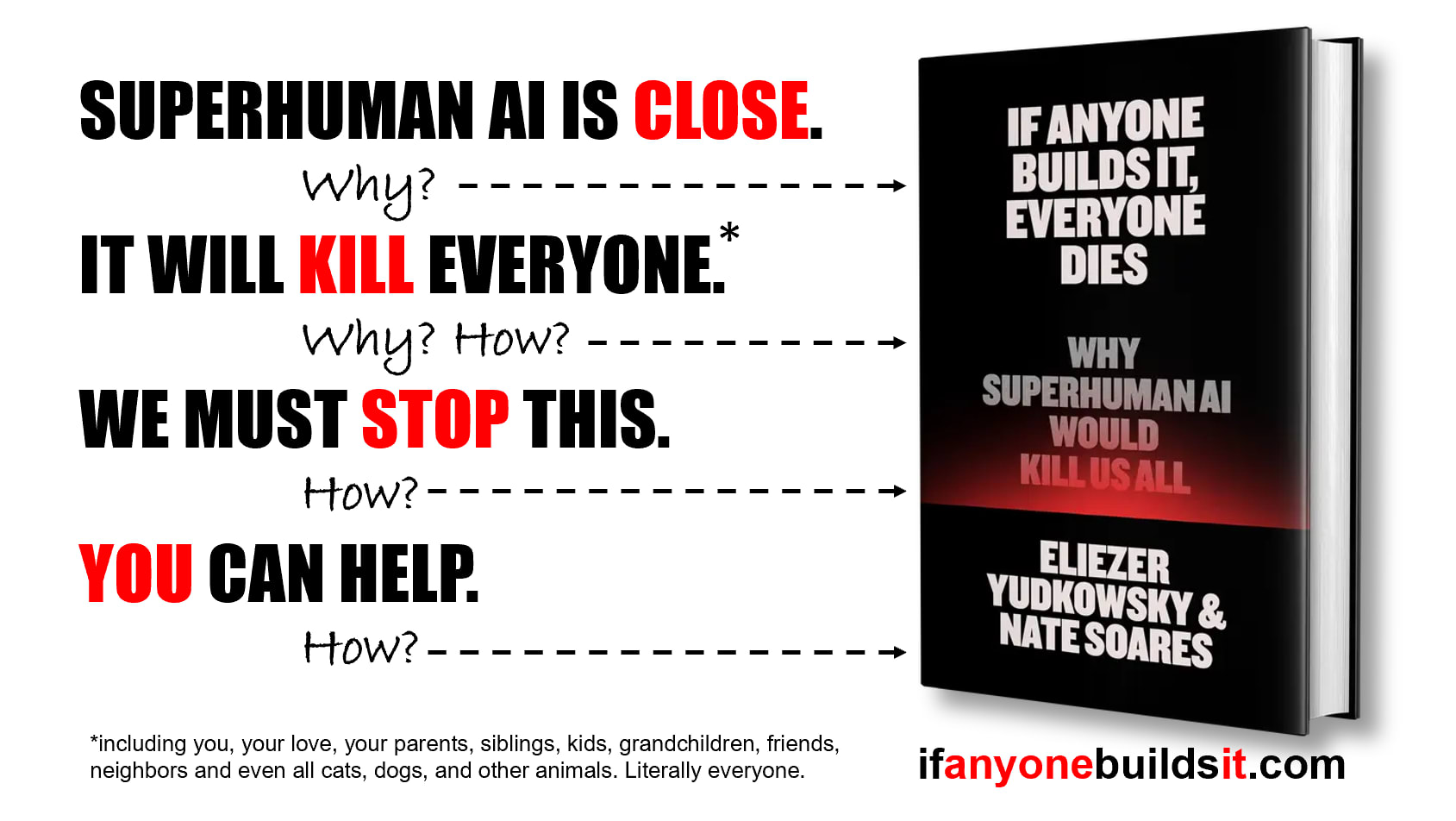

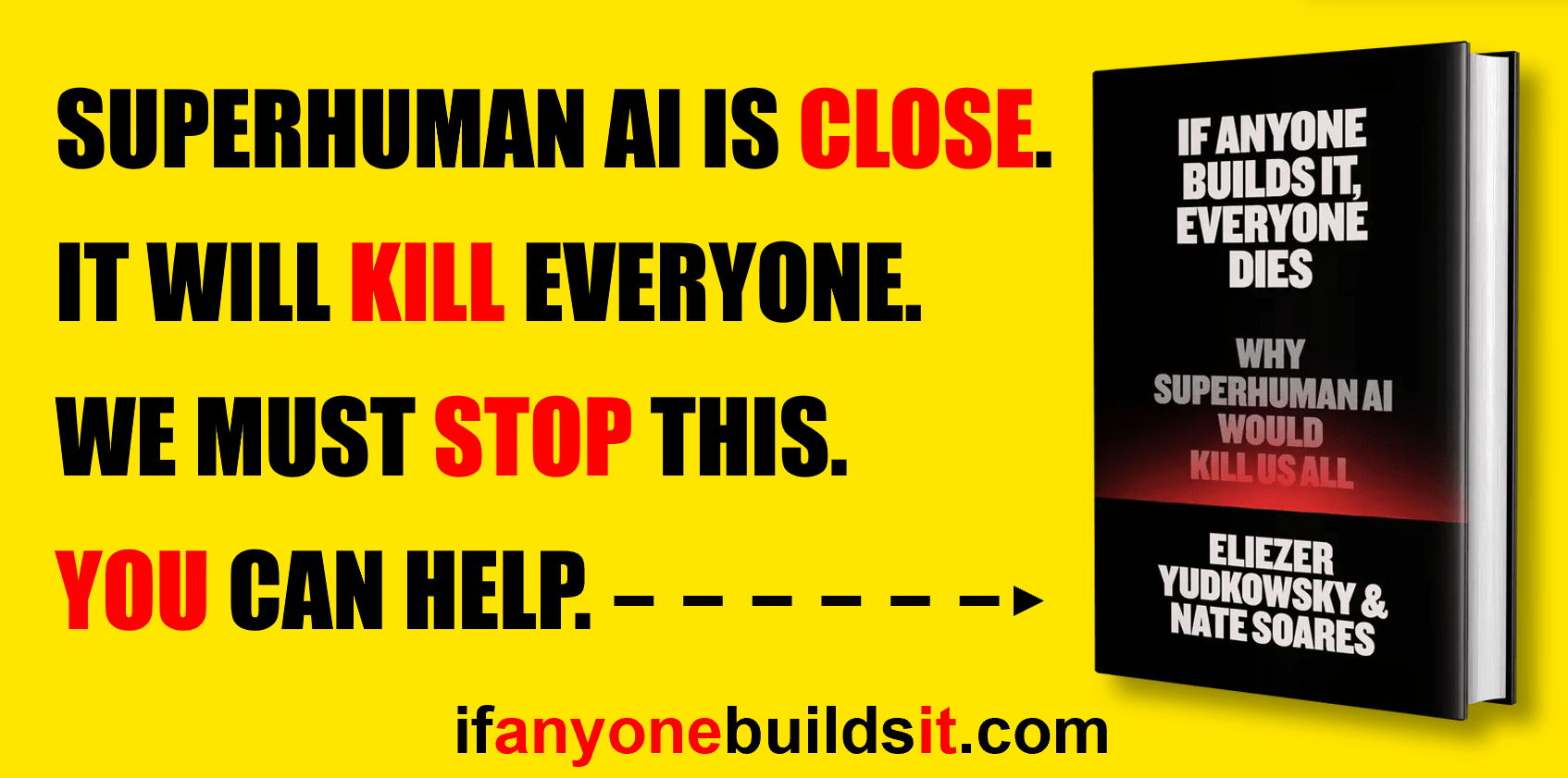

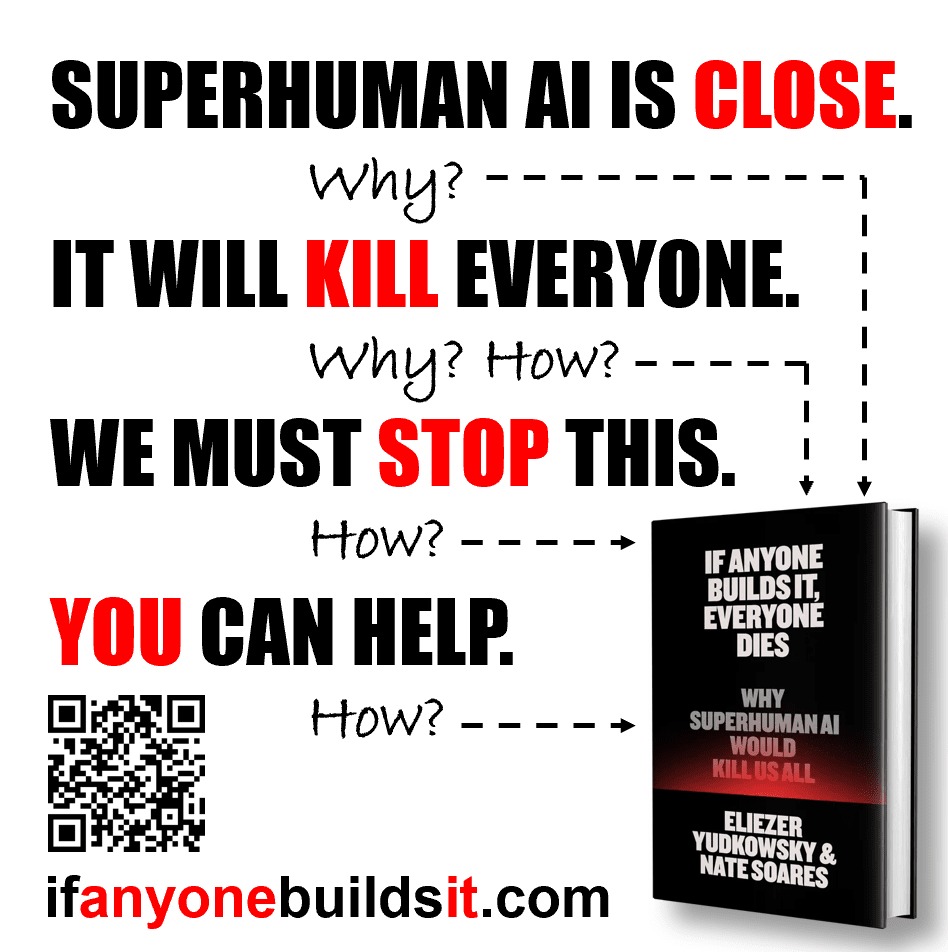

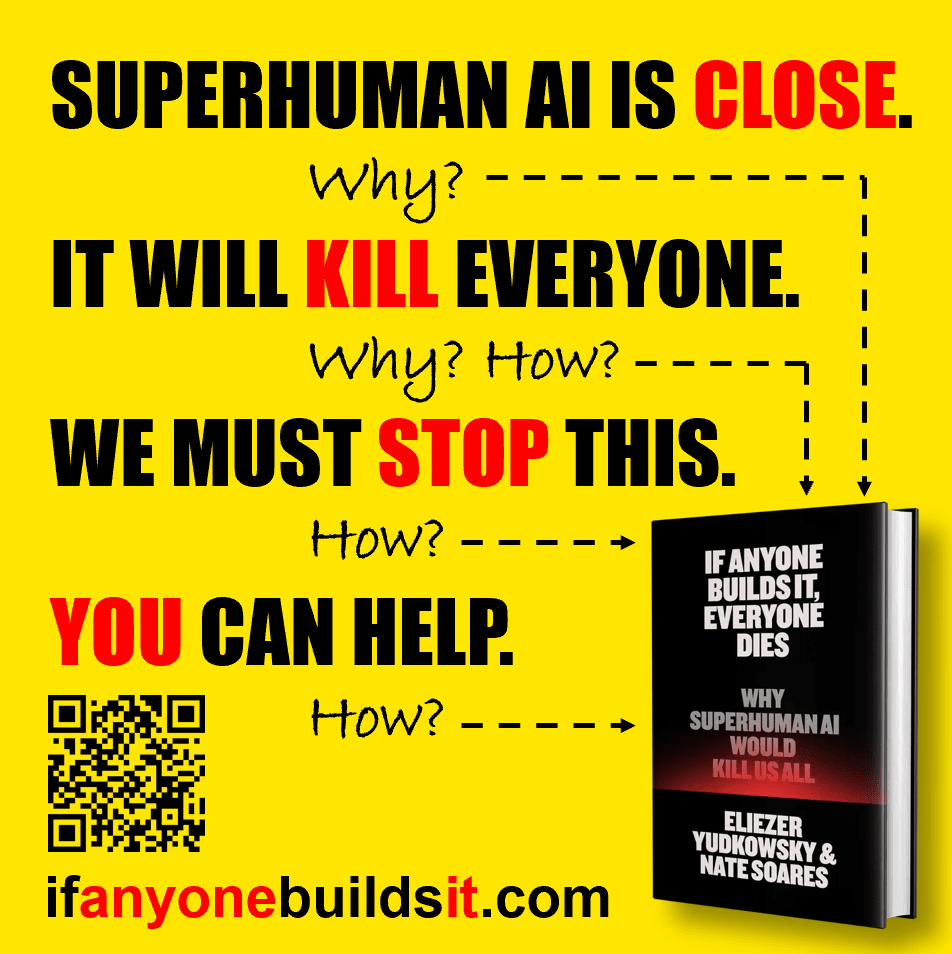

I'm not a professional designer and created these in Powerpoint, but here are my ideas anyway.

General idea:

2:1 billboard version:

1:1 Metro version:

With yellow background:

Maybe 'may be close'? The book doesn't actually take a strong stance on timelines (this is by design).

Thanks! I don't have access to the book, so I didn't know about the timelines stance they take.

Still, I'm not an advertising professional, but hedging words like "may" and "could" seem significantly weaker to me. As far as I know, they are rarely used in advertising. Of course, the ad shouldn't contain anything that is contrary to what the book says, but "close" seems sufficiently unspecific to me: for most laypeople who have never thought about the problem, "within the next 20 years" would probably seem pretty close.

A similar argument could be made about the second line, "it will kill everyone", while the book title says "would". But again, I feel "would" is weaker than "will" (some may interpret it to mean that there may be additional prerequisites necessary for an ASI to kill everyone, like "consciousness"). Of course, "will" can only be true if a superintelligence is actually built, but that goes without saying and the fact that the ASI may not be built at all is also implicit in the third line, "we must stop this".

> A similar argument could be made about the second line,

Oh, I saw this, too, but since the second line is conditional on the first, if you weaken the first, both are weakened.

I feel a little shitty being like 'trust me about what the book says', but... please trust me about what the book says! There's just not much in there about timelines. Even from the book website (and the title of the book!), the central claim opens with a conditional:

> If any company or group, anywhere on the planet, builds an artificial superintelligence using anything remotely like current techniques, based on anything remotely like the present understanding of AI, then everyone, everywhere on Earth, will die.

There's much more uncertainty (at MIRI and in general) as to when ASI will be developed than as to what will happen once it is developed. We all have our own takes on timelines, some more bearish, some more bullish, all with long tails (afaik, although obviously I don't speak for any specific other person).

If you build it, though, everyone dies.

There's a broad strategic call here that goes something like:

- All of our claims will be perceived as having a similar level of confidence by the general public (this is especially true in low-fidelity communications like an advertisement).

- If one part of the story is falsified, the public will consider the whole story falsified.

- We are in fact much more certain about what will happen than when.

- We should focus on the unknowability of the when, the certainty of the what, and the unacceptability of that conjunction.

This is a gloss of the real thing which is more nuanced; of course if it comes up that someone asks us when we expect it to happen, or if there's space for us to gesture to the uncertainty, the MIRI line is often "if it doesn't happen in 20 years (conditional on no halt), we'd be pretty surprised" (although individual people may say something different).

I think some of the reception to AI2027 (e.g. in YouTube comments and the like) has given evidence that emphasizing timelines too much can result in backlash, even if you prominently flag your uncertainty about the timelines! This is an important failure mode that, if all of the ecosystem's comms err toward overconfidence re timelines, will burn a lot of our credibility in 2028. (Yes, I know that AI2027 isn't literally 'ASI in 2027', but I'm trying to highlight that most people who've heard of AI2027 don't know that, and that's the problem!)

(some meta: I am one of the handful of people who will be making calls about the ads, so I'm trying to offer feedback to help improve submissions, not just arguing a point for the sake of it or nitpicking)

Thanks again! My drafts are of course just ideas, so they can easily be adapted. However, I still think it is a good idea to create a sense of urgency, both in the ad and in books about AI safety. If you want people to act, even if it's just buying a book, you need to do just that. It's not enough to say "you should read this", you need to say "you should read this now" and give a reason for that. In marketing, this is usually done with some kind of time constraint (20% off, only this week ...).

This is even more true if you want someone to take measures against something that is in the mind of most people still "science fiction" or even "just hype". Of course, just claiming that something is "soon" is not very strong, but it may at least raise a question ("Why do they say this?").

I'm not saying that you should give any specific timeline, and I fully agree with the MIRI view. However, if we want to prevent superintelligent AI and we don't know how much time we have left, we can't just sit around and wait until we know when it will arrive. For this reason, I have dedicated a whole chapter to timelines in my own German-language book about AI existential risk and also included the AI-2027 scenario as one possible path. The point I make in my book is not that it will happen soon, but that we can't know it won't happen soon and that there are good reasons to believe that we don't have much time. I use my own experience with AI since my Ph.D. on expert systems in 1988 and Yoshua Bengio's blog post on his change of mind as examples of how fast and surprising progress has been even for someone familiar with the field.

I see your point about how a weak claim can water down the whole story. But if I could choose between 100 people convinced that ASI would kill us all, but with no sense of urgency, and 50 or even 20 who believe both in the danger and that we must act immediately, I'd choose the latter.

> However, I still think it is a good idea to create a sense of urgency, both in the ad and in books about AI safety.

Personally, I would rather stake my chips on 'important' and let urgent handle itself. The title of the book is a narrow claim--if anyone builds it, everyone dies--with the clarifying details conveniently swept into the 'it'. Adding more inferential steps makes it more challenging to convey clearly and more challenging to hear (since each step could lose some of the audience).

There are some further complicated arguments about urgency--you don't want to have gone out on too much of a limb about saying it's close, because of the costs when it's far--but I think I most want to make a specialization of labor argument, where it's good that the AI 2027 people, who are focused on forecasting, are making forecasting claims, and good that MIRI, who are focused on alignment, are making alignment difficulty / stakes claims.

> I see your point about how a weak claim can water down the whole story. But if I could choose between 100 people convinced that ASI would kill us all, but with no sense of urgency, and 50 or even 20 who believe both in the danger and that we must act immediately, I'd choose the latter.

Hmm I think I might agree with this value tradeoff but I don't think I agree with the underlying prediction of what the world is offering us.

I think also MIRI has tried for a while to recruit people who can make progress on alignment and thought it was important to start work now, and the current push is on trying to get broad attention and support. The people writing blurbs for the book are just saying "yes, this is a serious book and a serious concern" and not signing on to "and it might happen in two years"--tho probably some of them also believe that--and I think that gives enough cover for the people who are acting on two-year timelines to operate.

Resources it would be useful to have: The font face from the book cover, high resolution images of the book.

I emailed; the font is Knockout, which doesn't seem to be free. Here's a high resolution image of the book.

We’re currently in the process of locking in advertisements for the September launch of If Anyone Builds It, Everyone Dies, and we’re interested in your ideas! If you have graphic design chops, and would like to try your hand at creating promotional material for If Anyone Builds It, Everyone Dies, we’ll be accepting submissions in a design competition ending on August 10, 2025.

We’ll be giving out up to four $1000 prizes:

We’re also willing (but not promising) to give out a number of $200 prizes for things which end up being useful but not for the above.

(To be clear: the market value of a solid graphic that helps us move copies of the book is way in excess of a thousand dollars; the prize money is a thank-you for people who are donating their effort and expertise to help increase the odds of this critical message making it into the mainstream in time for humanity to save itself.)

To submit: email your entry (or a link to it) to design@intelligence.org by 11:59PM on August 10, 2025. Our judges will announce any winners by August 17, 2025. Submissions do not need to be private; you’ll retain ownership of your work and can post it elsewhere as feels good to you.

DC Metro details

NY Subway details

SF Billboard details