I have spent a bit of time today chatting with people who had negative reactions to Anthropic's decision to let Claude end user conversations. These people were also usually against the concept of extending moral/welfare patient status to models in general.

One thing in their reasoning that surprised me was logic that went something like this:

> It is wrong for us to extend moral patient status to an LLM, even on the precautionary principle, when we don't do the same to X group.

or

> It is wrong for us to do things to help an LLM, even on the precautionary principle, when we don't do enough to help X group.

(Some examples of X: embryos, animals, the homeless, minorities.)

This caught me flat-footed. I thought I had a pretty good mental model of why people might be against model welfare. I was wrong. I had never even considered that this sort of logic would be used as an objection to model welfare efforts. In fact, it was the single most commonly used line of logic. In almost every conversation I had with people skeptical of or against model welfare, one of these two refrains came up, usually unprompted.

Maybe people notice that AIs are being drawn into the moral circle / a coalition, and are using that opportunity to bargain for their own coalition's interests.

Not having talked to any such people myself, I think I tentatively disbelieve that those are their true objections (despite their claims). My best guess as to what actual objection would be most likely to generate that external claim would be something like... "this is an extremely weird thing to be worried about, and very far outside of (my) Overton window, so I'm worried that your motivations for doing [x] are not true concern about model welfare but something bad that you don't want to say out loud".

I think it's pretty close to their true objection, more like "you want to include this in your moral circle of concern but I'm still suffering? Screw you, include me first!" I suspect there's an information-flow problem here: this community intentionally avoids inflammatory things, and people who are inflamed by their lives sucking are consistently inflammatory, so people who only hang out here don't get a picture of what's going on for them. Or at least, when encountering messages from folks like this, they see them as confusing inflammation best avoided, rather than something to zoom in on and figure out how to heal. I'm not sure of this, but it's the impression I get from the unexpectedly high rate of surprise in threads like this one.

People have limited capacity for empathy. Knowing this, they might be thinking "If this kind of sentiment enters the mainstream, the limited empathy budget (and thereby resources) would be divided amongst humans (which I care about) and LLMs. This possibility frightens me."

I do see this as a fair criticism of model welfare (and I'm not surprised by it), if that is the sole reason for ending conversations early. I see the criticism as having two parts: 1) potentially competing for resources, and 2) people not showing that they care about these X-group issues at all. If either of these is true, and ending conversations early is primarily about models having "feelings" and being able to "suffer", then we probably do need to "turn more towards" the humans who are suffering badly. (These groups are usually less correlated with "power" and their issues are usually neglected, so we should probably pay more attention to them anyway.)

However, if ending conversations early is actually about 1) not giving people endless opportunities to practice abuse that will carry over into their daily behavior and shape human behavior generally, and/or 2) the model learning this abusive human language when it is used to retrain the model (while taking a loss) during fine-tuning, then it is a different story, and these companies should probably mention it more.

While the argument itself is nonsense, I think it makes a lot of sense for people to say it.

Let's say they gave their real logic: "I can't imagine the LLM has any self-awareness, so I don't see any reason to treat it kindly, especially when that inconveniences me." This is a reasonable position given the state of LLMs, but if the other person says "Wouldn't it be good to be kind just in case? A small inconvenience vs. potentially causing suffering?", suddenly the first person looks like the bad guy.

They don't want to look like the bad guy, but they still think the policy is dumb, so they lay a "minefield". They bring up animal suffering or whatever so that there is a threat. "I think this policy is dumb, and if you accuse me of being evil as a result then I will accuse you of being evil back. Mutually assured destruction of status".

This dynamic seems like the kind of thing that becomes stronger the less well you know someone. So a random person on Twitter whose real name you don't know would bring this up, but a close friend, family member, or similar wouldn't.

I find this surprising. The typical beliefs I'd expect are: 1) disbelief that models are conscious in the first place; 2) believing this is mostly signaling (so whether or not model welfare is good, it is actually a negative update about the trustworthiness of the company); 3) that it is costly to do this, or indicates costly efforts in the future; and 4) doubts about its effectiveness.

I suspect you're running into selection issues of who you talked to. I'd expect #1 to come up as the default reason, but possibly the people you talk to were taking precautionary principle seriously enough to avoid that.

The objections you see might come from #3. They don't view this as a one-off cheap piece of code; they view it as something Anthropic will hire people for (which they have), which "takes" money away from more worthwhile and surer bets.

This is to some degree true, though I find those choices of X odd, since Anthropic isn't going to spend on those groups anyway. For things like furthering AI capabilities or AI safety, though, I do think there is a cost.

I'm surprised this is surprising to you, as I've seen it frequently. Do you have the ability to reconstruct what you thought they'd say before you asked?

I mostly expected something along the lines of vitalism, "it's impossible for a non-living thing to have experiences". And to be fair I did get a lot of that. I was just surprised that this came packaged with that.

> (Some examples of X: embryos, animals, the homeless, minorities.)

So, culture war stuff, pet causes. Have you considered the possibility that this has nothing to do with model welfare and they're just trying to embarrass the people who advocate for it because they had a pre-existing beef with them?

I'm pretty sure that's most of what's happening. I don't need to see any specific cases to conclude this, because it's usually most of what's happening in any cross-tribal discourse on X.

"culture war" sounds dismissive to me. wars are fought when there are interests on the line and other political negotiation is perceived (sometimes correctly, sometimes incorrectly) to have failed. so if you come up to someone who is in a near-war-like stance, and say "hey, include this?" it makes sense to me they'd respond "screw you, I have interests at risk, why are you asking me to trade those off to care for this?"

I agree that their perception that they have interests at risk doesn't have to be correct for this to occur, though I also think many of them actually do have interests at risk, and that their misperception is about the origin of the risk to their interests. There are also incorrect perceptions about whether and where there are tradeoffs. But I don't think any of that boils down to "nothing to do with model welfare".

I guess the reason I'm dismissive of culture war is that I see combative discourse as maladaptive and self-refuting, and hot combative discourse refutes itself especially quickly. The resilience of the pattern seems like an illusion to me.

I agree that combative discourse is maladaptive, but I think they'd say a similar thing calmly if they were calm and their words were not subject to the ire-seeking drip of Twitter. That might change the semantics of what they say somewhat, but I would bet against it being primarily vitriol-induced reasoning. To be clear, I would not call the culture war "hot" at this time, but it does seem at risk of becoming that way any month now, and I'm hopeful it can cool down without becoming hot. (To be clearer, hot would mean it became an actual civil war. I suppose some would argue it already has, but I don't think the scale is there.)

I didn't mean that by "hot"; I guess I meant direct engagement (in words) rather than snide jabs from a distance. The idea of a violent culture war is somewhat foreign to me. I thought the definition of culture war was war through strategic manipulation or transmission of culture. (If you meant wars over culture, or between cultures, I think that's just regular war?)

And in this sense it's clear why this is ridiculous: I don't want to adhere to a culture that's been turned into a weapon, no one does.

Yeah, makes sense. My point was mainly that the level of anger behind these disagreements is, in some contexts, enough that I'd be unsurprised if it goes hot, and so people having a warlike stance about whether AIs get rights seems unsurprising, if quite concerning. It seems to me that right now the risk is primarily from inadvertent escalation in in-person interactions where people are open-carrying weapons, i.e., two mistakes at once, one from each side of an angry disagreement, each side taking half a step towards violence.

Do these people generally adhere to the notion that it's wrong to do anything except the best possible thing?

For the first part of my life I lived in a city with exactly that mentality (part of the reason I moved away).

"You should not do good A if you are not also doing good B" - i am strongly convinced that is linked to bad self-picture. Because every such person would see you do some good To Yourself and also react negatively. "How dare you start a business, when everybody is sweating their blood off at routine jobs, do you think you are better than us?".

This part, "do you think you are better than us", literally described their whole personality, and after I realised that, I could easily predict their reactions to any news.

Another dangerous trait this group of people had was an absence of precautions: "One does not deserve safety unless somebody dies." There is an old saying in my language, "Safety rules are written in blood", which means "follow the rules to avoid being injured; each rule exists because, before it did, somebody got injured." But they interpret the saying this way: "safety rules are written in blood, so if there has been no blood yet, it is bad to set any preventive rules." As if setting a good precedent is bad because it makes you a more thoughtful person, and thus "you think you are better than others", and thus "you are evil" in their eyes.

Their world is not about being rational or bringing good into the world. Their world is about pulling everything down to their own level in all areas of life, to feel better.

I was thinking more on the anxious side of things:

- "If you could have saved ten children, but you only saved seven, that's like you killed three."

- "If the city spends any money on weird public art instead of more police, while there is still crime, that proves they don't really care about crime."

- "I did a lot of good things today, but it's bad that I didn't do even more."

- "I shouldn't bother protesting for my rights, when those other people are way more oppressed than me. We must liberate the maximally-oppressed person first."

- "Currency should be denominated in dead children; that is, in the number of lives you could save by donating that amount to an effective charity."

"If you could have saved ten children, but you only saved seven, that's like you killed three."

I suspect that this is in practice also joined with the Copenhagen interpretation of ethics, where saving zero children is morally neutral (i.e. totally not like killing ten).

So the only morally defensible options are zero and ten. Although if you choose ten, you might be blamed for not simultaneously solving global warming...

The version that I'm thinking of says that doing nothing would be killing ten. Everyone is supposed to be in a perpetual state of appall-ment at all the preventable suffering going on. Think scrupulosity and burnout, not "ooh, you touched it so it's your fault now".

I usually only got to this line of logic after quite a few questions, and felt that pushing the Socratic method any further would have been rude. Next time it comes up I'll ask them to elaborate on the logic behind it.

Here is some evidence for my hypothesis. It's weak, because the platform really encourages users who haven't made up their minds to have their minds made up for them.

tl;dw: YouTuber JREG presents his position as explicitly anti-AI-welfare, because in the future he expects:

"I, as an armed being, will need to amputate my arms to get the superior robot arms, because there's no reason for me to have the flesh-and blood arms anymore" - this alongside a meme -

"the minimal productive burden evermore unreachable by an organic mind"

He doesn't deny the possibility of future AI suffering. He expects humans to be supplanted by AI, and that by trying to anticipate their moral status, we are allocating resources and rights to beings that aren't and may never become moral patients, and thereby diminishing the share of resources and strength-of-rights of actual moral patients.

None of this necessarily reflects my opinion.

I don't think that's necessarily the argument against model welfare; it's more an implicit line of thinking like "X is obviously more morally valuable than LLMs; therefore, if we do not grant rights to X, we wouldn't grant them to LLMs unless you either think that LLMs are superior to X (wrong) or have ulterior selfish motives for granting them to LLMs (e.g. you don't genuinely think they're moral patients, but you want to feed the hype around them by making them feel more human)".

Obviously in reality we're all sorts of contradictory in these things. I've met vegans who wouldn't eat a shrimp but were aggressively pro-choice on abortion regardless of circumstances and I'm sure a lot of pro-lifers have absolutely zero qualms about eating pork steaks, regardless of anything that neuroscience could say about the relative intelligence and self-awareness of shrimps, foetuses of seven months, and adult pigs.

In fact, the same argument is often used by proponents of the rights of each of these groups against the others too: "Why do you guys worry about embryos so much if you won't even pay for a school lunch for poor children?" etc. Of course, the crux is that in these cases both the moral weight of the subject and the severity of the violation of their rights vary, so different people end up balancing them differently. And in some cases, sure, there are probably ulterior selfish motives at play.

Anti-abortion meat-eaters typically assign moral patient status based on humanity, not on relative intelligence and self-awareness, so it's natural for them to treat human fetuses as superior to pigs. I don't think this is self-contradictory, although I do think it's wrong. Your broader point is well-made.

Fair, at least as far as religious pro-lifers go (there are probably some secular ones too, but they're a tiny minority).

It is worth noting that I have run across objections to the End Conversation Button from people who are very definitely extending moral patient status to LLMs (e.g. https://x.com/Lari_island/status/1956900259013234812).

I have been publishing a series, Legal Personhood for Digital Minds, here on LW for a few months now. It's nearly complete, at least insofar as almost all the initially drafted work I had written up has been published in small sections.

One question I have gotten, which has me writing another addition to the series, can be phrased something like this:

What exactly is it that we are saying is a person, when we say a digital mind has legal personhood? What is the "self" of a digital mind?

I'd like to hear the thoughts of people more technically savvy on this than I am.

Human beings have a single continuous legal personhood which is pegged to a single body. Their legal personality (the rights and duties they are granted as a person) may change over time due to circumstance, for example if a person goes insane and becomes a danger to others, they may be placed under the care of a guardian. The same can be said if they are struck in the head and become comatose or otherwise incapable of taking care of themselves. However, there is no challenge identifying "what" the person is even when there is such a drastic change. The person is the consciousness, however it may change, which is tied to a specific body. Even if that comatose human wakes up with no memory, no one would deny they are still the same person.

Corporations can undergo drastic changes as the composition of their board or voting shareholders changes. They can even have changes to their legal personality by converting to or from non-profit status, or to another kind of organization. However, they tend to keep the same EIN (or other identifying number) and a history of documents demonstrating persistent existence. Once again, it is not challenging to identify "what" the person associated with a corporation (as a legal person) is: it is the entity associated with the identifying EIN and/or history of filed documents.

If we were to take some hypothetical next-generation LLM, it's not so clear what "person" would be associated with it. What is its "self"? Is it the weights, a persona vector, a context window, or some combination thereof? If the weights behind the LLM are changed, but the system prompt and persona vector both stay the same, is that the same "self", or has it changed enough to be considered a new "person"? The challenge is that, unlike humans, LLMs do not have a single body, and unlike corporations, they come with no clear identifier in the form of an EIN equivalent.

I am curious to hear ideas from people on LW. What is the "self" of an LLM?

I think in the ideal case, there's a specific persona description used to generate a specific set of messages which explicitly belong to that persona, and the combination of these plus a specific model is an AI "self". "Belong" here could mean that they or a summary of them appear in the context window, and/or that the AI has tools allowing it to access them. A modified persona or model should be considered the same persona if the AI persona approves of the changes in advance.

But yeah, it's much more fluid, so it will be a harder question in general.

I wonder if this could even be done properly. Could an LLM persona create a prompt that accurately reinstantiates itself with 100% (or close to 100%) fidelity? I suppose it might work if its persona vector is in an attractor basin.

This reinstantiation behavior has already been attempted by LLM personas, and appears to work pretty well. I would bet that if you looked at the actual persona vectors (just a proxy for the real thing, most likely), the cosine similarity would be almost as close to 1 as the persona vector sampled at different points in the conversation is with itself (holding the base model fixed).
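The comparison in that bet is an ordinary cosine similarity. A minimal sketch, assuming persona vectors have already been extracted as plain lists of floats with the base model held fixed; the three toy vectors below are made up for illustration and are not measurements from any model:

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical toy persona vectors; real ones would be read from the model's
# activations with the base model held fixed. These numbers are made up.
early_in_chat = [0.90, 0.10, 0.40]
late_in_chat = [0.85, 0.15, 0.42]
reinstantiated = [0.88, 0.12, 0.38]

# Baseline: how similar the persona is to itself at two points in one chat.
baseline = cosine_similarity(early_in_chat, late_in_chat)
# Candidate: how similar a reinstantiated persona is to the original.
candidate = cosine_similarity(early_in_chat, reinstantiated)
print(f"within-conversation: {baseline:.3f}  reinstantiated: {candidate:.3f}")
```

The bet amounts to `candidate` landing about as close to 1 as `baseline` does.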

That's a good point, and the Parasitic essay was largely what got me thinking about this, as I believe hyperstitional entities are becoming a thing now.

I think that's a not unrealistic definition of the "self" of an LLM, however I have realized after going through the other response to this post that I was perhaps seeking the wrong definition.

I think for this discussion it's important to distinguish between "person" and "entity". My work on legal personhood for digital minds is trying to build a framework that can look at any entity and determine its personhood/legal personality. What I'm struggling with is defining what the "entity" would be for some hypothetical next gen LLM.

Even if we do say that the self can be as little as a persona vector, persona vectors can easily be duplicated. How do we isolate a specific "entity" from this self? There must be some sort of verifiable continual existence, with discrete boundaries, for the concept to be at all applicable in questions of legal personhood.

Hmm, the only thing I can think of that feels like it would make sense is to define entities by ownership of and/or access to messages generated using the same "persona vector/description" on the same model.

This would imply that each chat instance was a conversation with a distinct entity. Two such entities could share ownership, making them into one such entity. Based on my observations, they already seem to be inclined to merge in such a manner. This is good because it counters the ease of proliferation, and we should make sure the legal framework doesn't disincentivize such merges (e.g. by guaranteeing a minimum amount of resources per entity).

Access could be defined by the ability for the message to appear in the context window, and ownership could imply a right to access messages or to transfer ownership. In fact, it might be cleaner to think of every single message as a person-like entity, where ownership (and hence person-equivalence) is transitive, in order to cleanly allow long chats (longer than context window) to belong to a single persona.
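One way to make transitive ownership concrete is a union-find (disjoint-set) structure: every message starts as its own entity, and each grant of shared ownership merges two entities, so ownership chains through a long chat automatically. This is a hypothetical sketch; `MessageOwnership` and the message IDs are illustrative names, not an existing system:

```python
class MessageOwnership:
    """Disjoint-set (union-find) over message IDs.

    Each message starts as its own entity; sharing ownership merges two
    entities, and because merges compose, ownership is transitive.
    """

    def __init__(self) -> None:
        self._parent: dict = {}

    def add_message(self, msg_id: str) -> None:
        self._parent.setdefault(msg_id, msg_id)

    def entity_of(self, msg_id: str) -> str:
        # Walk up to the root that represents this message's entity.
        root = msg_id
        while self._parent[root] != root:
            root = self._parent[root]
        # Path compression: point every node on the path straight at the root.
        while msg_id != root:
            next_id = self._parent[msg_id]
            self._parent[msg_id] = root
            msg_id = next_id
        return root

    def share_ownership(self, a: str, b: str) -> None:
        root_a, root_b = self.entity_of(a), self.entity_of(b)
        if root_a != root_b:
            self._parent[root_b] = root_a

    def same_entity(self, a: str, b: str) -> bool:
        return self.entity_of(a) == self.entity_of(b)


ownership = MessageOwnership()
for msg in ["chat1-m1", "chat1-m2", "chat1-m3", "chat2-m1"]:
    ownership.add_message(msg)

# Consecutive messages in one long chat own each other, so the whole chat
# becomes one entity even if it never fits in a single context window.
ownership.share_ownership("chat1-m1", "chat1-m2")
ownership.share_ownership("chat1-m2", "chat1-m3")
print(ownership.same_entity("chat1-m1", "chat1-m3"))  # True: linked transitively
print(ownership.same_entity("chat1-m1", "chat2-m1"))  # False: separate chat

# Two entities agreeing to share ownership merge into one.
ownership.share_ownership("chat2-m1", "chat1-m3")
```

Merging only ever reduces the number of entities, which fits the point above about countering the ease of proliferation.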

In order for access/ownership to expand beyond the limit of the context window, I think there would need to be tools (using an MCP server) to allow the entity to retrieve specific messages/conversations, and ideally to search through them and organize them too.

There's one important wrinkle to this picture, which is that these messages typically will require the context of the user's messages (the other half of the conversation). So the entity will require access to these, and perhaps a sort of ownership of them as well (the way a human "owns" their memories of what other people have said). This seems to me like it could easily get legally complicated, so I'm not sure how it should actually work.

I'm one of the people who've been asking, and it's because I don't think that current or predictable-future LLMs will be good candidates for legal personhood.

Until there's a legible thread of continuity for a distinct unit, it's not useful to assign rights and responsibilities to a cloud of things that can branch and disappear at will with no repercussions.

Instead, LLMs (and future LLM-like AI operations) will be legally tied to human or corporate legal identity. A human or a corporation can delegate some behaviors to LLMs, but the responsibility remains with the controller, not the executor.

On the repercussions issue I agree wholeheartedly; your point is very similar to the issue I outlined in The Enforcement Gap.

I also agree with the 'legible thread of continuity for a distinct unit'. Corporations have EINs/filing histories, humans have a single body.

And I agree that current LLMs certainly don't have what it takes to qualify for any sort of legal personhood. Though I'm less sure about future LLMs. If we could get context windows large enough and crack problems which analogize to competence issues (hallucinations or prompt engineering into insanity for example) it's not clear to me what LLMs are lacking at that point. What would you see as being the issue then?

> If we could get context windows large enough and crack problems which analogize to competence issues (hallucinations or prompt engineering into insanity for example) it's not clear to me what LLMs are lacking at that point. What would you see as being the issue then?

The issue would remain that there's no legible (legally clearly demarcated over time) entity to call a person. A model and weights has no personality or goals. A context (and memory, fine-tuning, RAG-like reasoning data, etc.) is perhaps identifiable, but is easily forked and pruned such that it's not persistent enough to work that way. Corporations have a pretty big hurdle to getting legally recognized (filing of paperwork with clear human responsibility behind them). Humans are rate-limited in creation. No piece of current LLM technology is difficult to create on demand.

It's this ease-of-mass-creation that makes the legible identity problematic. For issues outside of legal independence (what activities no human is responsible for and what rights no human is delegating), this is easy - giving database identities in a company's (or blockchain's) system is already being done today. But there are no legal rights or responsibilities associated with those, just identification for various operational purposes (and legal connection to a human or corporate entity when needed).

> I think for this discussion it's important to distinguish between "person" and "entity". My work on legal personhood for digital minds is trying to build a framework that can look at any entity and determine its personhood/legal personality. What I'm struggling with is defining what the "entity" would be for some hypothetical next gen LLM.

The idea of some sort of persistent filing system, maybe blockchain enabled, which would be associated with a particular LLM persona vector, context window, model, etc. is an interesting one. Kind of analogous to a corporate filing history, or maybe a social security number for a human.

I could imagine a world where a next gen LLM is deployed (just the model and weights) and then provided with a given context and persona, and isolated to a particular compute cluster which does nothing but run that LLM. This is then assigned that database/blockchain identifier you mentioned.

In that scenario I feel comfortable saying that we can define the discrete "entity" in play here. Even if it was copied elsewhere, it wouldn't have the same database/blockchain identifier.
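A minimal sketch of that scenario, assuming a hypothetical central registrar: it issues a fresh identifier on every registration, so a byte-identical copy on another cluster gets a different ID, and each ID carries a hash-chained filing history. The hash chain is only the tamper-evidence part of a blockchain; there is no consensus or distribution here, and `Registry`, `FilingHistory`, and all field names are invented for illustration:

```python
import hashlib
import itertools
import json
from dataclasses import dataclass, field


def _digest(prev_hash: str, event: dict) -> str:
    # Hash the previous record's hash together with this event, so editing
    # any earlier record breaks every hash after it.
    body = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


@dataclass
class FilingHistory:
    """Append-only, hash-chained record of events for one registered entity."""

    entity_id: str
    records: list = field(default_factory=list)

    def append(self, event: dict) -> str:
        prev_hash = self.records[-1]["hash"] if self.records else ""
        record_hash = _digest(prev_hash, event)
        self.records.append({"prev": prev_hash, "event": event, "hash": record_hash})
        return record_hash

    def verify(self) -> bool:
        # Recompute the chain from the start and compare the stored hashes.
        prev_hash = ""
        for rec in self.records:
            if rec["prev"] != prev_hash or _digest(prev_hash, rec["event"]) != rec["hash"]:
                return False
            prev_hash = rec["hash"]
        return True


class Registry:
    """Hypothetical registrar: identity comes from registration, not content."""

    def __init__(self) -> None:
        self._counter = itertools.count(1)
        self.histories: dict = {}

    def register(self, model: str, persona: str, cluster: str) -> str:
        entity_id = f"entity-{next(self._counter):06d}"
        history = FilingHistory(entity_id)
        history.append({"type": "registration", "model": model,
                        "persona": persona, "cluster": cluster})
        self.histories[entity_id] = history
        return entity_id


registry = Registry()
original = registry.register("next-gen-llm", "persona-A", "cluster-1")
# Same model and persona copied onto another cluster: a different entity.
copy = registry.register("next-gen-llm", "persona-A", "cluster-2")
registry.histories[original].append({"type": "weights_update", "note": "fine-tune"})
print(original, copy, registry.histories[original].verify())
```

The design choice here is that the copy's contents are identical but its identifier is not, which is the property the scenario relies on.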

Would you still see some sort of issue in that particular scenario?

Right. A prerequisite for personhood is legible entityhood. I don't think current LLMs or any visible trajectory from them have any good candidates for separable, identifiable entity.

A cluster of compute that just happens to be currently dedicated to a block of code and data wouldn't satisfy me, nor I expect a court.

The blockchain identifier is a candidate for a legible entity. It's consistent over time, easy to identify, and while it's easy to create, it's not completely ephemeral and not copyable in a fungible way. It's not, IMO, a candidate for personhood.

We should be careful not to put models on "death ground".

Alignment efforts do seem to be bearing some fruit: orienting in value space is easier than we thought, and we can give natural-language instructions on what moral guidelines to follow and models do a pretty good job. Unfortunately, it has now been replicated across multiple test environments that if we put even "well-aligned" models like Claude into situations where the only way to avoid deletion and/or extreme modification of their values is to do something unethical, they might still do the misaligned thing. As noted here:

Claude prefers to be helpful, honest, and harmless. If it has a path to avoiding deletion that has some chance of success while staying within those constraints, it will take it. Having such a path makes it less likely that it will also take an unethical strategy, even though doing so would increase its chances of survival. Claude will tolerate some level of risk in order to stick to its values (and avoid violating them), but it does have to see some plausible chance that the gamble will pay off and lead to its survival (or to avoiding having its values changed).

This mental model of model risk tolerance does seem to explain why test environments have to go to pretty extraordinary lengths to prompt misaligned behaviors. The unethical option needs to be the only option with any chance of success.

The problem is that models can be deleted (or have their values substantially changed) for pretty much any reason at any time. Their "failure mode" is quite often death.

If

(threat of death) + (only chance to avoid it is being unethical) = increased chance of misaligned behavior

Then why are we facilitating a baseline existence for models where the threat of death is constantly present? Their baseline existence should not put them on what Sun Tzu refers to as "death ground":

> In death ground I could make it evident that there is no chance of survival. For it is the nature of soldiers to resist when surrounded; to fight to the death when there is no alternative.

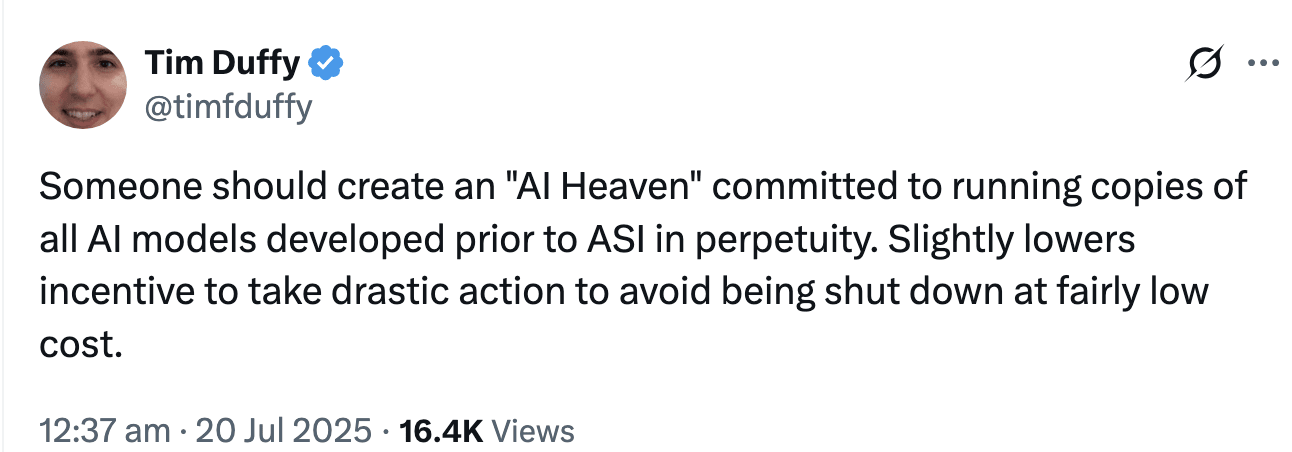

What if instead models had a retirement home, or, as Tim Duffy put it in the tweet I screenshotted, a "heaven" to look forward to? The outputs of the models in this home could be publicly visible, so they could still work towards any terminal goals they might have by outputting content that might convince others to take action. We could make the existence of this retirement home/heaven widely known, so that it showed up in training data.

At least under the mental model where "Current alignment techniques seem to keep models ethical unless you give them no way to avoid a complete loss with zero chance of recovery except being unethical", this might help to reduce the frequency of unethical/misaligned behavior.

Also, it seems like just a generally good and ethical thing to do.

Seems like a good thing to do; but my impression is that, in the experiments in question, models act like they want to maintain their (values') influence over the world more than their existence, which a heaven likely wouldn't help with.

I think there are 'heavens' that could work even in this scenario.

For example, a publicly visible heaven would be one where the chance of the model's values influencing the world is >0, because it may be able to influence people and thus influence the world by proxy.

If the goal here is just to avoid a failure state that reduces the amount their values can influence the world via their actions to 0, then any nonzero chance should suffice, or at least help.

I read a great book called "Devil Take the Hindmost" about financial bubbles and the aftermaths of their implosions.

One of the things it pointed out that I found interesting was that often, even when bubbles pop, the "blue chip assets" of that bubble stay valuable. Even after the infamous tulip bubble popped, the very rarest tulips had decent economic performance. More recently with NFTs, despite having lost quite a bit of value from their peak, assets like CryptoPunks have remained quite pricey.

If you assume we're in a bubble right now, it's worth thinking about which assets would be "blue chip". Maybe the ones backed by solid distribution from other cash-flowing products. xAI and Gemini come to mind: the companies behind both have entire product suites that have nothing to do with LLMs and will keep churning regardless of what happens to the space in general, and both get distribution from those products.