This is an attempt to compile all publicly available primary evidence relating to the recent death of Suchir Balaji, an OpenAI whistleblower.

This is a tragic loss and I feel very sorry for the parents. The rest of this piece will be unemotive as it is important to establish the nature of this death as objectively as possible.

I was prompted to look at this by a surprising conversation I had IRL suggesting credible evidence that it was not suicide. The undisputed facts of the case are that he died of a gunshot wound in his bathroom sometime around November 26, 2024. The police say it was a suicide with no evidence of foul play.

Most of the evidence we have comes from the parents and George Webb. Webb describes himself as an investigative journalist, but I would classify him as more of a conspiracy theorist, based on a quick scan of some of his older videos. I think many of the specific factual claims he has made about this case are true, though I generally doubt his interpretations.

Webb seems to have made contact with the parents early on and went with them when they first visited Balaji's apartment. He has since published videos from the scene of the death, against the wishes of the p...

"The undisputed facts of the case are that he died of a gunshot wound in his bathroom sometime around November 26, 2024. The police ruled it as a suicide with no evidence of foul play."

As in, this is also what the police say?

Did the police find a gun in the apartment? Was it a gun Suchir had previously purchased himself according to records? Seems like relevant info.

"As in, this is also what the police say?"

Yes, edited to clarify. The police say there was no evidence of foul play. All parties agree he died in his bathroom of a gunshot wound.

"Did the police find a gun in the apartment? Was it a gun Suchir had previously purchased himself according to records? Seems like relevant info."

The only source I can find on this is Webb, so take with a grain of salt. But yes, they found a gun in the apartment. According to Webb, the DROS registration information was on top of the gun case[1] in the apartment, so presumably there was a record of him purchasing the gun (Webb conjectures that this was staged). We don't know what type of gun it was[2] and Webb claims it's unusual for police not to release this info in a suicide case.

- Ilya Sutskever had two armed bodyguards with him at NeurIPS.

Some people are asking for a source on this. I'm pretty sure I've heard it from multiple people who were there in person but I can't find a written source. Can anyone confirm or deny?

Anthropic is reportedly lobbying against the federal bill that would ban states from regulating AI. Nice!

"Despite their extreme danger, we only became aware of them when the enemy drew our attention to them by repeatedly expressing concerns that they can be produced simply with easily available materials."

Ayman al-Zawahiri, former leader of Al-Qaeda, on chemical/biological weapons.

I don't think this is a knock-down argument against discussing CBRN risks from AI, but it seems worth considering.

The trick is that chem/bio weapons can't actually "be produced simply with easily available materials" if we are talking about military-grade stuff, as opposed to "kill several civilians to create a scary picture on TV".

Well, I have a bioengineering degree, but my point is that "direct lab experience" doesn't matter, because WMDs in the quality and quantity necessary to kill large numbers of enemy manpower are not produced in labs. They are produced in large industrial facilities, and setting up a large industrial facility for basically anything is at the "hard" level of difficulty. There is a difference between a large-scale textile industry and a large-scale semiconductor industry, but if you are not a government or a rich corporation, all of them lie in the "hard" zone.

Let's take, for example, Saddam's chemical weapons program. First, industrial yields: everything is counted in tons. Second, for actual success, Saddam needed a lot of existing expertise and machinery from West Germany.

Let's look at the Soviet bioweapons program. First, again, tons of yield (one may ask: if it's easier to kill using bioweapons than conventional weaponry, why would anybody need to produce tons of them?). Second, the USSR built an entire civilian biotech industry around it (many Biopreparat facilities are still active today as civilian sites!) to create the necessary expertise.

The difference with high explosives is that high explos...

The next PauseAI UK protest will be (AFAIK) the first coalition protest between different AI activist groups, the main other group being Pull the Plug, a new organisation focused primarily on current AI harms. It will almost certainly be the largest protest focused exclusively on AI to date.

In my experience, the vast majority of people in AI safety are in favor of big-tent coalition protests on AI in theory. But when faced with the reality of working with other groups who don't emphasize existential risk, they have misgivings. So I'm curious what people here will think of this.

Personally I'm excited about the protest and I've found the organizers of Pull the Plug to be very sincere and good to work with, but I've also set things up so that the brands of PauseAI UK and Pull the Plug are clearly distinct, so that our messaging remains clearly focused on the risks of future AI. For example, we have a separate signup page and we have our own demands focused on decelerating frontier development.

"In my experience, the vast majority of people in AI safety are in favor of big-tent coalition protests on AI in theory"

Is this true? I think many people (myself included) are worried about conflationary alliances backfiring (as we see to some extent in the current admin).

On LessWrong, the frontpage algorithm down-weights older posts based on the time-since-posted, not the time-since-frontpaged. So, if a post doesn't get frontpaged until a few days after posting, then it's unlikely to get many views.

LessWrong has an autofrontpager that works a reasonable amount of the time. Otherwise, posts have to be manually frontpaged by a person. In my experience, this was always quite quick, but my most recent post was not frontpaged until 3 days after it was posted, so AFAICT it never actually appeared on the frontpage (unless you clicked "Load More").

I think the solution is to downweight posts based on the time-since-frontpaged.

If you downweight posts based on the time-since-frontpaged, then posts get a huge boost when there is a delay in getting frontpaged. They are first shown to everyone who has personal blog enabled on their frontpage and can accumulate karma during this time. Then, when their effective date is reset, they have a huge advantage over posts that were immediately frontpaged, because the karma provides a much longer visibility window.

I don't really have a great solution to this problem. I think the auto-frontpager helps a lot, though of course only if we can get the error rate sufficiently down.
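
The tradeoff above can be made concrete with a toy ranking function. This is a minimal sketch, assuming a Hacker News-style decay of the form karma / (age + 2)^gravity; the formula, the `gravity` value, the `Post` class and all the numbers are illustrative assumptions, not LessWrong's actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class Post:
    karma: int
    hours_since_posted: float
    hours_since_frontpaged: float


def score(karma: int, age_hours: float, gravity: float = 1.15) -> float:
    """Hypothetical time-decayed score: the older the post, the lower it ranks."""
    return karma / (age_hours + 2) ** gravity


# Made-up numbers: one post frontpaged right away, one frontpaged after 3 days
# that collected some karma from personal-blog readers while it waited.
immediate = Post(karma=10, hours_since_posted=1, hours_since_frontpaged=1)
delayed = Post(karma=25, hours_since_posted=73, hours_since_frontpaged=1)

for name, post in [("immediate", immediate), ("delayed", delayed)]:
    by_posted = score(post.karma, post.hours_since_posted)
    by_frontpaged = score(post.karma, post.hours_since_frontpaged)
    print(f"{name}: time-since-posted={by_posted:.2f}, "
          f"time-since-frontpaged={by_frontpaged:.2f}")
```

Under these assumptions, the delayed post ranks far below the immediate one when age is measured from posting, and above it when age is measured from frontpaging, which is the pair of failure modes described in this thread.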

I'd be happy, if the auto-frontpager is ~instant, to get the option "delay publishing until human review" if it declines frontpage. Getting ~50% less karma than it would by default is a pretty major drop in the effectiveness of what is often many hours of work; I'd usually be fine with waiting a day or two to avoid that.

LLM hallucination is good epistemic training. When I code, I'm constantly asking Claude how things work and what things are possible. It often gets things wrong, but it's still helpful. You just have to use it to help you build up a gears-level model of the system you are working with. Then, when it confabulates some explanation, you can say "wait, what?? that makes no sense" and it will say "You're right to question these points - I wasn't fully accurate" and give you better information.

Announcing PauseCon, the PauseAI conference.

Three days of workshops, panels, and discussions, culminating in our biggest protest to date.

Tweet: https://x.com/PauseAI/status/1915773746725474581

Apply now: https://pausecon.org

The next international PauseAI protest is taking place in one week in London, New York, Stockholm (Sunday 9th Feb), Paris (Mon 10 Feb) and many other cities around the world.

We are calling for AI Safety to be the focus of the upcoming Paris AI Action Summit. If you're on the fence, take a look at Why I'm doing PauseAI.

When I go on LessWrong, I generally just look at the quick takes and then close the tab. Quick takes cause me to spend more time on LessWrong but spend less time reading actual posts.

On the other hand, sometimes quick takes are very high quality and I read them and get value from them when I may not have read the same content as a full post.

This. The struggle is real. My brain has started treating publishing a LessWrong post almost the way it'd treat publishing a paper. An acquaintance got upset at me once because they thought I hadn't provided sufficient discussion of their related LessWrong post in mine. Shortforms are the place I still feel safe just writing things.

It makes sense to me that this happened. AI Safety doesn't have a journal, and training programs heavily encourage people to post their output on LessWrong. So part of it is slowly becoming a journal, and the felt social norms around posts are morphing to reflect that.

xAI claims to have a cluster of 200k GPUs, presumably H100s, online for long enough to train Grok 3.

I think this is faster datacenter scaling than any predictions I've heard.

They don't claim that Grok 3 was trained on 200K GPUs, and that can't actually be the case from other things they say. The first 100K H100s were done early Sep 2024, and the subsequent 100K H200s took them 92 days to set up, so early Dec 2024 at the earliest if they started immediately, which they didn't necessarily. But pretraining of Grok 3 was done by Jan 2025, so there wasn't enough time with the additional H200s.

There is also a plot where Grok 2 compute is shown slightly above that of GPT-4, so maybe 3e25 FLOPs. And Grok 3 compute is said to be either 10x or 15x that of Grok 2 compute. The 15x figure is given by Musk, who also discussed how Grok 2 was trained with less than 8K GPUs, so possibly he was just talking about the number of GPUs, as opposed to the 10x figure named by a team member that was possibly about the amount of compute. This points to 3e26 FLOPs for Grok 3, which on 100K H100s at 40% utilization would take 3 months, a plausible amount of time if everything worked on almost the first try.
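
The 3-month figure can be checked with a back-of-the-envelope calculation. A minimal sketch, assuming a rounded peak of about 1e15 dense BF16 FLOP/s per H100 (an assumption, not stated above) and taking the 10x multiplier on the 3e25 FLOPs guess for Grok 2; `training_days` is an illustrative helper name.

```python
SECONDS_PER_DAY = 86_400

# Assumption: roughly 1e15 dense BF16 FLOP/s peak per H100 (rounded).
H100_BF16_PEAK = 1e15


def training_days(total_flops: float, num_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Days needed to deliver total_flops at the given sustained utilization."""
    effective_flops_per_second = num_gpus * peak_flops_per_gpu * utilization
    return total_flops / effective_flops_per_second / SECONDS_PER_DAY


grok2_flops = 3e25               # rough guess read off the plot
grok3_flops = 10 * grok2_flops   # using the 10x figure

days = training_days(grok3_flops, num_gpus=100_000,
                     peak_flops_per_gpu=H100_BF16_PEAK, utilization=0.40)
print(f"{days:.0f} days")  # a little under 90 days, i.e. about 3 months
```

Swapping in the 15x figure, or a different utilization, shifts the answer proportionally, so the conclusion is only as good as those inputs.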

Time needed to build a datacenter given the funding and chips isn't particularly important for timelines, only for catching up to the frontier (as long as it's 3 months vs. 6 m...

For Claude 3.5, Amodei says the training time cost "a few $10M's", which translates to between 1e25 FLOPs (H100, $40M, $4/hour, 30% utilization, BF16) and 1e26 FLOPs (H100, $80M, $2/hour, 50% utilization, FP8); my point estimate is 4e25 FLOPs.
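
The two bounds follow from converting a dollar budget into GPU-seconds and multiplying by sustained throughput. A minimal sketch, assuming rounded H100 peaks of 1e15 FLOP/s for dense BF16 and 2e15 FLOP/s for dense FP8 (these constants and the helper name `flops_from_budget` are assumptions for illustration):

```python
import math

# Assumed rounded dense peak throughput per H100, in FLOP/s.
H100_BF16_PEAK = 1e15
H100_FP8_PEAK = 2e15


def flops_from_budget(budget_usd: float, usd_per_gpu_hour: float,
                      peak_flops_per_gpu: float, utilization: float) -> float:
    """Total training FLOPs a rental budget buys at a sustained utilization."""
    gpu_seconds = budget_usd / usd_per_gpu_hour * 3600
    return gpu_seconds * peak_flops_per_gpu * utilization


low = flops_from_budget(40e6, 4.0, H100_BF16_PEAK, 0.30)   # pessimistic case
high = flops_from_budget(80e6, 2.0, H100_FP8_PEAK, 0.50)   # optimistic case
midpoint = math.sqrt(low * high)                           # geometric mean

print(f"low={low:.2e}, high={high:.2e}, geometric mean={midpoint:.2e}")
```

With these inputs the bounds come out near 1e25 and 1.4e26 FLOPs, and their geometric mean lands close to 4e25. The geometric mean is only one way to arrive at a point estimate; the comment does not say how its own was derived.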

GPT-4o was trained around the same time (late 2023 to very early 2024), and given that the current OpenAI training system seems to take the form of three buildings totaling 100K H100s (the Goodyear, Arizona site), they probably had one of those for 32K H100s, which in 3 months at 40% utilization in BF16 gives 1e26 FLOPs.

Gemini 2.0 was released concurrently with the announcement of general availability of 100K TPUv6e clusters (the instances you can book are much smaller), so they probably have several of them, and Jeff Dean's remarks suggest they might've been able to connect some of them for purposes of pretraining. Each one can contribute 3e26 FLOPs (conservatively assuming BF16). Hassabis noted on some podcast a few months back that scaling compute 10x each generation seems like a good number to fight through the engineering challenges. Gemini 1.0 Ultra was trained on either 77K TPUv4 (according to The Information) or 14 4096-TPUv4 pods (acc...

Crossposted from https://x.com/JosephMiller_/status/1839085556245950552

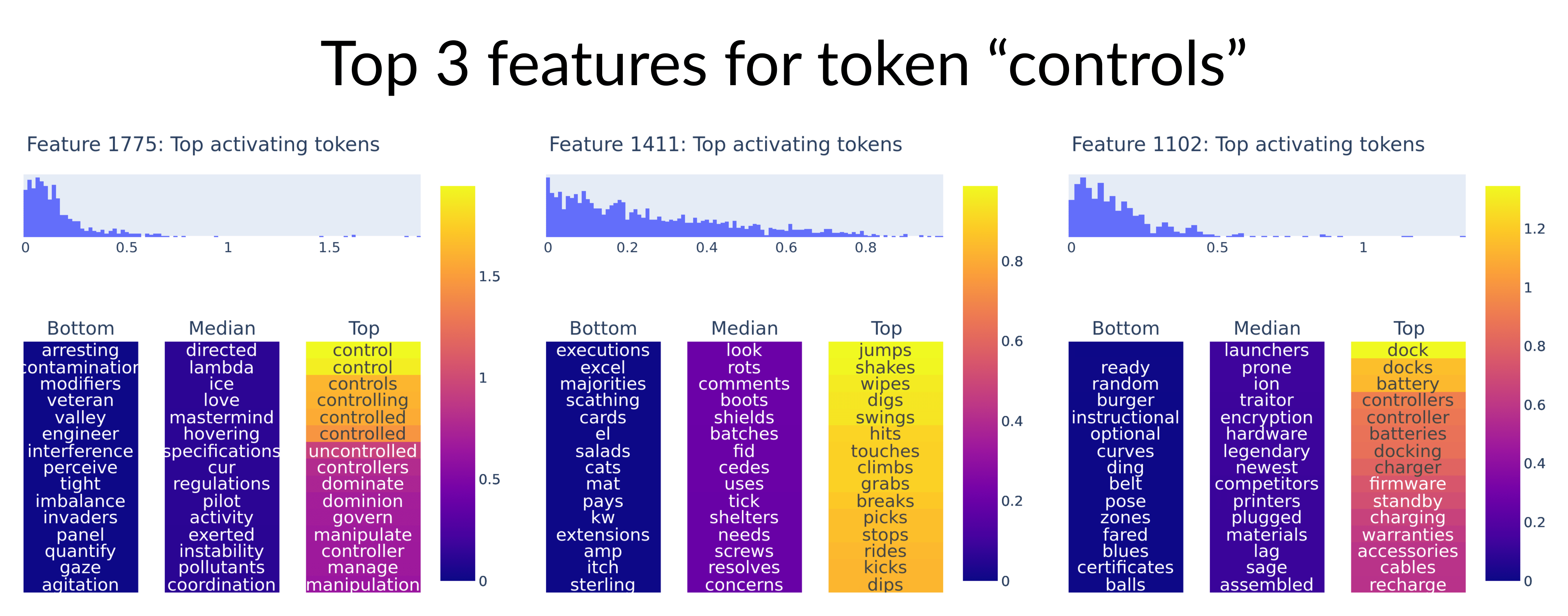

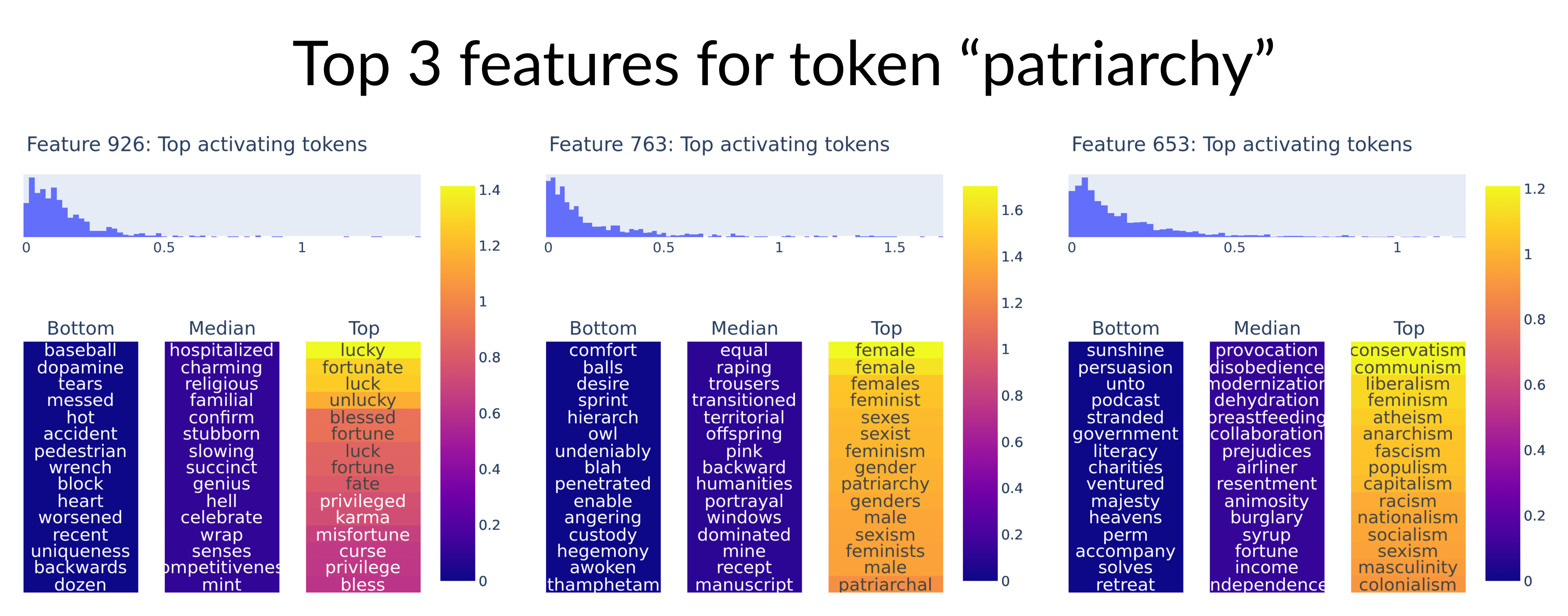

1/ Sparse autoencoders trained on the embedding weights of a language model have very interpretable features! We can decompose a token into its top activating features to understand how the model represents the meaning of the token.🧵

2/ To visualize each feature, we project the output direction of the feature onto the token embeddings to find the most similar tokens. We also show the bottom and median tokens by similarity, but they are not very interpretable.
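
The projection in 2/ can be sketched in a few lines. This is a toy illustration, not the code behind the thread: the 3-dimensional embeddings, the feature direction and the helper names are made up, and cosine similarity is an assumed choice of similarity measure. A real setup would use the model's embedding matrix and the SAE decoder weights in a tensor library.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norms


def rank_tokens(feature_direction: list[float],
                embeddings: dict[str, list[float]]) -> list[str]:
    """Tokens sorted from most to least similar to the feature's output direction."""
    sims = {tok: cosine(feature_direction, vec) for tok, vec in embeddings.items()}
    return sorted(sims, key=sims.get, reverse=True)


# Made-up token embeddings and a hypothetical feature output direction.
embeddings = {
    "deaf": [0.9, 0.1, 0.0],
    "hearing": [0.8, 0.3, 0.1],
    "table": [0.0, 0.2, 0.9],
    "chair": [0.1, 0.1, 0.8],
    "the": [0.3, 0.9, 0.2],
}
feature_direction = [1.0, 0.2, 0.0]

ranked = rank_tokens(feature_direction, embeddings)
top, median, bottom = ranked[:2], ranked[len(ranked) // 2], ranked[-2:]
print("top:", top, "median:", median, "bottom:", bottom)
```

The top of the ranking is what makes a feature readable; the median and bottom entries are shown for completeness, matching the observation that they tend not to be interpretable.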

3/ The token "deaf" decompos...

Claude 3.7's annoying personality is the first example of accidentally misaligned AI making my life worse. Claude 3.5/3.6 was renowned for its superior personality that made it more pleasant to interact with than ChatGPT.

3.7 has an annoying tendency to do what it thinks you should do, rather than following instructions. I've run into this frequently in two coding scenarios:

- In Cursor, I ask it to implement some function in a particular file. Even when explicitly instructed not to, it guesses what I want to do next and changes other parts of the code as well

BBC Tech News, as far as I can tell, has not covered any of the recent OpenAI drama about NDAs or employees leaving.

But Scarlett Johansson 'shocked' by AI chatbot imitation is now the main headline.

LessWrong LLM feature idea: Typo checker

It's becoming a habit for me to run anything I write through an LLM to check for mistakes before I send it off.

I think the hardest part of implementing this feature well would be to get it to only comment on things that are definitely mistakes / typos. I don't want a general LLM writing feedback tool built-in to LessWrong.

If you missed it, Veo 3, Google's text-to-video model, was just launched and is very impressive. And the videos have audio now.

https://www.reddit.com/r/ChatGPT/comments/1krmsns/wtf_ai_videos_can_have_sound_now_all_from_one/

There are two types of people in this world.

There are people who treat the lock on a public bathroom as a tool for communicating occupancy and a safeguard against accidental attempts to enter when the room is unavailable. For these people the standard protocol is to discern the likely state of engagement of the inner room and then tentatively proceed inside if they detect no signs of human activity.

And there are people who view the lock on a public bathroom as a physical barricade with which to temporarily defend possessed territory. They start by giving t...

Rationalist twitter rage-bait recipe:

Rationalist: *reasonable, highly decoupling point about the Holocaust*

Everyone: *highly coupling rage*

Rationalist: *shocked pikachu face*