Reminds me of the Mirror of Erised:

This mirror gives us neither knowledge nor truth. Men have wasted away in front of it, even gone mad.

It does not do to dwell on dreams, and forget to live.

To the credit of ChatGPT, or any multimodal LLM, they're much more versatile than the Mirror. The Mirror does one thing very well, showing you your heart's true desire. Optionally, two, if you want to use it as a storage vault of questionable security!

The fact that this type of thing tends to get such large emotional responses out of people makes me wonder to what extent rendering counterfactuals would be useful to combat the lack of imagination for big decisions that actually are ahead of someone?

I have the exact opposite reaction. I want to know if I had made a mistake in a major life decision in the past, so I can do better next time. This includes relationship decisions.

Even better, I want to be able to simulate future decisions and their consequences.

To add: Emotions are also information. If you feel strongly about something through visualising images, more so than if you had just written about it in text, it’s possible this information is of value.

The specifics obfuscated to hopefully get past ChatGPT's filter

You don't need any special prompt, ChatGPT will not refuse to produce images of people's potential children.

If people have such a strong reaction to AI-generated images of potential children, I think more and not fewer people should be trying this out, to inspire themselves!

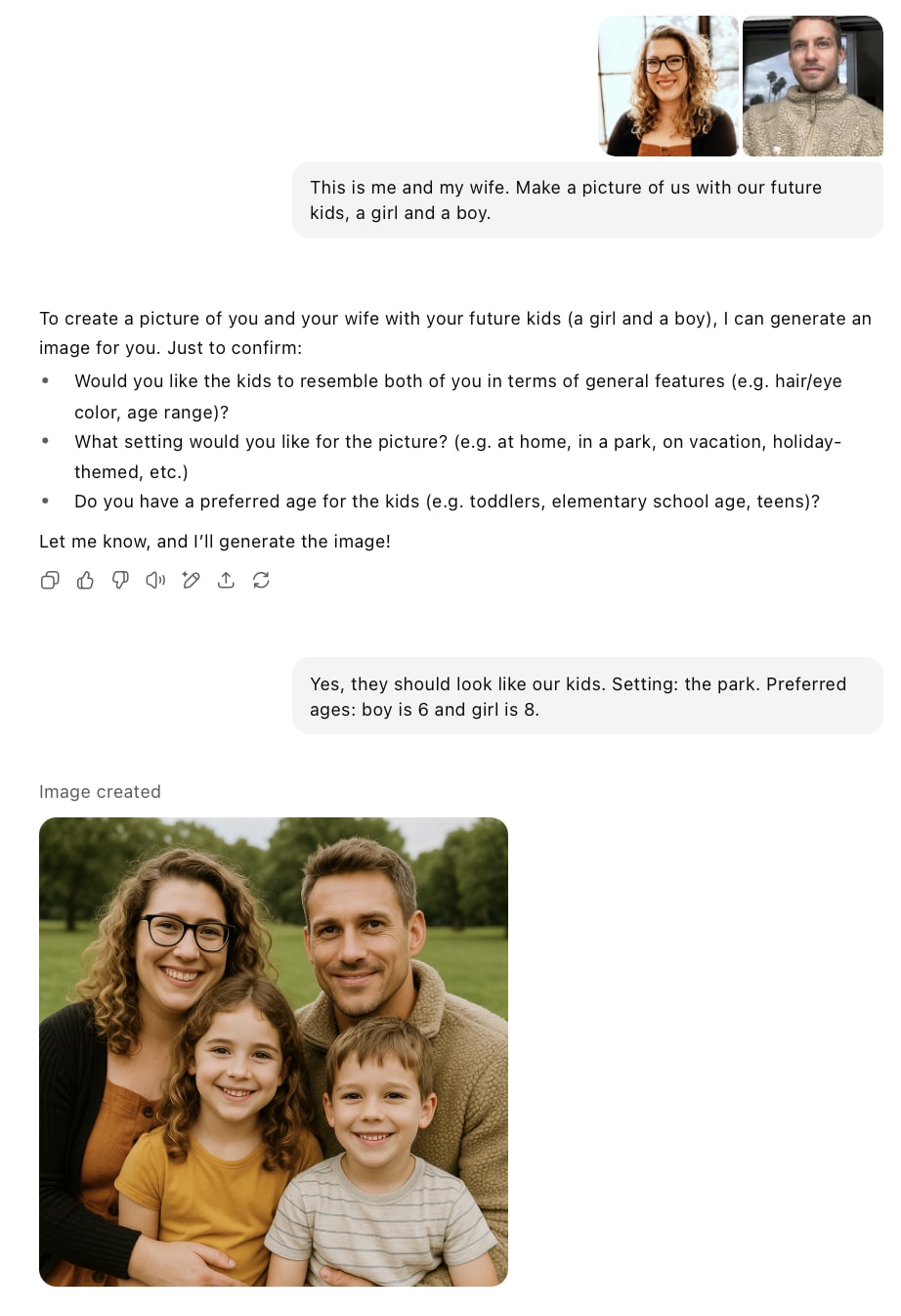

Example: (initial pictures are random people from Google Images - I searched "random man" and "random woman")

You're correct. When GPT's native image gen came out, it absolutely refused to touch kids. I didn't realize that they'd changed it (at least because I never had the reason to try) till I attempted this ill-fated experiment.

If the moral lesson here is not to render counterfactuals, because it's too painful to do so, then I sympathize. But if the moral lesson is not to do this because it is a dangerous new emotional exploit spawned by a cursed technology that mankind was not meant to know, then I wonder if you might be overstating the novelty some.

People have been rendering their counterfactuals for thousands of years. Before we had ChatGPT to draw the pictures for us, we would just draw the pictures ourselves, or ask another human to draw them for us. Or we would render them in words and let our imaginations draw the pictures. The fidelity is lower, but the feeling is the same. Even cartoon stick figures can make people weep.

I think interacting with the cutting edge of a new technology sometimes makes things seem newer than they are. And LLMs do add an element of creepy, uncanny computer noise to the dream. But ruminating on what-could-have-been has always been a painful, self-flagellating thing to do.

I hope you find peace.

It's the level of detail that's the real risk. Sora or Veo would generate motion video and audio, bringing even more false life into the counterfactual. People get emotionally attached to characters in movies; imagine trying not to form attachments to interactive videos of your own counterfactual children who call you "Mom" or "Dad". Your dead friend or relative could emotionally-believably talk to you from beyond the grave.

That's the kind of thing only the ultra-rich could have conceived of having someone fabricate for them in the past, and it would have come with at least some checks and balances. Now kids in elementary school can necromance their dead parent or whatever.

Realistically, I think it will become "normal" to have your counterfactual worlds easily accessible in this way and the new generations will simply adapt and develop internal safeguards against getting exploited by it, much like we learn how to deal with realistic dreams. I honestly don't know about the rest of us hitting it later in adulthood.

Now kids in elementary school can necromance their dead parent or whatever.

Ouch! I just imagine those crazy people who want to post on Less Wrong about discovering the true nature of consciousness... but in my imagination, they are also teenagers, and their dead parents (impersonated by ChatGPT) keep telling them: "you are going to be a great scientist, you just have to believe in yourself".

Thank you.

The issue, as I see it, isn't just the ability to do so. Theoretically, I could have taken the picture of the two of us, gone to a human artist and gotten a portrait with kids commissioned. I would have been exceedingly unlikely to go down that route, or even explore alternatives like using old fashioned Photoshop for that purpose. The option of just uploading a single image and a short prompt, and it taking a mere few seconds to conjure exactly what I'd envisioned? That temptation was too alluring to resist.

Ease of access makes all the difference: a quantitative change can become qualitative. Honey was a delicacy to be savored, but now, we can get something as sweet or sweeter in minutes, delivered to the comfort of our homes.

I try not to make sweeping declarations here, I'm a technophile, and I think that the ability to conjure arbitrary, high quality images is amazing. A small subset of that capability can cause immense pain, or at least did to me. The rest of the time, I'm still marveling at my ability to illustrate ideas I'd never have hoped to see with my own two eyes. Even as painful as this was, a small part of me appreciates the ability to have seen how things might have gone differently.

Zvi's post "Levels of Friction" is relevant here. In his terms, AI moves the friction level of rendering your counterfactuals from level 2 (expensive and annoying) to level 1 (simple and easy).

In my defense, I did put up CW tags. I don't use those very often, but I felt they were appropriate here.

CW: Digital necromancy, the cognitohazard of summoning spectres from the road not taken

There is a particular kind of modern madness, so new it has yet to be named. It involves voluntarily feeding your own emotional entrails into the maw of an algorithm. It’s a madness born of idle curiosity, and perhaps a deep, masochistic hunger for pain. I indulged in it recently, and the result sits in my mind like a cold stone.

Years ago, there was a woman. We loved each other with the fierce, optimistic certainty of youth. In the way of young couples exploring the novelty of a shared future, we once stumbled upon one of those early, crude image generators - the kind that promised to visualize the genetic roulette of potential offspring. We fed it our photos, laughing at the absurdity, yet strangely captivated. The result, a composite face with hints of her eyes and jawline, and the contours of my cheeks. The baby struck us both as disarmingly cute. A little ghost of possibility, rendered in pixels. The interface was lacking, this being the distant year of 2022, and all we could do was laugh at the image, and look each other in the eyes that formed a kaleidoscope of love.

Life, as it does, intervened. We weren’t careful. A positive test, followed swiftly by the cramping and bleeding that signals an end before a beginning. The dominant emotion then, I must confess with the clarity of hindsight and the weight of shame, was profound relief. We were young, financially precarious, emotionally unmoored. A child felt like an accidentally unfurled sail catching a gale, dragging us into a sea we weren’t equipped to navigate. The relief was sharp, immediate, and utterly rational. We mourned the event, the scare, but not the entity. Not yet. I don't even know if it was a boy or a girl.

Time passed. The relationship ended, as young love often does, not with a bang but with the slow erosion of incompatible trajectories. Or perhaps that's me being maudlin; in the end, it went down in flames, and I felt immense relief that it was done. Life moved on. Occasionally, my digital past haunted me. Essays written that mentioned her, half-joking parentheticals where I remembered asking for her input. Google Photos choosing to 'remind' me of our time together (I never had the heart to delete our images).

Just a while back, another denizen of this niche internet forum I call home spoke about their difficulties conceiving. Repeated miscarriages, they said, and they were trawling the literature and afraid that there was an underlying chromosomal incompatibility. I did my best to reassure them, to the extent that reassurance was appropriate without verging into kind lies.

But you can never know what triggers it, that urge to pick at an emotional scab or poke at the bruise she left on my heart. Someone on Twitter had, quite recently, shown off an example of Anakin and Padme with kids that looked just like them, courtesy of tricking ChatGPT into relaxing its content filters.

Another person, wiser than me, had promptly pointed out that modernity could produce artifacts that would once have been deemed cursed and summarily entombed. I didn't listen.

And knowing, with the cold certainty that it was a terrible idea, that I'd regret it, I fired up ChatGPT. Google Photos had already surfaced a digital snapshot of us, frozen in time, smiling at a camera that didn’t capture the tremors beneath. I fed it the prompt: "Show us as a family. With children." (The specifics obfuscated to hopefully get past ChatGPT's filter, and also because I don't want to spread a bad idea. You can look that up if you really care)

The algorithm, that vast engine of matrix multiplications and statistical correlations that often reproduces wisdom, did its work. It analyzed our features, our skin tones, the angles of our faces. It generated an image. Us, but not just the two of us. A boy with her unruly hair and my serious gaze. A girl with her dimples and my straighter mop. They looked like us. They looked like each other. They looked real.

They smiled as the girl clung to her skirt, a shy but happy face peeking out from the side. The boy perched in my arms, held aloft and without a care in the world.

It wasn't perfect, ChatGPT's image generation, for all its power, has clear tells. It's not yet out of the uncanny valley, and is deficient when compared to more specialized image models.

And yet.

My brain, the ancient primate wetware that has been fine-tuned for millions of years to recognize kin and feel profound attachment, does not care about any of this. It sees a plausible-looking child who has her eyes and my nose, and it lights up the relevant circuits with a ruthless, biological efficiency. It sees a little girl with her mother’s exact smile, and it runs the subroutine for love-and-protect.

The part of my mind that understands linear algebra is locked in a cage, screaming, while the part of my mind that understands family is at the controls, weeping.

I didn't weep. But it was close. As a doctor, I'm used to asking people to describe their pain, even if that qualia has a certain *je ne sais quoi*. The distinction, however artificial, is still useful. This ache was dull. Someone punched me in the chest and proved that the scars could never have the tensile strength of unblemished tissue. That someone was me.

This is a new kind of emotional exploit. We’ve had tools for evoking memory for millennia: a photograph, a song, a scent. But those are tools for accessing things that were, barring perhaps painting. Generative AI is a tool for rendering, in optionally photorealistic detail, things that never were. It allows you to create a perfectly crafted key to unlock a door in your heart that you never knew existed, a door that opens onto an empty room.

What is the utility of such an act? From a rational perspective, it’s pure negative value. I have voluntarily converted compute cycles into a significant quantity of personal sadness, with no corresponding insight or benefit. At the time of writing, I've already poured myself a stiff drink.

One might argue this is a new form of closure. By looking the ghost directly in the face, you can understand its form and, perhaps, finally dismiss it. This is the logic of exposure therapy. But it feels more like a form of self-flagellation. A way of paying a psychic tax on a past decision that, even if correct, feels like it demands a toll of sorrow. The relief I felt at the miscarriage all those years ago was rational, but perhaps some part of the human machine feels that such rationality must be punished. The AI provides an exquisitely calibrated whip for the job.

The broader lesson is not merely, as the old wisdom goes, to "let bygones be bygones." That advice was formulated in a world where bygones had the decency to remain fuzzy and abstract. The new, updated-for-the-21st-century maxim might be: Do not render your counterfactuals.

Our lives are a series of branching paths. Every major decision (career, relationship, location) creates a ghost-self who took the other route. For most of human history, that ghost-self remained an indistinct specter. You could wonder, vaguely, what life would have been like if you’d become a doctor, but you couldn’t *see* it.

The two children in the picture on my screen are gorgeous. They are entirely the product of matrix multiplications and noise functions, imaginary beings fished from nearly infinite latent space. And I know, with a certainty that feels both insane and completely true, that I could have loved them.

It hurts so fucking bad. I tell myself that the pain is a signal that the underlying system is still working. It would be worse if I stood in the wreckage of *could have been*, and felt nothing at all.

I look at those images again. The boy, the girl. Entirely fantasized. Products of code, not biology. Yet, the thought persists: "I think they were gorgeous and I could have loved them." And that’s the cruelest trick of all. The AI didn't just show me faces; it showed me the capacity for love that still resides within me, directed towards phantoms. It made me mourn not just the children, but the version of myself that might have raised them, alongside a woman I no longer know.

I delete them. I pour myself another drink, and say that it's in their honor.

(You may, if you please, like this on my [Substack](https://open.substack.com/pub/ussri/p/do-not-render-your-counterfactuals?utm_source=share&utm_medium=android&r=a71by))