I just made some dinner and was thinking about how salt and spices[1] now are dirt cheap, but throughout history they were precious and expensive. I did some digging and apparently low and middle class people didn't even really have access to spices. It was more for the wealthy.

Salt was important mainly to preserve food. They didn't have fridges back then! So even poor people usually had some amount of salt to preserve small quantities of food, but they had to be smart about how they allocated it.

In researching this I came to realize that throughout history, food was usually pretty gross. Meats were partially spoiled, fats went rancid, grains were moldy. This would often cause digestive problems. Food poisoning was a part of life.

Could you imagine! That must have been terrible!

Meanwhile, today, not only is it cheap to access food that is safe to eat, it's cheap to use basically as much salt and spices as you want. Fry up some potatoes in vegetable oil with salt and spices. Throw together some beans and rice. Incorporate a cheap acid if you're feeling fancy -- maybe some malt vinegar with the potatoes or white vinegar with the beans and rice. It's delicious!

I suppose there are ...

presumably ancient people weren't constantly sick.

I think you presume incorrectly. People in primitive cultures spend a lot of time with digestive issues and it's a major cause of discomfort, illness, and death.

I wish there were more discussion posts on LessWrong.

Right now it feels like it weakly if not moderately violates some sort of cultural norm to publish a discussion post (similar but to a lesser extent on the Shortform). Something low effort of the form "X is a topic I'd like to discuss. A, B and C are a few initial thoughts I have about it. What do you guys think?"

It seems to me like something we should encourage though. Here's how I'm thinking about it. Such "discussion posts" currently happen informally in social circles. Maybe you'll text a friend. Maybe you'll bring it up at a meetup. Maybe you'll post about it in a private Slack group.

But if it's appropriate in those contexts, why shouldn't it be appropriate on LessWrong? Why not benefit from having it be visible to more people? The more eyes you get on it, the better the chance someone has something helpful, insightful, or just generally useful to contribute.

The big downside I see is that it would screw up the post feed. Like when you go to lesswrong.com and see the list of posts, you don't want that list to have a bunch of low quality discussion posts you're not interested in. You don't want to spend time and energy sifting...

I remember a hygienist at the dentist once telling me that toothpaste isn't a huge deal and that it's the mechanical friction of the toothbrush that provides most of the value. Since being told that, after a meal, I often wet my toothbrush with water and brush for 10 seconds or so.

I just researched it some more and from what I understand, after eating, food debris that remains on your teeth forms a sort of biofilm. Once the biofilm is formed you need those traditional 2 minute long tooth brushing sessions to break it down and remove it. But it takes 30+ minutes to form the biofilm. Before it is formed you don't need long brushing sessions to significantly reduce the amount of debris that is left on your teeth. So then, these short informal brushing sessions after meals seem like a great "bang for your buck" in terms of reward vs effort.

(See my other comment)

Retracted: Original comment about how brushing after eating acidic food may be bad

I remember something about not brushing immediately after eating though. Here is a random article I googled. This says don't brush after eating acidic food, not sure about the general case.

https://www.cuimc.columbia.edu/news/brushing-immediately-after-meals-you-may-want-wait

“The reason for that is that when acids are in the mouth, they weaken the enamel of the tooth, which is the outer layer of the tooth,” Rolle says. Brushing immediately after consuming something acidic can damage the enamel layer of the tooth.

Waiting about 30 minutes before brushing allows tooth enamel to remineralize and build itself back up.

That's a good call out about acidic food. I remember hearing that too and so don't brush after eating something pretty acidic. Also because my teeth are sensitive to acid and it hurts when I brush after eating something pretty acidic.

For the general case, this excerpt from the article sounded like it was indicating that you should brush after eating.

We’ve all heard that it’s best to brush our teeth after meals. But in some cases, did you know it is best to hold off brushing, at least temporarily?

There is a concept related to scout mindset and soldier mindset (helpful outline) that I'd like to explore. Let's call it an "adversarial mindset".

From what I gather, both scout mindset and soldier mindset are about beliefs. They apply to people who are looking at the world through some belief-oriented frame. Someone who takes a soldier mindset engages in directionally motivated reasoning and asks "Can/must I believe?" whereas a scout asks "Is it true?".

On the other hand, someone who is in an adversarial mindset is looking through some sort of "combat-oriented frame". If you say "I think your belief that X is true is wrong" to someone in an adversarial mindset, they might infer subtext of something like "You're dumb".

But despite being in this frame, they likely won't respond by saying "Hey, that was mean of you to say I'm dumb. I'm not dumb, I'm smart!" Instead, they'll likely respond by saying something closer to the object level like "Well I'm pretty sure it's right", but the subtext will be something more combative like "I won't let you push me around like that!".

Adversarial mindset isn't about beliefs, it's about self-esteem. Maybe?

There are various phenomena that make me think...

I just learned some important things about indoor air quality after watching Why Air Quality Matters, a presentation by David Heinemeier Hansson, the creator of Ruby on Rails. It seems like something that is both important and under the radar, so I'll brain dump + summarize my takeaways here, but I encourage you to watch the whole thing.

- He said he spent three weeks researching and experimenting with it full time. I place a pretty good amount of trust in his credibility here, based on a) my prior experiences with his work and b) him seeming like he did pretty thorough research.

- It's easy for CO2 levels to build up. We breathe it out and if you're not getting circulation from fresh air, it'll accumulate.

- This has pretty big impacts on your cognitive function. It seems similar to not getting enough sleep. Not getting enough sleep also has a pretty big impact on your cognitive function. And perhaps more importantly, it's something that we are prone to underestimating. It feels like we're only a little bit off, when in reality we're a lot off.

- There are things called volatile organic compounds, aka VOCs. Those are really bad for your health. They come from a variety of sources. Cleaning pro

Project idea: virtual water coolers for LessWrong

Previous: Virtual water coolers

Here's an idea: what if there was a virtual water cooler for LessWrong?

- There'd be Zoom chats with three people per chat. Each chat is a virtual water cooler.

- The user journey would begin by the user expressing that they'd like to join a virtual water cooler.

- Once they do, they'd be invited to join one.

- I think it'd make sense to restrict access to users based on karma. Maybe only 100+ karma users are allowed.

- To start, that could be it. In the future you could do some investigation into things like how many people there should be per chat.
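As a rough sketch of the matching step described in the list above (not an actual implementation): the `User` type, the queue, and the function name are all hypothetical, and the 100-karma cutoff and three-person chat size are just the starting values floated above.

```python
from dataclasses import dataclass

# Hypothetical starting values taken from the bullets above.
MIN_KARMA = 100  # "maybe only 100+ karma users are allowed"
CHAT_SIZE = 3    # "three people per chat"


@dataclass
class User:
    name: str
    karma: int


def form_water_coolers(waiting, min_karma=MIN_KARMA, chat_size=CHAT_SIZE):
    """Group eligible users into chats, in the order they asked to join.

    Returns (chats, still_waiting): the full chats, plus eligible users who
    stay in the queue until enough people arrive to fill another chat.
    """
    eligible = [u for u in waiting if u.karma >= min_karma]
    full = len(eligible) - len(eligible) % chat_size
    chats = [eligible[i:i + chat_size] for i in range(0, full, chat_size)]
    return chats, eligible[full:]


# Example queue: one user is below the karma cutoff, one is left waiting.
queue = [User("a", 250), User("b", 40), User("c", 120),
         User("d", 900), User("e", 101)]
chats, still_waiting = form_water_coolers(queue)
print([[u.name for u in chat] for chat in chats])
print([u.name for u in still_waiting])
```

Keeping the chat size and karma cutoff as parameters would make it easy to experiment later with how many people there should be per chat.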

Seems like an experiment that is both cheap and worthwhile.

If there is interest I'd be happy to create an MVP.

(Related: it could be interesting to abstract this and build a sort of "virtual water cooler platform builder" such that eg. LessWrong could use the builder to build a virtual water cooler platform for LessWrong and OtherCommunity could use the builder to build a virtual water cooler platform for their community.)

In How to Get Startup Ideas, Paul Graham provides the following advice:

Live in the future, then build what's missing.

Something that feels to me like it's present in the future and missing in today's world: OkCupid for friendship.

Think about it. The internet is a thing. Billions and billions of people have cheap and instant access to it. So then, logistics are rarely an obstacle for chatting with people.

The actual obstacle in today's world is matchmaking. How do you find the people to chat with? And similarly, how do you communicate that there is a strong match so that each party is thinking "oh wow this person seems cool, I'd love to chat with them" instead of "this is a random person and I am not optimistic that I'd have a good time talking to them".

This doesn't really feel like such a huge problem though. I mean, assume for a second that you were able to force everyone in the world to spend an hour filling out some sort of OkCupid-like profile, but for friendship and conversation rather than romantic relationships. From there, it seems doable enough to figure out whatever matchmaking algorithm.

I think the issue is more so getting people to fill out the survey in the first place. T...

I would like to see people write high-effort summaries, analyses and distillations of the posts in The Sequences.

When Eliezer wrote the original posts, he was writing one blog post a day for two years. Surely you could do a better job presenting the content that he produced in one day if you, say, took four months applying principles of pedagogy and iterating on it as a side project. I get the sense that more is possible.

This seems like a particularly good project for people who want to write but don't know what to write about. I've talked with a variety of people who are in that boat.

One issue with such distillation posts is discoverability. Maybe you write the post, it receives some upvotes, some people see it, and then it disappears into the ether. Ideally when someone in the future goes to read the corresponding sequence post they would be aware that your distillation post is available as a sort of sister content to the original content. LessWrong does have the "Mentioned in" section at the bottom of posts, but that doesn't feel like it is sufficient.

In public policy, experimenting is valuable. In particular, it provides a positive externality.

Let's say that a city tests out a somewhat quirky idea like paying NIMBYs to shut up about new housing. If that policy works well, other cities benefit because now they can use and benefit from that approach.

So then, shouldn't there be some sort of subsidy for cities that test out new policy ideas? Isn't it generally a good thing to subsidize things that provide positive externalities?

I'm sure there is a lot to consider. I'm not enough of a public policy person to know what the considerations are though or how to weigh them.

Every day I check Hacker News. Sometimes a few times, sometimes a few dozen times.

I've always felt guilty about it, like it is a waste of time and I should be doing more productive things. But recently I've been feeling a little better about it. There are things about coding, design, product, management, QA, devops, etc. etc. that feel like they're "in the water" to me, where everyone mostly knows about them. However, I've been running into situations where people turn out to not know about them.

I'm realizing that they're not actually "in the water", and that the reason I know about them is probably because I've been reading random blog posts from the front page of Hacker News every day for 10 years. I probably shouldn't have spent as much time doing this as I have, but I feel good about the fact that I've gotten at least something out of it.

Something kinda scary happened a few days ago. I was walking my dog and was trying to cross at an intersection. There's a stoplight which was red, and a crosswalk which was on "walk". But this car was approaching the crosswalk really quickly. You could hear the motor rev and the driver was accelerating.

I didn't enter the crosswalk. I put my hand up to catch his attention. He came to a quick stop. I gave a shrug like "what are you doing". He gave a similar shrug back. Then after I crossed, he floored it right through the stop light that was still red, only to come to a stop light one block away that was also red.

It wasn't actually a close call. I looked before entering the crosswalk and wasn't at risk of getting hit. But I feel like it was a close call in a different sense.

I'm a very careful street crosser, but even I fail to look both ways sometimes when the crosswalk signal is on "walk", especially when visibility is poor. If there's a parked car or something that is blocking my view of oncoming traffic, sometimes I just go. What if this were one of those situations?

And furthermore, I've had a ton of similar encounters where a car seemingly would have hit and killed me if I weren...

There are some stats for this here: https://www.trafficsafetymarketing.gov/safety-topics/pedestrian-safety

- 68,244 pedestrians were injured in traffic crashes in 2023

- Alcohol use was reported in 46% of all fatal pedestrian crashes in 2023, with a blood alcohol concentration of .01 for the driver and/or the pedestrian.

- In 2023, urban areas had a pedestrian fatality rate much higher (84%) than rural areas (16%).

- 74% of the pedestrian fatalities occurred at locations that were not intersections, 17% occurred at intersections, and the remaining 9% occurred at other locations in 2023.

- More pedestrian fatalities occurred in the dark (77%) than in daylight (19%), dusk (2%), and dawn (2%) in 2023.

So roughly one in every 5,000 people in the US gets hit by a car while walking every year (68,244 injuries against a population of about 335 million).
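A quick back-of-the-envelope check of that per-person rate. The injury count is the one quoted above; the population figure (about 335 million for the US in 2023) is my own assumption and does not come from the linked page.

```python
# Injury count quoted in the list above (2023).
pedestrians_injured = 68_244
# Assumption: US population of roughly 335 million in 2023.
us_population = 335_000_000

annual_rate = pedestrians_injured / us_population
one_in_n = round(1 / annual_rate)

print(f"about {annual_rate * 10_000:.1f} per 10,000 people, or 1 in {one_in_n:,}")
```

This only counts reported injuries, so it is an order-of-magnitude figure rather than a precise risk estimate.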

Something I've heard about car crashes in general is that they're usually the confluence of two mistakes, so you have one driver do something crazy like swerve in front of another car too closely and the other driver doesn't do anything about it. If you assume both actions are equally likely, you'd expect the probability of "close calls" (one driver does something crazy) to be about the square root of the probability of an actual cras...
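To spell out the square-root claim as a rough sketch: suppose, as a simplifying assumption, that each of the two mistakes happens independently and with the same probability $p$ per encounter. The symbol $p$ and the independence assumption are mine, not something stated in the comment.

```latex
P(\text{crash}) = p \cdot p = p^2
\qquad\Longrightarrow\qquad
P(\text{close call}) \approx p = \sqrt{P(\text{crash})}
```

Under this toy model, a one-in-a-million chance of a crash would go along with roughly a one-in-a-thousand chance of a close call.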

Against "yes and" culture

I sense that in "normie cultures"[1] directly, explicitly, and unapologetically disagreeing with someone is taboo. It reminds me of the "yes and" from improv comedy.[2] From Wikipedia:

"Yes, and...", also referred to as "Yes, and..." thinking, is a rule-of-thumb in improvisational comedy that suggests that an improviser should accept what another improviser has stated ("yes") and then expand on that line of thinking ("and").

If you want to disagree with someone, you're supposed to take a "yes and" approach where you say something somewhat agreeable about the other person's statement, and then gently take it in a different direction.

I don't like this norm. From a God's Eye perspective, if we could change it, I think we probably should. Doing so is probably impractical in large groups, but might be worth considering in smaller ones.

(I think this really needs some accompanying examples. However, I'm struggling to come up with any, or at least any I'm comfortable sharing publicly.)

[1] The US, at least. It's where I live. But I suspect it's like this in most Western cultures as well.

[2] See also this Curb Your Enthusiasm clip.

I've started to watch the YouTube channel Clean That Up. It started with me pragmatically searching for how to clean something up in my apartment that needed cleaning, but then I went down a bit of a rabbit hole and watched a bunch of his videos. Now they appear in my feed and I watch them periodically. To my surprise, I actually quite enjoy them.

It's made me realize how much skill it takes to clean. Nothing in the ballpark of requiring a PhD, but I dunno, it's not trivial. Different situations call for different tools, techniques and cleaning materials. But the thing that really caught my attention is simply knowing what to clean. In watching his videos I often am like "Oh, I wouldn't have thought to clean that but I'm glad that I know now."

One example that comes to my mind is trash cans. Previously I thought of trash cans as things you line with bags. You put the garbage in the bag and take the bag out when the garbage is full. The garbage never touches the can, so you don't need to clean the can. This makes sense in theory, but in practice things don't always work out that smoothly.

I just moved into a new (furnished) apartment and the garbage cans were all gross. I had to take t...

For the past week my girlfriend's sister has been visiting us along with her 1.5 year old baby. The baby is very cute and I've really enjoyed some of my time with her, but I've also found it pretty overwhelming and chaotic at times. Overall it's made me more confident that I don't want kids.

It's also made me think that it'd make a ton of sense for someone who does want kids to spend a week or so babysitting in order to help test that assumption.

- It seems hard to be confident that you do in fact want kids.

- Anecdotally I feel like there are a fair amount of examples of people who have kids and end up regretting it, at least to a meaningful degree.

- Babysitting for a week is a relatively cheap test.

- The decision to have kids isn't really a reversible one (once they're born; most of the time).

Perhaps this makes more sense when you think about it more in the abstract. Like if you want to make a decision that is very, very important, hard to be confident about, not really reversible, and relatively cheap to test, it makes sense to test it.

I suppose finding someone to babysit for a week might be hard. The parents would need to trust you. So I guess ideally you'd be able to babysit for a f...

>> Anecdotally I feel like there are a fair amount of examples of people who have kids and end up regretting it, at least to a meaningful degree.

The social desirability bias against saying one regrets having kids is very intense. So, with the caveat that this is a highly biased sample: I personally have never met a person in real life who regrets having children. I've heard parents complain, for sure, but true regrets seem to be rare.

Sure, some of those. But also I just expected parenthood to change me a bunch to be better suited to it. Like, it's a challenge such that rising to it transforms you. With babysitting you're just skipping to a random bit pretty far into the process, not already having been transformed.

Gotcha. That makes sense that this transformation should be factored in. However, it still feels to me like despite the possibility of transformation, babysitting would still likely be useful.

As an example, today I was babysitting while my girlfriend and her sister went out. The baby was eating lunch and got food everywhere, including her hair. And when I tried to clean it she'd cry and push me away. Before this I knew in principle that this sort of thing happens but I didn't realize how overwhelming and stressful I personally would find it.

As another example, I've been feeling anxious that the baby will get sick or hurt under my watch. On Friday I had her on my lap during a work call. She was holding my mousepad in front of her face as if she was camera shy, which was cute. Then there was a point where I spoke for 30 seconds or so. During this time I lost track of what the baby was doing. Turns out she was eating the gel for the hand rest part of the mousepad! There's been a handful of similar situations where a moment of losing focus led to her doing something potentially harmful. Before this week I didn't understand how frequently this sort of thing happens or how anxious it'd m...

One thing is, babies very gradually get harder in exactly the way you describe! Like, at first by default they breastfeed, and don't have teeth, which is at the very least highly instinctive to learn. Then they eat a tiiiny bit of solid food, like a bite or two once a day, to train you. So you have gotten way stronger at "baby eating challenges" by the time the baby can e.g. throw food. Likewise they'll very rarely try to put stuff in their mouths early on, then really gradually more and more, so you hone that instinct too. Even diapers don't smell bad the first couple of months! Hard to overestimate the effects of the extremely instinct compliant learning curve.

Something frustrating happened to me a week or two ago.

- I was at the vet for my dog.

- The vet assistant (I'm not sure if that's the proper term) asked if I wanted to put my dog on these two pills, one to protect against heartworm and another to protect against fleas.

- I asked what heartworm is, what fleas are, and what the pros and cons are. (It became clear later in the conversation that she was expecting a yes or no answer from me and perhaps had never been asked before about pros and cons, because she seemed surprised when I asked for them.)

- Iirc, she said something about there not really being any cons (I'm suspicious). For heartworm the dogs can die of it so the pros are strong. For fleas, it's just an annoyance to deal with, not really dangerous.

- I asked how likely it is for my dog to be exposed to fleas given that we're in a city and not eg. a forest.

- The assistant responded with something along the lines of "Ok, so we'll just do the heartworm pill then."

- I clarified something along the lines of "No, that wasn't a rhetorical question. I was actually interested in hearing about the likelihood. I have no clue what it is; I didn't mean to imply that it is low."

I wish that we had a culture of words being used more literally.

A friend of mine from the local LessWrong meetup created Democracy Chess - a game where the human players play against an AI, vote on moves, and the move with the most votes is played. Yesterday a bunch of us gave it a try.

In brainstorming how it could be better I had an interesting thought: what if there was a $10 buy in where if we win we get our money back but if we lose the money is donated to some pre-agreed upon charity?

I could see something like that being fun. Having something at stake can make games more fun. And along the lines of charity runs, it can be fun to get together with a group of people and raise/donate money for a cause.

My angle here is that if something like this is genuinely fun and appealing, it could be a good way to generate money for charities. Along the lines of 1,000 True Fans, if 1,000 people spent $200/year on this (~$17/month) and won half the time, that'd be $100k in charitable donations a year. A meaningful sum.
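The arithmetic behind that estimate, as a small sketch. The function name is made up for illustration, and it assumes the rule described above: a buy-in is refunded on a win and donated on a loss.

```python
def expected_annual_donations(players, spend_per_year, win_rate):
    """Total donated per year when buy-ins are refunded on wins and donated on losses."""
    return players * spend_per_year * (1 - win_rate)


# The numbers from the paragraph above: 1,000 players, $200/year each, winning half the time.
total = expected_annual_donations(players=1_000, spend_per_year=200, win_rate=0.5)
print(f"${total:,.0f} donated per year")
print(f"${200 / 12:.2f} per player per month")
```

Plugging in other win rates shows how sensitive the total is to game difficulty: a game that players win 80% of the time would raise only $40k on the same spending.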

I don't think Democracy Chess would be the ideal game. I think something like trivia would make more sense. Something that has more mass appeal and is more social. Trivia is particularly appealing since it'd be easy to build a prototype for...

Wow, I just watched this video where Feynman makes an incredible analogy between the rules of chess and the rules of our physical world.

You watch the pieces move and try to figure out the underlying rules. Maybe you come up with a rule about bishops needing to stay on the same color, and that rule lasts a while. But then you realize that there is a deeper rule that explains the rule you've held to be true: bishops can only move diagonally.

I'm butchering the analogy though and am going to stop talking now. Just go watch the video. It's poetic.

I've noticed that there's a pretty big difference in the discussion that follows from me showing someone a draft of a post and asking for comments and the discussion in the comments section after I publish a post. The former is richer and more enjoyable whereas the latter doesn't usually result in much back and forth. And I get the sense that this is true for other authors as well.

I guess one important thing might be that with drafts, you're talking to people who you know. But I actually don't suspect that this plays much of a role, at least on LessWrong. As an anecdote, I've had some incredible conversations with the guy who reviews drafts of posts on LessWrong for free and I had never talked to him previously.

I wonder what it is about drafts. I wonder if it can or should be incorporated into regular posts.

I spent the day browsing the website of Josh W. Comeau yesterday. He writes educational content about web development. I am in awe.

For so many reasons. The quality of the writing. The clarity of the thinking. The mastery of the subject matter. The metaphors. The analogies. The quality and attention to detail of the website itself. Try zooming in to 300%. It still looks gorgeous.

One thing that he's got me thinking about is the place that sound effects and animation have on a website. Previously my opinion was that you should usually just leave 'em out. Focus on more important things. It's hard to implement them well; they usually just make the site feel tacky. They also add a decent amount of complexity.

But Josh does such a good job of utilizing sound effects and animation! Try clicking one of the icons on the top right. Or moving your cursor around those dots at the top of the home page. Or clicking the "heart" button at the end of the "Table of contents" section for one of his posts. It's so satisfying.

I'm realizing that my previous opinion was largely a cached thought. When I think about it now, I arrive at a different perspective. Right now I'm suspecting that both sound effects ...

Something I've always wondered about is what I'll call sub-threshold successes. Some examples:

- A stand up comedian is performing. Their jokes are funny enough to make you smile, but not funny enough to pass the threshold of getting you to laugh. The result is that the comedian bombs.

- Posts or comments on an internet forum are appreciated but not appreciated enough to get people to upvote.

- A restaurant or product is good, but not good enough to motivate people to leave ratings or write reviews.

It feels to me like there is an inefficiency occurring in these sor...

A standard trick is to add noise to the signal to (stochastically) let parts get over the hump.
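Here is a minimal simulation of that trick, under made-up numbers: a response (a laugh, an upvote, a review) only happens when perceived quality exceeds a fixed threshold, and "noise" is modeled as Gaussian jitter on each person's perception. The threshold, quality values, and noise level are all hypothetical.

```python
import random

THRESHOLD = 1.0  # hypothetical bar for a laugh / upvote / review


def response_rate(quality, noise_sd, trials=10_000, seed=0):
    """Fraction of trials in which quality plus Gaussian noise clears the threshold."""
    rng = random.Random(seed)
    hits = sum(quality + rng.gauss(0, noise_sd) > THRESHOLD for _ in range(trials))
    return hits / trials


# All three quality levels are below the threshold.
for quality in (0.6, 0.8, 0.95):
    quiet = response_rate(quality, noise_sd=0.0)
    noisy = response_rate(quality, noise_sd=0.3)
    print(f"quality={quality}: no noise -> {quiet:.2f}, with noise -> {noisy:.2f}")
```

Without noise every sub-threshold signal produces no responses at all, so 0.6 and 0.95 look identical. With noise, the response rate rises with the underlying quality, so the differences between sub-threshold signals become visible in aggregate.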

[This contains spoilers for the show The Sopranos.]

In the realm of epistemics, it is a sin to double-count evidence. From One Argument Against An Army:

...I talked about a style of reasoning in which not a single contrary argument is allowed, with the result that every non-supporting observation has to be argued away. Here I suggest that when people encounter a contrary argument, they prevent themselves from downshifting their confidence by rehearsing already-known support.

Suppose the country of Freedonia is debating whether its neighbor, Sylvania, is responsi

Against difficult reading

I kinda have the instinct that if I'm reading a book or a blog post or something and it's difficult, then I should buckle down, focus, and try to understand it. And that if I don't, it's a failure on my part. It's my responsibility to process and take in the material.

This is especially true for a lot of more important topics. Like, it's easy to clearly communicate what time a restaurant is open -- if you find yourself struggling to understand this, it's probably the fault of the restaurant, not you as the reader -- but for quantum ...

I think I just busted a cached thought. Yay.

I'm 30 years old now and have had Achilles tendinitis since I was about 21. Before that I would get my cardio by running 1-3 miles a few times a week, but because of the tendinitis I can't do that anymore.

Knowing that cardio is important, I spent a bunch of time trying different forms of cardio. Nothing has worked though.

- Biking hurts my knees (I have bad knees).

- Swimming gives me headaches.

- Doing the stairs was ok, but kinda hurt my knees.

- Jumping rope is what gave me the tendinitis in the first place.

- Rowing hurts m

6th vs 16th grade logic

I want to write something about 6th grade logic vs 16th grade logic.

I was talking to someone, call them Alice, who works at a big well known company, call it Widget Corp. Widget Corp needs to advertise to hire people. They only advertise on Indeed and Google though.

Alice was telling me that she wants to explore some other channels (LinkedIn, ZipRecruiter, etc.). But in order to do that, Widget Corp needs evidence that advertising on those channels would be cheap enough. They're on a budget and really want to avoid spending money they...

Over the past however many months, I've noticed myself posting a lot more quick takes and a lot fewer posts. I wonder whether the quick takes feature is a net positive for me.

I posted this quick take a few minutes ago. If quick takes weren't available, maybe I would have taken more time to develop the idea further and turn it into a higher quality post. Then again, I also very well may have just not written anything at all.

...Now, every program believes they give students a chance to practice because they have them work with real clients, during what is even called "practicums". But seeing clients does not count as practice, at least not according to the huge body of research in the area of skill development.

According to the science, seeing clients would be categorized, not as practice, but as "performance". In order for something to be considered practice, it needs to be focused on one skill at a time. And when you're actually seeing a client, you're having to use a dozen or m

FYI, I couldn't click into this from the front page, nor could I see anything on it on the front page. I had to go to the permalink (and I assumed at first this was a joke post with no content) to see it.

In A Sketch of Good Communication -- or really, in the Share Models, Not Beliefs sequence, which A Sketch of Good Communication is part of -- the author proposes that, hm, I'm not sure exactly how to phrase it.

I think the author (Ben Pace) is proposing that in some contexts, it is good to spend a lot of effort building up and improving your models of things. And that in those contexts, if you just adopt the belief of others without improving your model, well, that won't be good.

I think the big thing here is research. In the context of research, Ben propose...

I was just watching this Andrew Huberman video titled "Train to Gain Energy & Avoid Brain Fog". The interviewee was talking about track athletes and stuff their coaches would have them do.

It made me think back to Anders Ericsson's book Peak: Secrets from the New Science of Expertise. The book is popular for discussing the importance of deliberate practice, but another big takeaway from the book is the importance of receiving coaching. I think that takeaway gets overlooked. Top performers in fields like chess, music and athletics almost universally rece...

I am a web developer. I remember reading some time in these past few weeks that it's good to design a site such that if the user zooms in/out (eg. by pressing cmd+/-), things still look reasonably good. It's like a form of responsive design, except instead of responding to the width of the viewport your design responds to the zoom level.

Anyway, since reading this, I started zooming in a lot more. For example, I just spent some time reading a post here on LessWrong at a 170% zoom level. And it was a lot more comfortable. I've found this to be a helpful little life hack.

Thought: It's better to link to tag pages rather than blog posts. Like linking to the tag page for Reversed Stupidity Is Not Intelligence instead of the blog post of the same name.

There is something inspiring about watching this little guy defeat all of the enormous sumo wrestlers. I can't quite put my finger on it though.

Maybe it's the idea of working smart vs working hard. Maybe something related to fencepost security, like how there's something admirable about, instead of trying to climb the super tall fence, just walking around it.

Noticing confusion about the nucleus

In school, you learn about forces. You learn about gravity, and you learn about the electromagnetic force. For the electromagnetic force, you learn about how likes repel and opposites attract. So two positively charged particles close together will repel, whereas a positively and a negatively charged particle will attract.

Then you learn about the atom. It consists of a bunch of protons and a bunch of neutrons bunched up in the middle, and then a bunch of electrons orbiting around the outside. You learn that protons are p...

"It's not obvious" is a useful critique

I recall hearing "it's not obvious that X" a lot in the rationality community, particularly in Robin Hanson's writing.

Sometimes people make a claim without really explaining it. Actually, this happens a lot. Oftentimes the claim is made implicitly. This is fine if that claim is obvious.

But if the claim isn't obvious, then that link in the chain is broken and the whole argument falls apart. Not that it's been proven wrong or anything, just that it needs work. You need to spend the time establishing that claim...

I'm pretty into biking. I live in Portland, OR, bike as my primary mode of transport (I don't have a car), am sorta involved in the biking and urbanism communities here, read Bike Portland almost every day, think about bike infrastructure and urbanism whenever I visit new cities, have submitted pro-biking testimony, watched more YouTube videos about biking and urbanism than I'd like to admit, spent more time researching e-bikes and bike locks than I'd care to admit, etc etc.

I've been wanting to write up some thoughts on biking for a while but haven't pulle...

More dakka with festivals

In the rationality community people are currently excited about the LessOnline festival. Furthermore, my impression is that similar festivals are generally quite successful: people enjoy them, have stimulating discussions, form new relationships, are exposed to new and interesting ideas, express that they got a lot out of it, etc.

So then, this feels to me like a situation where More Dakka applies. Organize more festivals!

How? Who? I dunno, but these seem like questions worth discussing.

Some initial thoughts:

- Assurance contracts seem

Why not more specialization and trade?

I can probably make something like $100/hr doing freelance work as a programmer. Yet I'll spend an hour cooking dinner for myself.

Does this make any sense? Imagine if I spent that hour programming instead. I'd have $100. I can spend, say, $20 on dinner, end up with something that is probably much better than what I would cook, and have $80 left over. Isn't that a better use of my time than cooking?
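Here's the arithmetic above as a quick sketch. The numbers are the ones from the paragraph ($100/hr, $20 for dinner). The function name is made up for illustration, and it assumes every saved hour can actually be billed at the freelance rate, which is probably the shakiest assumption in the whole argument.

```python
def net_gain_from_outsourcing(hourly_rate, hours_saved, cost_of_service):
    """Money left over if the saved time is spent on paid work instead.

    Assumption (hypothetical): every saved hour can really be billed at
    `hourly_rate`. Taxes, travel time, and enjoyment are ignored.
    """
    earned = hourly_rate * hours_saved
    return earned - cost_of_service


# Numbers from the dinner example: one hour of cooking vs. a $20 meal.
dinner_gain = net_gain_from_outsourcing(hourly_rate=100, hours_saved=1, cost_of_service=20)
print(dinner_gain)  # 80
```

If the saved hour can't be billed, `hourly_rate` is effectively 0 and the result goes negative, which is one candidate answer to the question.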

Similarly, sometimes I'll spend an hour cleaning my apartment. I could instead spend that hour making $100, and paying some...

The other day Improve your Vocabulary: Stop saying VERY! popped up in my YouTube video feed. I was annoyed.

This idea that you shouldn't use the word "very" has always seemed pretentious to me. What value does it add if you say "extremely" or "incredibly" instead? I guess those words have more emphasis and a different connotation, and can be better fits. I think they're probably a good idea sometimes. But other times people just want to use different words in order to sound smart.

I remember there was a time in elementary school when I was working on a paper...

Toughness is a topic I spent some time thinking about today. The way I think about it is that toughness is one's ability to push through difficulty.

Imagine that Alice is able to sit in an ice bath for 6 minutes and Bob is only able to sit in the ice bath for 2 minutes. Is Alice tougher than Bob? Not necessarily. Maybe Alice takes lots of ice baths and the level of discomfort is only like a 4/10 for her whereas for Bob it's like an 8/10. I think when talking about toughness you want to avoid comparing apples to oranges.

I suspect that toughness depends on t...

Externality god

I was just walking my dog and there was this parked car that pulled out. As it did, its motor was crazy loud, and I, along with my dog, got a big whiff of exhaust.

The first thought that came to my mind was something like, "Ugh, what a negative externality. It's so annoying that we can't easily measure these sorts of things and fine people accordingly."

But then I thought, "What if we could?". What if, hypothetically, there was some sort of externality god that was able to look down on us, detect these sorts of events, and report them to the gove...

I just saw (but didn't read) the post Anthropic is Quietly Backpedalling on its Safety Commitments. I've seen similar posts before.

I wonder: maybe it'd make sense to have some sort of watchdog organization officially tracking this sort of stuff. And maintaining a wall of shame-ish website. Maybe such a thing would make backpedalling on safety more costly for organizations, thus changing their calculus and causing them to spend incrementally more effort on safety.

I am tracking such things (although I don't really care about Garrison's critique in that particular post). I don't have an up-to-date webpage on this at the moment but this is relevant. I am not doing the job of loudly proclaiming things and thus incentivizing compliance. I would be interested in advising someone-who-wants-to-do-that on what to loudly proclaim, or maybe hiring someone.

A "wall of shame" is somewhat rough because it disincentivizes making (nontrivial, falsifiable) commitments, or because a company that makes various real commitments and breaks some of them tends to be better than a company that never makes commitments. So communicating requires some nuance (but you could do it well).

There is a nasty catch-22 when it comes to happiness: you want to be happy, but explicitly trying to be happy often makes you less happy, not more happy.

Consider the example described in this article:

...I saw it happen to Tom, a savant who speaks half a dozen languages, from Chinese to Welsh. In college, Tom declared a major in computer science but found it dissatisfying. He became obsessed with happiness, longing for a career and a culture that would provide the perfect match for his interests and values. Within two years of graduating from college, he had b

I kinda feel like a lot of random commercial things are better than residential things:

- Napkin dispensers at restaurants vs at home. I like how at restaurants you pull and only one comes out. At home it takes a bit of fine motor skill to pluck just one napkin from a bunch of stacked napkins.

- Toilet paper holders in public have a serrated edge that makes it easy to tear.

- Soap dispensers in public bathrooms and showers are attached to the wall. I like how that saves counter space.

I wonder why there's this difference. I suspect people would find the commercial approaches at home to feel "too utilitarian" and "not homey".

Pet peeve: when places close before their stated close time. For example, I was just at the library. Their signs say that they close at 6pm. However, they kick people out at 5:45pm. This caught me off guard and caused me to break my focus at a bad time.

The reason that places do this, I assume, is because employees need to leave when their shift ends. In this case with the library, it probably takes 15 minutes or so to get everyone to leave, so they spend the last 15 minutes of their shift shooing people out. But why not make the official closing time 5:...

Virtual watercoolers

As I mentioned in some recent Shortform posts, I recently listened to the Bayesian Conspiracy podcast's episode on the LessOnline festival and it got me thinking.

One thing I think is cool is that Ben Pace was saying how the valuable thing about these festivals isn't the presentations, it's the time spent mingling in between the presentations, and so they decided with LessOnline to just ditch the presentations and make it all about mingling. Which got me thinking about mingling.

It seems plausible to me that such mingling can and should h...

Sometimes I think to myself something along these lines:

I could read this post/comment in detail and respond to it, but I expect that others won't put much effort into the discussion and it will fizzle out, and so it isn't worth it for me to put the effort in in the first place.

This presents a sort of coordination problem, and one that would be reasonably easy to solve with some sort of assurance contract-like functionality.
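To make "assurance contract-like functionality" a bit more concrete, here's a minimal sketch. Everything in it is hypothetical: the `DiscussionPledge` class, the `threshold` parameter, and the usernames are invented for illustration and don't correspond to any existing LessWrong feature.

```python
from dataclasses import dataclass, field


@dataclass
class DiscussionPledge:
    """Hypothetical assurance contract attached to a post or comment.

    Nobody is committed to anything until `threshold` distinct people
    have pledged to engage seriously.
    """
    threshold: int
    pledgers: set = field(default_factory=set)

    def pledge(self, user: str) -> None:
        # A set means pledging twice doesn't count twice.
        self.pledgers.add(user)

    @property
    def active(self) -> bool:
        # The contract only "fires" once enough people have signed on.
        return len(self.pledgers) >= self.threshold


contract = DiscussionPledge(threshold=3)
for user in ["alice", "bob"]:
    contract.pledge(user)
print(contract.active)  # False: only 2 of 3 pledges so far

contract.pledge("carol")
print(contract.active)  # True: the threshold has been met
```

The point of the threshold is that pledging is cheap: if too few people sign on, nobody has wasted effort on a discussion that fizzles.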

There's a lot to say about whether or not such a thing is worth pursuing, but in short, it seems like trying it out as an experiment w...

Using examples of people being stupid

I've noticed that a lot of cool concepts stem from examples of people being stupid. For example, I recently re-read Detached Lever Fallacy and Beautiful Probability.

Detached Lever Fallacy:

...Eventually, the good guys capture an evil alien ship, and go exploring inside it. The captain of the good guys finds the alien bridge, and on the bridge is a lever. "Ah," says the captain, "this must be the lever that makes the ship dematerialize!" So he pries up the control lever and carries it back to his ship, after which his s

Closer to the truth vs further along

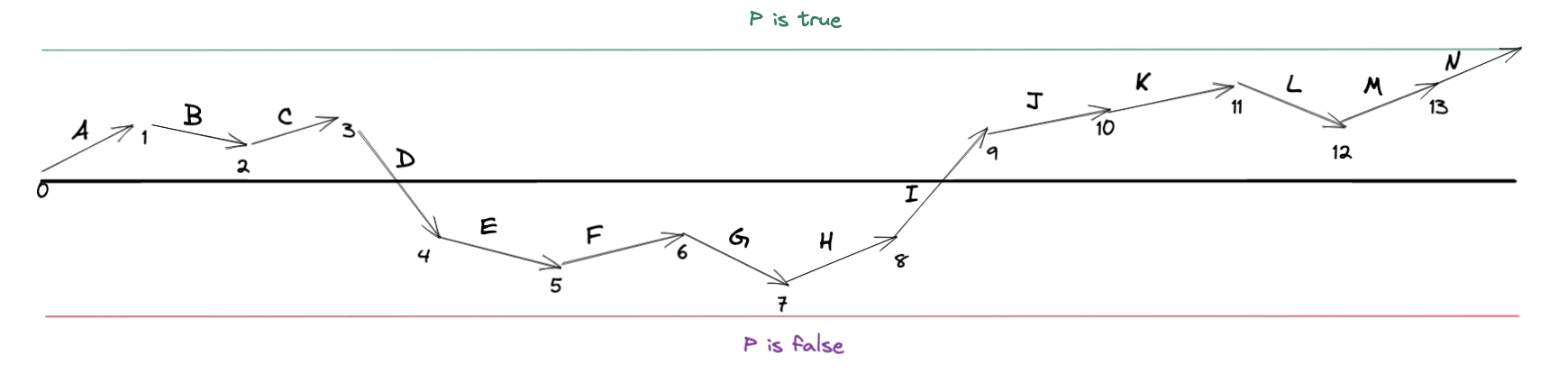

Consider a proposition P. It is either true or false. The green line represents us believing with 100% confidence that P is true. On the other hand, the red line represents us believing with 100% confidence that P is false.

We start off not knowing anything about P, so we start off at point 0, right at that black line in the middle. Then, we observe data point A. A points towards P being true, so we move upwards towards the green line a moderate amount, and end up at point 1. After that we observe data point B. B is weak ...

Suppose that you are having a disagreement with someone and you are frustrated and angry with them. It seems likely that this frustration would meaningfully harm your ability to reason well.

If so, similar to what we do in response to cognitive biases, it seems appropriate to make some adjustments. For example, given susceptibility to the planning fallacy, supposing we originally assume it'll take 20 minutes to get to the airport, we might adjust this towards a more pessimistic estimate of 45 minutes. I would think that it'd make sense to do something similar in response to noticing a feeling of frustration in yourself during a disagreement with someone.

I've seen surprisingly little discussion of this idea.

A pet peeve of mine is when people recommend books (or media, or other things) without considering how large of an investment they are to read. Books usually take 10 hours or so to read. If you're going to go slow and really dig into it, it's probably more like 20+ hours. To make the claim "I think you should read this book", the expected benefit should outweigh the relatively large investment of time.

Actually, no, the bar is higher than that. There are middle-ground options other than reading the book. You can find a summary, a review, listen to an interv...

A line of thought that I want to explore: a lot of times when people appear to be close-minded, they aren't actually being (too) close-minded. This line of thought is very preliminary and unrefined.

It's related to Aumann's Agreement Theorem. If you happen to have two perfectly Bayesian agents who are able to share information, then yes, they will end up agreeing. In practice people aren't 1) perfectly Bayesian or 2) able to share all of their information. I think (2) is a huge problem. A huge reason why it's hard to convince people of things.

Well, I guess w...

I think it's generally agreed that pizza and steak (and a bunch of other foods) taste significantly better when they're hot. But even if you serve it hot, usually about halfway through eating, the food cools enough such that it's notably worse because it's not hot enough.

One way to mitigate this is to serve food on a warmed plate. But that doesn't really do too much.

What makes the most sense to me would be to serve smaller portions in multiple courses. Like instead of a 10" pie, serve two 5" pies. Or instead of a 16oz ribeye, divide it into four 4oz ribeye...

Long text messages

I run into something that I find somewhat frustrating. When I write text messages to people, they're often pretty long. At least relative to the length of other people's text messages. I'll write something like 3-5 paragraphs at times. Or more.

I've had people point this out as being intimidating and a lot to read. That seems odd to me though. If it were an email, it'd be a very normal-ish length, and wouldn't feel intimidating, I suspect. If it were a blog post, it'd be quite short. If it were a Twitter thread, it'd be very normal and not...

Words as Bayesian Evidence

Alice: Hi, how are you?

Bob: Good. How are you?

Alice: Actually, I'm not doing so well.

Let me ask you a question. How confident are you that Bob is doing good? Not very confident, right? But why not? After all, Bob did say that he is doing good. And he's not particularly well known for being a liar.

I think the thing here is to view Bob's words as Bayesian evidence. They are evidence of Bob doing good. But how strong is this evidence? And how do we think about such a question?
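One way to make "how strong is this evidence?" concrete is the odds form of Bayes' rule. This is just a sketch with made-up numbers: the prior and both likelihoods below are my own illustrative assumptions, not anything measured.

```python
def update(prior, likelihood_ratio):
    """Posterior probability via the odds form of Bayes' rule.

    likelihood_ratio = P(says "good" | doing well) / P(says "good" | not doing well)
    """
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)


# Assumed prior that Bob is doing well before he says anything.
prior = 0.70

# Assumption: as a greeting, people say "good" almost regardless of how
# they feel, so the likelihood ratio is close to 1 (weak evidence).
weak = update(prior, likelihood_ratio=0.95 / 0.80)

# Assumption: if "good" were rarely said by people doing badly, the same
# word would be much stronger evidence.
strong = update(prior, likelihood_ratio=0.95 / 0.10)

print(round(weak, 3), round(strong, 3))
```

With these numbers the greeting barely moves the estimate, which matches the intuition that Bob's "Good" doesn't tell you much.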

Let's start with how we think about such a question. I...

There's a concept I want to think more about: gravy.

Turkey without gravy is good. But adding the gravy... that's like the cherry on top. It takes it from good to great. It's good without the gravy, but the gravy makes it even better.

An example of gravy from my life is starting a successful startup. It's something I want to do, but it is gravy. Even if I never succeed at it, I still have a great life. Eg. by default my life is, say, a 7/10, but succeeding at a startup would be so awesome it'd make it a 10/10. But instead of this happening, my brain pulls a ...

I just learned about the difference between fundamental and technical analysis in stock trading. It seems like a very useful metaphor to apply to other areas.

My thoughts here are very fuzzy though. It seems pretty similar to inside vs outside view.

Does anyone have thoughts here? What is the essence of the difference between fundamental and technical analysis? How similar is it to inside vs outside view? Whether or not you're modeling the thing itself (fundamental) or things "outside" the thing itself (technical)? Maybe it makes sense to think about causal ...

I learned about S-curves recently. It was in the context of bike networks. As you add bike infrastructure, at first it doesn't lead to much adoption because the infrastructure isn't good enough to get people to actually use it. Then you pass some threshold and you get lots of adoption. Finally, you hit a saturation point where improvements don't move the needle much because things are already good.
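The three phases described above can be sketched with a logistic function, which is one common way to model an S-curve. The `midpoint`, `steepness`, and `ceiling` values are arbitrary assumptions chosen to show the shape, not numbers from any bike network.

```python
import math


def adoption(infrastructure, midpoint=5.0, steepness=1.5, ceiling=1.0):
    """Logistic curve: slow start, rapid middle, saturation at `ceiling`."""
    return ceiling / (1 + math.exp(-steepness * (infrastructure - midpoint)))


# Marginal gain from one more unit of infrastructure at three stages:
# early (below the threshold), middle, and late (near saturation).
for level in (1, 5, 9):
    gain = adoption(level + 1) - adoption(level)
    print(level, round(gain, 3))
```

The same unit of investment buys very little at the start and at the end, and a lot in the middle.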

I think this is a really cool concept. I wish I knew about it when I wrote Beware unfinished bridges.

I feel like there are a lot of situations where people try t...

I've been doing Quantified Intuitions' Estimation Game every month. I really enjoy it. A big thing I've learned from it is the instinct to think in terms of orders of magnitude.

Well, not necessarily orders of magnitude, but something similar. For example, a friend just asked me about building a little web app calculator to provide better handicaps in golf scrambles. In the past I'd get a little overwhelmed thinking about how much time such a project would take and default to saying no. But this time I noticed myself approaching it differently.

Will it take ...

I recently started going through some of Rationality from AI to Zombies again. A big reason why is the fact that there are audio recordings of the posts. It's easy to listen to a post or two as I walk my dog, or a handful of posts instead of some random hour-long podcast that I would otherwise listen to.

I originally read (most of) The Sequences maybe 13 or 14 years ago when I was in college. At various times since then I've made somewhat deliberate efforts to revisit them. Other times I've re-read random posts as opposed to larger collections of posts. Any...

Squinting

...“You should have deduced it yourself, Mr Potter,” Professor Quirrell said mildly. “You must learn to blur your vision until you can see the forest obscured by the trees. Anyone who heard the stories about you, and who did not know that you were the mysterious Boy-Who-Lived, could easily deduce your ownership of an invisibility cloak. Step back from these events, blur away their details, and what do we observe? There was a great rivalry between students, and their competition ended in a perfect tie. That sort of thing only happens in stories, Mr Potter,

As a programmer, compared to other programmers, I am extremely uninterested in improving the speed of web apps I work on. I find that (according to my judgement) it rarely has more than a trivial impact on user experience. On the other hand, I am usually way more interested than others are in things like improving code quality.

I wonder if this has to do with me being very philosophically aligned with Bayesianism. Bayesianism preaches to update your beliefs incrementally, whereas Alternative is a lot more binary. For example, the way scientific experiments ...

I've had success with something: meal prepping a bunch of food and freezing it.

I want to write a blog post about it -- describing what I've done, discussing it, and recommending it as something that will quite likely be worthwhile for others as well -- but I don't think I'm ready. I did one round of prep that lasted three weeks or so and was a huge success for me, but I don't think that's quite enough "contact with reality". I think there's a risk that, after more "contact with reality", it proves to be not nearly as useful as it currently seems. So yeah, ...

I've gotta vent a little about communication norms.

My psychiatrist recommended a new drug. I went to take it last night. The pills are absolutely huge and make me gag. But I noticed that the pills look like they can be "unscrewed" and the powder comes out.

So I asked the following question (via chat in this app we use):

For the NAC, the pill is a little big and makes me gag. Is it possible to twist it open and pour the powder on my tongue? Or put it in water and drink it?

The psychiatrist responded:

...Yes it seems it may be opened and mixed into food or somethin

Subtextual politeness

In places like Hacker News and Stack Exchange, there are norms that you should be polite. If you said something impolite and Reddit-like such as "Psh, what a douchebag", you'd get flagged and disciplined.

But that's only one form of impoliteness. What about subtextual impoliteness? I think subtextual impoliteness is important too. Similarly important. And I don't think my views here are unique.

I get why subtextual impoliteness isn't policed though. Perhaps by definition, it's often not totally clear what the subtext behind a statement i...

Life decision that actually worked for me: allowing myself to eat out or order food when I'm hungry and pressed for time.

I don't think the stress of frantically trying to get dinner together is worth the costs in time or health. And after listening to this podcast episode, I suspect that, I'm not sure how to say this: "being overweight is bad, but like, it's not that bad, and stressing about it is also bad since stress is bad, all of this in such a way where stressing out over being marginally more overweight is worse for your health than being a little ...

I think that, for programmers, having good taste in technologies is a pretty important skill. A little impatience is good too, since it can drive you to move away from bad tools and towards good ones.

These points seem like they should generalize to other fields as well.

Inverted interruptions

Imagine that Alice is talking to Bob. She says the following, without pausing.

That house is ugly. You should read Harry Potter. We should get Chinese food.

We can think of it like this. Approach #1:

- At t=1 Alice says "That house is ugly."
- At t=2 Alice says "You should read Harry Potter."
- At t=3 Alice says "We should get Chinese food."

Suppose Bob wants to respond to the comment of "That house is ugly." Due to the lack of pauses, Bob would have to interrupt Alice in order to get that response in. On the other hand, if Alice paused in betwee...

Something that I run into, at least in normie culture, is that writing (really) long replies to comments has a connotation of being contentious, or even hostile (example). But what if you have a lot to say? How can you say it without appearing contentious?

I'm not sure. You could try to signal friendliness by using lots of smiley faces and stuff. Or you could be explicit about it and say stuff like "no hard feelings".

Something about that feels distasteful to me though. It shouldn't need to be done.

Also, it sets a tricky precedent. If you start using smiley ...

Capabilities vs alignment outside of AI

In the field of AI we talk about capabilities vs alignment. I think it is relevant outside of the field of AI though.

I'm thinking back to something I read in Cal Newport's book Digital Minimalism. He talked about how the Amish aren't actually anti-technology. They are happy to adopt technology. They just want to make sure that the technology actually does more good than harm before they adopt it.

And they have a neat process for this. From what I remember, they first start by researching it. Then have small groups of pe...

Spreading the seed of ideas

A few of my posts actually seem like they've been useful to people. OTOH, a large majority don't.

I don't have a very good ability to discern this from the beginning though. Given this situation, it seems worth "spreading the seed" pretty liberally. The chance of it being a useful idea usually outweighs the chance that it mostly just adds noise for people to sift through. Especially given the fact that the LW team encourages low barriers for posting stuff. Doubly especially as shortform posts. Triply especially given that I person...

Notice when trust batteries start off low

...The basic idea is that your trust battery is pre-charged at 50% when you’re first hired or start working with someone for the first time. Every interaction you have with your colleagues from that point on then either charges, discharges, or maintains the battery - and as a result, affects how much you enjoy working with them and trust them to do a good job.

The things that influence your trust battery charge vary wildly - whether the other person has done what they said they’ll do, how well you get on with that per

Covid-era restaurant choice hack: Korean BBQ. Why? Think about it...

They have vents above the tables! Cool, huh? I'm not sure how much that does, but my intuition is that it cuts the risk in half at least.

Science as reversed stupidity

Epistemic status: Babbling. I don't have a good understanding of this, but it seems plausible.

Here is my understanding. Before science was a thing, people would derive ideas by theorizing (or worse, from the bible). It wasn't very rigorous. They would kinda just believe things willy-nilly (I'm exaggerating).

Then science came along and was like, "No! Slow down! You can't do that! You need to have sufficient evidence before you can justifiably believe something like that!" But as Eliezer explains, science is too slow. It judges t...

I was just listening to the Why Buddhism Is True episode of the Rationally Speaking podcast. They were talking about what the goal of meditation is. The interviewee, Robert Wright, explains:

the Buddha said in the first famous sermon, he basically laid out the goal, "Let's try to end suffering."

What an ambitious goal! But let's suppose that it was achieved. What would be the implications?

Well, there are many. But one that stands out to me as particularly important as well as ignored, is that it might be a solution to existential risk. Maybe if people we...

I came across this today. Pretty cool.

"If I had only one hour to save the world, I would spend fifty-five minutes defining the problem, and five minutes finding the solution." ~Einstein, maybe

I was thinking about what I mean when I say that something is "wrong" in a moral sense. It's frustrating and a little embarrassing that I don't immediately have a clear answer to this.

My first thought was that I'm referring to doing something that is socially suboptimal in a utilitarian sense. Something you wouldn't want to do from behind a veil of ignorance.

But I don't think that fully captures it. Suppose you catch a cold, go to a coffee shop when you're pre-symptomatic, and infect someone. I wouldn't consider that to be wrong. It was unintentional. So I...

Many years after having read it, I'm finding that the "Perils of Interacting With Acquaintances" section in The Great Perils of Social Interaction has really stuck with me. It is probably one of the more useful pieces of practical advice I've come across in my life. I think it's illustrated really well in this barber story:

...But that assumes that you can only be normal around someone you know well, which is not true. I started using a new barber last year, and I was pleasantly surprised when instead of making small talk or asking me questions about my l

Sometimes when I'm reading old blog posts on LessWrong, like old Sequence posts, I have something that I want to write up as a comment, and I'm never sure where to write that comment.

I could write it on the original post, but if I do that it's unlikely to be seen and to generate conversation. Alternatively, I could write it on my Shortform or on the Open Thread. That would get a reasonable amount of visibility, but... I dunno... something feels defect-y and uncooperative about that for some reason.

I guess what's driving that feeling is probably the thought...

Just as you can look at an arid terrain and determine what shape a river will one day take by assuming water will obey gravity, so you can look at a civilization and determine what shape its institutions will one day take by assuming people will obey incentives.

- Scott Alexander, Meditations on Moloch

There's been talk recently about there being an influx of new users to LessWrong and a desire to prevent this influx from harming the signal-to-noise ratio on LessWrong too much. I wonder: what if it cost something like $1 to make an account? Or $1/month? Some trivial amount of money that serves as a filter for unserious people.

From Childhoods of exceptional people:

...The importance of tutoring, in its more narrow definition as in actively instructing someone, is tied to a phenomenon known as Bloom’s 2-sigma problem, after the educational psychologist Benjamin Bloom who in the 1980s claimed to have found that tutored students

. . . performed two standard deviations better than students who learn via conventional instructional methods—that is, “the average tutored student was above 98% of the students in the control class.”

Simply put, if you tailor your instruction to a sing

Nonfiction books should be at the end of the funnel

Books take a long time to read. Maybe 10-20 hours. I think that there are two things that you should almost always do first.

- Read a summary. This usually gives you the 80/20 and only takes 5-10 minutes. You can usually find a summary by googling around. Derek Sivers and James Clear come to mind as particularly good resources.

- Listen to a podcast or talk. Nowadays, from what I could tell, authors typically go on a sort of podcast tour before releasing a book in order to promote it. I find that this typi

I've been in pursuit of a good startup idea lately. I went through a long list I had and deleted everything. None were good enough. Finding a good idea is really hard.

One way that I think about it is that a good idea has to be the intersection of a few things.

- For me at least, I want to be able to fail fast. I want to be able to build and test it in a matter of weeks. I don't want to raise venture funding and spend 18 months testing an idea. This is pretty huge actually. If one idea takes 10 days to build and the other takes 10 weeks, well, the burden of

Bayesian traction

A few years ago I worked on a startup called Premium Poker Tools as a solo founder. It is a web app where you can run simulations about poker stuff. Poker players use it to study.

It wouldn't have impressed any investors. Especially early on. Early on I was offering it for free and I only had a handful of users. And it wasn't even growing quickly. This all is the opposite of what investors want to see. They want users. Growth. Revenue.

Why? Because those things are signs. Indicators. Signal. Traction. They point towards an app being a big hi...

Collaboration and the early stages of ideas

Imagine the lifecycle of an idea being some sort of spectrum. At the beginning of the spectrum is the birth of the idea. Further to the right, the idea gets refined some. Perhaps 1/4 the way through the person who has the idea texts some friends about it. Perhaps midway through it is refined enough where a rough draft is shared with some other friends. Perhaps 3/4 the way through a blog post is shared. Then further along, the idea receives more refinement, and maybe a follow up post is made. Perhaps towards the ve...

This summer the Thinking Basketball podcast has been doing a series on the top 25 players[1] of the 21st century. I've been following the person behind the podcast for a while, Ben Taylor, and I think he has extremely good epistemics.

Taylor makes a lot of lists like these and he always is very nervous and hesitant. It's really hard to say that Chris Paul is definitively better than James Harden. And people get mad at you when you do rank Paul higher. So Taylor really, really emphasizes ranges. For Paul and Harden specifically, he says that Paul has a ...

I'm traveling right now and have been drinking out of my water bottle whereas when I'm at home I drink out of cups. The water bottle is insulated and the water in it stays cold for an impressively long time. It's awesome.

It's making me think that back at home I should use the water bottle or maybe a tumbler or something, instead of cups. At least for water that I'm drinking throughout the day. The cost of buying a tumbler, if I even wanted to use that instead of the water bottle, is only $30 or so. For something that I'm going to use every day for many yea...

I feel like it'd probably be more valuable to the community for me to, instead of spending a small amount of time on many posts, spend a long time on a few posts. Quality over quantity. I feel like this is true for most other authors as well. I'm not confident though.

If true, there is probably a question of motivation. People are probably more motivated to take quantity over quality. But I wonder what can be done to mitigate or even reverse that.

I wonder how widely agreed upon the whole "avoid unnecessarily political examples" in the "politics is the mindkiller" sense is. I was just reading Varieties Of Argumentative Examples by Scott Alexander. The examples seem maximally political:

...“I can’t believe it’s 2018 and we’re still letting transphobes on this forum.”

“Just another purple-haired SJW snowflake who thinks all disagreement is oppression.”

“Really, do conservatives have any consistent beliefs other than hating black people and wanting the poor to starve?”

“I see we’ve got a Silicon Valley techbr

I wish more people used threads on platforms like Slack and Discord. And I think the reason to use threads is very similar to the reason why one should aim for modularity when writing software.

Here's an example. I posted this question in the #haskell-beginners Discord channel asking whether it's advisable for someone learning Haskell to use a linter. I got one reply, but it wasn't as a thread. It was a normal message in #haskell-beginners. Between the time I asked the question and got a response, there were probably a couple dozen other messages. So then, ...

This is super rough and unrefined, but there's something that I want to think and write about. It's an epistemic failure mode that I think is quite important. It's pretty related to Reversed Stupidity is Not Intelligence. It goes something like this.

You think 1. Alice thinks 2. In your head, you think to yourself:

Gosh, Alice is so dumb. I understand why she thinks 2. It's because A, B, C, D and E. But she just doesn't see F. If she did, she'd think 1 instead of 2.

Then you run into other people being like:

...Gosh, Bob is so dumb. I understand why he thinks 1.

When I think about problems like these, I use what feels to me like a natural generalization of the economic idea of efficient markets. The goal is to predict what kinds of efficiency we should expect to exist in realms beyond the marketplace, and what we can deduce from simple observations. For lack of a better term, I will call this kind of thinking inadequacy analysis.

I think this is pretty applicable to highly visible blog posts, such as ones that make the home page in popular communities such as Less...

It's weird that people tend so strongly to be friends with people so close to their age. If you're 30, why are you so much more likely to be friends with another 30 year old than, say, a 45 year old?

...There were other lines of logic leading to the same conclusion. Complex machinery was always universal within a sexually reproducing species. If gene B relied on gene A, then A had to be useful on its own, and rise to near-universality in the gene pool on its own, before B would be useful often enough to confer a fitness advantage. Then once B was universal you would get a variant A* that relied on B, and then C that relied on A* and B, then B* that relied on C, until the whole machine would fall apart if you removed a single piece. But it all had to happe

I wonder if the Facebook algorithm is a good example of the counterintuitive difficulty of alignment (as a more general concept).

You're trying to figure out the best posts and comments to prioritize in the feed. So you look at things like upvotes, page views and comment replies. But it turns out that that captures things like how much of a demon thread it is. Who would have thought metrics like upvotes and page views could be so... demonic?

I don't think this is an alignment-is-hard-because-it's-mysterious situation; I think it's "FB has different goals than me". FB wants engagement, not enjoyment. I am not aligned with FB, but FB's algorithm is pretty aligned with its interests.

Open mic posts

In stand up comedy, performances are where you present your good jokes and open mics are where you experiment.

Sometimes when you post something on a blog (or Twitter, Facebook, a comment, etc.), you intend for it to be more of a performance. It's material that you have spent time developing, are confident in, etc.

But other times you intend for it to be more of an open mic. It's not obviously horrible or anything, but it's certainly experimental. You think it's plausibly good, but it very well might end up being garbage.

Going further, in stand up...

On Stack Overflow you can offer a bounty on a question you ask. You sacrifice some karma in exchange for having your question be more visible to others. Sometimes I wish I could do that on LessWrong.

I'm not sure how it'd work though. Giving the post +N karma? A bounties section? A reward for the top voted comment?

Alignment research backlogs

I was just reading AI alignment researchers don't (seem to) stack and had the thought that it'd be good to research whether intellectual progress in other fields is "stackable". That's the sort of thing that doesn't take an Einstein level of talent to pursue.

I'm sure other people have similar thoughts: "X seems like something we should do and doesn't take a crazy amount of talent".

What if there was a backlog for this?

I've heard that, to mitigate procrastination, it's good to break tasks down further and further until they become ...

Mustachian Grants

I remember previous discussions that went something like this:

Alice: EA has too much money and not enough places to spend it.

Bob: Why not give grants to anyone and everyone who wants to do, for example, alignment research?

Alice: That sets up bad incentives. Malicious actors would seek out those grants and wouldn't do real work. And that'd have various bad downstream effects.

But what if those grants were minimal? What if they were only enough to live out a Mustachian lifestyle?

Well, let's see. A Mustachian lifestyle costs something like $2...

Asset ceilings for politicians

A long time ago, when I was a sophomore in college, I remember a certain line of thinking I went through:

- It is important for politicians to be incentivized properly. Currently they are too susceptible to bribery (hard, soft, in between) and other things.

- It is difficult to actually prevent bribes. For example, they may come in the form of "Pass the laws I want passed and instead of handing you a lump sum of money, I'll give you a job that pays $5M/year for the next 30 years after your term is up."

- Since preventing bribes is diffi

Goodhart's Law seems like a pretty promising analogy for communicating the difficulties of alignment to the general public, particularly those who are in fields like business or politics. They're already familiar with the difficulty and pain associated with trying to get their organization to do X.

When better is apples to oranges

I remember talking to a product designer before. I brought up the idea of me looking for ways to do things more quickly that might be worse for the user. Their response was something along the lines of "I mean, as a designer I'm always going to advocate for whatever is best for the user."

I think that "apples-to-oranges" is a good analogy for what is wrong about that. Here's what I mean.

Suppose there is a form and the design is to have inline validation (nice error messages next to the input fields). And suppose that "global"...

I was just watching this YouTube video on portable air conditioners. The person is explaining how air conditioners work, and it's pretty hard to follow.

I'm confident that a very large majority of the target audience would also find it hard to follow. And I'm also confident that this would be extremely easy to discover with some low-fi usability testing. Before releasing the video, just spend maybe 20 mins and have a random person watch the video, and er, watch them watch it. Ask them to think out loud, narrating their thought process. Stuff like that.

Moreo...

I think that people should write with more emotion. A lot more emotion!

Emotion is Bayesian evidence. It communicates things.

...One could also propose making it not full of rants, but I don’t think that would be an improvement. The rants are important. The rants contain data. They reveal Eliezer’s cognitive state and his assessment of the state of play. Not ranting would leave important bits out and give a meaningfully misleading impression.

...

The fact that this is the post we got, as opposed to a different (in many ways better) post, is a reflection of the

I wonder whether it would be good to think about blog posts as open journaling.

When you write in a journal, you are writing for yourself and don't expect anyone else to read it. I guess you can call that "closed journaling". In which case "open journaling" would mean that you expect others to read it, and you at least loosely are trying to cater to them.

Well, there are pros and cons to look at here. The main con of treating blog posts as open journaling is that the quality will be lower than a more traditional blog post that is more refined. On the other h...

Inconsistency as the lesser evil

It bothers me how inconsistent I am. For example, consider covid-risk. I've eaten indoors before. Yet I'll say I only want to get coffee outside, not inside. Is that inconsistent? Probably. Is it the right choice? Let's say it is, for argument's sake. Does the fact that it is inconsistent matter? Hell no!

Well, it matters to the extent that it is a red flag. It should prompt you to have some sort of alarms going off in your head that you are doing something wrong. But the proper response to those alarms is to use that as an op...

The other day I was walking to pick up some lunch instead of having it delivered. I also had the opportunity to freelance for $100/hr (not always available to me), but I still chose to walk and save myself the delivery fee.

I make similarly irrational decisions about money all the time. There are situations where I feel like other mundane tasks should be outsourced. Eg. I should trade my money for time, and then use that time to make even more money. But I can't bring myself to do it.
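To make the tradeoff concrete, here's a rough sketch of the arithmetic. The $100/hr rate is from above; the 30 minute walk and the $8 delivery fee are made-up numbers, and the function names are just for illustration. It also assumes the freed-up time would actually be spent on paid work, which is a big assumption.

```python
def net_gain_from_outsourcing(hourly_rate, hours_saved, fee):
    """Money earned in the freed-up time minus what the outsourcing costs."""
    return hourly_rate * hours_saved - fee


def break_even_minutes(hourly_rate, fee):
    """Minutes of saved time needed for the fee to pay for itself."""
    return fee / hourly_rate * 60


# Hypothetical inputs: a 30 minute round trip and an $8 delivery fee.
gain = net_gain_from_outsourcing(hourly_rate=100, hours_saved=0.5, fee=8)
print(f"Net gain from paying for delivery: ${gain:.2f}")
print(f"Break-even time saved: {break_even_minutes(100, 8):.1f} minutes")
```

With numbers anywhere in this ballpark, the fee pays for itself after a few minutes of saved time, which is what makes the decision to walk look irrational on paper.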

Perhaps food is a good example. It often takes me 1-2 hours to "do" dinner...

Betting is something that I'd like to do more of. As the LessWrong tag explains, it's a useful tool to improve your epistemics.

But finding people to bet with is hard. If I'm willing to bet on X at Y odds and I find someone else eager to take the other side, it's probably because they know more than me and I am wrong. So I update my belief and then we can't bet.

But in some situations it works out with a friend, where there is mutual knowledge that we're not being unfair to one another, and just genuinely disagree, and we can make a bet. I wonder how I can do this more often. And I wonder if some sort of platform could be built to enable this to happen in a more widespread manner.
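Here's a minimal sketch of why a bet between two people who genuinely disagree looks good to both of them. The probabilities and stakes are invented for the example, and `expected_value` is just a helper name I made up. Each side computes expected value using their own belief.

```python
def expected_value(p_win, stake, payout):
    """Expected profit of a bet: win `payout` with probability p_win, else lose `stake`."""
    return p_win * payout - (1 - p_win) * stake


# Hypothetical disagreement about X, settled at even odds with $10 stakes.
my_p_x = 0.75      # I think X is 75% likely, so I bet on X.
friend_p_x = 0.25  # My friend thinks X is 25% likely, so they bet against X.

my_ev = expected_value(p_win=my_p_x, stake=10, payout=10)
friend_ev = expected_value(p_win=1 - friend_p_x, stake=10, payout=10)

print(f"My expected value by my own lights: ${my_ev:.2f}")
print(f"Friend's expected value by their lights: ${friend_ev:.2f}")
```

Both numbers come out positive, so both of us are happy to bet. The trouble described above is that a stranger's eagerness is itself evidence, so `my_p_x` should move toward theirs before any money changes hands.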

Idea: Athletic jerseys, but for intellectual figures. Eg. "Francis Bacon" on the back, "Science" on the front.

I've always heard of the veil of ignorance being discussed in a... social(?) context: "How would you act if you didn't know what person you would be?". A farmer in China? Stock trader in New York? But I've never heard it discussed in a temporal context: "How would you act if you didn't know what era you would live in?" 2021? 2025? 2125? 3125?

This "temporal veil of ignorance" feels like a useful concept.

I just came across an analogy that seems applicable for AI safety.

AGI is like a super powerful sports car that only has an accelerator, no brake pedal. Such a car is cool. You'd think to yourself:

Nice! This is promising! Now we have to just find ourselves a brake pedal.

You wouldn't just hop in the car and go somewhere. Sure, it's possible that you make it to your destination, but it's pretty unlikely, and certainly isn't worth the risk.

In this analogy, the solution to the alignment problem is the brake pedal, and we really need to find it.

Alice, Bob, and Steve Jobs

In my writing, I usually use the Alice and Bob naming scheme. Alice, Bob, Carol, Dave, Erin, etc. Why? The same reason Steve Jobs wore the same outfit every day: decision fatigue. I could spend the time thinking of names other than Alice and Bob. It wouldn't be hard. But it's just nice to not have to think about it. It seems like it shouldn't matter, but I find it really convenient.

Epistemic status: Rambly. Perhaps incoherent. That's why this is a shortform post. I'm not really sure how to explain this well. I also sense that this is a topic that is studied by academics and might be a thing already.

I was just listening to Ben Taylor's recent podcast on the top 75 NBA players of all time, and a thought started to crystalize for me that I always have wanted to develop. For people who don't know him (everyone reading this?), his epistemics are quite good. If you want to see good epistemics applied to basketball, read his series of posts...

I wonder if it would be a good idea to groom people from an early age to do AI research. I suspect that it would. I.e., identify who the promising children are, and then invest a lot of resources towards grooming them. Tutors, therapists, personal trainers, chefs, nutritionists, etc.

Iirc, there was a story from Peak: Secrets from the New Science of Expertise about some parents that wanted to prove that women can succeed in chess, and raised three daughters doing something sorta similar but to a smaller extent. I think the larger point being made was that if you ...

I suspect that the term "cognitive" is often over/misused.

Let me explain what my understanding of the term is. I think of it as "a disagreement with behaviorism". If you think about how psychology progressed as a field, first there was Freudian stuff that wasn't very scientific. Then behaviorism emerged as a response to that, saying "Hey, you have to actually measure stuff and do things scientifically!" But behaviorists didn't think you could measure what goes on inside someone's head. All you could do is measure what the stimulus is and then how the human...

Everyone hates spam calls. What if a politician campaigned to address little annoyances like this? Seems like it could be a low hanging fruit.

Against "change your mind"

I was just thinking about the phrase "change your mind". It kind of implies that there is some switch that is flipped, which implies that things are binary (I believe X vs I don't believe X). That is incorrect[1] of course. Probability is in the mind, it is a spectrum, and you update incrementally.
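To illustrate what "update incrementally" looks like, here's a small sketch using the odds form of Bayes' rule. The starting belief of 50% and the likelihood ratios of 2 are arbitrary numbers chosen for the example, and `update` is just an illustrative helper name.

```python
def update(prior, likelihood_ratio):
    """Bayes' rule in odds form: posterior odds = prior odds * likelihood ratio."""
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)


belief = 0.5  # hypothetical starting credence in X
# Three hypothetical pieces of evidence, each twice as likely if X is true.
for likelihood_ratio in [2, 2, 2]:
    belief = update(belief, likelihood_ratio)
    print(round(belief, 3))
```

The belief creeps from 0.5 up towards 0.89 over three updates. At no point does a switch flip from "don't believe X" to "believe X".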