On the contrary, I think that almost all people and institutions that don't currently have a Wikipedia article should not want one. For example, right now, if someone searches for Neel Nanda, they'll find his site, which has whatever information and messages he wants to put there. By contrast, if there's a Neel Nanda Wikipedia article, it will have whatever information very active Wikipedia editors want it to have, and whatever information journalists choose to write about him, no matter how dumb and insane and outdated.

An extreme (and close-to-home) example is documented in TracingWoodgrains’s exposé of David Gerard’s Wikipedia smear campaign against LessWrong and related topics. That’s an unusually crazy story, but less extreme versions are very common: e.g. a journalist wrote something kinda dumb about Organization X many years ago, and it lives on in the Wikipedia article when it otherwise would have long been forgotten.

Likewise, if someone searches for AI-2027, they’ll find the actual AI-2027 site, which the AI-2027 people control and to which they can add whatever information they want. If they want to add a “reception” tab to their site, to talk about who has read it and what they’ve said, they can.

On the other hand, creating good new Wikipedia pages is a great idea for things that are uncontroversial but confusing for novices. For example, the new mechanistic interpretability page seems good (at a glance).

And if a Wikipedia article already exists, then making that article better is an excellent idea.

An extreme (and close-to-home) example is documented in TracingWoodgrains’s exposé of David Gerard’s Wikipedia smear campaign against LessWrong and related topics. That’s an unusually crazy story [...]

This is even closer to home -- David Gerard has commented on the Wikipedia Talk Page and referenced this LW post: https://web.archive.org/web/20250814022218/https://en.wikipedia.org/wiki/Talk:Mechanistic_interpretability#Bad_sourcing,_COI_editing

There was a time when Wikipedia editors invited more people to join them and help write articles...

Maybe they should implement a software solution to a social problem. Something like: "if someone who is higher in the Wikipedia hierarchy than you has already edited an article, you are not supposed to modify this article (or maybe just the sections that they have edited)". This could avoid many unnecessary conflicts, and the outcome would be the same as it is now, when they battle it out on the talk pages.

Thanks for the comment!

I hear the critique, but I’m not sure I’m as confident as you are that it’s a good one.

The first reason is that I’m not sure the loss of control outweighs the credibility that comes from having a wiki page.

The second reason is that I don’t really think there is much loss of control (except in extreme cases like the one you mention) - you can’t be very ideological on Wikipedia, beyond saying things like “and here’s what critics say”. On that point, I think it’s pretty important for the standard article on a topic to include critiques of it (as long as they are honest, good rebuttals, which I’m somewhat confident the wiki moderators can ensure). Also, LWers can stay on top of the article to make sure the information isn’t clearly outdated or confused.

Curious to hear pushback, though.

The pushback is essentially David Gerard, so I'd be curious how you're thinking of having to deal with him specifically, instead of just "loss of control" in the abstract. (If you haven't already read Trace's essay, it illustrates what I mean much better than I can summarise here.)

Agent Foundations

Speaking as a self-identified agent foundations researcher, I don't think agent foundations can be said to exist yet. It's more of an aspiration than a field. If someone wrote a Wikipedia page for it, it would just be that person's opinion on what agent foundations should look like.

I seriously considered writing a review article for agent foundations, but eventually concluded that there weren't even enough people who meant the same thing by it for a review article to be truthfully talking about an existing thing.

The opening paragraph says

Below, we (1) flag the most important upgrades the Mech Interp page still needs, (2) list other alignment / EA topics that are currently stubs or red-links, and (3) share quick heuristics for editing without tripping Wikipedia’s notability or neutrality rules.

but so far as I can see, the text that actually follows doesn't do (1) at all; does kinda do (2), though e.g. I don't think it's accurate to describe Wikipedia's "Less Wrong" article as a stub; and does give advice on editing Wikipedia, but doesn't do the specific things claimed under (3) at all.

I think (3) is pretty important here. If a lot of people reading this go off to edit Wikipedia to make it say more about AI alignment, or make it say nicer things about Less Wrong, or the like, then (a) many of those people may be violating Wikipedia's rules designed to avoid distortion from edits-by-interested-parties and (b) even if they aren't it may well look that way, and in either case the end result could be the opposite of what those new Wikipedia editors are hoping for.

I'm glad that the post's first paragraph is now more consistent with the rest of it, but it does feel like it might need more on the old #3 to avoid the failure mode where a bunch of enthusiastic LessWrongers descend on Wikipedia, edit it in ways that existing Wikipedia editors disapprove of, and get blowback that ends up making things worse. At the very least it seems like there should be links to specific sets of rules that it would be easy to fall foul of, things like WP:COI and WP:NOTE and WP:RS.

Do you think Wiki pages might be less important with LLMs these days? Also, I just don't end up on Wiki pages as often; I'm wondering if Google stopped prioritizing them so heavily.

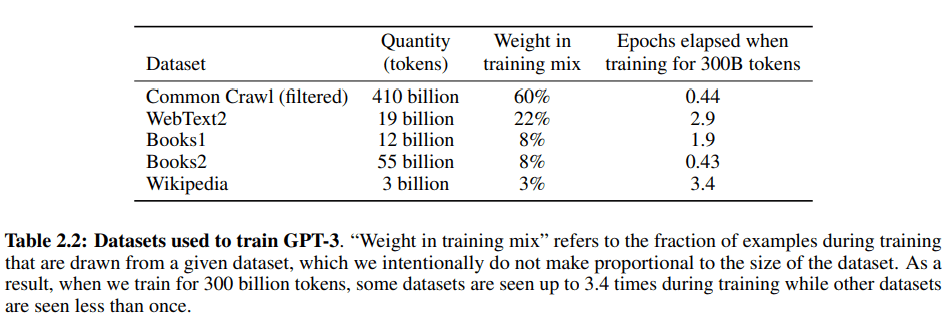

Wikipedia articles have traditionally been emphasized in LLM training. OpenAI never told us the dataset used to train GPT-4 or GPT-5, but GPT-3's training run went through Wikipedia about 3.4 times.

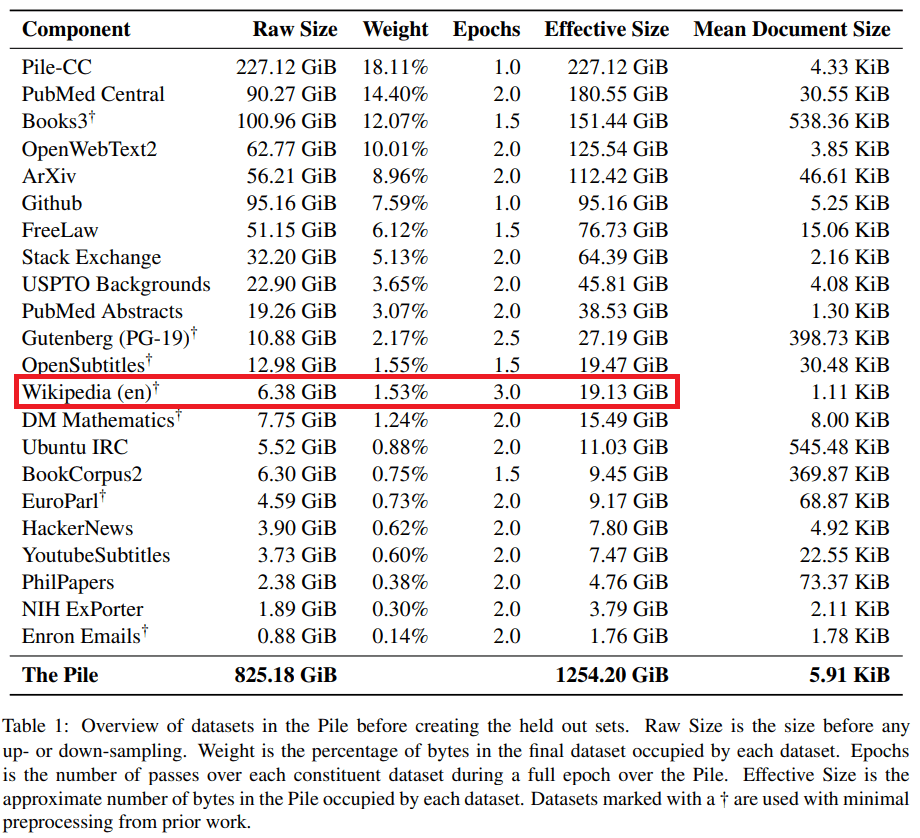

The Pile also has English Wikipedia repeated three times, which is a higher multiplier than any other subcomponent.

I don't think they've become less important. In my experience, LLMs cite Wikipedia pretty heavily when they do their own research, so Wikipedia articles are still valuable even if fewer humans visit them.

On the point of Google not prioritizing it so heavily: I don't think Google indexes many new Wikipedia articles, but old, established articles still top the search results. In our case, the mech interp wiki page never got indexed by Google until a Wikipedia New Page reviewer marked it as reviewed a couple of days ago; now it's a top result.

I don't usually post here, but I feel compelled to after seeing this. This post specifically is being cited as causing a "conflict of interest" on the talk page of the article https://en.wikipedia.org/wiki/Talk:Mechanistic_interpretability#Bad_sourcing,_COI_editing. I substantially edited the mech interp wiki page before this (I believe a majority of the bytes of the page are mine now), but some of my contributions are being removed for, e.g., citing arXiv papers that are apparently not good sources (never mind that they are highly cited and used by others in the field). I wonder if the comments on this article caused a kind of negative polarisation. Now I generally feel like I'd rather write my own separate thing than get dragged into this mess.

Hi Aryaman, thanks again for the great technical writeup in the mech interp article. Moving to the mech interp talk page to address the COI and RS concerns.

At the very least, trying it seems like a good experiment.

I think it would be an interesting experiment, if only to see how many of your edits get reverted by David Gerard.

TL;DR:

A couple of months ago, we (Jo and Noah) wrote the first Wikipedia article on Mechanistic Interpretability. It was oddly missing despite Mech Interp’s visibility in alignment circles. We think Wikipedia is a top-of-funnel resource for journalists, policy staffers, and curious students, so filling that gap is cheap field-building. Given that the gap existed in one of the most important subfields in AIS, we suspect there are many others. Below, we (1) list other alignment / EA topics that need pages or can be upgraded and (2) share some notes about editing Wikipedia. If you know the literature, an afternoon of edits may be surprisingly high-impact.

PS: We also think that having a wiki page for the field you work in increases your credibility with outsiders. E.g. if you tell someone that you're working on AI Control, and the only pages they can find are on LessWrong and arXiv, this might not be a good look.

Wikipedia pages worth (re)writing

Below are topics that have plentiful secondary literature but whose wiki pages are either missing or stubs.

Wikipedia Tips

Moral of the post: Work on Wikipedia pages! It doesn't take that long, there is plenty of low-hanging fruit, and it could be great for field-building. At the very least, trying it seems like a good experiment.

Thanks to gjm in the comments for recommending this edit.