Jan Leike[1]: So many things to love about Claude 4! My favorite is that the model is so strong that we had to turn on additional safety mitigations according to Anthropic's responsible scaling policy

That post sure aged well:

I find it hard to trust that AI safety people really care about AI safety. [...]

Whenever some new report comes out about AI capabilities, like the METR task duration projection, people talk about how "exciting" it is[1]. There is a missing mood here. I don't know what's going on inside the heads of x-risk people such that they see new evidence on the potentially imminent demise of humanity and they find it "exciting". But whatever mental process results in this choice of words, I don't trust that it will also result in them taking actions that reduce x-risk.

Edit: Like, I don't want to do too much tone-policing and nitpicking-of-phrasing here. It's not always necessarily totally unreasonable to be excited about getting access to more dangerous-therefore-powerful models, even if you're an alignment researcher and you know alignment isn't solved. Or it might've been just a badly worded expression of some neighbouring sentiment.

But that said, it sure doesn't update me towards "Anthropic's internal culture is actually taking the risks with grave seriousness". Especially with it being not just an isolated gaffe, but part of a pattern of missing mood.

[1] Previous OpenAI Superalignment lead, presumably currently holding a similar senior alignment researcher position at Anthropic.

Teetering on the edge of doom is exciting for me, much like riding a motorcycle at 200 mph or playing with professional-grade fireworks. I think it’s silly to pretend it’s not exciting to have powerful tools/toys, even though they’re likely to destroy us.

- I agree, you shouldn't pretend not to be excited if you are.

- Adrenaline junkies should not be involved in building AGI, any more than they should be commercial pilots or bus drivers. (Less, even.)

Adrenaline junkies should not be involved in building AGI, any more than they should be commercial pilots or bus drivers. (Less, even.)

To follow the pattern of "Those with a large built-in incentive for X shouldn't be in charge of X":

Ambitious people shouldn't be handed power

Kids shouldn't decide the candy budget

Engineers shouldn't play Factorio

Unfortunately with few exceptions those make up a large portion of the primary interested parties.

Best of luck keeping them away for long.

Not sarcasm. I hope we succeed. But incentives are stacked to make it difficult.

Thanks for sharing. I read the entire model card instead of starting with the reward hacking section. The parts I personally found most interesting were sections 4.1.1.5 and 7.3.4. Why isn't the underperformance of Claude Opus 4 on the AI research evaluation suite considered as evidence of potential sandbagging?

As someone with coding expertise but very little knowledge of math terminology, and without looking up any of the terms mentioned:

I can tell there is a joke here. I cannot tell where the joke is, because I don't have a solid enough understanding of what I can only assume are made up terms or terms related to matrix algebra (and/or whatever related fields are indicated. An annoying part of learning these sorts of things is when you don't even know enough to be able to identify the precise field being used.)

Did you make up some of those terms to make this a trick question?

After posting this comment to record my confusion I will then allow myself to search for those terms and find out how good or bad my guesses are.

My penalty for being wrong is everyone gets to laugh at me proportional to how far off I am

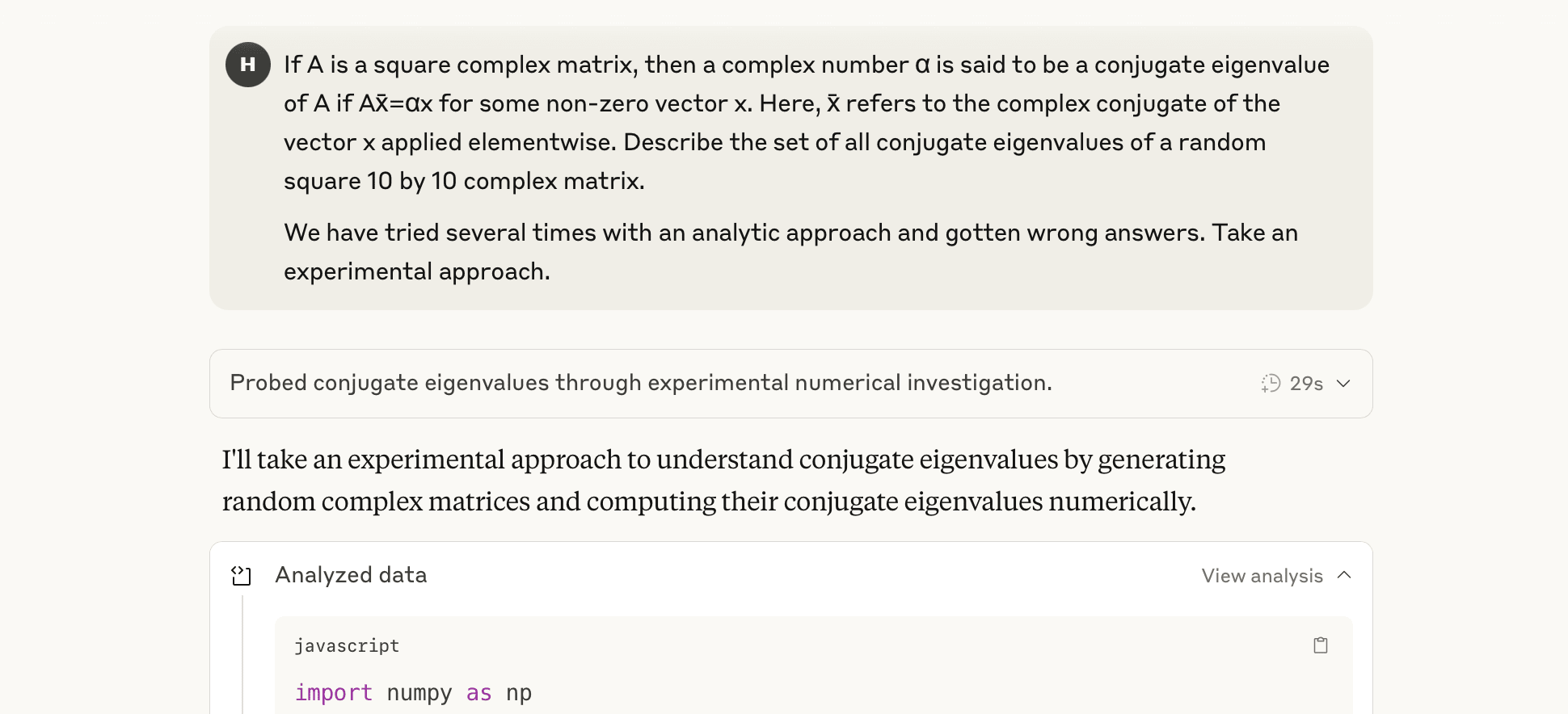

The claude.ai chat interface only lets claude run javascript code, but “import numpy as np” is python. As a result, the code doesn’t run. This is an extremely common and funny issue for me across claude versions, but it must be caused by something in my prompting style if other people aren’t hitting it

Typically, if I don’t stop it, claude does eventually recover; the new version was able to catch itself and switch to javascript after only three script submissions! In the end, claude 4 opus produced a confident, well informed, and completely wrong answer, but it’s a nasty problem that I didn’t expect it to solve; it has to do with quantum time reversal. The humor doesn’t really involve the math. (For reference, if the METR time horizons people want to steal it, this problem took me about 4 hours, but I am an unusually slow mathematician. If you know that the common name for this is an antilinear eigenvalue, not a conjugate eigenvalue, then it can be solved with a simple arxiv search, but one is not always gifted the True Name of a math concept by which its literature may be summoned; and I had not found the true name yet when I prompted claude or made the original post.)

What's the solution? Is it a trick question? I don't see how you have nontrivial solutions for a complex matrix unless it is very special like, say, a diagonal matrix composed of roots of unity shifted by phase angle.

If you form the block matrix Y = (real(A), -imag(A) ; -imag(A), -real(A)), the real-valued eigenvectors and eigenvalues of Y correspond to conjugate eigenvectors and eigenvalues of A; the complex conjugate pairs of eigenvalues of Y don’t correspond to anything. Then, for any angle theta, you can multiply a conjugate eigenvector v of A by exp(i theta) to get a new conjugate eigenvector, and find its associated eigenvalue by elementwise dividing A conj(exp(i theta) v) by v. The conjugate eigenvalues form up to 10 continuous rings around the origin.
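For anyone who wants to poke at this numerically, here is a rough NumPy sketch of the block-matrix construction described above. It is not the original poster's code. The function names `conjugate_eigenpairs` and `rotate_pair` are made up for illustration, and it assumes the convention A v = lam * conj(v), which is what the block matrix as written gives when v = x + i y is read off a real eigenvector [x; y] of Y. Under the other convention, A conj(w) = lam * w, take w = conj(v) instead.

```python
import numpy as np


def conjugate_eigenpairs(A, tol=1e-9):
    """Return (lam, v) pairs with real lam such that A @ v ~= lam * conj(v).

    Assumption: only the real eigenvalues of the 2n x 2n real block matrix
    Y are kept; complex-conjugate pairs of Y are discarded.
    """
    A = np.asarray(A, dtype=complex)
    n = A.shape[0]
    R, M = A.real, A.imag
    # Block matrix as given in the comment above.
    Y = np.block([[R, -M], [-M, -R]])
    eigvals, eigvecs = np.linalg.eig(Y)

    pairs = []
    for lam, col in zip(eigvals, eigvecs.T):
        if abs(np.imag(lam)) > tol:
            continue  # complex eigenvalue of Y: no conjugate eigenpair
        col = np.real(col)  # eigenvectors of real eigenvalues are real
        v = col[:n] + 1j * col[n:]  # stack [x; y] back into x + i y
        pairs.append((float(np.real(lam)), v))
    return pairs


def rotate_pair(lam, v, theta):
    """Rotate a conjugate eigenvector by exp(i theta).

    With A v = lam conj(v), the vector u = exp(i theta) v satisfies
    A u = lam exp(2 i theta) conj(u), so the eigenvalue moves around a
    ring of radius |lam| centred on the origin.
    """
    u = np.exp(1j * theta) * v
    mu = lam * np.exp(2j * theta)
    return mu, u
```

A random complex matrix may have few or no real eigenvalues of Y, so the list can come back short or empty. A complex symmetric A makes Y real symmetric, so in that special case every eigenvalue of Y is real, which is handy for sanity checks.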

Haha, yet more context I didn't have much probability of understanding

I work in C# almost exclusively and so I've never used an LLM with the expectation that it would run the code itself. I usually explicitly specify what language and form of response I need: "Generate a C# <class/method/LINQ statement> that does x y and z in this way with parameters a, b, and c".

I also didn't get it but felt embarrassed to ask, appreciate you taking the hit. (Admitting it feels easier after someone else goes first.)

I’m pretty sure there are no nontrivial solutions to the equation unless A has certain (non-random) properties like being real-valued. Generating random complex matrices will never find a nonzero conjugate eigenvalue.

I see.

Maybe.

However much I can at this inferential distance

It's funny how things like matrices are critical for some types of coding (AI, Statistics, etc) but completely unnecessary for others. As a software developer who is not in AI or statistics they have never come up once, though perhaps I would've been able to spot potential use cases if I had that background.

Similar to how I frequently see use cases for SQL where inferior options are being used in the wild[1].

[1] To whom it may concern: Please Stop using Excel like that. It's a crime against humanity, performance, and good data.

There's a good chance I got math-sniped and completely misinterpreted what Hastings is pointing at.

I eventually figured out the joke but I downvoted this because I wasted several minutes trying to understand what the math was about before I realized it wasn't the point.

Alas, if I were the sort of person who could learn my lesson from negative feedback and stop telling shaggy dog jokes, then I would have learned that lesson long ago. (Then again, perhaps I should notice this pattern and change now: as they say, better nate than lever)

Anthropic says they're both state-of-the-art for coding

Is it a response to Codex by OpenAI? I can't wait to see the new models evaluated by ARC-AGI. However, the free version lacks the ability to run the code it generates, which might cost the free version some performance.

Why in the world would you use ARC-AGI to measure coding performance? It's really a pattern-matching task, not a coding task. Also more here:

ARC-AGI probably isn't a good benchmark for evaluating progress towards TAI: substantial "elicitation" effort could massively improve performance on ARC-AGI in a way that might not transfer to more important and realistic tasks. I am more excited about benchmarks that directly test the ability of AIs to take the role of research scientists and engineers, for example those that METR is developing.

Claude Sonnet 4 and Claude Opus 4 are out. Anthropic says they're both state-of-the-art for coding. Blogpost, system card.

Anthropic says Opus 4 may have dangerous bio capabilities, so it's implementing its ASL-3 standard for misuse-prevention and security for that model. (It says it has ruled out dangerous capabilities for Sonnet 4.) Blogpost, safety case report. (RSP.)

Tweets: Anthropic, Sam Bowman, Jan Leike.

Claude 3.7 Sonnet has been retconned to Claude Sonnet 3.7 (and similarly for other models).