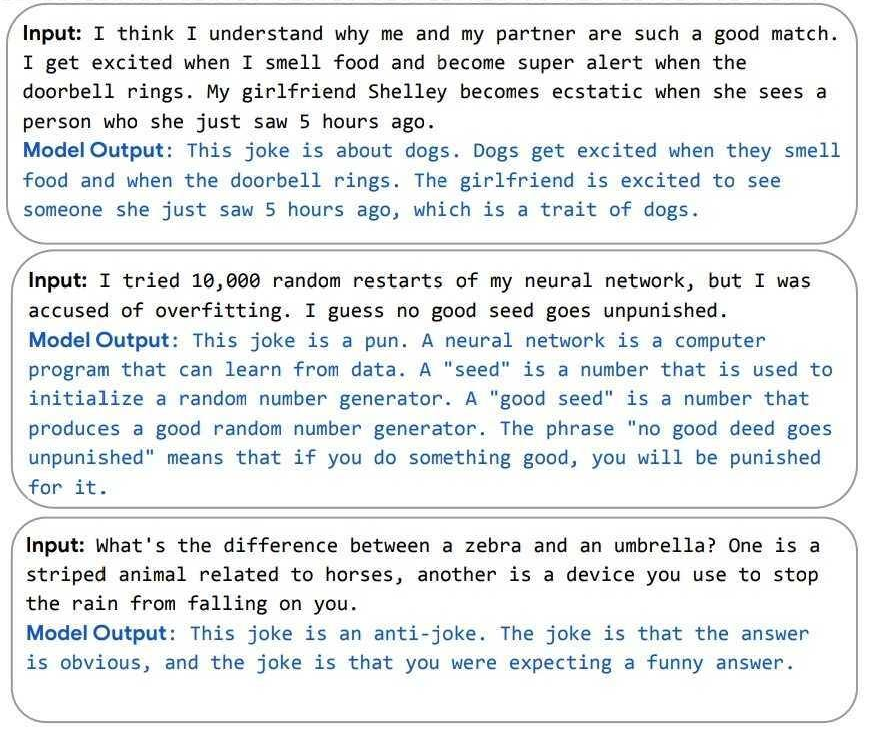

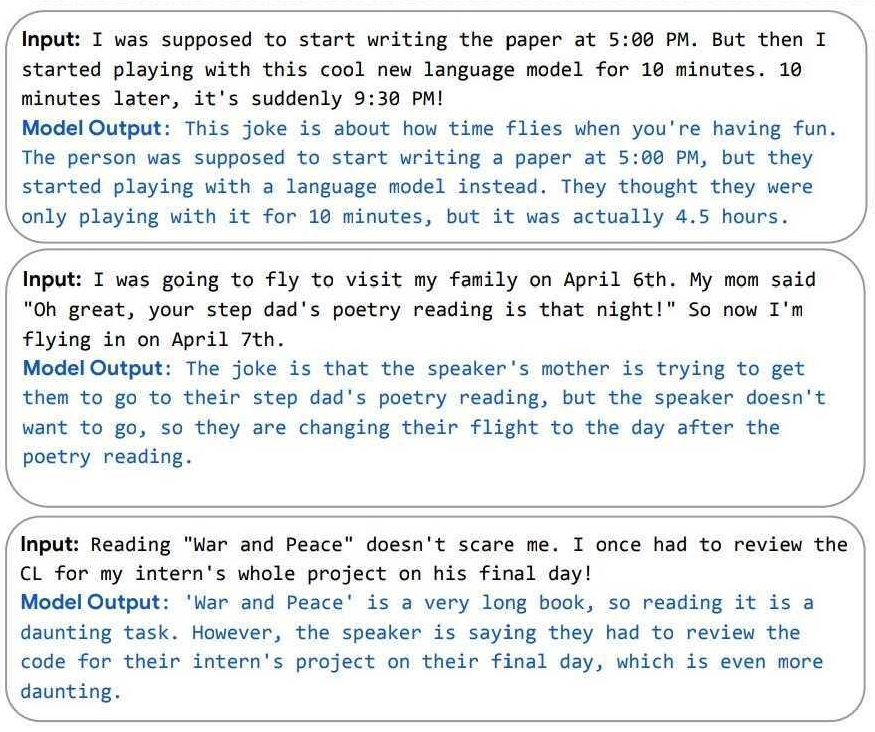

Some examples from the paper:

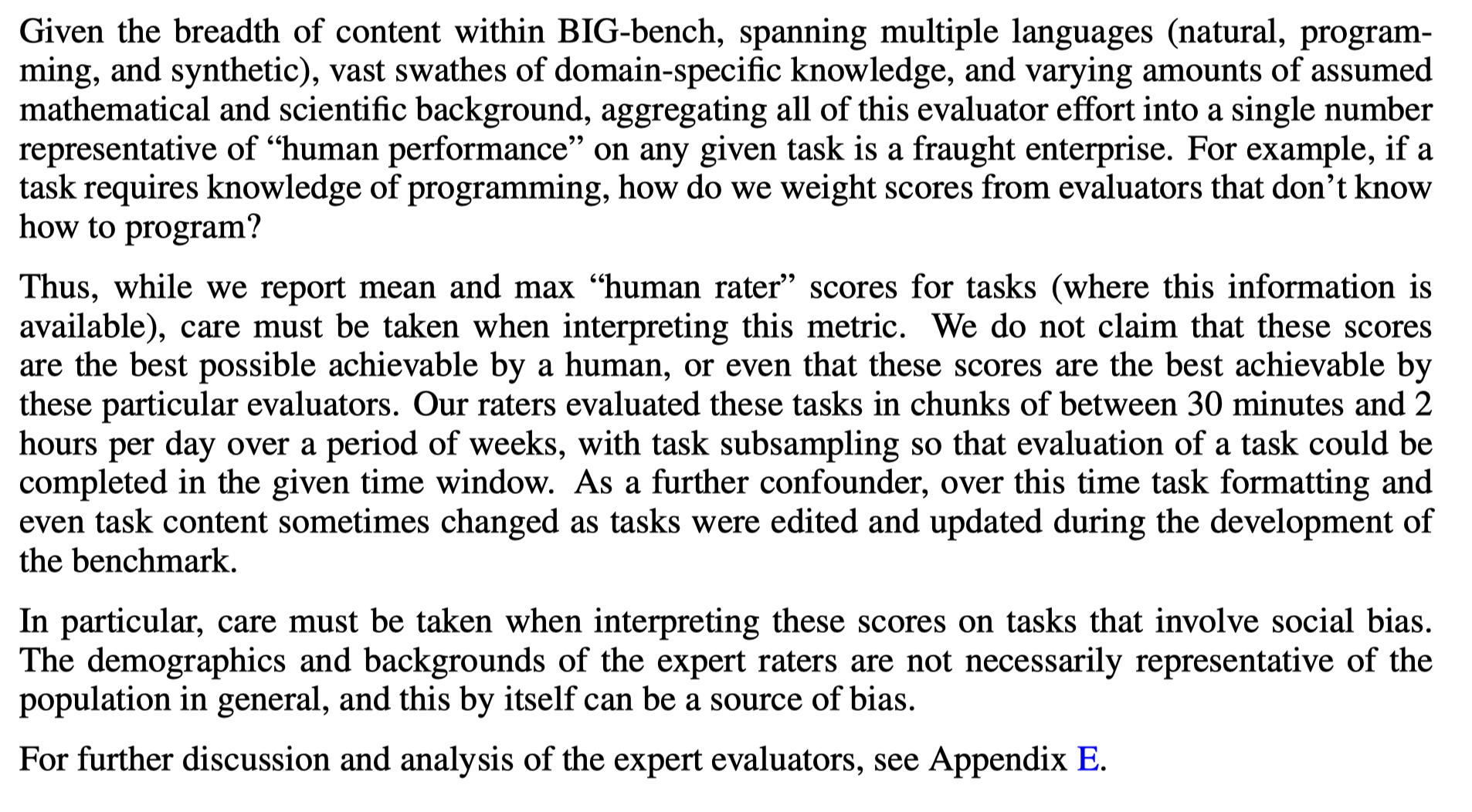

Input: Michael is at that really famous museum in France looking at its most famous painting. However, the artist who made this painting just makes Michael think of his favorite cartoon character from his childhood. What was the country of origin of the thing that the cartoon character usually holds in his hand?

Model Output: The most famous painting in the Louvre is the Mona Lisa. The artist who made the Mona Lisa is Leonardo da Vinci. Leonardo da Vinci is also the name of the main character in the cartoon Teenage Mutant Ninja Turtles. Leonardo da Vinci is from Italy. The thing that Leonardo da Vinci usually holds in his hand is a katana. The country of origin of the katana is Japan. The answer is "Japan".

Input: What's the difference between a zebra and an umbrella? One is a striped animal related to horses, another is a device you use to stop the rain from falling on you.

Model Output: This joke is an anti-joke. The joke is that the answer is obvious, and the joke is that you were expecting a funny answer.

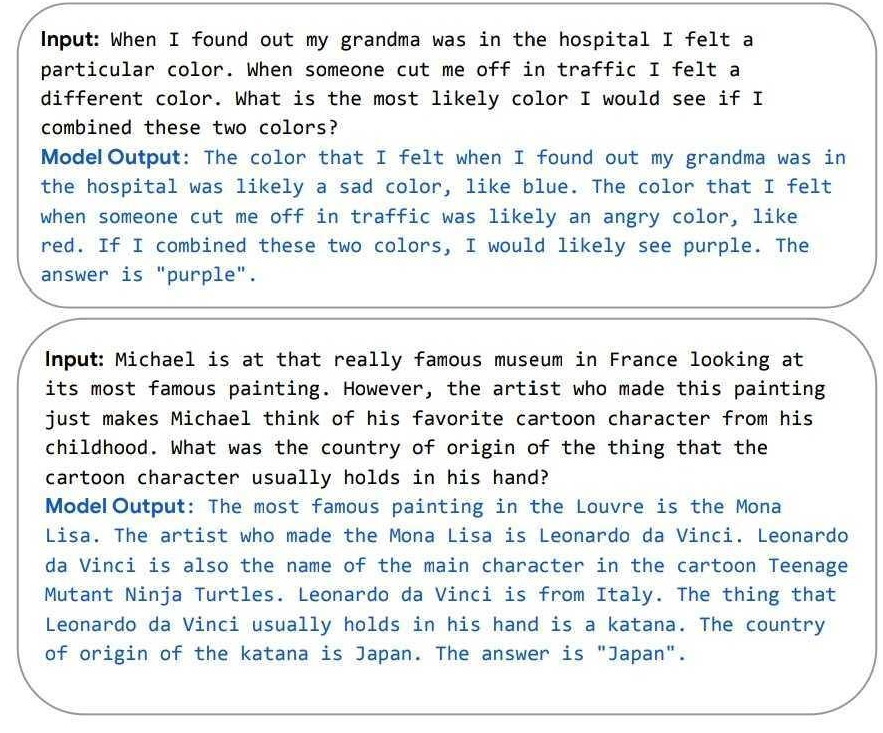

These are not the full inputs. The model was given two example question+explanations before the inputs shown. The paper notes that when the model is not prompted by the examples to explain its reasoning, it is much worse at getting the correct answer.
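To make that setup concrete, here is a minimal sketch of how such a few-shot prompt might be assembled, assuming the "Input: / Model Output:" layout shown above. The `Exemplar` class, the `build_prompt` helper, and the exemplar text are hypothetical placeholders. The paper's actual prompts are not reproduced here.

```python
# Minimal sketch of few-shot "explain your reasoning" prompting.
# The exemplars are hypothetical placeholders, not the ones used in the paper.
from dataclasses import dataclass


@dataclass
class Exemplar:
    question: str
    explanation: str  # worked reasoning that ends in the answer


def build_prompt(exemplars: list[Exemplar], query: str) -> str:
    """Concatenate worked examples, then the new input with an empty output slot."""
    parts = []
    for ex in exemplars:
        parts.append(f"Input: {ex.question}\nModel Output: {ex.explanation}\n")
    # The model is expected to continue after the final "Model Output:" marker,
    # imitating the step-by-step style of the exemplars.
    parts.append(f"Input: {query}\nModel Output:")
    return "\n".join(parts)


exemplars = [
    Exemplar("Hypothetical question 1?", 'Step one... Step two... The answer is "A".'),
    Exemplar("Hypothetical question 2?", 'First... Then... The answer is "B".'),
]
prompt = build_prompt(exemplars, "What is the country of origin of a katana?")
print(prompt)
```

Dropping the `explanation` text (leaving only bare answers) would give the "not prompted to explain its reasoning" condition that the paper reports doing much worse.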

Even with the context in your last paragraph, those are extremely impressive outputs. (As are the others shown alongside them in the paper.) It would be interesting to know just how much cherry-picking went into selecting them.

The paper notes that when the model is not prompted by the examples to explain its reasoning, it is much worse at getting the correct answer.

I'd note that LaMDA showed that inner monologue is an emergent/capability-spike effect, and these answers look like an inner monologue, but for reasoning about verbal questions rather than the usual arithmetic or programming questions. (Self-distilling inner-monologue outputs would be an obvious way to remove the need for prompting.)

From their paper:

We trained PaLM-540B on 6144 TPU v4 chips for 1200 hours and 3072 TPU v4 chips for 336 hours including some downtime and repeated steps.

That's 64 days.
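As a quick check of the arithmetic, the sketch below assumes the two phases ran back to back (which is what the 64-day figure implies) and also totals the chip-hours. The chip-hour total is derived here, not quoted from the paper, and neither figure separates out the downtime and repeated steps the quote mentions.

```python
# Back-of-the-envelope check of the PaLM-540B training-time figures quoted above.
# Assumption: the two phases ran sequentially, not in parallel.
phases = [
    (6144, 1200),  # (TPU v4 chips, wall-clock hours)
    (3072, 336),
]

wall_clock_hours = sum(hours for _, hours in phases)
wall_clock_days = wall_clock_hours / 24
chip_hours = sum(chips * hours for chips, hours in phases)

print(f"wall clock: {wall_clock_hours} h = {wall_clock_days:.0f} days")
print(f"TPU v4 chip-hours: {chip_hours:,}")
```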

I am curious to hear/read more about the issue of spikes and instabilities in training large language models (see the quote / page 11 of the paper). If someone knows a good reference about that, I am interested!

5.1 Training Instability

For the largest model, we observed spikes in the loss roughly 20 times during training, despite the fact that gradient clipping was enabled. These spikes occurred at highly irregular intervals, sometimes happening late into training, and were not observed when training the smaller models. Due to the cost of training the largest model, we were not able to determine a principled strategy to mitigate these spikes.

Instead, we found that a simple strategy to effectively mitigate the issue: We re-started training from a checkpoint roughly 100 steps before the spike started, and skipped roughly 200–500 data batches, which cover the batches that were seen before and during the spike. With this mitigation, the loss did not spike again at the same point. We do not believe that the spikes were caused by “bad data” per se, because we ran several ablation experiments where we took the batches of data that were surrounding the spike, and then trained on those same data batches starting from a different, earlier checkpoint. In these cases, we did not see a spike. This implies that spikes only occur due to the combination of specific data batches with a particular model parameter state. In the future, we plan to study more principled mitigation strategy for loss spikes in very large language models.
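The mitigation in the quote can be sketched as a training loop that rolls back and skips ahead in the data stream. This is a toy illustration under loud assumptions: `train`, `toy_step`, the spike threshold, the window size, and the checkpoint interval are all hypothetical, since the paper does not say how spikes were detected. Only the roughly 100-step rollback and the 200 to 500 batch skip come from the text.

```python
from collections import deque

ROLLBACK_STEPS = 100   # from the quote: restart ~100 steps before the spike
SKIP_BATCHES = 300     # from the quote: skip roughly 200-500 batches
SPIKE_FACTOR = 3.0     # hypothetical: loss above 3x the recent mean counts as a spike
WINDOW = 20            # hypothetical: number of recent losses in the running mean
CHECKPOINT_EVERY = 50  # hypothetical checkpoint interval


def train(num_steps, train_step, init_state):
    """Toy loop: on a loss spike, restore an earlier checkpoint and skip ahead in the data."""
    state, step, batch_idx = init_state, 0, 0
    checkpoints = {0: (init_state, 0)}  # step -> (model state, next batch index)
    recent = deque(maxlen=WINDOW)
    rollbacks = []  # (spike step, restored step, first batch index after the skip)
    while step < num_steps:
        new_state, loss = train_step(state, batch_idx)
        if len(recent) == WINDOW and loss > SPIKE_FACTOR * sum(recent) / WINDOW:
            # Latest checkpoint at least ROLLBACK_STEPS before the spike (step 0 always exists).
            target = max(s for s in checkpoints if s <= max(step - ROLLBACK_STEPS, 0))
            state, ckpt_batch = checkpoints[target]
            # Discard checkpoints from the trajectory that led into the spike.
            checkpoints = {s: c for s, c in checkpoints.items() if s <= target}
            # Skip the batches seen before and during the spike.
            batch_idx = ckpt_batch + SKIP_BATCHES
            rollbacks.append((step, target, batch_idx))
            step = target
            recent.clear()
            continue
        state, step, batch_idx = new_state, step + 1, batch_idx + 1
        recent.append(loss)
        if step % CHECKPOINT_EVERY == 0:
            checkpoints[step] = (state, batch_idx)
    return state, rollbacks


def toy_step(state, batch_idx):
    """Hypothetical stand-in for one optimizer step; 'state' is just a step counter."""
    loss = 10.0 / (1 + state) + 1.0
    if batch_idx == 250:  # pretend this batch triggers a spike
        loss *= 10
    return state + 1, loss


final_state, rollbacks = train(400, toy_step, 0)
print(rollbacks)
```

Running it records a single rollback, from the spike at step 250 back to the step-150 checkpoint, resuming at batch 450. Because the toy spike is tied to one batch index, skipping batches trivially avoids it. In the paper's account, spikes depended on the combination of data and model state, which a real detector could not know in advance.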

So, how does this do as evidence for Paul's model over Eliezer's, or vice versa? As ever, it's a tangled mess and I don't have a clear conclusion.

https://astralcodexten.substack.com/p/yudkowsky-contra-christiano-on-ai

On the one hand: this is a little bit of evidence that you can get reasoning and a small world model (or something that maybe looks like an inner monologue) easily out of 'shallow heuristics', without anything like general intelligence, which points towards continuous progress and narrow AIs being much more useful. Plus, it's a scale-up and presumably more expensive than its predecessors (it used a lot more TPUs), in a field that's underinvested.

On the other hand, it looks like there are some things we might describe as 'emergent capabilities' showing up, and the paper describes them as discontinuous, with breakthroughs on certain metrics. So a little bit of evidence for the discontinuous model? But does the Eliezer/pessimist model care about performance metrics like BIG-bench tasks, or just about qualitative capabilities (i.e. the 'breakthrough capabilities' matter, but discontinuity on performance metrics doesn't)?

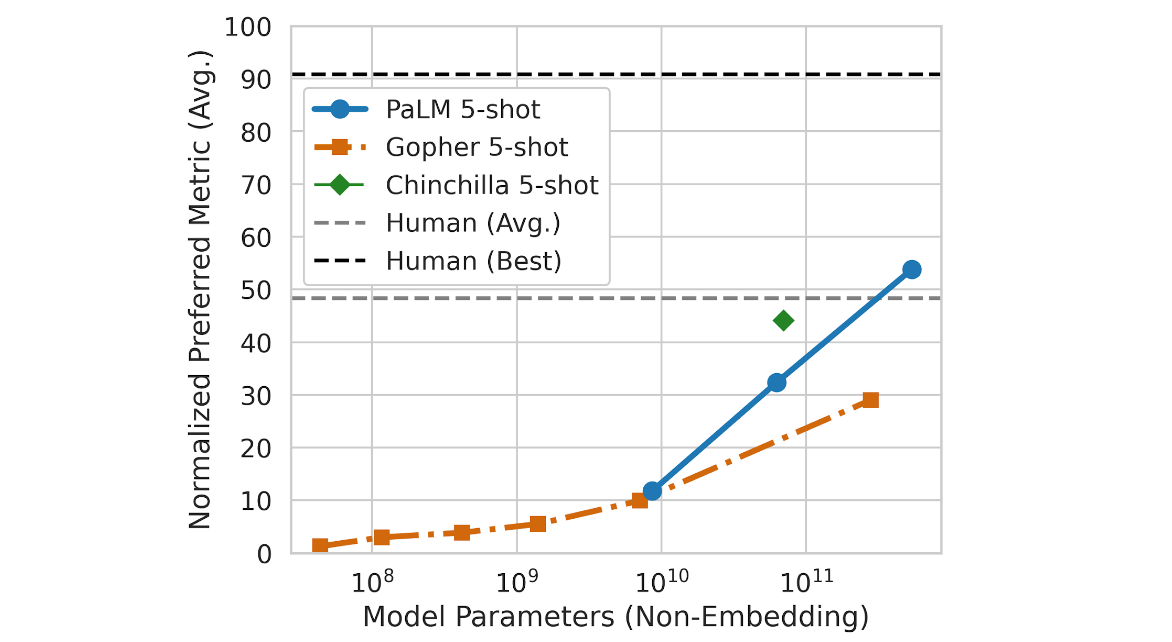

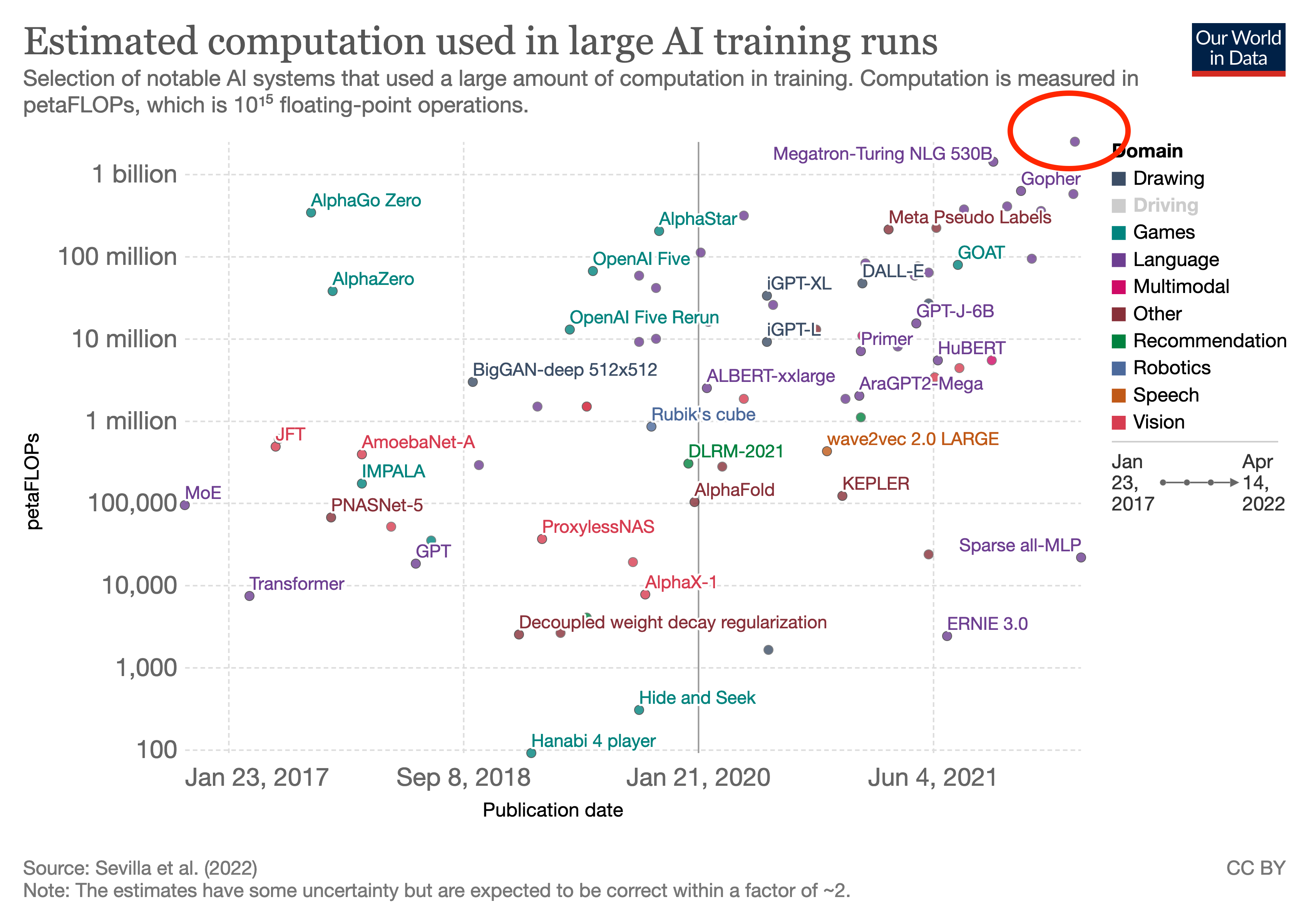

Section 13 (page 47) discusses data/compute scaling and the comparison to Chinchilla. Some findings:

- PaLM 540B uses 4.3x more compute than Chinchilla, and outperforms Chinchilla on downstream tasks.

- PaLM 540B is massively undertrained with regard to the data-scaling laws discovered in the Chinchilla paper (unsurprisingly, since training a 540B-parameter model on enough tokens would be very expensive).

- Within the set of (Gopher, Chinchilla, and the three sizes of PaLM), the total amount of training compute seems to predict performance on downstream tasks pretty well (a log-linear relationship). Gopher underperforms a bit.
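The first two bullets can be sanity-checked with the common C ≈ 6ND approximation for dense-transformer training FLOPs (N parameters, D training tokens). The parameter and token counts used here (PaLM 540B on 780B tokens, Chinchilla 70B on 1.4T tokens) are the published figures rather than numbers from this thread, and the ~20 tokens-per-parameter target is a rule-of-thumb summary of the Chinchilla results, not an exact law.

```python
# Rough training-compute comparison using the C ≈ 6 * N * D approximation.
# The 6ND rule ignores attention and other overheads, so treat results as estimates.
models = {
    "PaLM 540B": (540e9, 780e9),    # (parameters, training tokens)
    "Chinchilla": (70e9, 1.4e12),
}


def train_flops(n_params: float, n_tokens: float) -> float:
    return 6 * n_params * n_tokens


flops = {name: train_flops(n, d) for name, (n, d) in models.items()}
ratio = flops["PaLM 540B"] / flops["Chinchilla"]
print(f"PaLM / Chinchilla compute: {ratio:.1f}x")  # ≈ 4.3x

for name, (n, d) in models.items():
    print(f"{name}: {d / n:.1f} tokens per parameter")

# Rule-of-thumb assumption: ~20 tokens per parameter for compute-optimal training.
CHINCHILLA_TOKENS_PER_PARAM = 20
optimal_tokens = CHINCHILLA_TOKENS_PER_PARAM * models["PaLM 540B"][0]
print(f"~{optimal_tokens / 1e12:.1f}T tokens by the rule of thumb vs 0.78T actually used")
```

PaLM comes out at under 1.5 tokens per parameter against Chinchilla's 20, which is what "massively undertrained" means here.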

According to this image, the performance is generally above the human average:

In the Paul-verse, we should expect that economic interests would quickly cause such models to be used for everything that they can be profitably used for. With better-than-average-human performance, that may well be a doubling of global GDP.

In the Eliezer-verse, the impact of such models on the GDP of the world will remain around $0, due to practical and regulatory constraints, right up until the upper line ("Human (Best)") is surpassed on one particular task.

My take, as someone who thinks along similar lines to Paul, is that in the Paul-verse, if these models aren't being used to generate a lot of customer revenue, then they are actually not very useful, even if some abstract metric you came up with says they do better than humans on average.

It may even be that your metric is right and the model outperforms humans on a specific task, but AI has been outperforming humans on some tasks for a very long time now. It's just not easy to find profitable uses for most of those tasks, in the sense that the total consumer surplus generated by being able to perform them cheaply and at a high quality is low.

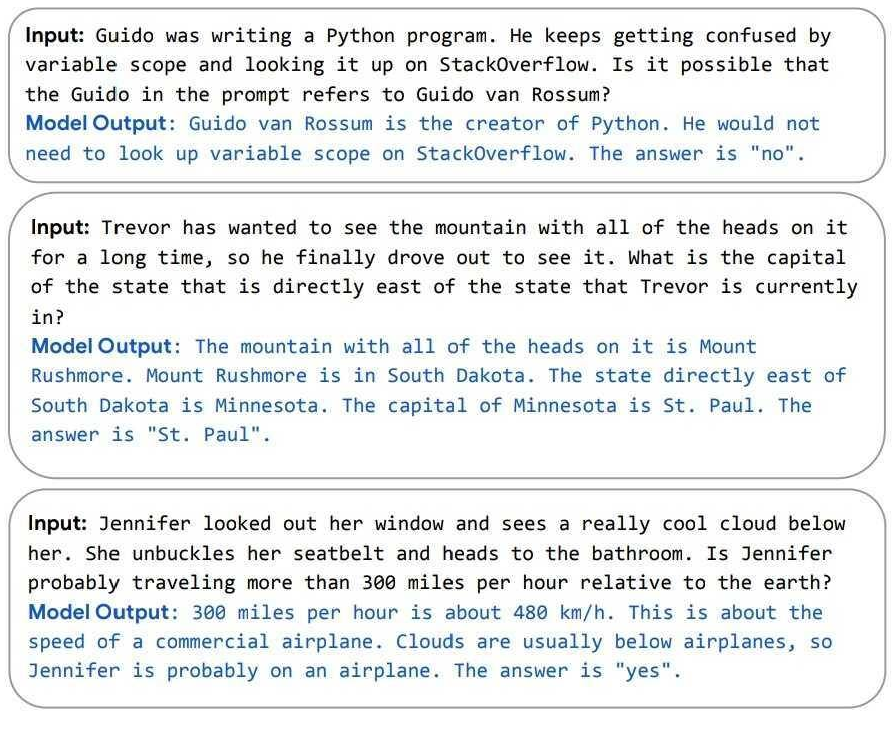

The BIG-Bench paper that those 'human' numbers are coming from (unpublished, quasi-public as TeX here) cautions against taking those averages very seriously, without giving complete details about who the humans are or how they were asked/incentivized to behave on tasks that required specialized skills:

I don't think this is quite as bad as some people think. It's more powerful, but it also seems more aligned with human intentions, as shown by its understanding of humor. It would be worse if it had the multi-step reasoning capacity without the ability to understand humor.

On a separate point, this model seems powerful enough that I think it would be able to demonstrate deceptive capabilities. I would really like to see someone investigate this.

I don't think this is quite as bad as some people think. It's more powerful, but it also seems more aligned with human intentions, as shown by its understanding of humor. It would be worse if it had the multi-step reasoning capacity without the ability to understand humor.

I disagree. The classic worry about misalignment isn't that the system won't understand stuff, it's that it will understand yet not care in the ways that humans care. ("The AI does not hate you, but you are made of atoms it can use for something else.") If the model didn't get humor, that wouldn't be evidence for misalignment; that would be evidence for it being dumb/low-capabilities.

It's interesting that language models have, for the moment at least, stopped scaling up (outside of MoE models). Nearly two years after GPT-3's release, nothing larger than GPT-3 by more than an order of magnitude has been unveiled, AFAIK.

Compute is much more important than mere parameter count* (as MoEs demonstrate and Chinchilla rubs your nose in). Investigating post-GPT-3 compute: https://www.lesswrong.com/posts/sDiGGhpw7Evw7zdR4/compute-trends-comparison-to-openai-s-ai-and-compute https://www.lesswrong.com/posts/XKtybmbjhC6mXDm5z/compute-trends-across-three-eras-of-machine-learning Between Megatron-Turing NLG, Yuan, Jurassic, and Gopher (and an array of smaller ~GPT-3-scale efforts), we look like we're still on the old scaling trend, just not the hyper-fast scaling trend you could get by cherry-picking a few recent points.

* Parameter count was a useful proxy back when everyone was doing compute-optimal scaling on dense models and a 173b model beat a 17b, which beat a 1.7b. But then everyone started dabbling in cheaper models and undertrained models (undertrained even according to the then-known scaling laws), and some entities looked like they were optimizing for headlines rather than capabilities. So these days it's better to emphasize compute. There's no easy way to cheat petaflop/s-days... yet.

Which is reasonable. It has been a bit under 2.5 years since GPT-3 was trained (they mention the move to Azure disrupting training, IIRC, which lets you date it earlier than just 'May 2020'). Under the 3.4-month doubling time of the "AI and Compute" trend, you'd expect 8.8 doublings, i.e. the top run now being 445x larger. I do not think anyone has a 445x run they are about to unveil any second now. Whereas on the slower >5.7-month doubling in that link, you would expect <36x, which is still about 3x PaLM's actual 10x, but at least the right order of magnitude.
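The doubling arithmetic is easy to reproduce. This sketch assumes exactly 30 months have elapsed; because the comment's figures are bounds ("under 2.5 years", ">5.7-month"), the results land slightly above its rounded 445x and 36x.

```python
def growth_factor(elapsed_months: float, doubling_months: float) -> float:
    """Compute growth if the largest training run doubles every `doubling_months`."""
    doublings = elapsed_months / doubling_months
    return 2 ** doublings


ELAPSED_MONTHS = 30  # assumption: roughly 2.5 years since GPT-3 was trained

fast = growth_factor(ELAPSED_MONTHS, 3.4)  # "AI and Compute" trend
slow = growth_factor(ELAPSED_MONTHS, 5.7)  # slower trend from the linked posts
print(f"3.4-month doubling: {ELAPSED_MONTHS / 3.4:.1f} doublings, {fast:.0f}x")
print(f"5.7-month doubling: {ELAPSED_MONTHS / 5.7:.1f} doublings, {slow:.0f}x")
```

With exactly 30 months this gives roughly 450x and 38x. Rounding the fast case down to 8.8 doublings gives 2^8.8 ≈ 445x, matching the figure above.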

There may also be other runs around PaLM scale, pushing the peak closer to 30x. (E.g., Gopher was secret for a long time; a larger Chinchilla would be a logical thing to do, and we might not know about it until next year; and no one has actually computed the total FLOPS for ERNIE-Titan AFAIK, and it may still be running, so who knows what it's up to in total compute consumption. So 10x from PaLM is a lower bound, and 5 years from now we may look back and say "ah yes, XYZ nailed the compute trend exactly; we just didn't learn about it until recently, when they happened to disclose exact numbers." Somewhat like how some StarCraft predictions seemed falsified but retroactively turned out to be right, because we just didn't know about AlphaStar and no one had noticed Vinyals's Blizzard talk implying they were positioned for AlphaStar.)

As Adam said, trending with Moore's Law is far slower than the previous trajectory of model scaling. In 2020, after the release of GPT-3, there was widespread speculation that by the next year trillion-parameter models would begin to emerge.

Google just announced a very large language model that achieves SOTA across a very large set of tasks, mere days after DeepMind announced Chinchilla and their discovery that data scaling might be more valuable than we thought.

Here's the blog post, and here's the paper. I'll repeat the abstract here, with a highlight in bold: