I will use orthonormal's definition of transformative AI, which I read as: transformative AI would permanently alter world GDP growth rates, increasing them by 3x-10x. Economists disagree on whether that would actually happen, i.e. economic growth could be slowed down by human factors, but my intuition says that's unlikely: human-level AI will lead to much higher economic growth.

The assumption that I now think is likely to be true (90% confident) is that it's possible to reach transformative AI by using deep learning, a lot of compute and data, and the right architecture (which could also be different from a Transformer). Having said that, scaling models 1000x further will require significant engineering effort (e.g. improving model/data parallelism), and it will take some time. We are also reaching the point where spending hundreds of millions of dollars on compute will have to be justified, so the ROI of these projects will be important. I have little doubt that they will be useful, but those considerations could slow down the exponential growth (currently doubling every 3.4 months). For example, training a 100 trillion parameter GPT model today (roughly 1 zettaflop/s-day) would require an ad-hoc supercomputer with 100,000 A100 GPUs running for 100 days, costing a few billion $. Clearly, such a cost would be justified and very profitable if we were sure to get a very intelligent AI system, but from the data we have now we aren't yet sure.
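As a rough sanity check on the numbers above, here is a minimal Python sketch. It is not from the original estimate: the per-GPU peak throughput (312 TFLOP/s, the dense FP16 tensor-core figure for an A100) and the 30% sustained utilization are my assumptions, and the function names are made up for illustration. It also treats the "1000x" as a factor on compute, which is a simplification.

```python
import math

# Assumed hardware figures (not from the post itself):
A100_PEAK_FLOPS = 312e12   # peak dense FP16 tensor-core FLOP/s per A100
UTILIZATION = 0.30         # assumed fraction of peak sustained in training

ZETTA = 1e21


def cluster_zettaflops_days(num_gpus, days,
                            peak_flops=A100_PEAK_FLOPS,
                            utilization=UTILIZATION):
    """Total training compute in zettaFLOP/s-days for a cluster run."""
    sustained_flops = num_gpus * peak_flops * utilization  # FLOP/s
    return sustained_flops * days / ZETTA


def months_to_scale(factor, doubling_months=3.4):
    """Months needed to grow by `factor` at a fixed doubling time."""
    return math.log2(factor) * doubling_months


if __name__ == "__main__":
    compute = cluster_zettaflops_days(num_gpus=100_000, days=100)
    print(f"100,000 A100s for 100 days: {compute:.2f} zettaFLOP/s-days")
    print(f"1000x at a 3.4-month doubling: {months_to_scale(1000):.1f} months")
```

Under these assumptions the cluster delivers a bit under 1 zettaFLOP/s-day, in line with the rough figure above, and an uninterrupted 3.4-month doubling would reach 1000x in a little under three years. A different utilization or precision mode would shift the first number proportionally.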

But I do expect 100 trillion parameter models by the end of 2024, and there is a small chance that they could already be intelligent enough to be transformative AIs.

[10/50/90% || 2024/2030/2040] - 50% scenario: Microsoft is the first to release a 1 trillion parameter model in 2020, using it to power an Azure NLP API. In 2021 and 2022, there is significant research into better architectures, and other companies release their own trillion-parameter models: to do that, they invest in even larger GPU clusters. Nvidia's datacenter revenue grows >100% year over year, and the company passes a 1 trillion $ market cap.

By the end of 2023, a lot of low-effort cognitive jobs, such as call center work, are completely automated. It is also common to have a personal virtual assistant. We also reach Level 5 autonomous driving, but the models are so large that they have to run remotely: low-latency 5G connections and many widely distributed GPU clusters are the key. In 2024, the first 100 trillion parameter model is unveiled, and it can write novel math proofs/papers. This justifies a race towards larger models by all tech companies: private supercomputers/datacenters costing 1 to 10 billion $ are built to train quadrillion-scale models (2025-2026). The world GDP growth rate starts accelerating and reaches 5-10% per year. Nvidia breaks above a 5 trillion $ market cap.

By 2028-2030, large nations/blocs such as the EU, China and the US invest hundreds of billions of $ into 1-5 gigawatt-class supercomputers, or high-speed networks of them, to train and run their multi-quadrillion-scale models, which reach superhuman intelligence and bring transformative AI. World GDP grows 30%-40% a year. Nvidia is a 25 T$ company.
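To give a feel for what the growth rates in this scenario mean, here is a small sketch that converts an annual growth rate into a doubling time for world GDP. The function name is hypothetical and the only inputs are the growth rates quoted in the scenario; it assumes the rate stays constant and compounds annually.

```python
import math


def doubling_time_years(annual_growth):
    """Years for GDP to double at a constant annual growth rate.

    `annual_growth` is a fraction, e.g. 0.05 for 5% per year.
    """
    return math.log(2) / math.log(1 + annual_growth)


if __name__ == "__main__":
    # Growth rates taken from the scenario: 5-10% (mid-2020s), 30-40% (2028-2030).
    for rate in (0.05, 0.10, 0.30, 0.40):
        years = doubling_time_years(rate)
        print(f"{rate:.0%} per year -> GDP doubles in {years:.1f} years")
```

At 5% per year the world economy doubles in roughly 14 years, while at 40% per year it doubles in about 2 years, which is the kind of change the "transformative" label is pointing at.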

In Developmental Stages of GPTs, orthonormal explains why we might need "zero more cognitive breakthroughs" to reach transformative AI, aka "an AI capable of making an Industrial Revolution sized impact". More specifically, he says that "basically, GPT-6 or GPT-7 might do it".

Besides, Are we in an AI overhang? makes the case that "GPT-3 is the trigger for 100x larger projects at Google, Facebook and the like, with timelines measured in months."

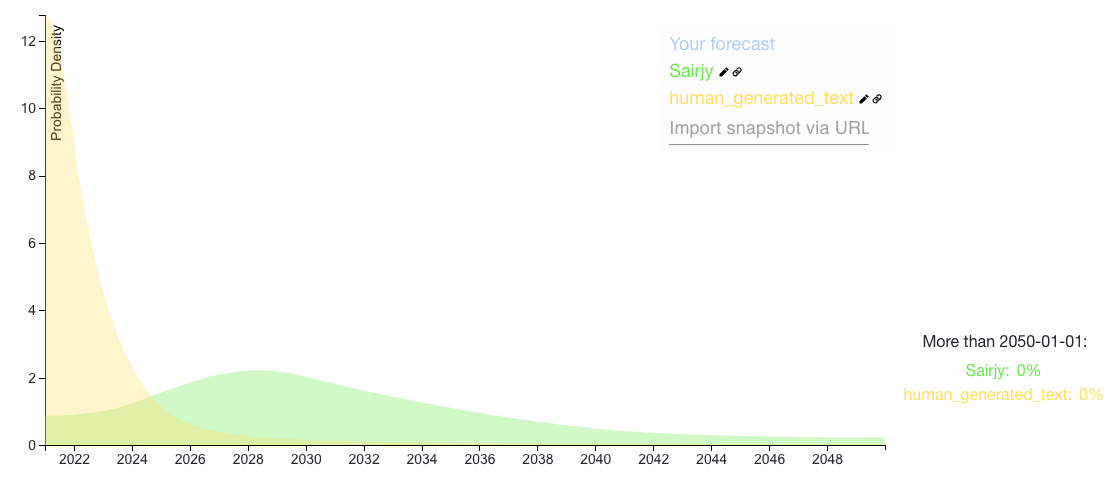

Now, assuming that "GPT-6 or GPT-7 might do it" and that timelines are "measured in months" for 100x larger projects, in what year will there be a 10% (resp. 50% / 90%) chance of having transformative AI and what would the 50% timeline look like?