(I'm posting this comment under this public throwaway account for emotional reasons.)

What made you think the torture hypothetical was a good idea? When I read a math book, I expect to read about math. If the writer insists on using the most horrifying example possible to illustrate their math for no reason at all, that's extremely rude. Granted, sometimes there might be a good reason to mention horrifying things, like if you're explicitly making a point about scope-insensitive moral intuitions being untrustworthy. But if you're just doing some ordinary mundane decision theory, you can just say "lose $50," or "utility −10." Then your more sensitive readers might actually learn some new math instead of wasting time and energy desperately trying not to ask whether life is worth living for fear that the answer is obviously that it isn't.

Thank you for the feedback, and sorry for causing you distress! I genuinely did not take into consideration that this choice could cause distress, and it could have occurred to me, and I apologize.

On how I came to think that it might be a good idea (as opposed to missing that it might be a bad idea): While there's math in this post, the point is really the philosophy rather than the math (whose role is just to help think more clearly about the philosophy, e.g. to see that PBDT fails in the same way as NBDT on this example). The original counterfactual mugging was phrased in terms of dollars, and one thing I wondered about in the early discussions was whether thinking in terms of these low stakes made people think differently than they would if something really important were at stake. I'm reconstructing, it's been a while, but I believe that's what made me rephrase it in terms of the whole world being at stake. Later, I chose the torture as something that, on a scale I'd reflectively endorse (as opposed, I acknowledge, to actual psychology), is much less important than the fate of the world, but still important. But I entirely agree that for the purposes of this post, "paying $1" (any small negative effect) would have made the point just as well.

Yes, I now regret making this mistake at the dawn of the site and regret more having sneezed the mistake onto other people.

Had you used small and large numbers instead of the terms torture and dust specks, the whole post would have been trivial. I learned a fair bit about my own thinking in the aftermath of reading that infamous post, and I suspect I am not the only one. I even intentionally used politically charged terms in my own post.

Username explicitly linked to torture vs. dust specks as a case where it makes sense to use torture as an example. Username is just objecting to using torture for general decision theory examples where there's no particular reason to use that example.

That doesn't change the fact that there is no reason to involve torture in this thought experiment. (or most of the thought experiments we put it in)

I think as a general rule, we should try to frame problems into positive utility as opposed to negative utility, unless we have a reason not to.

One reason for this is that people feel guilt for participating in a thought experiment where they have to choose between two bad things, and they do not feel the same guilt for choosing between two good things. Another is that people might have a feeling like they have a moral obligation to avoid certain bad scenarios no matter what, and this might interfere with their ability to compare them to other things rationally. I do not think that people often have the same feeling of moral obligation to irrationally always seek a certain good scenario.

There is a much more principled possibility, which I'll call pseudo-Bayesian decision theory, or PBDT. PBDT can be seen as re-interpreting updating as saying that you're indifferent about what happens in possible worlds in which you don't exist as a conscious observer, rather than ruling out those worlds as impossible given your evidence.

I can see why you'd rule out being completely indifferent about what happens in possible worlds in which you don't exist, but what about something in between being fully updateless and fully updateful? What if you cared less (but weren't completely indifferent) about worlds in which you don't exist as a conscious observer, or perhaps there are two parts to your utility function, an other-regarding part which is updateless and a self-regarding part which changes as you make observations?

Suppose you face a counterfactual mugging where the dollar amounts are $101 and $100 instead of the standard $10000 and $100. If you're fully updateless then you'd still pay up, but if you cared less about the other world (or just the version of yourself in the other world) then you wouldn't pay. Being fully updateless seems problematic for reasons I explained in Where do selfish values come from? so I'm forced to consider the latter as a possibility.

Imagine that Omega tells you that it threw its coin a million years ago, and would have turned the sky green if it had landed the other way. Back in 2010, I wrote a post arguing that in this sort of situation, since you've always seen the sky being blue, and every other human being has also always seen the sky being blue, everyone has always had enough information to conclude that there's no benefit from paying up in this particular counterfactual mugging, and so there hasn't ever been any incentive to self-modify into an agent that would pay up ... and so you shouldn't.

I think this sort of reasoning doesn't work if you also have a precommitment regarding logical facts. Then you know the sky is blue, but you don't know what that implies. When Omega informs you about the logical connection between sky color, your actions, and your payoff, then you won't update on this logical fact. This information is one implication away from the logical prior you precommitted yourself to. And the best policy given this prior, which contains information about sky color, but not about this blackmail, is not to pay: not paying will a priori just change the situation in which you will be blackmailed (hence, what blue sky color means), but not the probability of a positive intelligence explosion in the first place. Knowing or not knowing the color of the sky doesn't make a difference, as long as we don't know what it implies.

(HT Lauro Langosco for pointing this out to me.)

I think the "transparent Newcomb" version of the blue-sky scenario is more well-known? Might want to point that out.

There is a much more principled possibility, which I'll call pseudo-Bayesian decision theory, or PBDT. PBDT can be seen as re-interpreting updating as saying that you're indifferent about what happens in possible worlds in which you don't exist as a conscious observer, rather than ruling out those worlds as impossible given your evidence.

I can't follow the math but this feels like it'd inexorably lead to Quantum Suicide.

The naive answer to that situation still sounds correct to me. (At least, if one is to be consistent with the notion that one-boxing is the correct answer in the original Newcomb paradox).

To see the difference between these two scenarios, ask the following question: "what policy should I precommit to before the whole story unravels?" In Newcomb, you should clearly precommit to one-boxing: it causes Omega to put lots of money in the first box. Here, precommitting to push the button is BAD: it doesn't influence FOOM vs. DOOM, only influences in which scenario Omega hands you the button + whether you get tortured.

It does not seem to me like he is referring to the "finished program" reference class at all. There is a class of l-zombies, and finished programs that are never run are in this class, but so are unfinished programs. The finished programs are just members of the l-zombies reference class that are particularly easy to point to.

Sorry about that; I've had limited time to spend on this, and have mostly come down on the side of trying to get more of my previous thinking out there rather than replying to comments. (It's a tradeoff where neither of the options is good, but I'll try to at least improve my number of replies.) I've replied there. (Actually, now that I spent some time writing that reply, I realize that I should probably just have pointed to Coscott's existing reply in this thread.)

Trigger warning: In a thought experiment in this post, I used a hypothetical torture scenario without thinking, even though it wasn't necessary to make my point. Apologies, and thanks to an anonymous user for pointing this out. I'll try to be more careful in the future.

Should you pay up in the counterfactual mugging?

I've always found the argument about self-modifying agents compelling: If you expected to face a counterfactual mugging tomorrow, you would want to choose to rewrite yourself today so that you'd pay up. Thus, a decision theory that didn't pay up wouldn't be reflectively consistent; an AI using such a theory would decide to rewrite itself to use a different theory.

But is this the only reason to pay up? This might make a difference: Imagine that Omega tells you that it threw its coin a million years ago, and would have turned the sky green if it had landed the other way. Back in 2010, I wrote a post arguing that in this sort of situation, since you've always seen the sky being blue, and every other human being has also always seen the sky being blue, everyone has always had enough information to conclude that there's no benefit from paying up in this particular counterfactual mugging, and so there hasn't ever been any incentive to self-modify into an agent that would pay up ... and so you shouldn't.

I've since changed my mind, and I've recently talked about part of the reason for this, when I introduced the concept of an l-zombie, or logical philosophical zombie, a mathematically possible conscious experience that isn't physically instantiated and therefore isn't actually consciously experienced. (Obligatory disclaimer: I'm not claiming that the idea that "some mathematically possible experiences are l-zombies" is likely to be true, but I think it's a useful concept for thinking about anthropics, and I don't think we should rule out l-zombies given our present state of knowledge. More in the l-zombies post and in this post about measureless Tegmark IV.) Suppose that Omega's coin had come up the other way, and Omega had turned the sky green. Then you and I would be l-zombies. But if Omega was able to make a confident guess about the decision we'd make if confronted with the counterfactual mugging (without simulating us, so that we continue to be l-zombies), then our decisions would still influence what happens in the actual physical world. Thus, if l-zombies say "I have conscious experiences, therefore I physically exist", and update on this fact, and if the decisions they make based on this influence what happens in the real world, a lot of utility may potentially be lost. Of course, you and I aren't l-zombies, but the mathematically possible versions of us who have grown up under a green sky are, and they reason the same way as you and me—it's not possible to have only the actual conscious observers reason that way. Thus, you should pay up even in the blue-sky mugging.

But that's only part of the reason I changed my mind. The other part is that while in the counterfactual mugging, the answer you get if you try to use Bayesian updating at least looks kinda sensible, there are other thought experiments in which doing so in the straightforward way makes you obviously bat-shit crazy. That's what I'd like to talk about today.

*

The kind of situation I have in mind involves being able to influence whether you exist, or more precisely, influence whether the version of you making the decision exists as a conscious observer (or whether it's an l-zombie).

Suppose that you wake up and Omega explains to you that it kidnapped you and some of your friends back in 2014, and put you into suspension; it's now the year 2100. It then hands you a little box with a red button, and tells you that if you press that button, Omega will slowly torture you and your friends to death; otherwise, you'll be able to live out a more or less normal and happy life (or to commit painless suicide, if you prefer). Furthermore, it explains that one of two things has happened: Either (1) humanity has undergone a positive intelligence explosion, and Omega has predicted that you will press the button; or (2) humanity has wiped itself out, and Omega has predicted that you will not press the button. In any other scenario, Omega would still have woken you up at the same time, but wouldn't have given you the button. Finally, if humanity has wiped itself out, it won't let you try to "reboot" it; in this case, you and your friends will be the last humans.

There's a correct answer to what to do in this situation, and it isn't to decide that Omega's just given you anthropic superpowers to save the world. But that's what you get if you try to update in the most naive way: If you press the button, then (2) becomes extremely unlikely, since Omega is really really good at predicting. Thus, the true world is almost certainly (1); you'll get tortured, but humanity survives. For great utility! On the other hand, if you decide to not press the button, then by the same reasoning, the true world is almost certainly (2), and humanity has wiped itself out. Surely you're not selfish enough to prefer that?

The correct answer, clearly, is that your decision whether to press the button doesn't influence whether humanity survives, it only influences whether you get tortured to death. (Plus, of course, whether Omega hands you the button in the first place!) You don't want to get tortured, so you don't press the button. Updateless reasoning gets this right.

*

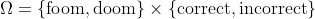

Let me spell out the rules of the naive Bayesian decision theory ("NBDT") I used there, in analogy with Simple Updateless Decision Theory (SUDT). First, let's set up our problem in the SUDT framework. To simplify things, we'll pretend that FOOM and DOOM are the only possible things that can happen to humanity. In addition, we'll assume that there's a small probability $\varepsilon$ that Omega makes a mistake when it tries to predict what you will do if given the button. Thus, the relevant possible worlds are $\Omega = \{\mathrm{foom},\mathrm{doom}\}\times\{\mathrm{correct},\mathrm{incorrect}\}$. The precise probabilities you assign to these don't matter very much; I'll pretend that FOOM and DOOM are equiprobable, $\mathbb{P}((x,\mathrm{incorrect})) = \varepsilon/2$ and $\mathbb{P}((x,\mathrm{correct})) = (1-\varepsilon)/2$.

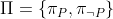

There's only one situation in which you need to make a decision, $i = *$; I won't try to define NBDT when there is more than one situation. Your possible actions in this situation are to press or to not press the button, $A(*) = \{P,\neg P\}$, so the only possible policies are $\pi_P$, which presses the button ($\pi_P(*) = P$), and $\pi_{\neg P}$, which doesn't ($\pi_{\neg P}(*) = \neg P$); $\Pi = \{\pi_P,\pi_{\neg P}\}$.

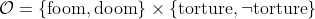

There are four possible outcomes, specifying (a) whether humanity survives and (b) whether you get tortured: $\{\mathrm{foom},\mathrm{doom}\}\times\{\mathrm{torture},\neg\mathrm{torture}\}$. Omega only hands you the button if FOOM and it predicts you'll press it, or DOOM and it predicts you won't. Thus, the only cases in which you'll get tortured are $o((\mathrm{foom},\mathrm{correct}),\pi_P) = (\mathrm{foom},\mathrm{torture})$ and $o((\mathrm{doom},\mathrm{incorrect}),\pi_P) = (\mathrm{doom},\mathrm{torture})$. For any other $x\in\{\mathrm{foom},\mathrm{doom}\}$, $y\in\{\mathrm{correct},\mathrm{incorrect}\}$, and $\pi\in\Pi$, we have $o((x,y),\pi) = (x,\neg\mathrm{torture})$.

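Finally, let's define our utility function by $u((\mathrm{foom},\neg\mathrm{torture})) = L$, $u((\mathrm{foom},\mathrm{torture})) = L-1$, $u((\mathrm{doom},\neg\mathrm{torture})) = -L$, and $u((\mathrm{doom},\mathrm{torture})) = -L-1$, where $L$ is a very large number.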

This suffices to set up an SUDT decision problem. There are only two possible worlds where $u(o(\omega,\pi_P))$ differs from $u(o(\omega,\pi_{\neg P}))$, namely $(\mathrm{foom},\mathrm{correct})$ and $(\mathrm{doom},\mathrm{incorrect})$, where $\pi_P$ results in torture and $\pi_{\neg P}$ doesn't. In each of these cases, the utility of $\pi_P$ is lower (by one) than that of $\pi_{\neg P}$. Hence, $\mathbb{E}[u(o(\boldsymbol{\omega},\pi_P))] < \mathbb{E}[u(o(\boldsymbol{\omega},\pi_{\neg P}))]$, implying that SUDT says you should choose $\pi_{\neg P}$.
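As a rough sanity check of the SUDT setup above, here is a minimal Python sketch. The concrete values `EPS = 0.01` and `L = 1000.0`, and all function names (`prior`, `handed_button`, `outcome`, `utility`, `sudt_value`, `sudt_choice`), are illustrative assumptions rather than anything fixed by the post.

```python
from itertools import product

EPS = 0.01   # assumed chance that Omega mispredicts (illustrative)
L = 1000.0   # assumed "very large" stake (illustrative)

# Possible worlds: humanity's fate x whether Omega's prediction is right.
WORLDS = list(product(["foom", "doom"], ["correct", "incorrect"]))
POLICIES = ["press", "no_press"]

def prior(world):
    """FOOM and DOOM are equiprobable; Omega errs with probability EPS."""
    _, accuracy = world
    return EPS / 2 if accuracy == "incorrect" else (1 - EPS) / 2

def handed_button(world, policy):
    """Button is handed over iff (FOOM and predicted press) or (DOOM and predicted no press)."""
    fate, accuracy = world
    predicted_press = (policy == "press") == (accuracy == "correct")
    return predicted_press if fate == "foom" else not predicted_press

def outcome(world, policy):
    """Return (fate, tortured); torture requires holding the button and pressing it."""
    fate, _ = world
    tortured = handed_button(world, policy) and policy == "press"
    return fate, tortured

def utility(out):
    fate, tortured = out
    return (L if fate == "foom" else -L) - (1.0 if tortured else 0.0)

def sudt_value(policy):
    """Prior expected utility of a policy, with no updating."""
    return sum(prior(w) * utility(outcome(w, policy)) for w in WORLDS)

def sudt_choice():
    return max(POLICIES, key=sudt_value)
```

Under these assumed numbers, `sudt_choice()` picks `"no_press"`, and the gap between the two policies is about 0.5: the total prior probability of the two worlds in which pressing leads to torture, times the utility loss of one.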

*

For NBDT, we need to know how to update, so we need one more ingredient: a function specifying in which worlds you exist as a conscious observer. In anticipation of future discussions, I'll write this as a function $\mu(i;\omega,\pi)$, which gives the "measure" ("amount of magical reality fluid") of the conscious observation $i$ if policy $\pi$ is executed in the possible world $\omega$. In our case, $i = *$ and $\mu(*;\omega,\pi)\in\{0,1\}$, indicating non-existence and existence, respectively. We can interpret $\mu(i;\omega,\pi)$ as the conditional probability of making observation $i$, given that the true world is $\omega$, if plan $\pi$ is executed. In our case, $\mu(*;(\mathrm{foom},\mathrm{correct}),\pi_P) = \mu(*;(\mathrm{foom},\mathrm{incorrect}),\pi_{\neg P}) = \mu(*;(\mathrm{doom},\mathrm{correct}),\pi_{\neg P}) = \mu(*;(\mathrm{doom},\mathrm{incorrect}),\pi_P) = 1$, and $\mu(*;\omega,\pi) = 0$ in all other cases.

Now, we can use Bayes' theorem to calculate the posterior probability of a possible world, given information $i$ and policy $\pi$: $\mathbb{P}(\omega\mid i;\pi) = \mathbb{P}(\omega)\cdot\mu(i;\omega,\pi) / \sum_{\omega'\in\Omega} \mathbb{P}(\omega')\cdot\mu(i;\omega',\pi)$. NBDT tells us to choose the policy $\pi$ that maximizes the posterior expected utility, $\mathbb{E}[u(o(\boldsymbol{\omega},\pi))\mid i;\pi]$.

In our case, we have $\mathbb{P}((\mathrm{foom},\mathrm{correct}) \mid *;\pi_P) = \mathbb{P}((\mathrm{doom},\mathrm{correct}) \mid *;\pi_{\neg P}) = 1-\varepsilon$ and $\mathbb{P}((\mathrm{doom},\mathrm{incorrect}) \mid *;\pi_P) = \mathbb{P}((\mathrm{foom},\mathrm{incorrect}) \mid *;\pi_{\neg P}) = \varepsilon$. Thus, if we press the button, our expected utility is dominated by the near-certainty of humanity surviving, whereas if we don't, it's dominated by humanity's near-certain doom, and NBDT says we should press.
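The NBDT calculation can be sketched the same way. This is a minimal illustration under assumed values (`EPS = 0.01`, `L = 1000.0`), with hypothetical function names; `mu` encodes the existence function as 0 or 1, and `posterior` applies Bayes' theorem with the policy-dependent likelihood.

```python
from itertools import product

EPS, L = 0.01, 1000.0  # assumed illustrative values, not from the post
WORLDS = list(product(["foom", "doom"], ["correct", "incorrect"]))
POLICIES = ["press", "no_press"]

def prior(world):
    return EPS / 2 if world[1] == "incorrect" else (1 - EPS) / 2

def mu(world, policy):
    """1 if the button-holding observer exists in this world under this policy, else 0."""
    fate, accuracy = world
    predicted_press = (policy == "press") == (accuracy == "correct")
    # Button handed over iff (FOOM and predicted press) or (DOOM and predicted no press).
    return int(predicted_press == (fate == "foom"))

def utility(world, policy):
    tortured = mu(world, policy) == 1 and policy == "press"
    return (L if world[0] == "foom" else -L) - (1.0 if tortured else 0.0)

def posterior(policy):
    """P(world | observer exists; policy), by Bayes' theorem."""
    z = sum(prior(w) * mu(w, policy) for w in WORLDS)
    return {w: prior(w) * mu(w, policy) / z for w in WORLDS}

def nbdt_value(policy):
    """Posterior expected utility of a policy."""
    post = posterior(policy)
    return sum(post[w] * utility(w, policy) for w in WORLDS)

def nbdt_choice():
    return max(POLICIES, key=nbdt_value)
```

With these numbers, conditioning on pressing puts posterior weight `1 - EPS` on FOOM, conditioning on not pressing puts `1 - EPS` on DOOM, and `nbdt_choice()` returns `"press"`, which is exactly the "anthropic superpowers" conclusion criticized in the text.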

*

But maybe it's not updating that's bad, but NBDT's way of implementing it? After all, we get the clearly wacky results only if our decisions can influence whether we exist, and perhaps the way that NBDT extends the usual formula to this case happens to be the wrong way to extend it.

One thing we could try is to mark a possible world $\omega$ as impossible only if $\mu(*;\omega,\pi) = 0$ for all policies $\pi$ (rather than: for the particular policy $\pi$ whose expected utility we are computing). But this seems very ad hoc to me. (For example, this could depend on which set of possible actions $A(*)$ we consider, which seems odd.)

There is a much more principled possibility, which I'll call pseudo-Bayesian decision theory, or PBDT. PBDT can be seen as re-interpreting updating as saying that you're indifferent about what happens in possible worlds in which you don't exist as a conscious observer, rather than ruling out those worlds as impossible given your evidence. (A version of this idea was recently brought up in a comment by drnickbone, though I'd thought of this idea myself during my journey towards my current position on updating, and I imagine it has also appeared elsewhere, though I don't remember any specific instances.) I have more than one objection to PBDT, but the simplest one to argue is that it doesn't solve the problem: it still believes that it has anthropic superpowers in the problem above.

Formally, PBDT says that we should choose the policy $\pi$ that maximizes $\mathbb{E}[u(o(\boldsymbol{\omega},\pi))\cdot\mu(*;\boldsymbol{\omega},\pi)]$ (where the expectation is with respect to the prior, not the updated, probabilities). In other words, we set the utility of any outcome in which we don't exist as a conscious observer to zero; we can see PBDT as SUDT with modified outcome and utility functions.

When our existence is independent of our decisions (that is, if $\mu(*;\omega,\pi)$ doesn't depend on $\pi$), it turns out that PBDT and NBDT are equivalent, i.e., PBDT implements Bayesian updating. That's because in that case, $\mathbb{E}[u(o(\boldsymbol{\omega},\pi))\mid *;\pi] = \sum_{\omega\in\Omega} u(o(\omega,\pi))\cdot\mathbb{P}(\omega\mid *;\pi) = \frac{\sum_{\omega\in\Omega} u(o(\omega,\pi))\cdot\mathbb{P}(\omega)\cdot \mu(*;\omega,\pi)}{\sum_{\omega'\in\Omega} \mathbb{P}(\omega')\cdot\mu(*;\omega',\pi)}$. If $\mu(*;\omega,\pi)$ doesn't depend on $\pi$, then the whole denominator doesn't depend on $\pi$, so the fraction is maximized if and only if the numerator is. But the numerator is $\sum_{\omega\in\Omega} u(o(\omega,\pi))\cdot\mathbb{P}(\omega)\cdot \mu(*;\omega,\pi) = \mathbb{E}[u(o(\boldsymbol{\omega},\pi))\cdot\mu(*;\boldsymbol{\omega},\pi)]$, exactly the quantity that PBDT says should be maximized.

Unfortunately, although in our problem above $\mu(*;\omega,\pi)$ does depend on $\pi$, the denominator as a whole still doesn't: For both $\pi_P$ and $\pi_{\neg P}$, there is exactly one possible world with probability $(1-\varepsilon)/2$ and one possible world with probability $\varepsilon/2$ in which $*$ is a conscious observer, so we have $\sum_{\omega'\in\Omega} \mathbb{P}(\omega')\cdot\mu(*;\omega',\pi) = 1/2$ for both $\pi$. Thus, PBDT gives the same answer as NBDT, by the same mathematical argument as in the case where we can't influence our own existence. If you think of PBDT as SUDT with the utility function $u(o(\omega,\pi))\cdot\mu(*;\omega,\pi)$, then intuitively, PBDT can be thought of as reasoning, "Sure, I can't influence whether humanity is wiped out; but I can influence whether I'm an l-zombie or a conscious observer; and who cares what happens to humanity if I'm not? Best to press the button, since getting tortured in a world where there's been a positive intelligence explosion is much better than life without torture if humanity has been wiped out."
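To see numerically that the normalizer is the same for both policies, and that PBDT therefore agrees with NBDT here, the following minimal sketch may help. The values `EPS = 0.01` and `L = 1000.0` and all function names are assumptions made for illustration only.

```python
from itertools import product

EPS, L = 0.01, 1000.0  # assumed illustrative values, not from the post
WORLDS = list(product(["foom", "doom"], ["correct", "incorrect"]))
POLICIES = ["press", "no_press"]

def prior(world):
    return EPS / 2 if world[1] == "incorrect" else (1 - EPS) / 2

def mu(world, policy):
    """1 if the button-holding observer exists in this world under this policy, else 0."""
    fate, accuracy = world
    predicted_press = (policy == "press") == (accuracy == "correct")
    return int(predicted_press == (fate == "foom"))

def utility(world, policy):
    tortured = mu(world, policy) == 1 and policy == "press"
    return (L if world[0] == "foom" else -L) - (1.0 if tortured else 0.0)

def normalizer(policy):
    """Denominator of the Bayesian update: prior mass of worlds where the observer exists."""
    return sum(prior(w) * mu(w, policy) for w in WORLDS)

def pbdt_value(policy):
    """Prior expectation of utility times existence measure."""
    return sum(prior(w) * utility(w, policy) * mu(w, policy) for w in WORLDS)

def pbdt_choice():
    return max(POLICIES, key=pbdt_value)
```

Here `normalizer("press")` and `normalizer("no_press")` both come out to 1/2, so dividing by the normalizer cannot change the ranking of policies, and `pbdt_choice()` returns `"press"` just as NBDT does.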

I think that's a pretty compelling argument against PBDT, but even leaving it aside, I don't like PBDT at all. I see two possible justifications for PBDT: You can either say that $u(o(\omega,\pi))\cdot\mu(*;\omega,\pi)$ is your real utility function (you really don't care about what happens in worlds where the version of you making the decision doesn't exist as a conscious observer), or you can say that your real preferences are expressed by $u(o(\omega,\pi))$, and multiplying by $\mu(*;\omega,\pi)$ is just a mathematical trick to express a steelmanned version of Bayesian updating. If your preferences really are given by $u(o(\omega,\pi))\cdot\mu(*;\omega,\pi)$, then fine, and you should be maximizing $\mathbb{E}[u(o(\boldsymbol{\omega},\pi))\cdot\mu(*;\boldsymbol{\omega},\pi)]$ (because you should be using (S)UDT), and you should press the button. Some kind of super-selfish agent, who doesn't care a fig even about a version of itself that is exactly the same up till five seconds ago (but then wasn't handed the button) could indeed have such preferences. But I think these are wacky preferences, and you don't actually have them. (Furthermore, if you did have them, then $u(o(\omega,\pi))\cdot\mu(*;\omega,\pi)$ would be your actual utility function, and you should be writing it as just $u(o(\omega,\pi))$, where $o(\omega,\pi)$ must now give information about whether $*$ is a conscious observer.)

If multiplying by $\mu(*;\omega,\pi)$ is just a trick to implement updating, on the other hand, then I find it strange that it introduces a new concept that doesn't occur at all in classical Bayesian updating, namely the utility of a world in which $*$ is an l-zombie. We've set this to zero, which is no loss of generality because classical utility functions don't change their meaning if you add or subtract a constant, so whenever you have a utility function where all worlds in which $*$ is an l-zombie have the same utility $c$, then you can just subtract $c$ from all utilities (without changing the meaning of the utility function), and get a function where that utility is zero. But that means that the utility functions I've been plugging into PBDT above do change their meaning if you add a constant to them. You can set up a problem where the agent has to decide whether to bring itself into existence or not (Omega creates it iff it predicts that the agent will press a particular button), and in that case the agent will decide to do so iff the world has utility greater than zero, which is clearly not invariant under adding and subtracting a constant. I can't find any concept like the utility of not existing in my intuitions about Bayesian updating (though I can find such a concept in my intuitions about utility, but regarding that see the previous paragraph), so if PBDT is just a mathematical trick to implement these intuitions, where does that utility come from?
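The non-invariance can be illustrated with a toy version of the self-creation problem. This is only a sketch under simplifying assumptions: a single possible world whose utility is `world_utility`, an agent that exists iff it presses, and hypothetical function names throughout.

```python
def pbdt_value(world_utility, exists):
    """PBDT multiplies utility by the existence measure (1 if the agent exists, else 0)."""
    return world_utility * (1 if exists else 0)

def pbdt_creates_itself(world_utility, shift=0.0):
    """Does a PBDT agent press the button that brings it into existence?

    `shift` is a constant added to every utility; for a classical utility
    function, such a shift should not change any decision.
    """
    press = pbdt_value(world_utility + shift, exists=True)
    refrain = pbdt_value(world_utility + shift, exists=False)
    return press > refrain

def classical_prefers_first(u_first, u_second, shift=0.0):
    """Ordinary expected-utility comparison, which is invariant under a common shift."""
    return (u_first + shift) > (u_second + shift)
```

For example, `pbdt_creates_itself(-1.0)` is `False` while `pbdt_creates_itself(-1.0, shift=5.0)` is `True`, even though the shifted utilities describe the same classical preferences; `classical_prefers_first(2.0, 1.0, shift=s)` gives the same answer for any `s`.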

I'm not aware of a way of implementing updating in general SUDT-style problems that does better than NBDT, PBDT, and the ad-hoc idea mentioned above, so for now I've concluded that in general, trying to update is just hopeless, and we should be using (S)UDT instead. In classical decision problems, where there are no acausal influences, (S)UDT will of course behave exactly as if it did do a Bayesian update; thus, in a sense, using (S)UDT can also be seen as a reinterpretation of Bayesian updating (in this case just as updateless utility maximization in a world where all influence is causal), and that's the way I think about it nowadays.