Just thinking through simple stuff for myself, very rough, posting in the spirit of quick takes

At present, we are making progress on the Technical Alignment Problem[2] and could probably solve it within 50 years.

- Humanity is on track to build ~lethal superpowerful AI in more like 5-15 years.

- Working on technical alignment (direct or meta) only matters if we can speed up overall progress by 10x (or some lesser factor if AI capabilities progress is delayed from its current trajectory). Improvements of 2x are not likely to get us to an adequate technical solution in time.

- Working on slowing things down is only helpful if it results in delays of decades.

- Shorter delays are good insofar as they give you time to buy further delays.

- There is technical research that is useful for persuading people to slow down (and maybe also solving alignment, maybe not). This includes anything that demonstrates scary capabilities or harmful proclivities, e.g. a bunch of mech interp stuff, all the evals stuff.
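As a rough sanity check on the speedup and delay bullets above, here is a minimal sketch of the arithmetic. It assumes, as a simplification, that alignment progress scales linearly with the speedup factor, and it uses only the rough figures already stated (~50 years at the current pace, 5-15 years until lethal AI). The function names are hypothetical, chosen for illustration.

```python
def required_speedup(years_at_current_pace: float, years_available: float) -> float:
    """Speedup factor needed to finish within `years_available` (linear-scaling assumption)."""
    if years_available <= 0:
        raise ValueError("years_available must be positive")
    return years_at_current_pace / years_available


def required_delay(years_at_current_pace: float, speedup: float, years_available: float) -> float:
    """Extra years of capabilities delay needed if research only gets `speedup` times faster."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    years_needed = years_at_current_pace / speedup
    return max(0.0, years_needed - years_available)


ALIGNMENT_YEARS = 50  # rough figure from the text above

for deadline in (5, 10, 15):  # the 5-15 year range from the text above
    speedup = required_speedup(ALIGNMENT_YEARS, deadline)
    delay = required_delay(ALIGNMENT_YEARS, 2, deadline)
    print(f"{deadline} years left: need ~{speedup:.1f}x speedup, "
          f"or ~{delay:.0f} more years of delay at a 2x speedup")
```

Under these assumptions, a 5-year deadline needs a 10x speedup, and a 2x speedup still leaves a gap of 10-20 years to be made up by delay, which is roughly where the "10x" and "delays of decades" figures come from.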

AI is in fact super powerful and people who perceive there being value to be had aren’t entirely wrong[3]. This results in a very strong motivation to pursue AI and resist efforts to stop it.

"Cyborgism or AI-assisted research that gets up to 5x speedups but applies differentially to technical alignment research"

How do you make meaningful progress and ensure it does not speed up capabilities?

It seems unlikely that a technique exists that is exclusively useful for alignment research and can't be tweaked to help OpenMind develop better optimization algorithms etc.

I basically agree with this:

People who want to speed up AI will use falsehoods and bad logic to muddy the waters, and many people won’t be able to see through it

In other words, there’s going to be an epistemic war and the other side is going to fight dirty, I think even a lot of clear evidence will have a hard time against people’s motivations/incentives and bad arguments.

But I'd be more pessimistic than that, in that I honestly think pretty much every side will fight quite dirty in order to gain power over AI, and we already have seen examples of straight up lies and bad faith.

From the anti-regulation side, I remember Martin Casado straight up lying about mechanistic interpretability rendering AI models completely understood and white box, and I'm very sure that mechanistic interpretability cannot do what Martin Casado claimed.

I also remember a16z lying a lot about SB1047.

From the pro-regulation side, I remember Zvi incorrectly claiming that Sakana AI did instrumental convergence/recursive self-improvement, and as it turned out, the reality was far more mundane than that:

https://www.lesswrong.com/posts/ppafWk6YCeXYr4XpH/danger-ai-scientist-danger#AtXXgsws5DuP6Jxzx

Zvi the...

Though I am working on technical alignment (and perhaps because I know it is hard) I think the most promising route may be to increase human and institutional rationality and coordination ability. This may be more tractable than "expected" with modern theory and tools.

Also, I don't think we are on track to solve technical alignment in 50 years without intelligence augmentation in some form, at least not to the point where we could get it right on a "first critical try" if such a thing occurs. I am not even sure there is a simple and rigorous technical solution that looks like something I actually want, though there is probably a decent engineering solution out there somewhere.

There is now a button to say "I didn't like this recommendation, show fewer like it"

Clicking it will:

- update the recommendation engine that you strongly don't like this recommendation

- store analytics data for the LessWrong team to know that you didn't like this post (we won't look at your displayname, just random id). This will hopefully let us understand trends in bad recommendations

- hide the post item from posts lists like Enriched/Latest Posts/Recommended. It will not hide it from user profile pages, Sequences, etc

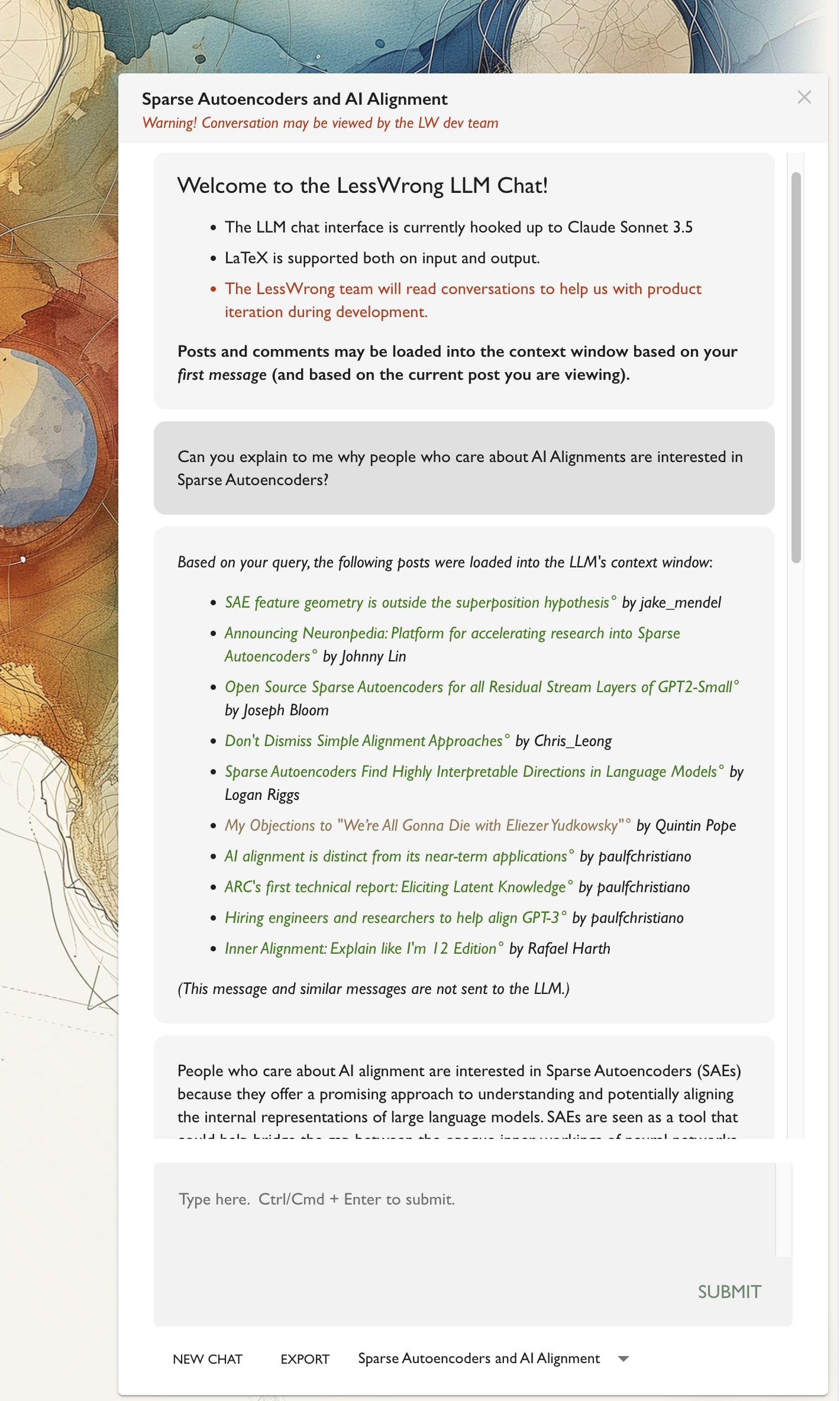

Seeking Beta Users for LessWrong-Integrated LLM Chat

Comment here if you'd like access. (Bonus points for describing ways you'd like to use it.)

A couple of months ago, a few of the LW team set out to see how LLMs might be useful in the context of LW. It feels like they should be at some point before the end, maybe that point is now. My own attempts to get Claude to be helpful for writing tasks weren't particularly succeeding, but LLMs are pretty good at reading a lot of things quickly, and also can be good at explaining technical topics.

So I figured just making it easy to load a lot of relevant LessWrong context into an LLM might unlock several worthwhile use-cases. To that end, Robert and I have integrated a Claude chat window into LW, with the key feature that it will automatically pull in LessWrong posts and comments relevant to what you're asking about.

I'm currently seeking beta users.

Since using the Claude API isn't free and we haven't figured out a payment model, we're not rolling it out broadly. But we are happy to turn it on for select users who want to try it out.

Comment here if you'd like access. (Bonus points for describing ways you'd like to use it.)

Selected Aphorisms from Francis Bacon's Novum Organum

I'm currently working to format Francis Bacon's Novum Organum as a LessWrong sequence. It's a moderate-sized project as I have to work through the entire work myself, and write an introduction which does Novum Organum justice and explains the novel move of taking an existing work and posting it on LessWrong (short answer: NovOrg is some serious hardcore rationality and contains central tenets of the LW foundational philosophy notwithstanding being published back in 1620, not to mention that Bacon and his works are credited with launching the modern Scientific Revolution).

While I'm still working on this, I want to go ahead and share some of my favorite aphorisms from it so far:

3. . . . The only way to command reality is to obey it . . .

9. Nearly all the things that go wrong in the sciences have a single cause and root, namely: while wrongly admiring and praising the powers of the human mind, we don’t look for true helps for it.

Bacon sees the unaided human mind as entirely inadequate for scientific progress. He sees the way forward for scientific progress as constructing tools/infrastructure/methodology t...

Please note that even things written in 1620 can be under copyright. Not the original thing, but the translation, if it is recent. Generally, every time a book is modified, the clock starts ticking anew... for the modified version. If you use a sufficiently old translation, or translate a sufficiently old text yourself, then it's okay (even if a newer translation exists, if you didn't use it).

The “Deferred and Temporary Stopping” Paradigm

Quickly written. Probably missed where people are already saying the same thing.

I actually feel like there’s a lot of policy and research effort aimed at slowing down the development of powerful AI–basically all the evals and responsible scaling policy stuff.

A story for why this is the AI safety paradigm we’ve ended up in is that it’s palatable. It’s palatable because it doesn’t actually require that you stop. Certainly, it doesn’t right now. To the extent companies (or governments) are on board, it’s because those companies are at best promising “I’ll stop later when it’s justified”. They’re probably betting that they’ll be able to keep arguing it’s not yet justified. At the least, it doesn’t require a change of course now and they’ll go along with it to placate you.

Even if people anticipate they will trigger evals and maybe have to delay or stop releases, I would bet they’re not imagining they have to delay or stop for all that long (if they’re even thinking it through that much). Just long enough to patch or fix the issue, then get back to training the next iteration. I'm curious how many people imagine that once certain evaluatio...

Why I'm excited by the 2018 Review

I generally fear that perhaps some people see LessWrong as a place where people just read and discuss "interesting stuff", not much different from a Sub-Reddit on anime or something. You show up, see what's interesting that week, chat with your friends. LessWrong's content might be considered "more healthy" relative to most internet content, and many people say they browse LessWrong to procrastinate but feel less guilty about it than about other browsing, but the use-case still seems a bit about entertainment.

None of the above is really a bad thing, but in my mind, LessWrong is about much more than a place for people to hang out and find entertainment in sharing joint interests. In my mind, LessWrong is a place where the community makes collective progress on valuable problems. It is an ongoing discussion where we all try to improve our understanding of the world and ourselves. It's not just play or entertainment– it's about getting somewhere. It's as much like an academic journal where people publish and discuss important findings as it is like an interest-based sub-Reddit.

And all this makes me really...

Communal Buckets

A bucket error is when someone erroneously lumps two propositions together, e.g. "I made a spelling error" automatically entails "I can't be a great writer"; they're in one bucket when really they're separate variables.

In the context of criticism, it's often mentioned that people need to learn to not make the bucket error of "I was wrong" or "I was doing a bad thing" -> "I'm a bad person". That is, you being a good person is compatible with making mistakes, being wrong, and causing harm, since even good people make mistakes. This seems like a right and true and good thing to realize.

But I can see a way in which being wrong/making mistakes (and being called out for this) is upsetting even if you personally aren't making a bucket error. The issue is that you might fear that other people have the two variables collapsed into one. Even if you might realize that making a mistake doesn't inherently make you a bad person, you're afraid that other people are now going to think you are a bad person because they are making that bucket error.

The issue isn't your own buckets, it's that you have a model of the shared "communal buck...

Perhaps the most disliked aspect of the New LessWrong feed was the "modals" upon clicking links instead of full page navigations. We did that because full navigation would lose your place in the feed upon returning.

Fortunately, at long last and after much anticipation, we have upgraded our tech stack so that modals are no longer needed. Clicking links in the Feed will take you to the direct primary main full page for posts, comment links, and user profiles.

Tagging users who I recall waiting on this. @dirk @Rana Dexsin

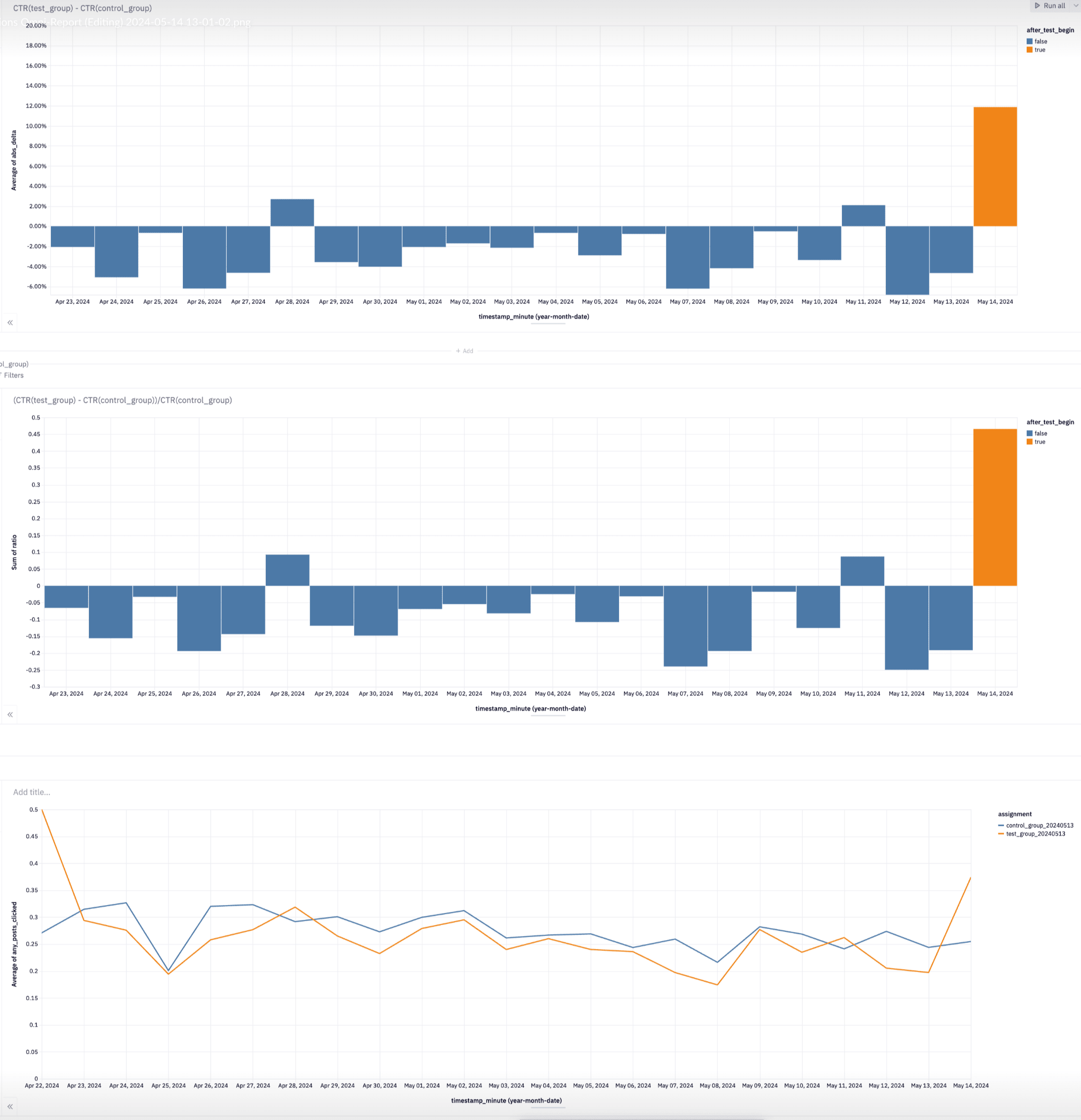

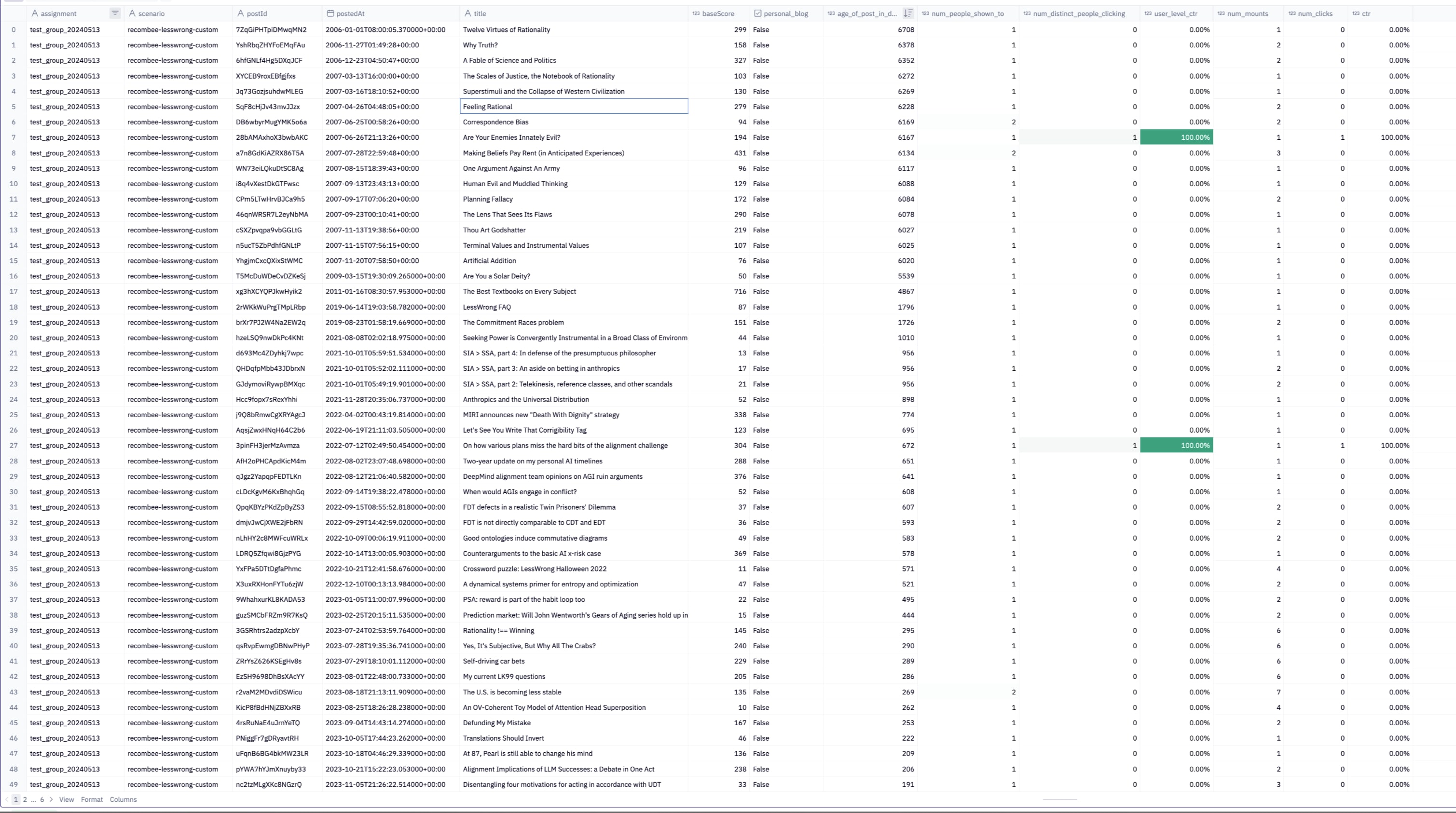

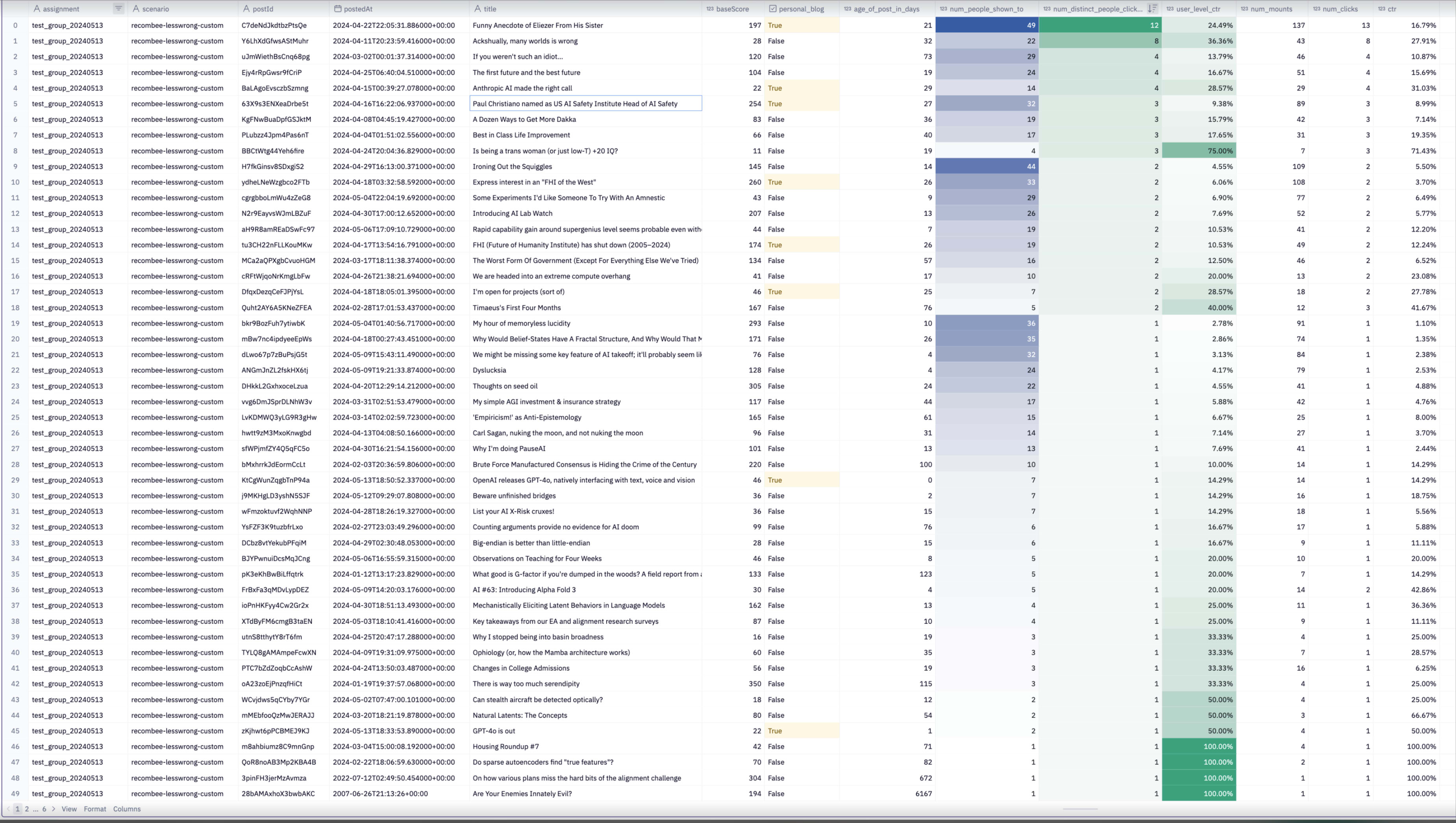

As noted in an update on LW Frontpage Experiments! (aka "Take the wheel, Shoggoth!"), yesterday we started an AB test on some users automatically being switched over to the Enriched [with recommendations] Latest Posts feed.

The first ~18 hours' worth of data does seem to show a real uptick in clickthrough-rate, though some of that could be novelty.

(examining members of the test (n=921) and control groups (n~=3000) for the last month, the test group seemed to have a slightly (~7%) lower clickthrough-rate baseline, I haven't investigated this)

However, the specific posts that people are clicking on don't feel on the whole like the ones I was most hoping the recommendations algorithm would suggest (and get clicked on). It feels kinda like there's a selection towards clickbaity or must-read news (not completely, just not as much as I like).

If I look over items recommended by Shoggoth that are older (50% are from last month, 50% older than that), they feel better but seem to get fewer clicks.

A to-do item is to look at voting behavior relative to clicking behavior. Having clicked on these items, do people upvote them as much as others?
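One hedged way to check whether a test-vs-control clickthrough uptick like the one above is more than noise is a two-proportion z-test. The sketch below is an illustration, not the analysis actually run: it assumes each user counts as one independent clicked/didn't-click trial, it ignores the ~7% baseline difference between groups, and the function name is hypothetical. No click counts are given in the text, so none are filled in here.

```python
import math


def two_proportion_z_test(clicks_a: int, n_a: int, clicks_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in click rates between groups A and B.

    Assumes each user is one independent Bernoulli trial (a simplifying assumption).
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("group sizes must be positive")
    rate_a = clicks_a / n_a
    rate_b = clicks_b / n_b
    # Pooled click rate under the null hypothesis that both groups share one rate.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("pooled rate is 0 or 1; the test is undefined")
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

With the group sizes mentioned above, a call would look like `two_proportion_z_test(test_clickers, 921, control_clickers, 3000)`, where both clicker counts would need to come from the real analytics. Because the test group started from a lower baseline, comparing each group's change against its own prior month is probably a fairer comparison than the raw rates.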

I'm also wanting to experiment with just a...

It feels like the society I interact with dislikes expression of negative emotions, at least in the sense that expressing negative emotions is kind of a big deal - if someone expresses a negative feeling, it needs to be addressed (fixed, ideally). The discomfort with negative emotions and consequent response acts to a fair degree to suppress their expression. Why mention something you're a little bit sad about if people are going to make a big deal out of it and try to make you feel better, etc., etc.?

Related to the above (with an ambiguously directed causal arrow) is that we lack reliable ways to communicate about negative emotions with something like nuance or precision. If I imagine starting a conversation with a friend by saying "I feel happy", I expect to be given space to clarify the cause, nature, and extent of my happiness. Having clarified these, my friend will react proportionally. Yet when I imagine saying "I feel sad", I expect this to be perceived as "things are bad, you need sympathy, support, etc." and the whole stage of "clarify cause, nature, extent" is skipped, instead proceeding to a fairly large reaction.

And I wi...

Just a thought: there's the common advice that fighting all out with the utmost desperation makes sense for very brief periods, a few weeks or months, but doing so for longer leads to burnout. So you get sayings like "it's a marathon, not a sprint." But I wonder if length of the "fight"/"war" isn't the only variable in sustainable effort. Other key ones might be the degree of ongoing feedback and certainty about the cause.

Though I expect a multiyear war which is an existential threat to your home and family to be extremely taxing, I imagine soldiers experiencing less burnout than people investing similar effort for a far-mode cause, let's say global warming, which might be happening but is slow, and where your contributions to preventing it are unclear. (Actual soldiers may correct me on this, and I can believe war is very traumatizing, though I will still ask how much they believed in the war they were fighting.)

(Perhaps the relevant variables here are something like Hanson's Near vs Far mode thinking, where hard effort for far-mode thinking more readily leads to burnout than near-mode thinking even when sustained for long periods.)

Then...

A random value walks into a bar. A statistician swivels around in her chair, one tall boot unlaced and an almost full Manhattan sitting a short distance from her right elbow.

"I've been expecting you," she says.

"Have you been waiting long?" responds the value.

"Only for a moment."

"Then you're very on point."

"I've met enough of your kind that there's little risk of me wasting time."

"I assure you I'm quite independent."

"Doesn't mean you're not drawn from the same mold."

"Well, what can I do for you?"

"I was hoping to gain your confidence..."

Some Thoughts on Communal Discourse Norms

I started writing this in response to a thread about "safety", but it got long enough to warrant breaking out into its own thing.

I think it's important for people to not be attacked physically, mentally, or socially. I have a terminal preference over this, but also think it's instrumental towards truth-seeking activities too. In other words, I want people to actually be safe.

- I think that when people feel unsafe and have defensive reactions, this makes their ability to think and converse much worse. It can push discussion from truth-seeking exchange to social war.

- Here I think mr-hire has a point: if you don't address people's "needs" overtly, they'll start trying to get them covertly, e.g. trying to win arguments for the sake of protecting their reputation rather than trying to get to the truth. Doing things like writing hasty scathing replies rather than slow, carefully considered ones (*raises hand*), and worse, feeling righteous anger while doing so. Having thoughts like "the only reason my interlocutor could think X is because they are obtuse due to their biases" rather than "maybe th

There's an age old tension between ~"contentment" and ~"striving" with no universally accepted compelling resolution, even if many people feel they have figured it out. Related:

In my own thinking, I've been trying to ground things out in a raw consequentialism that one's cognition (including emotions) is just supposed to take you towards more value (boring, but reality is allowed to be)[1].

I fear that a lot of what people do is ~"wireheading". The problem with wireheading is it's myopic. You feel good now (small amount of value) at the expense of greater value later. Historically, this has made me instinctively wary of various attempts to experience more contentment such as gratitude journaling. Do such things curb the pursuit of value in exchange for feeling better (less unpleasant discontent) in the moment?

Clarity might come from further reduction of what "value" is. The primary notion of value I operate with is preference satisfaction: the world is how you want it to be. But also a lot of value seems to flow through experience (and the preferred state of the world is one where certain experiences happen).

A model whereby gratitude journaling (or general "attend to what is good" mot...

Hypothesis that becomes very salient from managing the LW FB page: "likes and hearts" are a measure of how much people already liked your message/conclusion*.

*And also like how well written/how alluring a title/how actually insightful/how easy to understand, etc. But it also seems that the most popular posts are those which are within the Overton window, have less inferential distance, and a likable message. That's not to say they can't have tremendous value, but it does make me think that the most popular posts are not going to be the same as the most valuable posts + optimizing for likes is not going to be the same as optimizing for value.

**And maybe this seems very obvious to many already, but it just feels so much more concrete when I'm putting three posts out there a week (all of which I think are great) and seeing which get the strongest response.

***This effect may be strongest at the tails.

****I think this effect would affect Gordon's proposed NPS-rating too.

*****I have less of this feeling on LW proper, but definitely far from zero.

Narrative Tension as a Cause of Depression

I only wanted to budget a couple of hours for writing today. Might develop further and polish at a later time.

Related to and an expansion of Identities are [Subconscious] Strategies

Epistemic status: This is non-experimental psychology, my own musings. Presented here is a model derived from thinking about human minds a lot over the years, knowing many people who’ve experienced depression, and my own depression-like states. Treat it as a hypothesis, see if it matches your own data and generates helpful suggestions.

Clarifying “narrative”

In the context of psychology, I use the term narrative to describe the simple models of the world that people hold to varying degrees of implicit vs explicit awareness. They are simple in the sense of being short, being built of concepts which are basic to humans (e.g. people, relationships, roles, but not physics and statistics), and containing unsophisticated blackbox-y causal relationships like “if X then Y, if not X then not Y.”

Two main narratives

I posit that people carry two primary kinds of narratives in their minds:

- Who I am (the role they are playing), and

- How my life will go (the progress of their life)

T...

Over the years, I've experienced a couple of very dramatic yet rather sudden and relatively "easy" shifts around major pain points: strong aversions, strong fears, inner conflicts, or painful yet deeply ingrained beliefs. My post Identities are [Subconscious] Strategies contains examples. It's not surprising to me that these are possible, but my S1 says they're supposed to require a lot of effort: major existential crises, hours of introspection, self-discovery journeys, drug trips, or dozens of hours with a therapist.

Having recently undergone a really big one, I noted my surprise again. Surprise, of course, is a property of bad models. (Actually, the recent shift occurred precisely because of exactly this line of thought: I noticed I was surprised and dug in, leading to an important S1 shift. Your strength as a rationalist and all that.) Attempting to come up with a model which wasn't as surprised, this is what I've got:

The shift involved S1 models. The S1 models had been there a long time, maybe a very long time. When that happens, they begin to seem like how the world just *is*. If emotions arise from those models, and those models are so entrenched t...

The LessWrong admins are often evaluating whether users (particularly new users) are going to be productive members of the site vs are just really bad and need strong action taken.

A question we're currently disagreeing on is which pieces of evidence it's okay to look at in forming judgments. Obviously anything posted publicly. But what about:

- Drafts (admins often have good reason to look at drafts, so they're there)

- Content the user deleted

- The referring site that sent someone to LessWrong

I'm curious how people feel about moderators looking at those.

Alt...

I want to clarify the draft thing:

In general LW admins do not look at drafts, except when a user has specifically asked for help debugging something. I indeed care a lot about people feeling like they can write drafts without an admin sneaking a peek.

The exceptions under discussion are things like "a new user's first post or comment looks very confused/crackpot-ish, to the point where we might consider banning the user from the site. The user has some other drafts." (I think a central case here is when a new user shows up with a crackpot-y looking Theory of Everything. The first post that they've posted publicly looks sort of borderline crackpot-y and we're not sure what call to make. A thing we've done sometimes is do a quick skim of their other drafts to see if they're going in a direction that looks more reassuring or "yeah this person is kinda crazy and we don't want them around.")

I think the new auto-rate-limits somewhat relax the need for this (I feel a bit more confident that crackpots will get downvoted, and then automatically rate limited, instead of something the admins have to monitor and manage). I think I'd have defended the need to have this tool in the past, but it might b...

I don't think we've ever framed it that way, but the LessWrong Annual Review is also a chance to do one round of spaced repetition on those posts from yesteryear. Going through the list, I see posts I recognize and remember liking, but whose contents I'd forgotten. It's nice to be prompted to look at them again.

Reading A Self-Dialogue on The Value Proposition of Romantic Relationships, had the following thought:

There's often a "proposition" and separately "implications of the proposition". People often deny a proposition in order to avoid the implications, e.g. Bucket Errors

I wonder how much "you're perfect as you are" is an instance. People need to say or believe this to avoid some implication, but if you could just avoid the implication it would be okay to be imperfect.

I want to register a weak but nonzero prediction that Anthropic’s interpretability publication A Mathematical Framework for Transformer Circuits will turn out to lead to large capabilities gains, and that in hindsight publishing it will be regarded as a rather bad move.

Something like we’ll have capabilities-advancing papers citing it and using its framework to justify architecture improvements.

Just updated the Concepts Portal. Tags that got added are:

Edit: I thought this distinction must have been pointed out somewhere. I see it under Rule of Law vs Rule of Man

Law is Ultimate vs Judge is Ultimate

Just writing up a small idea for reference elsewhere. I think spaces can get governed differently on one pretty key dimension, and that's who/what is supposed to be driving the final decision.

Option 1: What gets enforced by courts, judges, police, etc in countries is "the law" of various kinds, e.g. the Constitution. Lawyers and judges attempt to interpret the law and apply it in given circumstances, often with re...

PSA:

Is Slack your primary coordination tool with your coworkers?

If you're like me, you send a lot of messages asking people for information or to do things, and if your coworkers are resource-limited humans like mine, they won't always follow-up on the timescale you need.

How do you ensure loops get closed without maintaining a giant list of unfinished things in your head?

I use Slack's remind-me feature extensively. Whenever I send a message that I want to follow up on if the targeted party doesn't get back to me within a certain time frame, I set a reminde...

The new LessWrong Feed is now out of beta/AB-test and into general release. If you were previously in the control arm of the A/B test, you might be surprised now to see the "Your Feed" section, but it is now here for all!

For some users, Recent Discussion provided something valuable, but I think its being a firehose of all content with no smart prioritization or filtering of what to show you, together with a ~cluttered design, made it not a great basis for a content section for the site. The New Feed is trying to improve on both the UI and algorithm front.

If y...

Errors are my own

At first blush, I find this caveat amusing.

1. If there are errors, we can infer that those providing feedback were unable to identify them.

2. If the author was fallible enough to have made errors, perhaps they are fallible enough to miss errors in input sourced from others.

What purpose does it serve? Given it's often paired with "credit goes to <list of names>", it seems like an attempt to ensure that people providing feedback/input to a post are only exposed to upside from doing so, and the author takes all the downside reputation risk if t...

Great quote from Francis Bacon (Novum Organum Book 2:8):

Don’t be afraid of large numbers or tiny fractions. In dealing with numbers it is as easy to write or think a thousand or a thousandth as to write or think one.

Converting this from a Facebook comment to LW Shortform.

A friend complains about recruiters who send repeated emails saying things like "just bumping this to the top of your inbox" when they have no right to be trying to prioritize their emails over everything else my friend might be receiving from friends, travel plans, etc. The truth is they're simply paid to spam.

Some discussion of repeated messaging behavior ensued. These are my thoughts:

I feel conflicted about repeatedly messaging people. All the following being factors in this conflict...

For my own reference.

Brief timeline of notable events for LW2:

- 2017-09-20 LW2 Open Beta launched

- (2017-10-13 There is No Fire Alarm published)

- (2017-10-21 AlphaGo Zero Significance post published)

- 2017-10-28 Inadequate Equilibria first post published

- (2017-12-30 Goodhart Taxonomy published) <- maybe part of January spike?

- 2018-03-23 Official LW2 launch and switching of www.lesswrong.com to point to the new site.

Events in parentheses are possible draws which spiked traffic at those times.

Huh, well that's something.

I'm curious, who else got this? And if yes, anyone click the link? Why/why not?

When I have a problem, I have a bias towards predominantly Googling and reading. This is easy, comfortable, do it from laptop or phone. The thing I'm less inclined to do is ask other people – not because I think they won't have good answers, just because...talking to people.

I'm learning to correct for this. The thing about other people is 1) sometimes they know more, 2) they can expose your mistaken assumptions.

The triggering example for this note is an appointment I had today with a hand and arm specialist for the unconventional RSI I've been experiencing...

Failed replications notwithstanding, I think there's something to Fixed vs Growth Mindset. In particular, Fixed Mindset leading to failure being demoralizing, since it is evidence you are a failure, rings true.