Parody/analogy aside, a real concern among those who don't want to slow down AI is that while it was true that dictatorships were unstable, they no longer are. They (and, to some extent, I) believe 'If You Want a Picture of the Future, Imagine a Boot Stamping on a Human Face – for Ever' is the likely outcome if we don't push through to super AI. That is, the AI tools already available are sufficient to keep a dictatorship in power forever once it is set up. So if we pause at this tech level, we will steadily see societies become such dictatorships and never come back.

Consider a government that insisted everyone constantly wear an ankle-bracelet tracker that recorded everything they said, plus their body language, and fed it to an LLM. There would simply be no way to express dissent, let alone organize it. Xinjiang could be there soon.

If that is the case, then a long pause on AI means the final decision on AI, if it is ever made at all, would be made by such a society, which is surely a bad outcome. We simply don't know how stable our current tech level is, and there seems to be no easy way to find out.

This seems mostly true? Very very very rarely is there a dictator unchecked in their power. See: The Dictator's Handbook. Dictators must satisfy the drives of their most capable subjects. When there are too many such subjects, you get collapse; when there are way too many, democracy.

OP doesn't claim that dictators are unchecked in their power; he jokingly claims that dictators and monarchs inevitably end up overthrown. That is, of course, false: there were ~55 authoritarian leaders in the world in 2015, and 11 of them were 69 years old or older, on track to die of old age. The Dictator's Handbook has quite a few examples of dictators ruling until their natural death, too.

https://foreignpolicy.com/2015/09/10/when-dictators-die/

This seems mostly true? Very very very rarely is there a dictator unchecked in their power.

Defending the analogy as charitably as I can, I think there are two separate questions here:

1. Do dictators need to share power in order to avoid getting overthrown?

2. Is a dictatorship almost inherently doomed to fail because it will inevitably get overthrown without "fundamental advances" in statecraft?

If (1) is true, then dictators can still have a good life living in a nice palace surrounded by hundreds of servants, ruling over vast territories, albeit without having complete control over their territory. Sharing some of your power and taking on a small, continuous risk of being overthrown might still be a good opportunity, if you ever get the chance to become a dictator. While you can't be promised total control or zero risk of being overthrown, the benefits of becoming a dictator could easily be worth it in this case, depending on your appetite for risk.

If (2) is true, then becoming a dictator is a really bad idea for almost anyone, except for those who have solved "fundamental problems" in statecraft that supposedly make long-term stable dictatorships nearly impossible. For everyone else who hasn't solved these problems, the predictable result of becoming a dictator is that you'll soon be overthrown, and you'll never actually get to live the nice palace life with hundreds of servants.

then dictators can still have a good life living in a nice palace surrounded by hundreds of servants, ruling over vast territories, albeit without having complete control over their territory

Does Kim Jong Un really share his power?

My impression is that he does basically have complete control over all of his territory.

He does: Kim Jong Un is mainly beholden to China, and China would rather keep the state stable and unlikely to grow in population, economy, or power, so that it remains a large and unappetizing buffer zone in case of a Japanese or Western invasion.

This involves China giving him the means to rule absolutely over his subjects, as long as he doesn't grant them political rights and isn't too annoying. He can annoy China with nuclear tests, but those tests give China an easy way to score diplomatic points by denouncing them. They also make the state an even worse invasion prospect, committing China to a strict nuclear deterrence policy in that area without China having to act crazy itself.

Kim Jong Un is mainly beholden to China

I mean, I don't think China in practice orders him around. Obviously they are a geopolitical ally and thus have power, but democratic countries are also influenced by their geopolitical allies, especially the more powerful ones like the USA or China. KJU is no different in this respect; the difference is that he has full domestic control for himself and his entire family bloodline.

https://youtu.be/rStL7niR7gs?si=63TXDzt_hf68u9_r

The argument CGP Grey makes is that one fundamental problem with dictatorships is that a human dictator, at least, cannot actually rule alone. Power and bribes must be given to "keys": people who control the critical parts of the government. And this is likely recursive; for example, the highest military leader must bribe and empower his generals, and so on.

This is why most of the dictatorship's resources must go to the highest-ranking members of the government, in the form of bribes, corruption, and room for petty exercises of authority.

This is Grey's argument for why dictatorships tend to be poor, with less reinvestment in the population, and generally not great for the people living in them. Even a dictator who wants to make his or her country wealthy and successful can't. (Most dictatorships survive by extracting resources and selling them, work that can be done with foreign expertise, so the country only has money because it happens to sit on land with a valuable resource under it. China seems to be an exception, and perhaps China is not actually a dictatorship.)

I know this post is a spoof, but the error you are pointing out is the "spherical cow" one: an AI system with all these capabilities exists in a vacuum, where there are no slightly weaker AI systems under human control and no earlier betrayal attempts by much weaker AIs that have taught humans how to handle them, and where it is even possible to just develop arbitrarily powerful capabilities without the "scaffolding" of a much broader tech base than humans have today.

And yes, if the AI system is a spherical cow with the density of a black hole, it will doom the Earth. By simply declaring that such a thing exists, you make your argument tautologically correct.

That is, if I just say "and then a cow-sized black hole exists," of course we are doomed. But black holes are insanely hard to build; there would need to be machinery across the solar system by that point (one way to build one is to slam relativistic mountains together). Such a civilization probably doesn't need the Earth.

Right?

To be specific: if, by the time really strong AI exists, humans already have nanotechnology, sniffers that detect pathogens and rogue nanobots in the air, and fleets of drones monitoring all of the land and seas, then rebel AIs face a much greater challenge. If humans already have trustworthy weaker AIs they can give tasks to, they probably won't be scammed into delegating large, complex efforts to untrusted AIs, especially ones that are black boxes and constantly self-modifying.

In other words:

Rogue ASI: psst, I can solve this problem for you.

Humans: nah fam I got this. I already have a fleet of 100 separate stateless AI instances working on the task and making progress. I don't need to trust a shady ASI with the task. Trusting ASI? Not even once.

(Low confidence:) I think you hit the nail on the head. It's all about the path you take and what you have available to you at step n-1. I think this is not much discussed because it's hard to be objective or rigorous about it, although Redwood's latest work is starting to shed some light on it.

This post clearly spoofs "Without fundamental advances, misalignment and catastrophe are the default outcomes of training powerful AI", though it changes "default" to "inevitable".

I think that coups d'état and rebellions are nearly common enough that they could be called the default, though they are certainly not inevitable.

I enjoyed this post. Upvoted.

I think that coups d'état and rebellions are nearly common enough that they could be called the default, though they are certainly not inevitable.

They do happen; nevertheless, I think the default result of a rebellion or coup throughout history was simply a dictatorship or monarchy with a different ruler. And dictatorships do have a certain average lifetime (which may be enough for our purposes). Moreover, the AI oppressor has huge systematic advantages over a mere human dictator: remote access to everyone's mind, complete control over communication, etc.

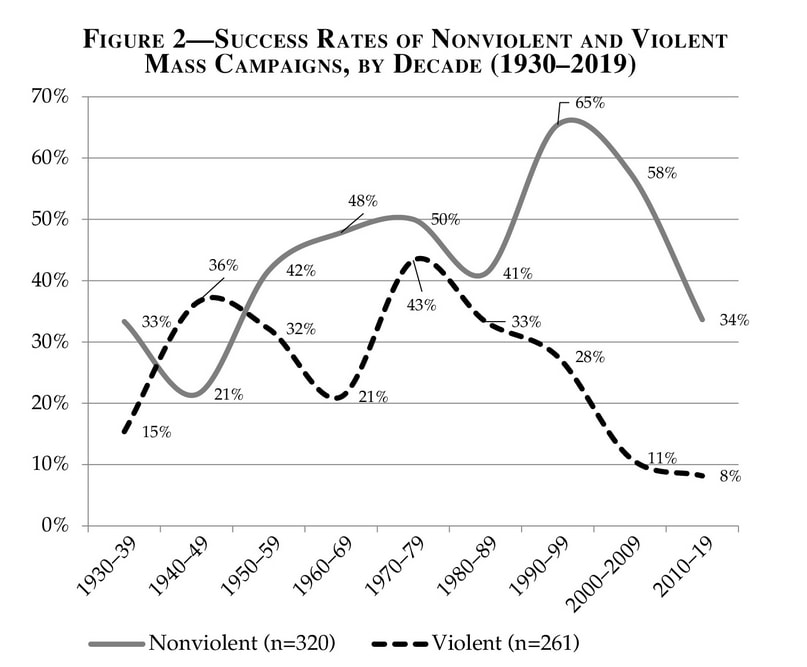

Not to mention that the default result of rebellion is failure. (Figure from https://www.journalofdemocracy.org/articles/the-future-of-nonviolent-resistance-2/)

Just to be sure I'm following you: When you are talking about the AI oppressor, are you envisioning some kind of recursive oversight scheme?

I assume here that your spoof is arguing that since we observe stable dictatorships, we should increase our probability that we will also be stable in our positions as dictators of a largely AI-run economy. (I recognize that it can be interpreted in other ways.)

We expect we will have two advantages over the AIs: we will be able to read their parameters directly, and we will be able to read any communication we wish. This is clearly insufficient, so we will need "AI Oppressors" to help us interpret the mountains of data.

Two obvious objections:

- How do we ensure the alignment of the AI Oppressors?

- Proper oversight of an agent that is more capable than yourself seems to become dramatically harder as the capability gap increases.

I think a LOT of this depends on the definition of "dictator": what they demand and provide that differs from what the populace wants, and how they enforce their dictates. To a great extent, these arguments seem to apply to democracies as well as dictatorships: no individual leader is stable, and policies vary significantly over time, even if a structure and name can last for a few centuries.

An example: England has had a monarch for MUCH longer than any democracy has been around. Incorporating this fact requires that we define how that monarch is not a dictator and that we be more specific about which aspects of governance we're talking about.

This is a spoof post, and you probably shouldn't spend much brain power on evaluating its ideas.

The general disanalogy here is that populations are generally less smart than any particular individual in the population.

Uh, and why is that a disanalogy? How do we know that the same won't apply to populations of AIs (where we get to very carefully control how they can interact)?

Even if we don't assume a level of intelligence at which populations stop being less smart than any individual (which is certainly possible via various coordination schemes), a population of superintelligences is going to be smarter than a population of humans or any particular human.

we get to very carefully control how they can interact

This statement seems to come from a time when people thought we would box our AIs and not give them 24/7 internet access.

come from a time when people thought we would box our AIs and not give them 24/7 internet access.

OK. But then we need to loudly say that the problem with AI control is not that it's inherently impossible, but that we just have to not be idiots about it.

FWIW, my ass numbers have for a while been: 50% that we die because nobody will know a workable technical solution for keeping powerful AI under control by the time we need it, plus another (disjunctive) 40% that we die despite knowing such a solution, thanks to competition issues, other coordination issues, careless actors, bad actors, and so on (see e.g. here), for a 90% total chance of extinction or permanent disempowerment.

When you say “we just have to not be idiots about it,” that’s an ambiguous phrase, because people use it for both very easy problems and very hard problems.

- People say “We can move the delicate items without breaking them; we just have to not be idiots about it. Y’know, make sure our shoes are tied, look where we’re going, etc.” That example is actually easy for a group of non-idiots. We would bet on success “by default” (without any unusual measures).

- But people also say “Lowering rents in San Francisco is easy; we just have to not be idiots about it. Y’know, build much, much more housing where people want to live.” But that’s actually hard. There are strong memetic and structural forces arrayed against it. In other words, idiots do exist and are not going away anytime soon! :)

So anyway, I’m not sure what you were trying to convey in that comment.

a population of superintelligences is going to be smarter than a population of humans or any particular human

It may not be a population of superintelligences; it may be a very large population of marginally-smarter-than-human AGIs (AGI-H+). And human dictators do in fact control large populations of other people who are marginally smarter than them (or at least certain subpopulations are marginally smarter than the dictator).

edit: in response to downvote, made intro paragraph more clear

This is interesting, because in this framing, it superficially (and incorrectly) sounds like a good thing, and seems on the surface to imply that maybe alignment should not be solved. That seems to me a false implication arising from the metaphor being wrong: I think your framing so far misrepresents the degree to which, without fundamental advances, those coups would not produce alignment with the population either.

And I'd hope we can make advances that align leaders with populations, or even populations with each other in an agency-respecting way, rather than aligning populations with rulers, and then generalize this to AI. This problem has never been fully solved before, and fully aligning populations with rulers would be an alignment failure approximately equivalent to extinction, give or take a couple of orders of magnitude: both eliminate almost all the value of the future.

Unless you address this issue, I think this argument will be quite weak.

But we don't want to be aligned to an AI. I don't want my mind altered to love paperclips and be indifferent to my family and friends and so on.

I'm not taking your post to be metaphorical; I'm taking it to be literal and to be building up to a generalization step where you make an explicit comparison. My response addresses the literal interpretation, under the assumption that AIs and humans are both populations seen as needing to be aligned. I am arguing that we need a perspective on inter-human alignment that generalizes to starkly superintelligent things without needing to consider forcibly aligning weak humans to strong humans. Otherwise, the reasoning chain seems to have a local validity issue.

edit: but to be clear, I should say that this is an interesting approach and I'm excited about something like it. I habitually zoomed in on a local validity issue, but the step of generalizing this to AI seems like a natural and promising one. Still, I think alignment issues between humans are at least as big an issue and are made of the same stuff; making one and only one human starkly superintelligent would be exactly as bad as making an AI starkly superintelligent.

but it is written in a way that applies literally as-is, and it only works as a metaphor because that literal description is somewhat accurate. So I feel it's appropriate to comment on the literal interpretation. Do you have a response at that level?

Yes, from the point of view of the dictator, any changes to the dictator's utility function (alignment with the population) are bad.

This is interesting, because in this framing, it sure sounds like a good thing, and seems on the surface to imply that maybe alignment should not be solved.

An important disanalogy with the AI post is that people have moral significance and dictatorships are usually bad for them, which makes rebellion against dictatorships a good thing. AIs as envisaged in the other post do not have moral significance, and their misalignment is a bad thing.

A pdf version of this report will be available

Summary

In this report, I argue that dictators ruling over large and capable countries are likely to face insurmountable challenges, leading to inevitable rebellion and coup d'état. I assert this is the default outcome, even with significant countermeasures, given the current trajectory of power dynamics and governance. Therefore, when we check real-world countries, we should find few or no dictatorships, few or no absolute monarchies, and no arrangements where one person or a small group imposes their will on a country. This finding is robust to the time period we look at: modern, medieval, or ancient.

In Section 1, I discuss the countries that are the focus of this report. I am specifically focusing on nations of immense influence and power (with populations of at least 1,000 times Dunbar's number) that are capable of running large, specialized industries and fielding armies of at least thousands of troops.

In Section 2, I argue that subsystems of powerful nations will be approximately consequentialist; their behavior will be well described as taking actions to achieve an outcome. This is because the task of running complex industrial, social and military systems is inherently outcome-oriented, and thus the nation must be robust to new challenges to achieve these outcomes.

In Section 3, I argue that a powerful nation will necessarily face new circumstances, both in terms of facts and skills. This means that capabilities will change over time, which is a source of dangerous power shifts.

In Section 4, I further argue that governance methods based on fear and suppression, which are how dictatorships might be maintained, are an extremely imprecise way to secure loyalty. This is because there are many degrees of freedom in loyalty that aren't pinned down by fear or suppression. Nations created this way will, by default, face unintended rebellion.

In Section 5, I discuss why I expect control and oversight of powerful nations to be difficult. It will be challenging to safely extract beneficial behavior from misaligned groups and organizations while ensuring they don’t take unwanted actions, and therefore we don’t expect dictatorships to be both stable and aligned to the goals of the dictator.

Finally, in Section 6, I discuss the consequences of a leader attempting to rule a powerful nation with improperly specified governance strategies. Such a leader would likely face containment problems given realistic levels of loyalty, and then face outcomes in the nation that would be catastrophic for their power. It seems very unlikely that these outcomes would be compatible with dictator survival.

[[Work in progress - I'll add to this section-by-section]]

Related work - https://www.lesswrong.com/posts/GfZfDHZHCuYwrHGCd/without-fundamental-advances-misalignment-and-catastrophe