Epistemics: I'm an ML platform engineer who works on inference accelerators.

What you said is correct; however, look at how the brain does it.

There are approximately 1,000 physical, living synaptic 'wires' to each target cell. Electrical action potentials travel down the 'wires', jumping from node to node, until they reach a synapse, where the sending cell releases a quantity of neurotransmitter across a gap to the receiving cell.

Each neurotransmitter release causes a positive or negative voltage change at the receiver.

So you can abstract each synaptic event as (1 × <± delta from the neurotransmitter>) + (receiving cell voltage): a multiply-accumulate whose input is a binary spike.

The receiving cell then applies an activation function that integrates many of these inputs, ~1,000 on average, and emits an output pulse if a voltage threshold is reached.
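As a toy illustration of the abstraction above (an assumption-laden sketch, not a neuron model): a non-leaky integrate-and-fire cell evaluated for one time step, where each synapse contributes `spike × delta` to the membrane voltage and the cell fires and resets once a threshold is crossed. The function name `integrate_and_fire`, the threshold of 1.0, and the reset-to-rest behavior are all illustrative choices.

```python
def integrate_and_fire(spikes, deltas, v_rest=0.0, threshold=1.0):
    """One time step of a toy, non-leaky integrate-and-fire cell.

    spikes: 0/1 flags, one per incoming synapse ('wire')
    deltas: signed voltage change each synapse adds when it fires
    Returns (fired, voltage_after_step).
    """
    v = v_rest
    for spike, delta in zip(spikes, deltas):
        v += spike * delta  # one multiply-accumulate per synapse
    if v >= threshold:
        return True, v_rest  # emit an output pulse, then reset to rest
    return False, v


fired, v = integrate_and_fire([1, 0, 1, 1], [0.5, 0.9, -0.2, 0.8])
print(fired, v)
```

Each call performs one multiply-accumulate per synapse, so a cell with ~1,000 inputs costs ~1,000 MACs per step, which is the quantity the dedicated-hardware comparison counts.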

This is both an analog and a digital system, and it's a common misconception that it has infinite precision because it does temporal integration. It does not, and therefore a finite-precision binary computer can model it with no loss of performance.

We can measure the 'clock speed' of this method at about 1 kilohertz.

Middle Summary: using dedicated hardware for every circuit, the brain runs at 1 kilohertz.

The analogous approach for AI is to use dedicated hardware to model a brain-like system. Today you would use a dedicated multiply-accumulate (MAC) unit for every connection in the neural network graph: for a 175B-parameter model with 16-bit weights, you would use 175 billion 16-bit MACs. You would also have dedicated circuitry for each activation calculation, all running in parallel.

If the H100's clock speed is 1,755 MHz, it can do 1,979 teraFLOPS (i.e., 1,979/2 trillion MACs per second, since a MAC is two FLOPs), and each MAC completes in a single clock, then it has 563,818 MAC units organized into its Tensor Cores.

So to run GPT-3.5 with dedicated hardware (one MAC per weight: 175 billion / 563,818), you would need 310,384 times more silicon area.
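The MAC-count arithmetic can be checked in a few lines. This sketch takes the comment's H100 figures at face value (1,979 TFLOPS peak, 1,755 MHz, 2 FLOPs per single-cycle MAC) and a hypothetical 175B-parameter model with one dedicated MAC per weight; `mac_units` is a name introduced here for illustration.

```python
def mac_units(peak_flops, clock_hz, flops_per_mac=2):
    """Single-cycle MAC units implied by a chip's peak FLOP rate and clock."""
    return peak_flops / flops_per_mac / clock_hz


H100_PEAK_FLOPS = 1979e12  # peak figure used in the comment
H100_CLOCK_HZ = 1755e6     # clock figure used in the comment
PARAMS = 175e9             # hypothetical 175B model: one dedicated MAC per weight

macs = mac_units(H100_PEAK_FLOPS, H100_CLOCK_HZ)
area_ratio = PARAMS / macs  # dedicated-hardware silicon vs. one H100
print(f"{macs:,.0f} MACs per H100; {area_ratio:,.0f}x the silicon")
```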

A true human level intelligence likely requires many more weights.

Summary: you can build dedicated hardware that runs AI models substantially faster than the brain, roughly 1 million times faster (about 0.9 to 1.8 million times) if you run at the H100's clock speed and assume the brain runs at 1-2 kHz. However, it will take up a lot more silicon. The rumor is that a GPT-4 instance runs on 128 H100s, so if you need 310,384 times more silicon to reach the above speeds, you still need about 2,424 times as much hardware as is currently being used, and it has to be dedicated ASICs.
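A quick check of the summary's ratios, using the same assumed inputs: the H100 clock from above, a brain "clock" of 1-2 kHz, and the rumored (unconfirmed) 128 H100s per GPT-4 instance. The helper names are hypothetical.

```python
def clock_speedup(chip_hz, brain_hz):
    """How many times faster a dedicated circuit clocks than a brain 'clock'."""
    return chip_hz / brain_hz


def hardware_multiple(area_ratio, gpus_per_instance):
    """Dedicated-ASIC silicon relative to a GPU deployment of the given size."""
    return area_ratio / gpus_per_instance


H100_CLOCK_HZ = 1755e6
AREA_RATIO = 310_384          # from the MAC-count estimate
GPUS_PER_GPT4_INSTANCE = 128  # rumored figure quoted in the comment, not confirmed

for brain_hz in (1e3, 2e3):
    print(f"brain at {brain_hz:,.0f} Hz: {clock_speedup(H100_CLOCK_HZ, brain_hz):,.0f}x")
print(f"hardware multiple: {hardware_multiple(AREA_RATIO, GPUS_PER_GPT4_INSTANCE):,.1f}x")
```

At 2 kHz the clock ratio falls just under a million, so "~1 million" is the safer round figure.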

For AI 'risk' arguments: if this is what it takes to run at these speeds, there are 3 takeaways:

1. AI models that "escape" will run so slowly as to be effectively dead, unless there are large pools of the above hardware (true neural processors) in vulnerable places on the internet. (Because the "military" AIs working with humans will have this kind of hardware to hunt them down with.)

2. It is important to monitor the production and deployment of hardware capable of running AI models millions of times faster.

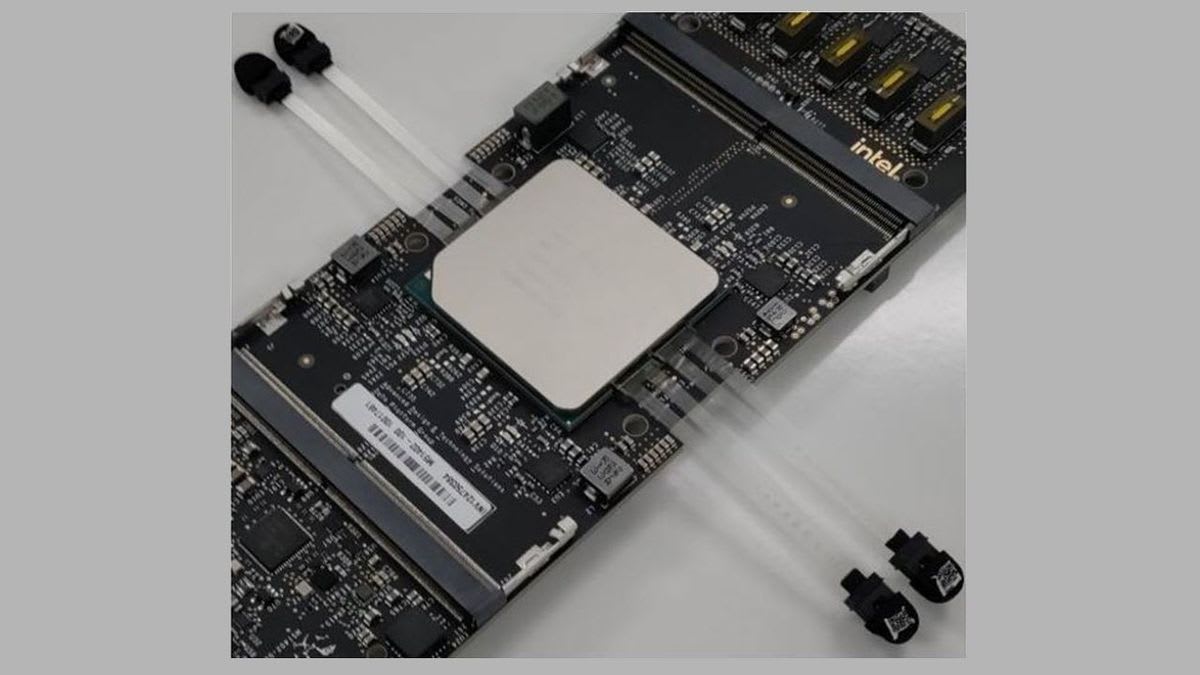

3. It is physically possible for humans to build hardware to host AI on that does run many times faster, peaking at ~1 million times faster, using 2024 fabrication technology. It just won't be cheap. Hardware would be many ICs that look like the below:

Don't global clock speeds have to go down as die area goes up due to the speed of light constraint?

For instance, if you made a die with 1e15 MAC units and the area scaled linearly, you would be looking at a die that's ~ 2e9 times larger than H100's die size, which is about 1000 mm^2. The physical dimensions of such a die would be around 2 km^2, so the speed of light would limit global clock frequencies to something on the order of c/(1 km) ~= 300 kHz, which is not 1 million times faster than the 1 kHz you attribute to the human brain. If you need multiple round trips for a single clock, the frequencies will get even lower.
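The speed-of-light bound can be sketched the same way. This assumes a single square die whose area grows linearly with MAC count, ~563,818 MACs per ~1,000 mm² reference die (the figures from the first comment), and one one-way signal crossing per clock, which, as the comment points out, is optimistic. The helper names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def die_side_m(n_macs, macs_per_ref_die=563_818, ref_die_mm2=1000.0):
    """Side of a square die whose area scales linearly with MAC count (assumption)."""
    area_mm2 = n_macs / macs_per_ref_die * ref_die_mm2
    return math.sqrt(area_mm2) / 1000.0  # mm -> m


def max_global_clock_hz(length_m, crossings_per_clock=1):
    """Upper bound on a single global clock if signals must cross the die each cycle."""
    return C / (length_m * crossings_per_clock)


side = die_side_m(1e15)
print(f"die side ~ {side / 1000:.2f} km, clock <= {max_global_clock_hz(side) / 1e3:,.0f} kHz")
```

Passing `crossings_per_clock=2` models a round trip and halves the bound, in line with the point that multiple round trips push frequencies lower still.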

Maybe when the clock frequencies get this low, you're dissipating so little heat that you can go 3D without worrying too much about heating issues and that buys you something. Still, your argument here doesn't seem that obvious to me, especially if you consider the fact that one round trip for one clock is extremely optimistic if you're trying to do all MACs at once. Remember that GPT-3 is a sequential model; you can't perform all the ops in one clock because later layers need to know what the earlier layers have computed.

Overall I think your comment here is quite speculative. It may or may not be true, I think we'll see, but people shouldn't treat it as if this is obviously something that's feasible to do.

> Don't global clock speeds have to go down as die area goes up due to the speed of light constraint?

Yes, if you use one die with one clock domain, they would. Modern chips don't.

> For instance, if you made a die with 1e15 MAC units and the area scaled linearly, you would be looking at a die that's ~ 2e9 times larger than H100's die size, which is about 1000 mm^2. The physical dimensions of such a die would be around 2 km^2, so the speed of light would limit global clock frequencies to something on the order of c/(1 km) ~= 300 kHz, which is not 1 million times faster than the 1 kHz you attribute to the human brain.

So actually you would make something like 10,000 dies, with the MAC units spread between them. Clusters of dies each calculate a single layer of the network. There are many optical interconnects between the modules, using silicon photonics, like this:

I was focused on the individual synapse part of it. The key thing to realize is that the MAC is the slowest step: multiplications are complicated and take a lot of silicon, and I am implicitly assuming all the other steps are cheap and fast. Note that it's also a pipelined system with enough hardware that there aren't any stalls: the moment a MAC is done, it is processing the next input on the next clock, and so on. It's what hardware engineers would do if they had an unlimited silicon budget.
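A minimal sketch of the idealized pipeline described here, assuming every stage takes exactly one clock and never stalls: input `i` enters stage 0 at clock `i`, so each input sees a latency of `depth` clocks while a new result emerges every clock once the pipeline is full. `pipeline_schedule` is a hypothetical helper, not a hardware model.

```python
def pipeline_schedule(n_inputs, depth):
    """For each clock, list which input occupies each stage (None = empty slot).

    Idealization: one clock per stage and no stalls, so input i is in
    stage s at clock i + s.
    """
    total_clocks = n_inputs + depth - 1  # fill time + one result per clock
    schedule = []
    for clock in range(total_clocks):
        stages = []
        for stage in range(depth):
            item = clock - stage
            stages.append(item if 0 <= item < n_inputs else None)
        schedule.append(stages)
    return schedule


sched = pipeline_schedule(n_inputs=4, depth=3)
for clock, stages in enumerate(sched):
    print(clock, stages)
```

Four inputs through a three-stage pipeline finish in 4 + 3 - 1 = 6 clocks rather than 12, which is why throughput, not per-MAC latency, sets the cost once the pipeline is full.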

> Maybe when the clock frequencies get this low, you're dissipating so little heat that you can go 3D without worrying too much about heating issues and that buys you something. Still, your argument here doesn't seem that obvious to me, especially if you consider the fact that one round trip for one clock is extremely optimistic if you're trying to do all MACs at once. Remember that GPT-3 is a sequential model; you can't perform all the ops in one clock because later layers need to know what the earlier layers have computed.

No, no, no. Each layer runs 1 million times faster. If a human brain needs 10 seconds to think of something, that's enough time for the AI equivalent to compute the activations of roughly 10 billion layers (10 s at ~1 ns per layer), or to run many forward passes over the information, reflect on the updated memory buffer from the prior steps, and so on.

Or the easier way to view it: say a human responds to an event in 300 ms.

Optic nerve is 13 m/s. Average length 45 mm. 3.46 milliseconds to reach the visual cortex.

Then for a signal to reach a limb, 100 milliseconds. So we have 187 milliseconds for neural network layers.

Assume the synapses run at 1 kHz, which means that in theory there can be 187 layers of neural network between the visual cortex and the output nerves going to a limb.

So to run this 1 million times faster, we use hollow-core optical fibers, and the signal needs to travel 1 meter of cabling in total. That takes about 3.34 nanoseconds, roughly 90 million times less than the 300 ms human response.
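The two propagation delays can be checked directly. This sketch uses the comment's figures (45 mm of optic nerve at 13 m/s, 1 m of cabling) and assumes, for simplicity, that light in hollow-core fiber travels at essentially the vacuum speed of light; `delay_s` is an illustrative helper.

```python
C = 299_792_458.0  # m/s; hollow-core fiber approximated as vacuum light speed (assumption)


def delay_s(length_m, speed_m_per_s):
    """Propagation delay over a path of the given length at a constant speed."""
    return length_m / speed_m_per_s


optic_nerve = delay_s(0.045, 13.0)  # 45 mm at 13 m/s
fiber = delay_s(1.0, C)             # 1 m of cabling
print(f"optic nerve: {optic_nerve * 1e3:.2f} ms")
print(f"fiber: {fiber * 1e9:.2f} ns")
print(f"300 ms response / fiber delay: {0.300 / fiber:.3g}")
```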

OK, can we evaluate a 187-layer neural network 1 million times faster than 187 milliseconds, i.e., in 187 nanoseconds?

Well, if each layer takes 1 clock for the MACs plus 9 clocks to compute the activations (including clock-domain syncs), that's 10 clocks per layer, or 1,870 clocks in 187 ns, so you need 10 GHz for a 1-million-fold speedup.

But oh wait, we have better wiring. So actually we have 299 milliseconds of budget for 187 neural network layers.

This means we only need 6.25 GHz (1,870 clocks in 299 ns). Or we may be able to do a layer in fewer than 10 clocks.
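The clock-rate arithmetic reduces to one formula: required clock = layers × clocks per layer ÷ (biological budget ÷ speedup). The sketch plugs in the comment's assumptions (187 layers, 10 clocks per layer, a 10⁶ speedup); `required_clock_hz` is an illustrative name.

```python
def required_clock_hz(bio_budget_s, layers, clocks_per_layer, speedup):
    """Clock rate needed to finish `layers` sequential layers within bio_budget_s / speedup."""
    real_budget_s = bio_budget_s / speedup
    return layers * clocks_per_layer / real_budget_s


LAYERS = 187           # layer count assumed in the comment
CLOCKS_PER_LAYER = 10  # 1 MAC clock + 9 activation/sync clocks (comment's assumption)
SPEEDUP = 1e6

for budget_s in (0.187, 0.299):
    ghz = required_clock_hz(budget_s, LAYERS, CLOCKS_PER_LAYER, SPEEDUP) / 1e9
    print(f"{budget_s * 1e3:.0f} ms budget -> {ghz:.2f} GHz")
```

Halving `clocks_per_layer` halves the required clock, which is the "fewer than 10 clocks" lever.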

> Overall I think your comment here is quite speculative. It may or may not be true, I think we'll see, but people shouldn't treat it as if this is obviously something that's feasible to do.

There is nothing speculative or novel here about the overall idea of using ASICs to accelerate compute tasks. The reason it won't be done this way is because you can extract a lot more economic value for your (silicon + power consumption) with slower systems that share hardware.

I mean, right now there's no value at all in doing this: the AI model is just going to go off the rails and be deeply wrong within a few minutes at current speeds, or get stuck in a loop it doesn't know it's in because of its short context. Faster serial speed isn't helpful.

In the future, you have to realize that even if the brain of some robotic machine can respond 1 million times faster, physics does not allow the hardware to move that fast, or the cameras to even register a new frame by accumulating photons that fast. It's not a useful speed. The same applies to R&D tasks: at much lower speedups, the task will be limited by the physical world.

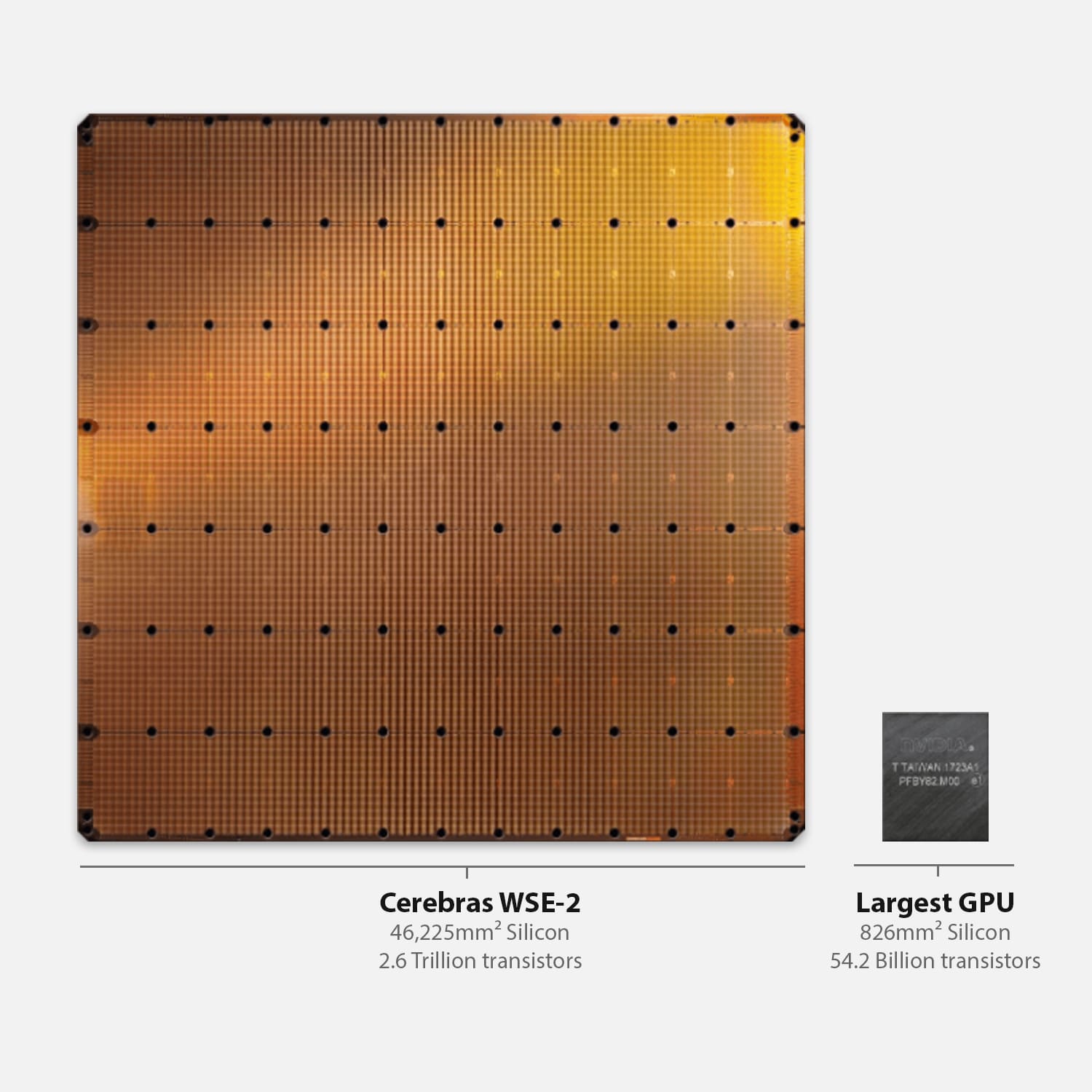

Importantly, by "faster" here we are talking about latency. The ops/sec possible on a Cerebras-style chip will not be drastically higher than on a similar area of silicon for a GPU; it's conceivable you could get 100x higher ops/sec due to locality, if I remember my numbers right. But with drastically lower latency, the model only has to decide which part of itself to run at full frequency, so it would in fact be able to think drastically faster than humans.

Though you can also do a similar trick on GPUs: it wouldn't be too hard to design an architecture with a small, very-high-frequency portion of the network, since there's a recently released block design that is already implemented in a way that can pull it off.

> (Because the "military" AIs working with humans will have this kind of hardware to hunt them down with)

You need to make a lot of extra assumptions about the world for this reasoning to work.

These "military" AIs need to exist. And they need to be reasonably loosely chained: if their safety rules are so strict they can't do anything, they can't do anything however fast they don't do it. They need to be actively trying to do their job, as opposed to playing along for the humans but not really caring. And they need to be smart enough: if the escaped AI uses some trick that the "military" AI just can't comprehend, then it fails to comprehend it again and again, very fast.

Of course. Implicitly, I am assuming that intelligent algorithms have diminishing returns on architectural complexity. So if the "military"-owned model is simple, with an architecture and training suite controlled and understood by humans, the assumption is that the "free" model cannot be that much more intelligent through a better architecture if it lacks compute by a factor of millions. That is, greater intelligence is a scale-dependent phenomenon.

This is so far consistent with the empirical evidence. Do you happen to know of evidence that challenges this assumption? As far as I know, in LLM experiments there are tweaks to architecture, but the main determinant of benchmark performance is model+data scale (which are interdependent), and non-transformer architectures seem to show similar emergent properties.

> As far as I know with LLM experiments, there are tweaks to architecture but the main determinant for benchmark performance is model+data scale (which are interdependent), and non transformer architectures seem to show similar emergent properties.

So within the rather limited subspace of LLM architectures, all architectures are about the same.

I.e., once you ignore the huge space of architectures that just ignore the data and squander compute, architecture doesn't matter. We have one broad family of techniques (gradient descent, text prediction, etc.), and anything in that family is about equally good. And anything outside it basically doesn't work at all.

This looks to me to be fairly strong evidence that you can't get a large improvement in performance by randomly bumbling around with small architecture tweaks to existing models.

Does this say anything about whether a fundamentally different approach might do better? No. We can't tell that from this evidence. Although, looking at the human brain, we can see it seems to be more data efficient than LLMs. And we know that in theory, models could be *much* more data efficient. Addition is very simple. Solomonoff induction would have it as a major hypothesis after seeing only a couple of examples. But GPT-2 saw loads of arithmetic in training and still couldn't reliably do it.

So I think LLM architectures form a flat-bottomed local semi-minimum (minimal in at least most dimensions). It's hard to get big improvements just by tweaking them (we are applying enough grad student descent to ensure that), but they are nowhere near globally optimal.

Suppose everything is really data-bottlenecked, and the slower AI has a more data-efficient algorithm. Or maybe the slower AI knows how to make synthetic data, and the human-trained AI doesn't.

There's one interesting technical detail. The human brain uses heavy, irregular sparsity: most possible connections between layers don't exist (zero weight). On a GPU, there is limited performance improvement from sparsity, because the hardware subunits can only compute sparse activations efficiently if the memory address patterns are regular. Irregular sparsity doesn't have hardware support.
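To make the regular-vs-irregular distinction concrete, here is a sketch of compressed sparse row (CSR) storage, one common format for irregularly sparse weights, with a matrix-vector product that only performs MACs for nonzero weights. It's a plain-Python illustration of the data structure, not a GPU kernel, and the function names are illustrative; the point is the `x[col_idx[k]]` read, whose address depends on the data rather than following a fixed stride.

```python
def dense_to_csr(matrix):
    """Compress a dense row-major matrix to CSR: nonzero values, their column indices, row pointers."""
    values, col_idx, row_ptr = [], [], [0]
    for row in matrix:
        for j, w in enumerate(row):
            if w != 0:
                values.append(w)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """y = W @ x, performing one MAC per stored (nonzero) weight."""
    y = []
    for i in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            acc += values[k] * x[col_idx[k]]  # data-dependent gather: irregular address
        y.append(acc)
    return y


W = [[0.0, 2.0, 0.0, 0.0],
     [1.0, 0.0, 0.0, 3.0],
     [0.0, 0.0, 0.0, 0.0]]
x = [1.0, 1.0, 2.0, 4.0]
print(csr_matvec(*dense_to_csr(W), x))
```

Here only 3 of 12 possible MACs are performed. Skipping the MACs is the easy part; the scattered reads from `x` are what make irregular sparsity hard to accelerate on hardware built for regular access patterns.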

Future neural processors will support full sparsity. This will probably allow them to run 100x faster with the same amount of silicon (far fewer MACs, but you add layer-activation multicast units), but only on new specialized hardware.

It's possible that whatever neural architectures are found using recursion (ones that leave the state space of LLMs or human brains) will have similar compute requirements: functionally useless on current hardware, but running thousands of times faster on purpose-built hardware.

Same idea though. I don't see why "the military" can't do recursion using their own AIs and use custom hardware to outcompete any "rogues".

Also it provides a simple way to keep control of AI : track the location of custom hardware, know your customer, etc.

I suspect if AI is anything like computer graphics, there will be at least 5-10 paradigm shifts to new architectures that need updated hardware to run, obsoleting everything deployed, before settling on something optimal. FLOPs are not actually fungible, and Turing complete doesn't mean your training run will complete this century.

> Same idea though. I don't see why "the military" can't do recursion using their own AIs and use custom hardware to outcompete any "rogues".

One of the deep, fundamental reasons here is alignment failure. Either the "military" isn't trying very hard, or humans know they haven't solved alignment. Humans know they can't build a functional "military" AI; all they can do is make another rogue AI. Or the humans don't know that, and the military AI is another rogue AI.

For this military AI to be fighting other AI's on behalf of humans, a lot of alignment work has to go right.

The second deep reason is that recursive self-improvement is a strong positive feedback loop. It isn't clear how strong, but it could be *very* strong. So suppose the first AI undergoes a recursive-improvement FOOM, and it happens that the rogue AI gets there before any military AI. Perhaps because the creators of the military AI are taking their time to check the alignment theory.

Positive feedback loops tend to amplify small differences.

Also, about all those hardware differences. A smart AI might well come up with a design that efficiently uses old hardware. Oh, and this is all playing out in the future, not now. Maybe the custom AI hardware is everywhere by the time this is happening.

> I suspect if AI is anything like computer graphics there will be at least 5-10 paradigm shifts to new architectures that need updated hardware to run, obsoleting everything deployed, before settling in something that is optimal. Flops are not actually fungible and Turing complete doesn't mean your training run will complete this century.

This is with humans doing the research. Humans invent new algorithms more slowly than new chips are made. So it makes sense to adjust the algorithm to the chip. If the AI can do software research far faster than any human, adjusting the software to the hardware (an approach that humans use a lot throughout most of computing) becomes an even better idea.

> This is with humans doing the research. Humans invent new algorithms more slowly than new chips are made. So it makes sense to adjust the algorithm to the chip. If the AI can do software research far faster than any human, adjusting the software to the hardware (an approach that humans use a lot throughout most of computing) becomes an even better idea.

Note that if you are seeking an improved network architecture and you need it to work on a limited family of chips, this constrains your search. You may not be able to find a meaningful improvement over the SOTA with that constraint in place, regardless of your intelligence level. Things like sparsity, in-memory compute, or neural architecture (where the chip is structured like the network it is modeling, with dedicated hardware) can offer colossal speedups.

> this is constraining your search. You may not be able to find a meaningful improvement over the sota with that constraint in place, regardless of your intelligence level.

I mean, the space of algorithms that can run on an existing chip is pretty huge. Yes, it is a constraint. And it's theoretically possible that the search could return no solutions, if the SOTA was achieved with *much* better chips, or was near optimal already, or the agent doing the search wasn't much smarter than us.

For example, there are techniques that decompose a matrix into the eigenvectors with the largest eigenvalues (a low-rank approximation), which work great without needing sparse hardware.
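The comment doesn't name a specific technique, so as one illustration (an assumption, not necessarily what the commenter had in mind): a rank-k approximation of a *symmetric* matrix built from its top eigenpairs, found with power iteration plus deflation in plain Python. For general, non-symmetric weight matrices the standard tool is a truncated singular value decomposition instead. All function names are hypothetical.

```python
import math
import random


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def top_eigenpair(M, iters=200, seed=0):
    """Power iteration: eigenpair with the largest-magnitude eigenvalue of a symmetric M."""
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in M]  # positive start vector
    for _ in range(iters):
        w = matvec(M, v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return 0.0, v  # M annihilates v: nothing left to extract
        v = [x / norm for x in w]
    lam = sum(a * b for a, b in zip(v, matvec(M, v)))  # Rayleigh quotient
    return lam, v


def low_rank(M, k):
    """Rank-k approximation sum(lam_i * v_i v_i^T), peeling off one eigenpair at a time."""
    n = len(M)
    residual = [row[:] for row in M]
    approx = [[0.0] * n for _ in range(n)]
    for _ in range(k):
        lam, v = top_eigenpair(residual)
        for i in range(n):
            for j in range(n):
                approx[i][j] += lam * v[i] * v[j]
                residual[i][j] -= lam * v[i] * v[j]  # deflate
    return approx


M = [[2.5, 1.5, 0.0], [1.5, 2.5, 0.0], [0.0, 0.0, 0.0]]  # eigenvalues 4, 1, 0
for row in low_rank(M, 1):
    print([round(x, 6) for x in row])
```

Storing k eigenvectors plus k eigenvalues costs k(n + 1) numbers instead of n²; for n = 4096 and k = 64 that is 262,208 versus 16,777,216. The approximation is only good if most of the matrix's structure lies in a few eigen-directions, but it runs as ordinary dense math on existing hardware.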

We don't care about how many FLOPs something has. We care about how fast it can actually solve things.

As far as I know, in every case where we've successfully gotten AI to do a task at all, AI has done that task far far faster than humans. When we had computers that could do arithmetic but nothing else, they were still much faster at arithmetic than humans. Whatever your view on the quality of recent AI-generated text or art, it's clear that AI is producing it much much faster than human writers or artists can produce text/art.

> As far as I know, in every case where we've successfully gotten AI to do a task at all, AI has done that task far far faster than humans. When we had computers that could do arithmetic but nothing else, they were still much faster at arithmetic than humans. Whatever your view on the quality of recent AI-generated text or art, it's clear that AI is producing it much much faster than human writers or artists can produce text/art.

"Far far faster" is an exaggeration that conflates vastly different orders of magnitude with each other. When compared against humans, computers are many orders of magnitude faster at doing arithmetic than they are at generating text: a human can write perhaps one word per second when typing quickly, while an LLM's serial speed of 50 tokens/sec maybe corresponds to 20 words/sec or so. That's just a ~ 1.3 OOM difference, to be contrasted with 10 OOMs or more at the task of multiplying 32-bit integers, for instance. Are you not bothered at all by how wide the chasm between these two quantities seems to be, and whether it might be a problem for your model of this situation?
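A one-line check of the ~1.3 OOM figure, using the comment's own rough rates (about 20 words/s for an LLM versus about 1 word/s for a fast human typist); `oom_gap` is an illustrative helper.

```python
import math


def oom_gap(machine_rate, human_rate):
    """Orders of magnitude by which a machine's serial rate exceeds a human's."""
    return math.log10(machine_rate / human_rate)


print(f"text generation: {oom_gap(20.0, 1.0):.1f} OOM")
```

Against the comment's "10 OOMs or more" for 32-bit multiplication, the two tasks sit at least ~8.7 orders of magnitude apart in relative speed.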

In addition, we know that this could be faster if we were willing to accept lower-quality outputs, for example by having fewer layers in an LLM. There is a quality vs. serial-speed tradeoff, and so ignoring quality and just looking at the speed at which text is generated is not a good thing to be doing. There's a reason GPT-3.5 has lower per-token latency than GPT-4.

I think there is a weaker thesis which still seems plausible: For every task for which an ML system achieves human level performance, it is possible to perform the task with the ML system significantly faster than a human.

The restriction to ML models excludes hand-coded GOFAI algorithms (like Deep Blue), which in principle could solve all kinds of problems using brute force search.

Counterexamples would be interesting. No examples of superhuman but slower-than-human performance come to mind; for example, AFAIK, at all time controls except possibly 'correspondence', chess/Go AIs are superhuman. (The computer chess community, last I read any discussions, seemed to think that correspondence experts using chess AIs would still beat chess AIs; but this hasn't been tested, and it's unclear how much is simply due to a complete absence of research into correspondence chess strategy like allocation of search time, and letting the human correspondence players use chess AIs may be invalid in this context anyway.) Robots are usually much slower at tasks like humanoid robotics, but also aren't superhuman at most of those tasks, unless we count ultra-precise placement or manipulation tasks, maybe? LLMs are so fast that if they are superhuman at anything then they are faster-than-human too; most forms of inner-monologue/search to date either don't take convincingly longer than a normal human with pen and paper or a calculator would, or are still sub-human.

I think counterexamples are easy to find. For example, chess engines in 1997 could play at the level of top human chess players on consumer hardware, but only if they were given orders of magnitude more time to think than the top humans had available. Around 1997, Deep Blue was of a similar strength to Kasparov, but it had to run on a supercomputer; on commercial hardware, chess engines were still only 2400-2500 Elo. If you ran them for long enough, though, they would obviously be stronger than even Deep Blue was.

I think the claim that "in every case where we've successfully gotten AI to do a task at all, AI has done that task far far faster than humans" is a tautology because we only say we've successfully gotten AI to do a task when AI can beat the top humans at that task. Nobody said "we got AI to play Go" when AI Go engines were only amateur dan strength, even though they could have equally well said "we got AI to play Go at a superhuman level but it's just very slow".

A non-tautological version might say that the decrease over time in the compute multiplier the AIs need to compete with the top humans is steep, so it takes a short time for the AIs to transition from "much slower than humans" to "much faster than humans" when they are crossing the "human threshold". I think there's some truth to this version of the claim, but it's not really due to any serial speed advantage on the part of the AIs.

> I think the claim that "in every case where we've successfully gotten AI to do a task at all, AI has done that task far far faster than humans" is a tautology

It is not a tautology.

> For example, chess engines in 1997 could play at the level of top human chess players on consumer hardware, but only if they were given orders of magnitude more time to think than the top humans had available. Around 1997 Deep Blue was of a similar strength to Kasparov, but it had to run on a supercomputer; on commercial hardware chess engines were still only 2400-2500 Elo. If you ran them for long enough, though, they would obviously be stronger than even Deep Blue was.

Er, this is why I spent half my comment discussing correspondence chess... My understanding is that it is not true that if you ran computers for a long time that they would beat the human also running for a long time, and that historically, it's been quite the opposite: the more time/compute spent, the better the human plays because they have a more scalable search. (E.g., Shannon's 'type-A strategy vs type-B strategy' was meant to cover this distinction: humans search moves slower, but we search moves way better, and that's why we win.) It was only at short time controls where human ability to plan deeply was negated that chess engines had any chance. (In correspondence chess, they deeply analyze the most important lines of play and avoid getting distracted, so they can go much deeper into the game tree than chess engines could.) Whenever the crossover happened, it was probably after Deep Blue. And given the different styles of play and the asymptotics of how those AIs smash into the exponential wall of the game tree & explode, I'm not sure there is any time control at which you would expect pre-Deep-Blue chess to be superhuman.

This is similar to ML scaling. While curves are often parallel and don't cross, curves often do cross; what they don't do is criss-cross repeatedly. Once you fall behind asymptotically, you fall behind for good.

> Nobody said "we got AI to play Go" when AI Go engines were only amateur dan strength, even though they could have equally well said "we got AI to play Go at a superhuman level but it's just very slow".

I'm not familiar with 'correspondence Go' but I would expect that if it was pursued seriously like correspondence chess, it would exhibit the same non-monotonicity.

> If you ran them for long enough, though, they would obviously be stronger than even Deep Blue was.

That's not obvious to me. If nothing else, that time isn't useful once you run out of memory. And PCs had very little memory: you might have 16MB RAM to work with.

And obviously, I am not discussing the trivial case of changing the task to a different task by assuming unlimited hardware or ignoring time and just handicapping human performance by holding their time constant while assigning unlimited resources to computers - in which case we had superhuman chess, Go, and many other things whenever someone first wrote down a computable tree search strategy!*

* actually pretty nontrivial. Recall the arguments like Edgar Allan Poe's that computer chess was impossible in principle. So there was a point at which even infinite compute didn't help because no one knew how to write down and solve the game tree, as obvious and intuitive as that paradigm now seems to us. (I think that was Shannon, as surprisingly late as that may seem.) This also describes a lot of things we now can do in ML.

I think that when you say

> My understanding is that it is not true that if you ran computers for a long time that they would beat the human also running for a long time

(which I don't disagree with, btw) you are misunderstanding what Ege was claiming, which was not that in 1997 chess engines on stock hardware would beat humans provided the time controls were long enough, but only that in 1997 chess engines on stock hardware would beat humans if you gave the chess engines a huge amount of time and somehow stopped the humans having anything like as much time.

In other words, he's saying that in 1997 chess engines had "superhuman but slower-than-human performance": that whatever a human could do, a chess engine could also do if given dramatically more time to do it than the human had.

And yes, this means that in some sense we had superhuman-but-slow chess as soon as someone wrote down a theoretically-valid tree search algorithm. Just as in some sense we have superhuman-but-slow intelligence[1] since someone wrote down the AIXI algorithm.

[1] In some sense of "intelligence" which may or may not be close enough to how the term is usually used.

I feel like there's an interesting question here but can't figure out a version of it that doesn't end up being basically trivial.

- Is there any case where we've figured out how to make machines do something at human level or better if we don't care about speed, where they haven't subsequently become able to do it at human level and much faster than humans?
  - Kinda-trivially yes, because anything we can write down an impracticably slow algorithm for and haven't yet figured out how to do better than that will count.
- Is there any case where we've figured out how to make machines do something at human level or better if we don't mind them being a few orders of magnitude slower than humans, where they haven't subsequently become able to do it at human level and much faster than humans?
  - Kinda-trivially yes, because there are things we've only just very recently worked out how to make machines do well.
- Is there any case where we've figured out how to make machines do something at human level or better if we don't mind them being a few orders of magnitude slower than humans, and then despite a couple of decades of further work haven't made them able to do it at human level and much faster than humans?
  - Kinda-trivially no, because until fairly recently Moore's law was still delivering multiple-orders-of-magnitude speed improvements just by waiting, so anything we got to human level >=20 years ago has since gotten hugely faster that way.

> It is not a tautology.

Can you explain to me the empirical content of the claim, then? I don't understand what it's supposed to mean.

About the rest of your comment, I'm confused about why you're discussing what happens when both chess engines and humans have a lot of time to do something. For example, what's the point of this statement?

> My understanding is that it is not true that if you ran computers for a long time that they would beat the human also running for a long time, and that historically, it's been quite the opposite...

I don't understand how this statement is relevant to any claim I made in my comment. Humans beating computers at equal time control is perfectly consistent with the computers being slower than humans. If you took a human and slowed them down by a factor of 10, that's the same pattern you would see.

Are you instead trying to find examples of tasks where computers were beaten by humans when given a short time to do the task but could beat the humans when given a long time to do the task? That's a very different claim from "in every case where we've successfully gotten AI to do a task at all, AI has done that task far far faster than humans".

> Nobody said "we got AI to play Go" when AI Go engines were only amateur dan strength, even though they could have equally well said "we got AI to play Go at a superhuman level but it's just very slow".

I understand this as saying “If you take an AI Go engine from the pre-AlphaGo era, it was pretty bad in real time. But if you set the search depth to an extremely high value, it would be superhuman, it just might take a bajillion years per move. For that matter, in 1950, people had computers, and people knew how to do naive exhaustive tree search, so they could already make an algorithm that was superhuman at Go, it’s just that it would take like a googol years per move and require galactic-scale memory banks etc.”

Is that what you were trying to say? If not, can you rephrase?

And if humans spent a googol years planning their moves, those moves would still be better because their search scales better.

Yes, that's what I'm trying to say, though I think in actual practice the numbers you need would have been much smaller for the Go AIs I'm talking about than they would be for the naive tree search approach.

I'm interested in how much processing time Waymo requires. I.e., if I sped up the clock speed in a simulation such that things were happening much faster, how fast could we do that and still have it successfully handle the environment?

It's arguably a superhuman driver in some domains already (fewer accidents), but not in others (handling OOD road conditions).

If there are people who say “current AIs think many orders of magnitude faster than humans”, then I agree that those people are saying something kinda confused and incoherent, and I am happy that you are correcting them.

I don’t immediately recall hearing people say that, but sure, I believe you, people say all kinds of stupid things.

It’s just not a good comparison because current AIs are not doing the same thing that humans do when humans “think”. We don’t have real human-level AI, obviously. E.g. I can’t ask an LLM to go found a new company, give it some seed capital and an email account, and expect it to succeed. So basically, LLMs are doing Thing X at Speed A, and human brains are doing Thing Y at Speed B. I don’t know what it means to compare A and B.

There’s a different claim, “we will sooner or later have AIs that can think and act at least 1-2 orders of magnitude faster than a human”. I see that claim as probably true, although I obviously can’t prove it.

I agree that the calculation “1 GHz clock speed / 100 Hz neuron firing rate = 1e7” is not the right calculation (although it’s not entirely irrelevant). But I am pretty confident about the weaker claim of 1-2 OOM, given some time to optimize the (future) algorithms.

If there are people who say “current AIs think many orders of magnitude faster than humans”, then I agree that those people are saying something kinda confused and incoherent, and I am happy that you are correcting them.

Eliezer himself has said (e.g. in his 2010 debate with Robin Hanson) that one of the big reasons he thinks CPUs can beat brains is because CPUs run at 1 GHz while brains run at 1-100 Hz, and the only barrier is that the CPUs are currently running "spreadsheet algorithms" and not the algorithm used by the human brain. I can find the exact timestamp from the video of the debate if you're interested, but I'm surprised you've never heard this argument from anyone before.

There’s a different claim, “we will sooner or later have AIs that can think and act at least 1-2 orders of magnitude faster than a human”. I see that claim as probably true, although I obviously can’t prove it.

I think this claim is too ill-defined to be true, unfortunately, but insofar as it has the shape of something I think will be true it will be because of throughput or software progress and not because of latency.

I agree that the calculation “1 GHz clock speed / 100 Hz neuron firing rate = 1e7” is not the right calculation (although it’s not entirely irrelevant). But I am pretty confident about the weaker claim of 1-2 OOM, given some time to optimize the (future) algorithms.

If the claim here is that "for any task, there will be some AI system using some unspecified amount of inference compute that does the task 1-2 OOM faster than humans", I would probably agree with that claim. My point is that if this is true, it won't be because of the calculation “1 GHz clock speed / 100 Hz neuron firing rate = 1e7”, which as far as I can tell you seem to agree with.

Thanks. I’m not Eliezer so I’m not interested in litigating whether his precise words were justified or not. ¯\_(ツ)_/¯

I’m not sure we’re disagreeing about anything substantive here.

If the claim here is that "for any task, there will be some AI system using some unspecified amount of inference compute that does the task 1-2 OOM faster than humans", I would probably agree with that claim.

That’s probably not what I meant, but I guess it depends on what you mean by “task”.

For example, when a human is tasked with founding a startup company, they have to figure out, and do, a ton of different things, from figuring out what to sell and how, to deciding what subordinates to hire and when, to setting up an LLC and optimizing it for tax efficiency, to setting strategy, etc. etc.

One good human startup founder can do all those things. I am claiming that one AI can do all those things too, but at least 1-2 OOM faster, wherever those things are unconstrained by waiting-for-other-people etc.

For example: If the AI decides that it ought to understand something about corporate tax law, it can search through online resources and find the answer at least 10-100× faster than a human could (or maybe it would figure out that the answer is not online and that it needs to ask an expert for help, in which case it would find such an expert and email them, also 10-100× faster). If the AI decides that it ought to post a job ad, it can figure out where best to post it, and how to draft it to attract the right type of candidate, and then actually write it and post it, all 10-100× faster. If the AI decides that it ought to look through real estate listings to make a shortlist of potential office spaces, it can do it 10-100× faster. If the AI decides that it ought to redesign the software prototype in response to early feedback, it can do so 10-100× faster. If the AI isn’t sure what to do next, it figures it out, 10-100× faster. Etc. etc. Of course, the AI might use or create tools like calculators or spreadsheets or LLMs, just as a human might, when it’s useful to do those things. And the AI would do all those things really well, at least as well as the best remote-only human startup founder.

That’s what I have in mind, and that’s what I expect someday (definitely not yet! maybe not for decades!)

It is indeed tricky to measure this stuff.

E.g. I can’t ask an LLM to go found a new company, give it some seed capital and an email account, and expect it to succeed.

In general, would you expect a human to succeed under those conditions? I wouldn't, but then most of the humans I associate with on a regular basis aren't entrepreneurs.

There’s a different claim, “we will sooner or later have AIs that can think and act at least 1-2 orders of magnitude faster than a human”. I see that claim as probably true, although I obviously can’t prove it.

Without bounding what tasks we want the computer to perform faster than the person, one could argue that we've met that criterion for decades. Definitely a matter of "most people" and "most computers" for both, but there's a lot of math that a majority of humans can't do quickly or can't do at all, whereas it's trivial for most computers.

Deep learning uses global backprop. One of Hinton's core freakout moments early last year about capabilities advancing faster than expected was when he proposed to himself that the reason nobody has been able to beat backprop with brainlike training algorithms is that backprop is actually better able to pack information into weights.

my point being, deep learning may be faster per op than humans, as a result of a distinctly better training algo.

The clock speed of a GPU is indeed meaningful: there is a clock inside the GPU that provides some signal that's periodic at a frequency of ~ 1 GHz. However, the corresponding period of ~ 1 nanosecond does not correspond to the timescale of any useful computations done by the GPU.

True, but isn't this almost exactly analogously true for neuron firing speeds? The corresponding period for neurons (10 ms - 1 s) does not generally correspond to the timescale of any useful cognitive work or computation done by the brain.

The human brain is estimated to do the computational equivalent of around 1e15 FLOP/s.

"Computational equivalence" here seems pretty fraught as an analogy, perhaps more so than the clock speed <-> neuron firing speed analogy.

In the context of digital circuits, FLOP/s is a measure of an outward-facing performance characteristic of a system or component: a chip that can do 1 million FLOP/s means that every second it can take 2 million floats as input, perform some arithmetic operation on them (pairwise) and return 1 million results.

(Whether the "arithmetic operations" are FP64 multiplication or FP8 addition will of course have a big effect on the top-level number you can report in your datasheet or marketing material, but a good benchmark suite will give you detailed breakdowns for each type.)

But even the top-line number is (at least theoretically) a very concrete measure of something that you can actually get out of the system. In contrast, when used in "computational equivalence" estimates of the brain, FLOP/s are (somewhat dubiously, IMO) repurposed as a measure of what the system is doing internally.

So even if the 1e15 "computational equivalence" number is right, AND all of that computation is irreducibly a part of the high-level cognitive algorithm that the brain is carrying out, all that means is that it necessarily takes at least 1e15 FLOP/s to run or simulate a brain at neuron-level fidelity. It doesn't mean that you can't get the same high-level outputs of that brain through some other much more computationally efficient process.

(Note that "more efficient process" need not be high-level algorithms improvements that look radically different from the original brain-based computation; the efficiencies could come entirely from low-level optimizations such as not running parts of the simulation that won't affect the final output, or running them at lower precision, or with caching, etc.)

Separately, I think your sequential tokens per second calculation actually does show that LLMs are already "thinking" (in some sense) several OOM faster than humans? 50 tokens/sec is about 5 lines of code per second, or 18,000 lines of code per hour. Setting aside quality, that's easily 100x more than the average human developer can usually write (unassisted) in an hour, unless they're writing something very boilerplate or greenfield.

(The comparison gets even more stark when you consider longer timelines, since an LLM can generate code 24/7 without getting tired: 18,000 lines / hr is ~150 million lines in a year.)
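The arithmetic in the two paragraphs above can be checked with a few lines of Python. This is a sketch: the ~10 tokens per line of code is implied by the comment's "50 tokens/sec is about 5 lines of code per second" rather than measured, and the yearly figure assumes zero downtime over a 365-day year.

```python
# Back-of-envelope check of the token-throughput arithmetic above.
# Assumption (implied by the comment, not measured): ~10 tokens per line of code.
TOKENS_PER_SECOND = 50
TOKENS_PER_LINE = 10

lines_per_second = TOKENS_PER_SECOND / TOKENS_PER_LINE
lines_per_hour = lines_per_second * 3600
# Generating 24/7 for a non-leap year, with no downtime at all.
lines_per_year = lines_per_hour * 24 * 365

print(f"{lines_per_second:.0f} lines/s")
print(f"{lines_per_hour:,.0f} lines/hour")
print(f"{lines_per_year / 1e6:.0f} million lines/year")
```

Exact multiplication gives about 158 million lines per year, which the comment rounds down to ~150 million.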

The main issue with current LLMs (which somewhat invalidates this whole comparison) is that they can pretty much only generate boilerplate or greenfield stuff. Generating large volumes of mostly-useless / probably-nonsense boilerplate quickly doesn't necessarily correspond to "thinking faster" than humans, but that's mostly because current LLMs are only barely doing anything that can rightfully be called thinking in the first place.

So I agree with you that the claim that current AIs are thinking faster than humans is somewhat fraught. However, I think there are multiple strong reasons to expect that future AIs will think much faster than humans, and the clock speed <-> neuron firing analogy is one of them.

True, but isn't this almost exactly analogously true for neuron firing speeds? The corresponding period for neurons (10 ms - 1 s) does not generally correspond to the timescale of any useful cognitive work or computation done by the brain.

Yes, which is why you should not be using that metric in the first place.

But even the top-line number is (at least theoretically) a very concrete measure of something that you can actually get out of the system. In contrast, when used in "computational equivalence" estimates of the brain, FLOP/s are (somewhat dubiously, IMO) repurposed as a measure of what the system is doing internally.

Will you still be saying this if future neural networks are running on specialized hardware that, much like the brain, can only execute forward or backward passes of a particular network architecture? I think talking about FLOP/s in this setting makes a lot of sense, because we know the capabilities of neural networks are closely linked to how much training and inference compute they use, but maybe you see some problem with this also?

So even if the 1e15 "computational equivalence" number is right, AND all of that computation is irreducibly a part of the high-level cognitive algorithm that the brain is carrying out, all that means is that it necessarily takes at least 1e15 FLOP/s to run or simulate a brain at neuron-level fidelity. It doesn't mean that you can't get the same high-level outputs of that brain through some other much more computationally efficient process.

I agree, but even if we think future software progress will enable us to get a GPT-4 level model with 10x smaller inference compute, it still makes sense to care about what inference with GPT-4 costs today. The same is true of the brain.

Separately, I think your sequential tokens per second calculation actually does show that LLMs are already "thinking" (in some sense) several OOM faster than humans? 50 tokens/sec is about 5 lines of code per second, or 18,000 lines of code per hour. Setting aside quality, that's easily 100x more than the average human developer can usually write (unassisted) in an hour, unless they're writing something very boilerplate or greenfield.

Yes, but they are not thinking 7 OOM faster. My claim is not that AIs can't think faster than humans; indeed, I think they can. However, current AIs are not thinking faster than humans when you take into account the "quality" of the thinking as well as the rate at which it happens, which is why I think FLOP/s is a more useful measure here than token latency. GPT-4 has higher token latency than GPT-3.5, but I think it's fair to say that GPT-4 is the model that "thinks faster" when asked to accomplish some nontrivial cognitive task.

The main issue with current LLMs (which somewhat invalidates this whole comparison) is that they can pretty much only generate boilerplate or greenfield stuff. Generating large volumes of mostly-useless / probably-nonsense boilerplate quickly doesn't necessarily correspond to "thinking faster" than humans, but that's mostly because current LLMs are only barely doing anything that can rightfully be called thinking in the first place.

Exactly, and the empirical trend is that there is a quality-token latency tradeoff: if you want to generate tokens at random, it's very easy to do that at extremely high speed. As you increase your demands on the quality you want these tokens to have, you must take more time per token to generate them. So it's not fair to compare a model like GPT-4 to the human brain on grounds of "token latency": I maintain that throughput comparisons (training compute and inference compute) are going to be more informative in general, though software differences between ML models and the brain can still make it not straightforward to interpret those comparisons.

True, but isn't this almost exactly analogously true for neuron firing speeds? The corresponding period for neurons (10 ms - 1 s) does not generally correspond to the timescale of any useful cognitive work or computation done by the brain.

Yes, which is why you should not be using that metric in the first place.

Well, clock speed is a pretty fundamental parameter in digital circuit design. For a fixed circuit, running it at a 1000x slower clock frequency means an exactly 1000x slowdown. (Real integrated circuits are usually designed to operate in a specific clock frequency range that's not that wide, but in theory you could scale any chip design running at 1 GHz to run at 1 kHz or even lower pretty easily, on a much lower power budget.)

Clock speeds between different chips aren't directly comparable, since architecture and various kinds of parallelism matter too, but clock speed is still a good indicator of what kind of regime you're in, e.g. high-powered / actively-cooled datacenter vs. some ultra-low-power embedded microcontroller.

Another way of looking at it is power density: below ~5 GHz or so (where integrated circuits start to run into fundamental physical limits), there's a pretty direct tradeoff between power consumption and clock speed.

A modern high-end IC (e.g. a desktop CPU) has a power density on the order of 100 W / cm^2. This is over a tiny thickness; assuming 1 mm, you get a 3-D power dissipation of 1000 W / cm^3 for a CPU, vs. human brains that dissipate ~10 W / 1000 cm^3 = 0.01 W / cm^3.
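A minimal sketch of the same BOTEC, using only the comment's rough inputs (100 W/cm^2, an assumed 1 mm active thickness, ~10 W over ~1000 cm^3 for the brain). It just makes the unit conversion explicit and shows the gap comes out to about five orders of magnitude.

```python
import math

# Inputs are the comment's rough figures, not measurements.
CPU_AREAL_W_PER_CM2 = 100.0   # high-end desktop CPU
CPU_THICKNESS_MM = 1.0        # assumed active thickness
BRAIN_POWER_W = 10.0
BRAIN_VOLUME_CM3 = 1000.0

# W/cm^2 divided by thickness in cm gives W/cm^3; 1 mm = 0.1 cm, hence "* 10 / mm".
cpu_w_per_cm3 = CPU_AREAL_W_PER_CM2 * 10 / CPU_THICKNESS_MM
brain_w_per_cm3 = BRAIN_POWER_W / BRAIN_VOLUME_CM3
ratio = cpu_w_per_cm3 / brain_w_per_cm3

print(f"CPU:   {cpu_w_per_cm3:g} W/cm^3")
print(f"Brain: {brain_w_per_cm3:g} W/cm^3")
print(f"Headroom: ~{math.log10(ratio):.0f} orders of magnitude")
```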

The point of this BOTEC is that there are several orders of magnitude of "headroom" available to run whatever computation the brain is performing at a much higher power density, which, all else being equal, usually implies a massive serial speedup (because the way you take advantage of higher power densities in IC design is usually by simply cranking up the clock speed, at least until that starts to cause issues and you have to resort to other tricks like parallelism and speculative execution).

The fact that ICs are bumping into fundamental physical limits on clock speed suggests that they are already much closer to the theoretical maximum power densities permitted by physics, at least for silicon-based computing. This further implies that, if and when someone does figure out how to run the actual brain computations that matter in silicon, they will be able to run those computations at many OOM higher power densities (and thus OOM higher serial speeds, by default) pretty easily, since biological brains are very very far from any kind of fundamental limit on power density. I think the clock speed <-> neuron firing speed analogy is a good way of summarizing this whole chain of inference.

Will you still be saying this if future neural networks are running on specialized hardware that, much like the brain, can only execute forward or backward passes of a particular network architecture? I think talking about FLOP/s in this setting makes a lot of sense, because we know the capabilities of neural networks are closely linked to how much training and inference compute they use, but maybe you see some problem with this also?

I think energy and power consumption are the safest and most rigorous way to compare and bound the amount of computation that AIs are doing vs. humans. (This unfortunately implies a pretty strict upper bound, since we have several billion existence proofs that ~20 W is more than sufficient for lethally powerful cognition at runtime, at least once you've invested enough energy in the training process.)

current AIs are not thinking faster than humans [...] GPT-4 has higher token latency than GPT-3.5, but I think it's fair to say that GPT-4 is the model that "thinks faster"

This notion of thinking speed depends on the difficulty of a task. If one of the systems can't solve a problem at all, it's neither faster nor slower. If both systems can solve a problem, we can compare the time they take. In that sense, current LLMs are 1-2 OOMs faster than humans at the tasks both can solve, and much cheaper.

Old chess AIs were slower than humans who were good at chess. If future AIs can take advantage of search to improve quality, they might again get slower than humans at sufficiently difficult tasks, while simultaneously being faster than humans at easier tasks.

Sure, but in that case I would not say the AI thinks faster than humans, I would say the AI is faster than humans at a specific range of tasks where the AI can do those tasks in a "reasonable" amount of time.

As I've said elsewhere, there is a quality or breadth vs serial speed tradeoff in ML systems: a system that only does one narrow and simple task can do that task at a high serial speed, but as you make systems more general and get them to handle more complex tasks, serial speed tends to fall. The same logic that people are using to claim GPT-4 thinks faster than humans should also lead them to think a calculator thinks faster than GPT-4, which is an unproductive way to use the one-dimensional abstraction of "thinking faster vs. slower".

You might ask "Well, why use that abstraction at all? Why not talk about how fast the AIs can do specific tasks instead of trying to come up with some general notion of if their thinking is faster or slower?" I think a big reason is that people typically claim the faster "cognitive speed" of AIs can have impacts such as "accelerating the pace of history", and I'm trying to argue that the case for such an effect is not as trivial to make as some people seem to think.

This notion of thinking speed makes sense for large classes of tasks, not just specific tasks. And a natural class of tasks to focus on is the harder tasks among all the tasks both systems can solve.

So in this sense a calculator is indeed much faster than GPT-4, and GPT-4 is 2 OOMs faster than humans. An autonomous research AGI is capable of autonomous research, so its speed can be compared to humans at that class of tasks.

AI accelerates the pace of history only when it's capable of making the same kind of progress as humans in advancing history, at which point we need to compare their speed to that of humans at that activity (class of tasks). Currently AIs are not capable of that at all. If hypothetically 1e28 training FLOPs LLMs become capable of autonomous research (with scaffolding that doesn't incur too much latency overhead), we can expect that they'll be 1-2 OOMs faster than humans, because we know how they work. Thus it makes sense to claim that 1e28 FLOPs LLMs will accelerate history if they can do research autonomously. If AIs need to rely on extensive search on top of LLMs to get there, or if they can't do it at all, we can instead predict that they don't accelerate history, again based on what we know of how they work.

Well said. For a serious attempt to estimate how fast AGIs will think relative to humans, see here. Conclusion below:

- After staring at lots of data about trends in model size, GPU architecture, etc. for 10+ hours, I mostly concluded that I am very uncertain about how the competing trends of larger models vs. better hardware and software will play out. My median guess would be that we get models that are noticeably faster than humans (5x), but I wouldn’t be surprised by anything from 2x slower to 20x faster.

- Importantly, these speeds are only if we demand maximum throughput from the GPUs. If we are willing to sacrifice throughput by a factor of k, we can speed up inference by a factor of k^2, up to fairly large values of k. So if models are only 5x faster than humans by default, they could instead be 125x faster in exchange for a 5x reduction in throughput, and this could be pushed further still if necessary.
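The quoted tradeoff is easy to tabulate. The sketch below simply encodes the claimed scaling (serial speed grows as k^2 when throughput falls by k, starting from a 5x default) and reproduces the 125x figure at k = 5; it says nothing about where the scaling breaks down, which the quote only bounds as "fairly large values of k".

```python
def tradeoff(base_speedup: float, k: float) -> tuple[float, float]:
    """Apply the quoted rule of thumb: give up a factor k of throughput,
    gain a factor k**2 of serial speed.

    Returns (serial speed relative to humans, fraction of default throughput kept).
    """
    return base_speedup * k**2, 1.0 / k

for k in (1, 2, 5, 10):
    speed, throughput = tradeoff(5.0, k)
    print(f"k={k:>2}: {speed:>6.0f}x human speed, {throughput:.0%} of default throughput")
```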

My own opinion is that the second bullet point is a HUGE FUCKING DEAL, very big if true. Because it basically means that for the tasks that matter most during an intelligence explosion -- tasks that are most bottlenecked by serial thought, for example -- AGIs will be thinking 2+ OOMs faster than humans.

I would love to see other people investigate and critically evaluate these claims.

Daniel, you model near-future intelligence explosions. The simple reason these probably cannot happen is that when you have a multiple-stage process, the slow step always wins. For an explosion there are 4 ingredients, not 1: (algorithm, compute, data, robotics). Robotics is necessary or the explosion halts once available human labor is utilized.

Summary: if you make AI smarter with recursion, you will be limited by (silicon, data, or robotics), and the process cannot run faster than the slowest step.
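A toy version of the "slowest step wins" argument: treat each ingredient as a stage that can support some maximum rate of progress, and the whole chain runs at the minimum of those rates. The stage names come from the comment; the numeric rates are hypothetical placeholders, not estimates.

```python
def pipeline_rate(stage_rates: dict[str, float]) -> tuple[str, float]:
    """Return the bottleneck stage and the overall rate it permits."""
    bottleneck = min(stage_rates, key=stage_rates.get)
    return bottleneck, stage_rates[bottleneck]

# Hypothetical relative rates of progress each stage could sustain.
rates = {"algorithm": 100.0, "compute": 3.0, "data": 5.0, "robotics": 1.5}
stage, rate = pipeline_rate(rates)
print(f"Bottleneck: {stage}, overall rate {rate}x")

# Making the algorithm stage another 10x faster changes nothing overall.
rates["algorithm"] *= 10
assert pipeline_rate(rates) == (stage, rate)
```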

(1) you have the maximum throughput and serial speed achievable with your hardware. Suppose a human brain is (86B * 1000 * 1000) / 10 arbitrarily sparse 8-bit flops.

Please notice the keyword "arbitrarily sparse". That means GPUs several years from now, whenever Nvidia gets around to supporting this; otherwise you need a lot more compute. Notice the division by 10: I am assuming GPUs are 10 times better than meatware (less noisy, fewer neurons failing to fire).

But just ignoring the sparsity (and VRAM and utilization) issues for some numbers, 2 million H100s are projected to ship in 2024, so if you had full GPU utilization that's 43 cards per "human equivalent" at inference time.

The bottom line is that the "ecosystem" of compute can support a finite number of human equivalents: 46,511 humans if you use all the hardware.

If you run the hardware faster, you lose throughput. If we take your estimate as correct (note that past a certain point you will need custom hardware; GPUs will no longer work), then that's like adding 74 new humans who think 125 times faster and can do the work of 9,302 humans, but serially fast and 24/7.

You probably should estimate and plot how much new AI silicon production can be added with each year after 2024.

Assume a dominant AI lab buys up 15 percent of worldwide production, as Meta says it will do this year. That's your roofline. Also remember that most of the hardware is going to be serving customers rather than being used for R&D.

So if 50 percent of the hardware is serving other projects or customers, and we have 15 percent of worldwide production, then we now have 697 new humans in throughput per hour, over 5 serial threads, though you would obviously assign more than 5 tasks, and context switch between them.
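The chain of numbers above can be reproduced as follows. Every input is the comment's own assumption (2 million H100s, 43 cards per human equivalent, a k = 5 throughput sacrifice buying a 125x serial speedup, a 15% production share, and half of that serving customers), and the printed figures are floored as in the comment.

```python
# All inputs are the comment's assumptions, not independent estimates.
H100S_2024 = 2_000_000
CARDS_PER_HUMAN = 43
K = 5                 # factor of throughput sacrificed for serial speed
SERIAL_SPEEDUP = 125  # each fast "thread" does the work of 125 humans serially
LAB_SHARE = 0.15      # one lab's share of worldwide production
RND_FRACTION = 0.5    # the rest serves customers

humans = H100S_2024 / CARDS_PER_HUMAN       # human equivalents at full utilization
fast_work = humans / K                      # human-equivalents of work after the sacrifice
fast_threads = fast_work / SERIAL_SPEEDUP   # serial minds running 125x faster
lab_work = fast_work * LAB_SHARE * RND_FRACTION
lab_threads = fast_threads * LAB_SHARE * RND_FRACTION

print(f"{int(humans):,} human equivalents")
print(f"{int(fast_work):,} human-equivalents of work as {int(fast_threads)} fast threads")
print(f"One lab's R&D share: {int(lab_work)} human-equivalents, {int(lab_threads)} serial threads")
```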

Probably 2025 has 50 percent more AI accelerators built than 2024 and so on, so I suggest you add this factor to your world modeling. This is extremely meaningful to timelines.

(2) once serial speed is no longer a bottleneck, any recursive improvement process bottlenecks on the evaluation system. For a simple example, once you achieve the highest possible average score on a suite of tests, no further improvement will happen. Past a certain level of intelligence, you would assume the AI system will just bottleneck on human-written evals, learning at the fastest rate that doesn't overfit. Yes, you could move to AI-written evals, but how do humans tell if the eval is optimizing for a useful metric?

(3) once an AI system is maxed out, bottlenecked on compute or data, it may be many times smarter than humans but still limited by real-world robotics or data. I gave a mock example of an engine design task in the comments here, and the speedup is 50 times, not 1 million times, because the real world has steps limited by physics. This is relevant for modeling an "explosion", and it's why, once AGI is achieved in a few years, it probably won't immediately change everything: there aren't enough robots.

I am well aware of Amdahl's law. By intelligence explosion I mean the period where progress in AI R&D capabilities is at least 10x faster than it is now; it need not be infinitely faster. I agree it'll be bottlenecked on the slowest component, but according to my best estimates and guesses the overall speed will still be >10x what it is today.

I also agree that there aren't enough robots; I expect a period of possibly several years between ASI and the surface of the earth being covered in robots.

intelligence explosion I mean the period where progress in AI R&D capabilities is at least 10x faster than it is now; it need not be infinitely faster

Well a common view is that AI now is only possible with the compute levels available recently. This would mean it's already compute limited.

Say for the sake of engagement that GPTs are inefficient by 10 times vs the above estimate, that "AI progress" is leading to more capabilities on equal compute, and other progress is leading to more capabilities with more compute.

So this scenario needs 8 H100s per robot. You want to "cover the earth". 8 billion people don't cover the earth; let's assume that 800 billion robots will.

Well how fast will progress happen? Let's assume we started with a baseline of 2 million H100s in 2024, and each year after we add 50 percent more production rate to the year prior, and every 2.5 years we double the performance per unit, then in 94 years we will have built enough H100s to cover the earth with robots.

How long do you think it requires for a factory to build the components used in itself, including the workers? That would be another way to calculate this. If it's 2 years, and we started with 250k capable robots (because of H100 shortage) then it would take 43 years for 800 billion. 22 years if it drops to 1 year.
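The doubling arithmetic, as a sketch that assumes uninterrupted exponential growth from 250k robots to 800 billion:

```python
import math

def years_to_target(start: float, target: float, doubling_years: float) -> float:
    """Years of uninterrupted doubling needed to grow from start to target."""
    return math.log2(target / start) * doubling_years

START, TARGET = 250_000, 800e9
print(f"{math.log2(TARGET / START):.1f} doublings needed")
for d in (2.0, 1.0):
    print(f"doubling every {d:g} yr: {years_to_target(START, TARGET, d):.0f} years")
```

About 21.6 doublings are needed, which gives the 43-year and 22-year figures.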

People do bring up biological doubling times but neglect the surrounding ecosystem. When we say "double a robot" we don't mean there's a table of robot parts and one robot assembles another. That would take a few hours max. It means building all the parts, mining the materials, and also doubling all the machinery used to make everything: double the factories, double the mines, double the power generators.

You can use China's rate of industrial expansion as a proxy for this. At 15 percent, that's a doubling time of 5 years. So if robots double in 2 years, they are more than twice as efficient as China, and remember China got some one-time bonuses from a large existing population and natural resources like large untapped rivers.
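A small sketch of the conversion between an annual growth rate and a doubling time, assuming steady compounding. It confirms that 15% a year doubles in just under 5 years, and that a 2-year doubling corresponds to roughly 41% annual growth, about 2.5x China's pace.

```python
import math

def doubling_time(annual_growth: float) -> float:
    """Years to double at a constant, compounded annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)

def growth_rate(doubling_years: float) -> float:
    """Annual growth rate implied by a given doubling time."""
    return 2 ** (1 / doubling_years) - 1

china = doubling_time(0.15)
print(f"15%/yr -> doubling every {china:.2f} years")              # 4.96
print(f"2-year doubling -> {growth_rate(2.0):.1%} annual growth")  # 41.4%
print(f"speed ratio vs. China: {china / 2.0:.1f}x")                # 2.5x
```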

Cellular self-replication uses surrounding materials conveniently dissolved in solution; lichen, for instance, adds only a few mm per year.

"AI progress" is then time bound to the production of compute and robots. You make AI progress, once you collect some "1 time" low hanging fruit bonus, at a rate equal to the log(available compute). This is compute you can spare not tied up in robots.

What log base do you assume? What's the log base for compute vs. capabilities measured at now? Is a 1-2 year doubling time reasonable, or do you have some data that would support a faster rate of growth? What do you think the "low hanging fruit" bonus is? I assumed 10x above because smaller models currently seem to have to throw away key capabilities to Goodhart benchmarks.

Numbers and the quality of the justification really really matter here. The above says that we shouldn't be worried about foom, but of course good estimates for the parameters would convince me otherwise.

The above does not say that we shouldn't be worried about foom. Foom happens inside a lab with a fixed amount of compute; then, the ASIs in the lab direct the creation of more fabs, factories, wetlabs, etc. until the economy is transformed. Indeed the second stage of this process might take several years. I expect it to go at maybe about twice the speed of China initially but to accelerate rather than slow down.

I expect it to go at maybe about twice the speed of China initially

OK, this sounds like a fair estimate: a 30% annual growth rate, or a doubling time of about 2.4 years. I estimated 2 years, which would be faster growth. One concrete reason to think this is a fair estimate is that AI-controlled robots will work 24 hours a day. China's brutal 996 work schedule, which is 72 hours a week, is still only a 43% duty cycle; a robot can work at a duty cycle of at least 95% (the other 5% is swapping parts as parts of the robot fail).
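A quick check of the duty-cycle figures (72 of 168 hours per week for "996", and the 95% robot duty cycle assumed above). Exact compounding puts the doubling time at 30% growth closer to 2.6 years; the 2.4-year figure is near the rule-of-70 shortcut (70 / 30 ≈ 2.3).

```python
import math

HOURS_PER_WEEK = 7 * 24
human_duty = 72 / HOURS_PER_WEEK  # "996": 9am to 9pm, 6 days a week
robot_duty = 0.95                 # assumption above: 5% downtime for part swaps

print(f"996 duty cycle: {human_duty:.0%}")                          # 43%
print(f"robot/human hours ratio: {robot_duty / human_duty:.1f}x")   # 2.2x

t = math.log(2) / math.log(1.30)  # exact compounding at 30%/yr
print(f"30%/yr -> doubling every {t:.2f} years")                   # 2.64
```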

So trying to engage with the 'foom' idea, you develop in the lab some 'ASI'. That ASI is 'directing' these things? Why? Why do humans trust the outputs?

I was thinking the 'foom' idea was OK: the ASI robs, lies, steals, and cons. It finds some way to scam humans into doing work for it. And OK, every 2.4 years its resources double. (There might be some early low-hanging fruit; for example, people have suggested an ASI might be able to extract money from financial markets with better trades. This will saturate.)

Meanwhile, other humans use the same technology that ASI unlocks and make controlled ASI: models you prompt without context or memory, and weaker or distilled ASI models that are specialized. Then they simultaneously, under human direction, invest hundreds of billions of dollars into robot factories and double the equipment available to humans. Say the humans are less efficient; well, how much less efficient? Is their doubling time 3 years? 5?

There is a set of numbers where the ASI wins, but so long as the humans' starting point is a large enough resource multiplier, and the humans eventually notice the actions of the rebel ASI, it's usually going to be a human victory. For example:

ASI steals 1 billion dollars and covertly starts doubling. Doubling time is 2 years; 10 years later, it has 32 billion in resources.

Humans do some funding rounds and get 100 billion dollars and legally start doubling. Doubling time is 4 years. 10 years later, they have 400 billion in resources.
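The two trajectories above can be sketched with a hypothetical `resources` helper. Counting only completed doublings (five for the ASI, two for the humans by year 10) reproduces the 32 and 400 billion figures; with smooth compounding the human side would be about 566 billion, so the gap only widens.

```python
def resources(start: float, doubling_years: float, years: float, discrete: bool = True) -> float:
    """Resources after `years`, doubling every `doubling_years`.

    discrete=True counts only completed doublings (matches the figures above);
    discrete=False uses smooth, continuous compounding.
    """
    n = years // doubling_years if discrete else years / doubling_years
    return start * 2 ** n

asi = resources(1.0, 2.0, 10.0)       # billions of dollars
humans = resources(100.0, 4.0, 10.0)
print(f"ASI: {asi:.0f}B, humans: {humans:.0f}B")                            # ASI: 32B, humans: 400B
print(f"humans, smooth: {resources(100.0, 4.0, 10.0, discrete=False):.0f}B")  # 566B
```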

Humans spot the unregistered factories belonging to the ASI via IR or serendipity. The first round of AI wars starts...

ASI needs a pretty large utility modifier to win with such a resource disparity, especially as humans can keep feeding the same context for each battle to the ASI models the humans have, stripping off the context bits that let the ASI know when it's being Ender's Gamed*, and getting solutions to the tactical scenarios.

I was understanding that 'foom' meant somewhat more science fiction takeoff speeds, such as doubling times of a week. That would be an issue if humans do not also have the ability to order their resources doubled on a weekly basis.
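To see why weekly doubling changes the picture, here is a small Python calculation of how many weeks a weekly-doubling side needs to erase a starting disparity. It assumes the 100x gap from the example above ($100B vs $1B) and approximates a 4-year human doubling time as 208 weeks; both are illustrative inputs.

```python
import math

def weeks_to_overtake(ratio: float, asi_doubling_weeks: float, human_doubling_weeks: float) -> float:
    """Weeks until a side doubling every `asi_doubling_weeks` overtakes one that starts
    `ratio` times larger but doubles every `human_doubling_weeks`."""
    # Solve 2**(t/a) == ratio * 2**(t/h) for t.
    rate_gap = 1 / asi_doubling_weeks - 1 / human_doubling_weeks
    if rate_gap <= 0:
        return math.inf  # equal or slower doubling: the ASI never closes the gap
    return math.log2(ratio) / rate_gap

print(f"{weeks_to_overtake(100, 1, 208):.1f} weeks")  # ASI weekly, humans every ~4 years
print(weeks_to_overtake(100, 1, 1))                   # both sides double weekly
```

A 100x head start disappears in under 7 weeks against a 4-year doubling time. If humans can also double weekly, the ratio never changes, which is the point of the paragraph above.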

*C'mon, give me credit for this turn of phrase.

Throughput doesn't straightforwardly accelerate history; serial speedup does. At a serial speedup of 10x-100x, decades pass in a year. If an autonomous researcher AGI develops better speculative decoding and other improvements during this time, the speedup quickly increases once the process starts, though it might still remain modest without changing hardware or positing superintelligence: only centuries a year or something.

For a neurons-to-transistors comparison, probably both hardware and algorithms would need to change to make this useful, but the critical path length of transformers is quite low: multiplying two NxN matrices needs only on the order of log(N) sequential operations, given enough parallel hardware. This isn't visibly relevant with modern hardware, but the laws of physics seem to allow hardware that makes neural nets massively faster; a millionfold speedup of human-level thought is probably feasible. This is a long term project for those future centuries that happen in a year.
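The log(N) critical-path claim can be illustrated by counting sequential rounds. This Python sketch assumes unlimited parallel multipliers and adders and ignores wire delay and memory, so it measures dependency depth only. Every product in C = AB is independent (one round), and each output sums N products with a pairwise tree.

```python
def tree_sum_depth(values):
    """Sum `values` by pairwise (tree) reduction; return (total, parallel_rounds)."""
    rounds = 0
    while len(values) > 1:
        # All pairs in a round are independent, so one round is one sequential step.
        values = [values[i] + values[i + 1] if i + 1 < len(values) else values[i]
                  for i in range(0, len(values), 2)]
        rounds += 1
    return values[0], rounds

def matmul_critical_path(n: int) -> int:
    """Sequential steps to multiply two n x n matrices with unlimited parallel hardware:
    one round of independent multiplies, then a tree reduction of n products per output."""
    _, add_rounds = tree_sum_depth([1.0] * n)
    return 1 + add_rounds

for n in (1024, 12288):  # example sizes only
    print(n, matmul_critical_path(n), "sequential steps vs", n ** 3, "total multiplies")
```

For N = 1024 the depth is 11 steps against about a billion multiplies, which is why the total work, not the serial depth, dominates on today's hardware.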

This is a long term project for those future centuries that happen in a year.

Unless the project is completely simulable (for example, Go or chess), you're rate-limited by the slowest serial steps. This is just Amdahl's law.
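Amdahl's law makes the ceiling explicit. This is a minimal Python sketch with hypothetical numbers: suppose thinking and design are 95% of a project's original wall-clock time and get a millionfold speedup, while the remaining 5% is physical build-and-test that can't be sped up.

```python
def amdahl_speedup(serial_fraction: float, accelerated_speedup: float) -> float:
    """Overall speedup when only the non-serial fraction of the work is accelerated."""
    return 1 / (serial_fraction + (1 - serial_fraction) / accelerated_speedup)

# Hypothetical split: 5% of the original time is un-accelerable physical work.
print(f"{amdahl_speedup(0.05, 1e6):.1f}x overall")
print(f"{1 / 0.05:.0f}x upper bound, no matter how fast the thinking gets")
```

However large the thinking speedup, the overall speedup can't exceed 1 divided by the serial fraction.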

I mean, absolutely this will help, and you can also build your prototypes or test the resulting product in parallel, but the serial time for a single test becomes the limit for the entire system.

For example, if the product is a better engine, you evaluate all the data humans have ever recorded on engines, build an engine sim, and test many possibilities. But there is residual uncertainty in any sim, and resolving it takes thousands of experiments. Even if you can run all the experiments in parallel, you still have to wait as long as a single experiment takes.

Accelerated lifecycle testing is where you compress several years of use into a few weeks of testing. You do this by elevating the operating temperature and running the device under extreme load for the test period.
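The temperature part of accelerated lifecycle testing is commonly modeled with the Arrhenius equation. This is a sketch under assumed values: the activation energy (0.7 eV), use temperature (55 C) and stress temperature (125 C) are illustrative and not from the text. It also ignores the load-acceleration part, which needs other models.

```python
import math

BOLTZMANN_EV_PER_K = 8.617e-5  # Boltzmann constant in eV/K

def arrhenius_acceleration(ea_ev: float, t_use_c: float, t_stress_c: float) -> float:
    """Arrhenius acceleration factor between a use and a stress temperature (deg C)."""
    t_use = t_use_c + 273.15
    t_stress = t_stress_c + 273.15
    return math.exp(ea_ev / BOLTZMANN_EV_PER_K * (1 / t_use - 1 / t_stress))

# Assumed values for illustration only.
af = arrhenius_acceleration(ea_ev=0.7, t_use_c=55, t_stress_c=125)
test_weeks = 4
field_years = test_weeks * 7 * af / 365  # equivalent years of use at 55 C

print(f"acceleration factor ~{af:.0f}x")
print(f"{test_weeks} weeks of testing ~ {field_years:.1f} years of use")
```

Under these assumptions 4 weeks of stress testing stands in for roughly 6 years of use, which matches the "several years in a few weeks" scale described above.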

So suppose you do "1 decade" of engine design in 1 day and come up with 1000 candidate designs, then manufacture all 1000 in parallel over 1 month (casting and machining have slow steps), then spend 1 more month on accelerated lifecycle testing.

Suppose you need to do this iteration loop 10 times to get to "rock solid" designs better than the ones currently used. Then it takes you about 20 months, vs 100 years for humans to do it.

A roughly 60x speedup is enormous, but it's not millions. I think a similar argument applies to most practical tasks. Note that tasks like "design a better aircraft" have the same requirement for testing, and "design better medicine" crucially does.
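The engine example's arithmetic as a small Python sketch. It follows the text in counting the human baseline as 10 iterations of one decade of design each, and uses an assumed average month of 30.4 days to convert the 1-day design step.

```python
DAYS_PER_MONTH = 30.4  # assumed average month length

def ai_loop_months(iterations: int, design_days: float = 1,
                   build_months: float = 1, test_months: float = 1) -> float:
    """Wall-clock months for the AI loop: fast design, then slow physical build and test."""
    return iterations * (design_days / DAYS_PER_MONTH + build_months + test_months)

def human_loop_months(iterations: int, design_years: float = 10) -> float:
    """Human baseline as counted in the text: one decade of design per iteration."""
    return iterations * design_years * 12

ai = ai_loop_months(10)
human = human_loop_months(10)
print(f"AI loop: {ai:.1f} months, humans: {human / 12:.0f} years, speedup: {human / ai:.0f}x")
```

Almost all of the AI's 20 months is manufacturing and testing; the design days contribute about a third of a month in total.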

One obvious task for AI R&D, designing a better AI processor, is a pretty extreme example: current silicon fabrication processes take months of machine time, so you must wait months between iteration cycles.

Also note you need enough robotic equipment to do the above. Since robots can build robots, that's not going to take long, but you have several years or so of "latency" to get enough robots.
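The "latency" of a self-replicating robot fleet is just the number of doublings times the doubling time. The text doesn't give a fleet size or doubling time, so every number in this Python sketch is hypothetical.

```python
import math

def years_to_scale(start_units: float, target_units: float, doubling_years: float) -> float:
    """Years for a self-replicating fleet to grow from start_units to target_units."""
    return doubling_years * math.log2(target_units / start_units)

# Hypothetical: 10,000 initial robots, 1,000,000 needed, fleet doubles once a year.
print(f"{years_to_scale(1e4, 1e6, 1.0):.1f} years")
```

Each 100x of scale costs about 6.6 doubling times, so the latency depends mostly on how fast robots can build robots.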

Any testing can be done in simulation, as long as you have a simulator and it's good enough. A speedup of a few hundred times in thinking allows very quickly writing very good specialized software for learning and for simulating all the relevant things, based on substantially better theory. The speed of simulation might be a problem, and physical experiments are probably needed to train the simulation models (but not to directly debug object-level engineering artifacts).

Still, in the physical world, the activity of an unfettered 300x-speed human-level AGI probably looks like building tools for building tools, on the first try and without scaling production, rather than cycles of experiments, reevaluation and productization. I suspect macroscopic biotech might be a good target. It's obviously possible (as in animals) and probably amenable to specialized simulation. Pinning it down might take some experiments, but probably not years of them, since at every step it takes no time at all to very judiciously choose what data to collect next. There is already a bootstrapping technology: fruit fly biomass doubles every 2 days. Energy from fusion will help with scaling, and once manufactured, cells can reconfigure.
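To give a sense of what a 2-day doubling time implies, here is a Python calculation of how long unconstrained growth takes to go from 1 gram to 1 tonne. It assumes doubling continues with no food, energy or space limits, which real populations would hit long before then.

```python
import math

def days_to_grow(start_kg: float, target_kg: float, doubling_days: float = 2.0) -> float:
    """Days of uninterrupted doubling to grow biomass from start_kg to target_kg."""
    return doubling_days * math.log2(target_kg / start_kg)

print(f"{days_to_grow(0.001, 1000):.0f} days from 1 g to 1 tonne")
```

A millionfold increase is about 20 doublings, so roughly 40 days at this rate.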

A millionfold speedup in thinking (still assuming no superintelligence) probably requires hardware that implies ability to significantly speed up simulations.

No, this hardware would not make simulations faster. Different hardware could speed them up some, but since simulations already run on supercomputers at multiple GHz, the speedup would be about 1 OOM, which is typical for going from general-purpose processors to ASICs. Simulation would still be the bottleneck.

This LessWrong post argues pretty convincingly that simulations cannot model everything, especially for behavior relevant to nanotechnology and medicine:

https://www.lesswrong.com/posts/etYGFJtawKQHcphLi/bandgaps-brains-and-bioweapons-the-limitations-of

Assuming the physicist who wrote that LessWrong post is correct, cycles of trial and error, prototyping and experiments are unavoidable.

I also agree with the post for a different reason: real experimental data, such as human-written papers on biology or nanoscale chemistry, leave enough uncertainty to drive trucks through. The issue is that you have hand-copied fields of data, large datasets withheld because they had negative findings, needlessly vague language describing what was done, different labs in different places with different staff and equipment, different subjects (current tech cannot simulate or build a living mockup of a human body, and for the reasons above there is insufficient data to do either), and so on.

You have to try things, even if only to collect data for your simulation, and 'trying stuff' is slow. (Mammalian cells take hours to weeks to grow complex structures; electron beam nanolathes take hours to carve a new structure; etc.)

When you design a thing, you can intentionally make it more predictable and faster to test, in particular with modularity. If the goal is designing cells that grow and change in controllable ways, all experiments are tiny. Like with machine learning, new observations from the experiments generalize by improving the simulation tools, not just object level designs. And much more advanced theory of learning should enable much better sample efficiency with respect to external data.

If a millionfold speedup were already feasible today, it wouldn't take any hardware advancement, and as a milestone it would indicate no hardware benefit for simulation. My point responded to the hypothetical where there is already massive scaling in hardware compared to today (such as through macroscopic biotech scaling up physical infrastructure), which should, as another consequence, make simulation of physical designs much better (on its own hardware specialized for simulation). For example, this is where I expect uploading to become feasible to develop, not at the 300x speedup stage of software-only improvement, because simulating wild systems is harder than designing something predictable.

(This is exploratory engineering, not forecasting. I don't actually expect human-level AGI without superintelligence to persist that long, and if nanotech is possible I don't expect scaling of macroscopic biotech. But neither seems crucial.)

When you design a thing, you can intentionally make it more predictable and faster to test,

Absolutely. This already happens today: silicon release cycles only leave time for a few revisions.

My main point with the illustrative numbers was to show how the time complexity works. You have this million-times-faster AI; it seems it can do 10 years of work in about 2.25 minutes (assuming a human working 996, i.e. 72 hours a week).

Even if we take the most generous possible assumptions about how long it takes to build something real, test it, then fix our mistakes, the limiting factors are about 43,000 times slower than we can think (roughly 2 months of building and testing per iteration versus about 2 minutes of thinking). Say we reduce our serial steps for testing and only need 2 prototypes and then the final version, 3 iterations instead of 10.

So we made it roughly 3 times faster!

So the real world is still slowing us down by a factor of 14,400.
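Here is the arithmetic behind these figures in Python. It assumes a 52-week year at 72 hours a week, a millionfold speedup, about 2 minutes of thinking per decade-long design step, and 2 months (60 days) of real time per build-and-test iteration. The last line just reproduces the text's step of dividing the per-iteration gap by the roughly 3x cut in iterations.

```python
MINUTES_PER_DAY = 24 * 60
SPEEDUP = 1e6

# Human-equivalent work in one decade on a 996 schedule (72 h/week, 52 weeks/year).
decade_hours = 72 * 52 * 10
ai_minutes_per_decade = decade_hours * 60 / SPEEDUP
print(f"a decade of 996 work takes {ai_minutes_per_decade:.2f} minutes")

# One build-and-test iteration: ~60 days of real time vs ~2 minutes of thinking.
real_minutes_per_iteration = 60 * MINUTES_PER_DAY
slowdown = real_minutes_per_iteration / 2
print(f"real world is {slowdown:,.0f}x slower than thinking")

# The text's figure: divide by the ~3x reduction from 10 iterations to 3.
print(f"after cutting iterations ~3x: {slowdown / 3:,.0f}x")
```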

Machining equipment takes time to cut an engine, nano lathe a part, or if we are growing human organs to treat VIPs it takes months for them to grow. Same for anything else you can think of. The real world just has all these slow steps, from time for concrete to cure, paint to dry, molten metal in castings to cool, etc.

This is succinctly why FOOM is unlikely. But 5-50 years of research in 1 year is still absolutely game changing.

Machining equipment takes time to cut an engine, nano lathe a part, or if we are growing human organs to treat VIPs it takes months for them to grow.

That's why you don't do any of the slower things at all (in a blocking way), and instead focus on the critical path of controllable cells for macroscopic biotech or something like that, together with the experiments needed to train simulators good enough to design them. Once completed, this enables exponentially scaling physical infrastructure, which can be used to do all the other things. Simulation here doesn't mean the methods of today; it means all the computational shortcuts to correct predictions about the simulated systems that the AGIs can come up with in subjective centuries of thinking, with a few experimental observations to ground the thinking. And once the initial hardware scaling project is completed, it enables much better simulation of more complicated things.

You can speed things up. The main takeaway is that there are 4 orders of magnitude here. Some projects that involve things like interplanetary transits to set up are going to be even slower than that.

And you will most assuredly start out 4 OOM slower, bootstrapping from today's infrastructure. Yes, maybe you can eventually develop all the things you mentioned, but there are upfront costs to developing them. You don't have programmable cells or self-replicating nanotechnology when you start, and you can't develop them immediately just by thinking about it for thousands of years.

This is specifically an argument against a sudden and unexpected "foom" the moment AGI exists. If, 20-50 years later, in a world full of robots, rapid nanotechnology and programmable biology, you start to see exponential progress, that's a different situation.

Projects that involve interplanetary transit are not part of the development I discuss, so they can't slow it down. You don't need to wait for paint to dry if you don't use paint.

There are no additional pieces of infrastructure that need to be in place to make programmable cells, only their design and what modern biotech already has to manufacture some initial cells. It's a question of sample efficiency in developing simulation tools: how many observations does it take for simulation tools to get good enough, if you had centuries to design the process of deciding what to observe and how to use the observations to improve the simulation tools?

So a crux might be the impossibility of creating the simulation tools with data that can be collected in the modern world over a few months. It's an issue distinct from inability to develop programmable cells.

I think you might have accidentally linked to your comment instead of the LessWrong post you intended to link to.

I agree that processor clock speeds are not what we should measure when comparing the speed of human and AI thoughts. That being said, I have a proposal for the significance of the fact that the smallest operation for a CPU/GPU is much faster than the smallest operation for the brain.

The crux of my belief is that having faster fundamental operations means you can reach the same goal in the same wall-clock time using a worse algorithm. That is to say, if the CPU is ~10x faster than the neuron, then the CPU can achieve human performance in the same wall-clock time using an algorithm with 10x as many steps as the algorithm humans actually use.

If we view algorithms with more steps than the human ones as sub-human because they are less computationally efficient, and view completing the steps of an algorithm such that it generates an output as a thought, this implies that the AI can achieve superhuman performance using sub-human thoughts.
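As a toy calculation of this crux, here it is in Python with hypothetical numbers: a 1,000-step human algorithm, an assumed ~1 kHz step rate for neurons, and hardware exactly 10x faster per step.

```python
def wall_clock_seconds(steps: float, steps_per_second: float) -> float:
    """Wall-clock time to run an algorithm of `steps` sequential steps."""
    return steps / steps_per_second

# Hypothetical numbers for illustration only.
HUMAN_ALGORITHM_STEPS = 1_000
NEURON_STEPS_PER_SECOND = 1_000                      # assumed ~1 kHz
CPU_STEPS_PER_SECOND = 10 * NEURON_STEPS_PER_SECOND  # "~10x" faster per step

human_time = wall_clock_seconds(HUMAN_ALGORITHM_STEPS, NEURON_STEPS_PER_SECOND)
# A "worse" algorithm needing 10x as many steps still ties on wall-clock time.
ai_time = wall_clock_seconds(10 * HUMAN_ALGORITHM_STEPS, CPU_STEPS_PER_SECOND)
print(human_time, ai_time)
```

The step-count penalty the faster hardware can absorb is exactly the per-step speed ratio.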

A mechanical analogy: instead of the steps in an algorithm consider the number of parts in a machine for travel. By this metric a bicycle is better than a motorcycle; yet I expect the motorcycle is going to be much faster even when it is built with really shitty parts. Alas, only the bicycle is human-powered.


I often encounter some confusion about whether the fact that synapses in the brain typically fire at frequencies of 1-100 Hz while the clock frequency of a state-of-the-art GPU is on the order of 1 GHz means that AIs think "many orders of magnitude faster" than humans. In this short post, I'll argue that this way of thinking about "cognitive speed" is quite misleading.

The clock speed of a GPU is indeed meaningful: there is a clock inside the GPU that provides a signal that's periodic at a frequency of ~1 GHz. However, the corresponding period of ~1 nanosecond does not correspond to the timescale of any useful computation done by the GPU. For instance, in the A100 any read/write access to the L1 cache takes ~30 clock cycles, and this goes up to 200-350 clock cycles for the L2 cache. These latencies add up along with other sources of delay, such as kernel setup overhead, so that an A100 running at its boost clock of 1.41 GHz has a latency of around 4.5 microseconds before it can perform any matrix multiplication at all: