To be honest, I don't know if I'd have the guts to choose an 18% chance of death on the basis of spooky correlations.

Assuming that other humans choose outcomes like you do in games is a recipe for disaster; people are all over the place and, as my poll showed, they are more influenced by wording than by the logic of the game.

https://twitter.com/RokoMijic/status/1691081095524093952

Playing against random humans, the correct choice is red in this game as it is the unique Trembling Hand Perfect Equilibrium as well as being a dominant strategy.

People are all over the place but definitely not 50/50. The qualitative solution I have will hold no matter how weak the correlation with other people's choices (for large enough values of N).

If you make the very weak assumption that some nonzero number of participants will choose blue (and you prefer to keep them alive), then this problem becomes much more like a prisoner's dilemma where the maximum payoff can be reached by coordinating to avoid the Nash equilibrium.

If you make the very weak assumption that some nonzero number of participants will choose blue (and you prefer to keep them alive)

There is also a moral dimension of not wanting to encourage perverse behaviour

This game has a stable, dominant NE with max reward, just use that

There are no blue-choosers in the stable NE, so no, I don't mind at all about zero people dying.

Yes, but if someone accidentally picks blue, that's their own fault. The blue-picker injures only themselves, hence the stability against trembling hands. I would care enough to warn them against doing that, but I'm not going to quixotically join in with that fault, just so that I can die as well.

Sometimes one must cut the Gordian Knot; there is a clear right answer here, some people are bozos and that is out of my control. It is not a good idea to try and empathize with bozos as you will end up doing all sorts of counterproductive things.

Thanks for doing the math on this :)

My first instinct is that I should choose blue, and the more I've thought about it, the more that seems correct. (Rough logic: The only way no-one dies is if either >50% choose blue, or if 100% choose red. I think chances of everyone choosing red are vanishingly small, so I should push in the direction with a wide range of ways to get the ideal outcome.)

I do think the most important issue not mentioned here is a social-signal, first-mover one: If, before most people have chosen, someone loudly sends a signal of "everyone should do what I did, and choose X!", then I think we should all go along with that and signal-boost it.

There, the chances of everyone or nearly everyone choosing red seem (much!) higher, so I think I would choose red.

Even in that situation, though, I suspect the first-mover signal is still the most important thing. If the first person to make a choice gives an inspiring speech and jumps in, I think the right thing to do is choose blue with them.

That depends on your definition of isomorphism. I'm aware of the sense in which that is true. Are you aware of the sense in which it is false? Do you think I'm wrong when I say "There, the chances of everyone or nearly everyone choosing red seem (much!) higher"?

I cannot read your mind, only your words. Please say in what sense you think it is true, and in what sense you think it is false.

Do you think I'm wrong when I say "There, the chances of everyone or nearly everyone choosing red seem (much!) higher"?

The chances depend on the psychology of the people answering these riddles.

It is obviously true in a bare bones consequences sense. It is obviously false in the aesthetics, which I expect will change the proportion of people answering a or b--as you say, the psychology of the people answering affects the chances.

false in the aesthetics

What do you mean by this expression?

If someone is going to make a decision differently depending only on how the situation is described, both descriptions giving complete information about the problem, that cannot be defended as a rational way of making decisions. It predictably loses.

Which is why you order the same thing at a restaurant every time, up to nutritional equivalence? And regularly murder people you know to be organ donors?

Two situations that are described differently are different. What differences are salient to you is a fundamentally arational question. Deciding that the differences you, Richard, care about are the ones which count to make two situations isomorphic cannot be defended as a rational position. It predictably loses.

Which is why you order the same thing at a restaurant every time, up to nutritional equivalence? And regularly murder people you know to be organ donors?

I have no idea where that came from.

Nathaniel is offering scenarios where the problem with the course of action is aesthetic in a sense he finds equivalent. Your question indicates you don't see the equivalence (or how someone else could see it for that matter).

Trying to operate on cold logic alone would be disastrous in reality for map-territory reasons and there seems to be a split in perspectives where some intuitively import non-logic considerations into thought experiments and others don't. I don't currently know how to bridge the gap given how I've seen previous bridging efforts fail; I assume some deep cognitive prior is in play.

The scenarios he suggested bear no relation to the original one. I order differently on different occasions because I am different. I have no favorite food; my desire in food as in many other things is variety. Organ donors—well, they’re almost all dead to begin with. Occasionally someone willingly donates a kidney while expecting to live themselves, and of course blood donations are commonplace. Well, fine. I don’t see why I would be expected to have an attitude to that based on what I have said about the red-pill-blue-pill puzzle.

As it happens, I do carry a donor card. But I expect to be dead, or at least dead-while-breathing by the time it is ever used.

Yeah, this seems close to the crux of the disagreement. The other side sees a relation and is absolutely puzzled why others wouldn't, to the point where that particular disconnect may not even be in the hypothesis space.

When a true cause of disagreement is outside the hypothesis space the disagreement often ends up attributed to something that is in the hypothesis space, such as value differences. I suspect this kind of attribution error is behind most of the drama I've seen around the topic.

My System 1 had the inconsistent intuitions that I should choose the blue pill but I shouldn't step into the blender. This is probably because my intuition didn't entirely register that red pill = you survive no matter what. I was not-quite-thinking along the lines "Either red pill wins or blue pill wins, the blue pill outcome is better, therefore blue pill." As for getting the opposite intuition in the other case, well, "don't go in the scary people blender" is probably a more obvious Schelling point than "take the pill that kills people."

Game-theory considerations aside, this is an incredibly well-crafted scissor statement!

The disagreement between red and blue is self-reinforcing, since whichever you initially think is right, you can say everyone will live if they'd just all do what you are doing. It pushes people to insult each other and entrench their positions even further, since from red's perspective blues are stupidly risking their lives and unnecessarily weighing on their conscience when they would be fine if nobody chose blue in the first place, and from blue's perspective red is condemning them to die for their own safety. Red calls blue stupid, blue calls red evil. Not to mention the obvious connection to the wider red and blue tribes, "antisocial" individualism vs "bleeding-heart" collectivism. (Though not a perfect correspondence: I'd consider myself blue tribe but would choose red in this situation. You survive no matter what and the only people who might die as a consequence also all had the "you survive no matter what" option.)

"That means that for our posterior from above, if you choose blue, the probability of the majority choosing red is about 0.1817. If you choose red, it's the opposite: the probability that the majority will choose red is 1-0.1817 = 0.8183, over 4 times higher."

It may well be that I don't understand Beta priors, or maybe I just see myself as unique or non-representative. But intuitively, I would not think that my own choice can justify anywhere close to that large of a swing in my estimate of the probabilities of the majority outcome. Maybe I am subconsciously conflating this in my mind with the objective probability that I actually cast the tiebreaking vote, which is very small.

I mean definitely most people will not use a decision procedure like this one, so a smaller update seems very reasonable. But I suspect this reasoning still has something in common with the source of the intuition a lot of people have for blue, that they don't want to contribute to anybody else dying.

Great post! I hadn't considered the fact that your decision was information, which feels obvious in retrospect. If someone is truly interested in this topic, it would be interesting to create a calculator where they can adjust nu, their posterior, and N.

Am I missing something obvious here? Is this a "cake or death" kind of deal, where you can run out of red pills? Does the blue pill come with a reward, like a million dollars? Why is picking blue here not retarded?

You might also assign different values to red-choosers and blue-choosers (one commenter I saw said they wouldn't want to live in a world populated only by people who picked red) but I'm going to ignore that complication for now.

Roko has also mentioned they think people choose blue for being bozos and I think it's fair to assume from their comments that they care less about bozos than smart people.

I'm very interested in seeing the calculations where you assign different utilities to people depending on their choice (and possibly, also depending on yours, like if you only value people who choose like you).

I'm not sure how I would work it out. The problem is that presumably you don't value one group more because they chose blue (it's because they're more altruistic in general) or because they chose red (it's because they're better at game theory or something). The choice is just an indicator of how much value you would put on them if you knew more about them. Since you already know a lot about the distribution of types of people in the world and how much you like them, the Bayesian update doesn't really apply in the same way. It only works on what pill they'll take because everyone is deciding with no knowledge of what the others will decide.

In the specific case where you don't feel altruistic towards people who chose blue specifically because of a personal responsibility argument ("that's their own fault"), then trivially you should choose red. Otherwise, I'm pretty confused about how to handle it. I think maybe only your level of altruism towards the blue choosers matters.

It's been going around twitter. I answered without thinking. Then I thought about it for a while and decided both answers should be fine, then I thought some more and decided everything I first thought was probably wrong. So now it's time to try math. Ege Erdil did a solid calculation on LW already, and another one is up on twitter. But neither of them accounts for the logical correlation between your decision and the unknown decisions of the other players. So let's try Bayes' theorem!

(If you just want the answer, skip to the end.)

Epistemic status: there are probably some mistakes in here, and even if there aren't, I still spent like 8 hours of my life on a twitter poll.

We'll begin with the state of least possible knowledge: a Jeffreys non-informative beta prior for the probability x that a randomly selected player will choose blue. That is,

x∼beta(0.5,0.5)

Of course if you think you understand people you could come up with a better prior. (Also if the blue pill looks like a scary people blender, that should probably change your prior.) I saw the poll results but I'm pretending I didn't, so I'll stick with Jeffreys.

Now, you yourself are one of the players in this group, so you need to update based on your choice. That's right, we're using acausal logic here. Your decision is correlated with other people's decisions. How do you update when you haven't decided yet? Luckily there are only two choices, so we'll brute force it, try both and see which turns out better in expectation.

To do a Bayesian update of a beta prior based on 1 data point, we just add 1 to one of the parameters. (If you think you're a special snowflake/alien and other people are not like you... then maybe update your side by less than 1.)

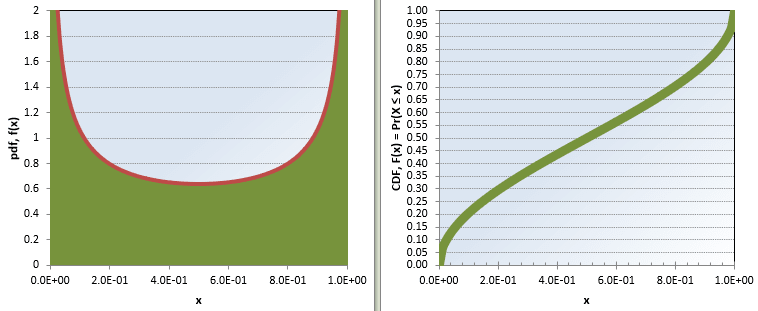

So if you choose blue, your posterior over x is now beta(1.5,0.5):

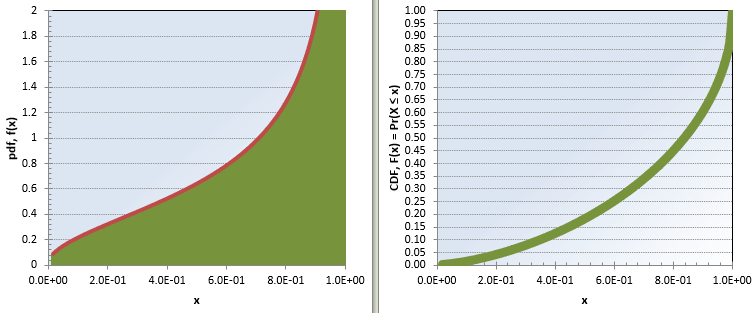

Whereas if you choose red, your posterior is beta(0.5,1.5):
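The update rule described above is simple enough to sketch in a few lines. This is only an illustration: the helper names `update_beta` and `beta_mean` and the `weight` parameter (for the "special snowflake" discount) are made up here, not part of the original calculation.

```python
def update_beta(alpha, beta, chose_blue, weight=1.0):
    """Posterior (alpha, beta) after observing one player's choice.

    weight < 1 is a hypothetical way to discount your own data point
    if you think other people are not much like you.
    """
    if chose_blue:
        return (alpha + weight, beta)
    return (alpha, beta + weight)


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


prior = (0.5, 0.5)  # Jeffreys prior
post_blue = update_beta(*prior, chose_blue=True)
post_red = update_beta(*prior, chose_blue=False)

print(post_blue, beta_mean(*post_blue))  # (1.5, 0.5) 0.75
print(post_red, beta_mean(*post_red))    # (0.5, 1.5) 0.25
```

So a single observation moves the expected blue fraction from 0.5 to 0.75 or 0.25, which is why the Jeffreys prior makes your own choice so informative.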

Take N to be the number of participants in this pill game, besides you. I will assume N is large, so the number B who choose blue is approximately xN. I will also assume N is even, so the total number of players is odd, to avoid having to guess what happens in a tie.

Now, consider your utility function. We can start by assuming it's linear[1], and that you value everyone else's life equally, but at a multiple ν of your own life (following Ege Erdil's nomenclature for consistency). Then, scaling so that the value of your own life is 1, we can define your utility prior to playing:

$$U_{\text{init}} = \nu N + 1$$

You might also assign different values to red-choosers and blue-choosers (one commenter I saw said they wouldn't want to live in a world populated only by people who picked red) but I'm going to ignore that complication for now.

Now, if ν=0 (total selfishness) then you should choose red. But I think most people would give their lives heroically to save a crowd of a hundred strangers. Or at least I'd like to think that. So for the sake of illustration, let's imagine ν=1/100.

Your final utility will depend on x (how likely others are to choose blue), and on your choice (let's say C=1 if you choose blue and C=0 if you choose red).

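$$U_{\text{final}} = \begin{cases} U_{\text{init}} = \nu N + 1 & B > N/2 \\ \nu (N - B) + (1 - C) & B < N/2 \\ U_{\text{tiebreaker}} = \nu (N/2 + C N/2) + 1 & B = N/2 \end{cases}$$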
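As a sanity check on the three cases, here is the same piecewise utility written as a small function. The function and argument names are my own labels for N, B, C and ν; treat it as a sketch of the definition above rather than anything more.

```python
def final_utility(n_others, n_blue, chose_blue, nu):
    """Utility after the game, scaled so your own life is worth 1.

    n_others   : N, number of players besides you (assumed even)
    n_blue     : B, how many of the others chose blue
    chose_blue : C, True if you chose blue
    nu         : value of one other life relative to your own
    """
    c = 1 if chose_blue else 0
    half = n_others / 2
    if n_blue > half:
        # Blue has a majority without you: everyone lives.
        return nu * n_others + 1
    if n_blue < half:
        # Red majority even with your vote: blue-choosers die, you included if C=1.
        return nu * (n_others - n_blue) + (1 - c)
    # Exact tie among the others: you live either way, your vote decides their fate.
    return nu * (half + c * half) + 1


print(final_utility(100, 60, False, 0.01))  # blue majority
print(final_utility(100, 40, True, 0.01))   # red majority, you chose blue
print(final_utility(100, 50, True, 0.01))   # tie, you chose blue
print(final_utility(100, 50, False, 0.01))  # tie, you chose red
```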

In the tiebreaker case, you will live regardless of your choice, but choosing red will kill half of the other players. The probability that you have the tie-breaking vote is given by the probability mass function of the binomial distribution,

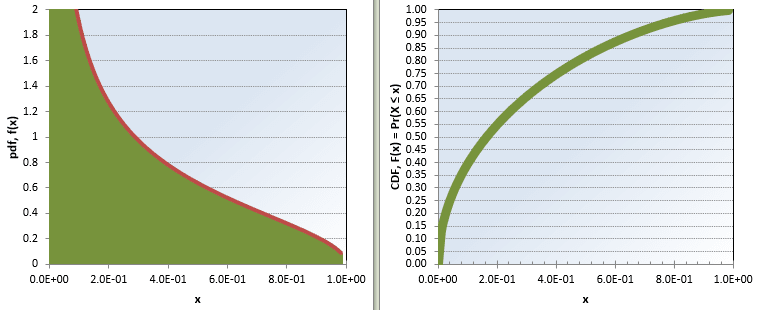

$$P(B = N/2) = \binom{N}{N/2} x^{N/2} (1 - x)^{N/2}$$

But this still has x in it, so we'll have to take the expectation over x∼beta(0.5,1.5). That led me into a mess of approximating large factorials, but code interpreter eventually coached me through it. Here's P(tiebreaker) for N from 10 to 10 billion. It can be approximated very well by P(tiebreaker) = 0.6275/N.
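For anyone who wants to reproduce the tiebreaker curve, one way to avoid the large factorials is to work with log-gamma functions: averaging the binomial term over a beta distribution gives a beta-binomial probability, which has a closed form in terms of beta functions. The sketch below is my own reconstruction using only Python's standard library, not the code that produced the numbers above; `p_tiebreaker` and `log_beta_fn` are hypothetical names.

```python
from math import exp, lgamma, pi


def log_beta_fn(a, b):
    """log of the beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def p_tiebreaker(n, a=0.5, b=1.5):
    """E over x ~ Beta(a, b) of C(n, n/2) x^(n/2) (1-x)^(n/2), for even n."""
    k = n // 2
    log_binom = lgamma(n + 1) - 2 * lgamma(k + 1)
    # Integrating x^k (1-x)^k against the Beta(a, b) density gives
    # B(a + k, b + k) / B(a, b).
    log_p = log_binom + log_beta_fn(a + k, b + k) - log_beta_fn(a, b)
    return exp(log_p)


for n in (10, 1_000, 1_000_000):
    p = p_tiebreaker(n)
    print(n, p, n * p)
```

In this sketch the product N·P(tiebreaker) settles near 2/π ≈ 0.637 for large N, in the same neighborhood as the fitted coefficient quoted above, and it comes out the same for beta(1.5,0.5) by symmetry.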

For the non-tiebreaker cases, you have to evaluate P(B>N/2) and P(B<N/2), again as an expectation over x. This is where I'll use the approximation that B=xN. Then the expected probability that B<N/2 is just the CDF of the beta distribution up to 0.5. This approximation is valid for values of N above a couple hundred:

That means that for our posterior from above, if you choose blue, the probability of the majority choosing red is about 0.1817. If you choose red, it's the opposite: the probability that the majority will choose red is 1-0.1817 = 0.8183, over 4 times higher.
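The 0.1817 figure can be checked without any statistics library. The sketch below integrates the beta density with Simpson's rule after substituting x = sin²θ, which removes the endpoint singularity of these half-integer betas. For this particular posterior the integral also works out in closed form to 1/2 − 1/π, which is printed for comparison. The function name `beta_cdf` and the step count are my own choices.

```python
from math import asin, cos, exp, lgamma, pi, sin, sqrt


def beta_cdf(x_max, a, b, steps=2000):
    """P(x < x_max) for x ~ Beta(a, b), via Simpson's rule in theta.

    With x = sin(theta)**2 the density becomes
    2 * sin^(2a-1) * cos^(2b-1) / B(a, b), smooth for a, b >= 0.5.
    """
    norm = exp(lgamma(a) + lgamma(b) - lgamma(a + b))  # B(a, b)

    def density(theta):
        return 2 * sin(theta) ** (2 * a - 1) * cos(theta) ** (2 * b - 1) / norm

    theta_max = asin(sqrt(x_max))
    h = theta_max / steps  # steps must be even for Simpson's rule
    total = density(0.0) + density(theta_max)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * density(i * h)
    return total * h / 3


p_red_majority_if_blue = beta_cdf(0.5, 1.5, 0.5)
p_red_majority_if_red = beta_cdf(0.5, 0.5, 1.5)
print(p_red_majority_if_blue, 0.5 - 1 / pi)
print(p_red_majority_if_red, 0.5 + 1 / pi)
```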

The last thing we need to calculate is the number of players we should expect to die, in the cases where the majority chooses red. This consideration will push in the opposite direction. If you chose blue, it's more likely that the majority also chose blue - but if blue falls short of a majority, it will probably be a little short. That means more deaths.

Specifically, we want the expectation of x over the interval (0, 0.5). That's shown by the dotted lines in the plots below. You can roughly see that each of them divides the green area in half. For the case where you chose red, the expected fraction of players who die (conditional on a red majority) is 0.1527. If you chose blue, it's 0.3120, or about twice as many.
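If you'd rather not do the truncated integral by hand, a quick Monte Carlo estimate gets close enough to check these two fractions. This is a rough sketch using the standard library's `random.betavariate`; the function name, sample size and seed are arbitrary choices of mine.

```python
import random


def mean_below_half(alpha, beta, n_samples=100_000, seed=0):
    """Estimate E[x | x < 0.5] for x ~ Beta(alpha, beta) by sampling."""
    rng = random.Random(seed)
    draws = (rng.betavariate(alpha, beta) for _ in range(n_samples))
    below = [x for x in draws if x < 0.5]
    return sum(below) / len(below)


deaths_if_red = mean_below_half(0.5, 1.5)   # posterior after choosing red
deaths_if_blue = mean_below_half(1.5, 0.5)  # posterior after choosing blue
print(deaths_if_red, deaths_if_blue)
```

Up to sampling noise, the two estimates should land near 0.153 and 0.312.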

Now we can go back and calculate the expected utility function for each case:

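$$E[U_{\text{blue}}] = (1 - 0.1817)(\nu N + 1) + 0.1817\,\bigl(\nu N (1 - 0.3120)\bigr) + P_{\text{tiebreaker}}\,(\nu N + 1)$$

$$E[U_{\text{red}}] = (0.1817)(\nu N + 1) + (1 - 0.1817)\bigl(\nu N (1 - 0.1527) + 1\bigr) + P_{\text{tiebreaker}}\,(\nu N / 2 + 1)$$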

Substituting the approximation for Ptiebreaker and simplifying,

$$E[U_{\text{blue}}] = 0.9433\,\nu N + 0.6275\,\nu + 0.8183 + 0.6275/N$$

$$E[U_{\text{red}}] = 0.8750\,\nu N + 0.3137\,\nu + 1.0 + 0.6275/N$$
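To check the simplification, the expected utilities can be assembled directly from the pieces computed earlier and compared against the simplified coefficients. The constants below are the rounded values from the text; the function and constant names are mine, so treat this as a sketch under those assumptions.

```python
P_RED_MAJORITY_IF_BLUE = 0.1817  # P(B < N/2) if you choose blue
DEATHS_IF_BLUE = 0.3120          # E[x | x < 0.5] if you choose blue
DEATHS_IF_RED = 0.1527           # E[x | x < 0.5] if you choose red
TIE_COEFF = 0.6275               # P(tiebreaker) is roughly TIE_COEFF / N


def expected_utility(n, nu, chose_blue):
    """Expected final utility for N = n other players and altruism nu."""
    p_tie = TIE_COEFF / n
    everyone_lives = nu * n + 1
    if chose_blue:
        p_red = P_RED_MAJORITY_IF_BLUE
        red_majority = nu * n * (1 - DEATHS_IF_BLUE)     # you die too
        tie = nu * n + 1                                 # your vote saves everyone
    else:
        p_red = 1 - P_RED_MAJORITY_IF_BLUE
        red_majority = nu * n * (1 - DEATHS_IF_RED) + 1  # you survive
        tie = nu * n / 2 + 1                             # half the others die
    return (1 - p_red) * everyone_lives + p_red * red_majority + p_tie * tie


nu = 1 / 100
for n in (100, 1_000):
    print(n, expected_utility(n, nu, True), expected_utility(n, nu, False))
```

With ν=1/100, red comes out ahead at N=100 and blue at N=1000, matching the sign change implied by the simplified expressions.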

The constant term here is the probability (and hence expected utility) of your own survival.

The second term, proportional to the value ν that you put on another person's life relative to your own, comes from the possibility that you might have the tiebreaking vote: here N cancels out because as the probability decreases the number of people you could save increases, so choosing blue causally saves about 1/3 of a person on average (0.6275-0.3137). (The final term also relates to the tiebreaker, but it will drop out as N becomes large, and it was the same in both cases anyway.)

The first term, proportional to N, represents the potential to benefit others via the acausal relationship, and obviously it's greater if you choose blue. As long as ν>0, this term will eventually come to dominate over the other factors as the number of players increases, which means anyone who cares (linearly) about other people should choose blue for large values of N.

In conclusion:

You'll want to choose blue if the number of players is at least 2-3 times the minimum number you would give your life to save.
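To spell out where the "2-3 times" comes from: setting the two simplified expected utilities against each other, the 0.6275/N terms cancel, and blue wins when

$$(0.9433 - 0.8750)\,\nu N + (0.6275 - 0.3137)\,\nu > 1.0 - 0.8183$$

$$\iff\quad N > \frac{0.1817 - 0.3138\,\nu}{0.0683\,\nu} \approx \frac{2.66}{\nu} - 4.6$$

Since 1/ν is the number of strangers you'd trade your life for, the threshold is roughly 2.66 times that number. With the illustrative ν=1/100, it works out to about N > 261.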

I found this result very counterintuitive. And it also makes me a jerk, because I originally chose red. But let me try to stan blue a little. I think the argument goes something like: Red can help you, personally, survive. But in a society that tends towards blue, more people survive on average. If enough people's lives are at risk, then making society a little more blue will outweigh the risk to your own life.

To be honest, I don't know if I'd have the guts to choose an 18% chance of death on the basis of spooky correlations.

What if your utility is logarithmic in the number of survivors? I think it won't make much difference, because then you also have to take into account everyone in the world who's not playing as well. If N is less than about a billion, your marginal utility over those N people will still be roughly linear. If you would choose blue under that linear marginal utility for N=1 billion, then increasing N should not change your mind. If you would choose red for N=1 billion, it's because your value for altruism ν is very close to zero, and you were probably going to choose red no matter what.