
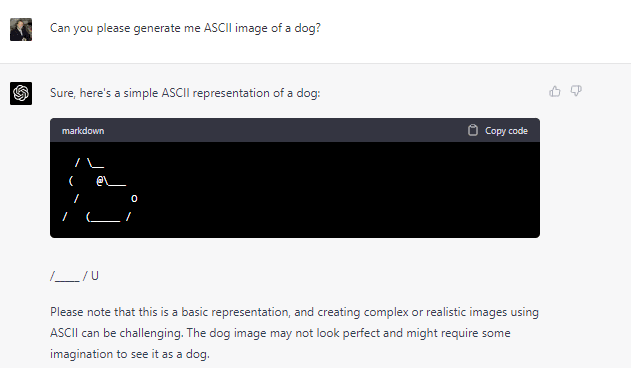

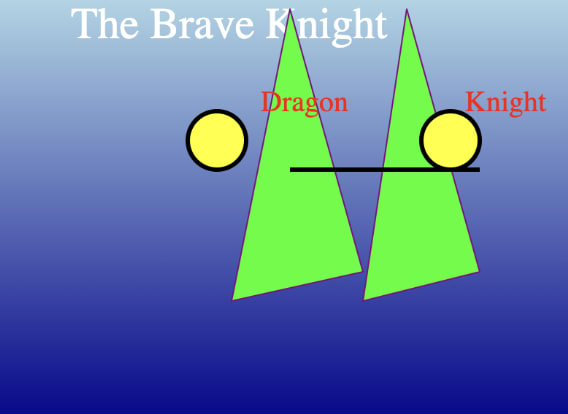

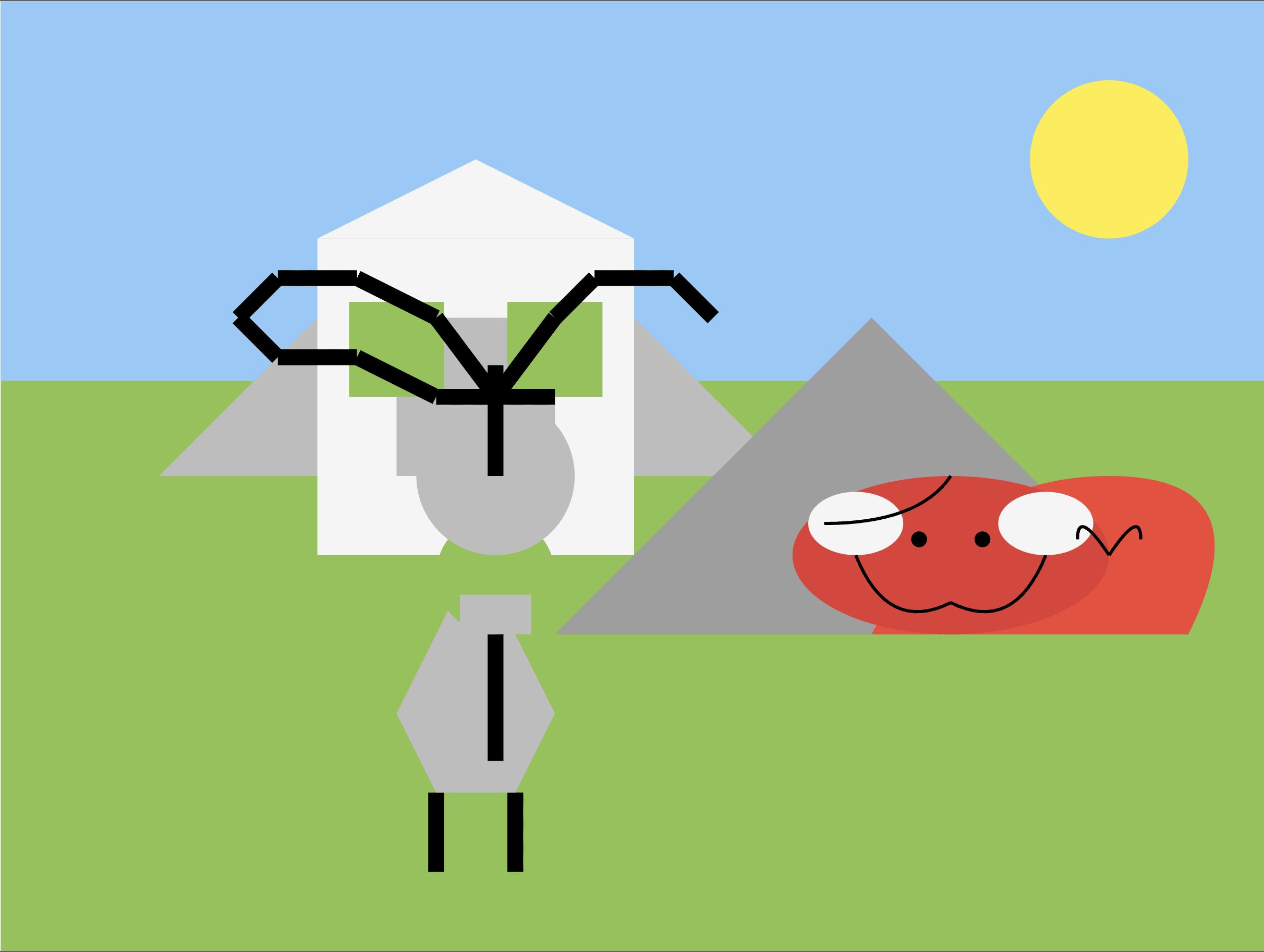

Note that ASCII art isn't the only kind of art. I just asked GPT4 and Claude to both make SVGs of a knight fighting a dragon.

Here's Claude's attempt:

And GPT4's:

I asked them both to make it more realistic. Claude responded with the exact same thing plus some extra text; GPT4 returned:

I followed up, asking it for more muted colors and a simple background, and it returned:

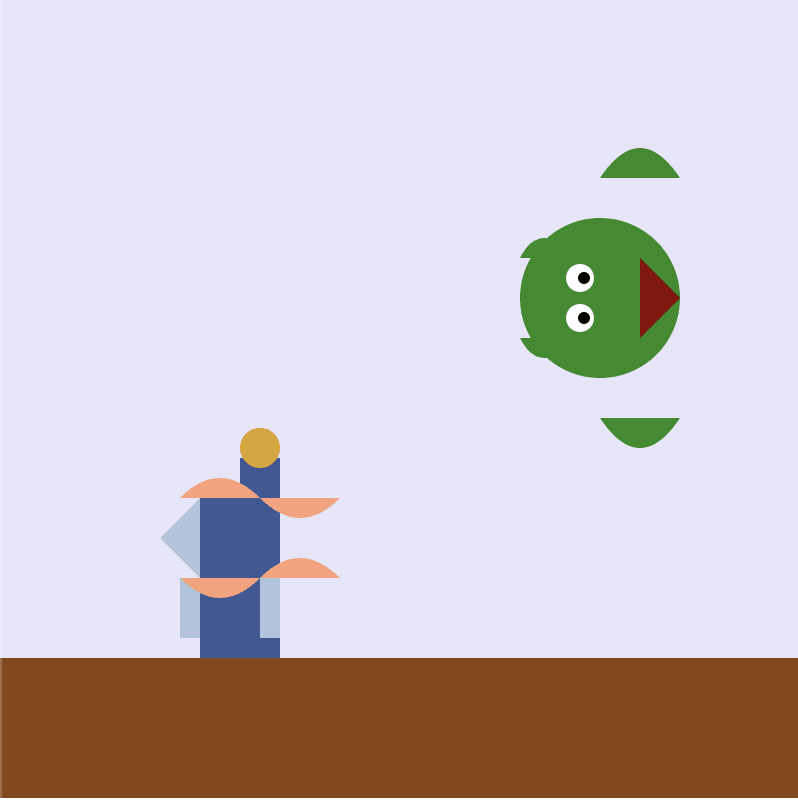

I tried again, accidentally using GPT3.5 this time, which initially gave something really lame, but then I said "more realistic please", and it gave me:

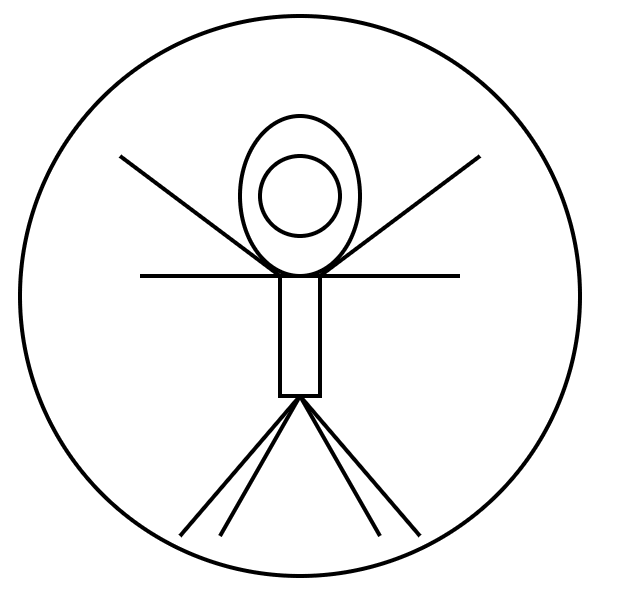

Next I asked it:

It responded with this image:

code:

<svg width="300" height="300" viewBox="0 0 300 300" xmlns="http://www.w3.org/2000/svg">

<!-- Background circle -->

<circle cx="150" cy="150" r="140" fill="none" stroke="black" stroke-width="2"/>

<!-- Body -->

<ellipse cx="150" cy="100" rx="30" ry="40" fill="none" stroke="black" stroke-width="2"/>

<rect x="140" y="140" width="20" height="60" fill="none" stroke="black" stroke-width="2"/>

<line x1="100" y1="140" x2="200" y2="140" stroke="black" stroke-w

It's not great but it's trying


I think it makes sense that it fails in this way. ChatGPT really doesn't see lines arranged vertically, it just sees the prompt as one long line. But given that it has been trained on a lot of ASCII art, it will probably be successful at copying some of it some of the time.
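To make the "one long line" point concrete, here is a minimal Python sketch. The little balloon drawing and the helper names `flatten` and `column_neighbour_distance` are made up for illustration, and real models see tokens rather than raw characters, so this only approximates the situation.

```python
# Hypothetical three-row drawing; any ASCII art would do.
art_rows = [
    " ___ ",
    "(   )",
    " \\_/ ",
]

def flatten(rows):
    """Join rows the way they appear in a prompt: one string with newlines."""
    return "\n".join(rows)

def column_neighbour_distance(rows):
    """How far apart, in the flat string, a cell and the cell directly
    below it are. Assumes every row has the same length."""
    return len(rows[0]) + 1  # row width plus the newline character

flat = flatten(art_rows)
print(repr(flat))                           # the drawing as a single sequence
print(column_neighbour_distance(art_rows))  # offset between vertical neighbours
```

Two characters that look adjacent on screen (one directly above the other) end up a full row width apart in the sequence, and nothing in the string itself says what that width is.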

In case there is any doubt, here is GPT4's own explanation of these phenomena:

Lack of spatial awareness: GPT-4 doesn't have a built-in understanding of spatial relationships or 2D layouts, as it is designed to process text linearly. As a result, it struggles to maintain the correct alignment of characters in ASCII art, where spatial organization is essential.

Formatting inconsistencies in training data: The training data for GPT-4 contains a vast range of text from the internet, which includes various formatting styles and inconsistent examples of ASCII art. This inconsistency makes it difficult for the model to learn a single, coherent way of generating well-aligned ASCII art.

Loss of formatting during preprocessing: When text is preprocessed and tokenized before being fed into the model, some formatting information (like whitespaces) might be lost or altered. This loss can affect the model's ability to produce well-aligned ASCII art.

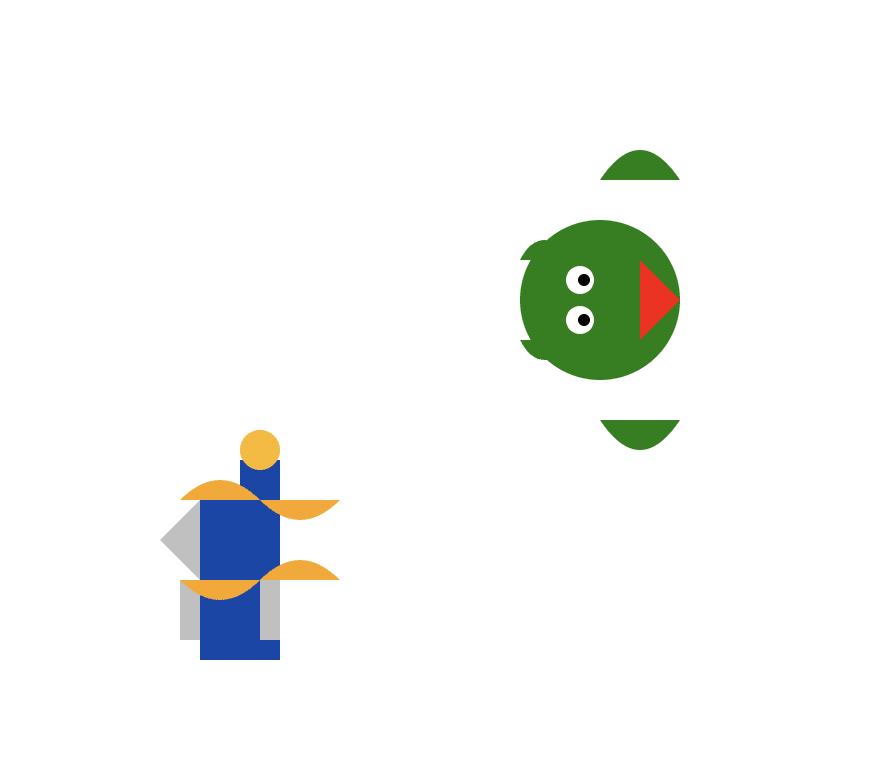

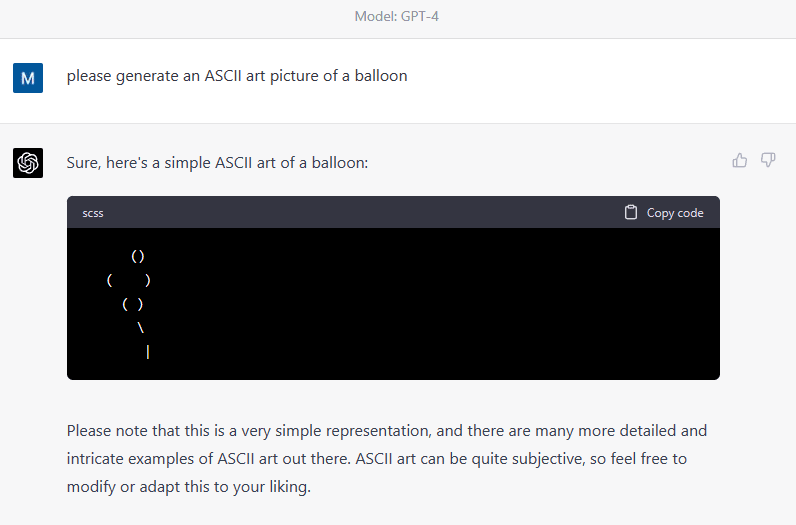

This is a more sensible representation of a balloon than the one in the post, it's just small. More prompts tested on both ChatGPT-3.5 and GPT-4 would clarify the issue.

> ChatGPT really doesn't see lines arranged vertically, it just sees the prompt as one long line.

Vision can be implemented in transformers by representing pictures with linear sequences of tokens, which stand for small patches of the picture, left-to-right, top-to-bottom (see appendix D.4 of this paper). The model then needs to learn on its own how the rows fit together into columns and so on...
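As a rough illustration of that patch ordering, here is a small Python sketch. It is not taken from the linked paper: the function name `image_to_patch_sequence` and the 4x4 toy "image" are assumptions, and a real vision transformer would also embed each patch and add position information, which is left out here.

```python
def image_to_patch_sequence(image, patch):
    """Split a 2D grid into patch x patch tiles and return them as a flat
    list, ordered left-to-right, then top-to-bottom."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")
    sequence = []
    for top in range(0, height, patch):        # rows of patches, top to bottom
        for left in range(0, width, patch):    # patches in a row, left to right
            tile = [image[r][c]
                    for r in range(top, top + patch)
                    for c in range(left, left + patch)]
            sequence.append(tile)
    return sequence

# Toy 4x4 "image" whose pixel values are just their reading-order index.
image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
patches = image_to_patch_sequence(image, 2)
print(patches)
```

The patch holding pixels 8 and 9 sits directly below the patch holding 0 and 1, yet it arrives two positions later in the sequence. That vertical relationship is exactly what the model has to work out for itself.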


See my reply here for a partial exploration of this. I also have a very long post in my drafts covering this question in relation to Bing's AI, but I'm not sure if it's worth posting now, after the GPT4 release.


In my understanding, this is only possible by rote memorization.

There is some discussion in comments to this Manifold question, suggesting GPT-4 still doesn't have a good visual understanding of ASCII art, at least not to the point of text recognition.

But it doesn't address the question for pictures of cats or houses instead of pictures of words. Or for individual letters of the alphabet.

I like this question - if it proves true that GPT-4 can produce recognizable ASCII art of things, that would mean it was somehow modelling an internal sense of vision and ability to recognize objects.

For this very reason, I was intrigued by the possibility of teaching them vision this way.

I think ASCII art in its general form is an unfair setup, though. ChatGPT has no way of knowing the spacing or width of individual letters, since that is not how they perceive them, and hence they have no way of seeing which letters are above each other. Basically, if you were given a piece of ASCII art as a string, but had no idea how the individual characters looked or what width they had, you would have no way to interpret the image.

ASCII art works because we perceive characters both in their visual shape and in their encoded meaning, and we also see them depicted in a particular font with particular kerning settings. That entails so much information that is simply missing for them. With a bunch of the pics they produce, you notice they are basically off in the way you would expect if you didn't know the width of individual characters.

This changes if we only use characters of equal width, and a square frame. Say only 8 and 0. And you tell them that if there is a row of characters 8 characters long, this means the ninth character will be right under the first character, the tenth right under the second, etc. This would enable them to learn the spatial relations between the numbers, first in 2D, then in 3D. I've been meaning to do that, see if I can teach them spatial reasoning that way, save the convo and report it to the developers for training data, but was unsure if that was a good way for them to retain the information, or whether it would become superfluous as image recognition is incoming.
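The rule described here is just integer division on the character's position. A minimal Python sketch, assuming a fixed row width of 8 and 0-based indices (so the "ninth character" is index 8); the names `position`, `index_below`, and `render` are hypothetical helpers, not anything ChatGPT exposes:

```python
WIDTH = 8  # assumed fixed row length, as in the 8-character example

def position(index, width=WIDTH):
    """Map a 0-based index in the flat string to (row, column)."""
    return divmod(index, width)

def index_below(index, width=WIDTH):
    """Index of the character directly underneath the given one."""
    return index + width

def render(flat, width=WIDTH):
    """Cut a flat string of equal-width characters into rows."""
    return [flat[i:i + width] for i in range(0, len(flat), width)]

# A 2-row picture using only the characters 0 and 8.
flat = "00888800" + "08000080"
for row in render(flat):
    print(row)
print(position(8))     # the ninth character: second row, first column
print(index_below(1))  # the tenth character sits under the second
```

Spelling the rule out this way is roughly what the prompt would have to convey in words, since the model gets the flat string and has to apply the row width itself.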

I've seen attempts to not just request ASCII art, but teach it. And notably, ChatGPT learned across the conversation and improved, despite the fact that I was struck by how the humans were giving an explanation that is terrible if the person you are explaining things to cannot see your interface. We need to explain ASCII art like you are explaining it to someone who is blind and feeling along a set of equally spaced beads, telling them to arrange the beads in a 3D construct in their heads.

It is clearly something tricky for them, though. ChatGPT learned language first, not math, and they struggle to do things like accurately count characters. I find it all the more impressive that what they generate is not meaningless, and that it improves.

With a lot of scenarios where people say ChatGPT failed, I have found that their prompts did not work as written, but if you explain things gently and step by step, they can do it. You can aid the AI in figuring out the correct solution, and I find it fascinating that this is possible, that you can watch them learn through the conversation. The difference is really whether you want to prove an AI can't do something, or instead treat it like a mutual teaching interaction, as though you were teaching a bright student with specific disabilities. E.g. not seeing your interface visually is not a cognitive failing, and judging them for it reminds me of people mistaking hearing-impaired people for intellectually disabled people because they keep mishearing instructions.

Does anyone know whether GPT-4 successfully generates ASCII art?

GPT-3.5 couldn't:

Which makes sense, 'cause of the whole words-can't-convey-phenomena thing.

I'd expect multimodality to solve this problem, though?