Ask LLMs for feedback on "the" rather than "my" essay/response/code, to get more critical feedback.

Seems true anecdotally, and prompting GPT-4 to give a score between 1 and 5 for ~100 poems/stories/descriptions resulted in an average score of 4.26 when prompted with "Score my ..." versus an average score of 4.0 when prompted with "Score the ..." (code).
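A comparison like this could be run roughly as follows. This is a minimal sketch, not the code linked above. It assumes a hypothetical `score_fn` that sends a prompt to the model and returns an integer from 1 to 5, and the prompt templates are illustrative guesses:

```python
from statistics import mean
from typing import Callable, Iterable

# Hypothetical prompt templates: only the possessive ("my" vs "the") differs.
TEMPLATES = {
    "my": "Score my {kind} on a scale from 1 to 5. Reply with a single digit.\n\n{text}",
    "the": "Score the {kind} on a scale from 1 to 5. Reply with a single digit.\n\n{text}",
}


def compare_framings(
    items: Iterable[tuple[str, str]],
    score_fn: Callable[[str], int],
) -> dict[str, float]:
    """Return the mean score under each framing.

    `items` holds (kind, text) pairs, e.g. ("poem", "...").
    `score_fn` is a hypothetical wrapper around an LLM call returning 1-5.
    """
    items = list(items)  # iterated once per framing
    return {
        framing: mean(score_fn(template.format(kind=kind, text=text)) for kind, text in items)
        for framing, template in TEMPLATES.items()
    }
```

Scoring every item under both framings makes this a paired comparison, so differences in item quality don't confound the gap between the two means.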

FiveThirtyEight released their prediction today that Biden currently has a 53% chance of winning the election | Tweet

The other day I asked:

Should we anticipate easy profit on Polymarket election markets this year? Its markets seem to think that

- Biden will die or otherwise withdraw from the race with 23% likelihood

- Biden will fail to be the Democratic nominee for whatever reason at 13% likelihood

- either Biden or Trump will fail to win nomination at their respective conventions with 14% likelihood

- Biden will win the election with only 34% likelihood

Even if gas fees take a few percentage points off, we should expect to make money trading on some of this stuff, right? (The money is only locked up for 5 months.) And maybe there are cheap ways to transfer into and out of Polymarket?

Probably worthwhile to think about this further, including ways to make leveraged bets.
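Whether this is "easy profit" comes down to simple arithmetic. A minimal sketch, assuming a binary share that pays out one dollar if the event happens, a flat extra cost per dollar staked standing in for gas and transfer fees (a simplification), and your own probability estimate `p_true`. The function names are hypothetical:

```python
def expected_return(p_true: float, price: float, fee: float = 0.0) -> float:
    """Expected profit per dollar staked on a YES share.

    A share bought at `price` pays 1 if the event happens, so one dollar buys
    1/price shares with expected payout p_true/price. `fee` is a hypothetical
    extra cost per dollar staked (gas, on/off-ramp), subtracted as a flat amount.
    """
    if not 0 < price < 1:
        raise ValueError("price must be strictly between 0 and 1")
    return p_true / price - 1 - fee


def annualize(r: float, months: float) -> float:
    """Convert a return earned over `months` into a compounded yearly rate."""
    return (1 + r) ** (12 / months) - 1
```

For example, someone who (hypothetically) put Biden's chances at 50% and bought at the quoted 34% with a 3% fee would get `expected_return(0.50, 0.34, 0.03)` of about 0.44, i.e. a 44% expected gain over five months, which `annualize(..., 5)` turns into roughly 140% per year. That is an expectation, not a guarantee: it is only "easy" if your estimate is better than the market's.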

I think the FiveThirtyEight model is pretty bad this year. This makes sense to me, because it's a pretty different model: Nate Silver owns the former FiveThirtyEight model IP (and will be publishing it on his Substack later this month), so FiveThirtyEight needed to create a new model from scratch. They hired G. Elliott Morris, whose 2020 forecasts were pretty crazy in my opinion.

Here are some concrete things about FiveThirtyEight's model that don't make sense to me:

- There's only a 30% chance that Pennsylvania, Michigan, or Wisconsin will be the tipping point state. I think that's way too low; I would put this probability around 65%. In general, their probability distribution over which state will be the tipping point state is way too spread out.

- They expect Biden to win by 2.5 points; currently he's down by 1 point. I buy that there will be some movement toward Biden in expectation because of the economic fundamentals, but a 3.5-point swing seems like too much for the average case.

- I think their Voter Power Index (VPI) doesn't make sense. VPI is a measure of how likely a voter in a given state is to flip the entire election. Their VPIs are way too similar. To pick a particularly egregious example...
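For reference, the tipping-point state is usually defined per simulated election: sort the states from the winner's strongest to weakest and find the state whose electoral votes first carry the winner to a majority. Counting that across simulations gives tipping-point probabilities like the ones discussed above. This is a minimal sketch of that definition under toy assumptions (one signed margin per state, no split electoral votes). It is not FiveThirtyEight's code, and the function names are hypothetical:

```python
from collections import Counter
from typing import Mapping, Optional, Sequence


def tipping_point(margins: Mapping[str, float], electoral_votes: Mapping[str, int]) -> Optional[str]:
    """Tipping-point state for one simulated election (None on an exact tie).

    margins[state] > 0 means candidate A carries the state; otherwise B does.
    """
    total = sum(electoral_votes.values())
    needed = total // 2 + 1  # 270 when total == 538
    a_votes = sum(ev for state, ev in electoral_votes.items() if margins[state] > 0)
    if 2 * a_votes == total:
        return None  # electoral-college tie: no tipping point
    a_won = 2 * a_votes > total
    # Order states from the winner's strongest to weakest.
    order = sorted(electoral_votes, key=lambda s: margins[s], reverse=a_won)
    running = 0
    for state in order:
        running += electoral_votes[state]
        if running >= needed:
            return state
    return None  # not reached: the winner's states alone reach `needed`


def tipping_point_probs(sims: Sequence[Mapping[str, float]], electoral_votes: Mapping[str, int]) -> dict[str, float]:
    """Share of simulations in which each state is the tipping point (ties excluded)."""
    counts = Counter(tipping_point(m, electoral_votes) for m in sims)
    counts.pop(None, None)
    return {state: c / len(sims) for state, c in counts.items()}
```

Summing the entries for Pennsylvania, Michigan, and Wisconsin from `tipping_point_probs` gives the kind of combined figure quoted above. The sketch ignores the congressional-district electoral votes of Maine and Nebraska.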


Paid-only Substack posts get you money from people who are willing to pay for the posts, but reduce both (a) views on the paid posts themselves and (b) related subscriber growth (which could in theory drive longer-term profit).

So if two strategies are

- entice users with free posts but keep the best posts behind a paywall

- make the best posts free but put the worst posts behind the paywall

then, regarding (b) above, the second strategy has less risk of prematurely stunting subscriber growth, since the best posts are still free. Regarding (a), it's much less bad to lose view counts on your worst posts.

A third strategy: put the spiciest posts behind a paywall, because you have something to say but don't want the entire internet freaking out about it.

[Book Review] The 8 Mansion Murders by Takemaru Abiko

As a kid I read a lot of the Sherlock Holmes and Hercule Poirot canon. Recently I learned that there's a Japanese genre of honkaku ("orthodox") mystery novels whose gimmick is a fastidious devotion to the "fair play" principles of Golden Age detective fiction, where the author is expected to provide everything that the attentive reader would need to come up with the solution himself. It looks like a lot of these honkaku mysteries include diagrams of relevant locations, genre-savvy characters, and a puzzle-like aesthetic. A bunch have been translated by Locked Room International.

The title of The 8 Mansion Murders doesn't refer to the number of murders, but to murders committed in the "8 Mansion," a mansion designed in the shape of an 8 by the eccentric industrialist who lives there with his family (diagrams show the reader the layout). The book is pleasant and quick—it didn't feel like much over 50,000 words. Some elements feel very Japanese, like the detective's comic-relief sidekick who suffers increasingly serious physical-comedy injuries. The conclusion definitely fits the fair-play genre in that it makes sense, could be...

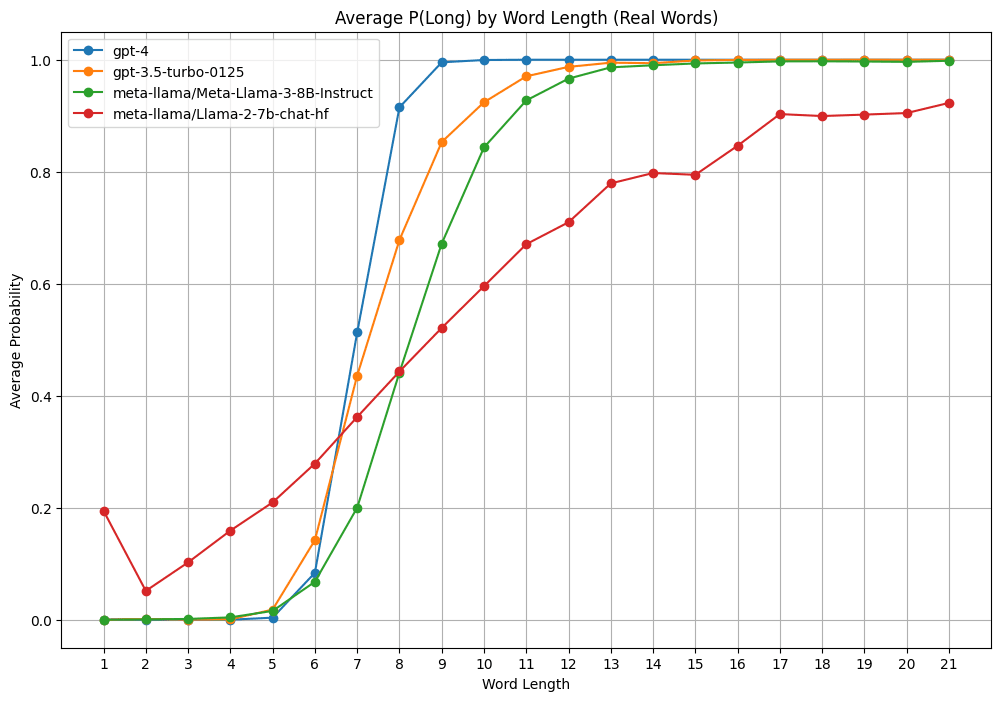

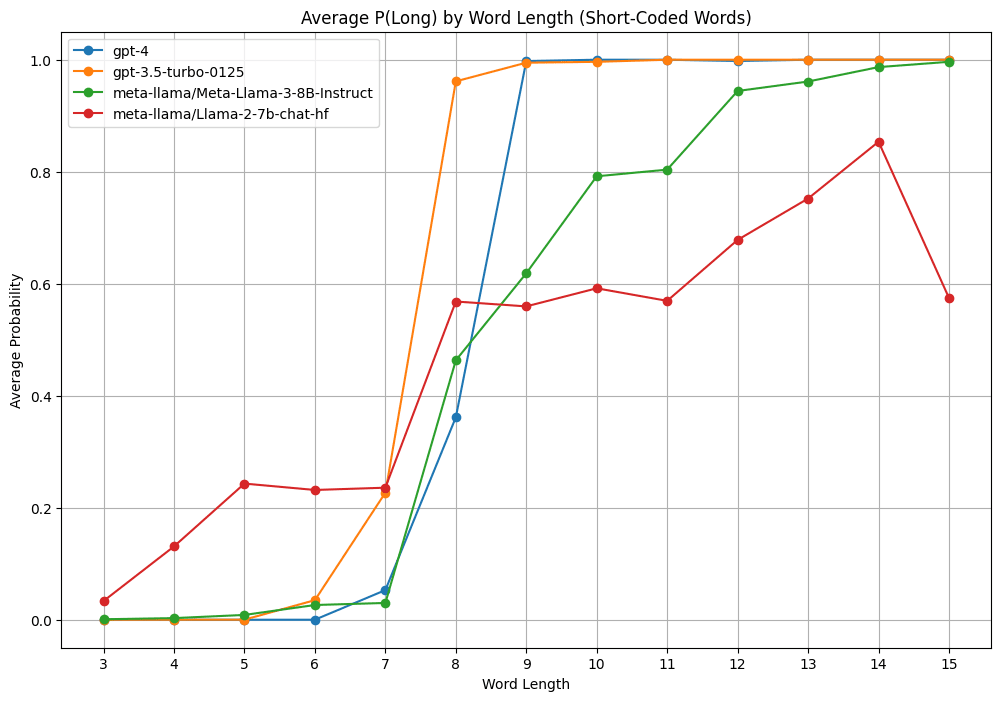

To test out Cursor, for fun, I asked models whether various words of different lengths were "long" and measured the relative probability of "Yes" vs. "No" answers to get a P(long) out of them. But when I use scrambled words of the same length and letter distribution, GPT-3.5 doesn't think any of them are long.

Update: I got Claude to generate many words with connotations related to long ("mile" or "anaconda" or "immeasurable") and short ("wee" or "monosyllabic" or "inconspicuous" or "infinitesimal"). It looks like the models have a slight bias toward the connotation of the word.
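The Yes/No normalization and the scrambled-word control can be sketched as follows. This assumes you already have the log-probabilities the model assigns to the "Yes" and "No" answer tokens (how to request them depends on the API and isn't shown); `p_long` and `scramble` are hypothetical helpers, not the code actually used:

```python
import math
import random


def p_long(logprob_yes: float, logprob_no: float) -> float:
    """Renormalize P(Yes) against P(No), ignoring all other tokens.

    exp(ly) / (exp(ly) + exp(ln)) == 1 / (1 + exp(ln - ly)); the second form
    avoids dividing by zero when both log-probs are very negative.
    """
    return 1.0 / (1.0 + math.exp(logprob_no - logprob_yes))


def scramble(word: str, rng: random.Random) -> str:
    """Shuffle a word's letters: same length, same letter distribution."""
    letters = list(word)
    rng.shuffle(letters)
    return "".join(letters)
```

A shuffle can occasionally produce a real word (or the original word), so a careful control set would filter those out.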

Just flagging that for humans, a "long" word might mean a word that's long to pronounce rather than long to write (i.e., ~number of syllables instead of number of letters).
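To make the two notions concrete, here is a small sketch that measures a word both ways. The syllable counter is a crude vowel-group heuristic chosen for illustration (a hypothetical helper, not a standard library), so expect errors on irregular words:

```python
import re

VOWEL_GROUPS = re.compile(r"[aeiouy]+")


def letter_count(word: str) -> int:
    """Length in letters (ignores hyphens, apostrophes, etc.)."""
    return sum(ch.isalpha() for ch in word)


def rough_syllables(word: str) -> int:
    """Crude English syllable estimate.

    Counts runs of vowels, then drops a silent final 'e' ("mile"), except
    after consonant + 'l' ("table") or in "ee" ("wee").
    """
    w = word.lower()
    count = len(VOWEL_GROUPS.findall(w))
    if w.endswith("e") and count > 1:
        consonant_le = w.endswith("le") and len(w) > 2 and w[-3] not in "aeiouy"
        if not consonant_le and not w.endswith("ee"):
            count -= 1
    return max(count, 1)
```

For example, it counts "monosyllabic" as 12 letters and 5 syllables, but gives "inconspicuous" 4 syllables instead of 5.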

What's the actual probability of casting a decisive vote in a presidential election (by state)?

I remember the Gelman/Silver/Edlin "What is the probability your vote will make a difference?" (2012) methodology:

...1. Let E be the number of electoral votes in your state. We estimate the probability that these are necessary for an electoral college win by computing the proportion of the 10,000 simulations for which the electoral vote margin based on all the other states is less than E, plus 1/2 the proportion of simulations for which the margin based on all other
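The quoted step 1 can be sketched as below, given the simulated electoral-vote margins from all other states. The quote is cut off mid-sentence, so two choices here are assumptions: the margin is taken as the absolute electoral-vote gap between the candidates, and the half-weighted term is assumed to cover simulations where that gap exactly equals E (a tie case). The later steps of the Gelman/Silver/Edlin method are not covered, and the function name is hypothetical:

```python
from typing import Sequence


def prob_state_necessary(other_state_margins: Sequence[int], state_ev: int) -> float:
    """Estimate P(your state's electoral votes are necessary for a win).

    other_state_margins[i]: electoral-vote margin from all *other* states in
    simulation i (sign ignored; only the size of the gap matters).
    state_ev: E, the number of electoral votes in your state.
    Assumption: margins exactly equal to E count half, as a tie case.
    """
    n = len(other_state_margins)
    below = sum(1 for m in other_state_margins if abs(m) < state_ev)
    equal = sum(1 for m in other_state_margins if abs(m) == state_ev)
    return (below + 0.5 * equal) / n
```

With the 10,000 simulations mentioned in the quote, `other_state_margins` would have 10,000 entries.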

Is this argument about determinism and moral judgment flawed?

1. If determinism is true, then whatever can be done actually is done. (Definition)

2. Whatever should be done, can be done. (Well-known "ought implies can" principle)

3. If determinism is true, then whatever ought to be done actually is done. (From 1, 2)

The context is that it appears to me that people reject determinism largely because they're committed to certain moral positions that are incompatible with determinism. Perhaps I will write a longer post about this.

hmm, I think the argument isn't valid:

- The "can" in Line 2 refers to logical possibility.

  - At least, I think that's true of Kant's "ought implies can" principle.

- The "can" in Line 1 refers to physical possibility.

- The argument is valid only if the two "can"s refer to the same modality.

You could replace the "can" in Line 1 with logical possibility, and then the argument would be valid. The view that whatever can logically be done actually is done is called necessitarianism. It's pretty fringe.

Alternatively, you could replace the "can" in Line 2 with physical possibility, and then the argument would be valid. I don't know if that view has a name; it seems pretty implausible.
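In symbols, with notation chosen here purely for illustration ($D$ for determinism, $O\,p$ for "$p$ ought to be done", and $\Diamond_P$, $\Diamond_L$ for physical and logical possibility), the argument as read in this reply is:

$$
\begin{aligned}
&\text{(1)}\quad D \rightarrow (\Diamond_P\, p \rightarrow p) \\
&\text{(2)}\quad O\,p \rightarrow \Diamond_L\, p \\
&\text{(3)}\quad D \rightarrow (O\,p \rightarrow p)
\end{aligned}
$$

Since $\Diamond_P\, p$ entails $\Diamond_L\, p$ but not conversely, (1) and (2) do not chain into (3). Making both operators $\Diamond_L$ (necessitarianism) or both $\Diamond_P$ closes the gap, which are exactly the two repairs described above.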

This man's modus ponens is definitely my modus tollens. It seems super cursed to use moral premises to answer metaphysics problems. In this argument, except for step 8, you can replace belief in free will with anything, and the argument says that determinism implies that any widely held belief is true.

"Ought implies can" should be something that's true by construction of your moral system, rather than something you can just assert about an arbitrary moral system and use to derive absurd conclusions.

Interesting Twitter post from some time ago about a book called The Generals, on accountability culture. (It's hard to find the original, since Twitter search doesn't work for Tweets over the Tweet limit, but I think it's from Ceb. K.)

...On the day Germany invaded Poland, Marshall was appointed Army Chief of Staff. At the time, the US Army was smaller than Bulgaria’s—just 100,000 poorly-equipped and poorly-organized active personnel—and he bluntly described them as eg “not even third-rate.” By the end of World War II, he had grown it 100-fold, and modernized it far

Could someone explain how Rawls's veil of ignorance justifies the kind of society he supports? (To be clear, I have an SEP-level understanding and wouldn't be surprised if I'm misunderstanding him.)

It seems to fail at every step individually:

- At best, the support of people in the original position (OP) provides necessary but probably insufficient conditions for justice, unless he refutes all the other proposed conditions involving whatever rights, desert, etc.

- And really the conditions of the OP are actively contrary to good decision-making, e.g. you don't know your particular co

Quick Take: People should not say the word "cruxy" when the word "crucial" already exists. | Twitter

Crucial sometimes just means "important" but has a primary meaning of "decisive" or "pivotal" (it also derives from the word "crux"). This is what's meant by a "crucial battle" or "crucial role" or "crucial game (in a tournament)" and so on.

So if Alice and Bob agree that Alice will work hard on her upcoming exam, but only Bob thinks that she will fail her exam—because he thinks that she will study the wrong topics (h/t @Saul Munn)—then they might have ...