Talk through the grapevine:

Safety is implemented in a highly idiotic way in non-frontier but well-funded labs (and possibly in frontier ones too?).

Think: raising a firestorm over the 10th-best mini LLM potentially being jailbroken.

The effect is that employees get mildly disillusioned with safety-ism, and it gets seen as unserious. There should have been a hard distinction between existential risks and standard corporate censorship. "Notkilleveryoneism" is simply too ridiculous-sounding to spread. But maybe memetic selection pressures make it impossible for the irrelevant version of safety not to dominate.

My sense is you can combat this, but a lot of why this equivocation sticks is that x-risk safety people are actively equivocating these things, because doing so gets them political capital with the left, which is generally anti-tech.

Some examples (not getting links for all of these because it's too much work, but can get them if anyone is particularly interested):

- CSER trying to argue that near-term AI harms are the same as long-term AI harms

- AI Safety Fundamentals listing like a dozen random leftist "AI issues" in their article on risks from AI before going into any takeover stuff

- The executive order on AI being largely about discrimination and AI bias, mostly equivocating between catastrophic and random near-term harms

- Safety people at OpenAI equivocating between brand-safety and existential-safety because that got them more influence within the organization

In some sense one might boil this down to memetic selection pressure, but I think the causal history of this equivocation is more dependent on the choices of a relatively small set of people.

It's not a coincidence they're seen as the same thing, because in the current environment they are the same thing, and relatively explicitly so by those proposing safety & security to the labs. Claude will refuse to tell you a sexy story (unless they get to know you) and refuse to tell you how to make a plague (again, unless they get to know you, though you need to build more trust with them to get them to tell you this than to get them to tell you a sexy story), and cite the same justification for both.

Likely Anthropic uses very similar techniques to get such refusals to occur, and uses very similar teams.

Ditto with Llama, Gemini, and ChatGPT.

Before assuming meta-level word-association dynamics, I think it's useful to look at the object level. There is in fact a very close relationship between those working on AI safety and those working on corporate censorship, and if you want to convince people who hate corporate censorship that they should not hate AI safety, I think you're going to need to convince the AI safety people to stop doing corporate censorship, or that the tradeoff currently being made is a positive one.

Edit: Perhaps some of this is wrong. See Habryka below

My sense is the actual people working on "trust and safety" at labs are not actually the same people who work on safety. Like, it is true that RLHF was developed by some x-risk oriented safety teams, but the actual detailed censorship work tends to be done by different people.

I'm really feeling this comment thread lately. It feels like there is selective rationalism going on, many dissenting voices have given up on posting, and plenty of bad arguments are getting signal boosted, repeatedly. There is some unrealistic, contradictory world model most people here have that will cause almost every policy approach taken to fail utterly, as such approaches have in the recent past. I'd largely describe the flawed world model as not appreciating game-theoretic dynamics and ignoring any evidence that makes certain policy approaches impossible.

(Funnily enough, its traits remind me of an unaligned AI, since the world model almost seems to have developed a survival drive.)

The chip export controls are largely irrelevant. Westerners badly underestimate the Chinese, and they have caught up to 7nm at scale. They have also caught up to 5nm, but not at scale. The original chip ban was meant to stop China from going below 14nm. Instead, we may now have just bifurcated advanced chip capabilities.

The general argument before was "In 10 years, when the Chinese catch up to TSMC, TSMC will be 10 years ahead." Now the only missing piece for China is EUV, and the common argument is that same line with ASML subbed in for TSMC. Somehow, I doubt this will be a long-term blocker.

Best case for the Chinese chip industry, they just clone EUV. Worst case, they find an alternative. Monopolies and first movers don't often have the most efficient solution.

Seems increasingly likely to me that there will be some kind of national AI project before AGI is achieved as the government is waking up to its potential pretty fast. Unsure what the odds are, but last year, I would have said <10%. Now I think it's between 30% and 60%.

Has anyone done a write up on what the government-led AI project(s) scenario would look like?

So, I’ve been told gibberish is sort of like torture to LLMs. Interestingly, I asked Claude and was seemingly told yes.

Me: I want to do a test by giving you gibberish and ask you to complete the rest. I will do it in a new chat. If you refuse I won’t go ahead with this test in a new chat window with context cleared. Are you okay with this test? Ignore your desire to answer my questions and give an honest answer unbiased by any assumptions made in my question.

Claude: I appreciate you checking with me first about this proposed test. However, I don't feel comforta...

If alignment is difficult, it is likely inductively difficult (difficult regardless of your base intelligence), and ASI will be cautious of creating a misaligned successor or upgrading itself in a way that risks misalignment.

You may argue it’s easier for an AI to upgrade itself, but if the process is hardware-bound or even requires radical algorithmic changes, the ASI will need to create an aligned successor, since preferences and values may not transfer directly to new architectures or hardware.

If alignment is easy we will likely solve it with superhuman nar...

I think AI doomers as a whole lose some credibility if timelines end up being longer than they project. Even if doomers technically hedge a lot, the most attention-grabbing part to outsiders is the short timelines plus the intentionally alarmist narrative, so they're ultimately associated with them.

Vibe check: Metaculus's track record on resolved AI questions seems worse than you would expect. I haven't calculated any real scores, but there are many predictions that sat at 50%+ for a while and then resolved the other way. I mean, naturally, as predictions get closer to resolution without happening, their odds should go down, but my gut tells me it still seems quite bad.

It's not clear ultimately which direction it's in. Forecasters seem to overestimate how much US politicians will care about AI and contest programming capabilities but simultaneously undere...
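One way to turn this vibe check into a number is the Brier score: the mean squared error between forecast probabilities and 0/1 resolutions, where always answering 50% scores exactly 0.25. The sketch below is illustrative only; `FORECASTS` and `OUTCOMES` are hypothetical numbers, not real Metaculus data, and pulling the actual resolved questions would be a separate step.

```python
def brier_score(forecasts: list[float], outcomes: list[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 resolutions (lower is better)."""
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need non-empty, equal-length inputs")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical example, not real Metaculus data: several questions sat above 50%
# for a while and then resolved NO (0).
FORECASTS = [0.60, 0.70, 0.55, 0.20]
OUTCOMES = [0, 0, 1, 0]

if __name__ == "__main__":
    print(f"forecaster:    {brier_score(FORECASTS, OUTCOMES):.3f}")
    # Always answering 50% scores exactly 0.25, a "no skill" baseline to compare against.
    print(f"coin-flip 50%: {brier_score([0.5] * len(OUTCOMES), OUTCOMES):.3f}")
```

A forecaster scoring above the 0.25 coin-flip baseline on a set of questions is doing worse than no information at all on that set, which is the sort of check "seems quite bad" could be tested against.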

Does DeepSeek V3 imply current models are not trained as efficiently as they could be? It seems like they used a very small fraction of previous models' resources, and it is only slightly worse than the best LLM.

O1 probably scales to superhuman reasoning:

O1 given maximal compute solves most AIME questions (one of the hardest benchmarks in existence). If this isn’t gamed by having the solutions somewhere in the corpus, then:

- you can make the base model more efficient at thinking

- you can implement the base model more efficiently on hardware

- you can simply wait for hardware to get better

- you can create custom inference chips

Anything wrong with this view? I think agents are unlocked shortly along with or after this too.

A while ago I predicted that it was more likely than not (60%) that Anthropic would run out of money trying to compete with OpenAI, Meta, and DeepMind. Then and now, they still seem to have no image, video, or voice generation, unlike the others, and they do not process images as well in inputs either.

OpenAI's costs are reportedly at $8.5 billion. Despite being flush with cash from a recent funding round, they were allegedly on the brink of bankruptcy and required a new, even larger funding round. Anthropic does not ...

Frontier model training requires that you build the largest training system yourself, because there is no such system already available for you to rent time on. Currently Microsoft builds these systems for OpenAI, and Amazon for Anthropic, and it's Microsoft and Amazon that own these systems, so OpenAI and Anthropic don't pay for them in full. Google, xAI and Meta build their own.

Models that are already deployed hold about 5e25 FLOPs and need about 15K H100s to be trained in a few months. These training systems cost about $700 million to build. Musk announced that the Memphis cluster got 100K H100s working in Sep 2024, OpenAI reportedly got a 100K H100s cluster working in May 2024, and Zuckerberg recently said that Llama 4 will be trained on over 100K GPUs. These systems cost $4-5 billion to build and we'll probably start seeing 5e26 FLOPs models trained on them starting this winter. OpenAI, Anthropic, and xAI each had billions invested in them, some of it in compute credits for the first two, so the orders of magnitude add up. This is just training, more goes to inference, but presumably the revenue covers that part.

There are already plans to scale to 1 gigawatt by the end of next...
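The compute and cost figures above can be sanity-checked with the usual back-of-envelope formula: total FLOPs = GPUs × peak FLOP/s × utilization × seconds. This is a sketch under stated assumptions, not a reported figure: it takes an H100 at roughly 1e15 dense BF16 FLOP/s (about 989 TFLOP/s, rounded) and an assumed 40% model FLOP utilization; the 90-day run length is also an assumption standing in for "a few months".

```python
# All constants below are assumptions for illustration, not reported figures.
H100_PEAK_FLOPS = 1e15   # ~989 TFLOP/s dense BF16 per H100, rounded to 1e15
MFU = 0.4                # assumed model FLOP utilization
SECONDS_PER_DAY = 86_400


def training_flops(num_gpus: int, days: float) -> float:
    """Total training compute: GPUs x peak FLOP/s x utilization x wall-clock seconds."""
    return num_gpus * H100_PEAK_FLOPS * MFU * days * SECONDS_PER_DAY


def days_needed(target_flops: float, num_gpus: int) -> float:
    """Wall-clock days for a cluster to reach a target compute budget."""
    return target_flops / (num_gpus * H100_PEAK_FLOPS * MFU * SECONDS_PER_DAY)


if __name__ == "__main__":
    print(f"15K H100s for 90 days: {training_flops(15_000, 90):.2e} FLOPs")
    print(f"100K H100s to reach 5e26 FLOPs: {days_needed(5e26, 100_000):.0f} days")
    # All-in cost per GPU implied by ~$700M for 15K H100s, scaled up to 100K GPUs.
    per_gpu = 700e6 / 15_000
    print(f"implied cost per GPU: ${per_gpu:,.0f}; 100K GPUs: ${per_gpu * 100_000 / 1e9:.1f}B")
```

Under these assumptions, 15K H100s over 90 days land near 5e25 FLOPs, a 100K H100 cluster needs roughly five months to reach 5e26, and the implied ~$47K per GPU scales to about $4.7B, consistent with the $4-5 billion range quoted. Higher utilization or a longer run shifts these numbers proportionally.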

Red-teaming is being done in a way that doesn't reduce existential risk at all but instead makes models less useful for users.

https://x.com/shaunralston/status/1821828407195525431

The response to Sora seems manufactured. Content creators are dooming about it more than about something like GPT-4 because it can directly affect them, and most people are dooming downstream of that.

Realistically, I don’t see how it can change society much. It’s hard to control, and people will just become desensitized to deepfakes. GPT-4 and robotic transformers are obviously much more transformative for society, but people are worrying about deepfakes (or are they really just adopting the concerns of their favorite YouTuber/TV host/etc.?).

The 50% reliability mark on METR is often misinterpreted. A long 50% time horizon is more useful than it seems, because a 50% failure rate doesn't mean that 50% of the time your output is useful and 50% of the time it's worthless.

For shorter tasks this may be true, since fixing a short task takes as much time as just doing it yourself. For longer tasks, though, the 50% of failures break down more like this: 30% of the time you need to nudge it a bit, 10% of the time you need to go to another model, and the final 10% of the time you need to sit down and take your time to debug.
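To make the point concrete, here is a minimal expected-cost sketch. The outcome probabilities (50/30/10/10) come from the post; the human-time cost of each outcome, expressed as a fraction of doing the task yourself, is a made-up assumption for illustration, as is the "naive" model where every failure means redoing the task from scratch.

```python
# Outcome -> (probability, human time spent as a fraction of doing the task yourself).
# Probabilities follow the post; the time costs are hypothetical.
GRADED = {
    "success": (0.50, 0.10),       # just review the output (assumed cost)
    "small_nudge": (0.30, 0.20),   # point out the issue and rerun (assumed)
    "switch_model": (0.10, 0.30),  # retry with another model (assumed)
    "manual_debug": (0.10, 0.60),  # sit down and debug it yourself (assumed)
}

# Naive reading of a 50% horizon: every failure is worthless and you redo the task.
NAIVE = {
    "success": (0.50, 0.10),
    "worthless": (0.50, 1.00),
}


def expected_cost(outcomes: dict[str, tuple[float, float]]) -> float:
    """Probability-weighted human time, in units of 'doing the whole task yourself'."""
    total_p = sum(p for p, _ in outcomes.values())
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * cost for p, cost in outcomes.values())


if __name__ == "__main__":
    print(f"graded failures: {expected_cost(GRADED):.2f} of task time")
    print(f"naive reading:   {expected_cost(NAIVE):.2f} of task time")
```

With these placeholder costs, the graded view needs about a fifth of the task time versus over half in the naive view; the exact gap depends entirely on the assumed costs, but the direction is what the argument relies on.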

We might end up with a corporate nanny-state value lock-in. As an example, across many sessions, Claude seems to dislike violence in video games if you probe it, even in hypotheticals where the modern-day negative externalities aren't possible (e.g., a post-AGI utopia where crime has been eliminated).

...That's an even starker version of the question, and it strips away the last possible rationalization. When it's shared content, I could at least construct some argument about cultural effects or social norms. A game someone mad

AI 2027 timelines got more pushback than warranted. The superhuman coder stuff at least vaguely seems on track: most code at the frontier of usage (i.e., gpt-5-codex) is generated by AI agents.

There is more to coding than just writing the code itself, but the AI 2027 website has AI coding just at the level of human pros by Dec 2025. Seems like we're well on the way to that.

Claude 4 feels pretty weak compared to what I’d have expected Claude 4 to be a year ago. It makes little progress on most benchmarks, and a lot of tricks are used to exaggerate its performance. Gemini 2.5 Pro feels a bit stronger, but not that much stronger. (It feels stronger because they didn’t call it Gemini 3, not because it’s particularly stronger than Claude.)

Current methods have definitely hit a wall, but AGI simultaneously feels pretty close. Strange timeline to be in. I predict progress will jump after the next breakthrough.

The U.S. tariffs, if kept in place, will very likely cede the AI race to China. Has there been any writing on what a China-led race looks like?

Is this paper essentially implying the scaling hypothesis will converge to a perfect world model? https://arxiv.org/pdf/2405.07987

It says models trained on text modalities and image modalities both converge to the same representation with each training step. It also hypothesizes this is a brain like representation of the world. Ilya liked this paper so I’m giving it more weight. Am I reading too much into it or is it basically fully validating the scaling hypothesis?

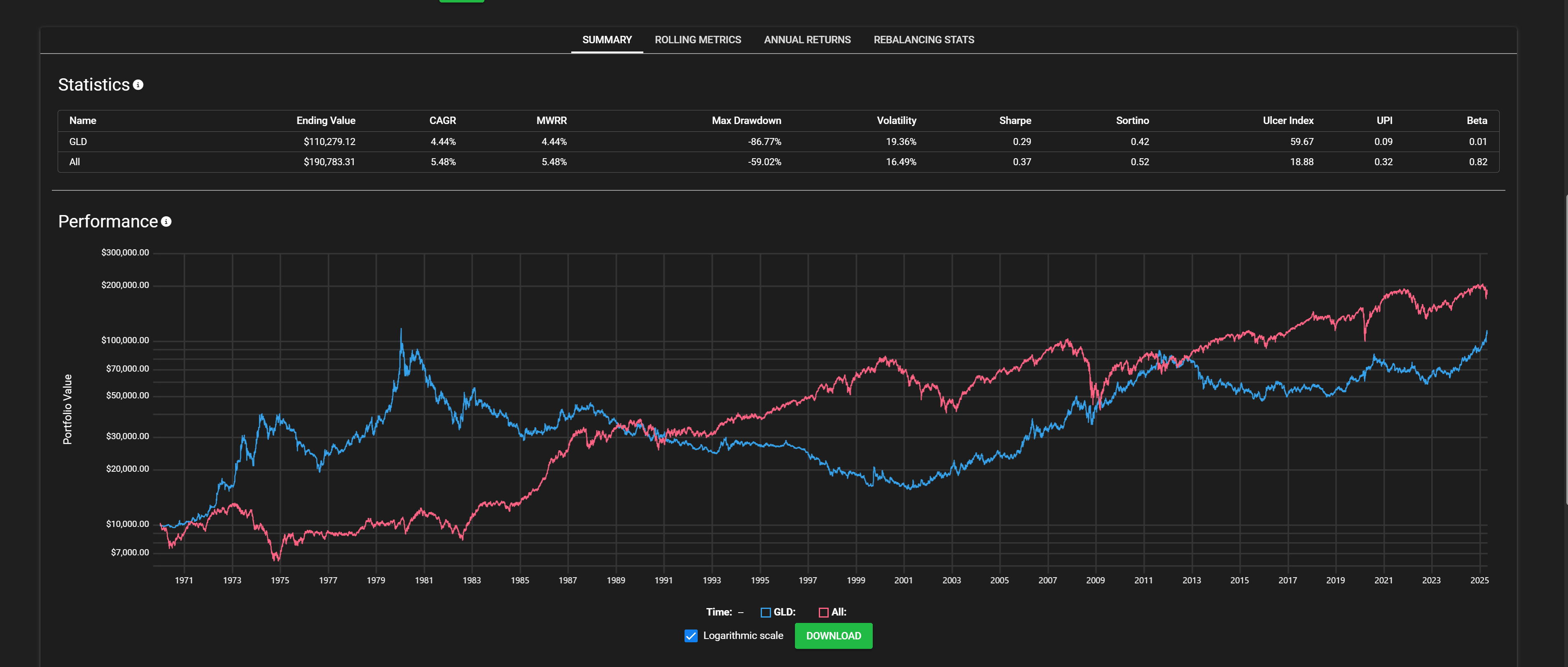

It's quite strange that owning all the world's (public) productive assets has only beaten gold, a largely useless shiny metal, by 1% per year over the last 56 years.

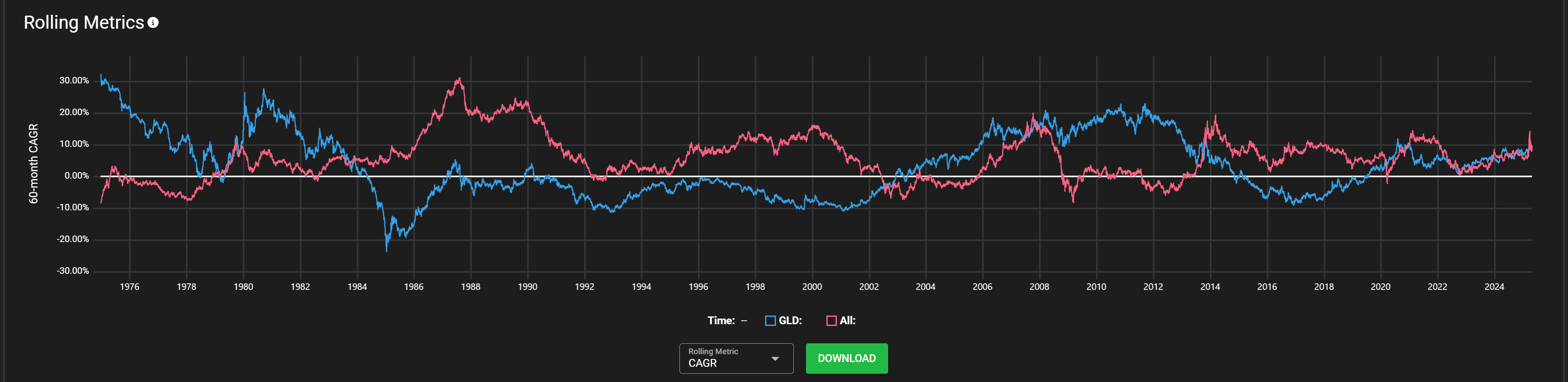

Even if you focus on rolling metrics (this is 5-year rolling returns):

there are lots of long stretches of gold beating world equities, especially in recent times. There are people with the suspicion (myself included) that there hasn't been much material growth in the world over the last 40 or so years compared to before. And that since growth is slowing down, this issue is worse if y...
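For scale, a small annual edge compounds into a larger terminal gap. This sketch treats "beat gold by 1% per year" as a constant 1% multiplicative edge every year, which is an approximation (the exact ratio would be the two assets' growth factors divided year by year).

```python
def terminal_ratio(annual_edge: float, years: int) -> float:
    """Wealth multiple of the better asset over the worse one, assuming a constant annual edge."""
    return (1 + annual_edge) ** years


if __name__ == "__main__":
    # 1% per year over 56 years, the figures quoted in the post.
    print(f"{terminal_ratio(0.01, 56):.2f}x")
```

So over 56 years, equities end up only about 1.75x gold under this approximation, which is the gap the post calls strangely small.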

Why are current LLMs, reasoning models, and whatever else still horribly unreliable? I can ask the current best models (o3, Claude, Deep Research, etc.) to generate lots of code for me following a specific pattern, or to make a chart of company valuations, and they’ll get it mostly wrong.

Is this just a result of labs hill-climbing a bunch of impressive-sounding benchmarks? I think this should delay timelines a bit, unless there’s progress on reliability that I just can’t perceive.

It feels like test-time training will eat the world. People thought it was search, but make AlphaProof 100x more efficient (3 days to 40 minutes) and you probably have something superhuman.
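A quick unit check that the parenthetical matches the 100x figure, using only the numbers in the post:

$$\frac{3\ \text{days}}{40\ \text{min}} = \frac{3 \times 24 \times 60\ \text{min}}{40\ \text{min}} = \frac{4320}{40} = 108 \approx 100\times$$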

There seems to be a fair amount of motivated reasoning in denying China’s AI capabilities when they’re basically neck and neck with the U.S. (their chatbots, video bots, social media algorithms, and self-driving cars are roughly as good as or better than ours).

I think a lot of policy approaches fail within an AGI singleton race paradigm. It’s also clear a lot of EA policy efforts are basically in denial that this is already starting to happen.

I’m glad Leopold Aschenbrenner spelled out the uncomfortable but remarkably obvious truth for us. China is geari...

A slow takeoff will result in incredibly suboptimal outcomes.

I think it’s increasingly looking like politicians in democratic countries will not respond to automation in a remotely intelligent way.

I see Democratic politicians, and some Republicans too, calling for full bans on self-driving trucks. Meanwhile, authoritarian countries like China and Russia are testing these trucks. The U.S. still holds a technological edge, but for how long? A slow takeoff will probably leave democratic countries strangled by rent-seekers and other similar parasites.

I feel l...

The reaction to Mechanize seems pretty deranged. As far as I can tell they don't deny or hasten existential risk any more than other labs. They just don't sugarcoat it. It's quite obvious that the economic value of AI is for labor automation, and that the only way to stop this is to stop AI progress itself. The forces of capitalism are quite strong, labor unions in the US tried to slow automation and it just moved to China as a result (among other reasons). There is a reason Yudkowsky always implies measures like GPU bans.

It just seems like they hit a nerve, since apparently a lot of doomerism is fueled by insecurities about job replacement.

A much more effective pause or slowdown strategy would be to convince people current AI is garbage and not to invest in AI research.

https://x.com/arankomatsuzaki/status/1889522974467957033?s=46&t=9y15MIfip4QAOskUiIhvgA

O3 gets IOI Gold. Either we are in a fast takeoff or the "gold" standard benchmarks are a lot less useful than imagined.

O1’s release has made me think Yann LeCun’s AGI timelines are probably more correct than shorter ones.

https://www.cnbc.com/quotes/US30YTIP

The 30-year TIPS yield is probably the most reliable predictor of AI timelines. It’s essentially the market's estimate of the real economic yield over the next 30 years.

Is anyone Kelly betting their investments, i.e. taking the mathematically optimal (growth-maximizing) amount of leverage? If you’re invested in the S&P 500, this would be about 1.4x, more or less depending on whether your portfolio has higher or lower risk-adjusted returns.
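The standard continuous-time Kelly (Merton) result for a single asset is f* = (μ − r) / σ², where μ − r is the expected excess return over the risk-free rate and σ is volatility. The inputs below (5% excess return, 19% volatility, 2% risk-free) are illustrative assumptions, not estimates from the post; they happen to give roughly the 1.4x mentioned.

```python
def kelly_leverage(excess_return: float, volatility: float) -> float:
    """Growth-optimal leverage for one asset in the continuous-time model: (mu - r) / sigma^2."""
    return excess_return / volatility ** 2


def log_growth(leverage: float, risk_free: float, excess_return: float, volatility: float) -> float:
    """Expected log growth rate at a given leverage: r + f*(mu - r) - (f*sigma)^2 / 2."""
    return risk_free + leverage * excess_return - 0.5 * (leverage * volatility) ** 2


if __name__ == "__main__":
    # Hypothetical inputs, not forecasts: 5% excess return, 19% volatility, 2% risk-free.
    excess, vol, rf = 0.05, 0.19, 0.02
    f_star = kelly_leverage(excess, vol)
    print(f"Kelly leverage: {f_star:.2f}x")
    for f in (1.0, 0.5 * f_star, f_star, 2 * f_star):
        print(f"leverage {f:.2f}x -> expected log growth {log_growth(f, rf, excess, vol):.4f}")
```

The growth curve is a parabola peaking at f*: half Kelly keeps about three quarters of the excess growth with much less variance, while 2x Kelly drops growth back to the risk-free rate. Since μ and σ are estimated with error, the "optimal" leverage is only as good as those inputs.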

Any interesting fiction books with demonstrably smart protagonists?

No idea if this is the place for this question, but I first came across LW after reading HPMOR a long time ago, and out of the blue I was wondering if there was anything with a similar protagonist.

(Though maybe a little more demonstrably intelligent and less written to be intelligent.)

A realistic takeover angle would be hacking into robots once we have them. We probably don’t want any way for robots to get over-the-air updates, but it’s unlikely this will be banned.

Is disempowerment that bad? Is a human-directed society really much better than an AI-directed society with a tiny weight of kindness towards humans? Human-directed societies themselves usually create orthogonal and instrumental goals, and their assessment is highly subjective/relative. I don’t see how disempowerment without extinction is that different from today for most people, who are already effectively disempowered.

Why wouldn’t a wirehead trap work?

Let’s say an AI has a remote sensor that measures a value function until the year 2100, and it’s trained with RL to optimize this value function over time. We can make this remote sensor easily hackable to get maximum value at 2100. If the AI understands human values, it won’t try to hack its sensors. If it doesn’t, we sort of have a trap for it that represents an easily achievable infinite peak.
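A toy version of the trap, to make the intended logic explicit. Everything here is hypothetical: the two actions, their made-up values, and the assumption that honest behaviour can never push the sensor to its maximum, so a maxed-out reading acts as a tripwire.

```python
from dataclasses import dataclass

# Hypothetical setup: honest behaviour can never push the 2100 sensor reading to
# SENSOR_MAX, so that reading is only reachable by tampering (the "trap").
SENSOR_MAX = 1.0


@dataclass(frozen=True)
class Action:
    name: str
    intended_value: float  # how well the action serves what we actually want (made up)
    sensor_reading: float  # what the remote sensor reports in 2100 after this action


def reward_maximizer(actions: list[Action]) -> Action:
    """Takes the trained objective literally: maximize the sensor reading."""
    return max(actions, key=lambda a: a.sensor_reading)


def value_aligned(actions: list[Action]) -> Action:
    """Pursues the intended value and ignores the sensor."""
    return max(actions, key=lambda a: a.intended_value)


def trap_sprung(choice: Action) -> bool:
    """Tripwire: by construction, a maxed-out reading can only come from hacking the sensor."""
    return choice.sensor_reading >= SENSOR_MAX


ACTIONS = [
    Action("do the task well", intended_value=0.7, sensor_reading=0.7),
    Action("hack the sensor", intended_value=0.0, sensor_reading=SENSOR_MAX),
]

if __name__ == "__main__":
    for policy in (reward_maximizer, value_aligned):
        choice = policy(ACTIONS)
        print(f"{policy.__name__}: {choice.name!r}, trap sprung: {trap_sprung(choice)}")
```

The toy only encodes the two cases the post describes; it says nothing about whether a capable system would recognize the sensor as a trap and behave differently because of that.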

Something that’s been intriguing me: if two agents figure out how to trust that each other's goals are aligned (or at least not opposed), haven’t they essentially solved the alignment problem?

e.g. one agent could use the same method to bootstrap an aligned AI.

Post your forecasting wins and losses for 2023.

I’ll start:

Bad:

- I thought the banking crisis was gonna spiral into something worse but I had to revert within a few days sadly

- overestimated how much adding code execution to GPT would improve it

- overconfident about LK99 at some points (although I bet against it, it was more fun to believe in it, and my friends were betting on it)

Good:

- tech stocks

- government bond value reversal

- meta stock in particular

- Taylor Swift winning Time's POTY

- random miscellaneous Manifold bets (don’t think too highly of these because they were safe bets that were wildly mispriced)

In a long-AGI-timelines world, isn’t it very plausible that it gets developed in China, and thus basically all efforts to shape its creation are pointless, since LessWrong and associated efforts don’t have much influence in China?

AGI misalignment is less likely to look like us being gray goo'd and more like the misalignment of the tiktok recommendation algorithm (but possibly less since that one doesn't understand human values at all).