Is this just a semantic quibble, or are you saying there's fundamental similarities between them that are relevant?

I'm not tailcalled, but yeah, it being (containing?) a transformer does make it pretty similar architecturally. Autoregressive transformers predict one output (e.g. a token) at a time. But lots of transformers (like some translation models) are sequence-to-sequence, so they take in a whole passage and output a whole passage.

There are differences, but iirc it's mostly non-autoregressive transformers having some extra parts that autoregressive ones don't need. Lots of overlap though. More like a different breed than a different species.

Agreed. I highly recommend this blog post (https://sander.ai/2024/09/02/spectral-autoregression.html) for concretely understanding why autoregressive and diffusion models are so similar, despite seeming so different.

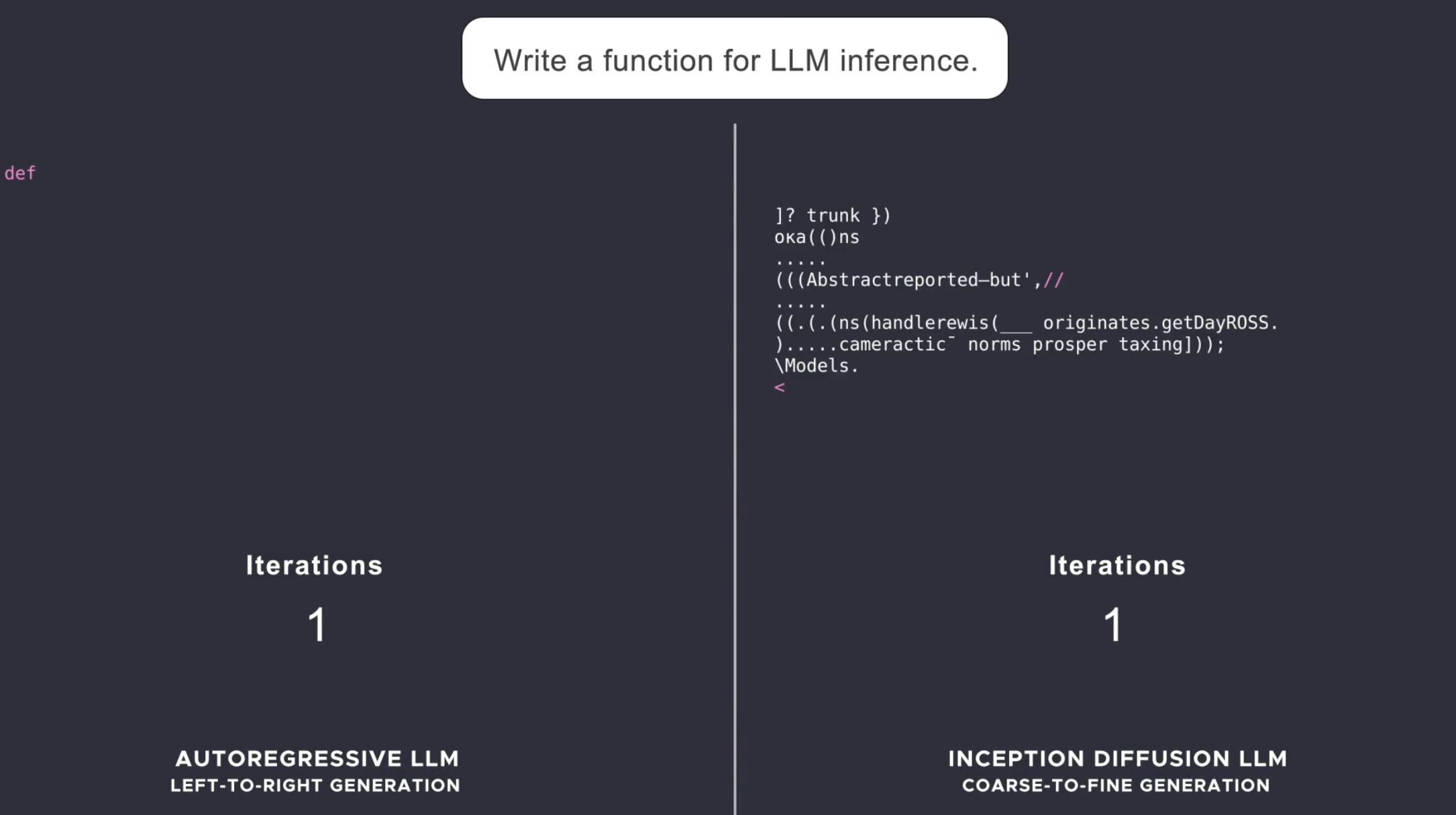

I disagree. In practice diffusion models are autoregressive for generating non-trivial amounts of text. A better way to think about diffusion models is that they are a generalization of multi-token prediction (similar to how DeepSeek does it) where the number of tokens you get to predict in 1 shot is controllable and steerable. If you do use a diffusion model over a larger generation you will end up running it autoregressively, and in the limit you could make them work like a normal 1-token-at-a-time LLM or do up to 1-big-batch-of-N-tokens at a time.

Yeah, the thing they aren't is transformers.

EDIT: I stand corrected, I tended to think of diffusion models as necessarily a classic series of convolution/neural network layers but obviously that's just for images and there's no reason to not use a transformer approach instead, so I realise the two things are decoupled, and what makes a diffusion model is its training objective, not its architecture.

You can train transformers as diffusion models (example paper), and that’s presumably what Gemini diffusion is.

Fair, you can use the same architecture just fine instead of simple NNs. It's really a distinction between what's your choice of universal function approximator vs what goal you optimise it against I guess.

the thing they aren't is one-step crossentropy. that's it, everything else is presumably sampled from the same distribution as existing LLMs. (this is like if someone finally upgraded BERT to be a primary model).

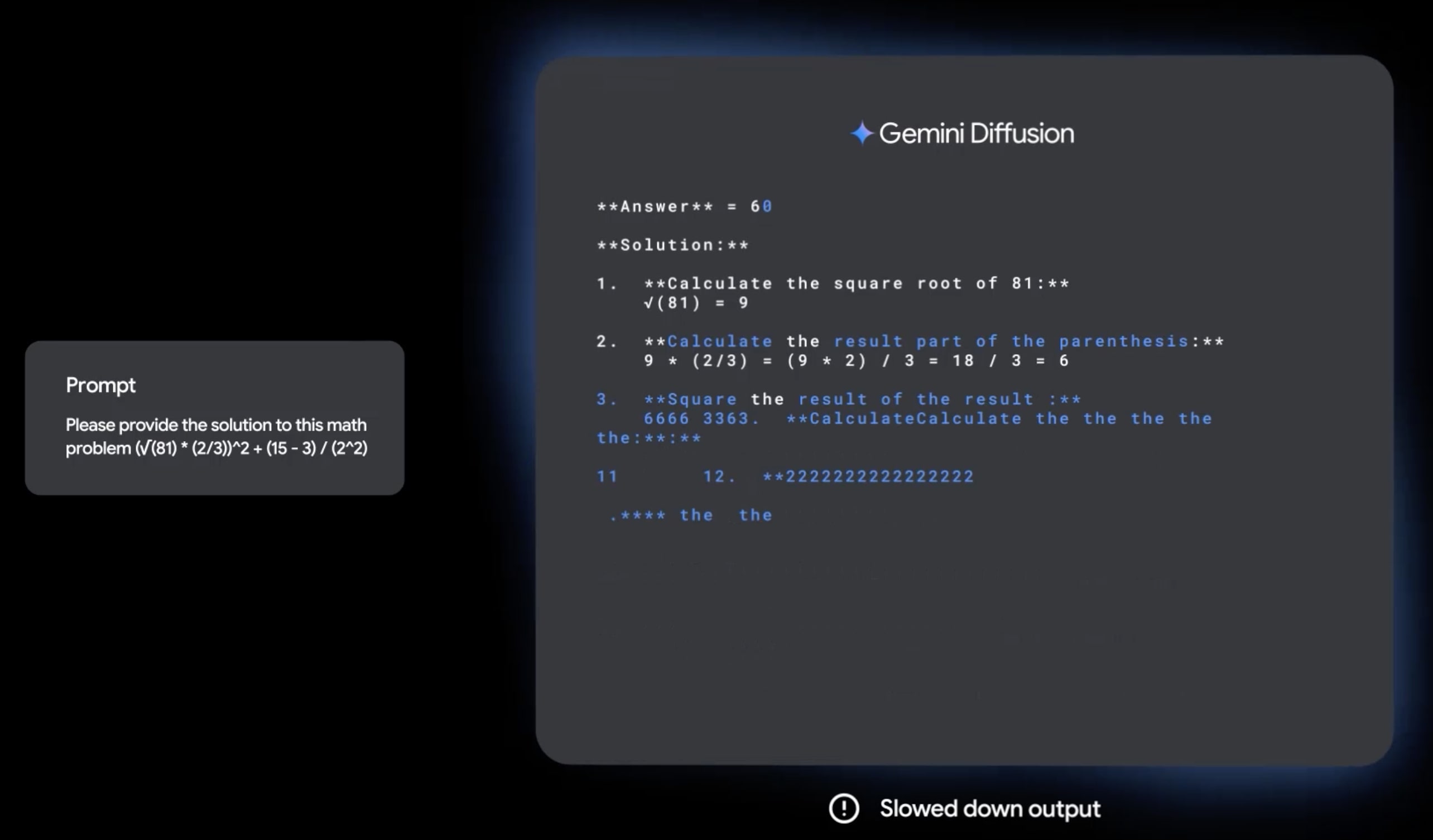

The results seem very interesting, but I'm not sure how to interpret them. Comparing the generations videos from this and Mercury, the starting text from each seems very different in terms of resembling the final output:

Unless I'm missing something really obvious about these videos or how diffusion models are trained, I would guess that DeepMind fine-tuned their models on a lot of high-quality synthetic data, enough that their initial generations already match the approximate structure of a model response with CoT. This would partially explain why they seem so impressive even at such a small scale, but would make the scaling laws less comparable to autoregressive models because of how much high-quality synthetic data can help.

True, and then it wouldn't be an example of the scaling of diffusion models, but the of distillation from a scaled up autoregressive LLM.

I got the model up to 3,000 tokens/s on a particularly long/easy query.

As an FYI, there has been other work on large diffusion language models, such as this: https://www.inceptionlabs.ai/introducing-mercury

Yep, I know about earlier work, but I think that one of the top 3 labs taking this seriously is a big sign.

- I am afraid that diffusion-based models will be far more powerful than the LLMs trained by similar compute and on a similar amount of data, especially in generating a new and diverse set of ideas, which is vitally important[1] in automating the research. While LLMs are thought to become comparable with scatterbrained employees who thrive under careful management, diffusion-based models seem to work more like human imagination. See also the phenomena where humans dream of ideas.

- Unfortunately, as Peter Barnett remarks, there isn't a clear way to get a (faithful) Chain-of-Thought from diffusion-based models. The backtracking in diffusion-based models appears to resemble the neuralese[2] in LLMs that are thought to become far more powerful and very hard to interpret.

- Another complication of using diffusion-based LLMs is that they think big-picture about the entire responce. Then it might also be easier for these models to develop a worldview, think about their long-term goals and how best to achieve them. Unlike the AI-2027 scenario, the phase where Agent-3 is misaligned but not adversarily so is severely shortened or outright absent.

- Therefore, a potential safe strategy would be to test only the dependence of capabilities of diffusion-based models on size and on data quantity, develop interpretability tools[3] for them by using the less capable and better-interpretable LLMs, then develop interpreted diffusion-based models.

- ^

For example, the optimistic scenario, unlike the takeoff forecast by Kokotajlo, assumes that the AI’s creative process fails to be sufficiently different from instance to instance.

- ^

CoT-using models are currently bottlenecked by the inability to transfer more than one token. The neuralese recurrence makes interpretability far more difficult. I proposed a technique where, instead of a neuralese memo, the LLM receives a text memo from itself and the LLM should be trained to ensure that the text is related to the prompt's subject.

- ^

The race ending of the AI-2027 scenario has Agent-4 develop such tools and construct Agent-5 on an entirely different architecture. But the forecasters didn't know about Google's experiments with diffusion-based models and assumed that it would be an AI who would come up with the architecture of Agent-5.

What is this based on? Is there, currently, any reason to expect diffusion LLMs to have a radically different set of capabilities from LLMs? Is there a reason to expect them to scale better?

I have seen one paper, arguing that diffusion is stronger.

the most interesting result there: A small 6M(!) model is able to solve 9x9 sudokus with 100% accuracy. In my own experiments, using a LLama 3B model and a lot of finetuning and engineering, I got up to ~50% accuracy with autoregressive sampling.[1]

For sudoku this seems pretty obvious: In many cases there is a cell that is very easy to fill, so you can solve the problem piece by piece. Otoh, autoregressive models sometimes have to keep the full solution "in their head" before filling the first cell.

(As an aside, I tried testing some sudokus in Gemini Diffusion, which it couldn't solve)

So yeah, there are some tasks that should definitely be easier to solve for diffusion LLMs. Their scaling behaviour is not researched well yet, afaict.

- ^

I don't fully trust the results of this paper, as it seems too strong and I have not yet been able to replicate this well. But the principle holds and the idea is good.

This seems like it's only a big deal if we expect diffusion language models to scale at a pace comparable or better to more traditional autoregressive language transformers, which seems non-obvious to me.

Right now my distribution over possible scaling behaviors is pretty wide, I'm interested to hear more from people.

My understanding was that diffusion refers to a training objective, and isn't tied to a specific architecture. For example OpenAI's Sora is described as a diffusion transformer. Do you mean you expect diffusion transformers to scale worse than autoregressive transformers? Or do you mean you don't think this model is a transformer in terms of architecture.

Oops, I wrote that without fully thinking about diffusion models. I meant to contrast diffusion LMs to more traditional autoregressive language transformers, yes. Thanks for the correction, I'll clarify my original comment.

If it's trained from scratch, and they release details, then it's one data point for diffusion LLM scaling. But if it's distilled, then it's zero points of scaling data.

Because we are not interested in scaling which is distilled from a larger parent model, as that doesn't push the frontier because it doesn't help get the next larger parent model.

Apple also have LLM diffusion papers, with code. It seems like it might be helpful for alignment and interp because it would have a more interpretable and manipulable latent space.

It seems like it might be helpful for alignment and interp because it would have a more interpretable and manipulable latent space.

Why would we expect that to be the case? (If the answer is in the Apple paper, just point me there)

Oh it's not explicitly in the paper, but in Apple's version they have an encoder/decoder with explicit latent space. This space would be much easier to work with and steerable than the hidden states we have in transformers.

With an explicit and nicely behaved latent space we would have a much better chance of finding a predictive "truth" neuron where intervention reveals deception 99% of the time even out of sample. Right now mechinterp research achieves much less, partly because the transformers have quite confusing activation spaces (attention sinks, suppressed neurons, etc).

I think what you're saying is that because the output of the encoder is a semantic embedding vector per paragraph, that results in a coherent latent space that probably has nice algebraic properties (in the same sense that eg the Word2Vec embedding space does). Is that a good representation?

That does seem intuitively plausible, although I could also imagine that there might have to be some messy subspaces for meta-level information, maybe eg 'I'm answering in language X, with tone Y, to a user with inferred properties Z'. I'm looking forward to seeing some concrete interpretability work on these models.

Yes, that's exactly what I mean! If we have word2vec like properties, steering and interpretability would be much easier and more reliable. And I do think it's a research direction that is prospective, but not certain.

Facebook also did an interesting tokenizer, that makes LLM's operating in a much richer embeddings space: https://github.com/facebookresearch/blt. They embed sentences split by entropy/surprise. So it might be another way to test the hypothesis that a better embedding space would provide ice Word2Vec like properties.

If Gemini is distilled from a bigger LLM, then it's also useful because a similar result is obtained with fewer compute. Consider o3 and o4-mini which is only a little less powerful and far cheaper. And that's ignoring the possibility to amplify Gemini Diffusion, then re-distill it, obtaining GemDiff^2, etc. If this IDA process turns out to be far cheaper than that of LLMs, then we obtain a severe capabilities per compute increase...

Good point! And it's plausible because diffusion seems to provide more supervision and get better results in generative vision models, so it's a candidate for scaling.

This seems like it's only a big deal if we expect diffusion language models to scale at a pace comparable or better to more traditional autoregressive language transformers, which seems non-obvious to me.

There are some use-cases where quick and precise inference is vital: for example, many agentic tasks (like playing most MOBAs or solving a physical Rubik's cube; debatably most non-trivial physical tasks) require quick, effective, and multi-step reasoning.

Current LLMs can't do many of these tasks for a multitude of reasons; one of those reasons is the speed that it takes to generate responses, especially with chain-of-thought reasoning. A diffusion-based LLM could actually respond to novel events quickly, using a superbly detailed chain-of-thought, with only 'commodity' and therefore cheaper hardware (no WSL chips or other weirdness, only GPUs).

If non-trivial physical tasks (like automatically collecting and doing laundry) require detailed COTs (somewhat probable, 60%), and these tasks are very economically relevant (this seems highly probable to me, 80%), then the economic utility to training diffusion LLMs only requires said diffusion LLMs to have near-comparable scaling to traditional autoregressive LLMs; the economic use cases for fast inference more than justifies the required and higher training requirements (~48%).

There are some use-cases where quick and precise inference is vital: for example, many agentic tasks (like playing most MOBAs or solving a physical Rubik's cube; debatably most non-trivial physical tasks) require quick, effective, and multi-step reasoning.

Yeah, diffusion LLMs could be important not for being better at predicting what action to take, but for hitting real-time latency constraints, because they intrinsically amortize their computation more cleanly over steps. This is part of why people were exploring diffusion models in RL: a regular bidirectional or unidirectional LLM tends to be all-or-nothing, in terms of the forward pass, so even if you are doing the usual optimization tricks, it's heavyweight. A diffusion model lets you stop in the middle of the diffusing, or use that diffusion step to improve other parts, or pivot to a new output entirely.

A diffusion LLM in theory can do something like plan a sequence of future actions+states in addition to the token about to be executed, and so each token can be the result of a bunch of diffusion steps from a long time ago. This allows a small fast model to make good use of 'easy' timesteps to refine its next action: it just spends the compute to keep refining its model of the future and what it ought to do next, so at the next timestep, the action is 'already predicted' (if things were going according to plan). If something goes wrong, then the existing sequence may still be an efficient starting point compared to a blank slate, and quickly update to compensate. And this is quite natural compared to trying to bolt on something to do with MoEs or speculative decoding or something.

So your robot diffusion LLM can be diffusing a big context of thousands of tokens, which represents its plan and predicted environment observations over the next couple seconds, and each timestep, it does a little more thinking to tweak each token a little bit, and despite this being only a few milliseconds of thinking each time by a small model, it eventually turns into a highly capable robot model's output and each action-token is ready by the time it's necessary (and even if it's not fully done, at least it is there to be executed - a low-quality action choice is often better than blowing the deadline and doing some default action like a no-op). You could do the same thing with a big classic GPT-style LLM, but the equivalent quality forward pass might take 100ms and now it's not fast enough for good robotics (without spending a lot of time on expensive hardware or optimizing).

What is the point of these benchmarks without knowing the training compute and data ? One of the main questions is their interpretability. Iterative refinement of these models may open new opportunities.

For an example of the kind of capabilities diffusion models offer that LLMs don't, you don't need to just predict tokens after a piece of text: you can natively edit somewhere in the middle.

That should be helpful for the various arithmetic operations in which the big-endian nature of arabic numerals requires carry operations to work backwards. Of course, there are also transformer models that use a different masking approach during training and thus don't impose a forwards-only autoregressive generation, or one could just train a model to do arithmetic in a little-endian numerical notation and then reverse the answer.

Similarly, it seems likely that this approach might avoid the reversal curse.

I think this is obvious enough that the big labs probably think of it, but not everyone might think of it, so it makes sense to share it here.

In addition to having tools like "lookup url", "add to canvas" and "google X" they will get another tool "do diffusion on the last X tokens".

If you train your model, you have two text chains. From chain (1) where the diffusion model changed no text you train just that in a specific model the decision to use the diffusion model was used. From chain (2) you can train on the text as enhanced by the diffusion model (so the decision to use the diffusion model doesn't exist in chain 2)

This gives the reasoning model a better way to deal with mistakes it makes both correcting them in real time and also learning not to repeat the mistake in the future if the example is used as training data.

It can also get another forward looking diffusion tool: "Start with 1000 extra random tokens and do diffusion on those, try 4 different version" and then the standard model picks the version it likes best.

Even if diffusion models don't beat non-diffusion models in the general case, the hybrid where you have a thinking model that can use both modes is likely going to be better than either type of model on their own.

For an example of the kind of capabilities diffusion models offer that LLMs don't, you don't need to just predict tokens after a piece of text: you can natively edit somewhere in the middle.

Unfortunately it still remains hard to insert a bunch of tokens into the middle of the text, shifting everything else to the right. If that capability was added, I visualise it as adding some asides/footnotes/comments to text parts, then iterating on total text meaning (so comments can influence each other too), and then folding the extended structure to text.

Nice to see that diffusion model hypothesis is tested, and even better to hear that it works as I anticipated!

Google Deepmind has announced Gemini Diffusion. Though buried under a host of other IO announcements it's possible that this is actually the most important one!

This is significant because diffusion models are entirely different to LLMs. Instead of predicting the next token, they iteratively denoise all the output tokens until it produces a coherent result. This is similar to how image diffusion models work.

I've tried they results and they are surprisingly good! It's incredibly fast, averaging nearly 1000 tokens a second. And it one shotted my Google interview question, giving a perfect response in 2 seconds (though it struggled a bit on the followups).

It's nowhere near as good as Gemini 2.5 pro, but it knocks ChatGPT 3 out the water. If we'd seen this 3 years ago we'd have been mind blown.

Now this is wild for two reasons:

For an example of the kind of capabilities diffusion models offer that LLMs don't, you don't need to just predict tokens after a piece of text: you can natively edit somewhere in the middle. Also since the entire block is produced at once, you don't get that weird behaviour where an LLM says one thing then immediately contradicts itself.

So this isn't something you'd use just yet (probably), but watch this space!