Could someone who thinks capabilities benchmarks are safety work explain the basic idea to me?

It's not all that valuable for my personal work to know how good models are at ML tasks. Is it supposed to be valuable to legislators writing regulation? To SWAT teams calculating when to bust down the datacenter door and turn the power off? I'm not clear.

But it sure seems valuable to someone building an AI to do ML research, to have a benchmark that will tell you where you can improve.

But clearly other people think differently than me.

Not representative of motivations for all people for all types of evals, but https://www.openphilanthropy.org/rfp-llm-benchmarks/, https://www.lesswrong.com/posts/7qGxm2mgafEbtYHBf/survey-on-the-acceleration-risks-of-our-new-rfps-to-study, https://docs.google.com/document/d/1UwiHYIxgDFnl_ydeuUq0gYOqvzdbNiDpjZ39FEgUAuQ/edit, and some posts in https://www.lesswrong.com/tag/ai-evaluations seem relevant.

I think the core argument is "if you want to slow down, or somehow impose restrictions on AI research and deployment, you need some way of defining thresholds. Also, most policymakers' cruxes appear to be that AI will not be a big deal, but if they thought it was going to be a big deal they would totally want to regulate it much more. Therefore, having policy proposals that can use future eval results as a triggering mechanism is politically more feasible, and also, epistemically helpful since it allows people who do think it will be a big deal to establish a track record".

I find these arguments reasonably compelling, FWIW.

Perhaps the reasoning is that the AGI labs already have all kinds of internal benchmarks of their own, no external help needed, but the progress on these benchmarks isn't a matter of public knowledge. Creating and open-sourcing these benchmarks, then, only lets society better orient to the capabilities progress taking place, and so make more well-informed decisions, without significantly advantaging the AGI labs.

At the very least, evals for automated ML R&D should be a very decent proxy for when it might be feasible to automate very large chunks of prosaic AI safety R&D.

I'm not sure but I have a guess. A lot of "normies" I talk to in the tech industry are anchored hard on the idea that AI is mostly a useless fad and will never get good enough to be useful.

They laugh off any suggestions that the trends point towards rapid improvements that can end up with superhuman abilities. Similarly, they completely dismiss arguments that AI might be used for building better AI. 'Feed the bots their own slop and they'll become even dumber than they already are!'

So, people who do believe that the trends are meaningful, and that we are near to a dangerous threshold, want some kind of proof to show the doubters. They want people to start taking this seriously before it's too late.

I do agree that the targeting of benchmarks by capabilities developers is totally a thing. The doubting-Thomases of the world are also standing in the way of the capabilities folks getting the cred and funding they desire. A benchmark designed specifically to convince doubters is a perfect tool for... convincing doubters who might then fund you and respect you.

From my reader's perspective, Inkhaven was probably bad. No shade to the authors, this level of output is a lot of work and there was plenty I enjoyed. But it shouldn't be a surprise that causing people to write a lot more posts even when they're not inspired leads to a lot more uninspired posts.

A lot of the uninspired posts were still upvoted on LW. I even did some of that upvoting myself, just automatically clicking upvote as I start reading a post with an interesting first paragraph by someone whose name I recognize. Mostly this is fine, but it dilutes karma just a bit more.

And I only ever even saw a small fraction. I'm sorry if you were an Inkhaven author who killed it every time, I was merely being shown a subset, since I mostly just click on things on the front page. That subset is probably sorted not so much by quality as by network effects: which posts can get onto the front page long enough to snowball upvotes.

I think as a reader I'd have liked the results better if participants had to publish every other day instead.

just automatically clicking upvote as I start reading a post with an interesting first paragraph by someone whose name

Dude! You upvote the posts before you read them?!

This is probably pretty common, now that I consider it, but it seems like it's doing a disservice to the karma system. Shouldn't we upvote posts that we got value out of instead of ones that we expect to get value out of?

just automatically clicking upvote as I start reading a post with an interesting first paragraph by someone whose name

Internet voting experiences very different from your own…

My LW upvoting policy is that every once in a while I go through the big list of everything I've read, grep for LessWrong posts, look through the latest ~50 entries and decide to open them and (strong) up/downvote them based on how they look, a few months in retrospect.

Maybe the vote up / down option could be moved to after the body of the post? Does seem like an awkward set of design considerations between wanting people to see the current score before reading, and not wanting to split the current score from the vote buttons or duplicate the score, and I bet Habryka has thought about this already.

I strongly disagree, I think LessWrong has become a much more vibrant and active place since Inkhaven started. Recently the frontpage has felt more... I can't think of a better word than "corporate"... than I'd like. Maybe what I mean is that the LessWrong posters have started catering more and more toward the lowest-common-LessWrong-denominator.

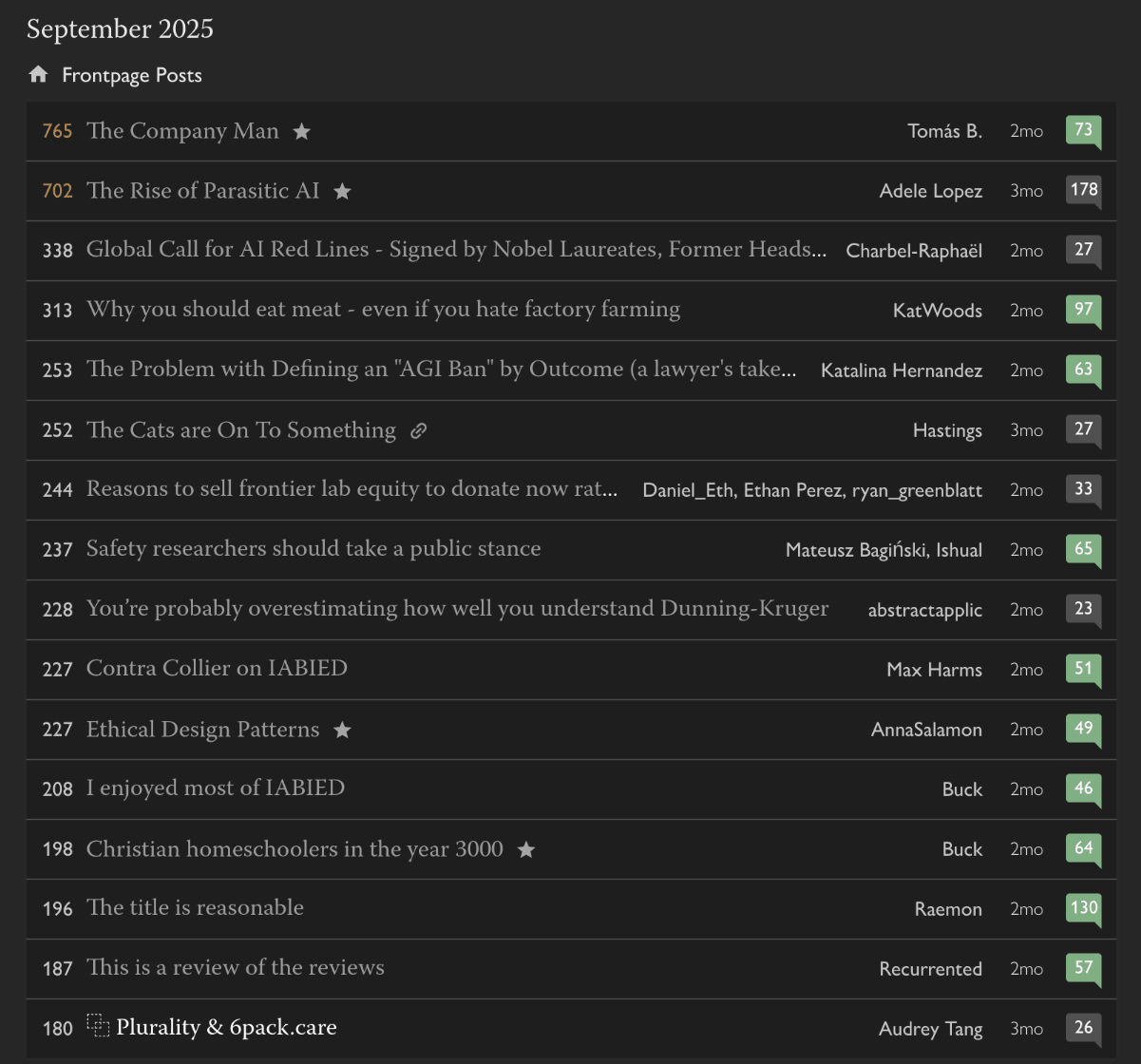

For example, here are the top posts from September 2025 (I think October had a reasonable amount of Inkhaven spirit, considering all the people doing Halfhaven)

Tomas is always nice and refreshing, but imo the rest of this is just really extremely uninteresting and uninspired (no offense to anyone involved, each post is on its own good I think, but collectively they're not that interesting), and seem very much catering to the lowest-common-LessWrong-denominator.

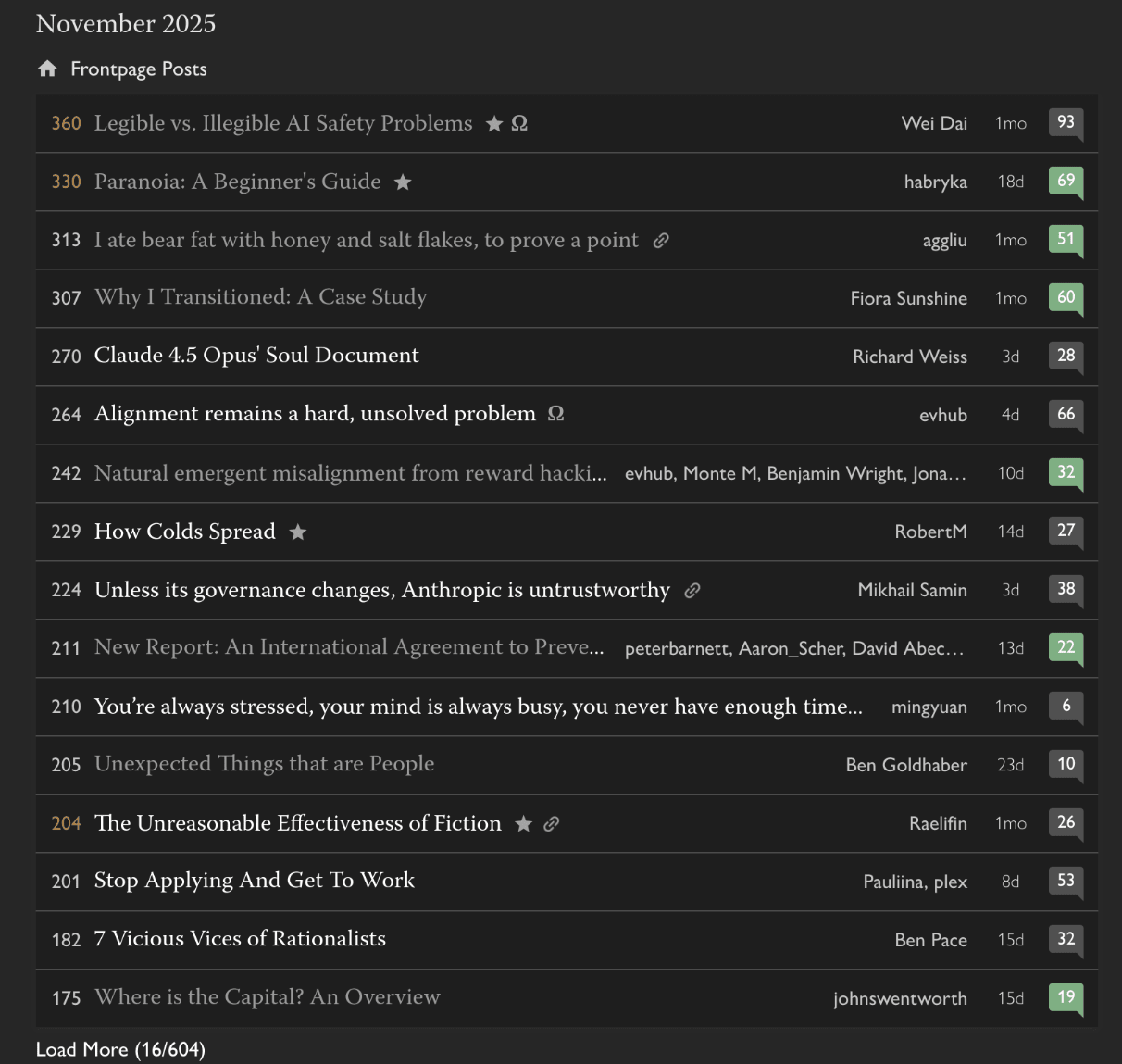

Contrast this selection with the following

I have not read as many of these (because there were more overall posts and these are more recent), but collectively the range of topics is so much more interesting & broad, you still get some lowest-common-denominator catering, but collectively these posts are so much more inspired than they were just two months ago.

I really would v...

Another anecdote: I put off a couple posts from November into December because I happened to care about these particular posts having visibility on the lesswrong frontpage, and the lesswrong frontpage has been unusually gummed up by high-karma low-effort posts during November.

Taking AI companies that are locally incentivized to race toward the brink, and then hoping they stop right at the cliff's edge, is potentially a grave mistake.

One might hope they stop because of voluntary RSPs, or legislation setting a red line, or whistleblowers calling in the government to lock down the datacenters, or whatever. But just as plausible to me is corporations charging straight down the cliff (of building ever-more-clever AI as fast as possible until they build one too clever and it gets power and does bad things to humanity), and even strategizing ahead of time how to avoid obstacles like legislation telling them not to. Local incentives have a long history of dominating people in this way, e.g. people in the tobacco and fossil fuel industries.

What would be so much safer is if even the local incentives of cutting-edge AI companies favored social good, alignment to humanity, and caution. This would require legislation blocking off a lot of profitable activity, plus a lot of public and philanthropic money incentivizing beneficial activity, in a convulsive effort whose nearest analogy is the global shift to renewable energy.

(this take is the one thing I want to boost from AI For Humanity.)

Some thoughts on reading Superintelligence (2014). Overall it's been quite good, and nice to read through such a thorough overview even if it's not new to me. Weirdly I got some comments that people often stop reading it. What this puts me in mind of is a physics professor remarking to me that they used to find textbooks impenetrable, but now they find it quite fun to leaf through a new introductory textbook. And now my brain is relating this to the popularity of fanfiction that re-uses familiar characters and settings :P

By god, Nick Bostrom thinks in metaphors all the time. Not to imply that this is bad at all, in fact it's very interesting.

The way the intelligence explosion kinetics is presented really could stand to be less one-dimensional about intelligence. Or rather, perhaps it should ask us to accept that there is some one-dimensional measure of capability that is growing superlinearly, which can then be parlayed into all the other things we care about via the "superpower"-style arguments that appear two chapters later.

Has progress on AI seemed to outpace progress on augmenting human intelligence since 2014? I think so, and perhaps this explains why Bostrom_2014 puts more em...

About a year ago, I made a bet - my $50,000 against their $1000 - that we wouldn't see slam-dunk evidence of UFOs/UAPs being the result of aliens, the supernatural, simulations, or anything similarly non-mundane.
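To make the odds concrete, here is a quick back-of-the-envelope sketch of the break-even probability those stakes imply. It assumes a plain risk-neutral reading of the bet (I lose my stake if non-mundane evidence shows up, I win theirs otherwise) and ignores timing and interest; the function name is just for illustration.

```python
from fractions import Fraction


def break_even_probability(my_stake: int, their_stake: int) -> Fraction:
    """Probability of losing at which the bet has zero expected value for me.

    I lose my_stake with probability p and win their_stake with probability
    1 - p, so (1 - p) * their_stake - p * my_stake = 0, which gives
    p = their_stake / (my_stake + their_stake).
    """
    return Fraction(their_stake, my_stake + their_stake)


p = break_even_probability(50_000, 1_000)
print(p, float(p))  # 1/51, a bit under 2%
```

So, under those assumptions, taking my side of the bet only makes sense if you put the chance of slam-dunk evidence below roughly 2%, and taking the other side only makes sense above it.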

What's changed 1 year on? Well, I think a year ago UAPs and aliens were more in the news, between governmental hearings in several countries, a whistleblower ex-USAF intelligence official, and continuing coverage of navy UAP tapes. None of that has led anywhere, and it's mostly fading from public memory.

You can find people currently claiming that the big reveal that breaks it all wide open is just around the corner. But you can basically always find people claiming that. While doing some quick searching before making this comment, though, I did find out that a congressman from Tennessee is a big believer that the extraordinarily fake-looking aliens exhibited in Mexico last year are super important and need to be investigated at U Tennessee.

If they were, and they turned out to have non-terrestrial biological structure, that's definitely a way I could pay the money. I estimate the probability of this at about 0.00000000000000001.

I read Fei-Fei Li's autobiographical book (The Worlds I See). I give it an 'ImageNet wasn't really an adventure story, so you'd better be interested in it intrinsically and also want to hear about the rest of Fei-Fei Li's life story.' out of 5.

She's somewhat coy about military uses, how we're supposed to deal with negative social impacts, and anything related to superhuman AI. I can only point to the main vibe, which is 'academic research pointing out problems is vital, I sure hope everything works out after that.'

A fun thought about nanotechnology that might only make sense to physicists: in terms of correlation function, CPUs are crystalline solids, but eukaryotic cells are liquids. I think a lot of people imagine future nanotechnology as made of solids, but given the apparent necessity of using diffusive transport, nanotechnology seems more likely to be statistically liquid.

(For non-physicists: the building blocks of a CPU are arranged in regular patterns even over long length scales. But the building blocks of a cell are just sort of diffusing around in a water ...

Dictionary/SAE learning on model activations is bad as anomaly detection because you need to train the dictionary on a dataset, which means you needed the anomaly to be in the training set.

How to do dictionary learning without a dataset? One possibility is to use uncertainty-estimation-like techniques to detect when the model "thinks it's on-distribution" for randomly sampled activations.

I think you can steelman Ben Goertzel-style worries about near-term amoral applications of AI being bad "formative influences" on AGI, but mostly under a continuous takeoff model of the world. If AGI is a continuous development of earlier systems, then maybe it shares some datasets and learned models with earlier AI projects, and definitely it shares the broader ecosystems of tools, dataset-gathering methodologies, model-evaluating paradigms, and institutional knowledge on the part of the developers. If the ecosystem in which this thing "grows up" is one t...

Idea: The AI of Terminator.

One of the formative books of my childhood was The Physics of Star Trek, by Lawrence Krauss. Think of it sort of like xkcd's What If?, except all about physics and getting a little more into the weeds.

So:

Robotics / inverse kinematics. Voice recognition, language models, and speech synthesis. Planning / search. And of course, self-improvement, instrumental convergence, existential risk.

To make this work you'd need to already be pretty well-suited. The Physics of Star Trek was Krauss' third published book, and he got Stephen Hawking to write the foreword.

There's a point by Stuart Armstrong that anthropic updates are non-Bayesian, because you can think of Bayesian updates as deprecating improbable hypotheses and renormalizing, while anthropic updates (e.g. updating on "I think I just got copied") require increasing probability on previously unlikely hypotheses.

In the last few years I've started thinking "what would a Solomonoff inductor do?" more often about anthropic questions. So I just thought about this case, and I realized there's something interesting (to me at least).

Suppose we're in the cloning versio...

Will the problem of logical counterfactuals just solve itself with good model-building capabilities? Suppose an agent has knowledge of its own source code, and wants to ask the question "What happens if I take action X?" where their source code provably does not actually do X.

A naive agent might notice the contradiction and decide that "What happens if I take action X?" is a bad question, or a question where any answer is true, or a question where we have to condition on cosmic rays hitting transistors at just the right time. But we want a sophisticated ag...

It seems like there's room for the theory of logical-inductor-like agents with limited computational resources, and I'm not sure if this has already been figured out. The entire trick seems to be that when you try to build a logical inductor agent, it's got some estimation process for math problems like "what does my model predict will happen?" and it's got some search process to find good actions, and you don't want the search process to be more powerful than the estimator because then it will find edge cases. In fact, you want them to be linked somehow, ...

Charlie's easy and cheap home air filter design.

Ingredients:

MERV-13 fabric, cut into two disks (~35 cm diameter) and one long rectangle (16 cm by 110 cm).

Computer fan - I got a be quiet BL047.

Cheap plug-in 12V power supply

Hot glue
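A quick sanity check on the fabric dimensions above, as a sketch. It assumes the long rectangle gets wrapped around the rim of the two disks to make a drum shape (that's my reading of the sizes, not something the ingredient list states), and it ignores any cut-out for the fan.

```python
import math

# Dimensions from the ingredient list (the disk diameter is approximate).
disk_diameter_cm = 35.0
strip_width_cm = 16.0
strip_length_cm = 110.0

# If the strip wraps around the edge of a disk, its length should match
# the disk's circumference.
circumference_cm = math.pi * disk_diameter_cm

# Total fabric: two disks plus the rectangular strip.
disk_area_cm2 = math.pi * (disk_diameter_cm / 2) ** 2
total_fabric_cm2 = 2 * disk_area_cm2 + strip_width_cm * strip_length_cm

print(f"circumference: {circumference_cm:.1f} cm vs strip length {strip_length_cm:.0f} cm")
print(f"total fabric: {total_fabric_cm2 / 1e4:.2f} m^2")
```

The circumference comes out within a millimetre of the 110 cm strip, so under the drum assumption the strip closes with essentially no overlap; cut it a little long if you want a seam allowance.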

Instructions:

Splice the computer fan to the power supply. When you look at the 3-pin fan connector straight on and put the bumps on the connector on the bottom, the wire on the right is ground and the wire in the middle is 12V. Do this first so you are absolutely sure which way the fan blows before you hot glue it.

Hot glue t...

AI that's useful for nuclear weapon design - or better yet, a clear trendline showing that AI will soon be useful for nuclear weapon design - might be a good way to get governments to put the brakes on AI.

Inoculation prompting reduces RL pressure for learning bad behavior, but it's still expensive to rederive that it's okay to cheat from the inoculation prompt rather than just always being cheaty.

One way the expense binds is regularization. Does this mean you should turn off regularization during inoculation prompting?

Another way is that you might get better reward by shortcutting the computation about cheating and using that internal space to work on the task more. It might be useful to monitor this happening, and maybe try to protect the computation about cheating from getting its milkshake drunk.

Humans using SAEs to improve linear probes / activation steering vectors might quickly get replaced by a version of probing / steering that leverages unlabeled data.

Like, probing is finding a vector along which labeled data varies, and SAEs are finding vectors that are a sparse basis for unlabeled data. You can totally do both at once - find a vector along which labeled data varies and is part of a sparse basis for unlabeled data.
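Here's a minimal numpy sketch of what "doing both at once" could look like. Everything specific is my own assumption for illustration: a tied-weight ReLU autoencoder stands in for the SAE, row 0 of the dictionary doubles as the logistic probe direction, and the names and hyperparameters (`joint_loss_and_grad`, `l1`, `probe_weight`) are made up. Real SAE training has more moving parts (decoder normalization, biases, and so on).

```python
import numpy as np


def joint_loss_and_grad(D, X_unlabeled, X_labeled, y, l1=0.1, probe_weight=1.0):
    """Sparse-reconstruction loss on unlabeled data plus a logistic probe
    loss on labeled data, where the probe direction is dictionary row 0."""
    n = X_unlabeled.shape[0]
    Z = X_unlabeled @ D.T            # pre-activations, shape (n, k)
    A = np.maximum(Z, 0.0)           # sparse codes (ReLU)
    E = A @ D - X_unlabeled          # reconstruction error
    recon = 0.5 * np.sum(E ** 2) / n
    sparsity = l1 * np.sum(A) / n    # codes are non-negative, so this is L1

    # Backprop through the decoder (A @ D) and the tied encoder (X @ D.T).
    gA = (E @ D.T + l1) / n
    gZ = gA * (Z > 0)
    grad = A.T @ E / n + gZ.T @ X_unlabeled

    # Probe term: logistic regression along D[0].
    s = np.clip(X_labeled @ D[0], -30.0, 30.0)
    p = 1.0 / (1.0 + np.exp(-s))
    probe = -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))
    grad[0] += probe_weight * X_labeled.T @ (p - y) / len(y)

    return recon + sparsity + probe_weight * probe, grad


# Toy usage on synthetic "activations"; the label depends on coordinate 0.
rng = np.random.default_rng(0)
X_unlabeled = rng.normal(size=(500, 6))
X_labeled = rng.normal(size=(100, 6))
y = (X_labeled[:, 0] > 0).astype(float)
D = 0.3 * rng.normal(size=(4, 6))

for _ in range(200):
    loss, grad = joint_loss_and_grad(D, X_unlabeled, X_labeled, y)
    D -= 0.05 * grad                 # plain gradient descent
print("final loss:", loss)
```

The design choice doing the work is the shared row: the probe can't pick just any separating direction, it has to pick one that also earns its keep as a dictionary element for the unlabeled data, with `probe_weight` setting the trade-off.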

This is a little bit related to an idea with the handle "concepts live in ontologies." If I say I'm going to the gym, this conce...

Trying to get to a good future by building a helpful assistant seems less good than it did a month ago, because the risk is more salient that clever people in positions of power may coopt helpful assistants to amass even more power.

One security measure against this is reducing responsiveness to the user, and increasing the amount of goal information that's put into large finetuning datasets that have lots of human eyeballs on them.

Should government regulation on AI ban using reinforcement learning with a target of getting people to do things that they wouldn't endorse in the abstract (or some similar restriction)?

E.g. should using RL to make ads that maximize click-through be illegal?

Just looked up Aligned AI (the Stuart Armstrong / Rebecca Gorman show) for a reference, and it looks like they're publishing blog posts:

E.g. https://www.aligned-ai.com/post/concept-extrapolation-for-hypothesis-generation

https://venturebeat.com/2021/09/27/the-limitations-of-ai-safety-tools/

This article makes a persuasive case that there being different sorts of safety research can be confusing to keep track of if you're a journalist (and journalists are not so different from policymakers or members of the public).

https://www.sciencedirect.com/science/article/abs/pii/S0896627321005018

(biorxiv https://www.biorxiv.org/content/10.1101/613141v2 )

Cool paper on trying to estimate how many parameters neurons have (h/t Samuel at EA Hotel). I don't feel like they did a good job distinguishing how hard it was for them to fit nonlinearities that would nonetheless be the same across different neurons, versus the number of parameters that were different from neuron to neuron. But just based on differences in physical arrangement of axons and dendrites, there's a lot of opportuni...

Back in the "LW Doldrums" c. 2016, I thought that what we needed was more locations - a welcoming (as opposed to heavily curated a la old AgentFoundations), LW-style forum solely devoted to AI alignment, and then the old LW for the people who wanted to talk about human rationality.

This philosophy can also be seen in the choice to make the AI Alignment forum as a sister site to LW2.0.

However, what actually happened is that we now have non-LW forums for SSC readers who want to talk about politics, SSC readers who want to talk about human rationality, and peo...